12 Moving to OSM Cloud Native from a Traditional Deployment

You can move to an OSM cloud native deployment from your existing OSM traditional deployment. This chapter describes the tasks required to move from a traditional OSM deployment to an OSM cloud native deployment.

Supported Releases

You can move to OSM cloud native from all supported traditional OSM releases. In addition, you can move to OSM cloud native within the same release, starting with OSM release 7.4.1.0.1.

Performing Pre-move and Post-move Tasks

Some OSM releases require you to perform specific tasks before and after moving to OSM cloud native. These tasks are described in the documentation for the target OSM cloud native release. Perform them before and after the move, as that documentation specifies.

Some patch readme files also describe potential error conditions and their workarounds in the Known Issues with Workaround section. Monitor these and apply the workarounds if required. For example, the 7.3.5.1.x patch readmes document an error condition in which OM_DB_STATS_PKG remains in an invalid state. If you encounter this issue, apply the appropriate workaround to grant the required permissions and rebuild the package.

About the Move Process

The move to OSM cloud native involves offline preparation as well as a maintenance outage. This section outlines the general process and details the steps involved in the move to OSM cloud native. Several of these steps require you to make choices, so it is recommended that you put together a specific procedure after taking these choices into account in your deployment context.

The OSM cloud native application layer runs on different hardware (within a Kubernetes cluster) from the OSM traditional application layer.

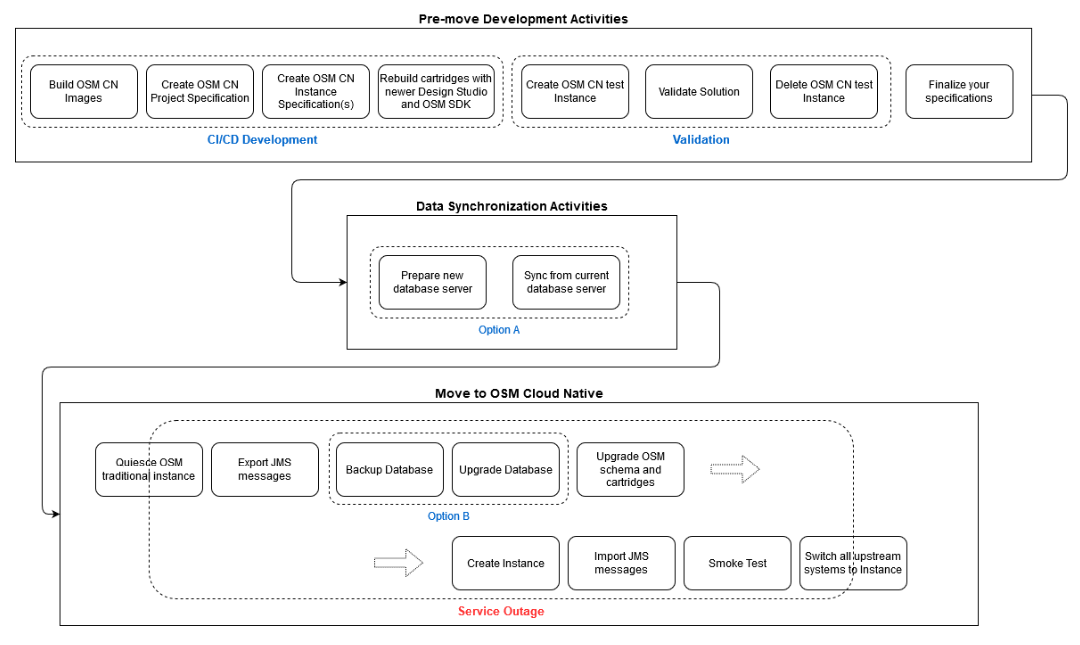

The process of moving to OSM cloud native involves the following sets of activities:

- Pre-move development activities, which include the following tasks:

  - Building OSM cloud native images (cloud native task)

  - Creating the OSM cloud native project specification (cloud native and solution task)

  - Creating the OSM cloud native instance specification (cloud native and solution task)

  - Rebuilding cartridges using Design Studio and the OSM SDK (solution task)

  - Creating an OSM cloud native instance for testing (cloud native task)

  - Validating your solution cartridges (solution task)

  - Deleting the test OSM cloud native instance (cloud native task)

  - Finalizing your specifications (cloud native and solution task)

- Data synchronization activities, which include the following tasks:

  - Preparing a new database server (database task)

  - Synchronizing the current database server (database task)

- Tasks for moving to OSM cloud native, which include the following:

  - Quiescing the OSM traditional instance (solution task)

  - Exporting JMS messages (WebLogic Server administration task)

  - Backing up the database (database task)

  - Upgrading the database (database task)

  - Upgrading the OSM schema and cartridges (database task)

  - Creating an OSM cloud native instance (cloud native task)

  - Importing JMS messages (WebLogic Server administration task)

  - Performing a smoke test (solution task)

  - Switching all upstream systems (solution task)

The following diagram illustrates these activities.

Note:

In the diagram, "OSM CN" is short for "OSM cloud native".

Figure 12-1 Move to OSM Cloud Native Process

Pre-move Development Activities

- Build OSM cloud native images. This task includes creating the OSM Docker image and the DB Installer Docker image by using the OSM cloud native download packages. See "Creating OSM Cloud Native Images" for details.

- Analyze your solution and create a project specification for your OSM cloud native instance. This specification includes details of JMS queues and topics, as well as SAF connections. See "Configuring the Project Specification" for details. If your solution requires model extensions or custom files, create the additional YAML files for those as well.

- Create an instance specification for your OSM cloud native instance. Preferably, create a test instance that points to a test PDB; you can later change this specification to point to the migrated database. Similarly, any SAF endpoint details should point to the test components or emulators. When creating the specification, choose your OSM cloud native production shape: prodsmall, prod, or prodlarge. Alternatively, create a custom production shape by copying and modifying one of these. See "Creating Custom Shapes" for details about custom shapes.

- If your OSM cartridges were built against an OSM deployment that is older than 7.3.5, use Design Studio to rebuild them with the OSM SDK of the target release. Select the Design Studio version based on its compatibility matrix.

- Create an OSM cloud native test instance and test your specifications. To do this, create your cartridge users document and follow the process (create instance secrets, install the RCU schema, install the OSM schema, deploy your cartridges, bring up OSM, create ingress, and run test orders) to bring up the OSM cloud native instance as described in "Creating a Basic OSM Instance".

- Validate the solution.

- Shut down your test instance and remove the associated secrets, PDB, and ingress.

- Finalize your specifications for the move by picking up any changes from your test activity, and re-create the instance secrets to use the migrated database. Change the instance specification to:

  - Point to the migrated database location once it is known.

  - Switch SAF endpoints to the actual components instead of emulators.

  - Arrange for the same number of managed servers in your OSM cloud native instance as in your OSM traditional instance.

    OSM cloud native uses standard sizing for managed servers, represented as a set of "shapes". As a result, your OSM cloud native instance may need a different number of managed servers to handle your workload than your OSM traditional instance does. For this migration, it is recommended that you start with the same number of managed servers, perform the import and smoke tests, and then scale the OSM cloud native instance up or down to the desired size.

    If you cannot arrange for the same number of managed servers in your OSM cloud native instance as in your OSM traditional instance, get as close as you can. You can then import the JMS messages from each leftover managed server into one of the OSM cloud native managed servers. For example, consider an OSM traditional instance with four managed servers (ms1, ms2, ms3, and ms4). The analysis may show that you need only two managed servers (cn-ms1 and cn-ms2) of the prod shape in your OSM cloud native instance. You can import all JMS messages from ms1 into cn-ms1 and from ms2 into cn-ms2, and then import the remaining messages from ms3 into cn-ms1 and from ms4 into cn-ms2. Try to spread the load as evenly as possible.
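The server pairing described in the example above can be planned before the outage. The following Python sketch pairs servers one to one (ms1 with cn-ms1, ms2 with cn-ms2) and then assigns each leftover traditional server to whichever cloud native server has the smallest message backlog so far, which is one way to spread the load evenly. The per-server pending message counts are an assumed input (for example, noted from the WebLogic Console Monitoring tabs), and both the function name and the greedy assignment are illustrative choices, not a documented OSM procedure.

```python
def plan_jms_import(pending: dict[str, int], cn_servers: list[str]):
    """Hypothetical planner: map each traditional managed server to a cloud native one.

    pending: traditional managed server -> number of pending JMS messages,
             in the order the servers should be paired (ms1, ms2, ...).
    cn_servers: cloud native managed server names (cn-ms1, cn-ms2, ...).
    Returns (plan, load): plan maps source -> target server, and load maps each
    target server to the total number of messages it will receive.
    """
    if not cn_servers:
        raise ValueError("at least one cloud native managed server is required")
    sources = list(pending)
    load = {cn: 0 for cn in cn_servers}
    plan: dict[str, str] = {}

    # Pair servers one to one while both lists have entries (ms1 -> cn-ms1, ...).
    for src, dst in zip(sources, cn_servers):
        plan[src] = dst
        load[dst] += pending[src]

    # Assign each leftover server, largest backlog first, to the least-loaded target.
    leftovers = sorted(sources[len(cn_servers):], key=lambda s: pending[s], reverse=True)
    for src in leftovers:
        dst = min(cn_servers, key=lambda cn: load[cn])
        plan[src] = dst
        load[dst] += pending[src]
    return plan, load


if __name__ == "__main__":
    plan, load = plan_jms_import(
        {"ms1": 10, "ms2": 10, "ms3": 10, "ms4": 10}, ["cn-ms1", "cn-ms2"]
    )
    print(plan)  # {'ms1': 'cn-ms1', 'ms2': 'cn-ms2', 'ms3': 'cn-ms1', 'ms4': 'cn-ms2'}
    print(load)  # {'cn-ms1': 20, 'cn-ms2': 20}
```

With equal backlogs the result matches the example in the text. With uneven backlogs, the greedy step may send several leftover servers to the same target so that the totals stay close.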

Moving to an OSM Cloud Native Deployment

Moving to an OSM cloud native deployment from an OSM traditional deployment requires performing the following tasks:

- Quiesce the OSM traditional instance. See "Quiescing the Traditional Instance of OSM".

- Export JMS messages. See "Exporting and Importing JMS Messages".

- Back up and upgrade the database. See "Upgrading the Database".

- Upgrade the OSM schema and cartridges. See "Upgrading the OSM Schema and Cartridges".

- Create the OSM cloud native instance. See "Creating Your Own OSM Cloud Native Instance".

- Import JMS messages. See "Importing JMS Messages".

- Perform a smoke test. See "Performing a Smoke Test". Once the OSM cloud native instance passes the smoke test and is optionally resized to the desired target size, fully shut down the OSM traditional instance.

- Switch all upstream systems to the OSM cloud native instance. See "Switching Integration with Upstream Systems".

Quiescing the Traditional Instance of OSM

At the start of the maintenance window, the OSM traditional instance must be quiesced. This involves stopping database jobs, stopping all upstream and peer systems from sending messages to OSM (for example, over HTTP/S, JMS, and SAF), and ensuring all human users are logged out. It also involves pausing the JMS queues so that no messages are queued or dequeued. The result is that OSM is up and running, but completely idle.
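The quiesced state described above amounts to four conditions that must all hold at once. The following Python sketch is a hypothetical checklist aid, not an OSM or WebLogic tool: it records those conditions and reports what is still outstanding. The class, field, and function names are assumptions made for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class QuiesceState:
    """Hypothetical record of the quiesce checklist for an OSM instance."""
    db_jobs_stopped: bool = False
    upstream_senders_stopped: bool = False  # HTTP/S, JMS, and SAF senders
    users_logged_out: bool = False
    jms_queues_paused: bool = False  # no messages queued or dequeued


# Human-readable description of each condition (wording is illustrative).
DESCRIPTIONS = {
    "db_jobs_stopped": "stop the OSM database jobs",
    "upstream_senders_stopped": "stop upstream and peer systems from sending messages",
    "users_logged_out": "ensure all human users are logged out",
    "jms_queues_paused": "pause the JMS queues",
}


def outstanding_items(state: QuiesceState) -> list[str]:
    """Return the checklist items that are not yet done, in declaration order."""
    return [DESCRIPTIONS[f.name] for f in fields(state) if not getattr(state, f.name)]


def is_quiesced(state: QuiesceState) -> bool:
    """The instance counts as quiesced only when nothing is outstanding."""
    return not outstanding_items(state)


if __name__ == "__main__":
    state = QuiesceState(db_jobs_stopped=True, jms_queues_paused=True)
    for item in outstanding_items(state):
        print("TODO:", item)
```

A similar check applies to the OSM cloud native instance before you import JMS messages, where the queues must be paused and the database jobs stopped.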

Exporting and Importing JMS Messages

Regardless of the persistence mechanism (file-based or JDBC) that your OSM traditional instance uses, you must export and import outstanding messages as described in this section. If file-based persistence is used, this procedure accomplishes a switch to JDBC-based persistence. If JDBC-based persistence is already in use, this procedure brings the setup (in WebLogic and in the database) in line with OSM cloud native requirements.

Overall, this procedure consists of exporting the JMS messages to disk, switching over to the OSM cloud native instance, and importing the JMS messages from disk. Because of the configuration of the OSM cloud native instance, the imported messages are stored in the appropriate database tables of the OSM cloud native instance rather than in their original location. The time taken for the export and import depends on the number of messages in the persistent store at the start.

Exporting JMS Messages

Before exporting JMS messages, validate that your OSM traditional instance has the WebLogic patch 31169032 (or its equivalent for your WebLogic version) installed. This patch is required to properly export OSM JMS messages. If it is not installed, follow the standard WebLogic patch procedures to procure and install the patch.

To export JMS messages:

- Log in to the WebLogic Console and navigate to the list of OSM queues.

- For each queue, open its Monitoring tab to get the list of current destinations for the queue. The Monitoring tab shows as many destinations as the number of managed servers.

- Select each destination and click Show Messages. If there are any messages pending in this destination of this queue, click the Export button to export all the messages to a file. Use the queue name and destination in the file name for ease of tracing.

  Note: Future-dated orders result in pending messages in OrchestrationDependencyQueue.

- If you have defined other JMS modules as part of your solution, repeat the previous two steps for each of those modules.
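The export steps above produce one file per destination that has pending messages. The following Python sketch shows one hypothetical way to derive traceable file names from the queue name and destination, as the text recommends. The input mapping, the sample queue and destination names (other than OrchestrationDependencyQueue), the double-underscore separator, and the .xml extension are all assumptions for illustration.

```python
import re


def _safe(part: str) -> str:
    """Replace characters that are awkward in file names (such as '@' or '/') with '_'."""
    return re.sub(r"[^A-Za-z0-9._-]", "_", part)


def export_file_names(pending_by_queue: dict[str, dict[str, int]]) -> list[str]:
    """Return one file name per destination that has pending messages.

    pending_by_queue maps queue name -> {destination name: pending message count}.
    Destinations with no pending messages are skipped, because there is nothing to export.
    """
    names = []
    for queue, destinations in pending_by_queue.items():
        for destination, count in destinations.items():
            if count > 0:
                names.append(f"{_safe(queue)}__{_safe(destination)}.xml")
    return sorted(names)


if __name__ == "__main__":
    observed = {
        "OrchestrationDependencyQueue": {"ms1": 3, "ms2": 0},
        "ExampleQueue": {"jms_server@ms1": 1},  # hypothetical queue and destination
    }
    for name in export_file_names(observed):
        print(name)
```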

Importing JMS Messages

Before importing JMS messages, ensure that your OSM cloud native instance is up and running, but quiesced (queues paused and database jobs stopped). It is recommended that your OSM cloud native instance has the same number of managed servers as your OSM traditional instance.

To import JMS messages:

- Transfer all the exported files into the Admin Server pod using the kubectl cp command.

- Log in to the WebLogic Console and navigate to JMS Modules where the OSM queues are listed.

- For each queue for which you have an export file, open its Monitoring tab.

- For each destination on this queue for which you have an export file, find the same destination in the list.

- Select the destination and click Show Messages. Click Import to specify the filename and import the messages.

- If your export contains files from a custom JMS module in your OSM traditional instance, you should still see those queues in osm_jms_module in your OSM cloud native instance. If you do not, check that your project specification is up to date.
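The transfer in the first import step can be scripted. The following Python sketch builds one `kubectl cp` command per export file, using the standard `kubectl cp <local-file> <namespace>/<pod>:<path>` form. The namespace, Admin Server pod name, and destination directory are placeholders (assumptions); substitute the values for your instance and a directory that is writable inside the pod.

```python
import shlex
from pathlib import PurePosixPath


def kubectl_cp_commands(files, namespace, admin_pod, dest_dir="/tmp/jms-import"):
    """Build one 'kubectl cp' argument list per exported file.

    Form used: kubectl cp <local-file> <namespace>/<pod>:<path>
    admin_pod and dest_dir are hypothetical placeholders.
    """
    commands = []
    for local in files:
        target = PurePosixPath(dest_dir) / PurePosixPath(local).name
        commands.append(["kubectl", "cp", local, f"{namespace}/{admin_pod}:{target}"])
    return commands


if __name__ == "__main__":
    for argv in kubectl_cp_commands(
        ["exports/OrchestrationDependencyQueue__ms1.xml"],
        namespace="sr",                # hypothetical namespace
        admin_pod="admin-server-pod",  # hypothetical Admin Server pod name
    ):
        # Print a shell-ready command; subprocess.run(argv, check=True) would execute it.
        print(shlex.join(argv))
```

Note that `kubectl cp` relies on the `tar` binary being present in the target container. When you click Import in the WebLogic Console, specify the file path inside the pod.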

Upgrading the Database

To upgrade the database, perform the tasks described in the following sections.

Upgrading the Database Server

You may need to upgrade the database server itself to the version supported by the OSM cloud native release you are moving to. To identify the required version of the database server and to determine whether you need a database server upgrade, see the OSM Compatibility Matrix.

If you do need a database server upgrade, choose one of the following options:

- Option A: Create an additional database server: Create a second database server of the target database version (with the required patches), seeded with an RMAN backup of the OSM traditional database. Enable Oracle Active Data Guard to continuously synchronize data from the OSM traditional database to this new database, and use the new database for the OSM cloud native instance. For further details, see the Mixed Oracle Version support with Data Guard Redo Transport Services (Doc ID 785347.1) knowledge article on My Oracle Support. The exact mechanisms depend on circumstances such as resource availability, data size, and timing, but the goal is a second database server that runs the target database version and always contains the data from the OSM traditional database.

  This option has the following advantages:

  - It allows switching from a standalone database to the Container DB and Pluggable DB model that OSM cloud native requires, without impacting other users of the existing database.

  - It reduces the duration of the service outage, because you avoid having to back up and upgrade the database during the maintenance window.

  - It preserves the OSM traditional database unchanged, reducing the risk and cost of reverting to the OSM traditional instance if that becomes necessary.

- Option B: Retain the existing DB server: Retain the existing database server and upgrade it in place to the target database version and patches.

If you choose Option A, the move must pause after the JMS messages are exported until the Active Data Guard synchronization is complete (that is, until any pending redo logs have been applied). Then, before proceeding, turn off the synchronization and bring the new database fully online.

Preparing the Required Database Entities for OSM Cloud Native

To meet the OSM cloud native prerequisites, create an RCU schema in the database by running the DB Installer with command 7.

To ensure a clean start for OSM cloud native managed servers, delete the leftover LLR tables. When OSM cloud native managed servers start, these tables are recreated with the required data automatically.

To delete the LLR tables:

- Connect to the database using the OSM cloud native user credentials.

- Get the list of tables to delete. The following query generates a drop statement for each LLR table:

  ```sql
  select 'drop table '||tname||' cascade constraints PURGE;' from tab where tname like ('WL_LLR_%');
  ```

- For the tables listed, run the generated drop table statements.
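Before running the generated statements, it can help to review exactly which tables they affect. The following Python sketch applies the same logic to a list of table names (for example, the output of `select tname from tab`, however you choose to fetch it). The function name and the sample table names are hypothetical.

```python
def llr_drop_statements(table_names: list[str]) -> list[str]:
    """Build the same DROP statements as the LLR query, for WL_LLR_ tables only.

    Note: in SQL LIKE, '_' matches any single character, so LIKE 'WL_LLR_%' is
    slightly broader than a literal prefix; startswith() checks the literal prefix.
    """
    return [
        f"drop table {name} cascade constraints PURGE;"
        for name in sorted(table_names)
        if name.startswith("WL_LLR_")
    ]


if __name__ == "__main__":
    # Hypothetical table names, as they might appear in the TAB view.
    for stmt in llr_drop_statements(["WL_LLR_OSM_MS1", "SOME_OTHER_TABLE"]):
        print(stmt)
```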

Upgrading the OSM Schema and Cartridges

- Migrate the OSM schema: To migrate the schema of your OSM traditional instance into a schema that is compatible with OSM cloud native, run the OSM cloud native DB Installer with command 12.

- Upgrade the OSM schema to the target version: If your OSM traditional instance runs a version older than the target OSM cloud native version, run the OSM cloud native DB Installer with command 1 to upgrade the OSM schema to the target version.

- Rebuild solution cartridges: Depending on the version of your current OSM traditional deployment, you may have to rebuild your solution cartridges using the latest release of Design Studio and the target OSM SDK. This is a preparatory step; the new cartridge lineup is reflected in the project specification, which is also created during preparation. All cartridges built targeting OSM versions prior to 7.3.5 require rebuilding. The same rebuild requirement applies to OSM traditional deployments.
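The version conditions in this section reduce to comparisons of dotted version strings. The following Python sketch encodes them; it only decides which steps apply and does not invoke the DB Installer. The function names are hypothetical, the version strings in the usage example are samples, and treating missing trailing components as zero (so that 7.3.5 equals 7.3.5.0.0) is an assumption about how versions compare.

```python
from itertools import zip_longest


def parse_version(text: str) -> tuple[int, ...]:
    """Parse a dotted OSM version such as '7.4.1.0.1' into integers."""
    return tuple(int(part) for part in text.split("."))


def is_older(version: str, reference: str) -> bool:
    """True if version < reference, padding the shorter version with zeros."""
    for a, b in zip_longest(parse_version(version), parse_version(reference), fillvalue=0):
        if a != b:
            return a < b
    return False


def schema_steps(current: str, target: str) -> list[str]:
    """DB Installer commands from this section, in the order listed."""
    steps = ["command 12: migrate the OSM schema for OSM cloud native"]
    if is_older(current, target):
        steps.append("command 1: upgrade the OSM schema to " + target)
    return steps


def cartridge_needs_rebuild(built_for: str) -> bool:
    """Cartridges built targeting OSM versions prior to 7.3.5 must be rebuilt."""
    return is_older(built_for, "7.3.5")


if __name__ == "__main__":
    print(schema_steps("7.3.5.1.0", "7.4.1.0.1"))
    print(cartridge_needs_rebuild("7.3.1.0.0"))
```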

Switching Integration with Upstream Systems

- Ensure that the OSM cloud native instance has its JMS and SAF objects unpaused and its DB jobs restarted.

- Configure the upstream and peer systems to resume sending messages. See "Integrating OSM" for more details.

Reverting to Your OSM Traditional Deployment

If you need to revert to your OSM traditional deployment during the move to OSM cloud native, the exact sequence of steps depends on the options you chose while moving to OSM cloud native.

In general, the OSM traditional application layer should remain undisturbed throughout the upgrade process. If Option A was followed for upgrading the database, you can simply start the OSM traditional instance again; it still points to its original database.

If Option B was followed, perform the following tasks:

- Revert the database server to the earlier version (if a database server upgrade was performed as part of the switch to OSM cloud native).

- Restore the database contents from the backup taken as part of Option B for upgrading the database.
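The revert sequence branches on how the database was handled during the move. The following Python sketch is a hypothetical planning aid that encodes those branches. The None case (no database server upgrade was needed) is an assumption: because the OSM schema was then migrated in place, the sketch restores the database backup taken during the move.

```python
from typing import Optional


def revert_steps(db_option: Optional[str]) -> list[str]:
    """Return an ordered revert sequence for the database approach used during the move.

    db_option: "A" (additional database server kept in sync with Active Data Guard),
               "B" (existing database server upgraded in place), or
               None (no database server upgrade was needed; an assumption of this sketch).
    """
    if db_option == "A":
        # The OSM traditional database was left unchanged.
        return ["Start the OSM traditional instance against its original database"]
    steps = []
    if db_option == "B":
        steps.append("Revert the database server to the earlier version")
    elif db_option is not None:
        raise ValueError(f"unknown database option: {db_option!r}")
    # The schema was migrated in place, so restore the pre-move backup.
    steps.append("Restore the database contents from the backup taken before the upgrade")
    steps.append("Start the OSM traditional instance")
    return steps


if __name__ == "__main__":
    for option in ("A", "B", None):
        print(option, revert_steps(option))
```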

Cleaning Up

Once the OSM cloud native instance is deemed operational, you can release the resources used for the OSM traditional application layer.

If Option A was adopted for the database, you can also delete the database used by the OSM traditional instance and release its resources. If Option B was followed and your OSM traditional instance used JDBC persistent stores, the tables backing those stores are now defunct, and you can delete them as well.