6 Creating Your Own OSM Cloud Native Instance

This chapter provides information on creating your own OSM instance. The "Creating a Basic OSM Cloud Native Instance" chapter explains how to create a basic OSM instance that can process orders for the SimpleRabbits sample cartridge provided with the OSM cloud native toolkit. This chapter explains how to create an OSM instance that is tailored to the business requirements of your organization. If you first want to understand the infrastructure setup and how to structure OSM instances for your organization, see "Planning Infrastructure".

Before creating your own OSM instance, you may want to review the alternative and optional configuration options described in "Exploring Configuration Options".

When you created a basic instance, you used the operational scripts and the base configuration provided with the toolkit.

Creating your own instance involves various activities spanning both instance management and instance configuration and includes some of the following tasks:

- Selecting a Deployment Topology

- Configuring OSM Runtime Parameters

- Preparing Cartridges

- Extending the WDT Model

- Working with Kubernetes Secrets

- Adding JMS Queues and Topics

- Generating Error Queues for Custom Queues and Topics

- Creating a JMS template

- Deploying Cartridges

- Provisioning Cartridge User Accounts

Selecting a Deployment Topology

You can instruct OSM CNTK to deploy either only the WebLogic-based components, or the WebLogic-based components together with the new microservices. You control this with the osm_runtime_type parameter in the omsConfig section of the project specification. The default value is MultiService.

# Provide values to override the defaults for oms-config.xml
omsConfig:
  # anything here overrides what is in shape spec in case of duplication
  # anything here can be overridden by instance spec
  project:
    osm_runtime_type: MultiService # MultiService (CN with full topology) or WLS (CN with only core fulfillment topology)

- WLS: OSM runs with only the WebLogic-based components, without the new microservices.

  - Microservice-dependent features such as TMF orders, System Interactions, and Fallout Exception are not available, and cartridges that use such features cannot be deployed.

- MultiService: OSM runs with the new microservices, with the OSM cloud native 7.5 features enabled.

  - The OSM cloud native 7.5 features that are enabled are TMF orders, System Interactions, and Fallout Exception.

When deploying older cartridges, you must rebuild them using Design Studio. For information about the Design Studio version to use, see OSM Software Compatibility. If the rebuild fails, do not deploy the cartridges.

For more information about TMF and non-TMF cartridges, refer to "About TMF Cartridges and Non-TMF Cartridges".

- Scale down your cluster to 0.

- Upgrade the database using the database installer command.

- Scale your cluster back to the original clusterSize.

If you create an OSM cloud native instance with osm_runtime_type set to WLS and later upgrade it to MultiService, you must recreate the ingress to access the newly created microservices.

Note:

An OSM instance set up as MultiService must not be downgraded to WLS, even though the CNTK does not block such a change.

osm-gateway.image: ""
osmRuntimeUXServer.image: ""
ingress.osmgw.annotations: {}
ingress.rtux.annotations: {}
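The topology rules in this section can be summarized in a short Python sketch. It is only an illustration: the helper names (check_runtime_change, unsupported_features) and the feature labels are hypothetical and are not part of the OSM CNTK, which, as noted, does not itself block a MultiService to WLS downgrade.

```python
"""Hypothetical checks that mirror the documented osm_runtime_type rules."""

# Microservice features available per runtime type, as listed in this section.
RUNTIME_FEATURES = {
    "WLS": frozenset(),
    "MultiService": frozenset({"TMF orders", "System Interactions", "Fallout Exception"}),
}


def check_runtime_change(current: str, requested: str) -> None:
    """Raise ValueError for an unknown value or a MultiService -> WLS downgrade."""
    for value in (current, requested):
        if value not in RUNTIME_FEATURES:
            raise ValueError(f"unknown osm_runtime_type: {value}")
    if current == "MultiService" and requested == "WLS":
        # The CNTK does not block this change; the documentation forbids it.
        raise ValueError("a MultiService instance must not be downgraded to WLS")


def unsupported_features(runtime_type: str, cartridge_features: set) -> set:
    """Return the cartridge features that the given runtime type cannot host."""
    return set(cartridge_features) - RUNTIME_FEATURES[runtime_type]


if __name__ == "__main__":
    check_runtime_change("WLS", "MultiService")  # upgrade: allowed
    print(unsupported_features("WLS", {"TMF orders"}))  # {'TMF orders'}
```

In this sketch, a cartridge can go to a WLS instance only when unsupported_features returns an empty set. An upgrade from WLS to MultiService still requires recreating the ingress, which the sketch does not model.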

Configuring OSM Runtime Parameters

You can control various OSM runtime parameters using the oms-config.xml file. See "Configuring OSM with oms-config.xml" in OSM Cloud Native System Administrator's Guide for details.

This configuration is managed differently in OSM cloud native. While all the parameters described in the OSM Cloud Native System Administrator's Guide are still valid, the method of specifying them is different for a cloud native deployment.

Each of the three specification file tiers - shape, project, and instance - has a section called omsConfig. For example, the project specification has the following section:

omsConfig:
  project:
    com.mslv.oms.handler.cluster.ClusteredHandlerFactory.HighActivityOrder.CollectionCycle.Enabled: true
    oracle.communications.ordermanagement.cache.UserPerferenceCache: near

Some parameters have been laid out for you in the pre-configured shape specification files and in the sample project and instance specification files. To give a parameter a value other than its documented default, add that parameter and its custom value to the appropriate specification file.

Values that depend on (or contribute to) the footprint of the OSM Managed Server belong in the shape specification. Values that are common across all instances of a given project belong in the project specification. Values that vary from instance to instance belong in the instance specification.

Any parameter specified in the instance specification overrides the same parameter specified in the project or shape specification. Any parameter specified in the project specification overrides the same parameter in the shape specification.

Any parameter that is absent from all three specification files (shape, project, and instance) takes its default value, as documented in OSM Cloud Native System Administrator's Guide.
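The precedence rules above amount to a layered merge: documented defaults first, then shape, then project, then instance, with later layers winning. The following Python sketch assumes each tier's omsConfig values have been loaded into a flat dictionary of parameter names (oms-config.xml parameters are flat dotted names, so a shallow merge suffices here); the function name and the example.param* keys are hypothetical, and this is not how the CNTK itself is implemented.

```python
"""Sketch of how omsConfig values could resolve across the specification tiers."""
from typing import Any, Dict


def effective_oms_config(
    defaults: Dict[str, Any],
    shape: Dict[str, Any],
    project: Dict[str, Any],
    instance: Dict[str, Any],
) -> Dict[str, Any]:
    """Merge flat parameter maps; later tiers override earlier ones."""
    merged = dict(defaults)  # documented defaults apply when no tier sets a value
    for tier in (shape, project, instance):  # lowest to highest precedence
        merged.update(tier)
    return merged


if __name__ == "__main__":
    result = effective_oms_config(
        defaults={"example.paramA": 1, "example.paramB": 1, "example.paramC": 1, "example.paramD": 1},
        shape={"example.paramA": 2, "example.paramB": 2},
        project={"example.paramB": 3, "example.paramC": 3},
        instance={"example.paramC": 4},
    )
    print(result)
    # {'example.paramA': 2, 'example.paramB': 3, 'example.paramC': 4, 'example.paramD': 1}
```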

Note:

All pre-defined shape specifications have omsConfig parameters flagged as "do NOT delete". These parameters must not be edited and must be copied as-is to custom shapes. See "Working with Shapes" for details about custom shapes.

Configuring Schema Validation

OSM Gateway handles incoming and outgoing JSON payload transformation. When a JSON payload contains data that is not registered with OSM, OSM Gateway can either fail validation or silently prune the extra data from the transformation, depending on the settings in the project specification.

Schemas that govern the incoming payloads for TMF REST endpoints are registered through the Hosted Order Specification in TMF cartridges (622 or 641).

For incoming order payloads, this validation applies only when hosting a TMF cartridge (622 or 641). It also applies to incoming and outgoing payloads for Freeform or TMF cartridges that use a System Interaction to communicate with external systems.
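To make the difference between STRICT and PRUNE concrete, here is a minimal Python sketch. It assumes, for simplicity, that only top-level JSON fields are checked against a set of registered field names. OSM Gateway's actual validation works against the schemas registered by the cartridge and is not shown here; the function and exception names are hypothetical.

```python
"""Illustrative STRICT/PRUNE handling of unregistered payload fields."""
from typing import Any, Dict, Set


class UnregisteredSchemaError(ValueError):
    """Raised in STRICT mode when a payload carries unregistered fields."""


def apply_unregistered_schema_policy(
    payload: Dict[str, Any], registered: Set[str], mode: str = "STRICT"
) -> Dict[str, Any]:
    """Fail (STRICT) or drop (PRUNE) top-level fields that are not registered."""
    if mode not in ("STRICT", "PRUNE"):
        raise ValueError(f"unknown validation mode: {mode}")
    unknown = sorted(set(payload) - set(registered))
    if not unknown:
        return dict(payload)
    if mode == "STRICT":
        raise UnregisteredSchemaError("unregistered fields: " + ", ".join(unknown))
    # PRUNE: keep only the registered fields and let the payload through.
    return {key: value for key, value in payload.items() if key in registered}


if __name__ == "__main__":
    order = {"id": "42", "state": "acknowledged", "x_unknown": True}
    print(apply_unregistered_schema_policy(order, {"id", "state"}, "PRUNE"))
    # {'id': '42', 'state': 'acknowledged'}
```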

# If incoming or outgoing JSON payloads contain extensions that are
# not registered with OSM, use these parameters to specify whether the
# payload should be failed (STRICT) or the unrecognized extensions
# simply pruned (PRUNE). Default is STRICT.
#validation:
#  unregisteredSchema:
#    incoming: STRICT
#    outgoing: STRICT

Configuring Target Systems for Events and System Interactions

TMF cartridges define an Event Target System name to identify the recipient of event notifications about the TMF resource.

Freeform cartridges, and TMF cartridges that use System Interaction specifications, define a target system name to identify the external system involved in a REST interaction.

In both cases, a logical target system name is provided inside the cartridge. The configuration necessary for OSM to resolve these names at runtime is provided in the CNTK specification files.

Configuring the Project Specification

# Define targetSystem info, provide name of the targetSystem like reverseProxy.
requiredTargetSystems: [] # This empty declaration should be removed if adding items here.
#requiredTargetSystems:
#  - name: BillingSystem
#    description: "Oracle BRM for TMF622 COM cartridge"
#  - name: ShippingSystem
#    description: "Unified shipping portal"

Configuring the Instance Specification

The logical system name is decoupled from the actual connection details so that cartridge deployment does not depend on a specific environment. At runtime, each logical system name is resolved to a set of connection details and the applicable security scheme. To enable this resolution, you must provide the connection information in the CNTK instance specification.
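The following Python sketch illustrates the idea of resolving the logical names declared under requiredTargetSystems in the project specification against the targetSystems section of the instance specification. It assumes both specifications have already been loaded into plain dictionaries shaped like the samples in this section. The function name, the error handling, and the example URL are hypothetical, and the lookup that OSM performs may differ.

```python
"""Hypothetical resolution of logical target system names at runtime."""
from typing import Any, Dict, List


def resolve_target_systems(
    required: List[Dict[str, str]], target_systems: Dict[str, Any]
) -> Dict[str, Dict[str, Any]]:
    """Map each required logical name to its URL and security scheme (or None)."""
    systems = target_systems.get("systems", {})
    schemes = {s["name"]: s for s in target_systems.get("securitySchemes", [])}
    resolved: Dict[str, Dict[str, Any]] = {}
    for entry in required:
        name = entry["name"]
        if name not in systems:
            raise LookupError(f"no connection details for target system {name}")
        system = systems[name]
        scheme_name = system.get("securityScheme")
        if scheme_name is not None and scheme_name not in schemes:
            raise LookupError(f"undefined security scheme {scheme_name} for {name}")
        resolved[name] = {
            "url": system["url"],
            "securityScheme": schemes[scheme_name] if scheme_name else None,
        }
    return resolved


if __name__ == "__main__":
    project_spec = [{"name": "BillingSystem", "description": "Oracle BRM for TMF622 COM cartridge"}]
    instance_spec = {
        "systems": {"BillingSystem": {"url": "https://brm.example.com/api", "securityScheme": "brmAuth"}},
        "securitySchemes": [{"name": "brmAuth", "type": "userPassword"}],
    }
    print(resolve_target_systems(project_spec, instance_spec))
```

Because the cartridge only carries the logical name, the same cartridge resolves to different URLs and credentials in each instance simply by changing the instance specification.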

# Define targetSystem info, provide server details and security info
targetSystems: {} # This empty declaration should be removed if adding items here.
#targetSystems:
#  systems:
#    targetSystem_Name:
#      url: target_system_url
#      protocol: protocol
#      description: description
#      securityScheme: securitySchemeName
#      # To override default fault tolerance parameters, uncomment this section and provide values
#      fault-tolerance:
#        retry:
#          # The maximum number of retry attempts by pod when emitting a message failed
#          # When value is absent, pod will retry 2147483647 (Integer.MAX_VALUE) times.
#          maxRetries: 2147483647
#          # The delay between the retry attempts
#          delay: 5000
#          # The delay unit eg MILLIS
#          delayUnit: MILLIS
#          # Instructs pod on which error code has to retry
#          onErrorCodes:
#            - 500
#            - 503
#            - 409
#            - 429
#        concurrency:
#          # The maximum number of concurrent connections that can be made to this target system from the OSM Gateway
#          # across the OSM cluster (default : 50)
#          maxValue: 50
#  # Define security scheme for target systems enabled with security
#  # For each security scheme defined kubernetes secret should be created using
#  # ${CNTK_HOME}/scripts/manage-target-system-credentials.sh script.
#  securitySchemes:
#    - name: <SecuritySchemeName-1>
#      type: "userPassword"
#      authorizationUrl: <authorization URL>
#      sessionId:
#        type: <"cookie" or "header">
#        name: <Cookie-name or Header-name that carries sessionID>
#    - name: <SecuritySchemeName-2>
#      type: "OAuth2"
#      authorizationUrl: <authorization URL>
#      tokenUrl: <token URL>
#      scopes:
#        - name: scope1
#          description: "Scope 1 Description" #optional
#        - name: scope2
#          description: "Scope 2 Description" #optional

Configuring Security Schemes for Target Systems

System interactions support OIDC and basic authentication security schemes for target systems that are enabled with security. You define these security schemes in the instance specification, under the targetSystems element, as securitySchemes.

For each security scheme you define, create a Kubernetes secret by running the following script, with -t set to either OAuth2 or userPassword:

${CNTK_HOME}/scripts/manage-target-system-credentials.sh -p project -i instance -n securitySchemeName -t OAuth2/userPassword create

Note:

For the userPassword security scheme, you must provide a username and password to the script. For the OAuth2 security scheme, you must provide a client ID and client secret.

Using the OIDC Security Scheme

To use OIDC, configure a security scheme of type OAuth2 in the instance specification:

targetSystems:
  securitySchemes:
    - name: SecuritySchemeName
      type: "OAuth2"
      tokenUrl: token URL
      scopes:
        - name: scope_1
          description: "Write Scope"
        - name: scope_2
          description: "Read Scope"

In the security scheme configuration above:

- tokenUrl is the access token URL, which is used to obtain the access token from an identity provider such as Keycloak.

- scopes limit the access granted to an access token.

- SecuritySchemeName is used to fetch the client ID and the client secret from the Kubernetes secret.
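As an illustration of what a token request against tokenUrl can look like, the following Python sketch builds (but does not send) an OAuth 2.0 client credentials request with HTTP Basic client authentication, as described in RFC 6749. This guide does not state which grant type or client authentication method OSM Gateway uses, so treat both as assumptions. The function name and the example identity provider URL are hypothetical.

```python
"""Sketch of an OAuth2 client-credentials token request (built, not sent)."""
import base64
import urllib.parse
import urllib.request
from typing import List


def build_token_request(
    token_url: str, client_id: str, client_secret: str, scopes: List[str]
) -> urllib.request.Request:
    """Build a POST to tokenUrl using HTTP Basic client authentication."""
    # Scopes are sent as one space-separated value.
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": " ".join(scopes)}
    ).encode("ascii")
    # RFC 6749 section 2.3.1: form-encode the client ID and secret before Base64.
    pair = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    credentials = base64.b64encode(pair.encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


if __name__ == "__main__":
    req = build_token_request(
        "https://idp.example.com/realms/osm/protocol/openid-connect/token",
        "osm-client", "s3cret", ["scope_1", "scope_2"],
    )
    print(req.get_method(), req.full_url)
    print(req.data)  # b'grant_type=client_credentials&scope=scope_1+scope_2'
```

Passing the request to urllib.request.urlopen would send it; the JSON response from the identity provider would then carry the access token.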

Using the Basic Authentication Security Scheme

If the target system is enabled with basic authentication security, using either user credentials or a JSESSIONID, configure the userPassword security scheme.

You can configure this security scheme using one of the following three methods:

- Basic auth with user credentials

- JSESSIONID header

- JSESSIONID cookie

Note:

The default configuration for the userPassword security scheme is Basic auth with user credentials.

Basic Auth with User Credentials

Use userPassword when the target system is enabled with basic auth security using user credentials:

securitySchemes:
  - name: SecuritySchemeName
    type: "userPassword"

In this configuration, the OSM gateway sends the user credentials as the base-64 encoded value of username:password. Therefore, the authentication header would look like:

Authorization: Basic base-64 encoded value of username:password

JSESSIONID in Authentication Header

In this security scheme configuration, instead of sending user credentials, the OSM gateway sends the user session ID in an authentication header for each request. The OSM gateway exchanges the user credentials only once to obtain the user session ID from the response header.

Use userPassword when the target system is enabled with authentication using the user session ID in an authentication header:

securitySchemes:
  - name: SecuritySchemeName
    type: "userPassword"
    authorizationUrl: authorization URL
    sessionId:
      type: "header"
      name: <Header Name>

In the above security scheme configuration:

- The OSM gateway exchanges the user credentials (base-64 encoded value of username:password) in an authentication header, using the target system's authorizationUrl, to obtain the user session ID.

- The OSM gateway extracts the user session ID from the response headers, using the header name defined in the security scheme.

- The OSM gateway sends the user session ID in an authentication header in each request sent to the target system.

- Therefore, the authentication header would look like:

  Authorization: user session ID

JSESSIONID in Cookies

In this security scheme configuration, instead of sending user credentials, the OSM gateway sends the user session ID in cookies with each request. The OSM gateway exchanges the user credentials only once to obtain the user session ID from the response cookies.

Use userPassword when the target system is enabled with authentication using the user session ID in cookies:

securitySchemes:
  - name: SecuritySchemeName
    type: "userPassword"
    authorizationUrl: authorization URL
    sessionId:
      type: "cookie"
      name: Cookie Name

In the security scheme configuration above:

- The OSM gateway exchanges the user credentials (base-64 encoded value of username:password) in an authentication header, using the target system's authorizationUrl, to obtain the user session ID.

- The OSM gateway extracts the user session ID from the response cookies, using the Cookie Name defined in the security scheme.

- The OSM gateway sends the user session ID in cookies in each request to the target system.

- Therefore, the HTTP header would look like:

  Cookie: user session ID
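The three userPassword variants can be sketched in Python to show what travels on the wire: a Basic header built from username:password, then (for the JSESSIONID variants) a session ID read from a response header or cookie and replayed on later requests. Assumptions, all hypothetical: response headers are a plain case-sensitive dictionary with at most one Set-Cookie value, the cookie is replayed in the usual name=value form (this guide abbreviates it as "Cookie: user session ID"), and the HTTP exchange with authorizationUrl itself is not shown. The function names are illustrative only.

```python
"""Sketch of the userPassword scheme: Basic credentials, then a session ID."""
import base64
from http.cookies import SimpleCookie
from typing import Dict, Optional


def basic_auth_header(username: str, password: str) -> str:
    """Return 'Basic ' plus the Base64 encoding of username:password."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def extract_session_id(
    response_headers: Dict[str, str], session_type: str, name: str
) -> Optional[str]:
    """Read the session ID from a named response header or a Set-Cookie header."""
    if session_type == "header":
        return response_headers.get(name)
    if session_type == "cookie":
        jar = SimpleCookie()
        jar.load(response_headers.get("Set-Cookie", ""))
        morsel = jar.get(name)
        return morsel.value if morsel is not None else None
    raise ValueError(f"unsupported sessionId type: {session_type}")


def session_request_headers(session_id: str, session_type: str, name: str) -> Dict[str, str]:
    """Headers to send on each later request to the target system."""
    if session_type == "header":
        return {"Authorization": session_id}  # as documented: Authorization: user session ID
    if session_type == "cookie":
        return {"Cookie": f"{name}={session_id}"}  # assumed name=value cookie form
    raise ValueError(f"unsupported sessionId type: {session_type}")


if __name__ == "__main__":
    print(basic_auth_header("user", "pass"))  # Basic dXNlcjpwYXNz
    sid = extract_session_id({"Set-Cookie": "JSESSIONID=abc123; Path=/"}, "cookie", "JSESSIONID")
    print(session_request_headers(sid, "cookie", "JSESSIONID"))  # {'Cookie': 'JSESSIONID=abc123'}
```

The design point the sketch highlights is that, with a session ID, credentials cross the network once (to authorizationUrl) rather than on every request.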

Configuring OSM Gateway Readiness

You can control different aspects of OSM Gateway behavior by editing the instance specification. Additionally, tuning parameters for OSM Gateway are available in the shape specifications.

OSM Gateway establishes a connection with the OSM managed server and waits for it to transition into the "ready" state. If the managed server does not become ready within the specified timeout, the gateway declares it "not ready" and initiates Kubernetes cleanup and retry procedures. This behavior is enabled by default.
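The readiness timeout is an ISO-8601 duration such as PT300S. The following Python sketch shows how such a value maps to seconds and how a wait-until-ready loop bounded by it could behave. It is a sketch under stated assumptions: the parser handles only the PnDTnHnMn.nS subset without signs, the 5-second polling interval is hypothetical, the function names are illustrative, and the Kubernetes cleanup and retry that OSM Gateway performs after a timeout are not modeled.

```python
"""Sketch of waiting for readiness with an ISO-8601 duration timeout."""
import re
import time
from typing import Callable

# Subset of java.time.Duration syntax: PnDTnHnMn.nS (no signs).
_DURATION = re.compile(r"P(?:(\d+)D)?(?:T(?:(\d+)H)?(?:(\d+)M)?(?:(\d+(?:\.\d+)?)S)?)?")


def parse_duration_seconds(text: str) -> float:
    """Convert a duration such as 'PT300S' or 'P1DT2H' to seconds."""
    match = _DURATION.fullmatch(text)
    if match is None or text == "P" or text.endswith("T"):
        raise ValueError(f"not a supported ISO-8601 duration: {text}")
    days, hours, minutes, seconds = match.groups()
    return (
        int(days or 0) * 86400
        + int(hours or 0) * 3600
        + int(minutes or 0) * 60
        + float(seconds or 0)
    )


def wait_for_readiness(
    is_ready: Callable[[], bool],
    timeout: str = "PT300S",
    poll_seconds: float = 5.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll is_ready() until it returns True or the timeout elapses."""
    deadline = clock() + parse_duration_seconds(timeout)
    while True:
        if is_ready():
            return True
        remaining = deadline - clock()
        if remaining <= 0:
            return False  # the caller would treat the server as "not ready"
        sleep(min(poll_seconds, remaining))


if __name__ == "__main__":
    print(parse_duration_seconds("PT300S"))  # 300.0
```

Injecting clock and sleep keeps the loop testable without real waiting.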

# OSM Gateway
osm-gateway: {} # This empty declaration should be removed if adding items here.
#osm-gateway:
#  # When enabled, in order to start, OSM Gateway app waits until the timeout is elapsed for OSM-CN to be up.
#  waitForOSMReadinessBeforeStart:
#    enabled: true
#    # Based on the ISO-8601 duration format PnDTnHnMn.nS.
#    # For additional information, refer https://docs.oracle.com/javase/8/docs/api/java/time/Duration.html
#    timeout: "PT300S"
...

Configuring the Order Operations User Interface

Use the instance specification to configure how and when various details are displayed in the Order Operations user interface.

The following block shows the instance specification where you configure settings for the data displayed in the Order Operations user interface:

osmRuntimeUX: {} # This empty declaration should be removed if adding items here.
#osmRuntimeUX:
#  alertThresholds:
#    # The percentage of orders that must succeed in a given time interval otherwise an alert is displayed to the user.
#    # Order Success Rate = OrdersCompletedCount / (OrdersCompletedCount + OrdersFailedCount) * 100
#    minOrderSuccess: "98"
#    # The maximum number of orders that can be submitted in an hour before an alert is displayed to the user.
#    maxHourlySubmittedOrder: "5000"
#  configuration:
#    # An ISO 8601 duration period string that represents the oldest fromDatetime supported by the operations backend and UX.
#    # The default value "P6M" indicates the oldest fromDate allowed by the API and the UX is 6 months ago.
#    minFromDateTimePeriod: "P6M"
#    # The maximum percentage of orders that can fail otherwise above KPI badge is displayed to the user on Order Operations KPI dashboard.
#    # Order Failure Rate = (OrdersFailedCount + OrdersRejectedCount) / TotalOrdersCount * 100
#    maxOrderFailure: "2"
#    # The number of seconds between automatic dashboard refresh in the Operations UX
#    refreshTimeInterval: 180
#    # The number of seconds for which landing page or iframe applications timeout when left idle
#    # This should always be less than default OSM core session timeout value of 1500s.
#    sessionTimeOut: 1400
#    # The number of retries UX applications will perform on errors reaching server APIs
#    onErrorMaxRetryCount: 3
#    # The number of seconds UX applications will wait between such retries
#    onErrorRetryIntervalSecs: 30

Configuring the Alerts Displayed in the Order Operations Dashboard

You can control different aspects of the Order Operations user interface behavior by editing the instance specification. Additionally, tuning parameters for the Order Operations user interface are available in the shape specifications.

The Operations Dashboard displays a bell icon, which upon clicking opens the Alerts panel. The Alerts panel displays alerts when a threshold set on the orders is reached.

An alert is triggered and displayed when the order success rate in a given time interval drops below the configured minimum success percentage. The order success rate is calculated as follows:

Order Success Rate = OrdersCompletedCount / (OrdersCompletedCount + OrdersFailedCount) * 100

To configure the alerts displayed in the Operations Dashboard, you configure the following parameters in the instance specification:

- alertThresholds.minOrderSuccess: The order completion success threshold that must be met by every order group. Otherwise, an alert is triggered and displayed. The default value is 98.

- alertThresholds.maxHourlySubmittedOrder: The number of orders that can be submitted in an hour before an alert is shown. The default value is 5000.
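The alert thresholds and the KPI formulas from the sample specification can be expressed as a small Python sketch. The default values (98 and 5000) come from this section. The exact boundary behavior (alerting only when the rate is strictly below the minimum, or when the hourly count strictly exceeds the maximum) and the decision to raise no success-rate alert when no orders have finished are assumptions. The function names are hypothetical and do not reflect the Order Operations implementation.

```python
"""Sketch of the Order Operations alert thresholds and KPI formulas."""
from typing import List, Optional


def order_success_rate(completed: int, failed: int) -> Optional[float]:
    """OrdersCompletedCount / (OrdersCompletedCount + OrdersFailedCount) * 100."""
    total = completed + failed
    if total == 0:
        return None  # assumption: no rate (and no alert) without finished orders
    return 100 * completed / total


def order_failure_rate(failed: int, rejected: int, total_orders: int) -> Optional[float]:
    """(OrdersFailedCount + OrdersRejectedCount) / TotalOrdersCount * 100."""
    if total_orders == 0:
        return None
    return 100 * (failed + rejected) / total_orders


def check_alerts(
    completed: int,
    failed: int,
    submitted_last_hour: int,
    min_order_success: float = 98,
    max_hourly_submitted_order: int = 5000,
) -> List[str]:
    """Return alert messages for the two alertThresholds parameters."""
    alerts = []
    rate = order_success_rate(completed, failed)
    if rate is not None and rate < min_order_success:
        alerts.append(f"order success rate {rate:.1f}% is below {min_order_success}%")
    if submitted_last_hour > max_hourly_submitted_order:
        alerts.append(f"{submitted_last_hour} orders submitted in the last hour")
    return alerts


if __name__ == "__main__":
    print(check_alerts(completed=97, failed=3, submitted_last_hour=1200))
    # ['order success rate 97.0% is below 98%']
```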

The following block shows the instance specification where you configure parameters for alerts:

# osmRuntimeUX
osmRuntimeUX: {} # This empty declaration should be removed if adding items here.
#osmRuntimeUX:
#  alertThresholds:
#    # The percentage of orders that must succeed in a given time interval otherwise an alert is displayed to the user.
#    # Order Success Rate = OrdersCompletedCount / (OrdersCompletedCount + OrdersFailedCount) * 100
#    minOrderSuccess: "98"
#    # The maximum number of orders that can be submitted in an hour before an alert is displayed to the user.
#    maxHourlySubmittedOrder: "5000"
...

Configuring Session Timeout

When logged into the application, if a user leaves the user interface idle in a tab, a pop-up window appears after 80% of the configured session timeout duration is reached. The pop-up window indicates how much time the user has before the session times out. If the user clicks OK or refreshes the page, the timer is reset.

However, if the user does not take any action, they are logged out when the remaining time runs out. If the user opens the application in two separate browser windows or tabs and leaves one of them idle, a pop-up window appears in the idle one after 80% of the configured session timeout duration is reached. If the user neither clicks OK nor refreshes the page in the idle window before the time runs out, the user is logged out of the application in both windows.

To configure session timeout, set the configuration.sessionTimeOut parameter in the instance specification. This parameter defines the number of seconds after which the landing page or an application times out when left idle. By default, this is set to 1400 seconds.
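With the default value, the arithmetic works out as follows: the pop-up appears after 80% of 1400 seconds, that is, after 1120 seconds of idle time, leaving 280 seconds before logout. The following Python sketch computes this schedule and also applies the constraint from the sample specification that sessionTimeOut stay below the default OSM core session timeout of 1500 seconds. The function and constant names are hypothetical.

```python
"""Sketch of the session timeout warning schedule."""
from typing import Tuple

CORE_SESSION_TIMEOUT_SECONDS = 1500  # default OSM core session timeout (from the spec comments)
WARNING_PERCENT = 80  # the pop-up appears after 80% of sessionTimeOut


def warning_schedule(session_time_out: int = 1400) -> Tuple[float, float]:
    """Return (idle seconds until the pop-up, seconds left once it appears)."""
    if not 0 < session_time_out < CORE_SESSION_TIMEOUT_SECONDS:
        raise ValueError("sessionTimeOut should be positive and below the core session timeout")
    warn_after = session_time_out * WARNING_PERCENT / 100
    return warn_after, session_time_out - warn_after


if __name__ == "__main__":
    print(warning_schedule())  # (1120.0, 280.0)
```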

The following block shows the instance specification where you configure session timeout:

# osmRuntimeUX
osmRuntimeUX: {} # This empty declaration should be removed if adding items here.
#osmRuntimeUX:
.....
#    # The number of seconds for which landing page or iframe applications timeout when left idle
#    # This should always be less than default OSM core session timeout value of 1500s.
#    sessionTimeOut: 1400

Preparing Cartridges

Existing OSM cartridges that run on a traditional OSM deployment can still be used with OSM cloud native, but you prepare and deploy those cartridges differently. Instead of using multiple interfaces to persist the WebLogic domain configuration (WebLogic Admin console and WLST), the configuration is added into the files that feed into the instance creation mechanism. With OSM cloud native, you use the WebLogic Admin Console only for validation purposes.

Before proceeding, you must determine which OSM solution cartridge you want to use to validate your OSM cloud native environment. For simplicity, use a setup where any communication with OSM is restricted to an application running in the same instance of the WebLogic domain. For that cartridge, identify the following:

- The list of JMS queues and topics that the cartridge needs.

- The list of credentials stored in the OSM Credential Store.

- Users that the cartridge requires.

- Applications that need to be deployed to the WebLogic server. This can include system emulators for stubbing out communication to external peer systems.

About OSM Cloud Native Cartridges and Design Studio

Existing cartridges do not always need to be rebuilt for use with OSM cloud native. As long as they were built with an OSM 7.4.0.x SDK, using the Design Studio target OSM version of 7.4.0, their existing par files can be deployed.

If cartridges have to be built afresh or re-built, use the OSM SDK packaged with OSM 7.4.1 release, and set the Design Studio target OSM version as 7.4.0. In general, use the Design Studio target OSM version that is closest to the actual OSM version but not newer than it.

About Domain Configuration Restrictions

Some restrictions on the domain configuration are necessary to keep the process simple for creating and validating your basic OSM cloud native instance. As you build confidence in the tooling and the extension mechanisms, you can remove the restrictions and include additional configuration in your specifications to support advanced features. The restrictions are as follows:

- Instance with no SAF setup.

- Re-directing logs (to live outside the pods) will not be configured at this time.

Changing the Default Values

The project and instance specification templates in the toolkit contain the values used in the out-of-the-box domain configuration. These files are intended for editing: required information such as the PDB host needs updating, flags that control optional features such as NFS need to be turned on or off, and default values such as Java options and cluster size can be changed. If you find that the existing values need to be updated for your OSM instance, update the values in your specification files.

- NFS: As per the restrictions, leave nfs disabled in the instance specification.

- Shape: The provided configuration uses tuning parameters that are appropriate for a development environment. This is done through the use of a shape specification that is specified in the instance specification.

Adding New WDT Metadata

The OSM cloud native toolkit provides the base WDT metadata in $OSM_CNTK/charts/osm/templates. Because OSM requires this WDT metadata to function properly, you must not edit it. Instead, the toolkit provides a mechanism whereby new pieces of WDT metadata can be included in the final description of the domain.

See "Extending the WebLogic Server Deploy Tooling (WDT) Model" for complete details on the general process for providing custom WDT. The steps described must be repeated for a variety of WDT use cases.

You must specify the JMS queues required for your new cartridge using WDT metadata. You can do this in either of the following ways:

- Re-using the OSM JMS Resources as described in "Adding JMS Queues and Topics". This is the suggested mechanism for your first attempt at configuring your customized OSM instance.

- Creating custom JMS Resources as described in "Adding a JMS System Resource".

Other Customizations

To support a custom OSM solution cartridge, not all changes are done using the WDT metadata. Depending on the processing needs of your OSM solution cartridge, there are other changes that are likely required:

- Credential Store

- Custom Application EAR

- Cartridge Users

Credential Store

For traditional installations, if a solution cartridge had automation plug-ins that needed to retrieve external system credentials, those credentials were stored in the WebLogic Credential Store.

In OSM cloud native, if your cartridge uses the credential store framework of OSM, then you must provision cartridge user accounts. See "Provisioning Cartridge User Accounts" for details.

Custom Application EAR

If there are additional applications that need to be deployed to WebLogic to support the processing of your OSM solution cartridge, see "Deploying Entities to an OSM WebLogic Domain".

This method requires both WDT metadata and custom OSM images. Supplemental scripts and WDT fragments are provided as samples in the $OSM_CNTK/samples/customExtensions directory.

Cartridge Users

Cartridges may also define users who need access to OSM APIs. These user credentials need to be available in the right locations as described in "Provisioning Cartridge User Accounts". These credentials must then be made available through the configuration to OSM.

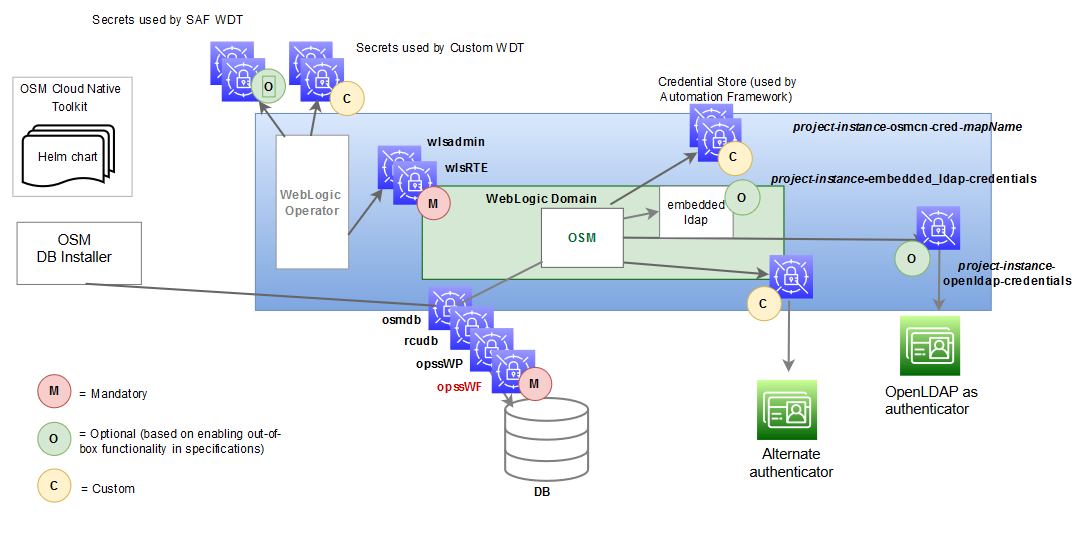

Working with Kubernetes Secrets

Secrets are Kubernetes objects that you must create in the cluster through a separate process that adheres to your corporate policies around managing secure data. Secrets are then made available to OSM cloud native by declaring them in your configuration.

When you do not use the OSM cloud native sample scripts to create secrets, the secrets you create must match what OSM expects. The sample scripts contain guidelines for creating secrets.

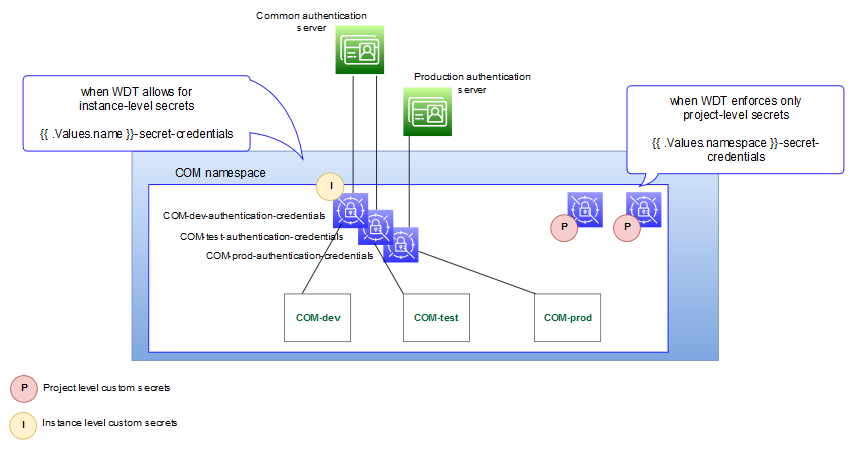

The following diagram illustrates the role of Kubernetes Secrets in an OSM cloud environment:

Figure 6-1 Kubernetes Secrets in OSM Cloud Environment


There are three classifications of secrets, as shown in the above illustration:

- Mandatory (Pre-requisite) Secrets

- Optional Secrets

- Custom Secrets

About Mandatory Secrets

Mandatory secrets must be created prior to running the cartridge management scripts or the instance creation script.

The toolkit provides the sample script: $OSM_CNTK/scripts/manage-instance-credentials.sh to create the secrets for you. Refer to the script code to see the naming and internal structure required for each of these secrets.

See the following topics for more details about Kubernetes Secrets:

About Optional Secrets

Optional secrets are required only when you enable the corresponding out-of-the-box functionality. Some functionality is pre-configured in OSM cloud native and can be enabled or disabled in the specification files. When such functionality is enabled, its secrets must be created in the cluster before an OSM instance is created.

- If you use OpenLDAP for authentication, OSM cloud native relies on the following secret having been created:

  project-instance-openldap-credentials

  The toolkit provides a sample script to create this secret for you ($OSM_CNTK/samples/credentials/manage-osm-ldap-credentials.sh, passing in "-o secret").

- With Credential Store, the secrets hold credentials for external systems that the automation plug-ins access. These secrets are a pre-requisite to running cartridges that rely on this mechanism and must adhere to a naming convention. See "Provisioning Cartridge User Accounts" for more details.

- When SAF is configured, SAF secrets are used. SAF secrets are similar to custom secrets and are declared in a specialized area within the instance specification that feeds into the SAF-specific WDT:

  safConnectionConfig:
    - name: external_system_identifier
      t3Url: t3_url
      secretName: secret_t3_user_pass

About Custom Secrets

OSM cloud native provides a mechanism where WDT metadata can access sensitive data through a custom secret that is created in the cluster and then declared in the configuration. See "Accessing Kubernetes Secrets from WDT Metadata" to familiarize yourself with this process.

This class of secrets is required only if your WDT metadata uses this mechanism.

To use custom secrets with WDT metadata:

Note:

As an example, this procedure uses a WDT snippet for authentication.

- Add secret usage in the WDT metadata fragment:

  Host: '@@SECRET:authentication-credentials:host@@'
  Port: '@@SECRET:authentication-credentials:port@@'
  ControlFlag: SUFFICIENT
  Principal: '@@SECRET:authentication-credentials:principal@@'
  CredentialEncrypted: '@@SECRET:authentication-credentials:credential@@'

- Add the secret to the project specification:

  #Custom secrets
  # Multiple secret names can be provided
  customSecrets:
    enabled: true
    secretNames:
      - authentication-credentials

- Create the secret in the cluster, by using any one of the following methods:

  - Using OSM cloud native toolkit scripts

  - Using a Template

  - Using the Command-line Interface

See "Mechanism for Creating Custom Secrets" for details about the methods.
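Each @@SECRET:name:key@@ token in the fragment names a Kubernetes secret and one key within it. The following Python sketch mimics that substitution on a string so you can see how the fragment above would read once the authentication-credentials secret is resolved. The actual resolution is performed by the WebLogic tooling when the domain is built, not by this code; the function name and the example host and port values are hypothetical.

```python
"""Illustrative resolution of @@SECRET:name:key@@ tokens in a WDT fragment."""
import re
from typing import Dict

SECRET_TOKEN = re.compile(r"@@SECRET:([^:@]+):([^:@]+)@@")


def resolve_secret_tokens(fragment: str, secrets: Dict[str, Dict[str, str]]) -> str:
    """Replace each token with secrets[name][key]; raise KeyError if missing."""

    def lookup(match: "re.Match[str]") -> str:
        name, key = match.group(1), match.group(2)
        if name not in secrets or key not in secrets[name]:
            raise KeyError(f"secret {name} has no key {key}")
        return secrets[name][key]

    return SECRET_TOKEN.sub(lookup, fragment)


if __name__ == "__main__":
    fragment = (
        "Host: '@@SECRET:authentication-credentials:host@@'\n"
        "Port: '@@SECRET:authentication-credentials:port@@'"
    )
    cluster_secrets = {"authentication-credentials": {"host": "ldap.example.com", "port": "636"}}
    print(resolve_secret_tokens(fragment, cluster_secrets))
```

A missing secret or key surfaces as an error rather than an empty value, which is the behavior you generally want when a declared secret has not been created in the cluster.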

To list the secrets for an instance, run:

$OSM_CNTK/scripts/list-instance-secrets.sh -p project -i instance -s $SPEC_PATH

Sample output:

secrets:
  coreSecrets:
    - <project>-<instance>-database-credentials
    - <project>-<instance>-rcudb-credentials
    - <project>-<instance>-embedded-ldap-credentials
    - <project>-<instance>-weblogic-credentials
    - <project>-<instance>-opss-walletfile-secret
    - <project>-<instance>-opss-wallet-password-secret
    - <project>-<instance>-runtime-encryption-secret
    - <project>-<instance>-oidc-credentials
    - <project>-<instance>-fluentd-credentials
  customSecrets:
    - partitionStatisticSecret
    - projectCustomSecret
    - instanceCustomSecret
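The core secret names follow a <project>-<instance>-<suffix> pattern. The following Python sketch derives those names and reports which ones are missing from a list of existing secrets, for example the names returned by kubectl get secrets -n project. The suffix list is copied from the sample output in this section and may differ for your instance depending on the features you enable; the project name sr, the instance name quick, and the function names are hypothetical.

```python
"""Sketch: derive the sample core secret names and find missing ones."""
from typing import Iterable, List

# Suffixes taken from the sample coreSecrets output; your instance may differ.
CORE_SECRET_SUFFIXES = (
    "database-credentials",
    "rcudb-credentials",
    "embedded-ldap-credentials",
    "weblogic-credentials",
    "opss-walletfile-secret",
    "opss-wallet-password-secret",
    "runtime-encryption-secret",
    "oidc-credentials",
    "fluentd-credentials",
)


def core_secret_names(project: str, instance: str) -> List[str]:
    """Apply the <project>-<instance>-<suffix> naming pattern."""
    return [f"{project}-{instance}-{suffix}" for suffix in CORE_SECRET_SUFFIXES]


def missing_secrets(required: Iterable[str], existing: Iterable[str]) -> List[str]:
    """Return required names that are not present, preserving their order."""
    present = set(existing)
    return [name for name in required if name not in present]


if __name__ == "__main__":
    wanted = core_secret_names("sr", "quick")
    print(missing_secrets(wanted, wanted[:-1]))  # ['sr-quick-fluentd-credentials']
```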

Accommodating the Scope of Secrets

The WDT metadata fragments are defined at the project level as the project typically owns the solution definition. Accommodating this is a simple task. However, the scenario becomes complicated when you consider that there may be project level configuration that needs to allow for instance level control over the secret contents.

To walk through this, we will use authentication as an example and introduce a COM project that includes three instances: development, test, and production. The production environment has a dedicated authentication system, but the development and test instances use a shared authentication server.

To accommodate this scenario, the following changes must be made to each of the basic steps:

- Define a naming strategy for the secrets that introduces scoping. For instance, secrets that need instance-level control could prepend the instance name. In the example, this results in the following secret names:

  COM-dev-authentication-credentials
  COM-test-authentication-credentials
  COM-prod-authentication-credentials

- Include the secret in the WDT fragment. For this scenario to work, you need a generic way to declare the "scope", or instance portion, of the secret name. To do this, use the built-in Helm values:

  - .Values.name references the full instance name (project-instance).

  - .Values.namespace references the project name (project).

  If the fragment needs to support instance-level control, derive the instance name portion of the secret name from .Values.name:

  Host: '@@SECRET:{{ .Values.name }}-authentication-credentials:host@@'
  Port: '@@SECRET:{{ .Values.name }}-authentication-credentials:port@@'
  ControlFlag: SUFFICIENT
  Principal: '@@SECRET:{{ .Values.name }}-authentication-credentials:principal@@'
  CredentialEncrypted: '@@SECRET:{{ .Values.name }}-authentication-credentials:credential@@'

- Add the secret to the instance specification. The secret name must be provided in the instance specification, as opposed to the project specification:

## Dev Instance Spec #Custom secrets # Multiple secret names can be provided customSecrets: enabled: true secretNames: - COM-dev-authentication-credentials ## Test Instance spec #Custom secrets # Multiple secret names can be provided customSecrets: enabled: true secretNames: - COM-test-authentication-credentials ## Prod Instance Spec #Custom secrets # Multiple secret names can be provided customSecrets: enabled: true secretNames: - COM-prod-authentication-credentials - Create the secret in the cluster by following any one of the methods

described in the Mechanism for Creating Custom Secrets topic. In our example,

the secret would need to capture host, port, principal and credential. Each instance

would need a secret created, but the values provided depend on which authentication

system is being

used.

# Dev secret creation kubectl create secret generic COM-dev-authentication-credentials \ -n COM \ --from-literal=principal=<value1> \ --from-literal=credential=<value2> \ --from-literal=host=<value3> \ --from-literal=port=<value4> # Test secret creation kubectl create secret generic COM-test-authentication-credentials \ -n COM \ --from-literal=principal=<value1> \ --from-literal=credential=<value2> \ --from-literal=host=<value3> \ --from-literal=port=<value4> ##Production secret creation kubectl create secret generic COM-prod-authentication-credentials \ -n COM \ --from-literal=principal=<prodvalue1> \ --from-literal=credential=<prodvalue2> \ --from-literal=host=<prodvalue3> \ --from-literal=port=<prodvalue4>
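To show how the built-in Helm values resolve, the following sketch renders the authentication fragment for the dev instance. It assumes the project is named com and the instance dev, so .Values.name resolves to com-dev (lowercase, because Kubernetes object names must be lowercase). The rendering is illustrative only. The surrounding WDT structure depends on your own fragment.

```yaml
# Illustrative rendering for the dev instance, assuming .Values.name = com-dev.
# Each @@SECRET:<secret-name>:<key>@@ token is resolved to the value stored
# under <key> in the named Kubernetes secret.
Host: '@@SECRET:com-dev-authentication-credentials:host@@'
Port: '@@SECRET:com-dev-authentication-credentials:port@@'
ControlFlag: SUFFICIENT
Principal: '@@SECRET:com-dev-authentication-credentials:principal@@'
CredentialEncrypted: '@@SECRET:com-dev-authentication-credentials:credential@@'
```

The same project-level fragment would render against com-test-authentication-credentials and com-prod-authentication-credentials for the other two instances. This is why each instance specification lists only its own secret.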

The following diagram illustrates the secret landscape in this example:

Mechanism for Creating Custom Secrets

You can create custom secrets using any of the following methods:

- Using Scripts

- Using a Template

- Using the Command-line Interface

Using Scripts to Create Secrets

Optional functionality in OSM cloud native, such as OpenLDAP, NFS, and the Credential Store, relies on prerequisite secrets. In such cases, the toolkit provides sample scripts that can create the secrets for you. While these scripts are useful for configuring instances quickly in development situations, remember that they are sample scripts and are not pipeline friendly. The scripts are still valuable as a reference: when OSM cloud native mandates a secret, the sample script that populates it shows both the secret name and the secret data that are expected.

Using a Template

To create custom secrets using a template:

- Save the secret details into a template file:

  apiVersion: v1
  kind: Secret
  metadata:
    labels:
      weblogic.resourceVersion: domain-v2
      weblogic.domainUID: project-instance
      weblogic.domainName: project-instance
    namespace: project
    name: secretName
  type: Opaque
  stringData:
    password_key: value1
    user_key: value2

- Run the following command to create the secret:

  kubectl apply -f templateFile

Using the Command-line Interface

kubectl create secret generic secretName \
  -n project \
  --from-literal=password_key=value1 \
  --from-literal=user_key=value2

Adding JMS Queues and Topics

JMS queues and topics are unique because the base JMS resources (JMS server and JMS subdeployments) already exist in the domain as the OSM core application requires them. You can add custom queues and topics to the OSM JMS resources by specifying the appropriate content in the project specification file.

To add queues or topics, uncomment the sample in your specification file, providing the values necessary to align with your requirements.

- The only mandatory values are 'name' and 'jndiName'.

- Values in angle brackets have no default value. You must supply an actual value per your requirements.

- The remaining parameters are set to their default values if omitted. When a different value is supplied in the specification file, it is used as an override to the default value.
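Because only name and jndiName are mandatory, a minimal declaration could be as short as the following sketch. Every omitted parameter takes its default value. The queue, topic, and JNDI names are hypothetical placeholders.

```yaml
# Minimal custom JMS destinations: only the mandatory keys are set.
# All other parameters (redelivery limit, delivery overrides, and so on)
# fall back to their default values.
uniformDistributedQueues:
  - name: example-order-queue        # hypothetical queue name
    jndiName: example/orderQueue     # hypothetical JNDI name
uniformDistributedTopics:
  - name: example-event-topic        # hypothetical topic name
    jndiName: example/eventTopic     # hypothetical JNDI name
```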

Note:

There should only be one list of uniformDistributedQueues and one list of uniformDistributedTopics in the specification. When copying the content from the samples, ensure that you do not replicate these sections more than once.

# jms distributed queues
uniformDistributedQueues:
  - name: custom-queue-name
    jndiName: custom-queue-jndi
    resetDeliveryCountOnForward: false
    deliveryFailureParams:
      redeliveryLimit: 10
    deliveryParamsOverrides:
      timeToLive: -1
      priority: -1
      redeliveryDelay: 1000
      deliveryMode: 'No-Delivery'
      timeToDeliver: '-1'

# jms distributed topics
uniformDistributedTopics:
  - name: custom-topic-name
    jndiName: custom-topic-jndi
    resetDeliveryCountOnForward: false
    deliveryFailureParams:
      redeliveryLimit: 10
    deliveryParamsOverrides:
      timeToLive: -1
      priority: -1
      redeliveryDelay: 1000
      deliveryMode: 'No-Delivery'
      timeToDeliver: '-1'

Generating Error Queues for Custom Queues and Topics

You can generate error queues for all custom queues and topics automatically.

To generate error queues automatically, configure the following parameters in the project.yaml file:

errorQueue:
  autoGenerate: false
  expirationPolicy: "Redirect"
  redeliveryLimit: 15

By default, the autoGenerate parameter is set to false. To generate error queues for all custom JMS queues and topics automatically, set this parameter to true. When autoGenerate is set to true, each custom queue and topic gets its own error queue.
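The following sketch shows a project.yaml fragment that turns on automatic error queues for one custom queue. The queue and JNDI names are hypothetical. The expirationPolicy and redeliveryLimit values repeat the ones shown above.

```yaml
# Enable automatic error-queue generation for all custom queues and topics.
errorQueue:
  autoGenerate: true
  expirationPolicy: "Redirect"
  redeliveryLimit: 15

uniformDistributedQueues:
  - name: example-order-queue      # hypothetical custom queue
    jndiName: example/orderQueue   # hypothetical JNDI name
# With autoGenerate set to true, an error queue named
# example-order-queue_ERROR is generated for this queue.
```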

The following sample shows the error queue generated for a custom queue:

'jms_queue_name_ERROR':
  ResetDeliveryCountOnForward: false
  SubDeploymentName: osm_jms_server
  JNDIName: error/jms_queue_jndiName
  IncompleteWorkExpirationTime: -1
  LoadBalancingPolicy: 'Round-Robin'
  ForwardDelay: -1
  Template: osmErrorJmsTemplate

Note:

- All error queues have _ERROR as the suffix.

- For internal queues and topics in OSM, generation of error queues is always enabled. Each queue and topic has its own _ERROR queue. Messages that cannot be delivered are redirected accordingly.

- Disable this feature for O2A 2.1.2.1.0 cartridges used in an OSM cloud native environment. The O2A build generates its own project specification fragment, which must be used instead.

Creating a JMS Template

A JMS template provides an efficient means of defining multiple destinations with similar attribute settings.

# JMS Template (optional). Uncomment to define "customJmsTemplate"
# Alternatively use the built-in template "customJmsTemplate"
#jmsTemplate:
#  customJmsTemplate:
#    DeliveryFailureParams:
#      RedeliveryLimit: 10
#      ExpirationPolicy: Discard
#    DeliveryParamsOverrides:
#      RedeliveryDelay: 1000
#      TimeToLive: -1
#      Priority: -1
#      TimeToDeliver: -1

# jms distributed queues. Uncomment to define one or more JMS queues under a
# single element uniformDistributedQueues.
uniformDistributedQueues: {} # This empty declaration should be removed if adding items here.
#uniformDistributedQueues:
#  - name: jms_queue_name
#    jndiName: jms_queue_jndiName
#    jmsTemplate: customJmsTemplate

# jms distributed topic. Uncomment to define one or more JMS Topics under a
# single element uniformDistributedTopics.
uniformDistributedTopics: {} # This empty declaration should be removed if adding items here.
#uniformDistributedTopics:
#  - name: jms_topic_name
#    jndiName: jms_topic_jndiName
#    jmsTemplate: customJmsTemplate

If the queues and topics need to be created under custom JMS resources, then the OSM cloud native WDT extension mechanism should be employed as described in "Adding a JMS System Resource".
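Uncommenting the JMS template sample could produce something like the following sketch. Two hypothetical queues share customJmsTemplate, so the redelivery and delivery settings are defined only once. The nesting is reconstructed from the commented sample and is an assumption. As the sample's comment instructs, the empty uniformDistributedQueues: {} declaration is removed. The uniformDistributedTopics entry is left unchanged and is not shown.

```yaml
# Template shared by every destination that references it.
jmsTemplate:
  customJmsTemplate:
    DeliveryFailureParams:
      RedeliveryLimit: 10
      ExpirationPolicy: Discard
    DeliveryParamsOverrides:
      RedeliveryDelay: 1000
      TimeToLive: -1
      Priority: -1
      TimeToDeliver: -1

# Replaces the empty "uniformDistributedQueues: {}" declaration.
uniformDistributedQueues:
  - name: example_queue_a              # hypothetical queue name
    jndiName: example_queue_a_jndi     # hypothetical JNDI name
    jmsTemplate: customJmsTemplate
  - name: example_queue_b              # hypothetical queue name
    jndiName: example_queue_b_jndi     # hypothetical JNDI name
    jmsTemplate: customJmsTemplate
```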

Provisioning Cartridge User Accounts

This section describes how to use the sample scripts to create credential store secrets and provide the instance configuration so that OSM cloud native can access the credentials.

- Creating Credential Store Secret
- Declaring the Secret

Cartridge user accounts fall into two categories:

- Users "hosted" by this OSM instance: These are non-human user accounts that systems use to log in from outside OSM (via a user interface, the OSM XML API, or the OSM Web Service API) or that are logged in internally (as a Run As user for an automation plugin).

  These users:
  - Require mappings to OSM groups.
  - Use the osm map name, with _sysgen_ as the key name.
  - Must be added to the project specification "cartridgeUsers" list.

- Users "hosted" by external systems: The external systems could be UIM, ASAP, or another instance of OSM. Cartridge automation uses these users to authenticate itself to the external system when interacting with it.

  These users:
  - Require Kubernetes secret entries. OSM group mapping is not required.
  - Use the map name and key name that the cartridge developer has decided upon in the code.
  - Must be added to the project specification "externalCredStore.secrets.mapNames" list.

The same credentials can be required in both ways: as a non-human cartridge user and as an access credential for an "external" system. For example, an instance might host both SOM and TOM cartridges. The TOM cartridge may require a non-human user called "tom", while the SOM cartridge needs an access credential to send the TOM order. For flexibility, the instances that send the TOM order should not assume co-location and should fetch access credentials for the user "tom". When the same credentials must exist in both categories, duplicate that user ID, once for each category, using the syntax of that category. Keep the passwords in sync, because OSM cloud native treats these as independent entries.

Creating Credential Store Secret

Note:

If you use custom code that relies on the OPSS Keystore Service, you need to make changes for OSM cloud native, because that mechanism is no longer supported. For details, see "Differences Between OSM Cloud Native and OSM Traditional Deployments".

A text file is used to describe the details required to provision the user accounts properly. Each user is captured in one line and has the following format:

map_name:key_name:username:credential-system[:osm-groups]

$OSM_CNTK/samples/credentials/osm_users.txt serves as a template for user credentials that need to be created for both human and automation users.

Copy this file to your private specification repository under the instance-specific directory and rename it to something meaningful. For example, rename the file as repo/cartridge_user_text_file.txt.

The mapName parameter is mandatory, because this value is used to derive the name of the secret to be created (project-instance-osmcn-cred-mapName).

The choice of map name and key name affects which OSM automation framework API can be used to retrieve the value within the automation plugin:

- Use "osm" as the map name and _sysgen_ as the key name. The credential record is accessed with the context:getOsmCredentialPassword API.
- Any other map name and key name requires access with the context:getCredentialAsXML or context:getCredential APIs.

Refer to the OSM SDK for more details.

The credential-system parameter is a mandatory parameter and must be set to:

- secret: Creates the human user or automation user against the Kubernetes Secret.

The optional osm-groups parameter is a comma-separated list of the OSM groups with which the user is associated in the embedded LDAP server. The available groups are:

- OMS_client

- OMS_designer

- OMS_user_assigner

- OMS_workgroup_manager

- OMS_xml_api

- OMS_ws_api

- OMS_ws_diag

- OMS_log_manager

- OMS_cache_manager

- Cartridge_Management_WebService

- OSM_automation

- osmEntityClientGroup

- osmRestApiGroup

For example, consider the following entries:

osm:_sysgen_:osmlf:secret:OMS_xml_api,OSM_automation,OMS_ws_api
uim:uim:uim:secret
tom:osm:tomadmin:secret

- The first line creates a Kubernetes secret entry for the "osmlf" user in the "osm" credential secret. The entry contains a username, password, and the group associations. Use the context:getOsmCredentialPassword API to retrieve the password. Add "osmlf" to the project specification's "cartridgeUsers" list.
- The second line creates a Kubernetes secret entry for the user "uim" with the access key name "uim". The entry contains a username and a password. Use the context:getCredential API or the context:getCredentialAsXML API to retrieve both username and password from map "uim" with key "uim". Add "uim" to the project specification's "externalCredStore.secrets.mapNames" list.
- The third line creates a Kubernetes secret entry for the user "tomadmin" with the access key name "osm". The entry contains a username and a password. Use the context:getCredential API or the context:getCredentialAsXML API to retrieve both username and password from map "tom" with key "osm". Add "tom" to the project specification's "externalCredStore.secrets.mapNames" list.
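Taken together, the three example entries imply a project specification fragment like the following sketch. It combines the cartridgeUsers list and the externalCredStore declaration described in this section. The externalCredStore nesting follows the sample under "Declaring the Secret".

```yaml
# osm:_sysgen_:osmlf:... is a user hosted by this instance,
# so it goes in cartridgeUsers (an embedded LDAP account is created).
cartridgeUsers:
  - osmlf

# uim:uim:uim:... and tom:osm:tomadmin:... are external-system credentials.
# Only the map names are listed; the project-instance-osmcn-cred- prefix
# is derived when the instance is created.
externalCredStore:
  secrets:
    mapNames:
      - uim
      - tom
```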

The secrets that the manage-cartridge-credentials.sh script creates are named project-instance-osmcn-cred-mapName, as per the naming conventions required by OSM. The script creates one secret for each unique mapName that you provide. This means that if five user entries exist for "uim", all five entries are stored in a single secret named project-instance-osmcn-cred-uim. The script prompts for passwords interactively.

To create the credential store secret:

- Run the following script:

  $OSM_CNTK/samples/credentials/manage-cartridge-credentials.sh \
    -p project \
    -i instance \
    -c create \
    -f fileRepo/customSolution_users.txt

  # You will see the following output
  secret/project-instance-osmcn-cred-uim created

- Validate that the secrets are created:

  kubectl get secret -n project

  NAME
  project-instance-osmcn-cred-uim

Creating Cartridge User Accounts in Embedded LDAP

In the cartridgeUsers section in project.yaml, add all the cartridge users (only those with the map name osm). When the OSM server instance is created, an account is created in the embedded LDAP server for each listed cartridge user, with the same username, password, and groups as in the Kubernetes secret.

cartridgeUsers:
  - osm
  - osmoe
  - osmde
  - osmfallout
  - osmoelf
  - osmlfaop
  - osmlf
  - tomadmin

Declaring the Secret

After the secret is created, declare the secret used by the credential store mechanism by editing your project specification. In the project specification, specify only mapName. The prefix project-instance-osmcn-cred is derived during the instance creation.

#External Credentials Store
externalCredStore:
  secrets:
    mapNames:
      - mapName

The OSM cloud native configuration provides a start-up parameter that tells the OSM core application whether the credentials are held in a WebLogic Credential Store (for traditional deployments) or in a Kubernetes Secret Credential Store (for cloud native). This parameter is set for you. Cartridges that rely on accessing these credentials are then enabled for execution.

Working with Cartridges

This section describes how you build, deploy, and undeploy OSM cartridges in a cloud native environment.

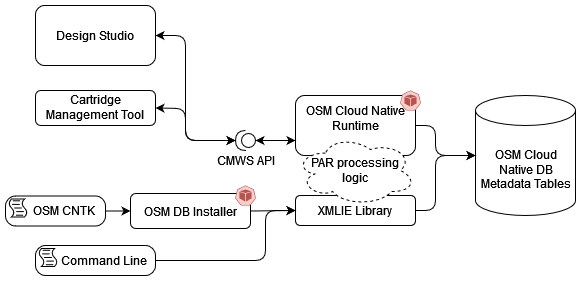

OSM cartridges are built using either Design Studio or build scripts, which are the methods used for building cartridges in traditional environments. There are multiple ways to deploy cartridges, but they all result in cartridge information extracted from the par files and stored in various OSM metadata tables in the OSM DB.

The following diagram illustrates cartridge deployment paths.

Cartridge Deployment Tool in OSM Cloud Native

To deploy cartridge par files, OSM cloud native uses the OSM cloud native toolkit's manage-cartridges.sh script, which accepts the following options:

- -p projectName: Mandatory. Name of the project.
- -i instanceName: Mandatory. Name of the instance.
- -s specPath: Mandatory. The location of the specification files. A colon (:) delimited list of directories.
- -m customExtPath: Use this to specify the path of custom extension files. Takes a colon (:) delimited list of directories. If the path is empty while the custom flag is set to true in the specifications, the script stops.
- -o: Enables online cartridge deployment.
- -c commandName: Mandatory. Use the following command names:
  - parDeploy: Use this to deploy a cartridge par file from your local file system. Use this for development environments only.
  - sync: Use this to synchronize cartridges using the project specification and remote file repository. Use this for all controlled environments.
  - list: Use this to report on the cartridges present in the instance.
- -f parPath: Mandatory if parDeploy is used. This specifies the path of the cartridge par file that you want to deploy.
- -q: Optional. Disables verbose progress indicators.

The manage-cartridges.sh script spins up a pod to perform the requested deployment activities.

Single or One-off Cartridge Deployment

To deploy a single cartridge par file, use the parDeploy command of the manage-cartridges.sh script along with the -f parPath parameter. The script must be run from a location where it has access to the specified cartridge par file, and with kubectl cp privileges on the pod that is spun up in the project namespace.

Specification-driven Cartridge Deployment

For more control and traceability in the OSM cartridge loadout, use the sync command to the manage-cartridges.sh script. You must first describe the desired list of cartridges in your project specification. The sync command performs the required deploy, redeploy and fast-undeploy changes to modify the in-database set of cartridges to match the list given in the specification. This command also sets the default cartridge as per the specification.

The list in the project specification must depict the desired or target state.

Note:

In the actions listed below, "cartridge" refers to "cartridge+version".

- If a cartridge is listed as deployed in the specification but is not deployed in the database, it is deployed.
- If a cartridge is listed as deployed in the specification and the same version exists in the database, the contents of the two are compared; if there is a difference, the new par file is redeployed.
- If a cartridge is listed in the specification with a default setting that does not match the setting in the database, the database setting is updated to match the specification; if they already match, no change is made.
- If a cartridge is listed as fastundeployed in the specification and it exists as active in the database, it is fast-undeployed in the database. If the cartridge is already fast-undeployed in the database, or does not exist in the database, nothing is done.

When the sync command is used, the OSM cloud native toolkit ignores the default flag encoded in the cartridge par file and instead enforces the list as specified in the project specification. For each cartridge, the sync validation ensures that exactly one version is tagged as default.

Each entry in the list of cartridges describes a specific cartridge using the name of the cartridge, its version, the intended deployment state, and the intended default state. In addition, it specifies a URL that can be used to download the cartridge par file into the cartridge management pod. Alternatively, it can specify a container image that carries the cartridge par file. The URL typically points to a remote file repository that may require authentication or other parameters. The cartridge entry's param fields can be used to provide parameters (in the form of "curl" command-line parameters) as well as a secret that carries the username and password information.

Refer to the "Cartridge par Sources" section for details on the different options possible.

cartridges:
  - name: name of the cartridge - Mandatory (must match the cartridge name in the par file).
    url: URL of the location from which to download the cartridge par file - Provide either the URL or the image details.
    secret: Kubernetes secret in the project namespace - Optional. Required only if the remote URL server requires authentication.
    image: image built with the par file - Provide either the image or the URL, but not both.
    imagePullSecret: The secret required to pull the image built for cartridge deployment via image.
    params: Command-line parameters passed to curl - Optional. You can provide additional parameters, such as proxy settings, for curl.
    version: cartridge version, for example 1.0.0.0.0 - Mandatory. The cartridge version must match the cartridge version in the par file.
    default: true|false - Mandatory. Specify whether this cartridge is the default cartridge.
    deploymentState: deployed|fastundeployed - Mandatory. Indicate the desired target state of the cartridge.
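The following sketch shows a filled-in cartridges list for the sync command. It keeps version 1.7.0.1.0 of the SimpleRabbits sample cartridge deployed as the default and retires an older version. The repository URLs, the secret name, and the older version 1.7.0.0.0 are hypothetical. Each version must match the one encoded in its par file.

```yaml
cartridges:
  - name: SimpleRabbits
    version: 1.7.0.1.0
    default: true                  # exactly one version per cartridge is the default
    deploymentState: deployed
    # Hypothetical repository URL and secret name.
    url: https://repo.example.com/cartridges/SimpleRabbits-1.7.0.1.0.par
    secret: cartridge-repo-credentials
  - name: SimpleRabbits
    version: 1.7.0.0.0             # hypothetical older version
    default: false
    deploymentState: fastundeployed
    url: https://repo.example.com/cartridges/SimpleRabbits-1.7.0.0.0.par
    secret: cartridge-repo-credentials
```

Following the sync rules above, this specification would deploy 1.7.0.1.0 (or redeploy it if its contents changed), make it the default, and fast-undeploy 1.7.0.0.0 if it is active in the database.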

Use the manage-cartridges.sh script from the CNTK with the command option -c parDeploy.

Offline Cartridge Deployment Using the OSM Cloud Native Toolkit

This deployment mode supports deployment of new cartridges, deployment of new versions of existing cartridges, and redeployment of existing cartridge versions with changes.

For offline cartridge deployment, all managed servers in your environment must be shut down; the script stops running if any managed server is up. To shut down the managed servers, set the cluster size to 0 in the instance specification and upgrade the instance:

vi spec_Path/project-instance.yaml
# Change the cluster size to 0
#cluster size
clusterSize: 0

$OSM_CNTK/scripts/upgrade-instance.sh -p project -i instance -s spec_Path

Run the manage-cartridges.sh script with the desired command – parDeploy or sync.

Note:

cartridgeManagement.resources (from the shape specification) is used by the pod while deploying the cartridges.

Additionally, when you are using the sync command and the cartridges are listed in the project specification using container images, cartridgeInitContainer.resources is used to pull the cartridge images.

Edit the instance spec to restore the original number for the cluster size and run the same upgrade-instance.sh command as before to bring up all the managed servers.

Online Cartridge Deployment Using the OSM Cloud Native Toolkit

This deployment mode only supports deployment of new cartridges and deployment of new versions of existing cartridges.

Deploying cartridges in an OSM cloud native environment provides the following key benefits:

- You can deploy the cartridges without needing to isolate OSM from order processing at the JMS/HTTP level.

- You can describe the cartridges for an environment in a declarative fashion.

In online mode, you can deploy cartridges to a running OSM cloud native instance with zero downtime. During the deployment process, OSM cloud native remains reachable by external HTTP clients and JMS/SAF endpoints, so OSM continues to accept orders and to work on existing orders.

You use the manage-cartridges.sh script with the -o option to enable online deployment of cartridges. After deploying the cartridges, the script performs a rolling restart of all the managed servers in your environment. This restart loads the new cartridge+version into memory; when all managed servers have loaded the changes, OSM switches to using the new cartridge+version in sync across all managed servers.

Note:

When deploying cartridges in online mode, the running instance of OSM must continue to run, and the cluster size must be at least 2.

Run the manage-cartridges.sh command with the additional -o command-line option, with either parDeploy or sync, to deploy cartridges in online mode. Note the following behavior:

- If no managed servers are running, a warning is shown that no managed server is up and running and that the deployment mode is switching to offline deployment. The script continues with offline deployment.

- If only one managed server is running, then the script fails to perform the deployment.

Deploying Cartridges Using Design Studio

You can deploy cartridges directly from Design Studio using the Eclipse user interface or headless Design Studio. However, use Design Studio deployment only in scenarios where there is a lot of churn in the build, deploy, and test cycle, and not for production environments. If Design Studio deployment is used in conjunction with the OSM cloud native cartridge management mechanism, the deployed cartridges become out of sync with what is listed in the source-controlled specification file. For this reason, deploying cartridges using Design Studio is not recommended for environments where the specification file is considered the single source of truth for the set of deployed cartridges.

To incorporate Design Studio into the larger OSM cloud native ecosystem, you must have already mapped the hostname to the Kubernetes cluster or the load balancer as described in "Planning and Validating Your Cloud Native Environment".

- Ensure that the connection URL of the Design Studio environment project matches your OSM cloud native environment. This is likely:

  http://instance.project.osm.org:30305/cartridge/wsapi

  The suffix osm.org is configurable.

- In the Design Studio workspace, depending on your network setup, you may need to set the Proxy bypass field in the Network Connection Preferences to:

  instance.project.osm.org

Listing Deployed Cartridges Using the OSM Cloud Native Toolkit

This command provides a report on all the cartridges present in the OSM cloud native instance. The report contains the cartridge name, cartridge version, cartridge ID, whether it is the default version or not, number of orders still open for it and number of orders completed by it.

$OSM_CNTK/scripts/manage-cartridges.sh -p project -i instance -s spec_Path -c list

Cartridge par Sources

This topic outlines the sources from which you can deploy cartridge par files. For OSM, cartridge par files can be deployed from the following sources:

- Local Files

- Remote File Repository

- Container Images

Local Files

Use this method only for development environments. You can use the parDeploy command of the manage-cartridges.sh script to deploy a cartridge par file from your local file system.

Remote File Repository

A remote file repository is preferable to local files. You can choose between this option and container images as per your convenience. Use this method with the sync command of the manage-cartridges.sh script.

There are two approaches to using a remote file repository: secured and unsecured. The unsecured approach may be suitable for test environments. You can also disable host verification; however, this is not recommended because it can be a security risk.

Details of the cartridge, its version and the remote repository must be specified in the project specification as entries in the “cartridges” list. See the comments against this element in the sample specification file for full details:

cartridges:
  - name: cartridge-name
    version: cartridge-version
    default: true-or-false
    deploymentState: deployed-or-fastundeployed
    url: url-to-cartridge-par-file-in-remote-repository
    secret: name-of-kubernetes-secret-holding-login-credentials-if-secured-remote-repository
    params: any-parameters-that-must-be-passed-to-curl-to-connect-to-above-url

The secret field is only required if the remote repository is secured. The Kubernetes secret must be in the same project namespace and must contain two fields, username and password, with the appropriate values.

The params field is optional and depends solely on the networking and nature of the remote repository.
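For a secured repository, the login secret can be declared with a manifest like the following sketch. It uses the standard Kubernetes Secret format and the two required fields, username and password. The secret name is hypothetical, and project stands for your project namespace.

```yaml
# Credentials for a secured remote cartridge repository.
# Must be created in the project namespace and carry the
# username and password fields.
apiVersion: v1
kind: Secret
metadata:
  name: cartridge-repo-credentials   # hypothetical name
  namespace: project                 # your project namespace
type: Opaque
stringData:
  username: <repository-user>
  password: <repository-password>
```

After applying the manifest with kubectl apply -f, reference it from each cartridges entry, for example secret: cartridge-repo-credentials.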

Container Images

Having a repository for cartridges is not always feasible or sustainable. However, there is one kind of repository that is already a mandatory requirement for OSM cloud native (and in general, for any work on Kubernetes). It is the container image repository. Allowing OSM to pull cartridges as images from such an image repository allows for reuse of existing infrastructure and security.

Use this method with the sync command of the manage-cartridges.sh script. Details of the cartridge, its version, and the image location must be specified in the project specification as entries in the cartridges list (see the comments against this element in the sample specification file for full details):

cartridges:
  - name: cartridge-name
    version: cartridge-version
    default: true-or-false
    deploymentState: deployed-or-fastundeployed
    image: image-name-and-tag
    imagepullsecret: credentials-for-image-repository

The imagepullsecret field is only required if the image repository demands authenticated access. It is the standard Kubernetes image pull secret.

Building Cartridge Images

OSM Cloud Native Image Builder provides a sample utility to create a container image for a cartridge par file for use in deployment activities. See osm-image-builder/samples/cartridgeAsImage.

#Script that builds container image using the par that is provided in -f parameter

./buildCartridgeImage.sh -n <Cartridge_Name> -v <Cartridge_Version> -f /path/to/par/file

Example:

./buildCartridgeImage.sh -n SimpleRabbits -v 1.7.0.1.0 -f /home/user/Cartridge/SimpleRabbits.par

This creates an image with the tag name <Cartridge_Name>:<Cartridge_Version> in lowercase. For the example above, the tag would be simplerabbits:1.7.0.1.0.

Note:

Cartridge_Name and Cartridge_Version must match what is encoded within the par file.
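To deploy the image built in the example above, you would first tag and push simplerabbits:1.7.0.1.0 to a registry that your cluster can reach, using your usual container tooling. You would then reference it in the cartridges list, as in the following sketch. The registry host and path are hypothetical. If the registry requires authentication, also set the image pull secret field described above.

```yaml
cartridges:
  - name: SimpleRabbits            # must match the name encoded in the par file
    version: 1.7.0.1.0             # must match the version encoded in the par file
    default: true
    deploymentState: deployed
    # Image produced by buildCartridgeImage.sh and pushed to a registry;
    # the registry host and path are hypothetical.
    image: registry.example.com/osm/simplerabbits:1.7.0.1.0
```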

Selecting Deployment Style and Cartridge Source

This topic outlines the deployment styles and methods you can use to deploy cartridge par files. Environments in which cartridges need to be managed fall into two categories:

- Open Environments

- Controlled Environments

Deploying Cartridges in Open Environments

In open environments, you can use any of the following approaches:

- Design Studio deploy (during active cartridge development activity).
- Online or offline parDeploy using a local file.
- Online or offline sync deploy using a remote file repository or container images.

Deploying Cartridges in Controlled Environments

To install cartridges in controlled environments such as UAT, pre-production, and production, use only the declarative approach. Such environments require careful control of content as well as strong auditing of changes. Using the sync command with an online or offline deployment through manage-cartridges.sh fits well into pipelines and ensures strong validation and traceability. Choose remote file repositories or container image repositories to serve the cartridge par files in a secure, versioned, and auditable way. Use Design Studio's headless build to automate building the par file from source before putting it into the desired secured location.