20 Publishing Data

Learn about publishing data and reports in Oracle Communications Solution Test Automation Platform (STAP).

Publishing Data Using the Command-Line Interface

You can use the STAP utility to publish actions, environments, and scenarios.

Publishing data uploads your scenarios, actions, or environments to the cloud so they can be executed as jobs. This allows you to run tests remotely, share results, and use cloud resources instead of your local machine.
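If you script the publish step, for example from a build job, the command line can be assembled programmatically. The following is a minimal sketch, assuming the `./stap --publish -<component> workspace=<path>` syntax used in this section. The function name `build_publish_command` and the `stap` parameter are illustrative and are not part of STAP.

```python
import shlex

# Component types that this section says can be published.
COMPONENTS = ("action", "scenario", "environment")


def build_publish_command(component, workspace, stap="./stap"):
    """Return the argument list for publishing one component type.

    The helper only assembles the command; it does not run it.
    """
    if component not in COMPONENTS:
        raise ValueError(f"unsupported component: {component!r}")
    return [stap, "--publish", f"-{component}", f"workspace={workspace}"]


if __name__ == "__main__":
    # Print one command per component type for a sample workspace.
    for component in COMPONENTS:
        print(shlex.join(build_publish_command(component, "sampleWorkSpace")))
```

Passing the returned list to `subprocess.run(..., check=True)` would run the command and raise an error on a non-zero exit code. Whether the STAP utility reports a failed publish through its exit code is not stated here, so treat that as something to verify.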

Note:

To publish components, you must add the TDS environment details in your environment configurations.

./stap --publish -action "workspace=path"

./stap --publish -scenario "workspace=path"

./stap --publish -environment "workspace=path"

For example:

./stap --publish -environment workspace=sampleWorkSpace

=============================================================

STAP Automation Platform CLI

Version : version

=============================================================

WARNING: Runtime environment or build system does not support multi-release JARs. This will impact location-based features.

Workspace Location : /home/opc/STAP/sampleWorkSpace

=========================================================================================

CONFIGURING ENVIRONMENT PUBLISH UTILITY

=========================================================================================

Loading REST environment

Loading configuration /home/opc/STAP/sampleWorkSpace/config/config.properties

Loading environment connections from /home/opc/STAP/sampleWorkSpace/config/environments

=========================================================================================

PUBLISH ENVIRONMENT

=========================================================================================

adding basic...bXVqaWJ1ci5zaGFpa0BvcmFjbGUuY29tOndlbGNvbWUx

End Point=http://123.456.7.890:12345/environment/complete

{"name":"Publish env test","description":"STAP environment","build":"1.0","release":"3.0 Productize","connection":[]}

Path : http://123.456.7.890:12345/environment/complete

Target : http://123.456.7.890:12345/environment/complete

=========================================================================================

PUBLISH RESULT

=========================================================================================

Status : SUCCESS

Response:

{"_id":1}

=========================================================================================

Generating Automation Reports

Learn about generating automation reports in a PDF format or using a web server in an HTML format.

Publishing PDF Reports

You can use the PDF Generator Adapter in the STAP to generate PDF reports. The PDF Generator Adapter is a configurable module in the STAP Design Experience that generates PDF reports from structured data and HTML templates. It supports summary and evidence report formats and can create single or multiple documents.

- Summary Report: Provides an overview of key results. It includes high-level information about each scenario with its duration and status, along with an overall summary chart. For more information, see "Summary Report".

- Evidence Report: Provides a detailed report of each scenario run, including information about each case within the scenario, with request and response data. For more information, see "Evidence Report".

These reports can either have a single file or multiple files based on their configuration.

Setting Up The PDF Adapter In Your Workspace

Before configuring the method of generating PDF reports, ensure the PDF Generator Adapter is set up correctly in your workspace directory. The summaryPDFGenerator folder is shipped with the STAP DE package, under the adapters folder in the config folder. It contains the following:

- .properties file. You can generate PDF reports using the STAP DE, the command-line interface, or the TES microservice. Each method requires a different properties file:

- pdfGenerator.config.properties: The configuration file for generating reports using the STAP DE.

- pdf-adapter.properties: The configuration file for generating reports using the command-line interface.

- pdfGenerator.config.properties: The configuration file for generating reports using the TES microservice.

The property pdf.generate within the .properties file determines the reports to generate: evidence or summary. To customize this, create a comma-separated list of the reports you want to generate:

pdf.generate=evidenceReport,summaryReport

- Config Folder: Contains a sub-folder titled Configs, which contains configuration files specific to each PDF report generated in JSON format:

- evidenceReport.pdf.config.json: The JSON input file that provides structured data for the evidence report.

- summaryReport.pdf.config.json: The JSON input file that provides structured data for the summary report.

- Templates Folder: Contains the template files for the summary report, titled summaryReport.template, and the evidence report, titled evidenceReport.template. These can be in either HTML or FTL format. By default, they are in HTML format.

- Output Folder: The generated evidence and summary reports in PDF format.

- Resources Folder: Contains the static components of the PDF report: the company or project logo in PNG format, and the report font in TTF format. By default, the Resources folder ships with Oracle's logo and a default font. You can change the logo and font by replacing the PNG and TTF files with your own files in the same formats.
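To illustrate how the comma-separated pdf.generate value maps to a list of reports, here is a minimal sketch that parses a properties file and extracts the report names. The helper names, the tolerance for surrounding whitespace, and the rejection of unknown names are assumptions made for illustration; the adapter's own parsing rules are not described here.

```python
# Report names documented for the pdf.generate property.
KNOWN_REPORTS = {"evidenceReport", "summaryReport"}


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # or ! comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def reports_to_generate(props):
    """Split pdf.generate on commas and reject names that are not documented."""
    raw = props.get("pdf.generate", "")
    names = [name.strip() for name in raw.split(",") if name.strip()]
    unknown = [name for name in names if name not in KNOWN_REPORTS]
    if unknown:
        raise ValueError(f"unknown report type(s): {unknown}")
    return names


if __name__ == "__main__":
    sample = "pdf.generate=evidenceReport,summaryReport\n"
    print(reports_to_generate(parse_properties(sample)))
```

Setting pdf.generate=summaryReport in the same file would yield a single-element list, which corresponds to generating only the summary report.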

- Configuration Properties file (dependent on the report generation method)
- Config folder
  - Configs Folder
    - evidenceReport.pdf.config.json
    - summaryReport.pdf.config.json
- Templates folder
  - summaryReport.template.html
  - evidenceReport.template.html
- Output folder
  - EvidenceReport.pdf
  - SummaryReport.pdf
- Resources folder
  - logo.png
  - font.ttf
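Before generating reports, it can help to confirm that the adapter folder contains the files listed above. The sketch below is one way to do that. The on-disk folder names used in EXPECTED_FILES (config/configs, templates, resources) are assumptions about spelling and case, so adjust them to match your workspace. The Output folder is not checked because its contents are generated.

```python
from pathlib import Path

# Paths relative to the adapter folder. The folder spellings are assumed;
# only the file names come from the folder listing.
EXPECTED_FILES = (
    "config/configs/evidenceReport.pdf.config.json",
    "config/configs/summaryReport.pdf.config.json",
    "templates/summaryReport.template.html",
    "templates/evidenceReport.template.html",
    "resources/logo.png",
    "resources/font.ttf",
)


def missing_adapter_files(adapter_dir, expected=EXPECTED_FILES):
    """Return the expected relative paths that are not files under adapter_dir."""
    root = Path(adapter_dir)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    # Hypothetical location; replace with your own adapter folder.
    for rel in missing_adapter_files("config/adapters/summaryPDFGenerator"):
        print(f"missing: {rel}")
```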

- To publish PDF reports using the STAP DE, see "Generating PDF Reports With The STAP DE".

- To publish PDF reports using the command-line interface, see "Generating PDF Reports With The Command-Line Interface".

- To publish PDF reports using the TES microservice, see "Generating PDF Reports With The TES Microservice".

Generating PDF Reports With The STAP DE

- Start WireMock:

  sh myWorkSpace/WireMock/startWireMock.sh

- Run the PDF Generator JAR file:

  sh run.sh

  This lets the PDF Generator Adapter read the files within the adapter's folder, alongside the scenario execution result JSON file generated in the data folder when the scenario is run.

- PDF reports of the scenarios run are generated in the Output folder.

Generating PDF Reports With The Command-Line Interface

- Start WireMock:

  sh myWorkSpace/WireMock/startWireMock.sh

- Run the PDF Generator JAR file:

  sh pdfGenerate.sh myWorkspace jsonResultDirectory

  Where:

  - pdfGenerate.sh runs the PDF Generator Adapter JAR file

  - myWorkspace is your workspace directory

  - jsonResultDirectory is the path to the scenario's results JSON file in its Data folder

  This lets the PDF Generator Adapter read the files within the adapter's folder, alongside the scenario execution result JSON file generated in the data folder when the scenario is run.

- PDF reports of the scenarios run are generated in the Output folder.
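The command-line step above can also be wrapped in a small script, for instance to generate reports after every test run. The following is a sketch under stated assumptions: the function names are hypothetical, and the check that the results path exists is an added safeguard rather than documented behavior of pdfGenerate.sh.

```python
import subprocess
from pathlib import Path


def build_pdf_command(workspace, json_result_dir, script="pdfGenerate.sh"):
    """Return the argument list for: sh pdfGenerate.sh <workspace> <results path>."""
    return ["sh", script, str(workspace), str(json_result_dir)]


def generate_pdf_reports(workspace, json_result_dir, script="pdfGenerate.sh"):
    """Run the generator script and raise if the results path is missing
    or the script exits with a non-zero status."""
    if not Path(json_result_dir).exists():
        raise FileNotFoundError(f"results path not found: {json_result_dir}")
    subprocess.run(build_pdf_command(workspace, json_result_dir, script), check=True)


if __name__ == "__main__":
    print(" ".join(build_pdf_command("myWorkspace", "jsonResultDirectory")))
```

Separating command construction from execution keeps the command easy to log or inspect before anything runs.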

Generating PDF Reports With The TES Microservice

When generating PDF reports using the TES microservice, you do not need to perform any additional steps.

${TES_HOME}/config/adapters/adapters.config.json

By default, PDF reports for all jobs run are saved in the Output folder.

Viewing PDF Reports

Summary and Evidence reports are pre-structured. For more information about the components of Summary Report, see "Summary Report". For more information about the components of Evidence Report, see "Evidence Report".

Summary Report

The Summary Report contains these components:

- Cover Page: The first page of the summary report. For more information, see "Cover Page".

- Summary:

  - Summary Table: A table summarizing metrics of all scenarios run. For more information, see "Summary Table".

  - Summary Chart: A visual representation of the scenarios run. For more information, see "Summary Chart".

- Test Scenarios: Report of each test scenario run. For more information, see "Test Scenarios".

Cover Page

Table 20-1 shows the fields of the cover page of the summary report.

Table 20-1 Cover Page Fields

| Field | Description |
|---|---|
| Company Title | The title of the company or project. By default, it is set to Oracle. |
| Report Type | The type of report. By default, it is set to STAP Automation Report. |
| Author | The author of the report. |
| Creation Date | The date the report was created. |
| Last Updated | The date the report was last updated. |
| Version | The version of the report. |
| Approvals | Names of approvers for the report. You can add approvers in the configuration JSON file. If there are none, the rows are blank. |

Summary

Contains the Summary Table and Summary Chart.

Table 20-2 shows the fields of the summary table in the summary report.

Table 20-2 Summary Table

| Field | Description |
|---|---|
| Name | The name of the scenario. |
| Status | The status of the scenario. |
| Pass | Number of scenarios passed. |
| Fail | Number of scenarios failed. |
| Error | Number of scenarios containing errors. |
| Skip | Number of scenarios skipped. |
| Start Time | The date and time that the test was started. |
| End Time | The date and time that the test was completed. |
| Duration | The amount of time the test took to run, in milliseconds. |

Summary Chart

Shows a visual representation of the number of scenarios passed and failed in a pie chart format.

Test Scenarios

Table 20-3 shows the fields of the Test Scenarios table.

Table 20-3 Test Scenarios

| Field | Description |
|---|---|
| Name | Name of the scenario. |
| Description | Description of the scenario. |
| Duration | The amount of time the scenario took to run, in seconds and milliseconds. |
| Status | The status of the scenario: passed or failed. |
| Tags | Any tags set for the scenario. |
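The summary table reports durations in milliseconds, while the Test Scenarios table reports them in seconds and milliseconds. The conversion between the two is a single division with remainder, sketched below. The output string format is an assumption for illustration; the exact layout used in the generated report may differ.

```python
def split_duration(total_ms):
    """Split a duration in milliseconds into whole seconds and leftover milliseconds."""
    if total_ms < 0:
        raise ValueError("duration must not be negative")
    return divmod(total_ms, 1000)


def format_duration(total_ms):
    """Render a millisecond duration as '<seconds> s <milliseconds> ms'."""
    seconds, millis = split_duration(total_ms)
    return f"{seconds} s {millis} ms"


if __name__ == "__main__":
    print(format_duration(2345))
```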

Evidence Report

The Evidence Report contains these components:

- Cover Page: The first page of the evidence report. For more information, see "Cover Page".

- Scenario Summary: Summarizes metrics of all scenarios run. For more information, see "Scenario Summary".

- Test Case: Details of each test case run. For more information, see "Test Case".

- Test Case Summary: Summarizes metrics of all test cases run. For more information, see "Test Case Summary".

Cover Page

Table 20-4 shows the components of the cover page of the evidence report.

Table 20-4 Cover Page Components

| Component | Description |
|---|---|
| Company Title | The title of the company or project. By default, it is set to Oracle. |
| Report Type | The type of report. By default, it is set to Evidence Report. |
| Author | The author of the report. |
| Creation Date | The date the report was created. |
| Last Updated | The date the report was last updated. |
| Version | The version of the report. |
| Approvals | Names of approvers for the report. You can add approvers in the configuration JSON file. If there are none, the rows are blank. |

Scenario Summary

Provides an overall summary of the scenario run. Table 20-5 shows the components of the scenario summary.

Table 20-5 Scenario Summary

| Component | Description |
|---|---|
| Status | The status of the scenario. |
| Description | The description of the scenario. |
| Tags | Any tags set for the scenario. |
| Start Time | The date and time that the test was started. |
| End Time | The date and time that the test was completed. |
| Duration | The amount of time the test took to run, in milliseconds. |

Test Case

This section details the runtime results of each test case within the scenario, and of each step run. Table 20-6 describes the components under Test Case.

Table 20-6 Test Case

| Field | Description |
|---|---|
| Test Case | The title of the test case. |
| Step | The title of the step. |
| Action Name | The title of the action. |
| Action Type | The type of action. For example, REST. |
| Data | The data within the step. This includes its name, description, and ID. |
| Save | The data to save. This includes its name, description, and ID. |
| Validate | The data to validate. This includes its status. |

Test Case Summary

Displays the list of cases, along with request and response payloads for the steps within each case. Table 20-7 shows the fields in Test Case Summary.

Table 20-7 Test Case Summary

| Field | Description |
|---|---|
| Case ID | The ID of the case. |
| Name | The name of the case. |
| Status | The status of the case: passed or failed. |
| Start Time | The date and time that the case was started. |
| End Time | The date and time that the case was completed. |
| Duration | The amount of time the case took to run, in milliseconds. |
| Step Name | The name of the step. |
| Start Time | The date and time that the step was started. |
| End Time | The date and time that the step was completed. |
| Duration | The amount of time the step took to run, in milliseconds. |
| Status | The status of the step: passed or failed. |
| Request | The request payload for the step. |
| Response | The response payload for the step. |

Publishing Reports Using Third-Party Web Servers

You can publish user-interactive reports of the scenarios run using third-party web servers.

- To publish reports using Tomcat, see "Configuring Tomcat to View Automation Reports".

- To publish reports using NGINX, see "Viewing Automation Reports Using NGINX".

- To publish reports using the Apache HTTP server, see "Viewing Automation Reports Using Apache HTTP Server".

Configuring Tomcat to View Automation Reports

- Install Tomcat. For more information, see the Tomcat website.

  Verify that your Tomcat server is running by opening the following URL in a browser:

  https://<tomcat-host>:<tomcat-server-port>

- In the Workspace_home/config/config.properties file, edit the following lines to set up the location for the published reports:

  results.home=${WORKSPACE}/results/reports
  results.publish=YES
  results.publish.file=${WORKSPACE}/results/results.js

- Edit the Tomcat server configuration file Tomcat_Home/conf/server.xml:

  - Edit these lines to configure the STAP DE automation execution reports:

    <Connector port="8080" protocol="HTTP/1.1" connectionTimeout="20000" redirectPort="8443" maxParameterCount="1000" />
    <Connector port="8099" protocol="HTTP/1.1" redirectPort="8443" />

  - Edit the Context element inside the Host element to configure the path for the automation reports:

    <Context docBase="${STAP_HOME}/sampleWorkSpace/results/" path="/stap-reports" />

    This creates an endpoint titled /stap-reports, which serves the automation reports.

- Restart the Tomcat server.

- Open the reports at the following URL:

  https://TomcatHost:TomcatPort/stap-reports

  To access individual automation execution results, click the link for the job.

Viewing Automation Reports Using NGINX

- Install NGINX. For more information, see the NGINX website.

- As an administrator, open a command prompt on your system and start the NGINX server.

- In the Workspace_home/config/config.properties file, edit the following lines to set up the location for the published reports:

  results.home=${WORKSPACE}/results/reports
  results.publish=YES
  results.publish.file=${WORKSPACE}/results/results.js

- Configure the path for the automation reports by editing the following lines in the nginx.conf file:

  server {
      listen 80;
      server_name localhost;
      root ${STAP_HOME}/sampleWorkSpace/results;
      index index.html index.htm;
      location / {
          autoindex on;
          try_files $uri $uri/ /index.html;
      }
  }

- Restart the NGINX server.

- Open the reports at the following URL:

  https://NGINXhost:NGINXport/

  To access individual automation execution results, click the link for the job.

Viewing Automation Reports Using Apache HTTP Server

To publish automation reports using the Apache HTTP server, follow these steps:

- Install and configure the Apache HTTP server. For more information, see the Apache website.

- Start the Apache HTTP server. Verify the installation by opening the server's host and port in a browser.

- In the Workspace_home/config/config.properties file, edit the following lines to set up the location for the published reports:

  results.home=${WORKSPACE}/results/reports
  results.publish=YES
  results.publish.file=${WORKSPACE}/results/results.js

- Configure the path for the automation reports by editing the following lines in the Apache HTTP server's httpd.conf file:

  DocumentRoot "${STAP_HOME}/sampleWorkSpace/results/"
  <Directory "${STAP_HOME}/sampleWorkSpace/results/">

- Restart the Apache HTTP server.

- Open the reports at the following URL:

  https://ApacheHost:ApachePort/

  To access individual automation execution results, click the link for the job.
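The Tomcat, NGINX, and Apache HTTP server procedures all set the same three properties in config.properties. If you maintain several workspaces, the edit can be scripted. The sketch below rewrites the text of a properties file so that the three keys have the documented values, replacing existing entries and appending missing ones. The function name and the choice to leave commented-out lines untouched are assumptions for illustration.

```python
# Property values documented in the web server procedures above.
REPORT_SETTINGS = {
    "results.home": "${WORKSPACE}/results/reports",
    "results.publish": "YES",
    "results.publish.file": "${WORKSPACE}/results/results.js",
}


def apply_settings(text, settings=REPORT_SETTINGS):
    """Return properties text with each key set to its value.

    Existing key=value lines are replaced in place, commented lines are
    kept as they are, and keys that are not present are appended.
    """
    remaining = dict(settings)
    out = []
    for line in text.splitlines():
        key = line.split("=", 1)[0].strip()
        is_comment = line.lstrip().startswith("#")
        if "=" in line and not is_comment and key in remaining:
            out.append(f"{key}={remaining.pop(key)}")
        else:
            out.append(line)
    out.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    print(apply_settings("results.publish=NO\n"), end="")
```

Because the function works on text rather than on a file, you can review the result before writing it back to Workspace_home/config/config.properties.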

Viewing HTML Reports

After configuring HTML reports using a web server, you can access them in a user-interactive format.

When you launch a report, it opens an index page titled STAP Execution Results, which lists the total number of jobs run. Use the search bar to search for a particular job. To search for a job using its execution ID, select ID. To search for a job using its name, select Name.

Each job row has these fields:

Table 20-8 Web Server Report Index Page

| Field | Description |
|---|---|
| Job Execution ID | The ID of the job run. |
| Name | The job's name. |
| Total | Number of scenarios in the job. |
| Passed | Number of scenarios passed. |
| Failed | Number of scenarios failed. |
| Error | Number of scenarios containing errors. |
| Skipped | Number of scenarios skipped. |
| Duration | The amount of time the job took to run, in seconds and milliseconds. |
| Start | The date and time that the test was started. |
| End | The date and time that the test was completed. |
| Result | The result of the scenario: passed or failed. |

To view more details about a job run, click its row. The STAP Automation Report opens and shows the following:

- Total number of scenarios in the job, including the number of scenarios passed, failed, skipped, or those containing errors. Additionally, it shows the total percentage of scenarios passed, and the amount of time taken to run the job.

- The total number of scenarios in the job, including the number of scenarios passed, failed, skipped, or those containing errors in a pie chart format.

- A failure analysis pie chart that presents the number of scenarios failed, and those with errors.

- The amount of time taken to run each scenario in a horizontal graph.
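As a worked example of the pass percentage shown in the report, the sketch below computes it from the four scenario counts. It assumes the percentage is the number of passed scenarios divided by the total of passed, failed, error, and skipped scenarios. The report's actual formula and rounding are not documented here, so treat both as assumptions.

```python
def pass_percentage(passed, failed, error, skipped):
    """Return passed scenarios as a percentage of all scenarios, to two decimals.

    Assumes the total includes failed, error, and skipped scenarios.
    """
    total = passed + failed + error + skipped
    if total == 0:
        return 0.0
    return round(100.0 * passed / total, 2)


if __name__ == "__main__":
    # Three of four scenarios passed.
    print(pass_percentage(passed=3, failed=1, error=0, skipped=0))
```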

To return to the index page, click Home on the top right corner.

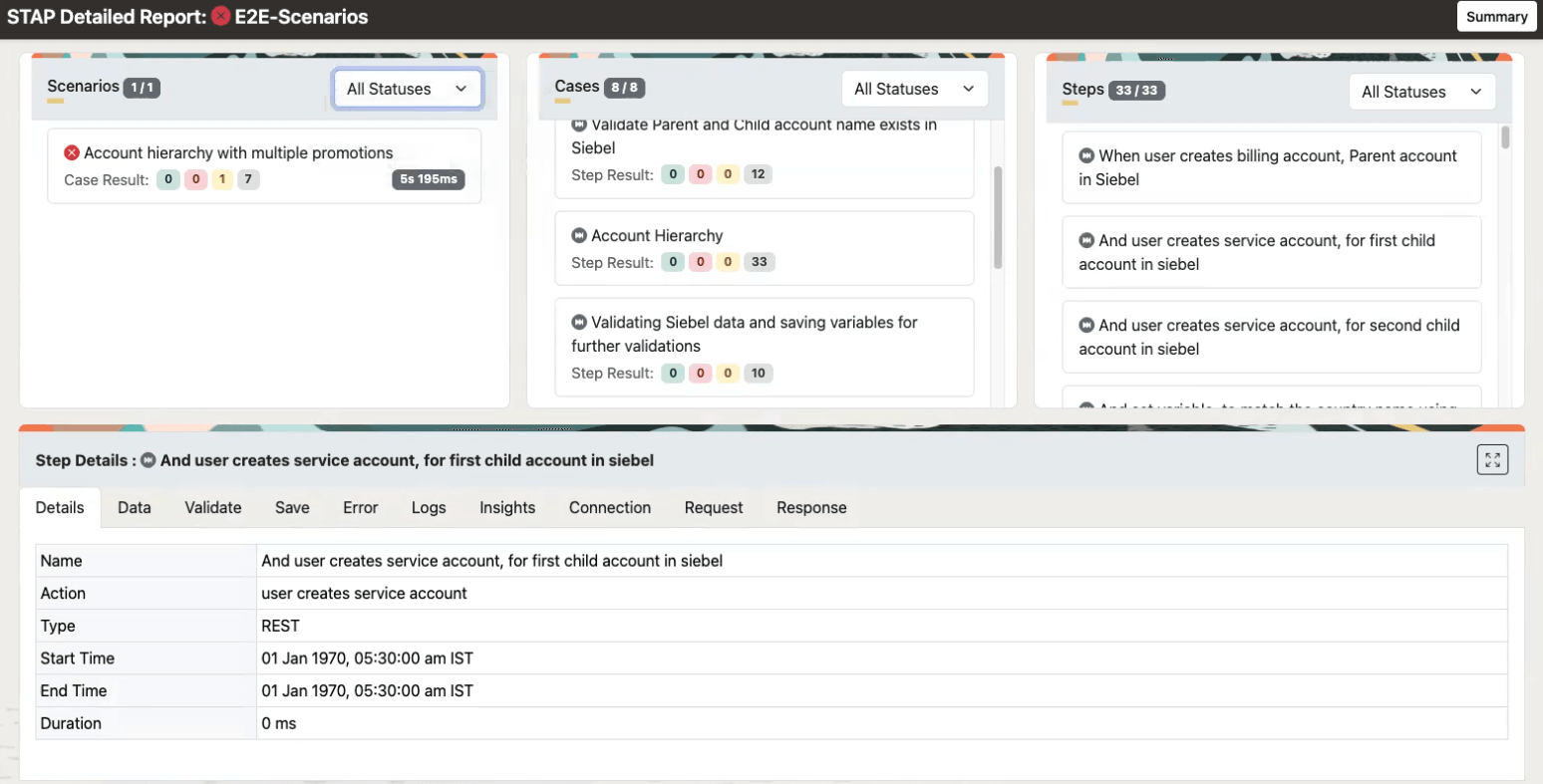

To view the detailed report of each scenario, click on the scenario name in the list under Scenario Summary Report. Upon clicking on a scenario report, you can view detailed metrics of the scenario, each case within the scenario, and each step within the case.

Table 20-9 Color Coded Summary

| Color | Description |
|---|---|
| Green | The number of steps passed. |
| Red | The number of steps failed. |
| Yellow | The number of steps containing errors. |
| Grey | The number of steps skipped. |

By default, the report displays data for all statuses: passed, failed, skipped, and errors. To filter a case or step using its status, select the status that you want to view under the drop-down menu titled Statuses.

Table 20-10 Detailed Case Report

| Field | Description |
|---|---|
| Details | The step's details: its name, action name, type, start time, end time, and duration. |
| Data | Displays data configured in the step's BDD. If no data is configured, this section is blank. |
| Validate | Displays validations created under the step in BDD. |
| Save | Displays saved variables and values present in the step. |
| Error | Details of an error that occurred when the action was performed. If there is no error, the column is blank. |
| Logs | Displays a detailed report of each action performed. |
| Insights | Displays screenshots after each step of UI automation. |
| Connection | Provides details about the endpoint server and its credentials. |
| Request | The request body of the action. |
| Response | The response body of the action. |

To go back to the STAP Automation Report, click on Summary on the top-right corner.