MDM Extract Parameters and Bucket Configuration

- BI Oriented Master Configuration

- BI Oriented Extendable Lookup Configuration

- Service Point Configuration

- BI Aggregators

- Setting up the Dynamic Group Extracts

- Setting up Snapshot Fact Extracts

Note:

You must complete these setup and configuration steps before starting the ELT processes to load data into the Oracle Utilities Analytics Warehouse (OUAW).

- Meter Data Analytics Configuration Page

- Analytics-Oriented Master Configuration Details

- Analytics-Oriented Extendable Lookup Configuration

- Measurement Condition Lookup Value

- Days Since Last Normal Measurement Lookup Value

- Usage Snapshot Type Lookup Value

- Days Since Last Usage Transaction Lookup Value

- Unreported Usage Analysis Snapshot Type Lookup Value

- External System Mapping Lookup Value

- External System Entity Name Lookup Value

- External System ID Mapping Lookup Value

- Service Point Configuration

- BI Aggregators

- Aggregator Measuring Components

- Set Up Aggregation Parameters

- Create and Aggregate BI Aggregators

- BI Aggregation Views

- Set Up the Dynamic Usage Group Extracts

- Set Up the Snapshot Fact Extracts

Meter Data Analytics Configuration Page

The Oracle Utilities Meter Data Management (MDM) source application provides the Analytics Configuration portal that holds all the BI-oriented and the Oracle Data Integrator-based ELT configuration tasks.

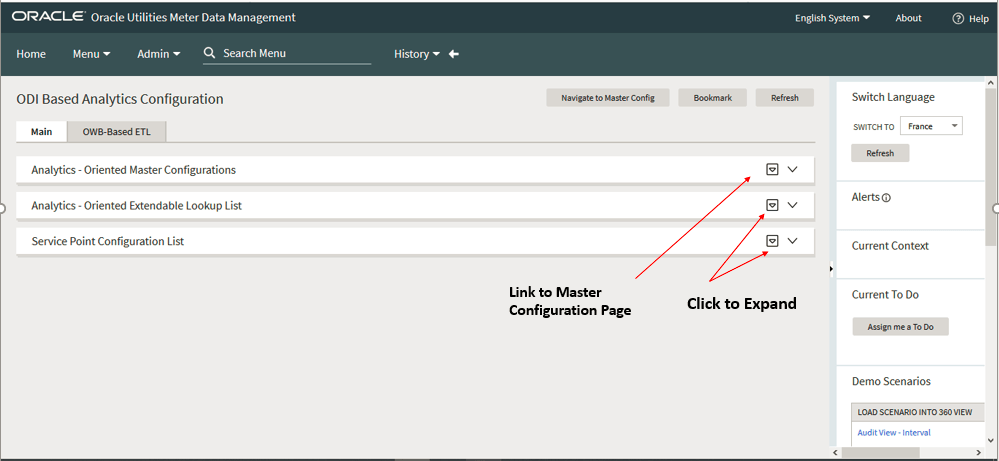

The Meter Data Analytics Configuration portal is a display-only portal that gives an overview of the configurations set up for Oracle Utilities Extractors and Schema, providing links and guidelines for further configurations required to successfully run the data extraction process on MDM.

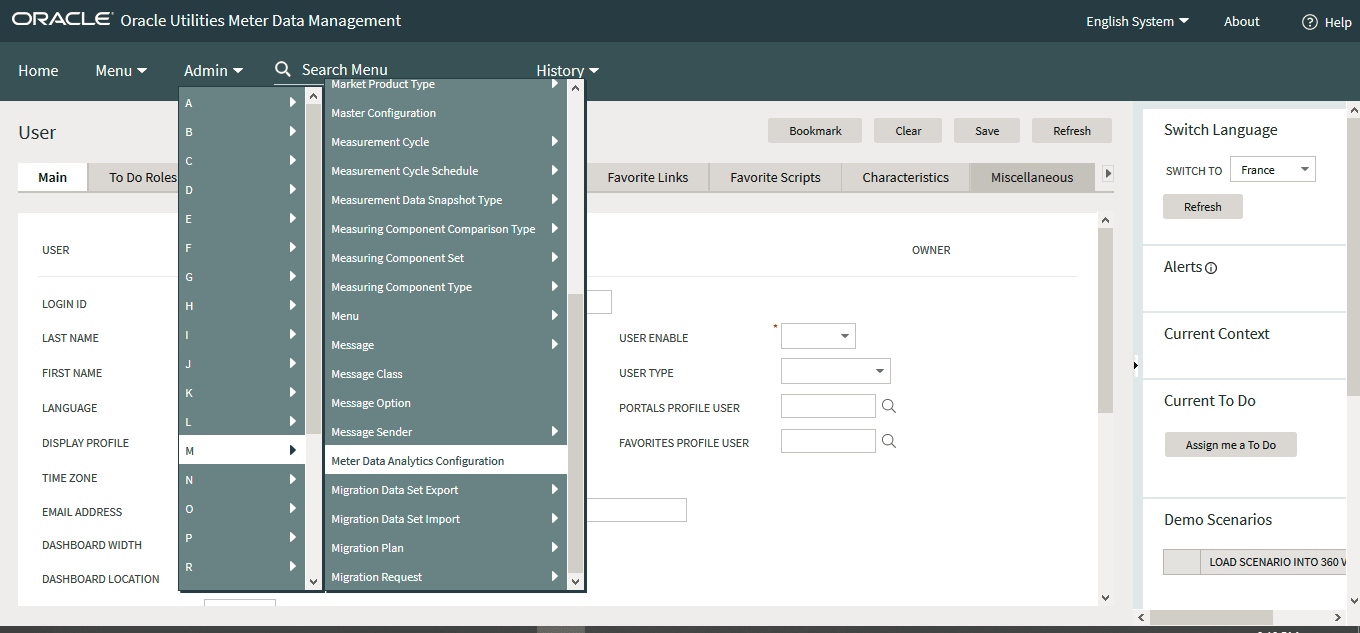

To access the Meter Data Analytics Configuration portal, log in to the source application and select Meter Data Analytics Configuration from the Admin menu.

- Analytics-Oriented Master Configuration

- Analytics-Oriented Extendable Lookup List

- Service Point Configuration List

On the right side of each of the configuration sections, you will find a link to the Master Configuration page.

Analytics-Oriented Master Configuration Details

Certain parameters must be configured so that, during the extraction of source data to the BI data warehouse, data can be identified or filtered in accordance with business requirements. Once the end user has set up these parameters, the ELT process uses them to selectively extract or transform data from the source application and populate it into the warehouse.

Note:

The ELT job that loads these parameters into the warehouse is configured to be initial load only. Any changes made to these buckets after the initial run are not automatically captured in Oracle Utilities Analytics Warehouse because they could cause inconsistency in the loaded data. However, if it is necessary to reconfigure the parameters, they can be modified on the source system and reloaded into the warehouse through certain additional steps. Note that the star schema tables would also need to be truncated and reloaded. For the detailed steps involved in reloading the parameters, see Configure ETL Parameters and Buckets.

- Market Relationship Types

- Subscription Types

- Activity Category Types

- Device Event Business Objects (BOs) to Exclude

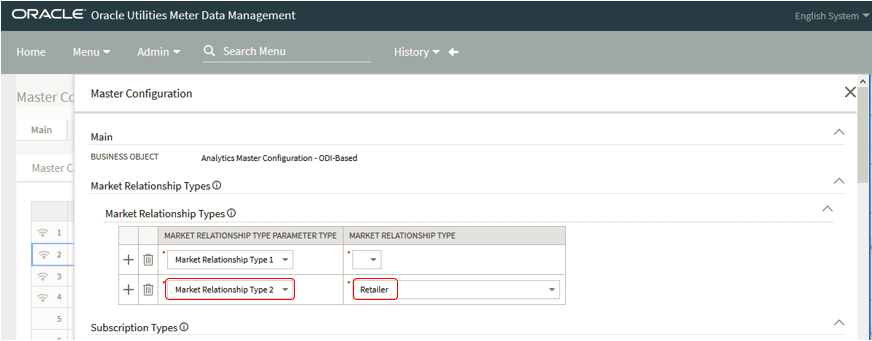

A service point may have several service providers (for example, distributor, retailer, and so on), where each is defined with a specific market relationship type either directly on the service point or indirectly on the service point's market. All fact tables for meter data management populate the SPR1_KEY and SPR2_KEY columns based on two configured market relationship types.

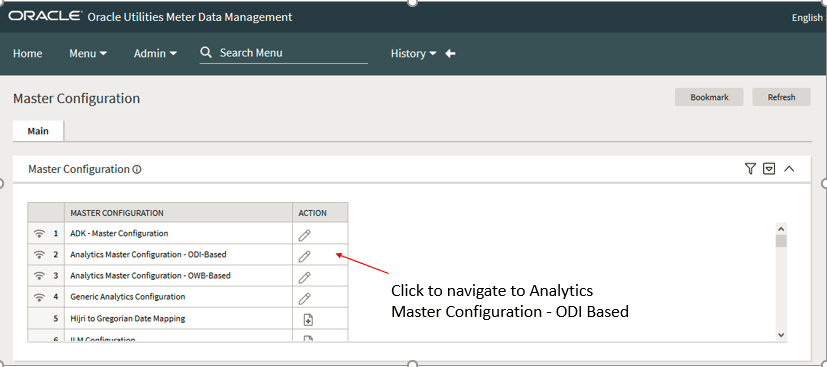

- Navigate to the Master Configuration page from the Analytics-Oriented Master configuration portal.

- Navigate to the Oracle Data Integrator-Based master configuration option in the table and click the Edit button under the Action column. This will open the Oracle Data Integrator-Based master configuration page where the values for the parameters can be set.

Note:

It is not an error if no usage subscription is found.

- Navigate to the Subscription Types parameter on the Oracle Data Integrator-Based master configuration page as shown in Market Relationship Types parameter description above.

- Select the applicable subscription type from the drop-down menu and click Save.

- To add multiple values, click the plus (+) button on the left, and set the parameter values accordingly.

The Activity accumulation fact (CF_DEVICE_ACTIVITY) can be limited to activities of specific activity-type categories. Only activities whose Activity Type Category is specified on this master configuration BO are included in the extract. This fact will not load any data if this parameter is not configured.

- Navigate to the Activity Category Types parameter on the Oracle Data Integrator-Based master configuration page as shown in Market Relationship Types parameter description above.

- Select the applicable activity category type from the drop-down menu and click Save.

- To add multiple values, click the plus (+) button on the left, and set the parameter values accordingly.

The Device Event accumulation fact (CF_DEVICE_EVT) can be filtered to exclude certain device events. Device events whose Device Event BO is specified on this master configuration BO are excluded from the extract. For example, if this BO is configured with a deviceEventBOs value, then ELT will exclude any related device event.

- Navigate to the Device Event BOs to Exclude parameter on the Oracle Data Integrator-Based master configuration page as shown in Market Relationship Types parameter description above.

- Select the applicable device event BO from the drop-down menu and click Save.

- To add multiple values, click the plus (+) button on the left, and set the parameter values accordingly.
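The include and exclude rules described for the Activity Category Types and Device Event BOs to Exclude parameters can be sketched as two small predicates. This is only an illustration of the filtering semantics stated above, not the actual ELT logic; the function names and the sample category and BO values are hypothetical.

```python
from typing import Iterable, Set


def include_activity(activity_type_category: str, configured_categories: Set[str]) -> bool:
    """Include-list rule: only configured categories are extracted.

    With an empty configuration nothing is extracted, which mirrors the
    statement that the fact loads no data if the parameter is not configured.
    """
    return activity_type_category in configured_categories


def include_device_event(device_event_bo: str, excluded_bos: Set[str]) -> bool:
    """Exclude-list rule: events whose BO is configured are left out."""
    return device_event_bo not in excluded_bos


def filter_device_events(event_bos: Iterable[str], excluded_bos: Set[str]) -> list:
    """Keep only the device events that pass the exclude-list rule."""
    return [bo for bo in event_bos if include_device_event(bo, excluded_bos)]


# Hypothetical values, for illustration only.
configured = {"FIELD_ACTIVITY"}
excluded = {"EXAMPLE-EVENT-BO-A"}
kept = filter_device_events(["EXAMPLE-EVENT-BO-A", "EXAMPLE-EVENT-BO-B"], excluded)
```

The asymmetry is the point to notice: an empty include list extracts nothing, while an empty exclude list extracts everything.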

Note:

Some of the parameters that have already been set will have a foreign key link to the parent page of the parameter type, while those that have not been set will only have the parameter name header column.

Analytics-Oriented Extendable Lookup Configuration

- Measurement Condition

- Days Since Last Normal Measurement

- Usage Snapshot Type

- Days Since Last Usage Transaction

- Unreported Usage Analysis Snapshot Type

- External System Mapping

- External System Entity Name

- External System ID Mapping

- Click the corresponding link under the Description section to navigate to the Extendable Lookup Maintenance portal.

- Click the Edit button for the bucket for which the modification is to be made. This will take you to the page for the individual bucket.

- Modify the values appropriately and click Save.

This extendable lookup is used to define a measurement, such as the source or type of the measurement (for example, a system estimate versus a normal read versus a human override). For more details on how condition codes are used on measurements, see the Measurements section in the Oracle Utilities Meter Data Management Business User Guide.

These values are extracted into the Measurement Condition Code dimension (CD_MSRMT_COND).

This extendable lookup is used to define the age ranges for days since the last normal measurement was received. Each active instance in this extendable lookup is a bucket definition that describes the bucket and specifies its upper threshold. If a bucket is meant to have no upper limit (for example, 90+ days), it should be defined with an empty threshold (ideally there should be only one such bucket). The lookup value codes should be defined so that when all the instances for the lookup BO are read in ascending order of lookup value code, their corresponding upper thresholds are also in ascending order. This is important because the extract logic retrieves all the buckets in ascending order of lookup value code, compares the actual number of days against each threshold in that order, and applies the first bucket that matches (the number of days is less than the upper threshold, or the threshold is empty). The catch-all bucket (empty threshold) should therefore be defined so that it is the last bucket retrieved. Otherwise, any bucket defined after the empty threshold is never used.

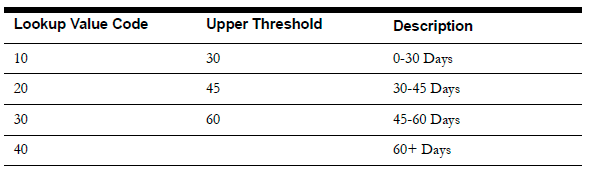

For example, the desired bucket definition is as follows: 0-30 Days, 30-45 Days, 45-60 Days and 60+ Days. This means that the following extendable lookups should be created:

The SP Snapshot fact has a measure for the number of days since the service point's last normal measurement, and this configuration is used to find the age bucket that corresponds with that number of days since the normal measurement.

These configured values are extracted into the Days Since Last Normal Measurement dimension (CD_DAYS_LAST_MSRMT).
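The first-match rule described for the Days Since Last Normal Measurement buckets can be sketched as follows. This is a minimal illustration, assuming each bucket is a pair of lookup value code and upper threshold, with `None` standing in for an empty threshold; the codes `B10` to `B40` are hypothetical, and the real extract logic may differ in detail.

```python
from typing import Optional, Sequence, Tuple

# (lookup value code, upper threshold in days); None marks the catch-all bucket.
Bucket = Tuple[str, Optional[int]]


def find_age_bucket(days: int, buckets: Sequence[Bucket]) -> Optional[str]:
    """Return the code of the first matching bucket, reading in ascending code order."""
    for code, upper in sorted(buckets, key=lambda b: b[0]):
        # A bucket matches if its threshold is empty or the day count is below it.
        if upper is None or days < upper:
            return code
    return None  # no catch-all bucket was defined and every threshold was exceeded


# Hypothetical codes for the 0-30, 30-45, 45-60 and 60+ example.
buckets = [("B10", 30), ("B20", 45), ("B30", 60), ("B40", None)]

# A badly ordered definition: the catch-all sorts first and shadows the rest.
shadowed = [("A00", None), ("B10", 30)]
```

With the `shadowed` definition every day count lands in `A00`, which is why the catch-all bucket should carry the highest lookup value code.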

This extendable lookup is used to define the granularity of the aggregated consumption of a service point. It defines the TOU map that is applied to the service point's consumption, where every resultant TOU and condition results in a row on the SP Usage Snapshot fact. It is also used to define the target UOM that is used to convert the source UOM prior to TOU mapping (for example: convert KW to KWH).

- On/Off/Sh for CCF

- Day of Week for Therm

- Seasonal On/Off/Sh for Loss Adjusted kWh

This means that a given service point can have several consumption snapshots (but most implementations will have just one).

This extendable lookup is used to define the bucket definitions for the number of days since the last usage transaction was created for the service point. These age bucket definitions are used while extracting data for the Unreported Usage Snapshot fact. Unlike the Days Since Last Normal Measurement lookup value, the upper threshold is not defined here because bill cycles can differ between customer classes (for example, residential customers that are billed quarterly versus commercial or industrial customers that are billed monthly). The thresholds are defined on the service point type configuration instead.

For more details, see Service Point Configuration.

This extendable lookup is used to define the different aging snapshots that can be taken for a service point for different types of usage subscriptions. Multiple snapshots of a single service point are allowed, because an implementation could send consumption to multiple systems and may need a snapshot for each.

This allows an implementation to have different snapshots, such as Oracle Utilities Customer Care and Billing, Distribution, and so on.

This means that a given service point can have different Unreported Usage Analysis snapshots (for different types of usage subscriptions).

This extendable lookup provides a mapping of a configured Oracle Utilities Application Framework-based external system and its data source indicator to the configured MDM source in OUAW. The data source indicator is used to uniquely identify the source of the system data.

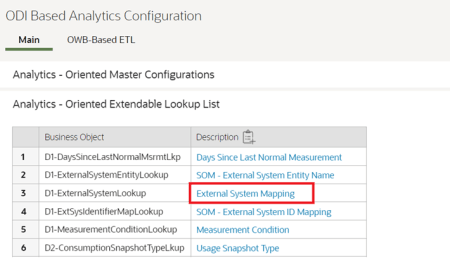

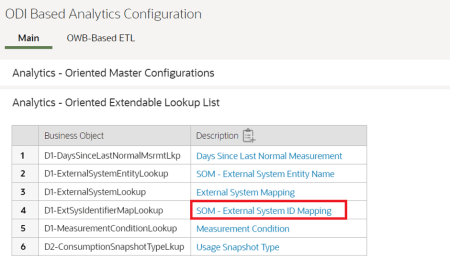

- Navigate to Meter Data Analytics Configuration > Analytics-Oriented Extendable Lookup List.

-

Click External System Mapping in the Description column.

-

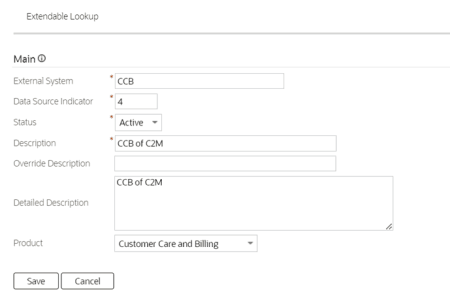

Add an external system value and populate the details. This image illustrates the addition of an external system value for CCB.

Field Name Description External System The name of external system to be mapped. This name would be referred again in External System ID Mapping. Data Source Indicator Data source indicator value of the external product to be mapped.

This value could be identified from Apex > ETL Configuration > Product Instance > Value of ‘Data Source Indicator’ of the configured Source Product.In this example, CCB DSI value = 4.

Status Status of external system to be mapped. Description Description of external system to be mapped. Override Description Override Description of external system to be mapped. Detailed Description Detailed Description of external system to be mapped. Product Select the external product from drop-down. - Click Save.

This extendable lookup defines the names of the entities that can be received from external systems.

- Navigate to Meter Data Analytics Configuration > Analytics-Oriented Extendable Lookup List.

-

Click SOM - External System Entity Name in the Description column.

-

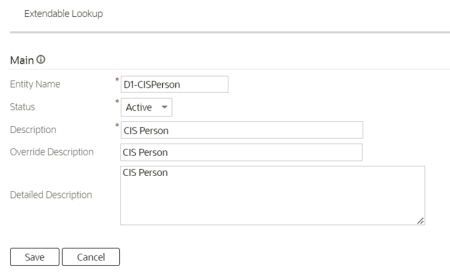

Add an external system entity name and populate the details. This image illustrates the addition of an external system entity for CCB.

Field Name Description Entity Name The entity name of the external system. This name would again be used in External System ID mapping. Status Status of external system entity name. Description Description of external system entity name. Override Description Override Description of external entity name. Detailed Description Detailed Description of external entity name. - Click Save.

This extendable lookup provides a mapping between the external identifiers captured in Oracle Utilities Meter Data Management and the external system where that identifier is from. Since it is possible that different sets of records for a particular entity may be synchronized from multiple external systems, it is necessary to specify the identifier type to use for each external system. The key information that is captured in this mapping is the maintenance object (which identifies the MO that stores the identifier collection), the identifier type, the external system, and the entity name (which identifies the type of entity that uses this identifier as its external primary key). For example, the Service Point MO can contain identifiers for the Oracle Utilities Customer Care and Billing Service Point ID and Oracle Utilities Customer Care and Billing Premise ID, as well as the Oracle Utilities Operational Device Management Service Point ID.
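The key information captured by this mapping can be pictured as a lookup from maintenance object, external system, and entity name to an identifier type. The sketch below only illustrates that shape; the type alias, the function name, and all sample values (including the identifier type codes) are hypothetical and are not taken from the product.

```python
from typing import Dict, Optional, Tuple

# (maintenance object, external system, entity name) -> identifier type
IdMapping = Dict[Tuple[str, str, str], str]


def identifier_type_for(
    mapping: IdMapping, maintenance_object: str, external_system: str, entity_name: str
) -> Optional[str]:
    """Return the identifier type that holds this entity's external primary key."""
    return mapping.get((maintenance_object, external_system, entity_name))


# Hypothetical entries: one MO carrying identifiers for two external entities.
mapping: IdMapping = {
    ("SERVICE POINT", "CCB", "Service Point"): "EXAMPLE_CCB_SP_ID",
    ("SERVICE POINT", "CCB", "Premise"): "EXAMPLE_CCB_PREMISE_ID",
}

sp_identifier = identifier_type_for(mapping, "SERVICE POINT", "CCB", "Service Point")
```

Keying on all three values reflects the point made above: the same maintenance object can store identifiers for several external systems and entities, so none of the three alone is enough to pick the identifier type.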

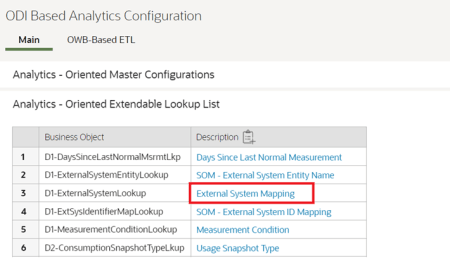

- Navigate to Meter Data Analytics Configuration > Analytics-Oriented Extendable Lookup List.

-

Click SOM - External System ID Mapping in the Description column.

-

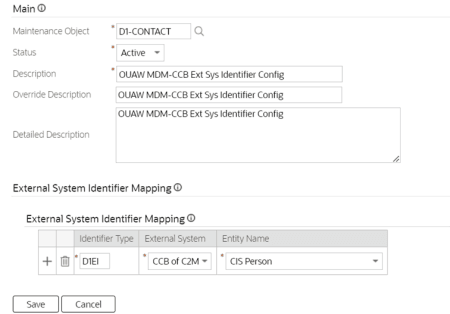

Add an external system ID mapping and populate the details. This image illustrates the addition of an external system ID for CCB.

This page displays the Main and External System Identifier Mapping sections.

This table lists the fields of the Main section.

Field Name Description Maintenance Object The maintenance object name of the external system ID mapping. Status Status of external system ID mapping. Description Description of external system ID mapping. Override Description Override Description of external system ID mapping. Detailed Description Detailed Description of external system ID mapping. This table lists the fields of the External System Identifier Mapping section.

Field Name Description Identifier Type Identifier for the external system ID mapping. External System External system mapping name which was set up in Meter Data Analytics Configuration > Analytics – Oriented Extendable Lookup List > External System Mapping > Description. Entity Name External system entity name which was set up in Meter Data Analytics Configuration > Analytics – Oriented Extendable Lookup List > External System Entity Name > Description. - Click Save.

Service Point Configuration

This section lists all the service point types in the system and indicates whether the BI configuration has been set up for each of them or not. It provides a navigation link to the service point type where the necessary configuration can be set up or modified.

- Usage Snapshot Configuration

- Unreported Usage Snapshot Configuration

This section of the service point type defines the configurations to be used to take the weekly or monthly usage snapshots. The configuration here is used when extracting data for the Usage Snapshot fact.

The Usage Snapshot Type defines the type of usage snapshot. Its extendable lookup definition contains the TOU map (used to map the consumption) and the target unit of measure (used if it is necessary to convert the source UOM to a target UOM prior to TOU mapping). The UOM, TOU, and SQI are used to define the source MC's value identifier that will be TOU mapped.

A given service point type can have many usage snapshot types if there are different ways to look at the monthly consumption. This is not limited to just different TOU maps, but could also be used to create snapshots of different measured values. For example, if a measurement contains two values, Actual and Normally Used, this can be used to create a snapshot of normal usage so it can be compared to a separate snapshot of actual usage. If the service point type does not have at least one configuration type, service points of this type do not have their usage snapshot taken.

This section of the service point type defines the configurations to be used to take the weekly or monthly unreported usage snapshots. The configuration here is used while extracting data for the Unreported Usage Snapshot fact. The Unreported Usage Analysis Snapshot Type defines the type of unreported usage snapshot. The UOM, TOU, and SQI are used to define the source MC's value identifier that will be used to calculate the amount of unreported usage in various age buckets.

The Subscription Type is the type of subscription on which the analysis is performed for this type of snapshot. If the service point is not linked to such a subscription, the fact will be linked to a None-usage subscription, so analysis of consumption with no usage subscription can be performed.

The Days Since UT Buckets and their corresponding descriptions are used to categorize into different age buckets the amount of consumption that has not been billed. For example, if bucket 1 is defined as 30, bucket 2 as 45, and bucket 3 as 60, any unbilled consumption that is less than or equal to 30 days old will fall into bucket 1. Any unbilled consumption that is older than 30 days but is less than or equal to 45 days old will fall into bucket 2. Any unbilled consumption that is older than 45 days but is less than or equal to 60 days old will fall into bucket 3. Any unbilled consumption that is older than 60 days will fall into bucket 4.
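The bucket assignment in this example can be sketched with a binary search over the configured thresholds. This is a minimal illustration of the rule as described (a threshold is inclusive, and anything older than the last threshold falls into one further bucket); the function name is hypothetical.

```python
from bisect import bisect_left
from typing import Sequence


def unreported_usage_bucket(age_days: int, thresholds: Sequence[int]) -> int:
    """Return the 1-based bucket number for unbilled consumption of the given age.

    thresholds must be in ascending order. An age equal to a threshold stays
    in that threshold's bucket, and ages beyond the last threshold fall into
    bucket len(thresholds) + 1.
    """
    return bisect_left(thresholds, age_days) + 1


thresholds = [30, 45, 60]  # bucket 1, bucket 2, bucket 3 from the example above
bucket_for_30 = unreported_usage_bucket(30, thresholds)  # 1
bucket_for_31 = unreported_usage_bucket(31, thresholds)  # 2
bucket_for_61 = unreported_usage_bucket(61, thresholds)  # 4
```

`bisect_left` is used rather than `bisect_right` so that an age exactly on a threshold (30, 45, or 60 days) stays in the lower bucket, matching "less than or equal to" in the description.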

A separate snapshot can be taken for different subscription types, so that a given service point can have multiple snapshots for a given month or week. If the service point type does not have at least one unreported usage configuration type, service points of this type do not have their unreported usage snapshot calculated.

BI Aggregators

- Postal Code: This is retrieved from the service point’s address information.

- City: This is retrieved from the service point’s address information.

- Head-End System: This is retrieved either from the override head-end system defined on the device or from the fallback head-end system on the device type, if there is no override on the device.

- Usage Group: This is retrieved either from the override usage group on the usage subscription or, if there is none on the usage subscription, from the fallback usage group on the usage subscription type. The Usage Group can be set up as an optional dimension, and if so, service points without a primary usage subscription can be included.

- Market: This is retrieved from the service point’s market. The Market can be set up as an optional dimension, and if so, service points not participating in a market can be included.

- Service Provider of Role ‘X’: If the service point participates in a deregulated market, the service provider is for the role specified. The Service Provider can be set up as an optional dimension, and if so, service points that do not participate in a market and do not have any service provider for a given role can be included.

- Service Type: This is retrieved from the service point's details.

- Device Type: This is retrieved from the device currently installed on the service point.

- Manufacturer and Model: This is retrieved from the device. The Manufacturer and Model can be set up as optional dimensions, and if so, devices with no manufacturer or model definition can be included.

- Geographic Code: This is retrieved from the service point. The Geographic Code can be set up as an optional dimension, and if so, service points without geographic information can be included.

- Aggregator Measuring Components

- Setting Up Aggregation Parameters

- Creating and Aggregating BI Aggregators

- Refreshing Materialized Views

Aggregator Measuring Components

For every combination of the dimensions listed above and for each type of aggregation there is a distinct aggregator measuring component. These measurements include the aggregated totals for their constituent measuring components.

- Measured Quantity: In this type of aggregation, the aggregated measurements of the constituent measuring components are spread across buckets in accordance with their measurement conditions.

- Quality Count: In the quality count aggregation type, a count for each interval related to a constituent measuring component is placed into one of the quality buckets.

- Timeliness Count: In this aggregation type, a count for each interval related to a constituent measuring component is placed into one of the late buckets.

- Timeliness Quantity: In this aggregation type, the aggregated measurements of the constituent measuring components are spread across late buckets as per their measurement conditions.
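The difference between the quantity-based and count-based aggregation types can be sketched for a single interval. This is a simplified illustration only: the bucket names `regular` and `estimated` are hypothetical labels for measurement-condition buckets, and the real aggregation algorithms are plugged in on the aggregator's business object.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def aggregate_interval(
    measurements: Iterable[Tuple[float, str]]
) -> Tuple[Dict[str, float], Dict[str, int]]:
    """Aggregate one interval's constituent measurements.

    Each measurement is (quantity, bucket). Returns the summed quantity per
    bucket (Measured Quantity style) and the number of measurements per
    bucket (Quality Count style).
    """
    quantity = defaultdict(float)
    count = defaultdict(int)
    for qty, bucket in measurements:
        quantity[bucket] += qty  # spread the measured amount across buckets
        count[bucket] += 1       # count one interval value per constituent
    return dict(quantity), dict(count)


# Three constituent measuring components reporting for the same interval.
quantities, counts = aggregate_interval(
    [(1.5, "regular"), (2.0, "regular"), (0.5, "estimated")]
)
```

The timeliness types follow the same pattern, with late buckets taking the place of condition buckets.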

Set Up Aggregation Parameters

- Master versus Sub Aggregator

- Measurement BOs

- Timeliness Master Configuration

- Determining Initial Measurement Data Timeliness

- Value Identifiers on Aggregator MC Type (Recommended)

Note:

This is a required configuration.

- Nominate one of the BI aggregation types as the master aggregator, particularly if the data analysis in BI for various aggregation types is for the same set of customers. Nominating a master aggregator makes the setup and aggregation processes easier, because the master aggregator controls how and when the aggregations are to be performed.

- Define this type's aggregator measuring component type as the master (the Master Aggregation Hierarchy Type option must be set as its MC Type BO). The master aggregator measuring component type controls the aggregation parameters (horizon, lag, and cutoff time), the valid measuring component types to aggregate, and its sub-aggregator measuring component types.

- Ensure that the measuring component BO of the master aggregator measuring component type has the appropriate algorithms plugged in: a BO system event Find Constituent Measuring Components algorithm (which contains the logic on how to find the constituent measuring components) and an Enter algorithm on its Aggregate state (which contains the logic on how to aggregate the measurements).

Note:

- The sub-aggregator measuring component types can only define the value identifiers that are applicable to them. Their aggregation parameters and valid measuring component types are inherited from the master aggregator measuring component type. Similarly, the Find Constituent MC and Aggregate algorithms are defined on the master (defining these algorithms on the sub-aggregator's BO has no effect because they are never triggered).

- How each type of BI aggregation is used depends on the implementation. If an implementation does not use a particular type of BI aggregation, it should not define that type as the master aggregator or as any of the sub-aggregator types.

Note:

This is a required configuration.

- Define the special measurement BOs for the four types of BI aggregation (described under Aggregator Measuring Components). These are used to differentiate the aggregated measurements from normal measurements created by initial measurement data.

- Define the business objects on the corresponding aggregation type's measuring component type. The materialized views that were built to aggregate the individual intervals use these specific measurement BOs for performance reasons. These views are accessed directly by the BI analytics.

- Measured Quantity: Measured Quantity Measurement (D2-MeasuredQuantityMsrmt)

- Quality Count: Quality Count Measurement (D2-QualityCountMsrmt)

- Timeliness Count: Timeliness Count Measurement (D2-TimelinessCountMsrmt)

- Timeliness Quantity: Timeliness Quantity Measurement (D2-TimelinessQuantityMsrmt)

Note:

This is a required configuration.

For this configuration you must add the appropriate definitions for the timeliness buckets in the Timeliness Master Configuration. This is where the definitions, gradation, and ranges for lateness are set.

Depending on its severity, the lateness can be defined as On Time, Late, Very Late, or Missing (a measurement is considered missing if it does not exist or if its condition is either System Estimated or No Read-Other). In addition, this master configuration is where the heating and cooling degree-day factors are set up.

Note:

This is a required configuration.

Mark the initial measurement data with the number of hours of lateness. This definition is used to qualify whether a measurement arrived on time or late. This is done at the initial measurement data level to allow dynamic configuration of what it means for initial measurement data (and measurements) to be late without the need to reconfigure the aggregation logic. There is a measure on the initial measurement data's processed data that should store the number of hours of lateness of the initial measurement data.

The base application is delivered with an Enter algorithm that calculates the initial measurement data's timeliness as the difference between the initial measurement data's end date time and its actual creation date/time in the system (Determine initial measurement data's Timeliness D2-DET-TML). This algorithm can be plugged in on the Pending state of the initial measurement data life cycle.
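The timeliness calculation described above, the difference between the end date/time of the initial measurement data and its creation date/time, can be sketched as below. This is not the D2-DET-TML algorithm itself. The grading function assumes two hypothetical cut-offs in hours, standing in for ranges that would be defined in the Timeliness Master Configuration, and it does not cover the Missing category, which depends on the measurement's existence and condition rather than on hours.

```python
from datetime import datetime


def lateness_hours(imd_end: datetime, created: datetime) -> float:
    """Hours between the IMD's end date/time and its creation in the system."""
    return (created - imd_end).total_seconds() / 3600.0


def grade_lateness(hours: float, late_after: float, very_late_after: float) -> str:
    """Grade lateness against two hypothetical cut-offs (in hours)."""
    if hours > very_late_after:
        return "Very Late"
    if hours > late_after:
        return "Late"
    return "On Time"


hours = lateness_hours(
    imd_end=datetime(2024, 1, 1, 0, 0),
    created=datetime(2024, 1, 1, 6, 30),
)
grade = grade_lateness(hours, late_after=4, very_late_after=24)
```

Storing the hours on the initial measurement data and grading them separately is what allows the lateness definitions to change without touching the aggregation logic.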

Note:

This is a recommended configuration.

- Measured Quantity

- Measurement Value: Measured Quantity

- Value 1: Regular Measurement Quantity

- Value 2: Estimated Measurement Quantity

- Value 3: User-Edited Measurement Quantity

- Value 4: Misc Condition 1 Measurement Quantity

- Value 5: Misc Condition 2 Measurement Quantity

- Value 6: MC Count (per interval)

- Value 7: Heating Degree Days

- Value 8: Cooling Degree Days

- Value 9: Average Consumption

- Quality Count

- Value 1: Regular Measurement Count

- Value 2: Estimated Measurement Count

- Value 3: User Edited Measurement Count

- Value 4: No Measurement/No IMD Count

- Value 5: No Measurement/IMD Exists Count

- Value 6: No Read Outage Count

- Value 7: No Read Other Count

- Value 8: Missing Count

- Value 9: Misc 1 Count

- Value 10: Misc 2 Count

The late buckets for Timeliness Count and Timeliness Quantity are configured via the Timeliness Master Configuration. It is recommended that these buckets also be reflected in the Value Identifiers definition of the corresponding aggregator measuring component type.

Create and Aggregate BI Aggregators

The BI aggregators can be created and aggregated either manually or automatically, just like the other aggregators. For more information, refer to the Aggregation section in the Oracle Utilities Meter Data Management Configuration Guide.

BI Aggregation Views

- A view that contains all the BI aggregator measuring components only, where the dimensional values are flattened on the view. The pseudo-dimensions in BI are:

- Measured Quantity: D2_MEASR_QTY_MV

- Quality Count: D2_QUALITY_CNT_MV

- Timeliness Count: D2_TIMELINESS_CNT_MV

- Timeliness Quantity: D2_TIMELINESS_QTY_MV

- A view that contains all the aggregated measurements for BI only. The pseudo-facts in BI are:

- Measured Quantity: D2_MEASR_QTY_AGR_MV

- Quality Count: D2_QUALITY_CNT_AGR_MV

- Timeliness Count: D2_TIMELINES_CNT_AGR_MV

- Timeliness Quantity: D2_TIMELINES_QTY_AGR_MV

Note:

For MDM versions prior to 2.4.x and C2M versions prior to 2.8.x, the views listed above are available as materialized views. Whenever the dimension scanning or the aggregation is done, it is important that these materialized views are refreshed to make sure they contain the latest data set. An idiosyncratic batch job called Materialized View Refresh (D2-ACTMV) is delivered to perform this refresh. This batch job can refresh all the materialized views in one run, so long as the materialized view names are provided as batch parameters in the run.

Note:

For MDM versions greater than 2.4.x and C2M versions greater than 2.8.x, the views listed above are available as normal views and no longer need a data refresh.

Set Up the Dynamic Usage Group Extracts

Oracle Utilities Analytics Warehouse allows the use of usage groups to break down the meter data analytics facts. However, in Oracle Utilities Meter Data Management, usage groups are determined dynamically through an algorithm plugged into the usage subscription's type.

Due to this dynamic configuration of usage groups, it is difficult to determine and replicate the logic that will be involved in determining the usage group. A download table is created instead to store the effective usage groups for usage subscriptions.

An idiosyncratic batch process can be run in Initial Load or Incremental Load mode to determine the effective dynamic usage group of usage subscriptions. The batch process also writes the results into the US usage snapshot download table. It is recommended to run this batch job in incremental mode daily to ensure that the data in the download table does not become stale.

Run the Usage Subscription Usage Group Download (D2-DUGDL) batch process to determine the effective usage group of usage subscriptions.

Set Up the Snapshot Fact Extracts

Note:

- Taking weekly or monthly snapshots involves massaging or transforming the measurement data by applying a few core Oracle Utilities Meter Data Management functionalities, such as the conversion of scalar consumption to intervals, the axis conversion, and the time-of-use (TOU) mapping.

- It is not easy to replicate in the BI data warehouse the huge volume of measurement data and the core Oracle Utilities Meter Data Management functionalities. Accordingly, these snapshots are taken in Oracle Utilities Meter Data Management through idiosyncratic batch jobs that write the results into download tables. These download tables are the ones that the ELT process replicates and loads into the data warehouse.

The consumption snapshot allows KPIs that report on every service point's measured consumption. Weekly or monthly, the system applies a TOU map to the consumption of every active service point. Every resultant TOU code, quantity, and measurement condition results in a separate row on the Consumption Snapshot fact.

Set Up the Consumption Snapshot

- Navigate to the appropriate Service Point BO and ensure that the correct algorithm is plugged into the system event Usage Snapshot.

Note:

This event controls if and how snapshots are taken for the service point. If there is no such algorithm plugged into the service point's BO, the service point is skipped.

- Deactivate the algorithm Aggregate SP Usage Snapshot and Write to Flat File (D2-SPCA) if it is plugged in and has not been deactivated yet.

- Plug in the algorithm Aggregate SP Usage Snapshot and Write to Download Table (D2-SP-USG-DL) for the system event Usage Snapshot.

Note:

If the Business Object Algorithm table's caching regime is configured to be Cached for Batch, it is necessary to clear the cache for the algorithm changes to take effect. This can be done by either restarting the thread pool worker or running the Flush All Cache (F1-FLUSH) batch job.

- Set up the Usage Snapshot Type(s). See the BI Oriented Extendable Lookups section under BI Configuration portal for details on how to set up the data.

- Configure the Service Point Type's usage snapshot configuration. The Service Point Type holds the information that controls the extract:

- Usage Snapshot Type

- UOM/TOU/SQI: These are used to define the source measuring component's value identifier that will be TOU mapped. If there are multiple measuring components linked to the service point with such a combination of UOM/TOU/SQI, all will be mapped. If the service point has no measuring components with this combination, it will be skipped.

- A given Service Point Type can have many Usage Snapshot Types, each offering a different way to look at the monthly consumption. This is not limited to TOU maps; the feature can also be used to create snapshots of different measured values. For example, if a measurement contains two values (actual and normally used), use this feature to create a snapshot of normal consumption so that it can be compared with a separate snapshot of actual consumption.

- If the Service Point Type does not have at least one entry in the Usage Snapshot Configuration list, it means service points of this type do not have their snapshot taken.
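The UOM/TOU/SQI matching rule above (map every matching measuring component, skip the service point when none match) can be sketched as follows. The `ValueIdentifier` type, the function name, and the sample identifiers are hypothetical and do not correspond to MDM objects.

```python
from typing import List, NamedTuple, Optional, Tuple


class ValueIdentifier(NamedTuple):
    """Hypothetical stand-in for a UOM/TOU/SQI combination."""
    uom: str
    tou: Optional[str] = None
    sqi: Optional[str] = None


def components_to_map(
    sp_components: List[Tuple[str, ValueIdentifier]],
    configured: ValueIdentifier,
) -> List[str]:
    """Return the IDs of every measuring component matching the configured
    combination. An empty list means the service point is skipped."""
    return [mc_id for mc_id, ident in sp_components if ident == configured]


configured = ValueIdentifier(uom="KWH")
components = [
    ("MC-1", ValueIdentifier(uom="KWH")),
    ("MC-2", ValueIdentifier(uom="KWH")),
    ("MC-3", ValueIdentifier(uom="KVARH")),
]
print(components_to_map(components, configured))  # ['MC-1', 'MC-2']
```

Both KWH components are selected in this example, which mirrors the statement that all matching measuring components are mapped.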

Extract the Consumption Snapshot

The Usage Snapshot fact has its own idiosyncratic batch process that takes the monthly or weekly consumption snapshot of all active service points in the system.

Run the Service Point Usage Snapshot Usage Download (D2-USGDL) batch process to retrieve the service point's consumption and populate the SP Usage Snapshot download table.

The Service Point Snapshot fact allows KPIs that report on the number of service points and devices that are installed over time. Weekly or monthly, the system takes a snapshot of every service point in the system, extracting service point-oriented information as well as information about the service point's install event and the snapshot date.

Set Up the Service Point Snapshot

- Navigate to the appropriate Service Point BO and ensure that the correct algorithm is plugged into the system event Service Point Snapshot.

Note:

This event controls if and how snapshots are taken for the service point. If there is no such algorithm plugged into the service point's BO, the service point is skipped.

- In case the algorithm Take SP Snapshot and Write to Flat File (D1-SPSNAP-SE) is plugged in and has not been deactivated yet, deactivate it.

- Plug in the algorithm Take SP Snapshot and Insert to Download Table (D1-SPSNAPDL).

Note:

If the Business Object Algorithm table's caching regime is configured to be Cached for Batch, it is necessary to clear the cache for the algorithm changes to take effect. This can be done by either restarting the thread pool worker or running the Flush All Cache (F1-FLUSH) batch job.

- Set up the Days Since Last Normal Measurement. Refer to the BI Oriented Extendable Lookups section under BI Configuration portal for details on how to set up the data. This will be used to reference the dimension containing the specific age bucket that corresponds with the number of days since the last normal measurement of the service point was received.

Extract the Service Point Snapshot

The Service Point Snapshot fact has its own idiosyncratic batch process that takes the monthly or weekly snapshot of all active service points in the system.

Run the Service Point Snapshot Download (D1-SPSDL) batch process to extract the service point's information and populate the SP Snapshot download table.

The Unreported Usage Analysis Snapshot fact allows KPIs that show the consumption that has not appeared on usage transactions. Weekly or monthly, the system reviews all active service points and determines the consumption that has accrued since the most recent usage transaction in the Sent status.

Set Up the Service Point Unreported Usage Snapshot

- Navigate to the appropriate Service Point BO and ensure that the correct algorithm is plugged into the system event Unreported Usage Analysis Snapshot.

Note:

This event controls if and how snapshots are taken for the service point. If there is no such algorithm plugged into the service point's BO, the service point is skipped.

- In case the algorithm Analyze Unreported Usage Snapshot and Write to Flat File (D2-SP-UT-AGE) is plugged in and has not been deactivated yet, deactivate it.

- Plug in the algorithm Take SP Unreported Usage Snapshot and Insert to Download Table (D2-SPUT-DL).

Note:

If the Business Object Algorithm table's caching regime is configured to be Cached for Batch, it is necessary to clear the cache so the algorithm changes take effect. This can be done by either restarting the thread pool worker or running the Flush All Cache (F1-FLUSH) batch job.

- Set up the Days Since Last Usage Transaction. This will be used to reference the dimension containing the specific age bucket that corresponds to the number of days since the most recent sent usage transaction of the service point. See the Unreported Usage Analysis Snapshot Type dimension for the setup instructions.

- Set up the Unreported Usage Analysis Snapshot Type. See the Unreported Usage Analysis Snapshot Type dimension for the setup instructions.

- Configure the Service Point Type's unreported usage analysis snapshot configuration. The Service Point Type holds the following information that controls the extract:

- Unreported Usage Analysis Snapshot Type

- UOM/TOU/SQI: These are used to define the source measuring component's value identifier that will be used to calculate the unbilled consumption in the various age buckets. If there are multiple measuring components linked to the service point with such a combination of UOM/TOU/SQI, all will be mapped. If the service point has no measuring components with this combination, it will be skipped.

- Subscription Type: This is the type of subscription that the analysis will be performed on for this type of snapshot; if the service point is not linked to such a subscription, the fact will be linked to the None US, so that the analysis of consumption with no US can be performed.

- The Days Since UT buckets and their corresponding descriptions: These are used to categorize the unbilled consumption into different age buckets. For example, if Bucket 1 is defined as 30; Bucket 2 is 45; and Bucket 3 is 60, then any unbilled consumption that is less than or equal to 30 days old will fall into bucket 1, any unbilled consumption that is older than 30 days but less than or equal to 45 days old will fall into bucket 2, and any unbilled consumption that is older than 45 days but is less than or equal to 60 days old will fall into bucket 3.
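The bucket rule in the example above can be expressed as a short sketch. The function name is hypothetical, and returning `None` for consumption older than the last configured bucket is an assumption, since the text does not say how such consumption is categorized.

```python
from bisect import bisect_left


def days_since_ut_bucket(age_days, upper_bounds):
    """Return the 1-based bucket number for unbilled consumption of the given age.

    upper_bounds: ascending inclusive upper limits in days, e.g. [30, 45, 60].
    Returns None when the age exceeds the last bound (an assumption; the
    behavior beyond the last bucket is not described in the source text).
    """
    # bisect_left finds the first bound that is >= age_days,
    # which matches the "less than or equal to" rule.
    idx = bisect_left(upper_bounds, age_days)
    return idx + 1 if idx < len(upper_bounds) else None


bounds = [30, 45, 60]
print(days_since_ut_bucket(30, bounds))  # 1
print(days_since_ut_bucket(31, bounds))  # 2
print(days_since_ut_bucket(60, bounds))  # 3
```

Using inclusive upper bounds keeps the boundary days (30, 45, 60) in the lower-numbered bucket, as in the example.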

A separate snapshot can be taken for different subscription types so that a given service point can have multiple snapshots for a given month or week. If the Service Point Type does not have at least one entry in the Unreported Usage Snapshot Configuration list, it means that the service points of this type do not have their snapshot taken.

Extract the Service Point Unreported Usage Snapshot

The Unreported Usage Analysis Snapshot fact has its own idiosyncratic batch process that takes the monthly or weekly snapshot of all active service points in the system.

Run the SP Unreported Usage Snapshot Download (D2-UUSDL) to extract the service point's information and populate the SP Unreported Usage download table.

The Service Point VEE Exception Snapshot fact allows KPIs that report on VEE Exceptions. Weekly or monthly, the system looks at every service point and counts the initial measurement data with and without exceptions. For initial measurement data with exceptions, it further subdivides the count by VEE Exception Type, IMD Type, VEE Severity, VEE Group, and Rule. Every resultant subtotal will have a row on the VEE Exception Snapshot fact.
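As a rough illustration of the subtotaling described above, the following sketch counts initial measurement data (IMD) with and without exceptions and then subdivides by the five listed attributes. The dictionary layout and field names are hypothetical, and counting one subtotal entry per exception (rather than per IMD) is an assumption.

```python
from collections import Counter


def vee_exception_subtotals(imds):
    """Count IMDs with and without exceptions and build subtotals.

    imds: list of dicts with hypothetical keys 'imd_type' and 'exceptions',
    where each exception has 'exception_type', 'severity', 'group', 'rule'.
    """
    with_exceptions = 0
    without_exceptions = 0
    subtotals = Counter()
    for imd in imds:
        if not imd["exceptions"]:
            without_exceptions += 1
            continue
        with_exceptions += 1
        for exc in imd["exceptions"]:
            # Assumption: each exception contributes one count to its subtotal.
            key = (
                exc["exception_type"],
                imd["imd_type"],
                exc["severity"],
                exc["group"],
                exc["rule"],
            )
            subtotals[key] += 1
    return with_exceptions, without_exceptions, subtotals


sample = [
    {"imd_type": "INTERVAL", "exceptions": []},
    {"imd_type": "INTERVAL", "exceptions": [
        {"exception_type": "SPIKE", "severity": "ISSUE",
         "group": "G1", "rule": "R1"},
    ]},
]
with_exc, without_exc, rows = vee_exception_subtotals(sample)
print(with_exc, without_exc, dict(rows))
```

Each key in the returned counter corresponds to one subtotal, which in the text above maps to one row on the fact.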

Set Up the Service Point VEE Exception Snapshot

To successfully configure a Service Point VEE Exception Snapshot fact extract:

Note:

This event controls if and how snapshots are taken for the service point. If there is no such algorithm plugged into the service point's BO, the service point is skipped.

- In case the algorithm Aggregate SP VEE Exceptions and Write to Flat File (D2-SPVEEEXC) is plugged in and has not been deactivated yet, deactivate it.

- Plug in the algorithm Aggregate SP VEE Exceptions and Insert to Download Table (D2-SPVEE-DL).

Note:

If the Business Object Algorithm table's caching regime is configured to be Cached for Batch, it is necessary to clear the cache in order for the algorithm changes to take effect. This can be done by either restarting the thread pool worker or running the Flush All Cache (F1-FLUSH) batch job.

Extract the Service Point VEE Exception Snapshot

The Service Point VEE Exception Snapshot fact has its own idiosyncratic batch process that takes the monthly or weekly snapshot of all active service points in the system.

Run the SP VEE Exception Snapshot Download (D2-VEEDL) to extract the service point information and populate the SP VEE Exception download table.