Migrating the On-premises Data to ADB

This section provides steps on how to migrate on-premises data to ADB Instance using the data pump export and import process.

Prerequisites

Make sure the following prerequisites are met:

• Prior knowledge of Database (DBA) and Autonomous Database instance (ADB).

• Before starting the migration, take a full backup of the entire database. This is necessary to perform object-level compression before taking the export backup again which will be used for migration/loading into the ADB instance.

• Keep the object storage bucket and credentials ready for to copy the exported dump and log files.

• Keep the ADB instance admin credentials ready to connect to the database.

• Before taking the export at source database, thoroughly check for any unusable indexes and invalid objects and fix them at source.

• Before you start the import, move the full export backup to the respective ADB storage bucket for executing the import.

• Before starting the migration, make sure the source and target timezone file versions are in sync or the target can be higher. Use the following query to check this at both the source and the target level.

select * from v$timezone_file;

For more information about timezone at the target level, refer to https://docs.oracle.com/en-us/iaas/autonomous-database-serverless/doc/manage-time-zone-file-version.html.

• This document uses ‘CISADM’ which is the admin schema of the product. However, if your environment has a different schema name, replace it in the SQL statements in the respective steps.

Pre-Migration Steps

• Make sure all pre-requisites are taken care before starting the actual migration to avoid issues during migration.

• Before taking an export at the source database, check for any unusable indexes including invalid objects, and fix them at the source database.

Example: Run the following queries to check unusable indexes:

select count(*),owner,status from dba_indexes where status='UNUSABLE' group by owner,status;

select count(*),index_owner,status from dba_ind_partitions where status='UNUSABLE' group by index_owner,status;

select count(*),index_owner,status from dba_ind_subpartitions where status='UNUSABLE' group by index_owner,status;

• To rebuild the partitioned index and normal index, use the following dynamic query to get the SQL statements. Run them one after the other.

select 'alter index '||owner||'.'||index_name||' rebuild ;' from dba_indexes where status='UNUSABLE';

select 'alter index '||index_owner||'.'||index_name||' rebuild partition '||partition_name||';' from dba_ind_partitions where status='UNUSABLE';

Example: To rebuild D1T304P0 owned by CISADM schema and having 8 partitions, run the following queries:

alter index CISADM.D1T304P0 rebuild partition P1;

alter index CISADM.D1T304P0 rebuild partition P2;

alter index CISADM.D1T304P0 rebuild partition P3;

alter index CISADM.D1T304P0 rebuild partition P4;

alter index CISADM.D1T304P0 rebuild partition P5;

alter index CISADM.D1T304P0 rebuild partition P6;

alter index CISADM.D1T304P0 rebuild partition P7;

alter index CISADM.D1T304P0 rebuild partition P8;

• Before taking a backup, check and perform partition/sub-partition compression at the object level. In ADB, there is no support for tablespace-level compression.

Please note that we have only DATA tablespace available in ADB. Hence, perform object-level compression and only then take the backup.

To compress table partitions/sub-partitions when compression is either ADVANCED or QUERY HIGH:

Important! Check and make necessary changes to the following scripts/PL-SQL block to include compression methods other than (ADVANCED/QUERY HIGH) to whatever exists in your database (Example: BASIC, ADVANCED, QUERY LOW, ARCHIVE LOW, ARCHIVE HIGH) and update the scripts accordingly before executing them.

1. Query the tables (DBA_TAB_PARTITIONS and DBA_TAB_SUBPARTITIONS) at the source to check what partitions and subpartitions are compressed.

select * from dba_tab_partitions where compress_for ='ADVANCED';

select * from dba_tab_subpartitions where compress_for ='ADVANCED';

select * from dba_tab_partitions where compress_for ='QUERY HIGH';

select * from dba_tab_subpartitions where compress_for ='QUERY HIGH';

If an other type of compression is enabled in your environment other than ADVANCED and QUERY HIGH, use any of the PL/SQL blocks (2 & 3 OR 4 & 5) for compression (partition and sub-partition) and replace compress_for accordingly including the ALTER statement in the PL/SQL block.

2. Compress sub-partitions for QUERY HIGH compression type.

BEGIN

for c1 in (select * from dba_tab_subpartitions where compress_for ='QUERY HIGH')

loop

execute immediate 'alter table '||c1.table_owner||'.'||c1.table_name||' modify subpartition '||c1.subpartition_name||' compress for Query high';

end loop;

END;

3. Compress partitions for QUERY HIGH compression type.

BEGIN

for c1 in (select * from dba_tab_partitions where compress_for ='QUERY HIGH' and subpartition_count=0)

loop

execute immediate 'alter table '||c1.table_owner||'.'||c1.table_name||' modify partition '||c1.partition_name||' compress for Query high';

end loop;

END;

4. Compress sub-partitions for ADVANCED compression type.

BEGIN

for c1 in (select * from dba_tab_subpartitions where compress_for ='ADVANCED')

loop

execute immediate 'alter table '||c1.table_owner||'.'||c1.table_name||' modify subpartition '||c1.subpartition_name||' compress for all operations';

end loop;

END;

5. Compress partitions for ADVANCED compression type.

BEGIN

for c1 in (select * from dba_tab_partitions where compress_for ='ADVANCED' and subpartition_count=0)

loop

execute immediate 'alter table '||c1.table_owner||'.'||c1.table_name||' modify partition '||c1.partition_name||' compress for all operations';

end loop;

END;

• Take metadata DDL of all the IOT partitioned indexes that will be used to re-create later after importing data into the ADB Instance due to restrictions in ADB where the partitioned IOT indexes will be created as NORMAL indexes during the import.

Set serveroutput on size 200000

Set head off

Set feed off

Set pages 0

Set lines 1000

SELECT to_char(DBMS_METADATA.GET_DDL ('INDEX', index_name, owner))

FROM dba_indexes where owner='CISADM' and

table_name in (select table_name from dba_tables where partitioned='YES' and IOT_TYPE='IOT' and table_name like 'D1%' and owner='CISADM');

• Make sure to recheck the unusable indexes and rebuild them before taking the backup. Migration will fail without this check.

• After completion of all the prerequisites, take an export backup of the schema. Run the following command:

expdp system/<system password>@<TNSENTRY_NAME> directory=<dump_directory_name> dumpfile=<dumpfile_name> logfile=<logfile_name> schemas=CISADM

If the database size is huge, you can enable parallelism while taking the backup. Use the parallel parameter in the command mentioned above.

• After the export is completed, verify the logfile to make sure no errors were encountered during the export. If there are any, fix them and re-run the export backup.

Migration

To migrate on-premises data to an ADB instance:

1. Create credentials in the ADB instance to access Object Storage.

This can be done either through the user’s private key or an auth token. For more information, refer to the Oracle Cloud Infrastructure documentation at: https://docs.oracle.com/en-us/iaas/Content/Registry/Tasks/registrygettingauthtoken.htm

Example:

begin

DBMS_CLOUD.CREATE_CREDENTIAL(credential_name => 'OCI_NATIVE_CRED', username => 'UGBU_Enterprise_DPIMP_ServiceUser', password => 'auth_token');

end;

/

Make sure to modify the service usernames and passwords accordingly before executing the command.

2. Query the dictionary for validation.

select * from dba_credentials;

3. Upload dump files to the Object Storage Bucket.

There are two ways to copy dump file(s) to the bucket:

• OCI Console GUI Mode:

a. Get the dump file in your local system and login to the OCI console.

b. Navigate to Storage > Buckets.

c. Select the compartment and bucket.

d. Navigate to Objects > Upload the dump file(s), and then click Upload.

• Curl Command:

curl -v -X PUT -u '<username>:<AUTH_TOKEN>' --upload-file <dumpfile_name> <URL_to_bucket>/<dumpfile_name>

Example:

curl -v -X PUT -u 'UGBU_Enterprise_DPIMP_ServiceUser:<AUTH_TOKEN>' --upload-file exp_demo.dmp https://objectstorage.us-phoenix-1.oraclecloud.com/n/idvvfekwvhut/b/UGBU-Dev-CMA-Bucket/o/exp_demo.dmp

4. Validate or list the files in the Object Storage Bucket.

After the dump files are copied to the bucket, list the files.

Make sure to check and update the bucket URL and credential name. Execute the SQL accordingly.

select * from

dbms_cloud.list_objects (credential_name => 'credentialName',

location_uri => 'bucketurl') order by last_modified desc;

5. Create user in the ADB instance before running data pump import.

Connect to Autonomous Database as ADMIN user and create users CISUSER and CISREAD.

CREATE USER CISUSER PROFILE DEFAULT IDENTIFIED BY <PASSWORD> DEFAULT TABLESPACE DATA TEMPORARY TABLESPACE TEMP;

GRANT SELECT ANY TABLE TO CISUSER;

GRANT CIS_USER TO CISUSER;

GRANT CIS_READ TO CISUSER;

GRANT CONNECT TO CISUSER;

CREATE USER CISREAD IDENTIFIED BY <PASSWORD> DEFAULT TABLESPACE DATA TEMPORARY TABLESPACE TEMP;

GRANT SELECT ANY TABLE TO CISREAD;

GRANT CIS_READ TO CISREAD;

GRANT CONNECT TO CISREAD;

6. Get the list of all tablespaces using the following query from the source database except the system tablespaces to remap those to the DATA tablespace in ADB because ADB does not support the creation of tablespaces.

SELECT TABLESPACE_NAME FROM DBA_TABLESPACES WHERE TABLESPACE_NAME NOT IN ('SYSTEM','SYSAUX','USERS','UNDOTBS1','TEMP');

7. Import the dump file to the ADB instance.

Connect to your cloud shell and import the data. Use the following example.

Note that the following snippet is just an example. Make sure to update the fields, such as bucket name, credentials, tablespace names to be remapped, etc accordingly before executing the import command.

impdp admin/**********r@<target_servicename> directory=<dump_directory_name> credential=<credential_name> dumpfile=<URL_Path_to_dumpfile>/<dumpfilename> schemas=<admin_Schema_Name> remap_tablespace=TABLESPACE1:DATA remap_tablespace=TABLESPACE2:DATA remap_tablespace=TABLESPACE2:DATA (YOU CAN ADD AS MANY TABLESPACES AS YOU WANT) logfile=<logfile_name>

8. Import all data tablespaces to DATA and all TEMP tablespaces to TEMP.

Important! Do not include system tablespaces except for users which are not available in ADB.

The following snippet is just an example. Make sure to thoroughly check tablespaces in the source database and then remap accordingly.

impdp admin/**********r@D1ILMNME_high directory=DATA_PUMP_DIR credential=data_pump_imp dumpfile=https://objectstorage.us-phoenix-1.oraclecloud.com/n/idvvfekwvhut/b/UGBU-Devv-Bucket/o/expdp_D1FRENCH_for_ADB.dmp schemas=CISADM remap_tablespace=CISTS_01:DATA remap_tablespace=CM_D1T304_P2011JAN_S181:DATA remap_tablespace=CM_D1T304_P2011JAN_SMAX:DATA remap_tablespace=CM_D1T304_P2011FEB_S181:DATA remap_tablespace=CM_D1T304_P2011FEB_SMAX:DATA remap_tablespace=CM_D1T304_P2011MAR_S181:DATA remap_tablespace=CM_D1T304_P2011MAR_SMAX:DATA remap_tablespace=CM_D1T304_P2011APR_S181:DATA remap_tablespace=CM_D1T304_P2011APR_SMAX:DATA remap_tablespace=CM_D1T304_P2011MAY_S181:DATA remap_tablespace=CM_D1T304_P2011MAY_SMAX:DATA remap_tablespace=CM_D1T304_P2011JUN_S181:DATA remap_tablespace=CM_D1T304_P2011JUN_SMAX:DATA remap_tablespace=CM_D1T304_P2011JUL_S181:DATA remap_tablespace=CM_D1T304_P2011JUL_SMAX:DATA remap_tablespace=CM_D1T304_P2011AUG_S181:DATA remap_tablespace=CM_D1T304_P2011AUG_SMAX:DATA remap_tablespace=CM_D1T304_P2011SEP_S181:DATA remap_tablespace=CM_D1T304_P2011SEP_SMAX:DATA remap_tablespace=CM_D1T304_P2011OCT_S181:DATA remap_tablespace=CM_D1T304_P2011OCT_SMAX:DATA remap_tablespace=CM_D1T304_P2011NOV_S181:DATA remap_tablespace=CM_D1T304_P2011NOV_SMAX:DATA remap_tablespace=CM_D1T304_P2011DEC_S181:DATA remap_tablespace=CM_D1T304_P2011DEC_SMAX:DATA remap_tablespace=CM_D1T304_PMAX_S181:DATA remap_tablespace=CM_D1T304_PMAX_SMAX:DATA remap_tablespace=CM_D1T304_IND:DATA remap_tablespace=CM_D1T314_IND:DATA logfile=import.log

9. When the import is completed, the import log file is generated in the local data pump location. Copy it to the storage bucket to access it.

Every Autonomous Database includes a predefined DATA_PUMP_DIR directory (/u03/dbfs/018847F69FD49DCAE0638614000A86BC/data/dpdump). Before copying the files, query the local data pump directory for log files, and then copy it to the object storage.

SELECT * FROM dbms_cloud.list_files('DATA_PUMP_DIR') order by created desc;

10. Copy the log files from local data pump directory to the object storage bucket.

Note that the following snippet is just an example. Modify/update the bucket and log file name accordingly.

BEGIN

DBMS_CLOUD.PUT_OBJECT(

credential_name => 'OCI_NATIVE_CRED',

object_uri => 'objectstorage_bukcet url',

directory_name => 'DATA_PUMP_DIR',

file_name => 'import_log.log');

END;

/

11. Fix the known issues or errors during the import.

After the log file is generated in the object storage bucket, look for ORA-errors in the file. There should not be any errors except the known errors mentioned below along with the fixes.

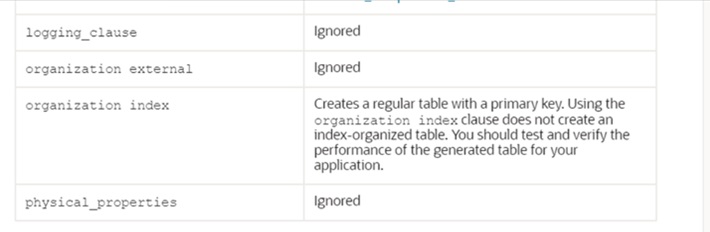

The following table lists the errors, and the respective fixes, during import:

Error | Fix |

|---|---|

ORA-39083: Object type ROLE_GRANT failed to create with error | Can be ignored |

ORA-01924: role 'DBA' not granted or does not exist | ADMIN user not able to grant the DBA role to other users |

ORA-39082: Object type PACKAGE BODY:"CISADM"."OUAF_SECURITY" created with compilation warnings. | To resolve this, grant the following to CISADM schema and recompile the package: GRANT EXECUTE ON DBMS_CRYPTO TO CISADM; ALTER PACKAGE CISADM.OUAF_SECURITY COMPILE; ALTER PACKAGE CISADM.OUAF_SECURITY COMPILE BODY; |

12. After the import is successful, recreate the IOT indexes in the ADB instance.

Verify if the import is completed sucessfully before proceeding with this step. Due to restrictions in ADB, the partitioned IOT indexes will be created as NORMAL indexes during the import process.

Take the DDL of IOT partitioned indexes from the source and then drop the same in ADB, and then re-create as partitioned IOT indexes.

Below is a dynamic SQL to pull DDL of IOT indexes:

SELECT to_char(DBMS_METADATA.GET_DDL ('INDEX', index_name, table_owner))

FROM dba_indexes

WHERE table_name in (select table_name from dba_tables where partitioned='YES' and IOT_TYPE='IOT' and table_name like 'D1%' and table_owner='CISADM');

Note: Make sure to replace the tablespace name with ‘DATA’ wherever applicable in the create index DDL before executing it in the target ADB and add the ‘LOCAL’ keyword if not added already.

13. Validate the object count after import.

Compare the object count at both source and target databases and the count should be the same.

select owner, object_type, count(*) from dba_objects where owner = 'CISADM' and object_name NOT IN (select object_name from dba_recyclebin where owner = 'CISADM') group by owner, object_type order by 1;