General Configurations if Big Data Processing License is Enabled

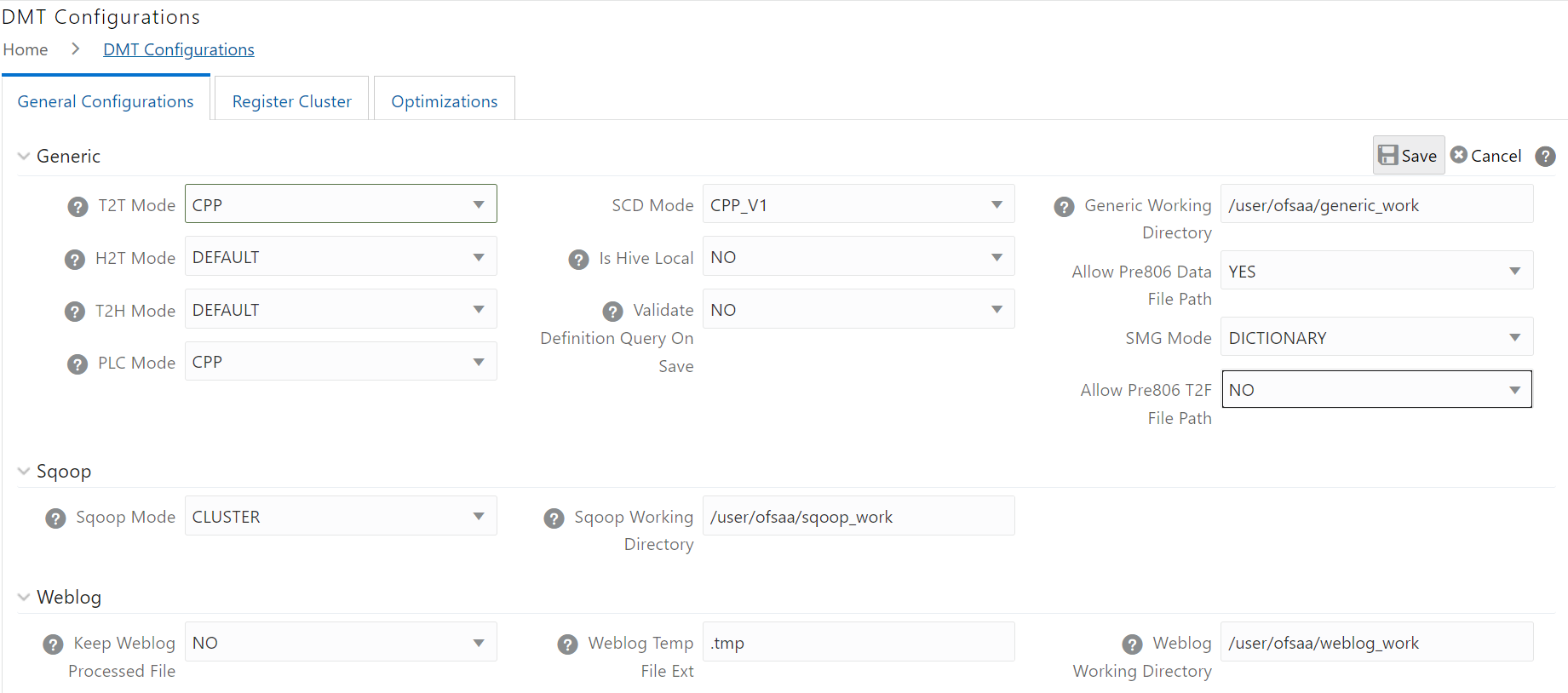

Figure 8-32 DMT Configurations window

Table 8-10 Fields in the DMT Configurations window and their Descriptions

| Property Name | Property Value |
|---|---|
| Generic | |
| T2T Mode | From the list, select the T2T mode to use for executing the Data Mapping definition. The options are Default (for Java engine) and CPP (for CPP engine). |
| H2T Mode | From the list, select the H2T mode to use for executing the Data Mapping definition. The options are Default, Sqoop, and OLH. OLH (Oracle Loader for Hadoop) must be installed and configured in your system. For more information on how to use OLH for H2T, see the Oracle® Loader for Hadoop (OLH) Configuration section in the OFS Analytical Applications Infrastructure Administration Guide. Sqoop must be installed and configured in your system. For more information, see the Sqoop Configuration section in the OFS Analytical Applications Infrastructure Administration Guide. Additionally, register the cluster information of the source Information domain using the Register Cluster tab. |
| T2H Mode | From the list, select the T2H mode to use for executing the Data Mapping definition. The options are Default and Sqoop. The Default option requires additional configurations, which are explained in the Data Movement from RDBMS Source to HDFS Target (T2H) section in the OFS Analytical Applications Infrastructure Administration Guide. Additionally, register the cluster information of the target Information domain using the Register Cluster tab. For the Sqoop option, Sqoop must be installed and configured in your system. For more information, see the Sqoop Configuration section in the OFS Analytical Applications Infrastructure Administration Guide. Additionally, register the cluster information of the source Information domain using the Register Cluster tab. |
| PLC Mode | From the list, select the execution mode to use for the Post Load Changes definition. The options are Default (for Java engine) and CPP (for CPP engine). |
| SCD MODE | This field is applicable only if SCD uses a merge approach. Note: For backdated executions containing type 2 column mappings, the following column mappings are mandatory: |
| Is Hive Local | This field is applicable for T2H and F2H. From the drop-down list, select Yes if HiveServer is running locally to OFSAA; otherwise, select No. |
| Validate Definition Query on Save | Select Yes to validate the SQL Query of the Data Mapping definition on save. |
| Generic Working Directory | Specify the path of the HDFS working directory for generic operations. By default, the path is set to `/user/ofsaa/GenericPath`. |
| Allow Pre806 Data File Path | This field is applicable only if you upgrade from an earlier version to version 8.1.0.0.0. For a fresh installation of version 8.1.0.0.0 using the Full installer, this field is not applicable. For F2T, the path for the Data File in versions before 8.0.6.0.0 is `/<ftpshare>/STAGE/<FileBasedSource>/<MISDate>/<dataFile.dat>`. In 8.1.0.0.0, it is changed to `/ftpshare/<INFODOM>/dmt/source/<Data Source Code>/data/<MISDATE>/<dataFile.dat>`. Select Yes to allow the old Data File path in version 8.1.0.0.0. |
| SMG Mode | By default, the Source Model Generation (SMG) mode is set to Dictionary. When SMG Mode is Dictionary, the time taken to generate Source models of Views from the database is optimized. Select Default to use the earlier mode. |
| Allow Pre806 T2F File Path | In versions before 8.0.6.0.0, the T2F extract file path is `<ftpshare>/STAGE/<SOURCE_CODE>/<MISDATE>`. Select Yes to use this earlier extract path. If you select No, the extract file path is set to `<ftpshare>/<INFODOM>/dmt/def/<DEFINITION_CODE>/<BATCH_ID>_<TASK_ID>/<MISDATE>`. |
| Sqoop (This section is applicable only if you select Sqoop for T2H Mode or H2T Mode.) | |
| Sqoop Mode | From the drop-down list, select Client to execute Sqoop in client mode or Cluster to execute Sqoop in cluster mode. If you select Cluster as the Sqoop Mode, register the cluster from the Register Cluster tab. For more details, see Registering a Cluster. Note: In cluster mode, you do not need to copy any Sqoop jars or Hadoop/Hive configuration XMLs to OFSAAI. |
| Sqoop Working Directory | Specify the path of the HDFS working directory for Sqoop-related operations. |
| WebLog (This section is applicable only for L2H.) | |
| Keep Weblog Processed File | Select Yes or No from the drop-down list. Yes: The working directory is retained with the processed WebLog files. If the data loading was successful, Processed is appended to the WebLog file name; otherwise, Working is appended. No: The working directory is deleted after data loading. |
| Weblog Temp File Ext | Enter the extension of the Weblog temporary file. |
| Weblog Working Directory | Enter the name of the temporary working directory in HDFS. |
| File Encryption | |
| Encryption At rest | From the drop-down list, select Yes if encryption is required for T2F or H2F and decryption is required for F2T or F2H. |
| Key File Name | Enter the name of the Key File that you used for encrypting the Data File. |
| Key File Path | Enter the absolute path of the Key File that you used for encrypting the Data File. |
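The two directory layouts named in the Allow Pre806 Data File Path and Allow Pre806 T2F File Path rows can be illustrated with a short Python sketch. This is not part of the product: the function names, the parameter names, and the sample values are hypothetical, and the ftpshare root is passed in as an argument. The sketch only builds the path strings given in the table; it does not model how OFSAA resolves files when either option is set to Yes.

```python
from pathlib import PurePosixPath


def f2t_data_file_path(ftpshare, infodom, source_code, mis_date, data_file,
                       pre806=False):
    """Build the F2T Data File path for one of the two layouts in the table.

    All parameter names are illustrative, not OFSAA identifiers.
    """
    root = PurePosixPath(ftpshare)
    if pre806:
        # Layout before 8.0.6.0.0:
        # <ftpshare>/STAGE/<FileBasedSource>/<MISDate>/<dataFile.dat>
        return root / "STAGE" / source_code / mis_date / data_file
    # Layout in 8.1.0.0.0:
    # <ftpshare>/<INFODOM>/dmt/source/<Data Source Code>/data/<MISDATE>/<dataFile.dat>
    return (root / infodom / "dmt" / "source" / source_code
            / "data" / mis_date / data_file)


def t2f_extract_dir(ftpshare, infodom, source_code, definition_code,
                    batch_id, task_id, mis_date, pre806=False):
    """Build the T2F extract directory for one of the two layouts in the table."""
    root = PurePosixPath(ftpshare)
    if pre806:
        # Layout before 8.0.6.0.0: <ftpshare>/STAGE/<SOURCE_CODE>/<MISDATE>
        return root / "STAGE" / source_code / mis_date
    # Later layout:
    # <ftpshare>/<INFODOM>/dmt/def/<DEFINITION_CODE>/<BATCH_ID>_<TASK_ID>/<MISDATE>
    return (root / infodom / "dmt" / "def" / definition_code
            / f"{batch_id}_{task_id}" / mis_date)


if __name__ == "__main__":
    # Sample values are made up for illustration only.
    print(f2t_data_file_path("/ftpshare", "INFODOM1", "SRC1",
                             "20240131", "accounts.dat"))
    print(f2t_data_file_path("/ftpshare", "INFODOM1", "SRC1",
                             "20240131", "accounts.dat", pre806=True))
    print(t2f_extract_dir("/ftpshare", "INFODOM1", "SRC1", "DEF1",
                          "BATCH1", "TASK1", "20240131"))
```

Comparing the two outputs for the same inputs shows what changes between layouts: the earlier layout is keyed only by source and MIS date under `STAGE`, while the later layout is scoped by Information domain and, for T2F, by definition, batch, and task.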

Note: You can use the `BackendServerProperties.conf` file in the `ficdb/conf` layer to support the required Timezone and Time Format in the CPP logs.
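The dependencies that Table 8-10 states between H2T Mode, T2H Mode, and the Sqoop section can be summarized as a small checklist. The Python sketch below is only an illustration of those stated rules: `review_settings` and its parameters are hypothetical names, not an OFSAA API, and the check covers only the option lists and the cluster registration requirement given in the table.

```python
# Option lists as given in Table 8-10.
H2T_MODES = ("Default", "Sqoop", "OLH")
T2H_MODES = ("Default", "Sqoop")
SQOOP_MODES = ("Client", "Cluster")


def review_settings(h2t_mode, t2h_mode, sqoop_mode=None,
                    cluster_registered=False):
    """Return a list of findings for a hypothetical set of DMT selections."""
    findings = []
    if h2t_mode not in H2T_MODES:
        findings.append(f"H2T Mode must be one of {H2T_MODES}")
    if t2h_mode not in T2H_MODES:
        findings.append(f"T2H Mode must be one of {T2H_MODES}")

    # The Sqoop section applies only if Sqoop is selected for T2H or H2T.
    if "Sqoop" not in (h2t_mode, t2h_mode):
        if sqoop_mode is not None:
            findings.append("Sqoop section is not applicable: "
                            "neither H2T Mode nor T2H Mode is Sqoop")
        return findings

    if sqoop_mode not in SQOOP_MODES:
        findings.append(f"Sqoop Mode must be one of {SQOOP_MODES}")
    elif sqoop_mode == "Cluster" and not cluster_registered:
        # Cluster mode requires a registered cluster.
        findings.append("Sqoop Mode is Cluster: register the cluster "
                        "from the Register Cluster tab")
    return findings


if __name__ == "__main__":
    for finding in review_settings("Sqoop", "Default", sqoop_mode="Cluster"):
        print(finding)
```

An empty list means the selections are consistent with the rules encoded here; it says nothing about whether Sqoop or OLH is actually installed and configured on the system.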