8 Task Execution Engine

Task execution engine enables you to invoke common database operations during data conversion. The tasks include gathering database stats, killing a session, taking table backups, and table purging. These operation are performed by an admin user and should be carefully invoked such that there execution does not overlap with data migration run and vice versa.

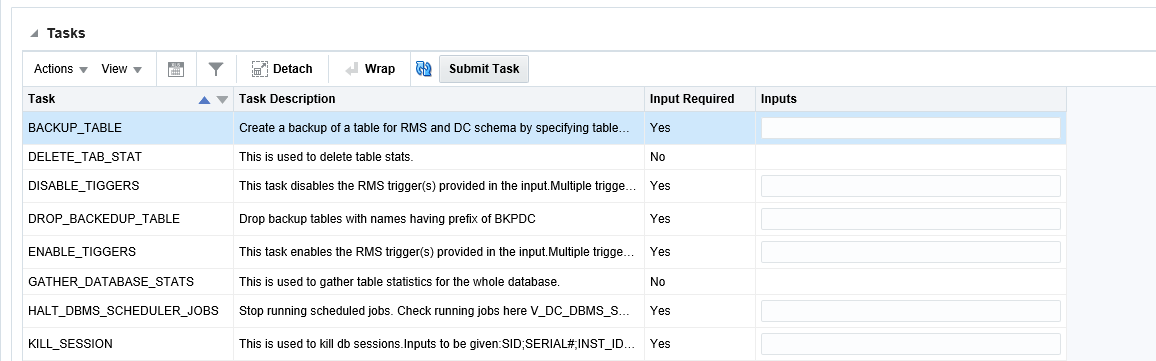

These tasks are accessed from the System Administration screen in the Data Conversion tool. From the Tasks section, select an operation and provide input parameters as required. If you need to clear the Inputs field click the refresh icon button (![]() ). To start the selected task, click on Submit Task button in the table toolbar. Once the task is triggered, it runs as an asynchronous process.

). To start the selected task, click on Submit Task button in the table toolbar. Once the task is triggered, it runs as an asynchronous process.

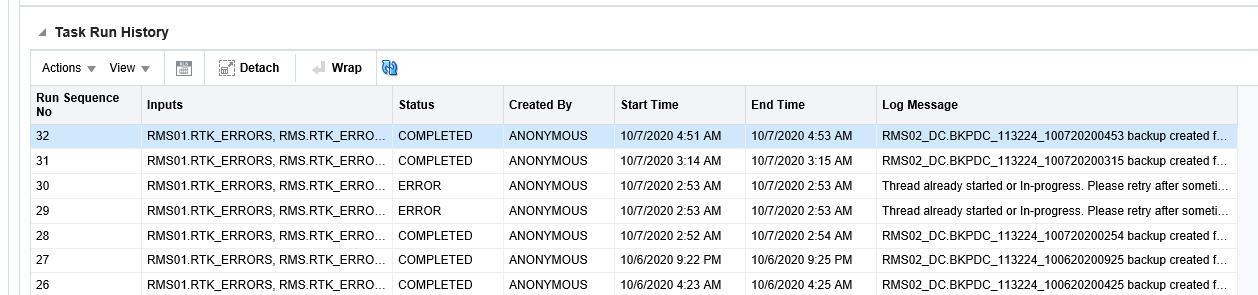

To check the status, click on Refresh icon(![]() ) in the Task Run History section below. The Task Run History section shows the current and past execution history including provided inputs, excution timings and status for a task.

) in the Task Run History section below. The Task Run History section shows the current and past execution history including provided inputs, excution timings and status for a task.

The following database operations are supported by this feature:

| Task | Task Description |

|---|---|

|

GATHER_DATABASE_STATS |

This task gathers the DB statistics. This should not be executed when a conversion is under process as it may impact the performance of an ongoing data conversion run and vice versa. This can be used as an alternate to running the GATHER_STATS job from POM. Input Format: Not required |

|

KILL_SESSION |

This task kills a data conversion session in the conversion schema. It will not impact uses in the Merchandising schema. You can get the session information by querying the V_DC_SESSION_INFO view using the APEX Data Viewer by prefixing the data conversion schema name. Input Format: <SID>;<SERIAL#>;<INST_ID> All three values are to be provided separated by semicolon, for example: 1;1;1 |

|

PURGE_TABLE |

This task truncates data conversion or Merchandising tables which are not seed tables. You should ensure tables are truncated as per their dependencies (that is, the child tables are truncated before the parent). Input Format: <SCHEMA>.<TABLE_NAME> Input is the table name prefixed with the schema name. For example, RMS01.ITEM_LOC can be given as input to truncate ITEM_LOC table of Merchandising schema RMS01. |

|

BACKUP _TABLE |

This can be used to backup any Merchandising or data conversion table if you wish to save a copy for lookup before you truncate/purge. The table is saved in the conversion schema with a generated name prefixed with "BKPDC". The generated table name would be available in the Log Message in the Task Run History section of the System Administration screen. You can query the generated table's data using the APEX Data Viewer by prefixing the data conversion schema name. Input format: <SCHEMA_NAME>.TABLE_NAME Multiple tables can be given as input with comma as separator, for example: RMS01.ITEM_MASTER, RMS01_DC. SVC_ITEM_MASTER |

|

ENABLE_TRIGGER |

This can be used to enable one or more triggers. You can also choose to enable all triggers by passing 'ALL' as the input parameter. The trigger names are to be provided as input. Input Format: <TRIGGER_NAME1>,<TRIGGER_NAME2> Multiple triggers can be given as input with comma as separator. For example, EC_TABLE_UIF_AIUDR,EC_TABLE_UIL_AIDR,EC_TABLE_VI_AIUDR,EC_TABLE_ISU_AIUDR |

|

DISABLE_TRIGGER |

This can be used to disable one or more triggers. The trigger names are to be provided as input. Input Format: <TRIGGER_NAME1>,<TRIGGER_NAME2> Multiple triggers can be given as input with comma as separator. For example, EC_TABLE_UIF_AIUDR, EC_TABLE_UIL_AIDR, EC_TABLE_VI_AIUDR, EC_TABLE_ISU_AIUDR |

|

HALT_DBMS_SCHEDULER_JOBS |

This task will stop scheduled jobs that are currently running for data conversion. To view details of a scheduled job whether it is running or already completed, query the view V_DC_DBMS_SCHD_JOB_RUN_DETAILS using the APEX Data Viewer by prefixing the data conversion schema name. Input Format:<JOB_NAME> Multiple jobs can be given as input with comma as separator. For example, DC_ITEM_LOC_11, DC_ITEM_LOC_12, DC_ITEM_LOC_13 |

|

DROP_BACKEDUP_TABLE |

This task will drop backup tables previously backed up into the data conversion schema through the BACKUP_TABLE task. Input Format: <TABLE_NAME> Multiple tables can be given as input with comma as separator. For example, BKPDC_113224_091120200259, BKPDC_113224_091120200319 |

|

DELETE TABLE STATS |

This works similar to Merchandising DELETE_TAB_STATS.KSH batch. This truncates temporary tables and locks stats for performance.Input Format: Not required |

|

DISABLE_PUBLISHING_TRIGGERS |

This task disables all MFQUEUE publishing triggers. This should be run prior to the start of data conversion. To enable required publishing triggers post data conversion, execute ENABLE_TRIGGER task. Input Format: Not required |

|

INIT_PUBLISHING |

This task initializes the merchandising publishing tables. It clears MFQUEUE tables (if any data), populates PUB_INFO tables as needed and sets the published indicator to Y for the converted data. This task should be run after completing conversion of data, prior to lift and shift. Input Format: Not required |

|

STOCKLEDGER_PROCESSING |

This task initializes the starting inventory totals in the stock ledger tables based on the stock on hand conversion. Verify and align data in the following tables before you execute the task:

Input Format: <NO_OF_THREADS>;<RECALC_OPTION> It accepts two input parameters,

|

|

UPDATE_DC_SYSTEM_OPTIONS |

This task updates values in the DC_SYSTEM_OPTIONS table. The following fields can be updated using this task:

All these fields except MASTER_PROCESS_ID can be set 'Y' or 'N'. For the MASTER_PROCESS_ID column, the allowed value is NULL and should be used when a mass upload process is terminated using a kill session option. In this case, DC_SYSTEM_OPTIONS.MASTER_PROCESS_ID would still have the old process id and this needs to be cleaned up before starting a new mass upload process. Input Format: < FIELD_NAME>;< FIELD_VALUE > Input is field name and value separated by semicolon, for example, |

|

DC_RPM_SEED_FUTURE_RETAIL |

This task seeds the future retail values in Pricing for all active item/locations in the system at the time the process is run. Verify and align item/locations be-fore you execute the task, Input Format: <NO_OF_THREAD> It requires total number of threads for parallel ex-ecution as the input parameter. |

Important Notes

-

The operations covered here should be invoked with caution by users with higher privileges. Some of these tasks might impact an on-going conversion run and should not be triggered when a data conversion is in progress. Similarly, conversions should not be run while these tasks are under execution.

-

Avoid any spaces in the input string to these tasks.

-

Maximum data length for the input string can up to 1000 characters. If required, break the activity into multiple calls to required tasks.