Note:

- This tutorial requires access to Oracle Cloud. To sign up for a free account, see Get started with Oracle Cloud Infrastructure Free Tier.

- It uses example values for Oracle Cloud Infrastructure credentials, tenancy, and compartments. When completing your lab, substitute these values with ones specific to your cloud environment.

Export OCI Audit Logs in CSV format for a custom time range

Introduction

OCI Audit Logs are related to events emitted by the Oracle Cloud Infrastructure Audit service. These logs are available from the OCI Console Logging Audit page, or are searchable on the Search page alongside the rest of your logs. You can export Oracle Cloud Infrastructure (OCI) Audit Logs in JSON format for a custom time range of maximum 14 days from the Oracle Cloud Console.

In this tutorial, you’ll learn how to deploy a custom script for exporting Audit Logs for a duration greater than 14 days in CSV format.

Note: The maximum retention period of Audit Logs is 365 days only.

Objectives

Deploy a custom Python script to export OCI Audit Logs in CSV format for any custom time range (less than 365 days).

Prerequisites

- The VM on which the script is deployed should be a Linux instance.

- Install OCI CLI on the VM or run the script from the Cloud Shell.

- Install Python3 on the VM.

Task 1: Deploy the custom Python script

-

Verify if Python is installed on the VM using the command.

python3 –-version -

Install the Pandas library using the command.

pip3 install pandas -

Create a new directory and change to the directory for deploying the script.

mkdir <directory-name> cd <directory-name> -

Create a file with the name main.py and edit the file using any editor (here vim editor has been used).

touch main.py vim main.py -

Copy-paste the contents of the script as shown below within the main.py file and save the file contents by typing :x and clicking Enter.

import pandas as pd import csv import os import datetime from datetime import timedelta,date import subprocess import json import sys import getopt def myfunc(argv): START_DATE = "" END_DATE = "" COMPARTMENT_ID = "" arg_help = "\nUsage:\n\npython3 main.py -s <log_start_date in YYYY-MM-DD Format> -e <log_end_date in YYYY-MM-DD Format> -c <Compartment_ID>\n".format(argv[0]) try: opts, args = getopt.getopt(argv[1:],"hs:e:c:") except: print("\nenter valid argument values. Try -h option with script to know the usage.\n") sys.exit(2) for opt, arg in opts: if opt in ("-h"): print(arg_help) # print the help message sys.exit(2) elif opt in ("-s"): START_DATE = arg elif opt in ("-e"): END_DATE = arg elif opt in ("-c"): COMPARTMENT_ID = arg INC = -1 year1, month1, day1 = map(int, START_DATE.split('-')) DATE1 = datetime.date(year1, month1, day1) year2, month2, day2 = map(int, END_DATE.split('-')) DATE2 = datetime.date(year2, month2, day2) NUM_DAYS = DATE2 - DATE1 NUM_DAYS = NUM_DAYS.days # Function for converting JSON to .CSV format def convert_csv(): with open('out.json', 'r') as file: data = json.load(file) datetimez = [] compartmentID = [] compartmentName = [] message = [] tenantId = [] userAgent = [] path = [] ingestedtime = [] type = [] id = [] for ele in data['data']['results']: datetimez.append(ele['data']['datetime']) compartmentID.append(ele['data']['logContent']['data']['compartmentId']) compartmentName.append(ele['data']['logContent']['data']['compartmentName']) message.append(ele['data']['logContent']['data']['message']) tenantId.append(ele['data']['logContent']['data']['identity']['tenantId']) userAgent.append(ele['data']['logContent']['data']['identity']['userAgent']) path.append(ele['data']['logContent']['data']['request']['path']) ingestedtime.append(ele['data']['logContent']['oracle']['ingestedtime']) type.append(ele['data']['logContent']['type']) id.append(ele['data']['logContent']['id']) finaldate = [] for ts in datetimez: finaldate.append(datetime.datetime.fromtimestamp(int(ts) / 1000).strftime('%Y-%m-%d %H:%M:%S')) output = zip(finaldate, compartmentID, compartmentName, message, tenantId, userAgent, path, ingestedtime, type, id) output = list(output) df = pd.DataFrame(output) df.to_csv('/tmp/out.csv', header=False , mode='a',index=False) return None # Check and validate the .CSV file in the /tmp directory os.system("touch /tmp/out.csv" ) os.remove("/tmp/out.csv") header=['Date-Time', 'CompartmentID', 'CompartmentName', 'Message', 'TenantId', 'UserAgent', 'Path', 'Ingested-Time', 'Type', 'ID'] data = [] with open('/tmp/out.csv', 'a') as f: writer = csv.writer(f) writer.writerow(header) writer.writerows(data) # Block for saving Audit Logs in JSON format to out.json file for i in range(INC, NUM_DAYS): print("\ncollecting logs for", DATE1) p = subprocess.Popen(''' oci logging-search search-logs --search-query 'search "''' + str(COMPARTMENT_ID) + '''/_Audit" | sort by datetime desc' --time-start ''' + str(DATE1) + '''"T00:00:00Z" --time-end ''' + str(DATE1) + '''"T23:59:00Z" > out.json ''', shell=True, stdout=subprocess.PIPE) (output, err) = p.communicate() convert_csv() PAG = subprocess.check_output(''' oci logging-search search-logs --search-query 'search "''' + str(COMPARTMENT_ID) + '''/_Audit" | sort by datetime desc' --time-start ''' + str(DATE1) + '''"T00:00:00Z" --time-end ''' + str(DATE1) + '''"T23:59:00Z" | grep "opc-next-page" | awk -F":" '{print $2}' | tr -d '"' | tr -d " " | tail -1 ''', shell=True).strip().decode('ascii') while (PAG != ""): p = subprocess.Popen(''' oci logging-search search-logs --search-query 'search "''' + str(COMPARTMENT_ID) + '''/_Audit" | sort by datetime desc' --time-start ''' + str(DATE1) + '''"T00:00:00Z" --time-end ''' + str(DATE1) + '''"T23:59:00Z" --page ''' + str(PAG) + ''' > out.json ''', shell=True, stdout=subprocess.PIPE) (output, err) = p.communicate() convert_csv() PAG = subprocess.check_output(''' oci logging-search search-logs --search-query 'search "''' + str(COMPARTMENT_ID) + '''/_Audit" | sort by datetime desc' --time-start ''' + str(DATE1) + '''"T00:00:00Z" --time-end ''' + str(DATE1) + '''"T23:59:00Z" --page ''' + str(PAG) + ''' | grep "opc-next-page" | awk -F":" '{print $2}' | tr -d '"' | tr -d " " | tail -1 ''', shell=True).strip().decode('ascii') print("successfully collected logs for", DATE1) DATE1 += timedelta(days=1) i = i + 1 print("\nThe .csv file is saved in location /tmp/out.csv") if __name__ == "__main__": myfunc(sys.argv)

Task 2: Execute the custom Python script

-

Provide execute permissions to the main.py file using the command.

chmod +x main.py -

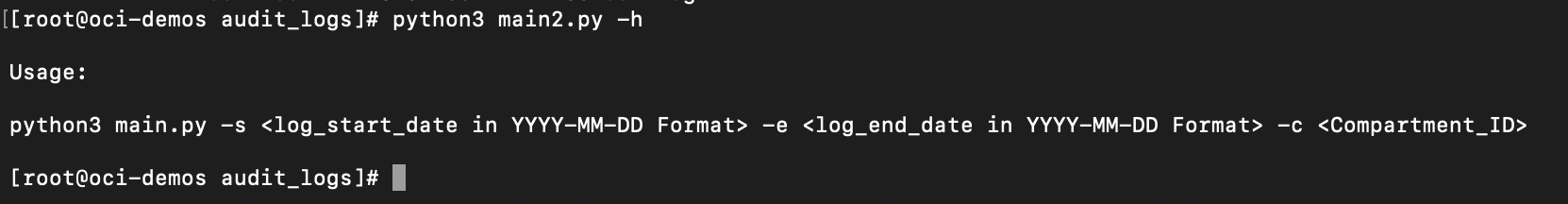

The script needs to be run with log start date, log end date and compartment-id parameters. To check the exact usage of the script run the script with ‘-h’ option as shown below.

python3 main.py -hThis will display the correct syntax of the script.

-

After running the script, the date for which the script is running for will be displayed on the screen with a success message, followed by the message stating the output .csv file location.

Note:

You must enter the start and end date for the Audit Logs in YYYY-MM-DD format along with the Compartment ID of the compartment for which you want to export the Audit Logs.

For larger environments or for larger time durations the amount of Audit Logs to be collected and converted to .csv format will be in millions for which the script might take few hours to run. It will be better to run the script for such environments in the background with ‘&’ option.

You can use

nohuplinux command while running the script in the background so that all the output logs will be directed to thenohup.outfile which you can review later. You can run the script with thenohuputility using the following command and later check the logs in thenohup.outfile.nohup python3 main.py -s <log_start_date in YYYY-MM-DD Format> -e <log_end_date in YYYY-MM-DD Format> -c ><Compartment_ID> &

The CSV file is generated and stored in the following folder: /tmp/out.csv.

Task 3: Schedule the collection of Audit Logs (optional)

If you want to schedule a monthly/weekly collection of Audit Logs you can do so by setting the cronjobs such that the older .csv file is copied to another file and new logs are saved in the out.csv file.

-

Type the following command to edit the crontab.

crontab -e -

Add the following cronjobs to be scheduled. Ensure that you replace the **

** parameter with the compartment id of your compartment/Tenancy. 0 1 1 * * export DATE=$(date +'%Y-%m-%d-%T') && cp /tmp/out.csv out-$DATE.csv 15 1 1 * * export DATE1=$(date +'%Y-%m-%d') && export DATE2=$(date -d "$DATE1 -1 month" +%Y-%m-%d) && python3 main.py -s DATE1 -e DATE2 -c <Compartment_ID>The above jobs will run on the 1st of every month at 1 A.M. and 1.15 A.M. respectively. You can change the time based on your requirements.

Note: You can schedule the job to run on the 30th or 31st of a month so that your script does not miss a day or return an error.

-

Save the file and review the active cronjobs using the following command.

crontab -l

Related Links

Acknowledgments

- Author - Maninder Singh Flora (Cloud Architect)

More Learning Resources

Explore other labs on docs.oracle.com/learn or access more free learning content on the Oracle Learning YouTube channel. Additionally, visit education.oracle.com/learning-explorer to become an Oracle Learning Explorer.

For product documentation, visit Oracle Help Center.

Export OCI Audit Logs in CSV format for a custom time range

F57892-02

December 2022

Copyright © 2022, Oracle and/or its affiliates.