Note:

- This tutorial requires access to Oracle Cloud. To sign up for a free account, see Get started with Oracle Cloud Infrastructure Free Tier.

- It uses example values for Oracle Cloud Infrastructure credentials, tenancy, and compartments. When completing your lab, substitute these values with ones specific to your cloud environment.

Push Oracle Cloud Infrastructure Logs to Amazon S3 with OCI Functions

Introduction

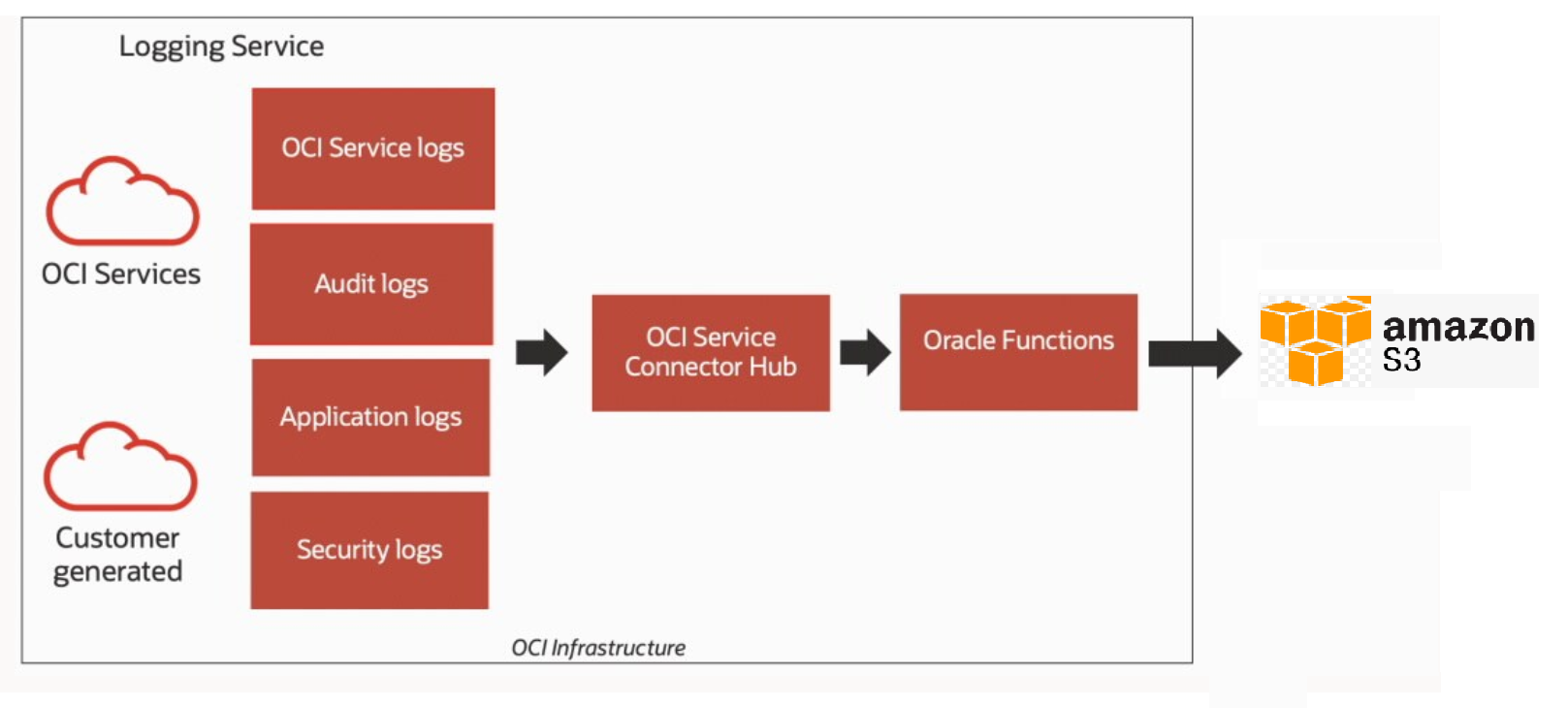

The Oracle Cloud Infrastructure (OCI) Logging Service provides a unified view of all logs generated by OCI services and resources, as well as custom logs created by users.

OCI Functions is a serverless compute platform that empowers developers to build and deploy lightweight, event-driven applications in the cloud.

Amazon S3 is a highly scalable and reliable cloud storage service provided by Amazon that enables users to store and retrieve any amount of data from anywhere in the world.

At a high level, a Service Connector collects logs from the OCI Logging service and sends them to a target function, which then pushes the data to Amazon S3.

Objective

This tutorial is a step-by-step guide to pushing OCI logs to Amazon S3 with OCI Functions.

Prerequisites

- Users in Amazon Web Services (AWS) must have the required policies to create the bucket and to read and write objects in it. For more information on the bucket policies, follow this page.

- Users in OCI must have the required policies to manage resources in the Functions, Service Connector Hub, and Logging services. The policy reference for all the services is here.

Task 1: Configure AWS settings

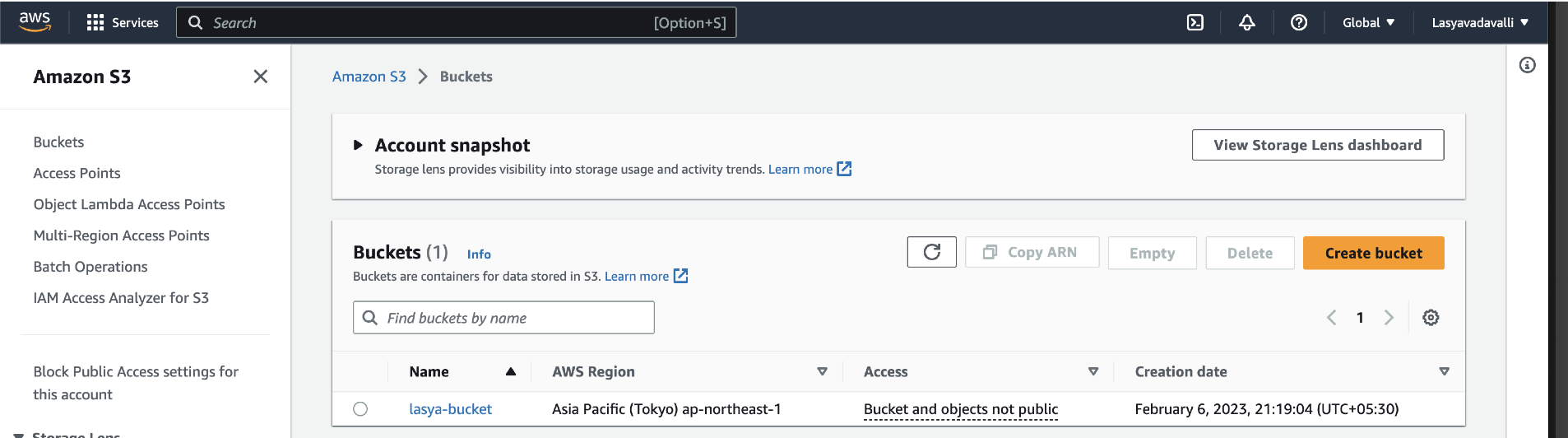

-

Create an Amazon S3 bucket.

-

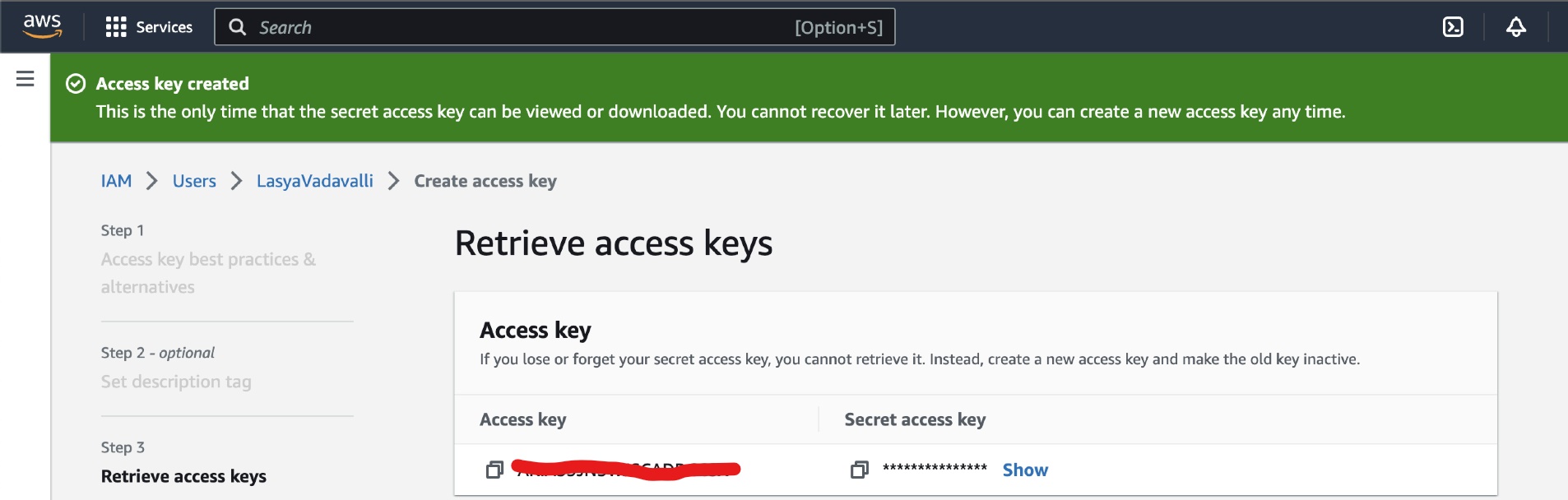

Create an AWS access key and secret key for the user under IAM.

-

Navigate to IAM, Users, Security Credentials, Create access key, Third-party service.

-

Copy the generated AWS access key and secret key to a Notepad file.

-

Task 2: Configure Oracle Cloud Infrastructure settings

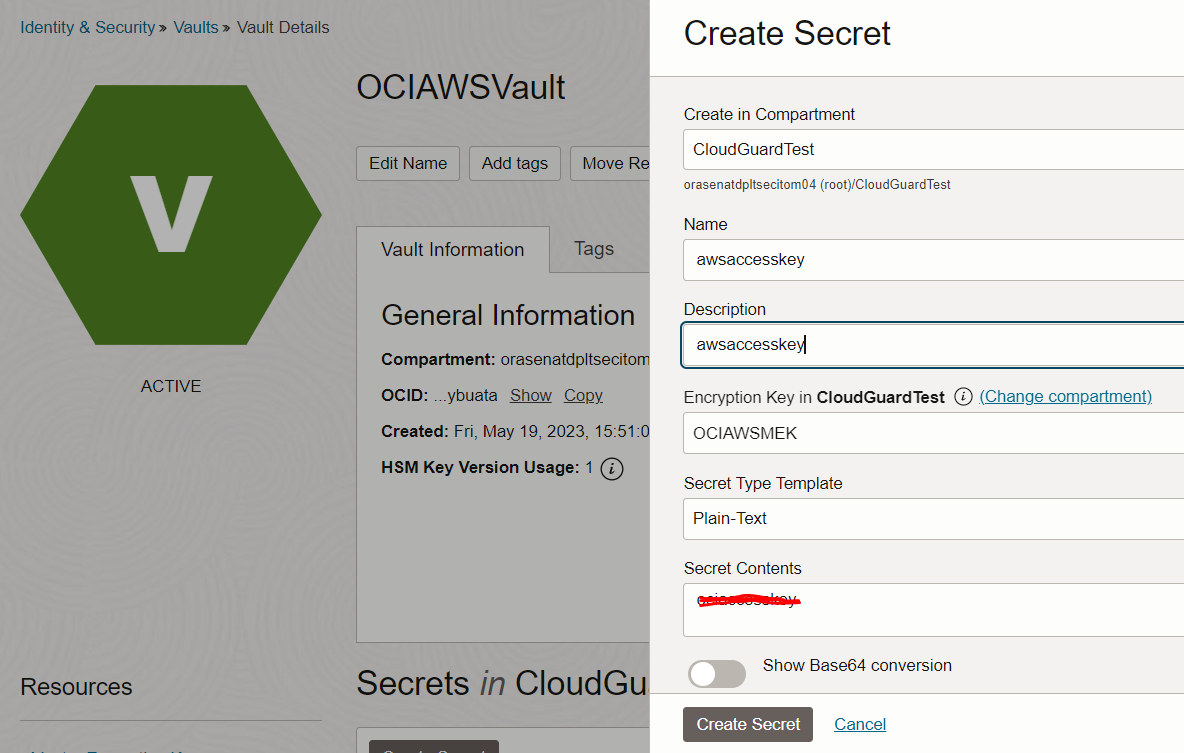

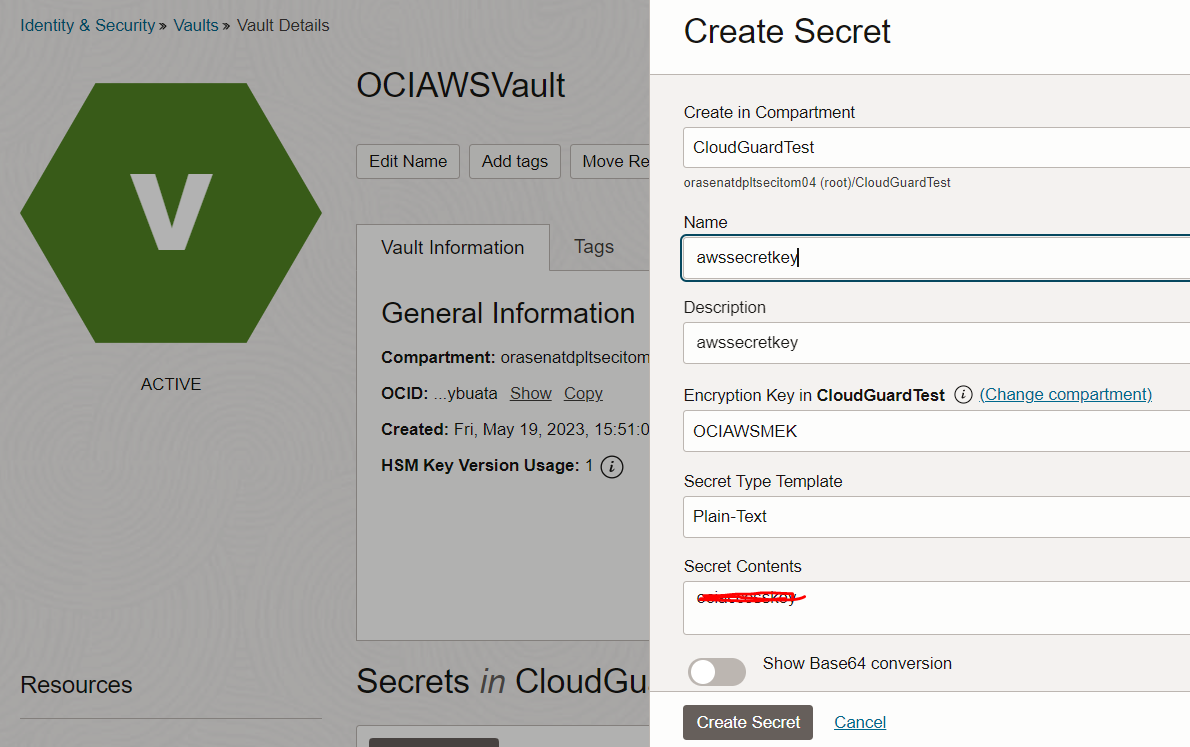

Store AWS access key and secret key in OCI Vault

- Navigate to Identity and Security, Vaults. Create a vault and a master encryption key (MEK) if they do not already exist. For more information, follow this doc.

- Create two secrets in the vault, one for storing the AWS access key and the other for the AWS secret key. The images above are for reference.
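The function code later in this tutorial base64-decodes the secret content it reads from the vault. The following minimal sketch shows that decoding step on its own, using only the Python standard library. The helper name `decode_secret_content` and the sample value are hypothetical and exist only for illustration.

```python
import base64


def decode_secret_content(base64_content: str) -> str:
    """Decode base64 text, as found in a secret bundle, to the plain value."""
    return base64.b64decode(base64_content.encode("ascii")).decode("utf-8")


# Hypothetical placeholder value, not a real AWS key.
plain_value = "EXAMPLE_ACCESS_KEY_ID"

# Encode it the way the content appears when it is read back from the vault.
stored_form = base64.b64encode(plain_value.encode("utf-8")).decode("ascii")

print(stored_form)
print(decode_secret_content(stored_form))
```

Running this locally is a quick way to confirm that a value round-trips cleanly before you store it as a secret.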

Create a dynamic group

- Navigate to Identity & Security, Identity, Dynamic Groups.

- Create a dynamic group for all functions in your compartment with the following matching rule.

      ALL {resource.type = 'fnfunc', resource.compartment.id = '<your_compartment_id>'}

Create a policy for the dynamic group

- Navigate to Identity & Security, Identity, Policies.

- Create a policy for the above dynamic group to let all functions access secret-family in the respective compartment. The policy can also be narrowed down to a single vault.

      allow dynamic-group <dynamic_group_name> to manage secret-family in compartment <compartment_name>
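If you create these resources for several compartments, it can help to generate the matching rule and the policy statement from your own values instead of editing them by hand. The following sketch only builds the two strings shown above. The function names and the sample OCID, group name, and compartment name are hypothetical.

```python
def dynamic_group_rule(compartment_ocid: str) -> str:
    """Build the matching rule for all functions in one compartment."""
    return (
        "ALL {resource.type = 'fnfunc', "
        f"resource.compartment.id = '{compartment_ocid}'}}"
    )


def secret_policy(dynamic_group_name: str, compartment_name: str) -> str:
    """Build the policy statement that grants the group access to secrets."""
    return (
        f"allow dynamic-group {dynamic_group_name} to manage secret-family "
        f"in compartment {compartment_name}"
    )


# Hypothetical example values; substitute your own.
print(dynamic_group_rule("ocid1.compartment.oc1..exampleuniqueID"))
print(secret_policy("functions-dg", "logs-compartment"))
```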

Create an OCI Function in Python or any supported language to send OCI logs to Amazon S3

- In the Oracle Cloud Console menu, navigate to Developer services and select Functions.

- Select an existing application or click Create Application. Create a function within your application.

- In this tutorial, we are using the OCI Cloud Shell to create the function.

  Note: Cloud Shell is a recommended option as it does not require any setup prerequisites. If you are new to OCI Functions, follow sections A, B, and C in the Functions Quick Start on Cloud Shell.

- We recommend creating a sample Python function first. The following command generates a folder pushlogs with three files: func.py, func.yaml, and requirements.txt.

      fn init --runtime python pushlogs

- Replace the contents of func.py with the following code. Replace secret_key_id and access_key_id in the code with the OCIDs of the respective secrets created previously in OCI Vault.

      import base64
      import io
      import json
      import logging
      import os

      import boto3
      import oci
      from fdk import response


      def read_secret_value(secret_client, secret_id):
          # Fetch the secret bundle and decode its base64-encoded content.
          bundle = secret_client.get_secret_bundle(secret_id)
          base64_secret_content = bundle.data.secret_bundle_content.content
          base64_secret_bytes = base64_secret_content.encode('ascii')
          return base64.b64decode(base64_secret_bytes).decode('ascii')


      # The handler receives the list of log entries from OCI as a byte stream.
      # It is the entry point named in func.yaml.
      def handler(ctx, data: io.BytesIO = None):
          funDataStr = data.read().decode('utf-8')

          # Convert the log data to JSON.
          funData = json.loads(funDataStr)

          # The Secret Retrieval API is used here to retrieve the AWS access key
          # and secret key from the vault. These keys are required to connect to Amazon S3.
          # Replace secret_key_id and access_key_id with the respective secret OCIDs.
          secret_key_id = "<vault_secret_ocid>"
          access_key_id = "<vault_secret_ocid>"

          signer = oci.auth.signers.get_resource_principals_signer()
          secret_client = oci.secrets.SecretsClient({}, signer=signer)

          awsaccesskey = read_secret_value(secret_client, access_key_id)
          awssecretkey = read_secret_value(secret_client, secret_key_id)

          # Create the Amazon S3 session once and reuse it for every log entry.
          session = boto3.Session(aws_access_key_id=awsaccesskey,
                                  aws_secret_access_key=awssecretkey)
          s3 = session.resource('s3')

          for entry in funData:
              filename = entry['time']
              logging.getLogger().info(filename)

              # Write the log entry to a temporary JSON file.
              # /tmp is the supported writable directory for OCI Functions.
              local_path = '/tmp/' + filename + '.json'
              with open(local_path, 'w', encoding='utf-8') as f:
                  json.dump(entry, f, ensure_ascii=False, indent=4)

              # Send the log file to the Amazon S3 target bucket.
              # 'lasya-bucket' is an example value; replace it with your bucket name.
              s3.meta.client.upload_file(Filename=local_path,
                                         Bucket='lasya-bucket',
                                         Key=filename + '.json')
              os.remove(local_path)

          # Return a short JSON summary to the caller.
          return response.Response(
              ctx,
              response_data=json.dumps({"uploaded": len(funData)}),
              headers={"Content-Type": "application/json"}
          )

- Update func.yaml with the following code.

      schema_version: 20180708
      name: pushlogs
      version: 0.0.1
      runtime: python
      build_image: fnproject/python:3.9-dev
      run_image: fnproject/python:3.9
      entrypoint: /python/bin/fdk /function/func.py handler
      memory: 256

- Replace the contents of requirements.txt with the following code.

      fdk>=0.1.56
      boto3
      oci

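- Deploy the function with the following command. In this example, ociToaws is the name of the application; replace it with the name of your application.

      fn -v deploy --app ociToaws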

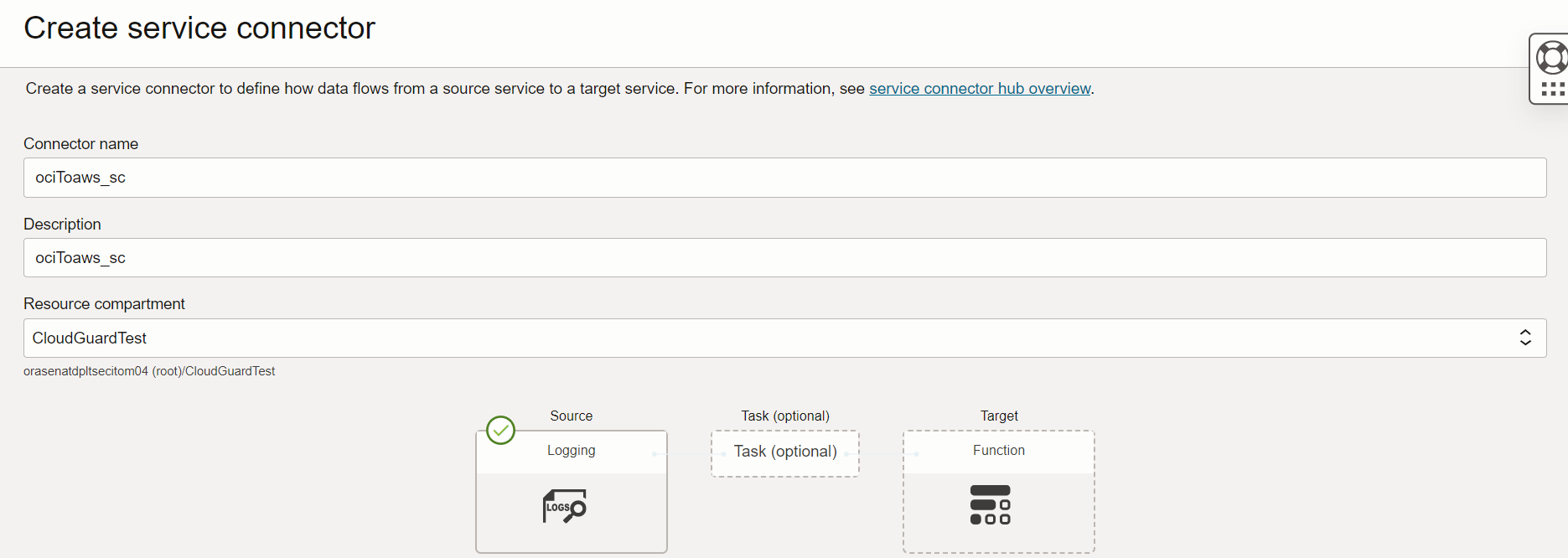
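Before wiring the function to a Service Connector, it can be useful to exercise the per-entry file logic locally without AWS or OCI credentials. The following sketch mirrors the loop in func.py under stated assumptions: the payload is a JSON array of log entries that each carry a `time` field, as the function code expects. The helper name `write_entries` and the sample entries are hypothetical. File names contain colons, so run this on Linux or macOS (Cloud Shell works).

```python
import json
import os
import tempfile


def write_entries(entries, directory):
    """Write each log entry to <directory>/<time>.json and return the paths."""
    paths = []
    for entry in entries:
        path = os.path.join(directory, entry["time"] + ".json")
        with open(path, "w", encoding="utf-8") as f:
            json.dump(entry, f, ensure_ascii=False, indent=4)
        paths.append(path)
    return paths


# Hypothetical payload: a byte stream holding a JSON array of log entries.
sample_payload = json.dumps([
    {"time": "2023-06-01T10:00:00.000Z", "data": {"message": "first"}},
    {"time": "2023-06-01T10:00:01.000Z", "data": {"message": "second"}},
]).encode("utf-8")

entries = json.loads(sample_payload.decode("utf-8"))

# A temporary directory stands in for /tmp inside the function container.
with tempfile.TemporaryDirectory() as tmp:
    written = write_entries(entries, tmp)
    names = [os.path.basename(p) for p in written]

print(names)
```

Because the object key is derived only from the `time` field, two entries with the same timestamp would map to the same key and the later upload would replace the earlier one. If that matters for your logs, consider adding another distinguishing value to the key.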

Create a Service Connector to send OCI logs from Logging to Functions

- In the Console menu, select Analytics & AI, Messaging, and then Service Connector Hub.

- Select the compartment where you want to create the service connector.

- Click Create Service Connector and provide the respective values. Under Configure Service Connector, select Logging as the source and Functions as the target.

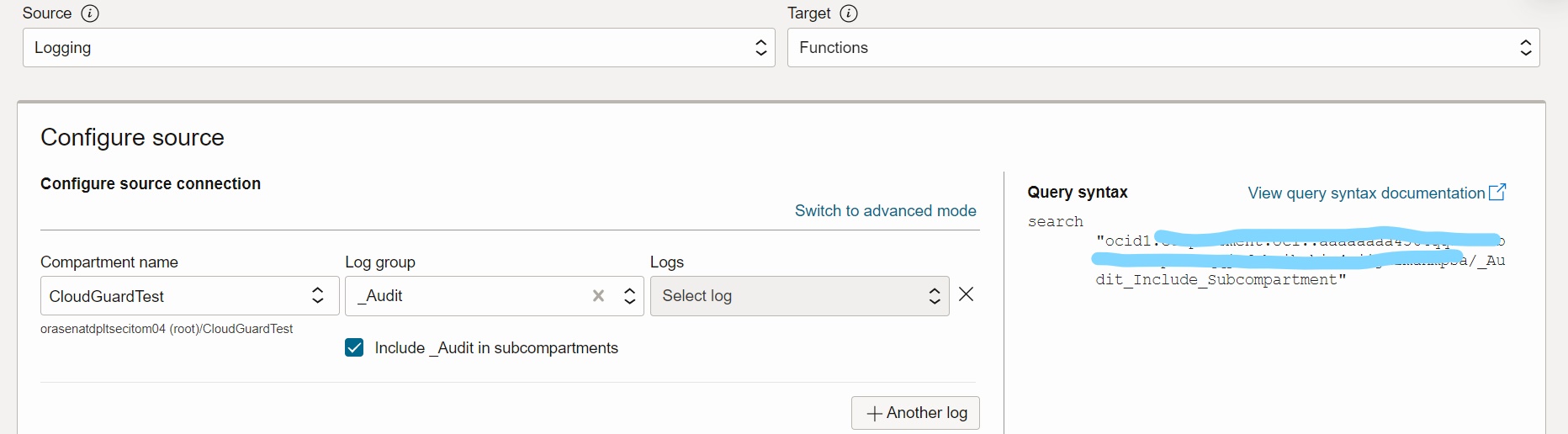

- Under Configure Source, select the logs to be pushed to Functions.

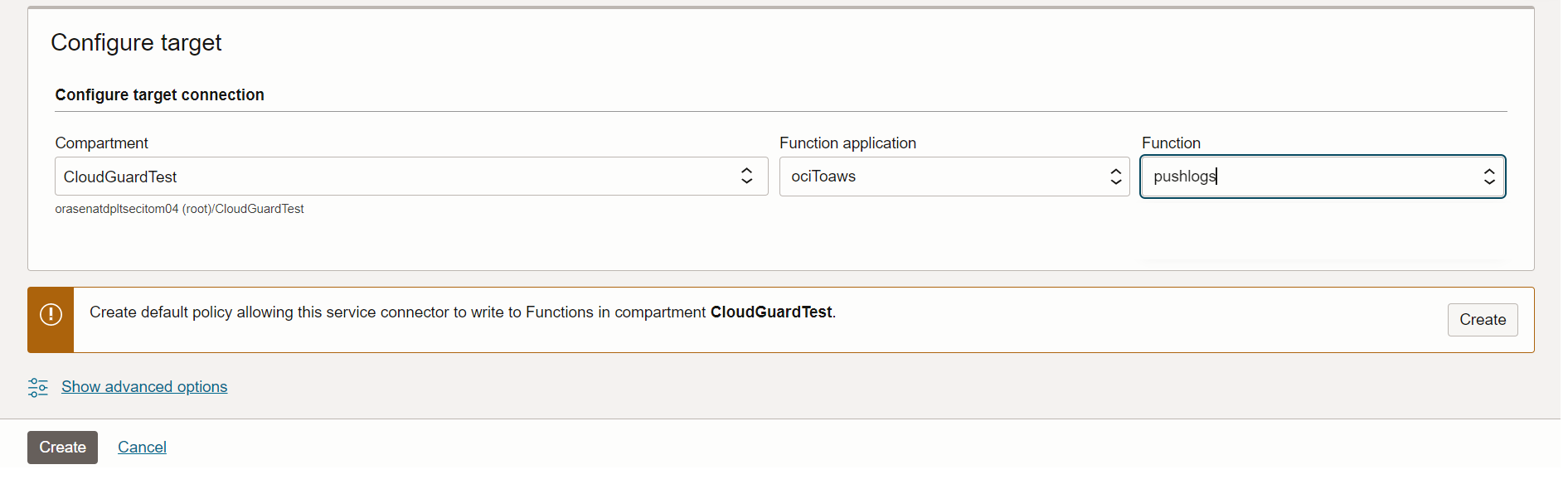

- Under Configure Destination, select the function application and the function created earlier in Task 2.

- Create the default policies that appear on the screen and click Create.

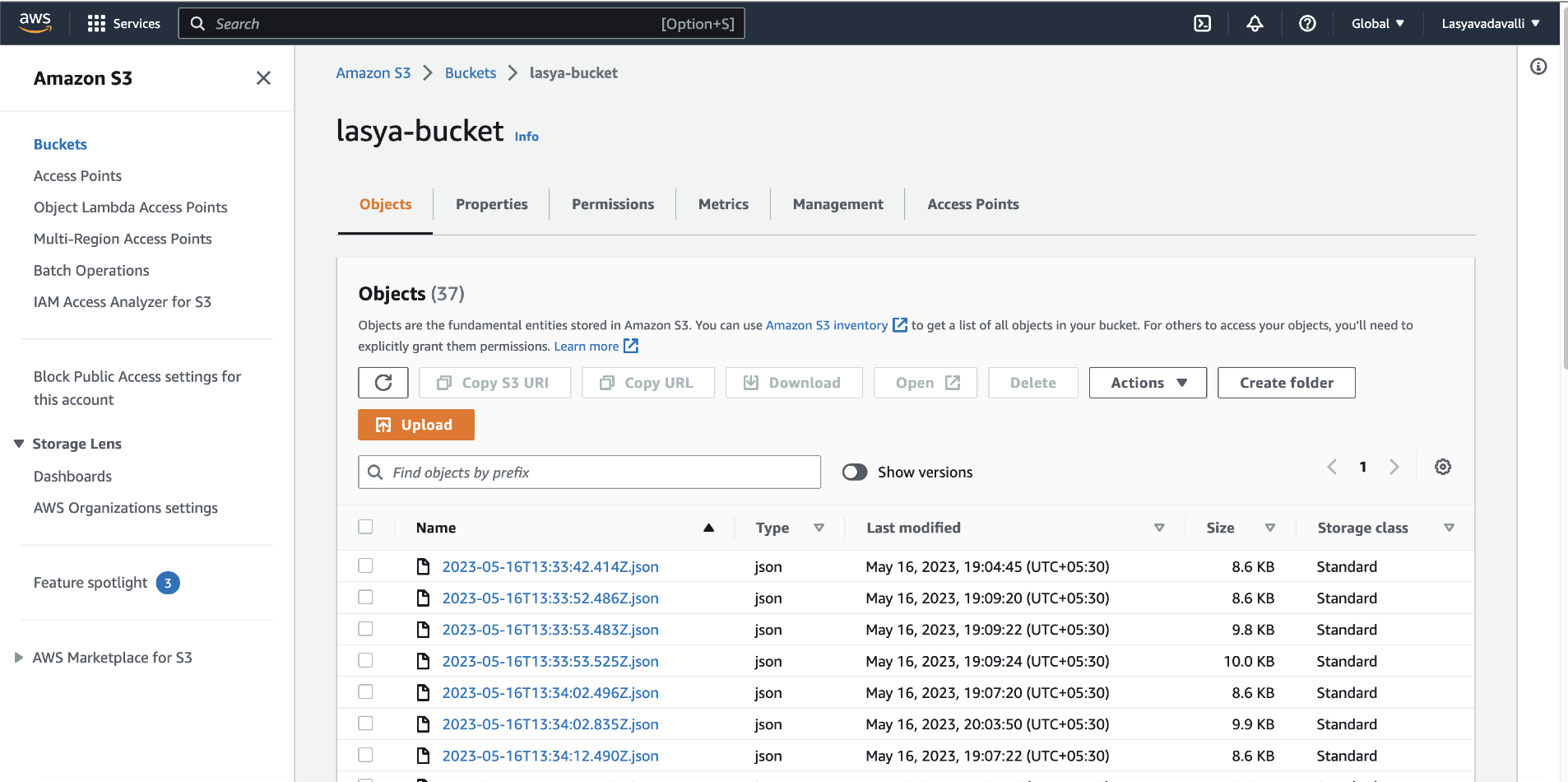

Task 3: Verify if the logs are pushed to Amazon S3

- Navigate to the Amazon S3 bucket that you created previously and verify that the OCI logs are available.
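As an alternative to checking in the AWS console, the uploaded objects can be listed from a script. The following is a minimal sketch, assuming a boto3 S3 client is passed in; it relies on the client's `list_objects_v2` paginator. The function name `list_log_keys` is hypothetical, and the bucket name in the usage comment is a placeholder.

```python
def list_log_keys(s3_client, bucket, suffix=".json"):
    """Return the keys in `bucket` that end with `suffix`."""
    keys = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        # "Contents" is absent from a page when there are no objects.
        for obj in page.get("Contents", []):
            if obj["Key"].endswith(suffix):
                keys.append(obj["Key"])
    return keys


# Usage against a real bucket (requires boto3 and AWS credentials):
#   import boto3
#   print(list_log_keys(boto3.client("s3"), "<your_bucket_name>"))
```

Passing the client in as a parameter keeps the helper easy to test with a stand-in object and avoids hardcoding credentials in the script.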

Next Steps

This tutorial showed how Oracle Cloud Infrastructure logs can be pushed to Amazon S3 using Service Connector Hub and Functions. The exported log data can support multiple storage strategies for redundancy and compliance, as well as detailed analysis with your existing connectors within AWS.

Acknowledgments

Authors - Vishak Chittuvalapil (Senior Cloud Engineer), Lasya Vadavalli (Cloud Engineer-IaaS)

More Learning Resources

Explore other labs on docs.oracle.com/learn or access more free learning content on the Oracle Learning YouTube channel. Additionally, visit education.oracle.com/learning-explorer to become an Oracle Learning Explorer.

For product documentation, visit Oracle Help Center.

F82371-01

June 2023

Copyright © 2023, Oracle and/or its affiliates.