Note:

- This tutorial requires access to Oracle Cloud. To sign up for a free account, see Get started with Oracle Cloud Infrastructure Free Tier.

- It uses example values for Oracle Cloud Infrastructure credentials, tenancy, and compartments. When completing your lab, substitute these values with ones specific to your cloud environment.

Monitor GPU Superclusters on Oracle Cloud Infrastructure with NVIDIA Data Center GPU Manager, Grafana and Prometheus

Introduction

Artificial Intelligence (AI) and Machine Learning (ML) on Oracle Cloud Infrastructure (OCI) NVIDIA GPU Superclusters were announced earlier this year. For many customers running these types of GPU-based workloads at scale, monitoring can be a challenge. Out of the box, OCI has excellent monitoring solutions, including some GPU instance metrics, but deeper integration into GPU metrics for OCI Superclusters is something our customers are interested in.

Setting up GPU monitoring is a straightforward process. If you are already using the HPC Marketplace stack, most of the tooling is included in that deployment image.

Objectives

- Monitor GPU OCI Superclusters with NVIDIA Data Center GPU Manager, Grafana and Prometheus.

Prerequisites

Ensure you have these prerequisites installed on each GPU instance. The monitoring solution then requires installing and running the NVIDIA Data Center GPU Manager (DCGM) exporter Docker container, capturing its output metrics with Prometheus, and finally displaying the data in Grafana.

Task 1: Install and Execute the NVIDIA DCGM exporter Docker container

The NVIDIA DCGM exporter container is installed and started with a single docker command.

- Run the following command for a non-persistent Docker container. This execution method will not restart the Docker container in the event of the Docker service being stopped, or the GPU server being rebooted.

    docker run -d --gpus all --rm -p 9400:9400 nvcr.io/nvidia/k8s/dcgm-exporter:3.2.3-3.1.6-ubuntu20.04

- If persistence is desired, then this execution method should be used instead.

    docker run --restart unless-stopped -d --gpus all -p 9400:9400 nvcr.io/nvidia/k8s/dcgm-exporter:3.2.3-3.1.6-ubuntu20.04

Note: Ensure that the Docker service is set to load on boot for this example to persist through reboots.

Both of these methods require the GPU nodes to have internet access (a NAT Gateway is most common) in order to download and run the DCGM exporter container. This container needs to be running on each GPU node being monitored. Once it is running, the next step is to set up Prometheus on a separate compute node, ideally one that is in an edge network and can be accessed directly over the internet.
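Before moving on to Prometheus, it can help to confirm that each exporter is actually serving metrics on port 9400. The following is a minimal sketch rather than part of the original procedure. It assumes `curl` and `awk` are available on the node, that the exporter serves its metrics at the `/metrics` path, and that `DCGM_FI_DEV_GPU_TEMP` is among the exported metrics. The helper name `dcgm_metric` is hypothetical.

```shell
# Hypothetical helper: print one line per GPU for a single DCGM metric.
# Reads Prometheus text exposition format on stdin.
dcgm_metric() {
  awk -v m="$1" '
    # Keep only sample lines for the requested metric; "# HELP" and
    # "# TYPE" comment lines never start with the metric name plus "{".
    index($0, m "{") == 1 {
      gpu = "?"
      # Pull the value of the gpu="..." label out of the label set.
      if (match($0, /gpu="[^"]*"/)) {
        gpu = substr($0, RSTART + 5, RLENGTH - 6)
      }
      # The sample value is the last whitespace-separated field.
      print "gpu=" gpu " value=" $NF
    }
  '
}

# Example against a running exporter (assumed endpoint):
#   curl -s http://localhost:9400/metrics | dcgm_metric DCGM_FI_DEV_GPU_TEMP
```

If the function prints nothing, the exporter is either not reachable on that port or is not exporting the requested metric.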

Task 2: Install Prometheus

Prometheus is installed by downloading and unpacking a release archive. When newer releases are available, update the release numbers in these commands accordingly.

- Fetch the package.

    wget https://github.com/prometheus/prometheus/releases/download/v2.37.9/prometheus-2.37.9.linux-amd64.tar.gz -O /opt/prometheus-2.37.9.linux-amd64.tar.gz

- Change directory.

    cd /opt

- Extract the package.

    tar -zxvf prometheus-2.37.9.linux-amd64.tar.gz

- Once unpacked, a Prometheus config YAML needs to be generated in order to scrape the DCGM data from GPU nodes. Start with the standard headers. In this case we are deploying to /etc/prometheus/prometheus.yml, which matches the --config.file path used by the Prometheus service later in this task.

    sudo mkdir -p /etc/prometheus

- Edit the YAML file with your editor of choice. The header section includes standard global config.

    # my global config
    global:
      scrape_interval: 5s # Set the scrape interval to every 5 seconds. Default is every 1 minute.
      evaluation_interval: 30s # Evaluate rules every 30 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).

    # Alertmanager configuration
    alerting:
      alertmanagers:
        - static_configs:
            - targets:
              # - alertmanager:9093

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      # - "first_rules.yml"
      # - "second_rules.yml"

    # A scrape configuration containing exactly one endpoint to scrape:

- Now for the targets to scrape, an entry for each GPU host needs to be inserted. This can be generated programmatically and must use either host IPs or resolvable DNS hostnames.

    scrape_configs:
      - job_name: 'gpu'
        scrape_interval: 5s
        static_configs:
          - targets: ['host1_ip:9400','host2_ip:9400', … ,'host64_ip:9400']

Note: The targets section is abbreviated for this article, and the “host1_ip” used here should be a reachable IP address or resolvable hostname for each GPU host.

- Once this file is in place, a systemd unit file needs to be created so that the Prometheus service can be started, managed, and persisted. Edit /lib/systemd/system/prometheus.service with your editor of choice (as root or sudo).

    [Unit]
    Description=Prometheus daemon
    After=network.target

    [Service]
    User=root
    Group=root
    Type=simple
    ExecStart=/opt/prometheus-2.37.9.linux-amd64/prometheus --config.file=/etc/prometheus/prometheus.yml \
        --storage.tsdb.path=/data \
        --storage.tsdb.max-block-duration=2h \
        --storage.tsdb.min-block-duration=2h \
        --web.enable-lifec
    PrivateTmp=true

    [Install]
    WantedBy=multi-user.target

- Finally, a data directory needs to be created. Ideally a block storage volume should be used here to provide dedicated, resilient storage for the Prometheus data. In the above example, the target is a block volume mounted to /data on the compute node, as seen in the storage.tsdb.path directive.

- All Prometheus pieces are now in place, and the service can be started.

    sudo systemctl start prometheus

- Check the status to ensure it’s running using the following command.

    systemctl status prometheus

- Verify data is present.

    ls /data/
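The targets line in the scrape configuration above does not have to be typed by hand for every GPU host. As a minimal sketch, assuming a plain text file with one GPU host (IP address or resolvable hostname) per line, the line could be generated as follows. The file name `gpu_hosts.txt` and the function name `make_targets` are hypothetical, and the six-space indent in the output is an assumption about where the line sits under `static_configs`.

```shell
# Hypothetical helper: build a Prometheus "targets" line from a host list.
# Reads one host per line on stdin; the optional first argument is the
# exporter port (defaults to 9400, the port published by the container).
make_targets() {
  port="${1:-9400}"
  awk -v port="$port" -v q="'" '
    # Skip blank lines and lines whose first field starts with "#".
    NF == 0 || $1 ~ /^#/ { next }
    # Append a quoted host:port entry, comma-separated after the first one.
    { list = list (list == "" ? "" : ",") q $1 ":" port q }
    END { print "      - targets: [" list "]" }
  '
}

# Example usage (gpu_hosts.txt is a hypothetical file name):
#   make_targets < gpu_hosts.txt
```

The output is a single line that can be pasted under `static_configs` in the Prometheus configuration file. Prometheus needs to be restarted after the file changes for the new targets to be scraped.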

Task 3: Set up the Grafana Dashboard

The last piece of this setup is the Grafana Dashboard. Installing Grafana requires enabling the Grafana software repository. For more details, see Grafana docs.

- For Oracle Linux, install the GPG key, set up the software repository, and then run a yum install for Grafana server.

- Start Grafana server and ensure the VCN has an open port to access the GUI. Alternatively, an SSH tunnel can be used if edge access is prohibited. Either way, the server can be accessed on TCP port 3000.

    sudo systemctl start grafana-server

Log in to the Grafana GUI at which point the admin password will need to be changed as part of the first login step. Once that is done, navigate to the data sources in order to setup Prometheus as a data source.

-

The Prometheus source entry uses localhost:9090 in this case by selecting “+ Add new data source”, select Prometheus, and then fill in the HTTP URL section.

-

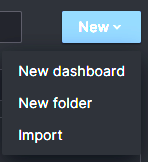

Once that is complete, scroll down and click Save & test and validate the connection. Finally, the DCGM dashboard from NVIDIA can be imported. Navigate to the Dashboards menu.

- Choose New and Import.

- Enter the NVIDIA DCGM exporter dashboard ID “12239”, click Load, select Prometheus as the data source from the drop-down list, and click Import.

Once imported, the NVIDIA DCGM dashboard displays GPU information for the cluster hosts targeted by Prometheus. Out of the box, the dashboard includes valuable information for a given date/time range.

Examples:

- Temperature
- Power
- SM Clock
- Utilization
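If a GPU host is missing from the dashboard, one place to look is the scrape target health that Prometheus reports through its HTTP API. The sketch below assumes `curl` and `awk` are installed, that Prometheus listens on localhost:9090 as in the data source step, and that the `/api/v1/targets` endpoint returns compact JSON with a `"health"` field per active target. The function name `target_health` is hypothetical.

```shell
# Hypothetical helper: summarise scrape target health.
# Reads the JSON body of Prometheus' /api/v1/targets on stdin and prints
# the number of targets reported as up and as down.
target_health() {
  awk '
    {
      # gsub returns the number of matches; "&" puts each match back
      # unchanged, so this only counts occurrences on the line.
      up   += gsub(/"health":"up"/, "&")
      down += gsub(/"health":"down"/, "&")
    }
    END { printf "up=%d down=%d\n", up, down }
  '
}

# Example against a running Prometheus (assumed address):
#   curl -s http://localhost:9090/api/v1/targets | target_health
```

A non-zero `down` count usually means that the exporter container is not running on that host or that port 9400 is not reachable from the Prometheus node.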

This monitoring data can be invaluable for customers who want deeper insight into their infrastructure while running AI/ML workloads on OCI GPU Superclusters. If you have GPU-based AI/ML workloads that require ultrafast cluster networking, consider OCI for its industry-leading scalability at a much better price than other cloud providers.

Acknowledgments

Author - Zachary Smith (Principal Member of Technical Staff, OCI IaaS - Product & Customer engagement)

More Learning Resources

Explore other labs on docs.oracle.com/learn or access more free learning content on the Oracle Learning YouTube channel. Additionally, visit education.oracle.com/learning-explorer to become an Oracle Learning Explorer.

For product documentation, visit Oracle Help Center.

F87825-01

October 2023

Copyright © 2023, Oracle and/or its affiliates.