2 Setting Up a Disaster Recovery Deployment

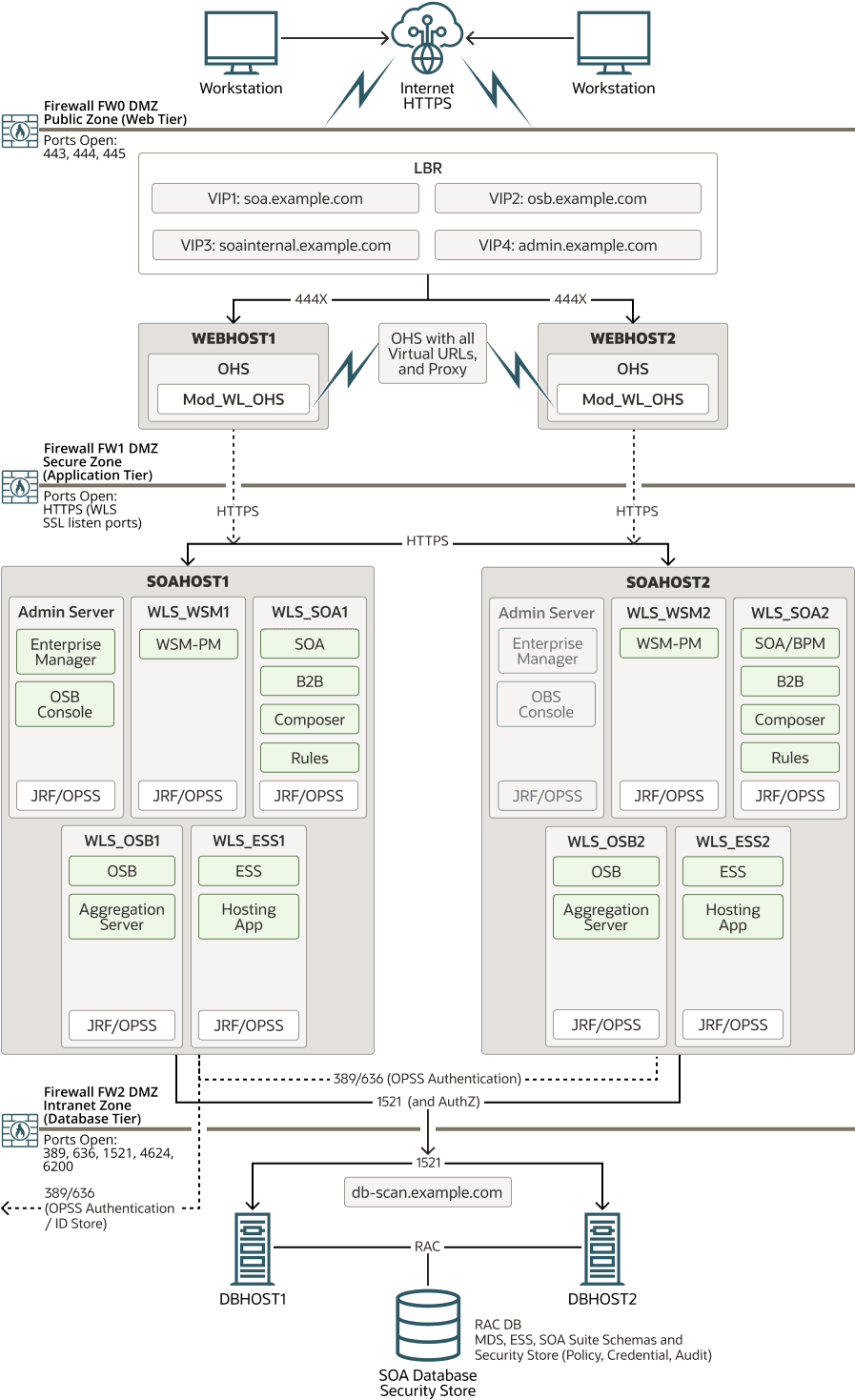

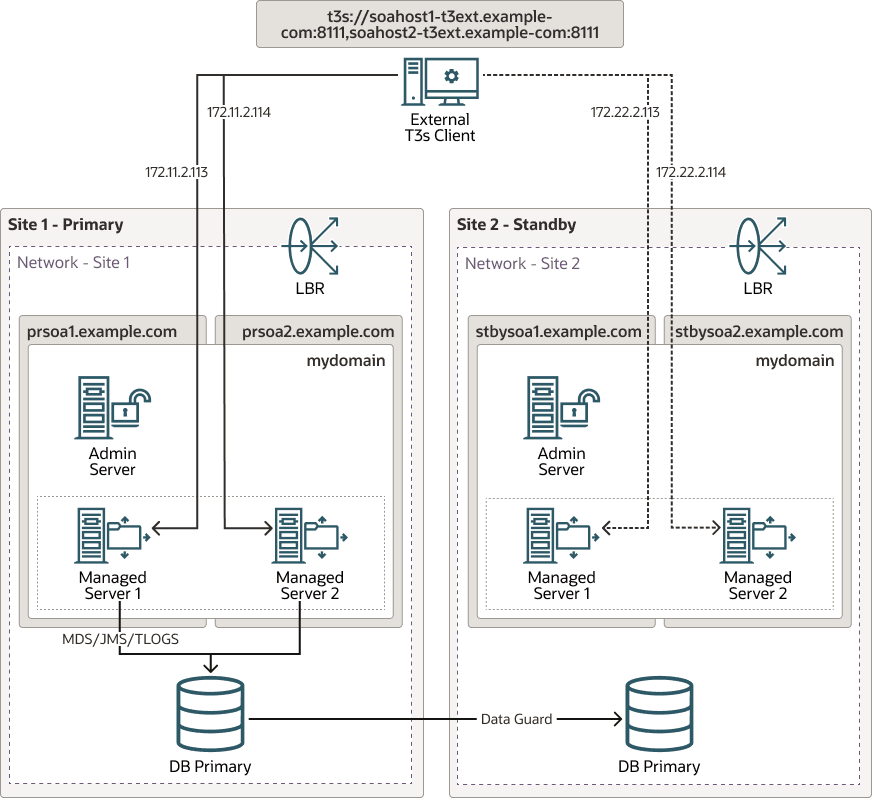

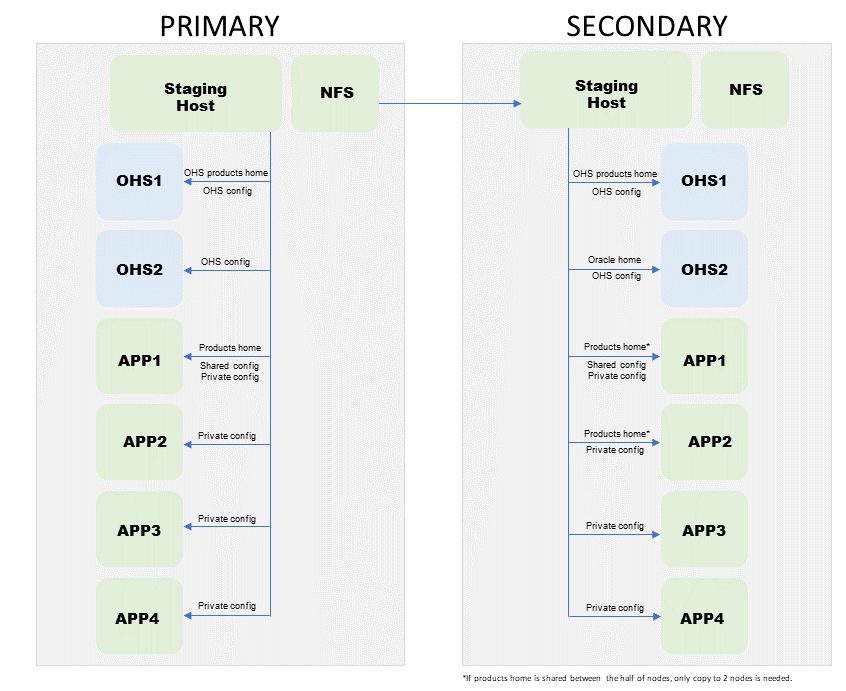

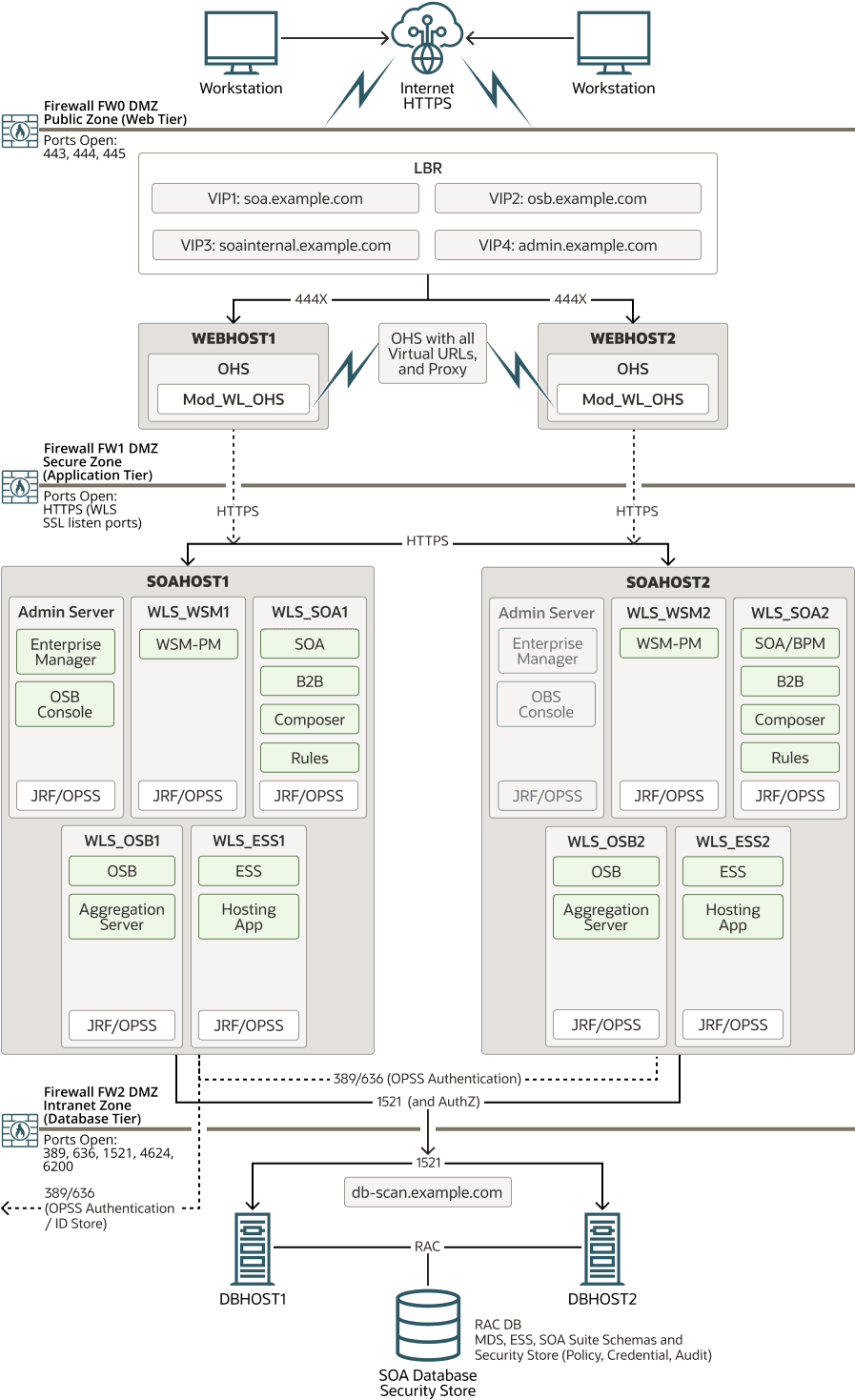

This chapter provides instructions about how to set up an Oracle Fusion Middleware Disaster Recovery topology for the Linux and UNIX operating systems. The procedures use the Oracle SOA Suite Enterprise Deployment (see Figure 2-1) in the examples to illustrate how to set up the Oracle Fusion Middleware Disaster Recovery solution for a specific case. After you understand how to set up the disaster recovery for the Oracle SOA Suite enterprise topology, use the same information to set up a disaster recovery system for the other enterprise deployments.

Figure 2-1 Deployment Used at Production and Secondary Sites for Oracle Fusion Middleware Disaster Recovery

Disaster Recovery Deployment Process Overview

Setting up a disaster protection system for Oracle Fusion Middleware involves the following procedures.

- Planning Host Names

  Determines how the listen addresses used by the different components are going to be configured and virtualized so that no changes are required on the secondary when the configuration is propagated from the primary. Deciding how these addresses will be resolved may impact your system’s manageability and the RTO of your disaster protection solutions.

- Planning a File System Replication Strategy

  Determines what replication technology and approach is going to be used to meet the RTO and RPO requirements for the different artifacts that need to be copied from the primary to the secondary.

- Preparing the Primary System for Disaster Protection

  For the optimum configuration, some changes may need to be applied in the primary in preparation for the disaster protection configuration. These changes primarily affect the storage configuration and database’s address configuration.

- Preparing the Secondary System for Disaster Protection

  The secondary system can also be set up based on the information provided in the Enterprise Deployment Guides. The infrastructure used by the Fusion Middleware system needs a specific configuration that will optimize the behavior of a disaster protection solution.

- Setting Up the Secondary System

  Setting up the secondary system involves the following three tasks:

  - Replicating the database by setting up the Data Guard for the database used by Fusion Middleware.

  - Replicating the primary Fusion Middleware file systems to the secondary storage.

  - Updating the TNS alias for the secondary’s database address.

The following sections describe the procedures listed above in detail.

Planning Host Names

In a disaster recovery topology, the host name addresses used by the Fusion Middleware components must be resolvable to valid IP addresses in each site.

There are different types of host names that are used in this document:

-

Physical Host Names

These are the host names used to uniquely identify a node in a network. This is the address used to establish an SSH connection to that node. A physical host name is mapped to a fixed IP that is attached to the main Network Interface Controller (NIC) that the node uses. Physical host names are attached to a specific host and cannot be moved to a different one because they provide the main access point to the machine they belong to.

-

Host Name Aliases or Virtual Hostnames

These are host names that can float from node to node. They can be assigned to a node and then disabled in that node and assigned to a different one. Virtual hostnames are used as the listen address for processes and components that can move across different nodes. They let clients remain independent of the physical host name where the process or component runs at a given point in time.

As mentioned in the Enterprise Deployment Guides, Oracle recommends creating host name aliases along with the existing physical host names to decouple the system’s configuration from those physical host names (which are different in each site and attached to specific nodes). Physical host names will typically differ between the primary and the secondary but the Fusion Middleware configuration uses virtual hostnames that are valid in both locations.

This section describes how to plan alias host names for the middle-tier hosts that use the Oracle Fusion Middleware instances at the production and secondary sites. It uses the Oracle SOA Suite Enterprise Deployment shown in Figure 2-1 for the host name examples. Each host at the production site has a peer host in the secondary site. The Fusion Middleware components of the peer host in the secondary site are configured with the same listen addresses and ports as their counterparts in the primary.

When you configure each component, use host-name-based configuration instead of IP-based configuration. For example, if you want an Oracle WebLogic Managed Server to listen on a specific IP address (for example, 172.11.2.113), then use the host name SOAHOST1.EXAMPLE.COM in its configuration and then use your OS to resolve this host name to IP 172.11.2.113. You must not include IPs directly in the configuration or in your WebLogic domain and applications or in the configuration of Fusion Middleware system components such as the Oracle HTTP Server.
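The rule above can be checked mechanically. The following is a minimal Python sketch, assuming you have already extracted each component's listen address into a dictionary. The server names `WLS_SOA1` and `WLS_SOA2` and the dictionary layout are hypothetical and are not part of any Oracle tool.

```python
import ipaddress


def is_ip_literal(listen_address: str) -> bool:
    """Return True if the listen address is an IP literal rather than a host name."""
    try:
        ipaddress.ip_address(listen_address.strip())
        return True
    except ValueError:
        return False


def find_ip_based_entries(listen_addresses: dict) -> list:
    """Return the names of components whose listen address is an IP literal."""
    return sorted(
        name for name, address in listen_addresses.items() if is_ip_literal(address)
    )


if __name__ == "__main__":
    # Hypothetical inventory: server name -> configured listen address.
    sample = {
        "WLS_SOA1": "SOAHOST1.EXAMPLE.COM",  # host-name-based, as recommended
        "WLS_SOA2": "172.11.2.114",          # IP-based, should be flagged
    }
    print(find_ip_based_entries(sample))
```

Any component that the sketch reports would need its listen address replaced with a host name alias before the configuration is replicated to the standby site.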

The following section shows how to set up host names at the disaster recovery production and standby sites.

Note:

In the examples listed, IP addresses for hosts at the initial production site have the format 172.11.x.x and IP addresses for hosts at the initial standby site have the format 172.22.x.x.

Sample Host Names for the Oracle SOA Suite Production and Standby Site Hosts

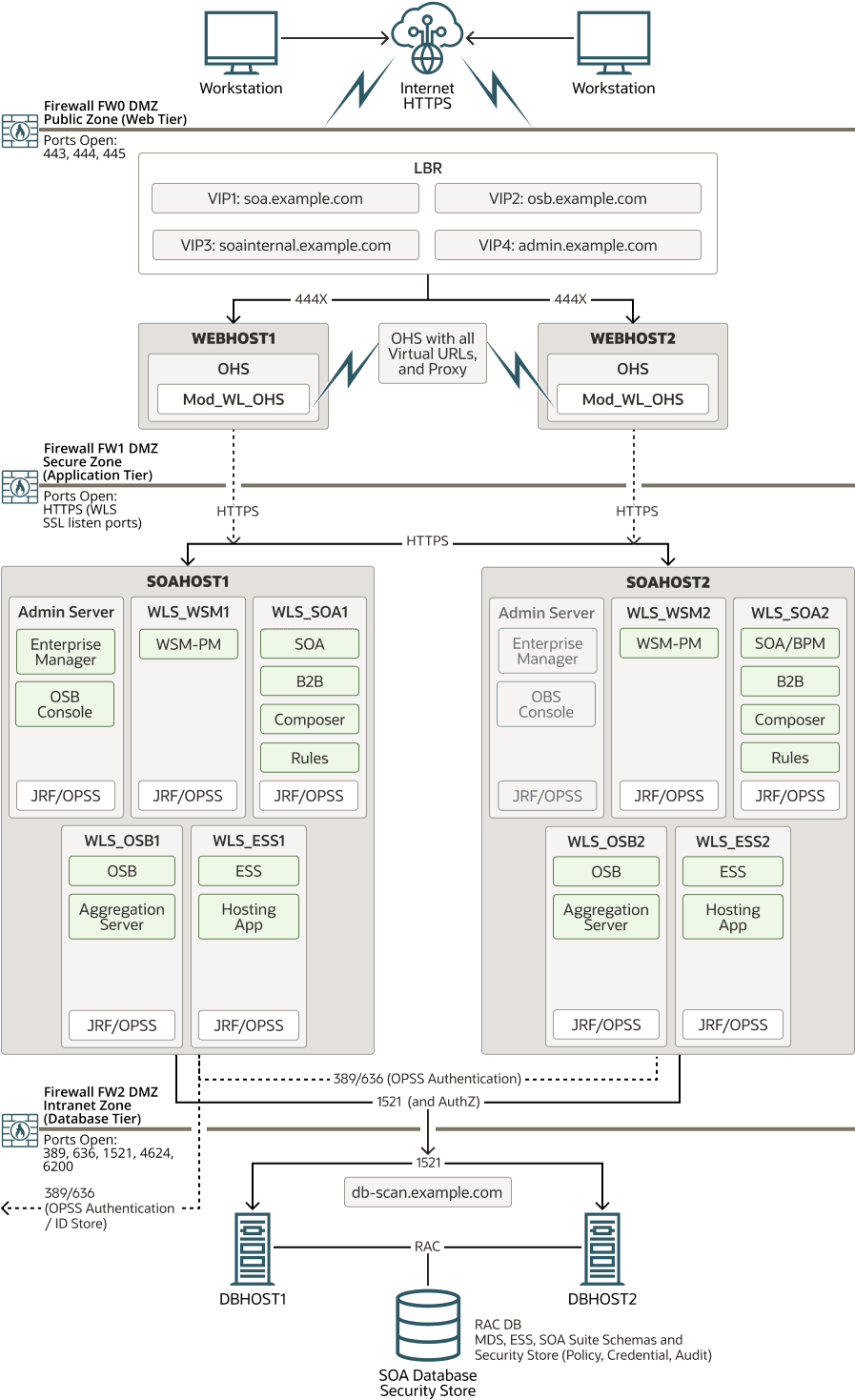

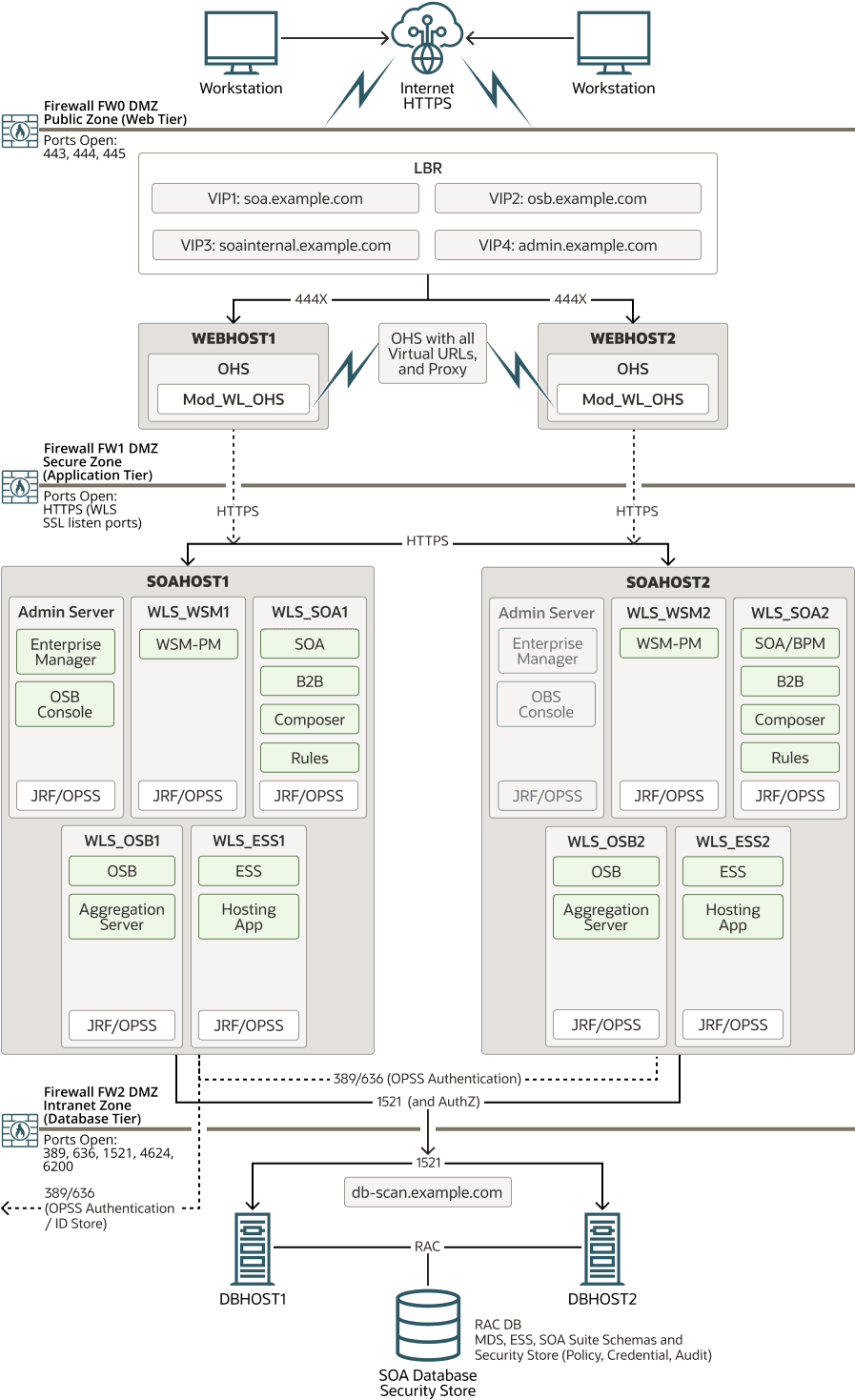

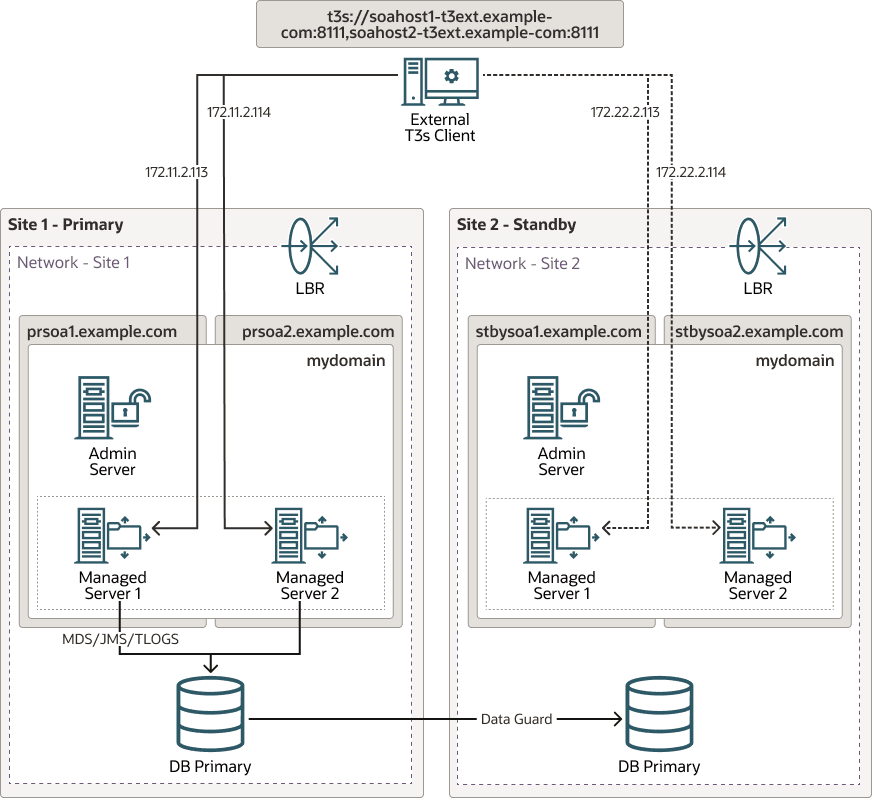

Figure 2-2 shows the Oracle Fusion Middleware Disaster Recovery topology that uses the Oracle SOA Suite enterprise deployment in the primary site.

Figure 2-2 Deployment Used at Production and Secondary Sites for Oracle Fusion Middleware Disaster Recovery

Table 2-1 lists the IP addresses, physical host names, and aliases that are used for the Oracle SOA Suite Enterprise Deployment Guide (EDG) primary site hosts. Figure 2-2 shows the configuration of the Oracle SOA Suite EDG deployment at the production site.

Table 2-1 IP Addresses and Physical Host Names for SOA Suite Production Site Hosts

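| IP Address | Physical Host Name | Host Name Alias |
|---|---|---|
| 172.11.2.111 | prweb1.example.com | WEBHOST1 |
| 172.11.2.112 | prweb2.example.com | WEBHOST2 |
| 172.11.2.113 | prsoa1.example.com | SOAHOST1 |
| 172.11.2.114 | prsoa2.example.com | SOAHOST2 |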

Note:

This document uses WEBHOST1, WEBHOST2, SOAHOST1, SOAHOST2, and ADMINVHN as abstract placeholders for the actual alias host names. For example, in your system, SOAHOST1 may have a value of soahost1.example.com.

Figure 2-3 shows the physical host names that are used for the Oracle SOA Suite EDG deployment at the standby site.

Figure 2-3 Physical Host Names Used at Oracle SOA Suite Deployment Standby Site

Table 2-2 lists the IP addresses, physical host names, and aliases that are used for the Oracle SOA Suite Enterprise Deployment Guide (EDG) deployment standby site hosts.

Table 2-2 IP Addresses and Physical Host Names for SOA Suite Standby Site Hosts

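| IP Address | Physical Host Name | Host Name Alias |
|---|---|---|
| 172.22.2.111 | stbyweb1.example.com | WEBHOST1 |
| 172.22.2.112 | stbyweb2.example.com | WEBHOST2 |
| 172.22.2.113 | stbysoa1.example.com | SOAHOST1 |
| 172.22.2.114 | stbysoa2.example.com | SOAHOST2 |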

Note:

If you use separate DNS servers to resolve host names, then you can use the same physical host names for the production site hosts and standby site hosts and you do not need to define the alias host names on the standby site hosts. For more information about using separate DNS servers to resolve host names, see Resolving Host Names Using Separate DNS Servers.

Virtual IP Considerations

Besides using virtual hostnames to facilitate the disaster protection configuration, some Oracle WebLogic servers may, in the context of local failures, use a floating hostname as the listen address. This hostname is mapped to an IP that can be enabled in different nodes. Such IPs are called floating IPs (or virtual IPs, VIPs) and can be moved across nodes inside the same region by disabling them in a network or virtual network interface in one node and enabling them in a different one. This allows the WebLogic servers and system components to fail over without reconfiguring their listen addresses, as is the case for the Oracle WebLogic Administration Server. In an Oracle WebLogic cluster, VIPs and servers can also be moved across nodes by the WebLogic infrastructure; this mechanism is called WebLogic Server Migration.

Note:

The WebLogic Administration Server cannot be clustered and therefore cannot use server migration.

In Oracle Fusion Middleware 14c, most applications support Oracle WebLogic Automatic Service Migration (ASM), where only the services running inside WebLogic (JMS, JTA) are migrated to other running WebLogic Servers. This improves recovery time because, instead of entire servers being restarted, just a few WebLogic subsystems are failed over. As a result, it is no longer necessary to reserve virtual IPs for the Managed Servers in the domain (they are required only for server migration). Products or applications that do not support ASM may require a VIP to configure server migration for the WebLogic Server where they run. Refer to your specific Fusion Middleware component’s documentation to determine if it supports ASM. As indicated, a virtual hostname and its corresponding VIP are needed for the administration server (an Enterprise Deployment Guide recommendation). The WebLogic administration server cannot be clustered, so it needs a mobile hostname that can be failed over to other nodes in a loss-of-host scenario.

Ensure that you provide the floating IP addresses for the administration server with the same virtual hostnames on the production site and the standby site.

Table 2-3 Floating IP Addresses

| Virtual IP Address | Virtual Name | Alias Host Name |
|---|---|---|
| 172.11.2.134 | prsoa-vip.example.com (in production site) | ADMINVHN |
| 172.22.2.134 | stbysoa-vip.example.com (in standby site) | ADMINVHN |

Host Name Resolution

Host name resolution means mapping a host name to the proper IP address required for accessing a node, a service, or an endpoint in a system. Host name resolution is a key aspect in all disaster protection systems because it allows virtualizing addresses and using the primary site's configuration in the secondary site without modification. A primary system may have its own host name resolution mechanics (Enterprise Deployment Guides do not prescribe a specific methodology in this domain). However, depending on the number of nodes, the number of virtual hostnames, and the components used in the Fusion Middleware topology, a different host name resolution mechanism may be required to meet the system’s needs.

In a disaster protection system, hostname aliases or virtual hostnames are used in the configuration of Fusion Middleware so that the configuration can be reused without any modifications in a different site by simply remapping the virtual hostnames to IPs that are valid in the secondary site. Host name resolution enables this dynamic mapping of hostnames to IPs and can be configured using any of the following procedures:

- Resolving Host Names Locally

  Local host name resolution uses the host name to IP address mapping that is specified by the /etc/hosts file on each host.

  For more information about using the /etc/hosts file to implement local host name file resolution, see Resolving Host Names Locally.

- Resolving Host Names Using DNS

  A DNS server is a dedicated server or a service that provides DNS name resolution in an IP network. You can use global DNS services (common to primary and secondary) or use separate DNS services in each location.

  For more information about the two methods used for implementing DNS server host name resolution, see Resolving Host Names Using Separate DNS Servers and Resolving Host Names Using a Global DNS Server.

You must determine the method of host name resolution that you will use for your Oracle Fusion Middleware Disaster Recovery topology when you plan the deployment of the topology. Most site administrators use a combination of these resolution methods in a precedence order to manage host names. The Oracle Fusion Middleware hosts and the shared storage system for each site must be able to communicate with each other.

Host Name Resolution Precedence

To determine the host name resolution method used by a particular host, search for the value of the hosts parameter in the /etc/nsswitch.conf file on the host.

If you want to resolve host names locally on the host, make the files entry the first entry for the hosts parameter, as shown in Example 2-1. When files is the first entry for the hosts parameter, entries in the host /etc/hosts file are first used to resolve host names.

If you want to resolve host names by using DNS on the host, make the dns entry the first entry for the hosts parameter, as shown in Example 2-2. When dns is the first entry for the hosts parameter, DNS server entries are first used to resolve host names.

For simplicity and consistency, Oracle recommends that all the hosts within a site (production or standby site) should use the same host name resolution method (resolving host names locally or resolving host names using separate DNS servers or a global DNS server).

The recommendations in the following sections are high-level guidelines that you can adapt to meet the host name resolution standards used by your enterprise.

Example 2-1 Specifying the Use of Local Host Name Resolution

hosts: files dns nis

Example 2-2 Specifying the Use of DNS Host Name Resolution

hosts: dns files nis
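To check which method a host currently prefers, the hosts line can be read programmatically. The sketch below is a simplified Python parser that takes the file content as a string. The function names are illustrative, and the parser does not interpret action specifications such as `[NOTFOUND=return]`, which it would return as ordinary tokens.

```python
def hosts_lookup_order(nsswitch_text: str) -> list:
    """Return the sources listed for the 'hosts' parameter, in precedence order."""
    for raw_line in nsswitch_text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if line.startswith("hosts:"):
            return line[len("hosts:"):].split()
    return []


def resolves_locally_first(nsswitch_text: str) -> bool:
    """Return True when 'files' (/etc/hosts) is consulted before any other source."""
    order = hosts_lookup_order(nsswitch_text)
    return bool(order) and order[0] == "files"


if __name__ == "__main__":
    with open("/etc/nsswitch.conf") as handle:
        print(hosts_lookup_order(handle.read()))
```

Running the same check on every host in a site is one way to confirm that all hosts use the same resolution method, as recommended above.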

Resolving Host Names Locally

Local host name resolution uses the host name to IP address mapping that is defined in the /etc/hosts file of a host.

When you resolve host names for your disaster recovery topology in this way, use the following procedure:

Example 2-3 Adding /etc/hosts File Entries for a Production Site Host

    127.0.0.1    localhost.localdomain localhost
    172.11.2.134 prsoa-vip.example.com prsoa-vip ADMINVHN.EXAMPLE.COM ADMINVHN
    172.11.2.111 prweb1.example.com prweb1 WEBHOST1.EXAMPLE.COM WEBHOST1
    172.11.2.112 prweb2.example.com prweb2 WEBHOST2.EXAMPLE.COM WEBHOST2
    172.11.2.113 prsoa1.example.com prsoa1 SOAHOST1.EXAMPLE.COM SOAHOST1
    172.11.2.114 prsoa2.example.com prsoa2 SOAHOST2.EXAMPLE.COM SOAHOST2

Example 2-4 Adding /etc/hosts File Entries for a Standby Site Host

    127.0.0.1    localhost.localdomain localhost
    172.22.2.134 stbysoa-vip.example.com stbysoa-vip ADMINVHN.EXAMPLE.COM ADMINVHN
    172.22.2.111 stbyweb1.example.com stbyweb1 WEBHOST1.EXAMPLE.COM WEBHOST1
    172.22.2.112 stbyweb2.example.com stbyweb2 WEBHOST2.EXAMPLE.COM WEBHOST2
    172.22.2.113 stbysoa1.example.com stbysoa1 SOAHOST1.EXAMPLE.COM SOAHOST1
    172.22.2.114 stbysoa2.example.com stbysoa2 SOAHOST2.EXAMPLE.COM SOAHOST2
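Because the production and standby files must define the same aliases with site-local addresses, a quick consistency check can catch omissions. The following Python sketch assumes, as in Examples 2-3 and 2-4, that each alias should exist in both files and map to a different IP in each site. The function names and the abbreviated sample data are illustrative only.

```python
def parse_hosts(text: str) -> dict:
    """Map each lower-cased host name in /etc/hosts-style text to its IP address."""
    mapping = {}
    for raw_line in text.splitlines():
        fields = raw_line.split("#", 1)[0].split()
        if len(fields) < 2:
            continue  # blank line, comment, or malformed entry
        ip, names = fields[0], fields[1:]
        for name in names:
            mapping[name.lower()] = ip
    return mapping


def check_aliases(primary_text: str, standby_text: str, aliases: list) -> list:
    """Return aliases missing from either site or mapped to the same IP in both."""
    primary = parse_hosts(primary_text)
    standby = parse_hosts(standby_text)
    problems = []
    for alias in aliases:
        key = alias.lower()
        if key not in primary or key not in standby or primary[key] == standby[key]:
            problems.append(alias)
    return problems


# Abbreviated copies of Examples 2-3 and 2-4, used only for illustration.
PRIMARY_HOSTS = """
127.0.0.1    localhost.localdomain localhost
172.11.2.134 prsoa-vip.example.com prsoa-vip ADMINVHN.EXAMPLE.COM ADMINVHN
172.11.2.113 prsoa1.example.com prsoa1 SOAHOST1.EXAMPLE.COM SOAHOST1
"""
STANDBY_HOSTS = """
127.0.0.1    localhost.localdomain localhost
172.22.2.134 stbysoa-vip.example.com stbysoa-vip ADMINVHN.EXAMPLE.COM ADMINVHN
172.22.2.113 stbysoa1.example.com stbysoa1 SOAHOST1.EXAMPLE.COM SOAHOST1
"""

if __name__ == "__main__":
    print(check_aliases(PRIMARY_HOSTS, STANDBY_HOSTS, ["ADMINVHN", "SOAHOST1", "SOAHOST2"]))
```

In the abbreviated sample, SOAHOST2 is reported because it is deliberately left out of both files.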

Note:

The subnets in the production site and standby site are different.

Resolving Host Names Using Separate DNS Servers

You can also use separate DNS servers to resolve host names for your disaster recovery topology (one in the primary and another in the secondary site).

When you use separate DNS servers to resolve host names for your disaster recovery topology, use the following procedure:

Example 2-5 DNS Entries for a Production Site Host in a Separate DNS Servers Configuration

    PRSOA-VIP.EXAMPLE.COM IN A 172.11.2.134
    PRWEB1.EXAMPLE.COM    IN A 172.11.2.111
    PRWEB2.EXAMPLE.COM    IN A 172.11.2.112
    PRSOA1.EXAMPLE.COM    IN A 172.11.2.113
    PRSOA2.EXAMPLE.COM    IN A 172.11.2.114
    ADMINVHN.EXAMPLE.COM  IN A 172.11.2.134
    WEBHOST1.EXAMPLE.COM  IN A 172.11.2.111
    WEBHOST2.EXAMPLE.COM  IN A 172.11.2.112
    SOAHOST1.EXAMPLE.COM  IN A 172.11.2.113
    SOAHOST2.EXAMPLE.COM  IN A 172.11.2.114

Example 2-6 DNS Entries for a Standby Site Host in a Separate DNS Servers Configuration

    STBYSOA-VIP.EXAMPLE.COM IN A 172.22.2.134
    STBYWEB1.EXAMPLE.COM    IN A 172.22.2.111
    STBYWEB2.EXAMPLE.COM    IN A 172.22.2.112
    STBYSOA1.EXAMPLE.COM    IN A 172.22.2.113
    STBYSOA2.EXAMPLE.COM    IN A 172.22.2.114
    ADMINVHN.EXAMPLE.COM    IN A 172.22.2.134
    WEBHOST1.EXAMPLE.COM    IN A 172.22.2.111
    WEBHOST2.EXAMPLE.COM    IN A 172.22.2.112
    SOAHOST1.EXAMPLE.COM    IN A 172.22.2.113
    SOAHOST2.EXAMPLE.COM    IN A 172.22.2.114

Note:

If you use separate DNS servers to resolve host names, then you can use the same host names for the production site hosts and standby site hosts. You do not need to define the alias host names.

Resolving Host Names Using a Global DNS Server

You can use a global DNS server to resolve host names for your disaster recovery topology as an alternative to the two previous options.

The term global DNS server refers to a disaster recovery topology in which a single DNS server is used for both the production and standby sites.

When you use a global DNS server to resolve host names for your disaster recovery topology, use the following procedure:

Example 2-7 DNS entries for production site and standby site hosts when using a global DNS server configuration during normal operations (when the workload is sustained by the original primary)

    PRSOA-VIP.EXAMPLE.COM   IN A 172.11.2.134
    PRWEB1.EXAMPLE.COM      IN A 172.11.2.111
    PRWEB2.EXAMPLE.COM      IN A 172.11.2.112
    PRSOA1.EXAMPLE.COM      IN A 172.11.2.113
    PRSOA2.EXAMPLE.COM      IN A 172.11.2.114
    STBYSOA-VIP.EXAMPLE.COM IN A 172.22.2.134
    STBYWEB1.EXAMPLE.COM    IN A 172.22.2.111
    STBYWEB2.EXAMPLE.COM    IN A 172.22.2.112
    STBYSOA1.EXAMPLE.COM    IN A 172.22.2.113
    STBYSOA2.EXAMPLE.COM    IN A 172.22.2.114
    ADMINVHN.EXAMPLE.COM    IN A 172.11.2.134
    WEBHOST1.EXAMPLE.COM    IN A 172.11.2.111
    WEBHOST2.EXAMPLE.COM    IN A 172.11.2.112
    SOAHOST1.EXAMPLE.COM    IN A 172.11.2.113
    SOAHOST2.EXAMPLE.COM    IN A 172.11.2.114

Example 2-8 DNS entries for production site and standby site hosts when using a global DNS server configuration, after a switchover to standby site

    PRSOA-VIP.EXAMPLE.COM   IN A 172.11.2.134
    PRWEB1.EXAMPLE.COM      IN A 172.11.2.111
    PRWEB2.EXAMPLE.COM      IN A 172.11.2.112
    PRSOA1.EXAMPLE.COM      IN A 172.11.2.113
    PRSOA2.EXAMPLE.COM      IN A 172.11.2.114
    STBYSOA-VIP.EXAMPLE.COM IN A 172.22.2.134
    STBYWEB1.EXAMPLE.COM    IN A 172.22.2.111
    STBYWEB2.EXAMPLE.COM    IN A 172.22.2.112
    STBYSOA1.EXAMPLE.COM    IN A 172.22.2.113
    STBYSOA2.EXAMPLE.COM    IN A 172.22.2.114
    ADMINVHN.EXAMPLE.COM    IN A 172.22.2.134
    WEBHOST1.EXAMPLE.COM    IN A 172.22.2.111
    WEBHOST2.EXAMPLE.COM    IN A 172.22.2.112
    SOAHOST1.EXAMPLE.COM    IN A 172.22.2.113
    SOAHOST2.EXAMPLE.COM    IN A 172.22.2.114
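In Examples 2-7 and 2-8, only the alias records change between normal operation and a switchover; the physical host records stay fixed. The Python sketch below illustrates that idea by generating the alias A records for whichever site is active. The `SITE_IPS` table and the `alias_records` function are hypothetical names used for illustration, and the output still has to be loaded into your DNS server using that server's own tooling.

```python
# Alias-to-IP mappings per site, copied from Examples 2-7 and 2-8.
SITE_IPS = {
    "production": {
        "ADMINVHN": "172.11.2.134",
        "WEBHOST1": "172.11.2.111",
        "WEBHOST2": "172.11.2.112",
        "SOAHOST1": "172.11.2.113",
        "SOAHOST2": "172.11.2.114",
    },
    "standby": {
        "ADMINVHN": "172.22.2.134",
        "WEBHOST1": "172.22.2.111",
        "WEBHOST2": "172.22.2.112",
        "SOAHOST1": "172.22.2.113",
        "SOAHOST2": "172.22.2.114",
    },
}


def alias_records(active_site: str, domain: str = "EXAMPLE.COM") -> list:
    """Return the alias A records that point at the currently active site."""
    if active_site not in SITE_IPS:
        raise ValueError(f"unknown site: {active_site}")
    return [
        f"{alias}.{domain} IN A {ip}"
        for alias, ip in SITE_IPS[active_site].items()
    ]


if __name__ == "__main__":
    # After a switchover, the aliases resolve to the standby site addresses.
    print("\n".join(alias_records("standby")))
```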

Example 2-9 Production site /etc/hosts file entries when using a global DNS server configuration

    127.0.0.1    localhost.localdomain localhost
    172.11.2.134 prsoa-vip.example.com prsoa-vip ADMINVHN.EXAMPLE.COM ADMINVHN
    172.11.2.111 prweb1.example.com prweb1 WEBHOST1.EXAMPLE.COM WEBHOST1
    172.11.2.112 prweb2.example.com prweb2 WEBHOST2.EXAMPLE.COM WEBHOST2
    172.11.2.113 prsoa1.example.com prsoa1 SOAHOST1.EXAMPLE.COM SOAHOST1
    172.11.2.114 prsoa2.example.com prsoa2 SOAHOST2.EXAMPLE.COM SOAHOST2

Example 2-10 Standby Site /etc/hosts File Entries when using a global DNS server configuration

    127.0.0.1    localhost.localdomain localhost
    172.22.2.134 stbysoa-vip.example.com stbysoa-vip ADMINVHN.EXAMPLE.COM ADMINVHN
    172.22.2.111 stbyweb1.example.com stbyweb1 WEBHOST1.EXAMPLE.COM WEBHOST1
    172.22.2.112 stbyweb2.example.com stbyweb2 WEBHOST2.EXAMPLE.COM WEBHOST2
    172.22.2.113 stbysoa1.example.com stbysoa1 SOAHOST1.EXAMPLE.COM SOAHOST1
    172.22.2.114 stbysoa2.example.com stbysoa2 SOAHOST2.EXAMPLE.COM SOAHOST2

External T3/T3s Clients Considerations

Systems directly accessing the WebLogic servers in a topology need to be aware of the listen address that is used by those WebLogic servers. Appropriate host name resolution needs to be provided to those clients so that the hostname alias used by the servers as listen address is correctly resolved. This is also applicable when performing deployments from Oracle JDeveloper or other development tools and scripts. Using the example addresses provided in the EDG, the client hosting Oracle JDeveloper needs to map the SOAHOSTx and ADMINVHN aliases (corresponding to the different WebLogic servers and the administration server) to the correct IP addresses for management and RMI operations to succeed. Depending on how many external clients access the system and how much control you have over them, a different host name resolution approach may be better for an optimum RTO and simplified management.

Note:

This section does not apply to T3/T3s clients that reside in the same WebLogic domain as the T3/T3s servers. When connecting to a cluster in the same domain, internal clients can utilize the T3/T3s cluster syntax such as cluster:t3://cluster_name. You can utilize this cluster syntax only when the clusters are in the same domain.

WebLogic uses the T3/T3s protocol for Remote Method Invocation (RMI). It is used by several WebLogic services such as JMS, EJB, JTA, JNDI, JMX and WLST. The external T3/T3s clients that do not run in the same domain as the T3/T3s servers, can use different ways to connect to a WebLogic cluster. For more information, see Using WebLogic RMI with T3 Protocol.

    t3://host1.example.com:9073,host2.example.com:9073

The external T3/T3s clients can also access the T3/T3s services through a TCP Load Balancer (LBR). In this scenario, the client's provider URL points to the load balancer service and port. The requests are then load balanced to the WebLogic Server's T3/T3s ports. In the initial contact for the JNDI context retrieval, the external clients connect to a WebLogic Server through the load balancer and download the T3 client stubs. These stubs contain the connect information that is used for the subsequent requests.

In general, the WebLogic T3/T3s is TCP/IP based so it can support the TCP load balancing when services are homogeneous such as JMS and EJB. For example, a JMS front-end can be configured in a WebLogic cluster in which remote JMS clients can connect to any cluster member. By contrast, a JTA subsystem cannot safely use TCP load balancing in transactions that span across multiple WebLogic domains. The JTA transaction coordinator must establish a direct RMI connection to the server instance that acts as a sub coordinator of the transaction when that transaction is either committed or rolled back. Due to this, the load balancer is normally used only for the JNDI initial context retrieval. The WebLogic Server load balancing system controls the future T3/T3s requests, which connect to the WebLogic managed servers' addresses and ports (default or custom channels) indicated in the stubs retrieved during this initial context retrieval.

This section explains how to manage T3/T3s clients and their impact on the disaster protection configuration. It also explains how to use the load balancer for all T3/T3s communications (both initial and subsequent requests) when JTA is not used.

Note:

External clients can access the T3 services using the T3 tunneling over HTTP. This approach creates a HTTP session for each RMI session and uses standard HTTP protocols to transfer the session ID back and forth between the client and the server. This introduces some overhead and is used less frequently.

Different Approaches for Accessing T3/T3s Services

A disaster recovery scenario presents specific aspects which affect the host name resolution configuration of external T3/T3s clients. This topic explains different approaches that you can use when accessing T3/T3s services from external clients and how to manage them in a disaster recovery scenario. For each approach a configuration example is provided and the advantages and disadvantages while managing clients and switchover are explained.

Note:

These approaches apply to a disaster recovery scenario that complies with the MAA guidelines for Fusion Middleware, where the secondary domain configuration is a replica of the primary system.

Direct T3/T3s Using Default Channels

In this approach, the external T3/T3s client connects directly to the WebLogic managed server's default channels. These default channels listen on the Listen Address and Listen Port specified in the general configuration of each WebLogic managed server.

Figure 2-4 Direct T3/T3s Using Default Channels

Configuration

- The provider URL in the T3 client uses the list of the WebLogic servers' default listen addresses and ports.

  Example: In the following disaster recovery example, the listener addresses of the WebLogic servers are the primary hostnames:

      t3://soahost1.example.com:8001,soahost2.example.com:8001

  In case you are using the T3s protocol, the port must be set to the server’s SSL Listen Port.

  Example:

      t3s://soahost1.example.com:8002,soahost2.example.com:8002

- External clients must resolve these hostnames with the primary host’s IPs. These IPs must be reachable from the client. It is possible to use Network Address Translation (NAT), if there is a NAT IP address for each server. In that case, the client will resolve each server name with the appropriate NAT IP for the server.

  Example: Naming Resolution at the External Client Side

  This is achievable through local /etc/hosts or formal DNS server resolution.

      172.11.2.113 soahost1.example.com
      172.11.2.114 soahost2.example.com
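The provider URL format shown in this configuration can be assembled from the list of cluster members. The helper below is a small Python sketch for illustration only. The `provider_url` function is a hypothetical name and not part of any WebLogic client API, and the ports are the example values used above.

```python
def provider_url(hosts: list, port: int, secure: bool = False) -> str:
    """Build a T3 or T3s provider URL listing every cluster member."""
    if not hosts:
        raise ValueError("at least one host is required")
    scheme = "t3s" if secure else "t3"
    members = ",".join(f"{host}:{port}" for host in hosts)
    return f"{scheme}://{members}"


if __name__ == "__main__":
    members = ["soahost1.example.com", "soahost2.example.com"]
    print(provider_url(members, 8001))               # default listen port
    print(provider_url(members, 8002, secure=True))  # SSL listen port
```

Generating the URL from the member list, rather than hard-coding it in each client, makes it easier to keep the URL aligned with the cluster after a scale-out or scale-in.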

Switchover Behavior

In a switchover scenario, there is no need to update the client's provider URL. You need to update the entries in the DNS (or in the client's /etc/hosts file). After the switchover, the names used to connect to the servers must resolve with the IPs of the secondary servers.

    172.22.2.113 soahost1.example.com
    172.22.2.114 soahost2.example.com

Advantages

- You do not require additional configuration either at the server’s side or at the front-end tier. All you require is opening the specific ports to the client.

Disadvantages

- When a switchover happens, you need to update the host resolver (DNS or the client's /etc/hosts) to alter the resolution of all the hostnames that the external client uses to connect to the managed servers. For more information about implications of the client’s cache, see About T3/T3s Client’s DNS Cache.

- Clients must be able to resolve and reach the hostnames set in WebLogic's Listen Address. It is not possible to use alternate names because the default channels use the WebLogic Server’s default Listen Address.

- The WebLogic default channels listen for T3(s) and also HTTP(s) requests. You cannot disable this setting. If you open the default port for the clients, direct HTTP(s) access to the server is also allowed, which can result in security concerns.

- You need to modify the client’s provider URL if you have to scale out or scale in a WebLogic cluster. The first contact in a T3/T3s invocation uses only the list in the client’s provider URL. So, even without updating the list, the subsequent requests can connect to any server of the cluster as the recovered stubs list all of the members. It is recommended that the client’s provider URL matches the real list of the servers for failover purposes in the first contact.
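After a switchover, one way to confirm that a client host sees the updated resolution is to check that each server name resolves into the subnet of the new active site. The Python sketch below assumes the 172.22.x.x standby addressing used in this chapter's examples. The `hosts_outside_site` function is a hypothetical helper, and it reflects only the operating system resolver, not any cache held by a running T3 client.

```python
import ipaddress
import socket


def hosts_outside_site(hostnames, site_network, resolve=socket.gethostbyname):
    """Return the host names that fail to resolve or resolve outside site_network."""
    network = ipaddress.ip_network(site_network)
    outside = []
    for name in hostnames:
        try:
            address = ipaddress.ip_address(resolve(name))
        except (OSError, ValueError):
            outside.append(name)  # unresolvable or not a valid address
            continue
        if address not in network:
            outside.append(name)
    return outside


if __name__ == "__main__":
    servers = ["soahost1.example.com", "soahost2.example.com"]
    # Assumed standby subnet, based on the 172.22.x.x format used in the examples.
    stale = hosts_outside_site(servers, "172.22.0.0/16")
    print("still pointing away from the standby site:", stale)
```

The `resolve` parameter exists so that the check can be exercised with a stub resolver before it is pointed at real DNS.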

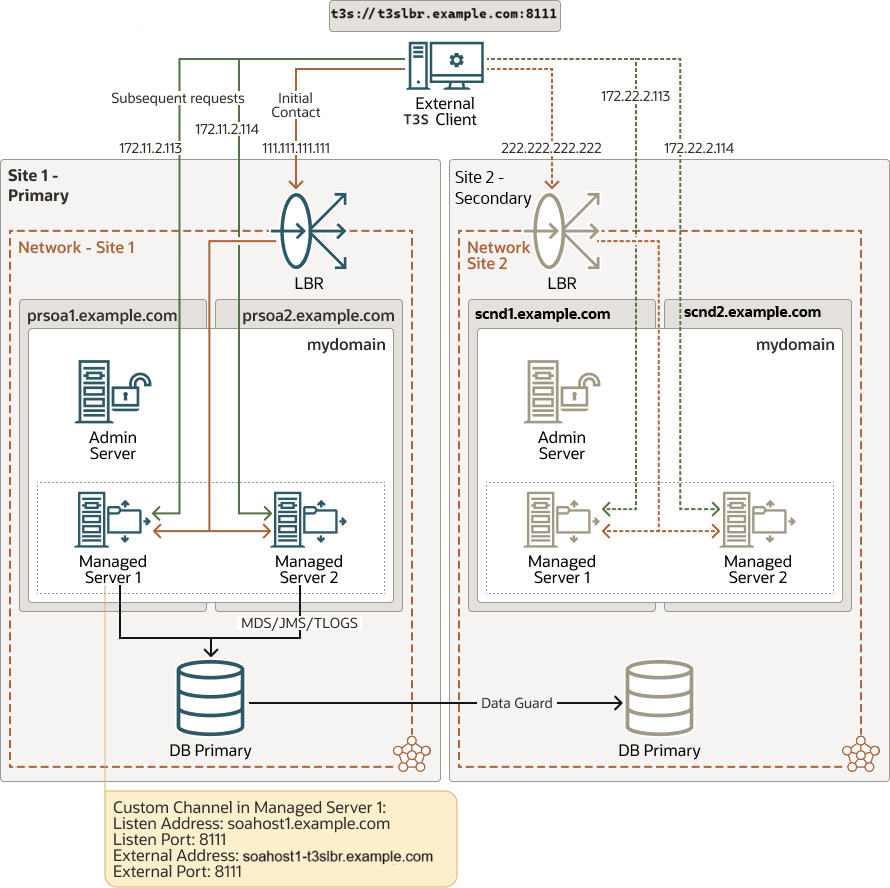

Direct T3/T3s Using Custom Channels

In this approach, the external T3/T3s client connects directly to custom channels defined in the WebLogic servers of the cluster. The Listen Address, the External Listen Address, and the External Port are customizable values in the custom channels. These values can differ from the WebLogic server’s default listen values.

Figure 2-5 Direct T3/T3s Using Custom Channels

Once the T3/T3s external client is connected to the server during the initial context retrieval, the subsequent T3 calls connect directly to one of the listen addresses and ports configured in the custom channels as External Listen Address and External Port. These requests will be load balanced according to the mechanism specified in the connection factory defined in WebLogic and used by the client.

This approach is similar to Direct T3/T3s Using Default Channels with the exception that you can customize the addresses and ports used in T3/T3s calls when using WebLogic custom channels.

Configuration

- Each WebLogic server has the appropriate custom channels configured, and each custom channel uses a unique External Listen Address that points to that server node. Table 2-4 and Table 2-5 provide examples of the custom channels in Server 1 and Server 2.

  Table 2-4 Custom Channel in Server 1

  | Name | Protocol | Enabled | Listen Address | Listen Port | Public Address | Public Port |
  |---|---|---|---|---|---|---|
  | t3_external_channel | t3 | true | soahost1.example.com | 8111 | soahost1-t3ext.example.com | 8111 |

  Table 2-5 Custom Channel in Server 2

  | Name | Protocol | Enabled | Listen Address | Listen Port | Public Address | Public Port |
  |---|---|---|---|---|---|---|
  | t3_external_channel | t3 | true | soahost2.example.com | 8111 | soahost2-t3ext.example.com | 8111 |

- The external T3 client’s provider URL contains the list of the external address and port of the custom channels.

  Example:

      t3://soahost1-t3ext.example.com:8111,soahost2-t3ext.example.com:8111

  If you are using the T3s protocol, you must create the custom channels with the T3s protocol. The clients will then connect using the T3s protocol and the appropriate port.

  Example:

      t3s://soahost1-t3ext.example.com:8112,soahost2-t3ext.example.com:8112

  In a T3s channel, you can add a specific SSL certificate for the name used as an External Listen Address.

- The external T3/T3s client must resolve the custom channels’ external hostnames with the primary host’s IPs. These hostnames must be reachable from the client. It is possible to use NAT if there is a NAT address for each server. In that case, the client will resolve each server name with the appropriate NAT IP for the server.

  Example: Naming Resolution at the External Client Side

      172.11.2.113 soahost1-t3ext.example.com
      172.11.2.114 soahost2-t3ext.example.com

  With this approach, all the requests from the external client connect to soahost1-t3ext.example.com and soahost2-t3ext.example.com, both for the initial context retrieval and for the subsequent calls. You can control these requests by using the WebLogic Server load balancing mechanism.

Switchover

In a switchover scenario, you do not have to update the client's provider URL. You must update the entries in the DNS or in the /etc/hosts of the client. After the switchover, the names used to connect to the servers must resolve with the IPs of the secondary servers.

    172.22.2.113 soahost1-t3ext.example.com
    172.22.2.114 soahost2-t3ext.example.com

Advantages

- This method allows using specific hostnames for the external T3/T3s communication that differ from the server's default listen address. This is useful if you do not want to expose the server's default Listen Address to the external clients for security reasons.

- This method is useful if you are using NAT IPs and you do not want to resolve the servers' default listen addresses with different IPs internally and externally.

- This method is useful in case you want to use different names for external T3/T3s accesses just for organizational purposes. You can also use this method to isolate protocols in different interfaces or network routes.

- The protocol in the custom channel can be limited to T3/T3s only. The HTTP(s) protocol can be disabled in the custom channels.

Disadvantages

- When a switchover happens, you need to update the DNS or the client's /etc/hosts at the external client side for all the hostnames used to connect to the managed servers. For more information about the implications of the client’s cache, see About T3/T3s Client’s DNS Cache.

- If you are using T3s, then you must create and configure specific SSL certificates for the external names in the custom channels to avoid SSL hostname verification errors in the client.

- You have to modify the client’s provider URL if you have to scale out or scale in a WebLogic cluster. The first contact only uses the list in the client’s provider URL. So, even without updating the list, the subsequent requests can connect to any server of the cluster as the recovered stubs list all of the members. It is always a good practice to ensure that the client’s provider URL matches the real list of the servers for failover purposes in the first contact.

Using Load Balancer for Initial Lookup

In this scenario, the client’s provider URL points to the load balancer address.

The Direct T3/T3s Using Default Channels and Direct T3/T3s Using Custom Channels approaches can also use a load balancer for the initial context lookup.

However, the subsequent T3/T3s calls connect to the WebLogic servers directly. If you use the default channel for these invocations, the requests go to the default channel’s listen address and port. Similarly, if you use custom channels, the subsequent requests go to the external listen address and port defined in the custom channels.

Figure 2-6 Using Load Balancer for Initial Lookup

Configuration

-

You will need a TCP service in the load balancer to load balance the requests to the WebLogic Servers' T3/T3s ports (either to the default channels or to the custom channels when used).

-

The external T3/T3s client’s provider URL uses the front-end name and port of the load balancer as the point of contact.

Example:

t3://t3lbr.example.com:8111

-

You can use default channels or custom channels as explained in the Direct T3/T3s Using Default Channels and Direct T3/T3s Using Custom Channels scenarios. The external T3/T3s client must resolve the custom channels’ external hostnames (or the servers’ default listen hostnames, if you are using default channels) with the primary hosts’ IPs, and it must be able to reach them. It is possible to use NAT as long as there is a NAT address for each server. In that case, the client resolves each server name with the appropriate NAT IP for the server.

Example: Naming Resolution at the External Client Side

111.111.111.111 t3lbr.example.com

172.11.2.113 soahost1-t3ext.example.com

172.11.2.114 soahost2-t3ext.example.com
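A quick way to confirm that the client side maps each name as intended is to compare its hosts file against a list of expected entries. The following is a minimal sketch and not part of the documented procedure: the function names `lookup_in_hosts` and `check_hosts` and the file `expected_hosts.txt` are hypothetical, and the check only inspects a hosts-format file. Clients that rely on DNS would need to query the resolver instead.

```shell
#!/bin/sh
# Sketch: verify that a hosts-format file maps each name to the expected IP.
# Function and file names are illustrative assumptions.

# Print the first IP mapped to name $1 in hosts-format file $2.
lookup_in_hosts() {
  awk -v name="$1" '
    /^[ \t]*#/ { next }
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "$2"
}

# Compare every "IP NAME" line of $1 (expected) against $2 (actual).
# Returns 0 only when all expected names resolve to the expected IP.
check_hosts() {
  status=0
  while read -r ip name _rest || [ -n "$ip" ]; do
    case "$ip" in ''|\#*) continue ;; esac   # skip blank and comment lines
    actual=$(lookup_in_hosts "$name" "$2")
    if [ "$actual" = "$ip" ]; then
      echo "OK       $name -> $ip"
    else
      echo "MISMATCH $name: expected $ip, found ${actual:-nothing}"
      status=1
    fi
  done < "$1"
  return "$status"
}
```

For example, with the three entries above saved in `expected_hosts.txt`, running `check_hosts expected_hosts.txt /etc/hosts` on the client reports one line per name and returns a non-zero status if any entry differs. The same check can be repeated after a switchover with the secondary site's addresses.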

Switchover

In a switchover scenario, you do not have to update the client's provider URL. You must update the entries in the DNS or in the /etc/hosts used by the client so that they resolve with the IPs of the secondary site. The name used to connect to the load balancer must resolve with the IP of the secondary load balancer and the server names used in subsequent requests must point to the IPs of the secondary servers.

222.222.222.222 t3lbr.example.com

172.22.2.113 soahost1-t3ext.example.com

172.22.2.114 soahost2-t3ext.example.com

Advantages

You do not have to modify the client’s provider URL if you add or remove the WebLogic Server nodes from the WebLogic cluster.

Disadvantages

-

Despite using a load balancer in front, the client still needs to reach the servers directly.

-

When a switchover happens, you need to update the DNS (or /etc/hosts) entries for the servers’ addresses at the external client side. For more information about the implications of the client’s cache, see About T3/T3s Client’s DNS Cache.

-

The complexity of this method is higher for the T3s cases. The client connects both through the front-end LBR and directly to the servers using a secure protocol. In this case, you need to either skip the hostname verification at the client side or use an SSL certificate that is valid for both the front-end and back-end addresses (for example, wildcard or SAN certificates).

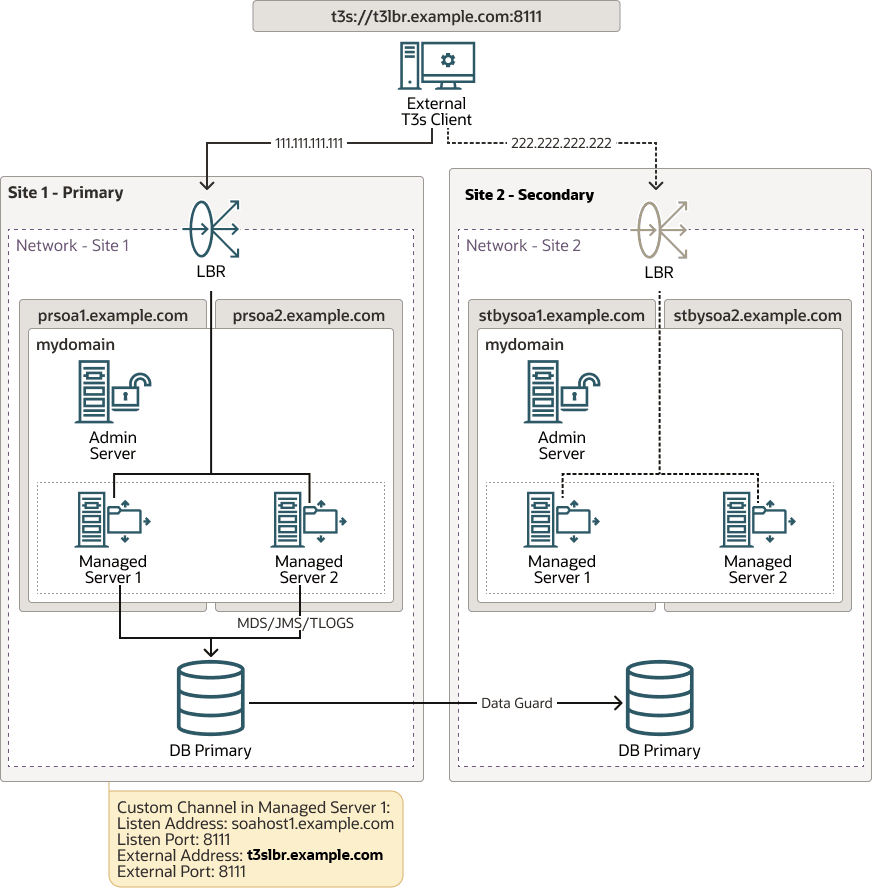

Using Load Balancer for All Traffic

In this approach, both the initial context lookup and the subsequent connections go through the load balancer. There are no direct connections from the external T3/T3s client to the servers.

Oracle does not recommend using an LBR for load balancing all types of T3/T3s communications. It is only recommended for the initial context lookup. For more information, see WebLogic RMI Integration with Load Balancers. However, there are T3/T3s use cases where you can use the load balancer for the complete T3/T3s communication flow. WebLogic T3/T3s are TCP/IP-based protocols, so they can support TCP load balancing when services are homogeneous, such as JMS and EJB. For example, you can configure an LBR front-ended JMS subsystem in a WebLogic cluster in which remote JMS clients can connect to any cluster member.

However, this approach will not work with external clients that use JTA connections. A JTA subsystem cannot safely use TCP load balancing in transactions that span multiple WebLogic domains. When the transaction is either committed or rolled back, the JTA transaction coordinator must establish a direct RMI connection to the server instance that has been chosen as the transaction's sub coordinator.

This method is also not suitable when you require a direct connection to a specific server, as is the case with JMX or WLST.

Figure 2-7 Using Load Balancer for All Traffic

Configuration

-

The load balancer requires a TCP service to load balance the requests to the WebLogic servers’ T3/T3s ports defined in the custom channels.

-

The external T3/T3s client’s provider URL uses the front-end name and port of the load balancer as the point of contact.

Example:

t3://t3lbr.example.com:8111

-

The external client must resolve the load balancer address with the IP of the primary site’s load balancer.

Example:

111.111.111.111 t3lbr.example.com

-

WebLogic Server requires custom channels. Configure the external listen address and port of these custom channels with the load balancer's address and port. Table 2-6 and Table 2-7 provide examples of the custom channels in Server 1 and Server 2.

Table 2-6 Custom Channel in Server 1

| Name | Protocol | Enabled | Listen Address | Listen Port | Public Address | Public Port |
| --- | --- | --- | --- | --- | --- | --- |
| t3_external_channel | t3 | true | soahost1.example.com | 8111 | t3lbr.example.com | 8111 |

Table 2-7 Custom Channel in Server 2

| Name | Protocol | Enabled | Listen Address | Listen Port | Public Address | Public Port |
| --- | --- | --- | --- | --- | --- | --- |
| t3_external_channel | t3 | true | soahost2.example.com | 8111 | t3lbr.example.com | 8111 |

Switchover

In a switchover scenario, you do not have to update the client's provider URL. You must update the entries in the DNS (or in the /etc/hosts) of the client. After the switchover, the name used to connect to the load balancer must resolve with the IP of the secondary LBR service.

222.222.222.222 t3lbr.example.com

Advantages

-

All the communication goes through the load balancer. The client only needs to know and reach the load balancer’s service.

-

If you have to either add or remove WebLogic Server nodes from the WebLogic cluster, you do not have to modify the client’s provider URL.

-

In case of a switchover, you only have to update the load balancer’s front-end name in the DNS (or in the /etc/hosts).

Note:

Although only one DNS name is updated, you need to refresh the client’s DNS cache. For more information, see About T3/T3s Client’s DNS Cache.

-

In T3s cases, you can use the same SSL certificate in all the custom channels associated with the load balancer service’s front-end name.

Disadvantages

-

This approach is not suitable for all the T3/T3s cases. It also cannot be used in scenarios with JTA as JTA needs to explicitly connect to the server instance that acts as the sub coordinator of the transaction.

About T3/T3s Client’s DNS Cache

The approaches described for accessing T3/T3s services generally require a DNS update in disaster recovery switchover scenarios. Therefore, you must limit the DNS cache TTL (Time to Live) both in the DNS server and in the client's own cache.

The TTL in the DNS service is a setting that tells the DNS resolver how long to cache the result of a query before requesting it again. Note that the TTL value of the DNS entries affects the effective RTO of the switchover. If the TTL value is high (for example, 20 minutes), the DNS change can take that long to become effective in the clients' caches. Using lower TTL values makes the switchover faster, but this can cause overhead because the clients check the DNS more frequently. A good approach is to set the TTL to a low value temporarily (for example, one minute) before the DNS update. Once the change and the switchover procedure are completed, set the TTL back to its normal value.

Besides the DNS server's TTL, the networkaddress.cache.ttl Java security property controls the Java clients' cache TTL. This property indicates the caching policy for successful name lookups from the name service. Specify the value as an integer to indicate the number of seconds to cache the successful lookup. Ensure you set a limit in the networkaddress.cache.ttl property so the client's Java cache does not cache the DNS entries forever. Otherwise, you might have to restart the client with each switchover.
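One way to set this limit without editing the JDK's own files is a supplementary security properties file passed to the client JVM with `-Djava.security.properties`. The following is a minimal sketch under stated assumptions: the file name, the 60-second value, and the client class `com.example.T3Client` are hypothetical, and the JDK only honors the extra file when `security.overridePropertiesFile` is enabled in its `java.security` file. The default caching behavior when the property is not set depends on the JVM and on whether a security manager is installed.

```shell
#!/bin/sh
# Sketch: cap the JVM DNS cache for a T3/T3s client at 60 seconds.
# The file location, the 60-second value, and the client class are assumptions.
TTL_FILE="${TTL_FILE:-./dns-cache.security}"

cat > "$TTL_FILE" <<'EOF'
# Cache successful name lookups for 60 seconds
networkaddress.cache.ttl=60
EOF

# Print how a client JVM could pick up the supplementary properties file.
echo "java -Djava.security.properties=$TTL_FILE -cp client.jar com.example.T3Client"
```

A value aligned with the DNS TTL used during the switchover keeps the two caches from working against each other.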

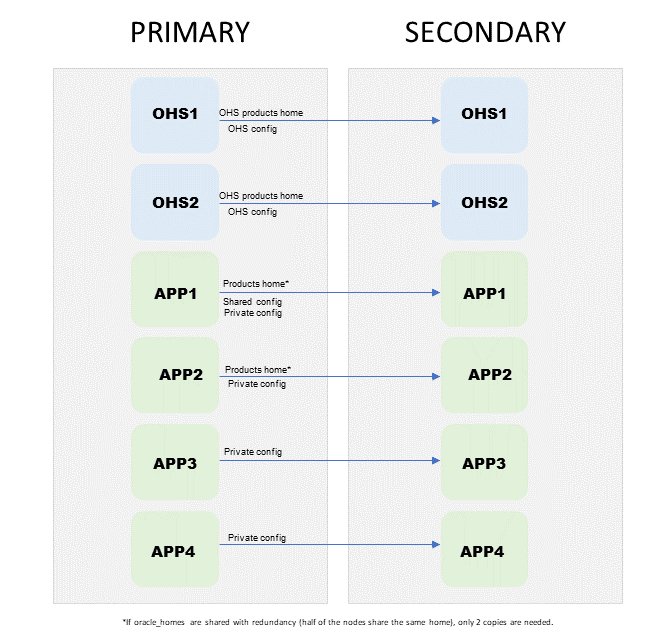

Planning a File System Replication Strategy

Defining a file system replication strategy is important while designing a disaster protection system. Oracle does not recommend creating and managing the secondary system by repeating installations and configuration steps.

Applying changes first in the primary system (for example, deploying a data source) and then repeating the step in the secondary system is not a reliable approach. Even when using deployment scripts and automated pipelines, there is rarely a transactional guarantee. This can lead to changes being applied only in the primary system and not propagated properly to the secondary system. Some configuration changes require WebLogic servers to be running, and this may cause duplicated processing and undesired behavior. But more importantly, a dual change approach typically leaves room for inconsistencies and management overhead that can affect the RTO and RPO of the disaster protection solution.

Instead, different file replication strategies can be used to replicate configuration changes across sites. File system replication is used in the initial setup and during the ongoing lifecycle of a system. Costs, management overhead, and RTO and RPO may have different implications during setup and ongoing lifecycle, so different replication approaches can be used in each case. Also, there are different data types involved in a Fusion Middleware topology and each one may also have different RTO and RPO needs depending on their size on the file system and how frequently they are generated and modified. You must first understand the different data types involved in a disaster protection topology to decide which replication strategy is suitable for your needs.

Data Tiers in a Fusion Middleware File System

All files involved in the Fusion Middleware system should be replicated from the primary to the secondary at the same point in time. However, different file types may need different replication frequency to simplify management costs and to reduce the total cost of ownership of a disaster protection system. This is important when you design the volumes and file systems that you want to use for replication. Some artifacts are static whereas others are dynamic.

Oracle Home and Oracle Inventory

An Oracle Home is the directory into which all the Oracle software is installed and is referenced by Fusion Middleware and WebLogic environment variables. Oracle Fusion Middleware allows you to create multiple Oracle WebLogic Server Managed Servers from one single binary file installation. The Oracle Fusion Middleware Enterprise Deployment Guides provide recommendations on how to configure storage to install and use Oracle Home so that there is no single point of failure in the Fusion Middleware system while optimizing the storage size and simplifying maintenance.

It is not necessary to install Oracle Fusion Middleware instances at the secondary site in the same way as they were installed at the production site. When the production site storage is replicated to the secondary site storage, the Oracle software installed on the production site volumes is replicated to the secondary site volumes.

A secondary system needs to behave exactly like the primary system when a failover or switchover occurs. It should admit patches and upgrades as a first-class installation. This means that when a failover or switchover occurs, the secondary system must use a standard inventory for patches and upgrades. To maintain consistency, you must replicate the Oracle Inventory with the same frequency as the Oracle Homes used by the different Fusion Middleware components (the RTO, RPO, and consistency requirements of the Oracle Inventory must be the same as those prescribed for the Oracle Homes). The Oracle Inventory includes the oraInst.loc and oratab files, which are located in the /etc directory.

When an Oracle Home or a WebLogic home is shared by multiple servers in different nodes, Oracle recommends that you keep the Oracle Inventory and the Oracle Home list in those nodes updated, for consistency in the installations and in the application of patches. To update the inventory files in a node and attach an installation in a shared storage to it, use the ORACLE_HOME/oui/bin/attachHome.sh script.
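As an illustration, the following sketch checks which central inventory a node points to before attaching the shared Oracle Home. It assumes the usual `inventory_loc=...` line in `/etc/oraInst.loc`. The function names and the `ORACLE_HOME` default are hypothetical, and the script is invoked without arguments because no options are described here.

```shell
#!/bin/sh
# Sketch: find the central inventory a node uses, then attach a shared Oracle Home.
# ORACLE_HOME below is a hypothetical path; adjust it to your installation.
ORACLE_HOME="${ORACLE_HOME:-/u01/oracle/products/fmw}"

# Print the inventory_loc value from an oraInst.loc file ($1).
inventory_location() {
  sed -n 's/^inventory_loc=//p' "$1"
}

# Run attachHome.sh only if this node has an inventory pointer file.
attach_shared_home() {
  if [ -r /etc/oraInst.loc ]; then
    echo "Inventory: $(inventory_location /etc/oraInst.loc)"
    "$ORACLE_HOME/oui/bin/attachHome.sh"
  else
    echo "No /etc/oraInst.loc found; create the inventory pointer first" >&2
    return 1
  fi
}
```

Calling `attach_shared_home` as the software owner on each node that mounts the shared home keeps the per-node inventories aligned.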

Both Oracle Home and Oracle Inventory are static artifacts during runtime and typically require a low RTO (since they are the binaries from which Fusion Middleware domains run). It is not required to copy them across regions too frequently because they change only when patches and fixes are applied. From a consistency point of view, runtime artifacts are not usually too restrictive. For example, most patches will support previous configurations of a WebLogic Server domain. So even if you do not propagate a patch in a WebLogic Oracle Home across regions, the secondary domain configuration will work properly.

Configuration Artifacts

Configuration artifacts are files that change frequently and include the following:

-

WebLogic Domain Home: Domain directories of the Administration Server and the Managed Servers.

-

Oracle instances of system components such as Oracle HTTP Server: Oracle Instance home directories.

-

Application artifacts like .ear or .war files.

-

Database artifacts like the MDS repository and the JDBC persistent stores definitions.

-

Deployment plans used for updating technology adapters like the file and JMS adapters. They need to be saved in a location that is accessible to all nodes in the cluster where the artifacts are being deployed to.

Configuration artifacts change frequently depending on application updates. They require a low RTO and a high replication frequency. It is important to maintain consistency for configuration artifacts in different stores. Otherwise, the applications may stop working after a restore. For example, a WebLogic domain configuration reflecting a new JMS server must be aligned with the database table that it uses as its persistent store. Replicating only the WebLogic domain configuration without replicating the corresponding table will cause a WebLogic Server failure.

Runtime Artifacts

Runtime artifacts are files generated by applications at runtime, for example, files generated by SOA’s file or FTP Adapters, files managed by Oracle MFT, or any other information that applications generate through their business logic and store directly on the file system. These files may change very frequently. Their RTO and RPO are purely driven by business needs. In some cases, these types of artifacts may need to be discarded after a short period of time, for example, a bid order that expires quickly. In other cases, these files may contain transactional records of operations completed by an application that need to be preserved. How frequently they need to be replicated and how important it is to preserve them in a disaster event is usually a business-driven decision.

Main Replication Options

Oracle verifies different approaches to replicate the different types of data used by Fusion Middleware deployments on the file system. Although there are other options, only the following ones are formally tested and can be utilized for Fusion Middleware deployments.

Storage Level Replication

The Oracle Fusion Middleware Disaster Recovery solution can use storage replication technology to replicate the Oracle Fusion Middleware middle-tier primary system to the secondary. This means using specific storage vendor technology to replicate storage volumes across regions. Typical solutions in this area involve creating an initial or baseline snapshot of the different volumes presented in the EDG and then replicating changes incrementally to a secondary location, either manually, on a schedule, or continuously.

Do not use storage replication technology to provide disaster protection for Oracle databases. Ensure that disaster protection for any database that is included in the Oracle Fusion Middleware Disaster Recovery production site is provided by Oracle Data Guard.

Ensure that your storage device's replication technology guarantees consistent replication across multiple volumes. Otherwise, problems may arise when different configuration and runtime artifacts used by the Fusion Middleware systems are replicated at different points in time. Most storage vendors guarantee consistency when multiple nodes use different storage volumes and all of them must be backed up (or have their contents replicated) at a precise point in time. Volumes are grouped in a single logical unit frequently called consistency volume group, consistency group, or volume group. Volume groups provide a point-in-time copy of the different volumes used in an EDG so that a consistent snapshot of all of them is propagated to the secondary. Imagine a situation where a database module deployment resides in volume A while the application that uses it resides in volume B. Without consistency groups, a new version of the WAR for the application could be replicated while the data source configuration it requires is not, thus causing a consistency problem in the secondary.

Storage level replication can be used as a general-purpose approach for all types of data in the EDG (Oracle Home/Oracle Inventory, configuration artifacts, and runtime artifacts). It is a valid approach both for the initial setup and ongoing replication cycles during the lifetime of a system.

Rsync

Rsync can be used as an alternative to storage vendor-specific replication for replicating the primary Fusion Middleware system to the secondary system. Rsync is a versatile copying tool that can copy locally or to and from another host over any remote shell. It offers a large number of options that control every aspect of its behavior and permits very flexible specification of the set of files to be copied.

Rsync implements a delta-transfer algorithm, which reduces the amount of data sent over the network by sending only the differences between the source files and the existing files in the destination. Due to these advantages and its convenience, it is a widely used tool for backups and mirroring. To guarantee a secure copy in all cases, use rsync over SSH.

Although rsync is reliable and implements implicit retries, network outages and other connectivity issues can still cause failures in the file synchronization. Additionally, rsync does not guarantee consistency across different nodes. It does not provide a distributed point in time replication. Hence, rsync can be used as an alternative to the storage level replication under the following conditions:

-

When the storage replication is not possible or feasible and/or does not meet the cost requirements.

-

When primary and standby use a reliable and secure network connection for the copy.

-

When checks are performed across the different nodes involved in the copy to ensure that it is valid.

-

When the disaster recovery site is validated on a regular basis.

Rsync is present in most Linux distributions these days. You can install and set up rsync to enable replication of files from a production site host to its secondary site peer host. For instructions about how to install, set up, and use the utility, see the rsync utility manual.
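As an illustration of an rsync copy over SSH, the following minimal sketch replicates one directory to its peer host. The function name `sync_dir`, the peer host name `soahost1-dr.example.com`, and the directory are hypothetical examples, and the option set (`-a` archive mode, `-z` compression, `--delete` to remove files that no longer exist at the source) is one reasonable choice rather than a prescribed one. The script only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: replicate one directory to the peer host with rsync over SSH.
# The peer host name and directory are hypothetical examples.
# DRY_RUN=1 (the default) only prints the command; set DRY_RUN=0 to run it.

sync_dir() {
  src="${1%/}/"          # trailing slash: copy the contents, not the folder itself
  dest="$2:${3%/}/"
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "rsync -az --delete -e ssh $src $dest"
  else
    rsync -az --delete -e ssh "$src" "$dest"
  fi
}

sync_dir /u01/oracle/config soahost1-dr.example.com /u01/oracle/config
```

Because `--delete` removes files at the destination, it is worth reviewing the printed command (or adding rsync's `--dry-run` option) before enabling the real copy.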

In an rsync replication approach, there are two main alternatives to copy from the primary to the secondary:

Figure 2-8 Peer-to-Peer Copy

In this case, the copy can be done directly from each host to its remote peer. Each node has SSH connectivity to its peer and uses rsync commands over SSH to replicate the primary system.

Figure 2-8 shows an overview of using rsync commands over SSH to replicate the primary system.

In this approach, each node uses individual scripts to rsync its Fusion Middleware installation and configuration to the remote peer node. Each node can schedule the scripts with cron. Different data types can have different schedules for the cron jobs. For example:

-

Products once a month.

-

Configuration once a day.
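The schedules above could be expressed in a crontab fragment such as the one written by the following sketch. The script paths, the times, and the file name are hypothetical. The fragment is written to a file for review instead of being installed directly, because `crontab FILE` replaces the user's whole crontab.

```shell
#!/bin/sh
# Sketch: write a crontab fragment with different schedules per data type.
# Script paths and times are hypothetical; review the file before installing it.
CRON_FILE="${CRON_FILE:-./fmw-dr.cron}"

cat > "$CRON_FILE" <<'EOF'
# minute hour day-of-month month day-of-week command
# Products: 02:00 on the first day of each month
0 2 1 * * /u01/scripts/sync_products.sh
# Configuration: 01:30 every day
30 1 * * * /u01/scripts/sync_config.sh
EOF

echo "Review $CRON_FILE, then install it with: crontab $CRON_FILE"
```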

This is an easy setup that does not need additional hardware resources. However, it requires maintenance across many nodes since scripts are not centralized (for example, large clusters add more complexity to the solution). It is also difficult to guarantee consistency at a point in time since each node stores a separate copy. The consistency and validation of this approach can be improved using a central host to launch the scripts in each node (acts like a single scheduler) and to validate the copies.

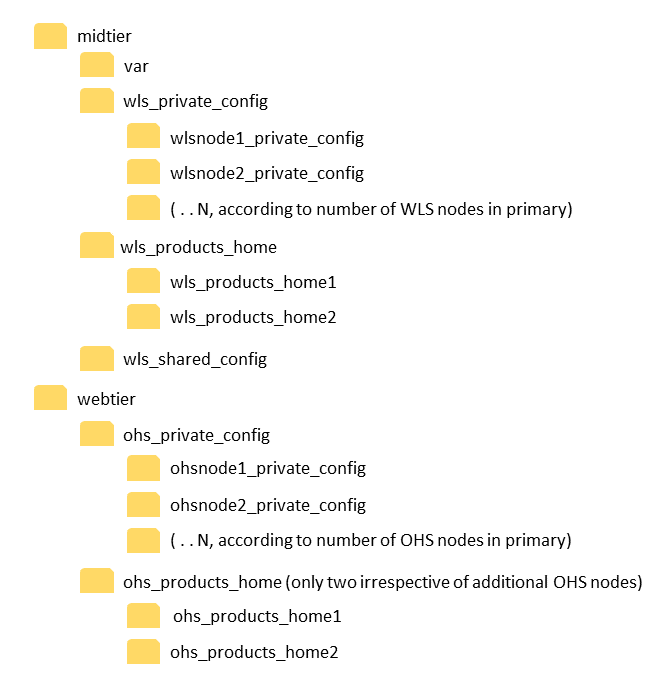

Staging Location

In this approach, a staging location is used and typically a node acts as a coordinator connecting to each individual host that needs to be replicated. This coordinator copies the required configuration and binary data to a staging location. This can be on a shared storage attached to all nodes or on a staging host. This staging location can be validated without affecting the primary or the secondary nodes. This approach offloads the individual nodes from the overhead of the copies and maintains consistency for binaries by using a single install that is distributed to the rest of the nodes. For this reason, this approach is typically preferred to the peer-to-peer copy. When there are strong security restrictions on the connections allowed across data centers, it is also possible to use a node local to the primary that stages the copy from the primary nodes and distributes it to the secondary staging location. This approach limits the access across regions to just two nodes and offloads the remote copies (which typically incur longer latency and overhead) to the coordinator box.

Figure 2-9 Staging Location
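Validating the staging location can be as simple as comparing a checksum manifest of the source folder with one of the staged copy. The following is a minimal sketch under stated assumptions: the function names are hypothetical, `cksum` is used only because it is widely available (a stronger hash tool could replace it), and the comparison covers file names and contents but not ownership or permissions.

```shell
#!/bin/sh
# Sketch: check that a staged copy matches its source before distributing it.
# cksum is used for portability; a stronger hash tool could replace it.

# Print a sorted "checksum size path" manifest for every file under $1.
dir_manifest() {
  ( cd "$1" && find . -type f -exec cksum {} + | LC_ALL=C sort -k 3 )
}

# Succeed only if both directories exist and hold the same files and contents.
same_content() {
  [ -d "$1" ] && [ -d "$2" ] &&
    [ "$(dir_manifest "$1")" = "$(dir_manifest "$2")" ]
}
```

For example, `same_content /u01/oracle/config /staging/config && echo "staging copy is valid"` (both paths are illustrative) could run on the coordinator after each copy and before the data is distributed to the secondary nodes.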

Rsync can be used as a general-purpose approach for all types of data in the EDG but needs to be tested in each specific deployment when applications are generating large amounts of constantly changing data during runtime. Rsync may overload the nodes running the replication, and workload tests need to be executed to verify its validity. For configuration and binaries, rsync should not cause any performance issues, both for the initial setup and for ongoing replications. For runtime artifacts, you must consider the required RTO and RPO to calculate data modification rates and the possible overhead caused by the rsync copy.

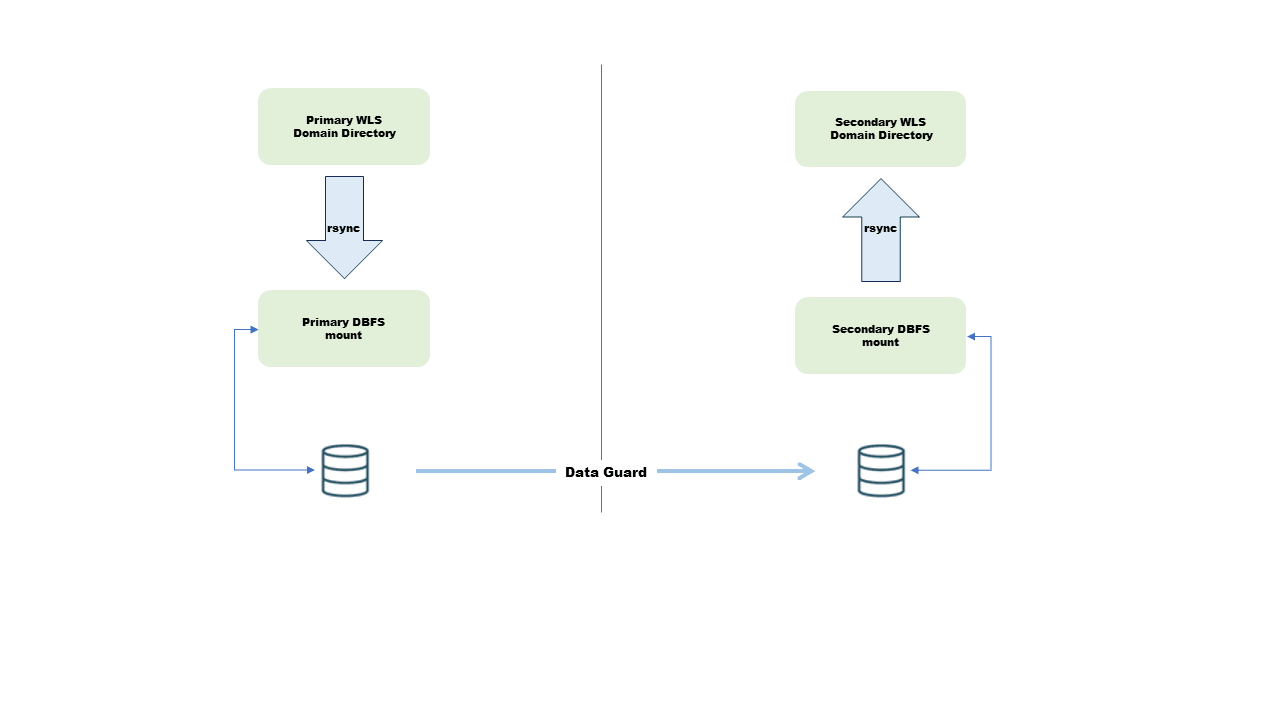

Oracle Database File System

Oracle Database File System (DBFS) is an additional method that can be used for replicating primary to standby. It is mainly intended for configuration or data that does not grow or change very frequently (because of the overhead it generates in the database both at the connection and the storage level). Conceptually, a database file system is a file system interface for data that is stored in database tables.

DBFS is similar to NFS in that it provides a shared network file system that looks like a local file system and has both a server component and a client component. The DBFS file system can be mounted from the mid-tier hosts and accessed as a regular shared file system. Because it resides in the database, any content that is copied to the DBFS mount is automatically replicated to the secondary site through the underlying Data Guard replication.

This method takes advantage of the robustness of the Data Guard replica. It has good availability through Oracle Driver’s retry logic and provides a resilient behavior. It can be used in scenarios with medium or high latencies between the data centers. However, using DBFS for configuration replication has additional implications from the setup, database storage, and lifecycle perspectives as follows:

-

It introduces some complexity due to the configuration and maintenance required by the DBFS mount. It requires the database client to be installed in the host that is going to mount it and requires an initial setup of some artifacts in the database (tablespace, user, and so on) and in the client (the wallet, the tnsnames.ora, and mount scripts).

-

It requires additional capacity in the database because the content copied to the DBFS mount is stored in the database.

-

Oracle does not recommend storing the WebLogic domain configuration or the binaries directly in the DBFS mount. This would create a very strong dependency between the Fusion Middleware files and the database. Instead, it is recommended to use the DBFS as an "assistance" file system, that is, an intermediate staging file system in which to place the information that is going to be replicated to the secondary site. Any replication to standby implies two steps in this model: from the primary's origin folder to the intermediate DBFS mount, and then, in the secondary site, from the DBFS mount to the standby's destination folder. The intermediate copies are done using rsync. As this is a low-latency, local rsync copy, some of the problems that arise in a remote rsync copy operation are avoided with this model. The following diagram illustrates the replication flow.

Figure 2-10 Oracle Database File System (DBFS)

-

The DBFS directories can be mounted only if the database is open. When the Data Guard is not an Active Data Guard (ADG), the standby database is in mount state. Hence, to access the DBFS mount in the secondary site, the database needs to be converted to snapshot standby. When ADG is used, the file system can be mounted for reads and there is no need to transition to snapshot.
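The two-step model described in the list above can be sketched as two local rsync copies, one on each site. All paths in this sketch are hypothetical, as are the function names, and copying an entire domain folder is only an illustration of the flow. The commands are printed rather than executed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch of the two local rsync steps around a DBFS staging folder.
# All paths are hypothetical. DRY_RUN=1 (the default) only prints the commands.
DOMAIN_DIR="${DOMAIN_DIR:-/u01/oracle/config/domains/soadomain}"
DBFS_STAGE="${DBFS_STAGE:-/u02/data/dbfs_root/dbfsdir/domain_copy}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Step 1, on the primary: origin folder -> DBFS mount.
primary_to_dbfs() {
  run rsync -a --delete "$DOMAIN_DIR/" "$DBFS_STAGE/"
}

# Step 2, on the secondary: DBFS mount -> destination folder.
dbfs_to_standby() {
  run rsync -a --delete "$DBFS_STAGE/" "$DOMAIN_DIR/"
}
```

The second step can only run while the DBFS mount is available on the secondary, which depends on the standby database state described in the last item of the list.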

For the reasons stated above, it is not recommended to use DBFS as a general-purpose solution to replicate all the artifacts (especially runtime files) to the standby. Using DBFS to replicate the binaries is overkill. However, this approach is suitable to replicate (through a staging directory) a few artifacts, like the domain shared configuration, where other methods like storage replication or rsync do not fit the system needs. Cost constraints or unreliable network connections between the primary and the secondary can make DBFS the most suitable approach for file replication.

Comparing Different Replication Methods

Defining a file system replication strategy implies making a decision considering the RTO, RPO, management complexity, and total cost of ownership of each approach. Different aspects may drive the decision and the following is a list with the most relevant features. You must analyze your specific application needs and how these aspects may affect your disaster recovery design.

Management Complexity

The configuration replication procedures in the DBFS and rsync (using a staging location) methods are similar irrespective of the number of nodes in the WebLogic domain. Contrary to this, shared storage replication management gets more complicated as the number of replicated volumes increases. It requires a good lifecycle management of the different volumes and volume groups. Also, switchover and failover operations are more complex when using storage replication than in the rsync and DBFS methods. There may be additional pre and post switchover or failover steps including the activation and attachment of the replicated volumes. This complexity increases when each WebLogic managed server node uses private volumes that need to be replicated individually.

Cost Implication

In the DBFS approach, the increased costs are related to the additional storage required in the database to host the DBFS tables. Typical database tablespace and storage maintenance operations are required for an efficient recovery of allocated space. The network overhead in the cross-region copy is typically negligible in comparison to the overall Data Guard traffic requirements.

With rsync, the storage requirement is low since file storage allocation for a WebLogic domain is typically below 100 gigabytes and the copy over rsync does not have a big network impact either.

With storage replication, once you enable replication for a volume or share, additional costs emerge in most storage vendors. Replication efficiency is also a factor affecting network and storage costs. As part of the replication process, all data being updated on the source volumes or shares is transferred to the volume replica, so volumes with continual updates incur higher network costs.

Replication Overhead

Copying through a staging directory in the DBFS and rsync scenarios does have an impact on the replication coordinator server (typically the WebLogic administration node). Additional memory and CPU resources may be required on it depending on the frequency of the copy, how big the WebLogic domain is, and whether other runtime files need to be replicated across regions in the same replication cycle.

Recovery Time Objective (RTO) for File System Data

The switchover RTO is similar in the DBFS, rsync, and storage replication approaches. For failover operations, additional steps are required by shared storage replication (activate snapshots, attach or mount volumes, and so on). These operations typically increase the downtime during a failover.

Recovery Point Objective (RPO) for File System Data

The storage replication process is continuous with most vendors, with the typical Recovery Point Objective (RPO) target being as low as a few minutes. However, the RPO can vary depending on the change rate of data on the source volume. In the DBFS and rsync methods, the user has finer control over the RPO for the WebLogic configuration because the information is replicated using a scheduled script and the amount of information replicated is lower than the entire storage volume. On the other hand, in the DBFS and rsync methods, it is typically the administration server’s node that acts as "manager" of the domain configuration received, so this node’s availability and capacity drive the speed of the configuration copy. When using storage replication, the replication keeps taking place regardless of whether the secondary nodes are up and running, so it can be used as an offline replication approach.

In summary, your system’s RTO, RPO, and cost needs will drive the decision for the best possible replication approach. Analyze the requirements of your applications to determine the best solution.

Preparing the Primary System

It is assumed that the primary site is created following the best practices prescribed in the Oracle Fusion Middleware Enterprise Deployment Guide. The network, hostnames, storage and database recommendations provided in the EDG need to be already present in the primary system.

This guarantees that before providing protection against disaster, local outages in the different layers (expected to happen much more frequently than disasters affecting an entire data center) will also be preempted.

The listen addresses, storage allocations, and directory considerations included in the EDG are already designed with disaster protection in mind. It is possible that some alternatives may have been adopted in the primary which can be corrected to implement disaster protection. The most common ones are using local storage for binaries and configuration information (instead of the shared storage recommended by the EDG) or not using the appropriate aliases as listen address for the different Fusion Middleware components. The following sections describe how to prepare the primary site for disaster recovery.

Preparing the Primary Storage for Storage Replication

Moving Oracle Homes To Shared Storage

When you use storage replication for Oracle Fusion Middleware Disaster Recovery, the Oracle Home and middle-tier configuration should reside on the shared storage according to the recommendations provided in the Enterprise Deployment Guide for your Fusion Middleware component. This facilitates maintenance of Oracle Homes while providing high availability since at least two Oracle Home locations are used (one for even nodes and one for odd nodes).

If the production site was initially created without disaster recovery in mind, the directories for the Oracle Fusion Middleware instances that comprise the site might be located on the local storage private to each node. In this scenario, Oracle recommends migrating the homes completely to shared storage to implement the Oracle Fusion Middleware Disaster Recovery solution. This operation may incur downtime on the primary system and needs to be planned properly to minimize its impact. This change is recommended because it not only facilitates the DR strategy but also simplifies the Oracle Home and domain configuration management.

Follow these guidelines for migrating the production site from the local disks to the shared storage:

-

Perform an offline backup of the folders that are going to be moved to the shared storage. If the backup is performed using OS commands, it must be done as the root user and the permissions must be preserved.

See Types of Backups and Recommended Backup Strategy in Oracle Fusion Middleware Administering Oracle Fusion Middleware.

-

Although you move the content to NFS, the path will be preserved. The current folder that is going to be moved to an NFS (for example, /u01/oracle/products) will become a mount point. In preparation for this, move or rename the current folder to another path so the mount point is empty.

-

Ensure that the mount point where the shared storage will be mounted exists, is empty, and has the correct ownership.

-

Mount the shared storage in the appropriate mount point. The directory structure on the shared storage must be set up as described in the Enterprise Deployment Guide.

-

Once the shared storage is mounted, copy the content from the backup (or from the renamed folder) to the folder that is now in the shared storage.

The following example moves a products folder, located in /u01/oracle/products, from local disk to an NFS folder.

-

To back up the content in the local folder, a copy is performed by the root user, preserving the mode:

[root@soahost1]# cp -a /u01/oracle/products /backups/products_backup

-

The current folder is moved because the folder that will become the mount point must be empty:

[root@soahost1]# mv /u01/oracle/products /u01/oracle/products_local

-

After renaming the folder, check that the mount point exists (if it does not exist, it must be created) and verify that it is empty and has the correct ownership. The mount point is /u01/oracle/products in the following example:

[root@soahost1]# mkdir -p /u01/oracle/products

[root@soahost1]# ls /u01/oracle/products

[root@soahost1]# chown oracle:oinstall /u01/oracle/products

-

The NFS volume is mounted in the mount point:

[root@soahost1]# mount -t nfs nasfiler:VOL1/oracle/products/ /u01/oracle/products/

-

To make this persistent, add the mount to the /etc/fstab file on each host.

-

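Now the content from the backup is copied to the mount. The trailing /. on the source copies the contents of the backup folder rather than the folder itself, so that the files are not nested under an extra products_backup directory:

[root@soahost1]# cp -a /backups/products_backup/. /u01/oracle/products/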
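The checks that surround the mount can be scripted. The following is a minimal sketch, not part of any Oracle tooling: the function names mount_point_is_empty and trees_match are hypothetical, and the caller supplies the paths.

```shell
#!/bin/sh
# Sketch only: helper checks around the local-to-NFS move.
# Function names are hypothetical; paths are passed in by the caller.

# Succeeds only if the directory exists and contains no entries
# (hidden files included), as required for a mount point.
mount_point_is_empty() (
    dir=$1
    [ -d "$dir" ] || { echo "missing: $dir" >&2; exit 1; }
    # ls -A lists every entry except . and ..
    [ -z "$(ls -A "$dir")" ] || { echo "not empty: $dir" >&2; exit 1; }
)

# Succeeds only if two directory trees have identical file contents.
# Note: diff -r compares contents, not ownership or permissions.
trees_match() (
    diff -r "$1" "$2" >/dev/null
)
```

For example, mount_point_is_empty /u01/oracle/products could be run just before the mount, and trees_match /backups/products_backup /u01/oracle/products after the final copy. Because diff -r does not compare ownership or modes, a separate permissions check may still be needed.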

You can use a similar approach to move private WebLogic domain directories to shared storage. This facilitates the replication without requiring a staging location for the managed servers’ domain directory content. Refer to the EDG for details on how to use shared storage for the managed server private configuration directories.

Creating Volume Groups

Once the relevant volumes on shared storage have been allocated according to the EDG recommendations, you need to prepare snapshots and volume groups for replication.

Most storage vendors guarantee consistency when multiple nodes use different storage volumes and all of them must be backed up (or have their contents replicated) at a precise point in time. To achieve this, volumes are grouped in a single logical unit, frequently called a consistency volume group, consistency group, or volume group. Volume groups provide a point-in-time copy of the different volumes used in an enterprise deployment so that a consistent snapshot of all of them is propagated to the secondary.

Imagine a situation where a database module deployment resides in volume A while the application that uses it resides in volume B. Without consistency groups, a new version of the WAR for the application could be replicated while the data source configuration it requires is not, causing a consistency problem in the secondary. To avoid situations like this when using the different volumes suggested in the Oracle Fusion Middleware Enterprise Deployment topology, Oracle recommends the following consistency groups. This example uses the specific case of Oracle Fusion Middleware SOA Suite but can be extrapolated to other Fusion Middleware systems:

-

Create one consistency group with the volumes that contain the domain directories for the Administration Server and Managed Servers as members (DOMAINGROUP in Table 2-8). In Oracle Fusion Middleware 14.1.2, the EDG places the Identity and Truststores required for configuring SSL for the different components (Node Manager, WebLogic Servers) in the same volume as the Administration Server’s domain configuration. If you are placing SSL certificates and stores in a different location, ensure that it is included as part of the DOMAINGROUP consistency group, since the SSL configuration for the WebLogic parts has a dependency on the KEYSTORE_HOME location (refer to the EDG for details on configuring SSL for the different components).

-

Create one consistency group with the volume that contains the runtime artifacts generated by the applications running in the WebLogic Server domain, if any (RUNTIMEGROUP in Table 2-8).

-

Create one consistency group with the volumes that contain the Oracle Homes as members (FMWHOMEGROUP in Table 2-8).

Table 2-8 provides a summary of Oracle recommendations for consistency groups for the Oracle SOA Suite topology as shown in Figure 2-1.

Table 2-8 Consistency Groups for Oracle SOA Suite

| Tier | Group Name | Members | Comments |
|---|---|---|---|
| Application | DOMAINGROUP | | Consistency group for the Administration Server and the Managed Server domain directory |
| Application | RUNTIMEGROUP | | Consistency group for the shared runtime content |
| Application | FMWHOMEGROUP | | Consistency group for the Oracle Homes |

Preparing the Primary Storage for Rsync Replication

Using Peer-to-Peer

When using the rsync replication approach in a peer-to-peer model, preparing the primary involves preparing the rsync scripts for the initial copy to each peer (one per node). It is recommended to offload the copy and the checksum validations from the primary systems. In terms of CPU, memory impact, and number of disk I/O operations, there is no significant difference between the secondary pulling information from the primary and the primary pushing the file system copy to the secondary. However, starting rsync from the secondary has the following additional advantages:

-

Cron job scheduling does not interfere with the primary (the primary does not need to schedule anything and does not need to be modified to start rsync operations).

-

There is better control over hard stops for errors during rsync operations.

-

Logging and analysis of the operations are better offloaded to the secondary.

For these reasons, in the peer-to-peer model, rsync scripts are typically prepared in the secondary and are not considered part of the primary preparation. The only aspect that needs to be accounted for is the appropriate SSH connectivity between the secondary and the primary. Before running the rsync operations, ensure that SSH connectivity is allowed and working between each node in the secondary and its peer in the primary. It is recommended to use an SSH key for the access. Try a simple ssh -i path_to_keyfile primary_wlsnode1_ip_primary from the wlsnode1 peer in the secondary. Repeat the verification for each OHS and WLS node participating in the topology. The scripts and first replications will be executed as part of the secondary setup.
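The per-node SSH verification can be repeated with a small loop. The following is a minimal sketch under stated assumptions: check_peers and DRY_RUN are hypothetical names, the user and host values come from the caller, and BatchMode and ConnectTimeout are standard OpenSSH client options chosen here so that the check fails rather than prompting or hanging.

```shell
#!/bin/sh
# Sketch only: check key-based SSH from a secondary node to its primary peers.
# check_peers and DRY_RUN are hypothetical names, not part of any Oracle script.

# Usage: check_peers KEYFILE USER HOST...
# With DRY_RUN=1 the ssh commands are printed instead of executed.
check_peers() (
    key=$1; user=$2; shift 2
    failed=0
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "ssh -i $key -o BatchMode=yes -o ConnectTimeout=10 $user@$host true"
            continue
        fi
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if ssh -i "$key" -o BatchMode=yes -o ConnectTimeout=10 "$user@$host" true; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    exit "$failed"
)
```

Running it first with DRY_RUN=1 shows the exact commands that would be issued. The function returns a nonzero status if any peer cannot be reached, so it can gate the first replication.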

Using a Staging Location

When using the staging approach, create a central location (ideally on shared storage) to host the staging copy, together with scripts to transfer from the primary nodes to the staging directory and scripts to transfer from the staging directory to the target secondary nodes. When using two staging locations (one in the primary and one in the secondary, to reduce the connectivity requirements across sites), three sets of scripts are required: to copy from the primary nodes to the staging location in the primary, to copy from the staging location in the primary to the staging location in the secondary, and to copy from the staging location in the secondary to the different nodes in the secondary. This approach is also useful as an extension to a backup strategy, because each location has a backup copy that can be distributed to the different nodes. In the node responsible for the staging (typically the Administration Server node in the secondary), prepare one script per node in the primary. Identify each node’s WebLogic domain copy separately, for example, using a structure as follows:

Figure 2-11 Staging Location
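A staging layout of this kind could be created with a short script. The following is a minimal sketch: only the midtier/wls_private_config/wlsnodeN_private_config pattern follows a path that appears in this chapter, while the function name and the other directory names are hypothetical.

```shell
#!/bin/sh
# Sketch only: create one staging subdirectory per kind of content and per node.
# Only midtier/wls_private_config/<node>_private_config follows a path shown
# in this chapter; the other directory names are hypothetical.

# Usage: make_staging_layout BASE_DIR NODE...
make_staging_layout() (
    base=$1; shift
    # Content shared by all mid-tier nodes needs a single copy.
    mkdir -p "$base/midtier/wls_shared_config" \
             "$base/midtier/wls_products" || exit 1
    # Private configuration is kept separately for each node.
    for node in "$@"; do
        mkdir -p "$base/midtier/wls_private_config/${node}_private_config" || exit 1
    done
)
```

For example, make_staging_layout /staging_share_storage wlsnode1 wlsnode2 would create one private configuration directory per WebLogic node under the staging share.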

Before running the rsync operations, ensure SSH connectivity is allowed and working between each node in primary and the staging node in primary and between the staging node in primary and the staging one in secondary (if the two-staging-locations model is used). Try a simple ssh -i path_to_keyfile primary_stagenode_ip_primary from each WebLogic and OHS node in primary. If you are using staging locations both in primary and secondary, check SSH connectivity from the staging orchestrator in secondary also.

For each production site host on which one or more Oracle Fusion Middleware components have been installed, set up rsync to copy the following directories and files to the corresponding locations on the staging host:

-

Oracle Home directory and subdirectories.

-

Oracle Central Inventory directory which includes the Oracle Universal Installer entries for the Oracle Fusion Middleware installations.

-

Shared config folder such as the WebLogic Administration Server domain directory, deployment plans, applications, keystores, and so on. Since this folder is shared by all the mid-tier hosts in the EDG, you do not need a copy for each host. Do not replicate the shared WebLogic domain directory only. In the context of the EDG, there are dependencies in the /u01/oracle/config/applications and /u01/oracle/config/keystores directories that need to be copied with the WebLogic domain configuration.

-

Private config folders such as the WebLogic managed server's domain and local node manager home on the host.

-

Shared runtime folder (if applicable).

-

Oracle Fusion Middleware static HTML pages directory for the Oracle HTTP Server installations on the host (if applicable).

You can use the scripts at GitHub as an example to prepare the primary system for the staging copy. The script rsync_for_WLS.sh is a wrapper that invokes rsync_copy_and_validate.sh. This second script contains the real logic to perform rsync copies with the recommended rsync configuration and executes a thorough validation of files after the copy is completed. If any differences are detected after several validation retries, these are logged so that they can be acted upon.
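The general copy-then-validate pattern can be illustrated as follows. This is a minimal sketch and is not the logic of rsync_copy_and_validate.sh: the function name, the rsync options, and the retry count are assumptions chosen for illustration.

```shell
#!/bin/sh
# Sketch only: copy with rsync, then validate with a checksum dry run and retry.
# This is NOT the logic of rsync_copy_and_validate.sh; the function name, the
# rsync options, and the retry count are assumptions chosen for illustration.

# Usage: copy_and_validate SOURCE DEST [MAX_RETRIES]
# SOURCE may be local or remote (user@host:/path/); keep the trailing slash
# on SOURCE so that its contents, not the directory itself, are copied.
copy_and_validate() (
    src=$1; dest=$2; max=${3:-3}
    attempt=1
    while [ "$attempt" -le "$max" ]; do
        # -a preserves permissions, times, and links; --delete removes files
        # from DEST that no longer exist in SOURCE.
        rsync -a --delete "$src" "$dest" || exit 1
        # -n (dry run) with -c (checksums) and -i (itemize) lists every item
        # that still differs without changing anything.
        diffs=$(rsync -a --delete -n -c -i "$src" "$dest") || exit 1
        if [ -z "$diffs" ]; then
            echo "validated after $attempt attempt(s)"
            exit 0
        fi
        attempt=$((attempt + 1))
    done
    echo "differences remain after $max attempts:" >&2
    echo "$diffs" >&2
    exit 1
)
```

For a remote source accessed with a key file, rsync reads the RSYNC_RSH environment variable, so a value such as ssh -i path_to_keyfile can supply the SSH command without changing the function. Note that --delete removes files from the destination, so the destination path should be verified before the first run.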

By default, the rsync_copy_and_validate.sh uses oracle as the user to perform the copies. If a different user owns the origin folders, customize the property USER in the script.

It also uses the environment variable DEST_FOLDER to determine the target location that will be used to rsync contents. If the variable is not set, the script copies contents to the node executing the script to the exact same directory path used in the source node. While using the staging approach, set the variable to the desired path (for example, for the first WLS node private configuration, /staging_share_storage/midtier/wls_private_config/wlsnode1_private_config).
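When several nodes are staged, a different DEST_FOLDER is needed for each one. The following is a minimal sketch that only prints the commands so that they can be reviewed first. It assumes the three-parameter form of rsync_for_WLS.sh (node IP, path, SSH key file) used in this section. The helper name, the second node, and its IP are hypothetical.

```shell
#!/bin/sh
# Sketch only: print one rsync_for_WLS.sh invocation per primary node, each
# with its own DEST_FOLDER. The helper name and any node beyond wlsnode1 are
# hypothetical; review the printed commands before running them.

STAGING=/staging_share_storage/midtier/wls_private_config
KEY=/home/oracle/keys/SSH_KEY.priv

# Usage: print_staging_copies SOURCE_PATH "NAME=IP"...
print_staging_copies() (
    src=$1; shift
    for entry in "$@"; do
        name=${entry%%=*}   # text before the first "="
        ip=${entry#*=}      # text after the first "="
        echo "DEST_FOLDER=$STAGING/${name}_private_config ./rsync_for_WLS.sh $ip $src $KEY"
    done
)
```

For example, print_staging_copies path_to_private_config wlsnode1=10.1.1.1 wlsnode2=10.1.1.2 prints two command lines, one per node, each targeting its own staging directory.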

-

To rsync any folder from primary nodes, use rsync_for_WLS.sh in the staging node in primary as the user owning the directory in each primary node. rsync_for_WLS.sh needs to be executed with three parameters when using an SSH key file for SSH connections: the IP of the node from which the copy will be pulled, the path to be replicated, and the SSH key file. For example:

./rsync_for_WLS.sh 10.1.1.1 /u01/oracle/config/domains/soaedg/ /home/oracle/keys/SSH_KEY.priv

It can also be executed with only two parameters (the IP of the node from which the copy will be pulled and the path to be replicated). In this case, the SSH connection used by rsync will prompt for the SSH password (password-based SSH needs to be set up before running the script).

./rsync_for_WLS.sh 172.11.2.113 /u01/oracle/config/domains/soaedg/

-