17 Understanding Multi-Data Centers

The Access Manager Multi-Data Center (MDC) topology scales horizontally - within a single data center by clustering multiple nodes, or across multiple data centers. This model provides for load balancing as well as failover capabilities in the case that one of the nodes or data centers goes down. This chapter contains introductory details.

17.1 Introducing the Multi-Data Center

Large organizations using Access Manager typically deploy their applications across multiple data centers to distribute load and to address disaster recovery. Once Single Sign-On (SSO) is configured between the data centers, a Multi-Data Center (MDC) deployment of Access Manager transfers user session details between them transparently.

The scope of a data center comprises protected applications, WebGate agents, Access Manager servers, and other infrastructure entities including identity stores and databases.

Note:

Access Manager supports scenarios where applications are distributed across two or more data centers.

The Multi-Data Center approach supported by Access Manager is a Master-Clone deployment in which the first data center is specified as the Master and one or more Clone data centers mirror it. (Master and Clone data centers can also be referred to as Supplier and Consumer data centers.) During setup of the Multi-Data Center, session adoption policies are configured to determine where a request is sent if the Master data center is down. After setup, you designate how data is replicated from the Master to the Clones: either with the Automated Policy Sync (APS) Replication Service or manually.

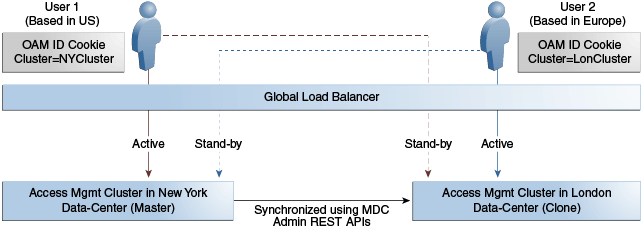

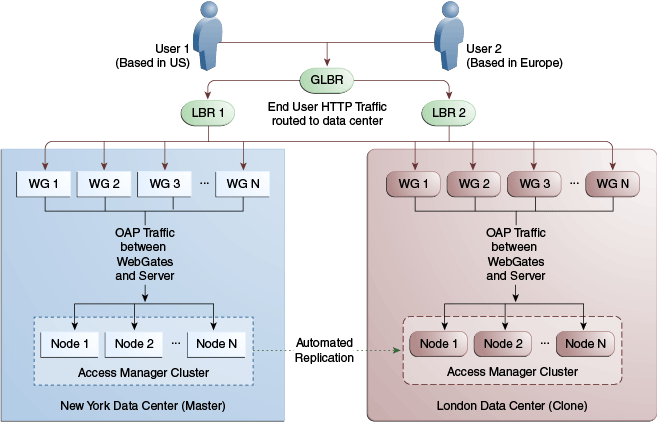

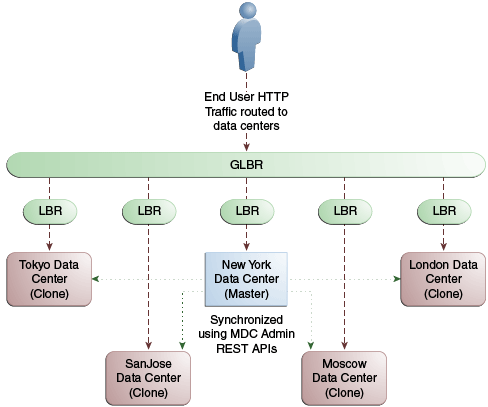

A data center may include applications, data stores, load balancers, and the like. Each data center includes a full Access Manager installation. The WebLogic Server domain in which the instance of Access Manager is installed will not span data centers. Additionally, data centers maintain user to data center affinity. Figure 17-1 illustrates the Multi-Data Center system architecture.

Figure 17-1 Multi-Data Center System Architecture


Note:

Global load balancers are configured to route HTTP traffic to the geographically closest data center. No load balancers are used to manage Oracle Access Protocol traffic.

All applications are protected by WebGate agents configured against Access Manager clusters in the respective local data centers. Every WebGate has a primary server and one or more secondary servers; WebGate agents in each data center have Access Manager server nodes from the same data center in the primary list and nodes from other data centers in the secondary list.

Thus, it is possible for a user request to be routed to a different data center when:

- a local data center goes down
- there is a load spike causing redistribution of traffic
- certain applications are deployed in only one data center
- WebGates are configured to load balance within one data center but failover across data centers
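The primary and secondary server lists described above can be pictured with a minimal sketch. It is illustrative only: `WebGateProfile`, `pick_server`, and the host names are hypothetical and are not part of any Access Manager API. It assumes a WebGate rotates across healthy primary nodes and uses secondary nodes only when no primary node is available.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class WebGateProfile:
    """Hypothetical model of a WebGate's server lists (not an Access Manager API)."""
    primary: list[str]                                   # nodes in the local data center
    secondary: list[str] = field(default_factory=list)   # nodes in other data centers


def pick_server(profile: WebGateProfile, healthy: set[str], request_no: int) -> Optional[str]:
    """Rotate over healthy primary nodes; use secondary nodes only if no primary is up."""
    for pool in (profile.primary, profile.secondary):
        candidates = [node for node in pool if node in healthy]
        if candidates:
            return candidates[request_no % len(candidates)]
    return None


# Assumed example: a New York WebGate with one London node as its failover target.
ny_webgate = WebGateProfile(
    primary=["oam1.ny.example.com", "oam2.ny.example.com"],
    secondary=["oam1.ldn.example.com"],
)
```

With both New York nodes healthy, requests alternate between them. If neither is healthy, the same call returns the London node, which is the cross-data-center failover case.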


17.1.1 Understanding Cookies for Multi-Data Center

SSO cookies (OAM_ID, OAMAuthn, and OAM_GITO) are enhanced and used by the Multi-Data Centers.


17.1.1.1 OAM_ID Cookie

The OAM_ID cookie is the SSO cookie for Access Manager and holds the attributes required to enable the MDC behavior across all data centers.

If a subsequent request from a user in the same SSO session is routed to a different data center in the MDC topology, session adoption is triggered per the configured session adoption policies. 'Session adoption' refers to the action of a data center creating a local user session based on the submission of a valid authentication cookie (OAM_ID) that indicates a session for the user exists in another data center in the topology. (It may or may not involve re-authentication of the user.)

When a user session is created in a data center, the OAM_ID cookie will be augmented or updated with the following:

- clusterid of the data center
- sessionid
- latest_visited_clusterid

In MDC deployments, OAM_ID is a host-scoped cookie. Its domain parameter is set to a virtual host name which is a singleton across data centers and is mapped by the global load balancer to the Access Manager servers in the Access Manager data center based on the load balancer level user traffic routing rules (for example, based on geographical affinity). The OAM_ID cookie is not accessible to applications other than the Access Manager servers.
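As a rough illustration of the attributes listed above, the following sketch models them as a small record. The class and function names are hypothetical, the actual cookie format is not described in this chapter, and setting `latest_visited_clusterid` to the creating data center is an assumption made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OamIdAttributes:
    """Hypothetical view of the MDC attributes carried in OAM_ID."""
    clusterid: str                  # data center that holds the session
    sessionid: str                  # session identifier in that data center
    latest_visited_clusterid: str   # data center that last served the user


def on_session_created(clusterid: str, sessionid: str) -> OamIdAttributes:
    """Values written to the cookie when a data center creates a session (assumed behavior)."""
    return OamIdAttributes(clusterid, sessionid, latest_visited_clusterid=clusterid)


def adoption_needed(cookie: OamIdAttributes, local_clusterid: str) -> bool:
    """A request landing in a data center other than the one named in the cookie triggers adoption."""
    return cookie.clusterid != local_clusterid
```

For a cookie created in one data center, `adoption_needed` is false there and true in any other data center, which is the point at which the session adoption policies apply.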

17.1.1.2 OAMAuthn WebGate Cookie

OAMAuthn is the WebGate cookie for 11g and 12c. On successful authentication and authorization, a user will be granted access to a protected resource. At that point, the browser will have a valid WebGate cookie with the clusterid:sessionid of the authenticating data center.

If authentication followed by authorization spans across multiple data centers, the data center authorizing the user request will trigger session adoption by retrieving the session's originating clusterid from the WebGate cookie. (WebGates need to have the same host name in each data center due to host scoping of the WebGate cookies.) After adopting the session, a new session will be created in the current data center with the synced session details.

Note:

The WebGate cookie cannot be updated during authorization hence the newly created sessionid cannot be persisted for future authorization references. In this case, the remote sessionid and the local sessionids are linked through session indexing. During a subsequent authorization call to a data center, a new session will be created when:

- MDC is enabled
- a session matching the sessionid in the WebGate cookie is not present in the local data center
- there is no session with the Session Index that matches the sessionid in the WebGate cookie
- a valid session exists in the remote data center (based on the MDC SessionSync Policy)

In these instances, a new session is created in the local data center with a Session Index that refers to the sessionid in the WebGate cookie.
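The four conditions in the note above combine as a simple conjunction. The sketch below is only an illustration of that logic; the function and parameter names are hypothetical, and the remote session check is reduced to a boolean that the caller supplies.

```python
def should_create_indexed_session(
    mdc_enabled: bool,
    cookie_sessionid: str,
    local_session_ids: set[str],
    local_session_indexes: set[str],
    remote_session_valid: bool,
) -> bool:
    """Return True when a new local session, indexed by the cookie's sessionid, should be created."""
    return (
        mdc_enabled
        and cookie_sessionid not in local_session_ids        # no local session with that ID
        and cookie_sessionid not in local_session_indexes    # no local session indexed by that ID
        and remote_session_valid                             # remote data center still has a valid session
    )
```

If any one condition fails, for example because a local session already carries the incoming sessionid as its Session Index, no new session is created.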

17.1.1.3 OAM_GITO (Global Inactivity Time Out) Cookie

OAM_GITO is a domain cookie set as an authorization response.

The session details of the authentication process will be recorded in the OAM_ID cookie. If the authorization hops to a different data center, session adoption will occur by creating a new session in the data center servicing the authorization request and setting the session index of the new session as the incoming sessionid. Since subsequent authentication requests will only be aware of the clusterid:sessionid mapping available in the OAM_ID cookie, a session hop to a different data center for authorization will go unnoticed during the authentication request. To address this gap, an OAM_GITO cookie (which also facilitates time out tracking across WebGate agents) is introduced.

During authorization, the OAM_GITO cookie is set as a domain cookie. For subsequent authentication requests, the contents of the OAM_GITO cookie will be read to determine the latest session information and the inactivity or idle time out values. The OAM_GITO cookie contains the following data.

- Data Center Identifier
- Session Identifier
- User Identifier
- Last Access Time
- Token Creation Time

Note:

For the OAM_GITO cookie, all WebGates and Access Manager servers should share a common domain hierarchy. For example, if the server domain is .us.example.com then all WebGates must have (at least) .example.com as a common domain hierarchy; this enables the OAM_GITO cookie to be set with the .example.com domain.
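One way to check the common domain hierarchy requirement is to compute the longest domain suffix shared by all server and WebGate host names. The helper below is a hypothetical sketch, not an Access Manager utility. It compares labels only and does not consult the public suffix list, so multi-label suffixes such as .co.uk are not treated specially.

```python
from typing import Optional


def common_cookie_domain(hosts: list[str]) -> Optional[str]:
    """Return the longest shared domain suffix as a cookie domain, or None if there is none."""
    if not hosts:
        return None
    # Compare labels from the right: "a.us.example.com" -> ["com", "example", "us", "a"].
    reversed_labels = [host.lower().strip(".").split(".")[::-1] for host in hosts]
    shared = []
    for labels in zip(*reversed_labels):
        if len(set(labels)) != 1:
            break
        shared.append(labels[0])
    # Require at least two labels so that a bare top-level domain is never returned.
    if len(shared) < 2:
        return None
    return "." + ".".join(reversed(shared))
```

For hosts under .us.example.com, .uk.example.com, and .example.com the helper yields `.example.com`, the domain the note above says the OAM_GITO cookie would be set with. Hosts with no shared hierarchy yield `None`, which corresponds to the case where OAM_GITO cannot be set.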

17.1.2 Session Adoption During Authorization

Multi-Data Center session adoption is supported during the authorization flow. After successful authentication, the OAMAuthn cookie will be augmented with the cluster ID details of the data center where authentication has taken place.

During authorization, if the request is routed to a different data center, Access Manager runtime checks for a valid remote session and determines if it is a Multi-Data Center scenario. When a valid remote session is located, the Multi-Data Center session adoption process is triggered per the session adoption policy.

The session adoption policy can be configured so that the clone Access Manager cluster would make a back-end request for session details from the master Access Manager cluster using the Oracle Access Protocol (OAP). The session adoption policy can also be configured to invalidate the previous session so the user has a session only in one data center at a given time. Following the session adoption process, a new session will be created in the data center servicing the authorization request.

Note:

Since OAMAuthn cookie updates are not supported during authorization, the newly created session's Session Index will be set to that of the incoming Session ID.

17.1.3 Session Indexing

The Session Index refers to the session identifier in the OAMAuthn cookie.

A new session with a Session Index is created in the local data center during an authorization call to a data center. It occurs when the following conditions are met:

- A session matching the Session ID in the OAMAuthn cookie is not present in the local data center.
- MDC is enabled.
- No session exists with a Session Index matching the Session ID in the OAMAuthn cookie.
- A valid session exists in the remote data center based on the MDC Session Sync Policy.
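The link between a remote Session ID and a local session can be pictured as a second lookup key. The store below is a hypothetical in-memory sketch (real Access Manager session storage is not modeled): a session can be found either by its own ID or by the Session Index that was set to the incoming Session ID.

```python
from typing import Optional


class LocalSessionStore:
    """Hypothetical in-memory session store for one data center."""

    def __init__(self) -> None:
        self._by_id: dict[str, dict] = {}
        self._id_by_index: dict[str, str] = {}

    def create(self, sessionid: str, user: str, session_index: Optional[str] = None) -> None:
        self._by_id[sessionid] = {"sessionid": sessionid, "user": user}
        if session_index is not None:
            # Link the remote Session ID carried in the cookie to the new local session.
            self._id_by_index[session_index] = sessionid

    def find(self, cookie_sessionid: str) -> Optional[dict]:
        """Look up by local Session ID first, then by Session Index."""
        if cookie_sessionid in self._by_id:
            return self._by_id[cookie_sessionid]
        local_id = self._id_by_index.get(cookie_sessionid)
        return self._by_id.get(local_id) if local_id else None


# Assumed example: London adopts a session whose cookie still carries the New York ID "ny-7".
ldc = LocalSessionStore()
ldc.create("ldc-42", "alice", session_index="ny-7")
```

Because the cookie keeps presenting `ny-7`, later authorization calls in London resolve to `ldc-42` through the index rather than creating another session.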

17.1.4 Supported Multi-Data Center Topologies

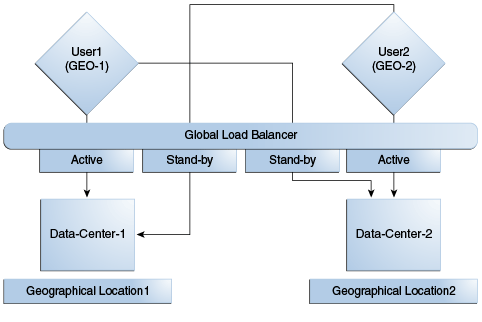

Access Manager supports three Multi-Data Center topologies: Active-Active, Active-Passive, and Active-Hot Standby.

The following sections contain details on these modes.

17.1.4.1 The MDC Active-Active Mode

An Active-Active topology is when Master and Clone data centers are exact replicas and active at the same time.

They cater to different sets of users based on defined criteria; geography, for example. A load balancer routes traffic to the appropriate Data Center. Figure 17-2 illustrates a Multi-Data Center set up in Active-Active mode during normal operations.

In Figure 17-2, the New York Data Center is designated as the Master and all policy and configuration changes are restricted to it. The London Data Center is designated as a Clone and uses REST APIs to periodically synchronize data with the New York Data Center. The global load balancer is configured to route users in different geographical locations (US and Europe) to the appropriate data centers (New York or London) based on proximity to the data center (as opposed to proximity of the application being accessed). For example, all requests from US-based User 1 will be routed to the New York Data Center (NYDC) and all requests from Europe-based User 2 will be routed to the London Data Center (LDC).

Note:

The Access Manager clusters in Figure 17-2 are independent and not part of the same Oracle WebLogic domain. WebLogic domains are not recommended to span across data centers.

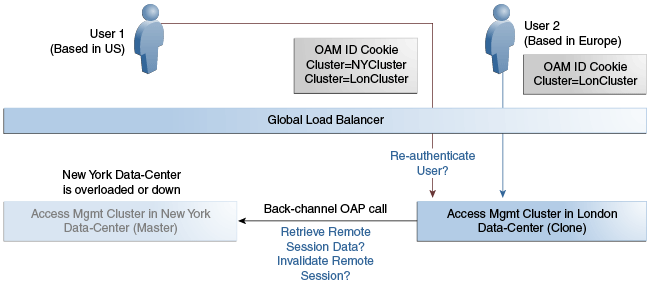

In this example, if NYDC was overloaded with requests, the global load balancer would start routing User 1 requests to the Clone Access Manager cluster in LDC. The Clone Access Manager cluster uses the OAM_ID cookie of the user to check for a valid session in the Master cluster. If there is a valid session in the Master cluster, a new session is created without prompting for authentication or re-authentication.

Further, the session adoption policy can be configured such that the Clone Access Manager cluster would make a back-end request for session details from the Master Access Manager cluster using the Oracle Access Protocol (OAP). The session adoption policy can also be configured to invalidate the remote session (the session in NYDC) so the user has a session only in one data center at a given time.

Figure 17-3 illustrates how a user might be rerouted if the Master cluster is overloaded or down. When the Master Access Manager cluster goes completely down, the clone Access Manager cluster tries to obtain the session details of User 1. Since the Master Access Manager cluster is completely inaccessible, User 1 is forced to re-authenticate and establish a new session in the Clone Access Manager cluster. In this case, any information stored in the previous session is lost.

Note:

An Active-Active topology with agent failover is when an agent has Access Manager servers in one data center configured as primary and Access Manager servers in the other data centers configured as secondary to aid failover scenarios.

17.1.4.2 The MDC Active-Passive Mode

An Active-Passive topology is one in which the primary data center is operational but the clone data center is passive: its services are not started.

In this use case, the passive data center does not have to be available immediately, but it must be possible to bring it up within a reasonable amount of time if the primary data center fails. There is no need to do an MDC setup, although policy data will be kept in sync.

17.1.4.3 The MDC Active-Hot Standby Mode

Active-Hot Standby is when one of the data centers is in hot standby mode. In this case, traffic is not routed to the Hot Standby data center unless the active data center goes down.

In this use case, you do not need additional data centers for traffic on a daily basis but only keep one ready. Follow the Active-Active mode steps to deploy in Active-Hot Standby Mode but do not route traffic to the center defined as Hot Standby. The Hot Standby center will continue to sync data but will only be used when traffic is directed there by the load balancer or by an administrator.

17.2 Multi-Data Center Deployments

In a Multi-Data Center deployment, each data center will include a full Access Manager installation; WebLogic Server domains will not span the data centers.

Global load balancers maintain users to data center affinity although a user request may be routed to a different data center when:

- the data center goes down.
- a load spike causes redistribution of traffic.
- each data center is not a mirror of the other. (For example, certain applications may only be deployed in a single data center)
- WebGates are configured to load balance within the data center and failover across data centers.

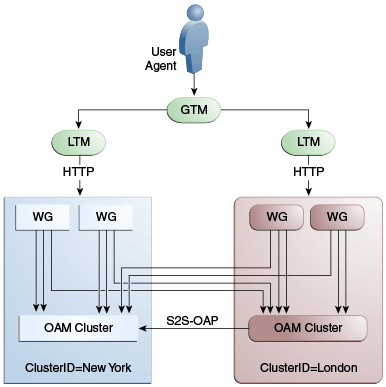

Figure 17-4 illustrates a basic Multi-Data Center deployment.

The following sections describe several deployment scenarios:

- Session Adoption Without Re-authentication, Session Invalidation or Session Data Retrieval
- Session Adoption Without Re-authentication But With Session Invalidation and Session Data Retrieval
- Authentication and Authorization Requests Served By Different Data Centers

Note:

The OAP connection used for back channel communication does not support load balancing or failover so a load balancer needs to be used.

17.2.1 Session Adoption Without Re-authentication, Session Invalidation or Session Data Retrieval

The following scenario illustrates the flow when the Session Adoption Policy is configured without re-authentication, remote session invalidation and remote session data retrieval.

It is assumed the user has affinity with DC1.

- User is authenticated by DC1.

  On successful authentication, the OAM_ID cookie is augmented with a unique data center identifier referencing DC1 and the user can access applications protected by Access Manager in DC1.

- Upon accessing an application deployed in DC2, the user is routed to DC2 by a global load balancer.

- Access Manager in DC2 is presented with the augmented OAM_ID cookie issued by DC1.

  On successful validation, Access Manager in DC2 knows that this user has been routed from the remote DC1.

- Access Manager in DC2 looks up the Session Adoption Policy.

  The Session Adoption Policy is configured without reauthentication, remote session invalidation or remote session data retrieval.

- Access Manager in DC2 creates a local user session using the information present in the DC1 OAM_ID cookie (lifetime, user) and re-initializes the static session information ($user responses).

- Access Manager in DC2 updates the OAM_ID cookie with its data center identifier.

  Data center chaining is also recorded in the OAM_ID cookie.

- User then accesses an application protected by Access Manager in DC1 and is routed back to DC1 by the global load balancer.

- Access Manager in DC1 is presented with the OAM_ID cookie issued by itself and updated by DC2.

  On successful validation, Access Manager in DC1 knows that this user has sessions in both DC1 and DC2.

- Access Manager in DC1 attempts to locate the session referenced in the OAM_ID cookie.

  - If found, the session in DC1 is updated.
  - If not found, Access Manager in DC1 looks up the Session Adoption Policy (also) configured without reauthentication, remote session invalidation and remote session data retrieval.

- Access Manager in DC1 updates the OAM_ID cookie with its data center identifier and records data center chaining as previously in DC2.
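The flow above can be traced with a small simulation. It is a sketch under stated assumptions, not Access Manager code: the `DataCenter` class is hypothetical, the OAM_ID cookie is represented as a plain dictionary, and data center chaining is modeled as a mapping from data center identifier to the session held there.

```python
from dataclasses import dataclass, field


@dataclass
class DataCenter:
    """Hypothetical stand-in for the Access Manager cluster in one data center."""
    name: str
    sessions: dict[str, str] = field(default_factory=dict)  # sessionid -> user

    def _new_session(self, user: str) -> str:
        sid = f"{self.name}-{len(self.sessions) + 1}"
        self.sessions[sid] = user
        return sid

    def authenticate(self, user: str) -> dict:
        sid = self._new_session(user)
        return {"user": user, "clusterid": self.name, "chain": {self.name: sid}}

    def serve(self, cookie: dict) -> dict:
        """Handle a request that carries the cookie, adopting the session if needed."""
        sid = cookie["chain"].get(self.name)
        if sid not in self.sessions:
            # Adoption without re-authentication, invalidation, or data retrieval:
            # the local session is built from the cookie contents alone.
            sid = self._new_session(cookie["user"])
        chain = {**cookie["chain"], self.name: sid}
        return {"user": cookie["user"], "clusterid": self.name, "chain": chain}


dc1, dc2 = DataCenter("DC1"), DataCenter("DC2")
cookie = dc1.authenticate("alice")   # user is authenticated by DC1
cookie = dc2.serve(cookie)           # routed to DC2: a local session is adopted
cookie = dc1.serve(cookie)           # routed back to DC1: the existing session is found
```

After the round trip each data center holds exactly one session for the user, and the cookie names DC1 as the current data center while still recording the DC2 session in its chain.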

17.2.2 Session Adoption Without Re-authentication But With Session Invalidation and Session Data Retrieval

The following scenario illustrates the flow when the Session Adoption Policy is configured without re-authentication but with remote session invalidation and remote session data retrieval.

It is assumed the user has affinity with DC1.

- User is authenticated by DC1.

  On successful authentication, the OAM_ID cookie is augmented with a unique data center identifier referencing DC1.

- Upon accessing an application deployed in DC2, the user is routed to DC2 by a global load balancer.

- Access Manager in DC2 is presented with the augmented OAM_ID cookie issued by DC1.

  On successful validation, Access Manager in DC2 knows that this user has been routed from the remote DC1.

- Access Manager in DC2 looks up the Session Adoption Policy.

  The Session Adoption Policy is configured without reauthentication but with remote session invalidation and remote session data retrieval.

- Access Manager in DC2 makes a back-channel (OAP) call (containing the session identifier) to Access Manager in DC1 to retrieve session data.

  The session on DC1 is terminated following data retrieval. If this step fails due to a bad session reference, a local session is created. See Session Adoption Without Re-authentication, Session Invalidation or Session Data Retrieval.

- Access Manager in DC2 creates a local user session using the information present in the OAM_ID cookie (lifetime, user) and re-initializes the static session information ($user responses).

- Access Manager in DC2 rewrites the OAM_ID cookie with its own data center identifier.

- The user then accesses an application protected by Access Manager in DC1 and is routed to DC1 by the global load balancer.

- Access Manager in DC1 is presented with the OAM_ID cookie issued by DC2.

  On successful validation, Access Manager in DC1 knows that this user has sessions in DC2.

- Access Manager in DC1 makes a back-channel (OAP) call (containing the session identifier) to Access Manager in DC2 to retrieve session data.

  If the session is found, a session is created using the retrieved data. If it is not found, the OAM Server in DC1 creates a new session. The session on DC2 is terminated following data retrieval.
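A comparable sketch for this scenario adds the two behaviors that distinguish it: retrieval of remote session data and termination of the remote session. The class and method names are hypothetical, and `retrieve_and_terminate` merely stands in for the back-channel OAP call, whose actual interface is not described in this chapter.

```python
import itertools
from typing import Optional


class DataCenter:
    """Hypothetical stand-in for the Access Manager cluster in one data center."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.sessions: dict[str, dict] = {}   # sessionid -> session data
        self._ids = itertools.count(1)

    def _create(self, data: dict) -> dict:
        sid = f"{self.name}-{next(self._ids)}"
        self.sessions[sid] = data
        return {"user": data["user"], "clusterid": self.name, "sessionid": sid}

    def authenticate(self, user: str, **attrs) -> dict:
        return self._create({"user": user, **attrs})

    def retrieve_and_terminate(self, sessionid: str) -> Optional[dict]:
        """Stands in for the back-channel call: hand over session data, then end the session."""
        return self.sessions.pop(sessionid, None)

    def adopt(self, cookie: dict, remote: "DataCenter") -> dict:
        data = remote.retrieve_and_terminate(cookie["sessionid"])
        if data is None:
            # Bad session reference: fall back to what the cookie itself carries.
            data = {"user": cookie["user"]}
        return self._create(dict(data))


dc1, dc2 = DataCenter("DC1"), DataCenter("DC2")
cookie = dc1.authenticate("alice", department="sales")
cookie = dc2.adopt(cookie, remote=dc1)   # user is routed to DC2
```

After adoption, DC1 no longer holds a session and the DC2 session carries the retrieved data, so the user has a session in only one data center at a time.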

17.2.3 Session Adoption Without Re-authentication and Session Invalidation But With On-demand Session Data Retrieval

Multi-Data Center also supports session adoption without re-authentication in which the non-local sessions are not terminated and the local session is created using session data retrieved from the remote data center.

Note that the OAM_ID cookie is updated to include an attribute that indicates which data center is currently being accessed.

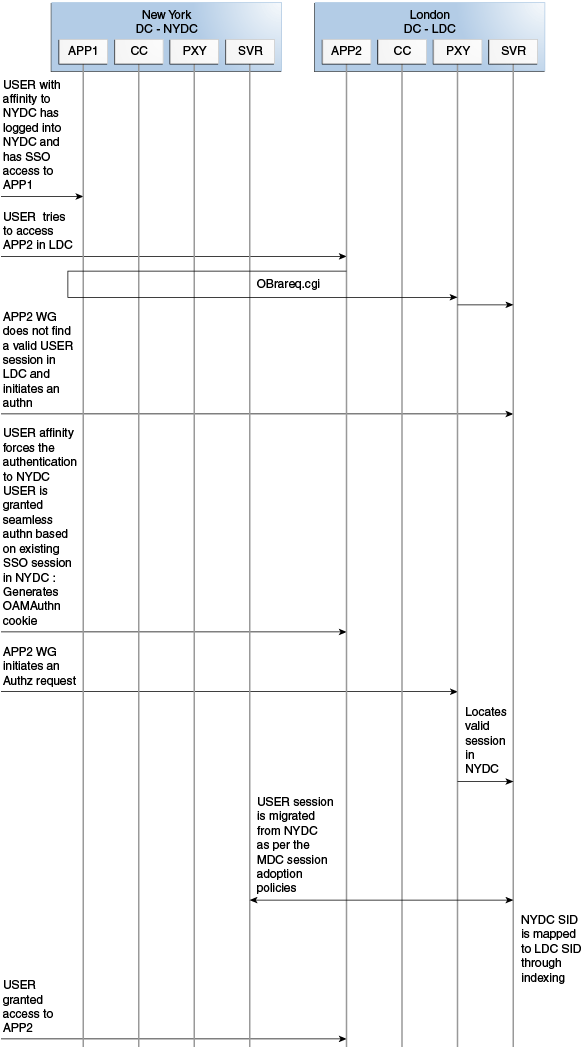

17.2.4 Authentication and Authorization Requests Served By Different Data Centers

Consider a scenario where an authentication request is served by the New York Data Center (NYDC) but the authorization request is presented to the London Data Center (LDC) because of user affinity.

If Remote Session Termination is enabled, the scenario requires a combination of the OAM_ID cookie, the OAMAuthn/ObSSO authorization cookie, and the OAM_GITO cookie to perform seamless Multi-Data Center operations. The following flow (and Figure 17-5 after it) illustrates this. It is assumed that the user has affinity with NYDC.

- Upon accessing APP1, a user is authenticated by NYDC.

  On successful authentication, the OAM_ID cookie is augmented with a unique data center identifier referencing NYDC. The subsequent authorization call will be served by the primary server for the accessed resource, NYDC. Authorization generates the authorization cookie with the NYDC identifier (cluster-id) in it and the user is granted access to APP1.

- User attempts to access APP2 in LDC.

- The WebGate for APP2 finds no valid session in LDC and initiates authentication.

  Due to user affinity, the authentication request is routed to NYDC where seamless authentication occurs. The OAMAuthn cookie contents are generated and shared with the APP2 WebGate.

- The APP2 WebGate forwards the subsequent authorization request to APP2's primary server, LDC, with the authorization cookie previously generated.

  During authorization, LDC will determine that this is a Multi-Data Center scenario and a valid session is present in NYDC. In this case, authorization is accomplished by syncing the remote session as per the configured session adoption policies.

- A new session is created in LDC during authorization and the incoming session id is set as the new session's index.

  Subsequent authorization calls are honored as long as the session search by index returns a valid session in LDC. Each authorization will update the GITO cookie with the cluster-id, session-id and access time. The GITO cookie will be re-written as an authorization response each time.

If a subsequent authentication request from the same user hits NYDC, it will use the information in the OAM_ID and GITO cookies to determine which Data Center has the most current session for the user. The Multi-Data Center flows are triggered seamlessly based on the configured Session Adoption policies.

Figure 17-5 Requests Served By Different Data Centers


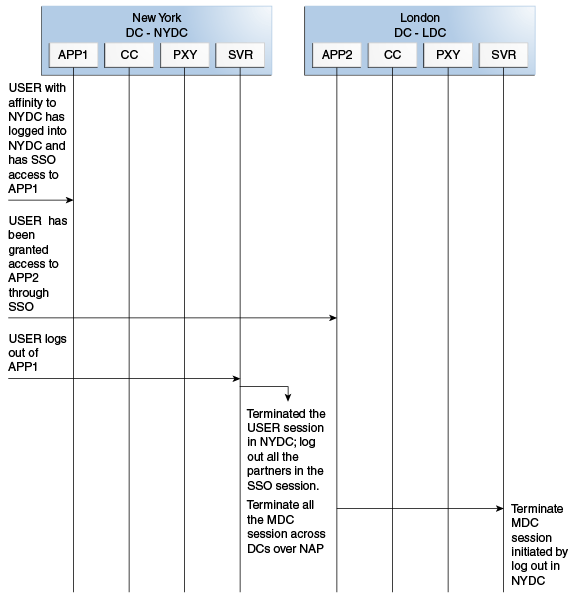

17.2.5 Logout and Session Invalidation

In Multi-Data Center scenarios, logout ensures that all server side sessions across data centers and all authentication cookies are cleared out. For session invalidation, termination of a session artifact over the back-channel will not remove the session cookie and state information maintained in the WebGates.

However, the lack of a server session will result in an Authorization failure which will result in re-authentication. In the case of no session invalidation, the logout clears all server side sessions that are part of the current SSO session across Data Centers. This flow (and Figure 17-6 following it) illustrates logout. It is assumed that the user has affinity with NYDC.

- User with affinity to NYDC gets access to APP1 after successful authentication with NYDC.

- User attempts to access APP2.

  At this point there is a user session in NYDC as well as LDC as part of SSO.

- User logs out from APP1.

  Due to affinity, the logout request will reach NYDC.

- The NYDC server terminates the user's SSO session and logs out from all the SSO partners.

- The NYDC server sends an OAP terminate session request to all relevant Data Centers associated with the SSO session - including LDC.

  This results in clearing all user sessions associated with the SSO across Data Centers.
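The logout fan-out described above can be sketched as follows. The function and data structures are hypothetical: `chain` maps each data center identifier to the session that belongs to the current SSO session, and discarding an entry from a remote store stands in for the OAP terminate session request.

```python
def global_logout(local_dc: str, chain: dict[str, str], stores: dict[str, set[str]]) -> list[str]:
    """End the local session, then ask every other data center in the SSO session to end its own.

    Returns the data centers that were sent a (simulated) terminate request.
    """
    stores[local_dc].discard(chain.get(local_dc))
    notified = []
    for clusterid, sessionid in chain.items():
        if clusterid != local_dc:
            stores[clusterid].discard(sessionid)   # simulated terminate session request
            notified.append(clusterid)
    return notified


# Assumed example: NYDC also holds an unrelated session ("ny-2") that must survive the logout.
stores = {"NYDC": {"ny-1", "ny-2"}, "LDC": {"ldn-9"}}
notified = global_logout("NYDC", {"NYDC": "ny-1", "LDC": "ldn-9"}, stores)
```

Only the sessions that are part of the user's SSO session are removed. Sessions belonging to other users or other SSO sessions in the same data centers are left in place.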

Figure 17-6 Logout and Session Invalidation


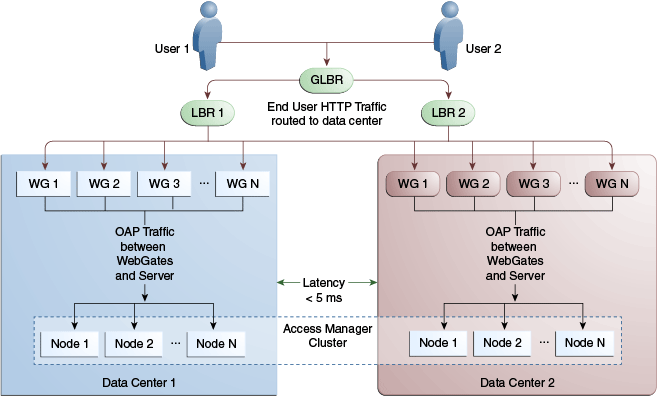

17.2.6 Stretch Cluster Deployments

For data centers that are geographically very close and have a guaranteed latency of less than 5 milliseconds, customers can choose a Stretch Cluster deployment.

In this case, unlike the traditional multi data center deployment described in the preceding sections, a single OAM cluster is stretched across multiple data centers; there are some OAM nodes in one data center and the remaining nodes in another data center. Though the deployment is spread across two data centers, Access Manager treats this as a single cluster. The policy database would reside in one of the data centers. The following limitations apply to a Stretch Cluster Deployment.

- Access Manager depends on the underlying WebLogic and Coherence layers to keep the nodes in sync. The latency between data centers must be less than 5 milliseconds at all times. Any spike in the latency may cause instability and unpredictable behavior.
- The cross data center chatter at runtime in a Stretch Cluster deployment is relatively more than that in the traditional multi data center deployment. In case of the latter, the runtime communication between data centers is restricted to use-cases where a session has to be adopted across data centers.
- Since it is a single cluster across data centers, it does not offer the same level of reliability/availability as a traditional multi data center deployment. The policy database can become a single point of failure. In a traditional multi data center deployment, each data center is self-sufficient and operates independent of the other data center which provides far better reliability.

Figure 17-7 illustrates a Stretch Cluster deployment, and Figure 17-8 illustrates a traditional MDC deployment. Oracle recommends a traditional multi data center deployment over a Stretch Cluster deployment.

17.3 Active-Active Multi-Data Center Topology Deployment

An Active-Active topology is when Master and Clone data Centers are exact replicas of each other (including applications, data stores and the like).

They are active at the same time and cater to different sets of users based on defined criteria - geography, for example. A load balancer routes traffic to the appropriate Data Center. Identical Access Manager clusters are deployed in both locales with New York designated as the Master and London as the Clone.

Note:

An Active-Active topology with agent failover is when an agent has Access Manager servers in one data center configured as primary and Access Manager servers in the other data centers configured as secondary to aid failover scenarios.

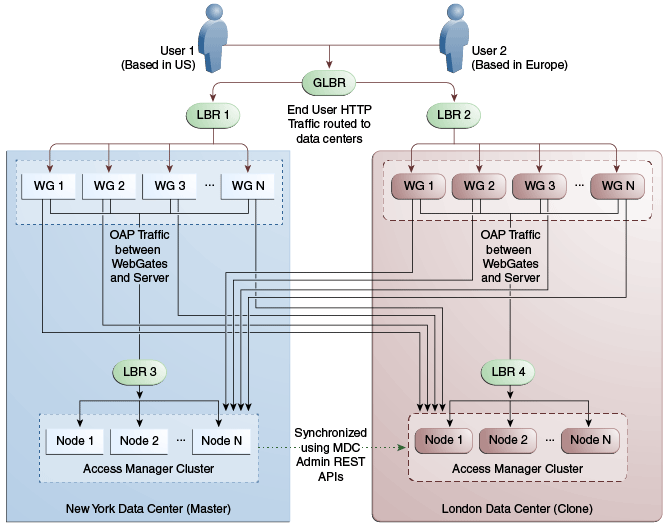

Figure 17-9 illustrates the topology for a Multi-Data Center deployment in Active-Active mode. The New York Data Center is designated as the Master and all policy and configuration changes are restricted to it. The London Data Center is designated as a Clone and periodically uses MDC admin REST APIs to synchronize data with the New York Data Center. The global load balancer is configured to route users in different geographical locations (US and Europe) to the appropriate data centers (New York or London) based on proximity to the data center (as opposed to proximity of the application being accessed). For example, all requests from US-based User 1 will be routed to the New York Data Center (NYDC) and all requests from Europe-based User 2 will be routed to the London Data Center (LDC).

The Global Load Balancer is configured for session stickiness so once a user has been assigned to a particular data center, all subsequent requests from that user would be routed to the same data center. In this example, User 1 will always be routed to the New York Data Center and User 2 to the London Data Center.
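Proximity-based routing with stickiness can be sketched in a few lines. The class below is hypothetical and is not a real load balancer API. It assumes that a user stays with the assigned data center for as long as that data center is available and is reassigned otherwise.

```python
from typing import Optional


class GlobalLoadBalancer:
    """Hypothetical sticky, proximity-based router."""

    def __init__(self, nearest: dict[str, str]) -> None:
        self.nearest = nearest                # region -> closest data center
        self.assigned: dict[str, str] = {}    # user -> data center (stickiness)

    def route(self, user: str, region: str, available: set[str]) -> Optional[str]:
        dc = self.assigned.get(user)
        if dc not in available:
            # First request, or the assigned data center is unavailable: choose again.
            preferred = self.nearest[region]
            dc = preferred if preferred in available else min(available, default=None)
        if dc is not None:
            self.assigned[user] = dc
        return dc


glb = GlobalLoadBalancer({"US": "NYDC", "Europe": "LDC"})
```

A US-based user is first routed to NYDC and keeps being routed there on later requests. If NYDC becomes unavailable, the same user is reassigned to LDC, which is the point at which the session adoption policies come into play.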

User requests in the respective data centers are intercepted by different WebGates depending on the application being accessed. Each WebGate has the various nodes of the Access Manager cluster within the same data center configured as its primary servers. In this case, the WebGates load balance and fail over within the local data center.

Note:

Administrators have the flexibility to configure the primary servers for every WebGate in different orders based on load characteristics. Running monitoring scripts in each data center will detect if any of the Access Manager components – the WebGates or the servers – are unresponsive so administrators can reconfigure the load balancers to direct user traffic to a different data center.

Any number of Clone data centers can be configured to distribute the load across the globe. The only condition is that all Clone data centers are synchronized from a single Master using MDC admin REST APIs. Figure 17-10 below depicts an Active-Active Multi-Data Center deployment across five data centers.

Figure 17-10 Active-Active Topology Across Multiple Data Centers


17.4 Load Balancing Between Access Management Components

Load balancers can also be placed between Access Manager components so that the components address each other through virtual host names. For example, instead of configuring the primary servers in each WebGate in the NYDC as ssonode1.ny.acme.com, ssonode2.ny.acme.com and so on, they can all point to a single virtual host name like sso.ny.acme.com, and the load balancer will resolve that name and direct them to the various nodes of the cluster. However, when introducing a load balancer between Access Manager components, there are a few constraints to keep in mind.

- OAP connections are persistent and need to be kept open for a configurable duration even while idle.
- The WebGates need to be configured to recycle their connections proactively prior to the Load Balancer terminating the connections, unless the Load Balancer is capable of sending TCP resets to both the WebGate and the server, ensuring clean connection cleanup.
- The Load Balancer should distribute the OAP connections uniformly across the active Access Manager Servers for each WebGate (distributing the OAP connections according to the source IP), otherwise a load imbalance may occur.
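Two of the constraints above lend themselves to a quick sanity check. The helpers below are hypothetical sketches, not load balancer or WebGate settings: the first maps a source IP to a server with a hash so that connections spread roughly evenly when there are many source addresses, and the second checks that a WebGate would recycle a connection before the load balancer terminates it. Both parameter names for the timing values are assumptions.

```python
import hashlib


def server_for_source_ip(source_ip: str, servers: list[str]) -> str:
    """Pick a server deterministically from the source IP (hash-based distribution)."""
    digest = hashlib.sha256(source_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def recycle_is_safe(webgate_recycle_after_s: int, lb_terminates_after_s: int) -> bool:
    """True when the WebGate recycles its connections before the load balancer drops them."""
    return webgate_recycle_after_s < lb_terminates_after_s


# Assumed example host names for the nodes behind the virtual host name.
oam_servers = ["ssonode1.ny.acme.com", "ssonode2.ny.acme.com"]
```

The same source IP always maps to the same node, so an established OAP connection is not bounced between servers, while different WebGate addresses are spread across the cluster.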

Figure 17-11 illustrates a variation of the deployment topology with local load balancers (LBR 3 and LBR 4) front ending the clusters in each data center. These local load balancers can be Oracle HTTP Servers (OHS) with mod_wl_ohs. The OAP traffic still flows between the WebGates and the Access Manager clusters within the data center but the load balancers perform the DNS routing to facilitate the use of virtual host names.

See Also Monitoring the Health of an Access Manager Server

Figure 17-11 Load Balancing Access Manager Components


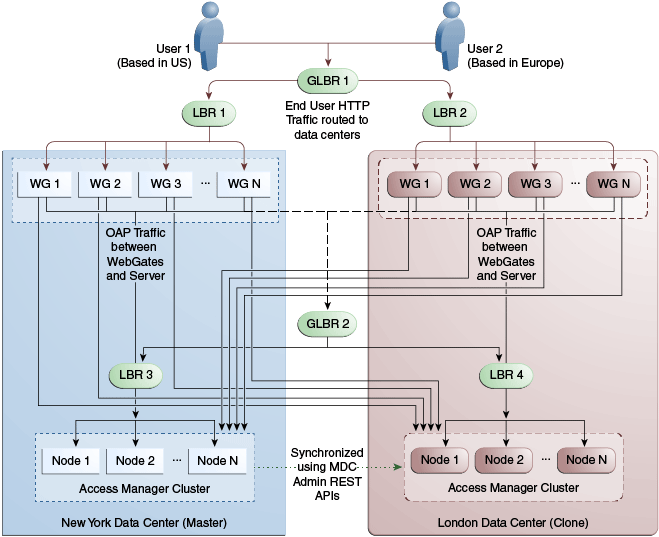

Figure 17-12 illustrates a second variation of the deployment topology with the introduction of a global load balancer (GLBR2) to front end local load balancers (LBR3 and LBR4). In this case, the host names can be virtualized not just within the data center but across the data centers. The WebGates in each data center would be configured to load balance locally but fail over remotely. One key benefit of this topology is that it guarantees high availability at all layers of the stack. Even if the entire Access Manager cluster in a data center were to go down, the WebGates in that data center would fail over to the Access Manager cluster in the other data center.

Figure 17-12 Global Load Balancer Front Ends Local Load Balancer


17.5 Understanding Time Outs and Session Syncs

The following sections contain information on how the Multi-Data Center deals with session time outs and syncs.

17.5.1 Maximum Session Constraints

Credential Collector user affinity ensures that maximum session constraints per user are honored.

There is no Multi-Data Center session store to validate the maximum sessions allowed per user.

17.5.2 Multi-Data Center Policy Configurations for Idle Timeout

The OAM_ID and OAM_GITO cookies are used to calculate and enforce idle (inactivity) time outs. The OAM_GITO cookie, though, can be set only if there is a common sub-domain across WebGates. Thus, Multi-Data Center policies should be configured based on whether or not the OAM_GITO cookie is set.

Table 17-1 documents the policy configurations.

Table 17-1 Multi-Data Center Policy Configurations for Idle Timeout

| OAM_GITO Set | Multi-Data Center Policies |
|---|---|
| Yes. Idle time out will be calculated from the latest OAM_GITO cookie. | SessionMustBeAnchoredToDataCenterServicingUser=<true/false> SessionDataRetrievalOnDemand=true Reauthenticate=false SessionDataRetrievalOnDemandMax_retry_attempts=<number> SessionDataRetrievalOnDemandMax_conn_wait_time=<milliseconds> SessionContinuationOnSyncFailure=<true/false> MDCGitoCookieDomain=<sub domain> |
| No. Idle time out will be calculated from the OAM_ID cookie because OAM_GITO is not available. | SessionMustBeAnchoredToDataCenterServicingUser=false SessionDataRetrievalOnDemand=true Reauthenticate=false SessionDataRetrievalOnDemandMax_retry_attempts=<number> SessionDataRetrievalOnDemandMax_conn_wait_time=<milliseconds> SessionContinuationOnSyncFailure=<true/false> #MDCGitoCookieDomain= (this setting should be commented out or removed) |
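The two rows of Table 17-1 can be expressed as a small helper that assembles the policy settings depending on whether a common cookie domain is available. This is a sketch only: the function name is hypothetical, and the retry count, wait time, and domain value in the usage lines are arbitrary placeholders, not recommended values.

```python
from typing import Dict, Optional


def idle_timeout_policies(gito_cookie_domain: Optional[str],
                          anchored: bool,
                          retry_attempts: int,
                          conn_wait_ms: int,
                          continue_on_sync_failure: bool) -> Dict[str, str]:
    """Build the MDC policy settings for one row of Table 17-1."""
    def flag(value: bool) -> str:
        return "true" if value else "false"

    has_gito = bool(gito_cookie_domain)
    policies = {
        # Without OAM_GITO, the table fixes this setting to false.
        "SessionMustBeAnchoredToDataCenterServicingUser": flag(anchored and has_gito),
        "SessionDataRetrievalOnDemand": "true",
        "Reauthenticate": "false",
        "SessionDataRetrievalOnDemandMax_retry_attempts": str(retry_attempts),
        "SessionDataRetrievalOnDemandMax_conn_wait_time": str(conn_wait_ms),
        "SessionContinuationOnSyncFailure": flag(continue_on_sync_failure),
    }
    if has_gito:
        # Only set the cookie domain when a common sub-domain exists.
        policies["MDCGitoCookieDomain"] = gito_cookie_domain
    return policies


# Placeholder values for illustration only.
for name, value in idle_timeout_policies(None, True, 3, 1000, False).items():
    print(f"{name}={value}")
```

When no domain is passed, the MDCGitoCookieDomain entry is omitted entirely, which corresponds to removing the setting from the properties file.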

17.5.3 Expiring Multi-Data Center Sessions

Session expiration will be managed by the data center with which the user has affinity.

Users have affinity to a particular data center based on the global traffic manager or load balancer.

17.5.4 Session Synchronization and Multi-Data Center Fail Over

When a valid session corresponding to the user's request exists in a remote data center, the remote session attributes are migrated and synced to the data center servicing the current request, based on the MDC session synchronization policies. If the synchronization fails, the user can still access the requested resource because the MDC supports WebGate failover across data centers.

Access Manager server side sessions are created and maintained based on Single Sign-On (SSO) credentials. The attributes stored in the session include (but are not limited to) the user identifier, an identity store reference, subject, custom attributes, partner data, client IP address and authentication level. SSO will be granted if the server can locate a valid session corresponding to the user's request.

In a Multi-Data Center scenario, when a user request hops across data centers, the data center servicing the request should check for a valid session both locally and across data centers. If a valid session for a given request exists in a remote data center, the remote session needs to be migrated to the current data center based on the MDC session synchronization policies. (See Multi-Data Center Deployments.) During this session synchronization, all session attributes from the remote session are synced to the newly created session in the data center servicing the current request.

The Multi-Data Center also supports WebGate failover across data centers. When a WebGate fails over from one data center to a second, the session data cannot be synchronized because the first data center servers are down. Thus, the second data center will decide whether or not to proceed with the session adoption based on the setting configured for SessionContinuationOnSyncFailure. When true, even if the OAP communication to the remote data center fails, the data center servicing the current request can proceed to create a new session locally based on the mandatory attributes available in the cookie. This provides seamless access to the requested resource despite the synchronization failure.

Table 17-2 summarizes prominent session synchronization and failover scenarios. The parameters in this table are explained in greater detail in Table D-2.

Table 17-2 Session Synchronization and Failover Scenarios

| MDC Deployment | MDC Policy | Validate Remote Session | Session Synchronized in DC Servicing User From Remote DC | Terminate Remote Session | User Challenged |
|---|---|---|---|---|---|
| Active-Active | SessionMustBeAnchoredToDataCenterServicingUser=true SessionDataRetrievalOnDemand=true Reauthenticate=false SessionDataRetrievalOnDemandMax_retry_attempts=<number> SessionDataRetrievalOnDemandMax_conn_wait_time=<milliseconds> SessionContinuationOnSyncFailure=false MDCGitoCookieDomain=<sub domain> | Yes | Yes | Yes | When a valid session could not be located in a remote data center |
| Active-Active | SessionMustBeAnchoredToDataCenterServicingUser=false SessionDataRetrievalOnDemand=true Reauthenticate=false SessionDataRetrievalOnDemandMax_retry_attempts=<number> SessionDataRetrievalOnDemandMax_conn_wait_time=<milliseconds> SessionContinuationOnSyncFailure=false MDCGitoCookieDomain=<sub domain> | Yes | Yes | No | When a valid session could not be located in a remote data center |
| Active-Standby | SessionMustBeAnchoredToDataCenterServicingUser=true SessionDataRetrievalOnDemand=true Reauthenticate=false SessionDataRetrievalOnDemandMax_retry_attempts=<number> SessionDataRetrievalOnDemandMax_conn_wait_time=<milliseconds> SessionContinuationOnSyncFailure=false MDCGitoCookieDomain=<sub domain> | Could not validate as the remote data center is down | No, since the remote data center is down | Could not terminate as the remote data center is down | Yes |
| Active-Standby | SessionMustBeAnchoredToDataCenterServicingUser=true SessionDataRetrievalOnDemand=true Reauthenticate=false SessionDataRetrievalOnDemandMax_retry_attempts=<number> SessionDataRetrievalOnDemandMax_conn_wait_time=<milliseconds> SessionContinuationOnSyncFailure=true MDCGitoCookieDomain=<sub domain> | Could not validate as the remote data center is down | No, since the remote data center is down | Could not terminate as the remote data center is down | No. Provides seamless access by creating a local session from the details available in the valid cookie. |
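The scenarios in Table 17-2 can be read as a decision procedure. The sketch below encodes the four rows under stated assumptions: the Outcome type, the function name, and the parameter names are hypothetical, and challenging the user when the cookie is not valid is an assumption of this example rather than something the table states.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    synced_from_remote: bool
    remote_terminated: bool
    user_challenged: bool


def adopt_session(remote_dc_reachable: bool,
                  remote_session_valid: bool,
                  anchored: bool,
                  continue_on_sync_failure: bool,
                  cookie_valid: bool) -> Outcome:
    """Decide how the data center servicing the request handles the session."""
    if remote_dc_reachable:
        if remote_session_valid:
            # Active-Active rows: sync the session; terminate the remote copy
            # only when SessionMustBeAnchoredToDataCenterServicingUser=true.
            return Outcome(True, anchored, False)
        # No valid remote session could be located: challenge the user.
        return Outcome(False, False, True)
    # Active-Standby rows: the remote data center is down, so nothing can be
    # validated, synced, or terminated there.
    if continue_on_sync_failure and cookie_valid:
        # A local session is created from the details in the valid cookie.
        return Outcome(False, False, False)
    return Outcome(False, False, True)


# Fourth row of the table: remote down, SessionContinuationOnSyncFailure=true.
print(adopt_session(False, False, True, True, True))
```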

17.6 Replicating a Multi-Data Center Environment

Data in the Multi-Data Center environment must be replicated from the Master (supplier) to the Clones (consumers) as part of the initial setup procedure.

After this initial replication, the following artifacts must be synced across data centers on a regular basis:

- WebGate Profiles: While the WebGate profile is replicated to the Clone, the primary server list and logout URL details are updated with information about the Clone data center.

- Authentication Modules

- OAM Proxy Configurations

- Session Manager configurations

- Policy and partner data

17.6.1 Replicating Data Using the WLST

Initial replication of data (when setting up the Multi-Data Center) must be done manually using the WLST.

Following this initial replication, WLST commands or the Automated Policy Sync Replication Service can be used to sync the already replicated data. When using the WLST, partner profiles and policies are exported from the Master data center and then imported to the Clone data center. Replicating data in a Multi-Data Center environment is required, and using the WLST is the minimum means of accomplishing it.

17.6.2 Syncing Data Using Automated Policy Synchronization

Automated Policy Synchronization (APS, also referred to as the Replication Service) is a set of REST APIs used to automatically replicate data from the Master data center to Clone data centers.

It can be configured to keep Access Manager data synchronized across multiple data centers. A valid replication agreement between the data centers must be present before APS can run. See Understanding the Multi-Data Center Synchronization.

Note:

APS is designed only to keep data centers in sync; it is not used to do a complete replication from scratch. You will first need to replicate data manually using the WLST to establish a baseline.

17.7 Multi-Data Center Recommendations

This section contains recommendations regarding the Multi-Data Center functionality.

17.7.1 Using a Common Domain

It is recommended that WebGates be domain-scoped in such a manner that a common domain can be inferred across all WebGates and the OAM Server Credential Collectors. This allows WebGates to set an encrypted GITO cookie to be shared with the OAM Server.

For example, if WebGates are configured on applications.abc.com and the OAM Server Credential Collectors on server.abc.com, abc.com is the common domain used to set the GITO cookie. In scenarios where a common domain cannot be inferred, setting the GITO cookie is not practical as a given data center may not be aware of the latest user sessions in another data center. This would result in the data center computing session idle-timeout based on old session data and could result in re-authenticating the user even though a more active session lives elsewhere.
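One way to picture how a common domain is inferred is to compare host names label by label from the right. The following is a simplified sketch with a hypothetical function name; it requires at least two shared labels and does not account for public suffixes such as co.uk, so treat it as an illustration rather than the product's logic.

```python
from typing import Iterable, Optional


def common_domain(hosts: Iterable[str]) -> Optional[str]:
    """Return the longest domain suffix shared by all hosts, or None."""
    # Reverse each host's labels so comparison starts at the top-level domain.
    reversed_labels = [h.lower().rstrip(".").split(".")[::-1] for h in hosts]
    shared = []
    for labels in zip(*reversed_labels):
        if len(set(labels)) != 1:
            break
        shared.append(labels[0])
    # A shared top-level domain alone (for example "com") is not usable.
    if len(shared) < 2:
        return None
    return ".".join(reversed(shared))


print(common_domain(["applications.abc.com", "server.abc.com"]))
print(common_domain(["applications.abc.com", "server.xyz.com"]))
```

The first call corresponds to the example in the text; the second shows a case in which no common domain can be inferred and the GITO cookie cannot be shared.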

Note:

A similar issue occurs during server fail-over when the SessionContinuationOnSyncFailure property is set. The expectation is to retrieve the session from contents of the OAM_ID cookie. Since it's not possible to retrieve the actual inactivity time out value from the GITO cookie, a re-authentication could result.

When there is no common cookie domain across WebGates and OAM servers, make the following configuration changes to address idle time out issues.

- Run the enableMultiDataCentreMode WLST command after removing the MDCGitoCookieDomain property from the input properties file.

- Because a WebGate cookie cannot be refreshed during authorization, set the value of the WebGate cookie validity lower than the value of the session idle time out property. Consider a session idle time out value of 30 minutes and a WebGate cookie validity value of 15 minutes; in this case, every 15 minutes the session will be refreshed in the authenticating data center.
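The relationship between the two time-out values can be checked with a one-line comparison. This is a minimal sketch; the function name is hypothetical, and both values are assumed to be expressed in minutes.

```python
def cookie_validity_ok(idle_timeout_min: float, cookie_validity_min: float) -> bool:
    """Return True when the WebGate cookie validity is below the idle time out."""
    if idle_timeout_min <= 0 or cookie_validity_min <= 0:
        raise ValueError("both values must be positive")
    # The cookie must expire before the session idles out so that the session
    # is refreshed in the authenticating data center in time.
    return cookie_validity_min < idle_timeout_min


print(cookie_validity_ok(30, 15))  # the example values from the text
print(cookie_validity_ok(30, 45))  # cookie outlives the idle time out
```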

17.7.2 Concerning the DCC and the OAM_GITO

The OAM_GITO cookie is not applicable when DCC is used.

In this case, configure the following:

- The MDCGitoCookieDomain setting should be commented out (#MDCGitoCookieDomain=).

- The SessionMustBeAnchoredToDataCenterServicingUser parameter must be set to false.

- The WebGate cookie expiration interval should be set as documented in Using a Common Domain.

17.7.3 Using an External Load Balancer

Note:

Failover between primary and secondary OAM servers is supported in the current release of 11g SDK APIs.

17.7.4 Honoring Maximum Sessions

17.7.5 WebGate Cookie Cannot be Refreshed During Authorization

Set the value of the WebGate cookie validity lower than the value of the session idle time out property as a WebGate cookie cannot be refreshed during authorization.

Consider a session idle time out value of 30 minutes and a WebGate cookie validity value of 15 minutes; in this case, every 15 minutes the session will be refreshed in the authenticating data center. It is recommended to set the WebGate cookie expiration to less than 2 minutes.