2 Installing and Configuring Oracle Identity Role Intelligence

Installing and configuring Oracle Identity Role Intelligence involves setting up the configuration files, creating the wallet, installing the Helm chart, and starting the data load process.

This section contains the following topics:

2.1 About OIRI on Kubernetes

OIRI uses Kubernetes as the Container Orchestration System.

OIRI uses Helm as the package manager for Kubernetes, which is used to install and upgrade OIRI on Kubernetes.

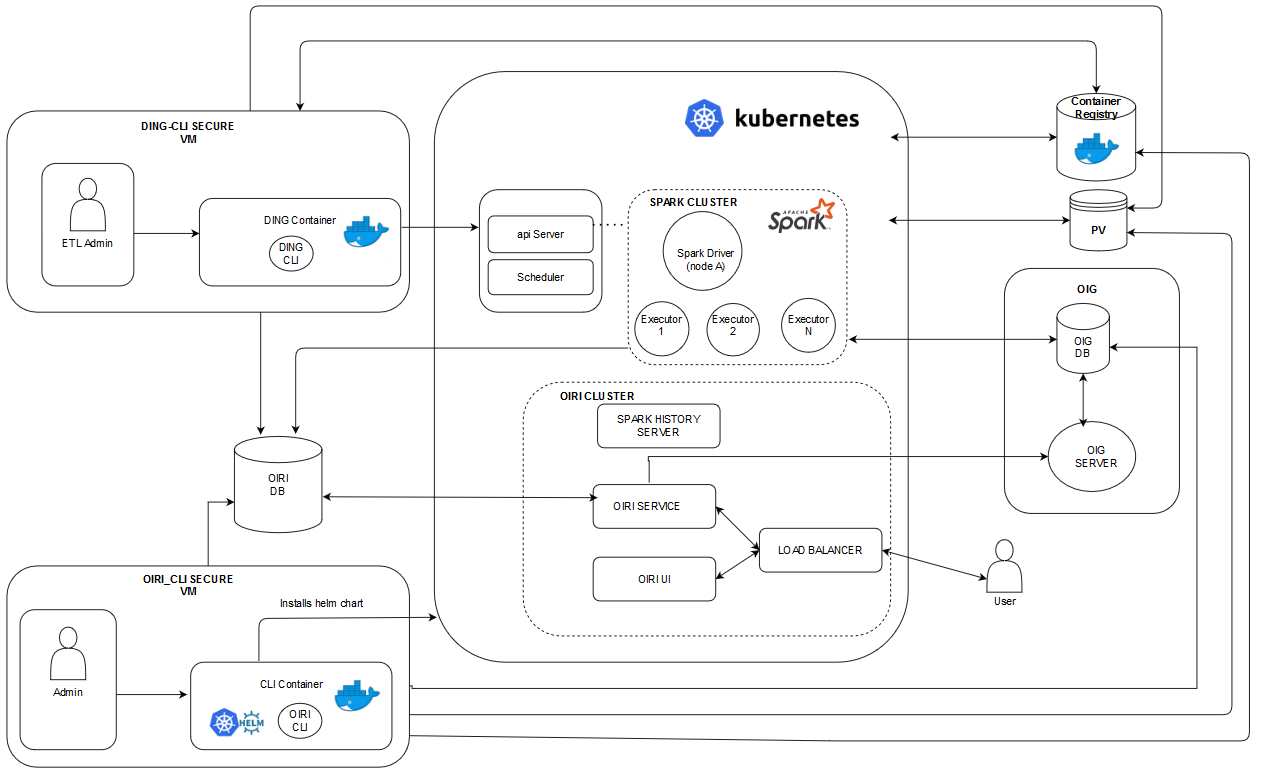

Figure 2-1 shows the deployment architecture of OIRI.

OIRI deployment includes the following components:

- Oracle Identity Governance (OIG): This represents an existing OIG setup. OIG is a prerequisite for setting up OIRI, and acts as the Identity Provider (IDP) for OIRI. As a result, any user logging in to OIRI is authenticated against OIG, which can also be used to load or import data for role mining into OIRI. Access to the OIG database is required to import data into OIRI. Data is imported into OIRI through the Data Ingestion Command Line Interface (ding-cli) component.

- OIRI Command Line Interface (oiri-cli): This component is used to configure and install OIRI. This CLI runs as a pod inside the Kubernetes cluster. All the configuration scripts and the Helm chart exist inside this pod. Command-line utilities, such as kubectl and helm, are also available from inside the container. This CLI is also used to create the wallet and keystore. The wallet is used to securely store the credentials of the OIRI database, OIG database, KeyStore, and OIG service account. The KeyStore contains the Secure Sockets Layer (SSL) and token signing certificates. This VM should also have connectivity to the OIRI and OIG databases.

- OIRI Cluster: The OIRI Service, OIRI UI, Spark History Server, and front-end load balancer are installed as part of the Helm chart installation from the oiri-cli container. Spark History Server is not exposed outside the Kubernetes cluster and can be accessed by using kubectl port-forward. See Installing the OIRI Helm Chart. The OIRI Service has connectivity with OIG to authenticate the user logging in to the OIRI UI. This connectivity is also used to publish the mined roles back to OIG.

- Data Ingestion Command Line Interface (ding-cli): This is a secure VM to be used by the ETL Admin to carry out the data import process. This VM should have connectivity and access to the Kubernetes cluster to trigger the data import jobs. The data import jobs are run inside a Spark cluster. This VM should have connectivity with the OIRI and OIG databases.

- Spark Cluster: This is an ephemeral Spark cluster. When a request for a data import job is triggered from the ding-cli, the Kubernetes scheduler spins up a driver pod and one or more executor pods. When the data import job is completed, the executor pods are terminated, and the driver pod state is changed from Running to Completed. This Spark cluster should have connectivity with the OIRI and OIG databases.

- Persistent Volume (PV): This is a persistent volume mounted on the Network File System (NFS) server. This is used to store all the configuration files and data that need to be persisted, such as logs. All the components should have access to the PV.

- Container Registry: This is the Docker registry, from which the required Docker images are pulled. Optionally, you can also use the .tar files for the images and load the images manually on all the VMs and Kubernetes nodes.
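The driver pod lifecycle described for the Spark cluster can be observed from any host with kubectl access. The following is a minimal sketch and not part of the OIRI tooling. It assumes the default kubectl get pods column layout (NAME, READY, STATUS, RESTARTS, AGE) and that the driver pods carry the spark-role=driver label that Spark on Kubernetes normally applies. The function name and the ding namespace in the usage comment are illustrative.

```shell
# Print the name and status of every pod whose STATUS column is not "Completed".
# Input: lines in the default `kubectl get pods --no-headers` layout on stdin
# (NAME READY STATUS RESTARTS AGE).
unfinished_pods() {
  awk '$3 != "Completed" { print $1 " " $3 }'
}

# Example usage (assumes the namespace is "ding" and that driver pods are
# labelled spark-role=driver):
#   kubectl get pods -n ding -l spark-role=driver --no-headers | unfinished_pods
```

An empty result suggests that every listed data import job has finished. A driver pod that remains in another state is worth inspecting with kubectl logs.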

2.2 Prerequisites for Installing OIRI

The prerequisites for installing OIRI on Kubernetes are:

- Oracle Database 12c Release 2 (12.2.0.1) or later, on-premises or container-based, is installed and running. Oracle Database versions 18.3 and 19.3 are also supported.

  Note:

  If you have upgraded the OIRI database from 12.1.x to 12.2.x, 18c, or 19c, you should update the database parameter compatible to a value of '12.2' or higher. If this is not done, you will see ORA-00972: identifier is too long errors when creating some OIRI database objects.

- Oracle Identity Governance 12c (12.2.1.4.0) is installed and Oracle Identity Governance Bundle Patch 12.2.1.4.210428 is applied.

- Docker version 19.03.11+ and Kubernetes Cluster (v1.17+) with kubectl are installed. See Kubernetes documentation for information about installing a Kubernetes cluster.

- Network File System (NFS) is available. NFS is used to create persistent volumes that are shared across nodes.

- Create a user in Oracle Identity Governance (OIG) to log in to OIRI. See Creating a User in Performing Self Service Tasks with Oracle Identity Governance.

- Authentication configuration is completed to authenticate users from OIG. See Configuring Authentication With Oracle Identity Governance for information about configuring authentication with OIG.

- The Identity Audit feature is enabled in OIG. See Enabling Identity Audit in Performing Self Service Tasks with Oracle Identity Governance for information about enabling Identity Audit in OIG.
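The version prerequisites above (Docker 19.03.11+ and Kubernetes v1.17+) can be checked with a small comparison helper. This is a sketch only: the function name version_ge is hypothetical, and it relies on sort -V (version sort), which GNU coreutils and BusyBox provide but strict POSIX does not.

```shell
# Succeed when version $1 is greater than or equal to version $2.
# Relies on `sort -V`, which orders version strings numerically per component.
version_ge() {
  have=${1#v}   # tolerate a leading "v", as in v1.17.0
  want=${2#v}
  # If the required version sorts first (or both are equal), $1 is new enough.
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}

# Example usage (the Docker format string prints the server version):
#   version_ge "$(docker version --format '{{.Server.Version}}')" 19.03.11 \
#     || echo "Docker is older than 19.03.11"
```

The same helper can be applied to the Kubernetes server version reported by kubectl version.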

2.3 System Requirements and Certification

Ensure that your environment meets the system requirements such as hardware and software, minimum disk space, memory, required system libraries, packages, or patches before performing any installation.

The minimum system requirements for installing OIRI are:

- For installing OIRI on a standalone host:

  - 16 GB of RAM

  - Disk space of 50 GB

  - 2 CPUs

- For installing OIRI on a Kubernetes cluster:

  - Number of nodes: 3

  - 16 GB of RAM per node

  - 2 CPUs per node (with virtualization support, for example Intel VT)

  - Disk space of 150 GB

The certification document covers supported installation types, platforms, operating systems, databases, JDKs, and third-party products:

http://www.oracle.com/technetwork/middleware/ias/downloads/fusion-certification-100350.html

2.4 Configuring Authentication With Oracle Identity Governance

Oracle Identity Governance (OIG) manages the OIRI user and user access to the OIRI application.

To configure authentication to OIRI with OIG:

2.5 Loading the Container Images

The OIRI service comprises four container images:

- oiri: OIRI service

- oiri-cli: OIRI command line interface

- oiri-ding: For data import

- oiri-ui: Identity Role Intelligence user interface

You can load the images by referring to the following:

2.6 Setting Up the Configuration Files

To set up the files required for configuring data import (or data ingestion) and Helm chart:

2.7 Parameters Required for Source Configuration

Table 2-1 lists the parameters required for OIRI database, OIG database, OIG server, and ETL source configurations.

Table 2-1 Source Configuration Parameters

| Parameter | Description | Mandatory | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|---|
| OIG DB Host | Host name of OIG database. This value is required for specifying OIG database as the source for ETL. | No | None | | |
| OIG DB Port | Port number of the OIG database. This value is required for specifying OIG database as the source for ETL. | No | None | | |
| OIG DB Service Name | Service name of the OIG database. This value is required for specifying OIG database as the source for ETL. | No | None | | |
| OIRI DB Host | Host name of the OIRI database. | Yes | None | | |
| OIRI DB Port | Port number of the OIRI database. | Yes | None | | |
| OIRI DB Service | Service name of the OIRI database. If you are using OIRI DBCS setup, then specify the PDB service name. | Yes | None | | |
| OIG DB as Source for ETL | Set this to true to enable OIG database as the source for ETL. | No | true | | |
| Flat File as Source for ETL | Set this to true to enable flat file as the source for ETL. | No | false | | |
| OIG Server URL | The URL of OIG server. If the OIG service is in the same K8s cluster as that of OIRI, this parameter typically takes the format | Yes | None | | |
| OIG Connection Timeout | Connect timeout interval, in milliseconds. | No | 10000 | | |
| OIG Read Timeout | Read timeout interval, in milliseconds. | No | 10000 | | |
| OIG KeepAlive Timeout | KeepAlive timeout is used in keep alive strategy. This strategy will first try to apply the host's Keep-Alive policy stated in the header. If that information is not present in the response header it will keep alive connections for the period of | No | 10 | | |
| OIG Connection Pool Maximum number | The total number of connections in the OIRI database connection pool. | No | 15 | | |
| OIG Connections per route | The maximum number of connections per (any) route. | No | 15 | | |
| OIG Proxy URI | OIG Proxy URI | No | | | |
| OIG Proxy Username | OIG Proxy Username | No | | | |
| OIG Proxy Password | OIG Proxy Password | No | | | |
| Key Store Name | Key Store Name | No | | | |
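The database host, port, and service name in Table 2-1 together identify one database endpoint. As an illustration, the sketch below combines the three values into an Oracle JDBC thin-driver URL of the form jdbc:oracle:thin:@//host:port/service_name. Whether OIRI assembles its connection string in exactly this way is an assumption; the function name and the example values are hypothetical.

```shell
# Build an Oracle JDBC thin-driver URL from host, port, and service name:
#   jdbc:oracle:thin:@//<host>:<port>/<service_name>
oracle_jdbc_url() {
  if [ "$#" -ne 3 ] || [ -z "$1" ] || [ -z "$2" ] || [ -z "$3" ]; then
    echo "usage: oracle_jdbc_url <host> <port> <service_name>" >&2
    return 1
  fi
  printf 'jdbc:oracle:thin:@//%s:%s/%s\n' "$1" "$2" "$3"
}

# Example usage with placeholder values:
#   oracle_jdbc_url oiridb.example.com 1521 oiripdb.example.com
```

A URL built this way can be handy for confirming, with a separate database client, that the values you plan to supply actually reach the intended database.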

2.8 Additional Parameters Required for Data Import

Table 2-2 lists the additional parameters required for configuring data import.

Table 2-2 Additional Parameters for Data Import

| Parameter | Description | Mandatory | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|---|
| Spark Event Logs Enabled | This flag enables the event logs that are used by the Spark history server to show job history. The allowed values for this flag are true/false. If set to false, no event logs are generated and you will not be able to see the job history on Spark history server. | No | true | | |
| Spark Mode | The supported values are | No | local | | |
| Spark K8S Master URL | This must be a URL with the format k8s://<API_SERVER_HOST>:<k8s_API_SERVER_PORT>. You must always specify the port, even if it is the HTTPS port 443. You can find the values of <API_SERVER_HOST> and <k8s_API_SERVER_PORT> in Kube config. | Yes, if the value of the Spark Mode parameter is | None | | |
| Ding Namespace | This is the value of the namespace in which you want to start the Spark driver and executor pods for ETL. | No | ding | | |
| Ding Image | This is the name of the ding image to be used for spinning up the Spark driver and executor pods. This image contains the logic to run ETL. | Yes, if the value of the Spark Mode parameter is | None | | |
| Number of Executors | This is the number of executor instances to be run in the Kubernetes cluster. These executors are terminated as soon as the ETL jobs are completed. | No | 3 | | |
| Image Pull Secret | This is the Kubernetes secret name to pull the ding image from the registry. This is required only when using the Docker images from the container registry. | No | None | | |
| Kubernetes Certificate File Name | This is the name of the Kubernetes certificate file to be used for communicating securely with the Kubernetes API server. | Yes, if the value of the Spark Mode parameter is | None | | |
| Driver Request Cores | This is to specify the CPU request for the driver pod. The values of this parameter conform to the Kubernetes convention. For information about the meaning of CPU, see Meaning of CPU in Kubernetes documentation. Example values can be 0.1, 500m, 1.5, or 5, with the definition of CPU units documented in CPU units of Kubernetes documentation. This takes precedence over | No | 0.5 | | |
| Driver Limit Cores | This is to specify a hard CPU limit for the driver pod. See Resource requests and limits of Pod and Container for information about CPU limit. | No | 1 | | |
| Executor Request Cores | This is to specify the CPU request for each executor pod. Values conform to the Kubernetes convention. Example values can be 0.1, 500m, 1.5, and 5, with the definition of CPU units in Kubernetes documentation. | No | 0.5 | | |
| Executor Limit Cores | This is to specify a hard CPU limit for each executor pod launched for the Spark application. | No | 0.5 | | |
| Driver Memory | This is the amount of memory to use for the driver process where SparkContext is initialized, in the same format as JVM memory strings with a size unit suffix ("k", "m", "g" or "t"), for example, 512m, 2g. | No | 1g | | |
| Executor Memory | This is the amount of memory to use per executor process, in the same format as JVM memory strings with a size unit suffix ("k", "m", "g" or "t"), for example 512m, 2g. | No | 1g | | |
| Driver Memory Overhead | This is the amount of non-heap memory to be allocated per driver process in cluster mode, in MiB unless otherwise specified. This is memory that accounts for VM overheads, interned strings, other native overheads, and so on. This tends to grow with the container size (typically 6 to 10 percent). | No | 256m | | |
| Executor Memory Overhead | This is the amount of additional memory to be allocated per executor process in cluster mode, in MiB unless otherwise specified. This is memory that accounts for VM overheads, interned strings, other native overheads, and so on. This tends to grow with the executor size (typically 6 to 10 percent). | No | 256m | | |
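Two of the formats in Table 2-2 are easy to get wrong: the Spark K8S Master URL, which must always carry a port, and the CPU values, which may be written either as decimals or in millicores. The helpers below are a minimal sketch of both conventions. The function names are hypothetical and the host in the usage comment is a placeholder; neither function is part of the OIRI scripts.

```shell
# Compose the Spark master URL in the k8s://<host>:<port> form.
# The port is mandatory, even when it is the HTTPS port 443.
k8s_master_url() {
  case "$2" in
    ''|*[!0-9]*)
      echo "error: a numeric API server port is required" >&2
      return 1
      ;;
  esac
  printf 'k8s://%s:%s\n' "$1" "$2"
}

# Convert a Kubernetes CPU quantity (for example 0.1, 500m, 1.5, or 5)
# to millicores, so that requests and limits can be compared as integers.
cpu_to_millicores() {
  case "$1" in
    *m) printf '%s\n' "${1%m}" ;;   # already in millicores: strip the suffix
    *)  awk -v cpu="$1" 'BEGIN { printf "%d\n", cpu * 1000 + 0.5 }' ;;
  esac
}

# Example usage:
#   k8s_master_url https://k8s.example.com 6443
#   cpu_to_millicores 0.5
```

With the defaults in the table, the driver request of 0.5 corresponds to 500 millicores and the driver limit of 1 to 1000 millicores. Kubernetes rejects a pod whose CPU request exceeds its limit, so keep each request at or below the matching limit when you change these values.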

2.9 Parameters Required for Authentication Configuration

Table 2-3 lists the parameters required for authentication configuration.

Table 2-3 Authentication Configuration Parameters

| Parameter | Description | Mandatory | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|---|
| Authentication Provider | The authentication provider for authenticating to OIRI. | No | OIG | | |
| Access Token Issuer | The OIG access token issuer. | No | www.example.com | | |
| Cookie Domain | The domain attribute specifies the hosts that are allowed to receive the cookie. If unspecified, it defaults to the same host that set the cookie, excluding subdomains. | No | None | | |
| OIRI Access Token Issuer | The OIRI access token issuer. | No | www.example.com | | |
| Cookie Secure Flag | If you are using non-SSL setup, then set this parameter to false. | No | true | | -csf |
| Cookie Same Site | Whether or not the cookie should be restricted to the same-site context. | No | Strict | | |
| OIRI Access Token Audience | The OIRI access token audience. | No | www.example.com | | |
| OIRI Access Token Expiration Time in minutes | The OIRI access token expiration in minutes. | No | 20 | | |
| OIRI Access Token allowed clock skew | The OIRI access token allowed clock skew. | No | 30 | | |
| Auth Roles | A user with the role specified as the value of this parameter can log in to OIRI. | No | OrclOIRIRoleEngineer | | |
| Idle Session Timeout | The session timeout in minutes if the OIRI application is idle. | No | 15 | | |
| Session Timeout | OIRI session timeout in minutes. | No | 240 | | |
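The Cookie Secure Flag and Cookie Same Site parameters in Table 2-3 correspond to the standard Secure and SameSite attributes of the HTTP Set-Cookie header. The sketch below checks a set of response headers for the default values (Secure present, SameSite=Strict). It is an illustration under assumptions: the function name is hypothetical, and which OIRI endpoints set cookies is not stated in this guide, so the URL in the usage comment is a placeholder.

```shell
# Read HTTP response headers on stdin. Fail (exit status 1) if any
# Set-Cookie header lacks the Secure attribute or SameSite=Strict.
check_cookie_flags() {
  awk '
    tolower($0) ~ /^set-cookie:/ {
      line = tolower($0)
      if (line !~ /;[ ]*secure/ || line !~ /;[ ]*samesite=strict/) {
        print "cookie without expected flags: " $0
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}

# Example usage (placeholder URL):
#   curl -s -I https://<host>:<port>/ | check_cookie_flags
```

If you set Cookie Secure Flag to false for a non-SSL setup, the Secure attribute is expected to be absent and this check would report it.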

2.10 Entity Parameters for Data Import

Table 2-4 lists the user entity parameters that you can update by running the updateDataIngestionConfig.sh command.

Note:

To view all the supported parameters for the updateDataIngestionConfig.sh script, run the following command from the ding-cli pod:

$ ./updateDataIngestionConfig.sh --help

Or:

$ ./updateDataIngestionConfig.sh -h

Table 2-4 User Entity Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the entity is enabled or disabled during data import. | | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Lower Bound | The minimum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Upper Bound | The maximum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |
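The relationship between Lower Bound, Upper Bound, and Number of Partitions is easier to see with numbers. The sketch below prints one WHERE clause predicate per partition, following a simplified version of the scheme that Spark's JDBC data source uses for a numeric partition column. It is not OIRI's own code. The function name and the column name ID are hypothetical, and the stride is computed with plain integer division.

```shell
# Print one WHERE-clause predicate per partition for a numeric column.
# Arguments: <column> <lowerBound> <upperBound> <numPartitions>
partition_predicates() (
  col=$1; lower=$2; upper=$3; parts=$4
  # A single partition reads the whole table, so no predicate is needed.
  [ "$parts" -gt 1 ] || exit 0
  stride=$(( (upper - lower) / parts ))
  i=0
  bound=$lower
  while [ "$i" -lt "$parts" ]; do
    next=$(( bound + stride ))
    if [ "$i" -eq 0 ]; then
      # The first partition also collects NULLs and values below lowerBound.
      echo "$col < $next OR $col IS NULL"
    elif [ "$i" -eq $(( parts - 1 )) ]; then
      # The last partition is open-ended and collects values above upperBound.
      echo "$col >= $bound"
    else
      echo "$col >= $bound AND $col < $next"
    fi
    bound=$next
    i=$(( i + 1 ))
  done
)

# Example usage:
#   partition_predicates ID 0 100 4
```

With a lower bound of 0, an upper bound of 100, and 4 partitions, the stride is 25 and the four predicates cover ID < 25, 25 to 49, 50 to 74, and ID >= 75. In Spark, the bounds only shape the strides and do not filter rows: values outside the bounds still land in the first or last partition, so badly chosen bounds produce skewed partitions rather than missing data.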

Table 2-5 lists the application entity parameters for data import that you can update by running the updateDataIngestionConfig.sh command.

Table 2-5 Application Entity Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the application entity will be enabled or disabled during data import. | | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Lower Bound | The minimum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Upper Bound | The maximum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |

Table 2-6 lists the entitlement entity parameters for data import that you can update by running the updateDataIngestionConfig.sh command.

Table 2-6 Entitlement Entity Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the entity is enabled or disabled during data import. | | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Lower Bound | The minimum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Upper Bound | The maximum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |

Table 2-7 lists the assigned entitlement parameters for data import that you can update by running the updateDataIngestionConfig.sh command.

Table 2-7 Assigned Entitlement Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the entity is enabled or disabled during data import. | TRUE | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Lower Bound | The minimum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Upper Bound | The maximum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |

Table 2-8 lists the role entity parameters for data import that you can update by running the updateDataIngestionConfig.sh command.

Table 2-8 Role Entity Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the entity is enabled or disabled during data import. | | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Lower Bound | The minimum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Upper Bound | The maximum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |

Table 2-9 lists the role hierarchy entity parameters for data import that you can update by running the updateDataIngestionConfig.sh command.

Table 2-9 Role Hierarchy Entity Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the entity is enabled or disabled during data import. | | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |

Table 2-10 lists the role user membership entity parameters for data import that you can update by running the updateDataIngestionConfig.sh command.

Table 2-10 Role User Membership Entity Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the entity is enabled or disabled during data import. | | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Lower Bound | The minimum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Upper Bound | The maximum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |

Table 2-11 lists the role entitlement composition entity parameters for data import that you can update by running the updateDataIngestionConfig.sh command.

Table 2-11 Role Entitlement Composition Entity Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the entity is enabled or disabled during data import. | | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Lower Bound | The minimum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Upper Bound | The maximum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |

Table 2-12 lists the account entity parameters for data import that you can update by running the updateDataIngestionConfig.sh command.

Table 2-12 Account Entity Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Enabled (true/false) | Determines whether the entity is enabled or disabled during data import. | | | |
| Sync Mode (full/incremental) | For Day 0 data import, use full mode. For Day n data import, use incremental mode. In full mode, all the data is loaded in the OIRI database. In incremental mode, only updated data from the source is loaded in the OIRI database. | | | |
| Lower Bound | The minimum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Upper Bound | The maximum value for the partitionColumn parameter that is used to determine partition stride. | | | |
| Number of Partitions | The number of partitions. This, along with lowerBound (inclusive) and upperBound (exclusive), forms the partition strides for the generated WHERE clause expressions that are used to split the partitionColumn evenly. | | | |

2.11 Flat File Parameters for Data Import

Table 2-13 lists the flat file parameters for data import.

Note:

To view all the parameters for the updateDataIngestionConfig.sh script that you can modify, run the following command from the ding-cli pod:

$ ./updateDataIngestionConfig.sh --help

Or:

$ ./updateDataIngestionConfig.sh -h

Table 2-13 Flat File Parameters for Data Import

| Parameter | Description | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|
| Flat File Enabled | Setting this parameter determines whether or not data import will be performed against flat files. The value can be | | --useflatfileforetl | |
| Flat File Format | The format of the flat file, which is CSV. | | | |
| Flat File Data Separator | The data separator in the rows of the flat files, which can be comma (,), colon (:), or vertical bar (\|). | | | |
| Flat File Time Stamp Format | The timestamp format in the flat files. | | | |
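Because the data separator can be a comma, a colon, or a vertical bar, a quick consistency check before a flat-file import can catch rows that were written with the wrong separator. The sketch below compares the field count of every row with that of the first (header) row. The function name and the file name in the usage comment are hypothetical, and the check does not understand quoted fields that contain the separator.

```shell
# Read a flat file on stdin and verify that every row has the same number
# of fields as the first row. Argument: the single-character separator.
check_field_counts() {
  awk -F"$1" '
    NR == 1 { want = NF; next }
    NF != want {
      printf "line %d: expected %d fields, found %d\n", NR, want, NF
      bad = 1
    }
    END { exit (bad ? 1 : 0) }
  '
}

# Example usage (hypothetical file name):
#   check_field_counts '|' < users.csv
```

The function exits with status 0 when all rows agree with the header and prints each mismatching line number otherwise.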

2.12 Helm Chart Configuration Values

Table 2-14 lists the parameters required for setting up the values.yaml file to be used for the Helm chart.

Table 2-14 Helm Chart Configuration Parameters

| Parameter Name | Description | Mandatory | Default Value | Argument | Argument Shorthand |
|---|---|---|---|---|---|
| OIRI Namespace | The name of the Kubernetes namespace on which you want to install OIRI. This namespace contains the installation of OIRI API pods and OIRI UI pods. | No | oiri | | |
| OIRI Replicas | The number of OIRI API pods to be run in the OIRI namespace. | No | 1 | | |
| OIRI API Image | Name of the OIRI API Image. For example: | Yes | None | | |
| OIRI NFS Server | NFS Server to be used for OIRI. This must be available across the Kubernetes nodes. | Yes | None | | |
| OIRI NFS Storage Path | The path on the NFS server that can be accessed by OIRI API and UI Pods, for example, | Yes | None | | |
| OIRI NFS Storage Capacity | The capacity of the NFS Server. See the Kubernetes Resource Model for information about the units expected by capacity, for example, | Yes | None | | |
| OIRI UI Image | Name of the OIRI UI Image. For example: | Yes | None | | |
| OIRI UI Replicas | Number of OIRI UI pods to be run in the OIRI Namespace. | No | 1 | | |
| DING Namespace | Name of the Kubernetes namespace on which you want to install the Spark Kubernetes history server. This namespace contains the installation of Spark history server and Spark cluster, including drivers and executors, for ETL. | No | ding | | |
| Spark History Server Replicas | Number of Spark history server pods to be run in the DING namespace. | No | 1 | | |
| DING NFS Server | NFS server to be used for DING. This must be available across the Kubernetes nodes. | Yes | None | | |
| DING NFS Storage Path | The path on the NFS server that can be accessed by the Spark history server, driver, and executors in the Spark cluster. For example: | Yes | None | | |
| DING NFS Storage Capacity | The capacity of the NFS Server. See the Kubernetes Resource Model for information about the units expected by capacity, for example, | Yes | None | | |
| DING Image | Name of the data ingestion image to be used by the Spark history server, executor, and driver pods. For example: | Yes | None | | |
| Image Pull Secret | Name of the Kubernetes secret to pull the image from the registry. | No | regcred | | |
| Ingress Enabled | Whether ingress is enabled. The default value of this parameter is 'true', which creates an ingress resource and an ingress controller. Setting this value to false prevents creation of an ingress controller. | No | true | | |
| Ingress Class Name | The ingress controller class. The default value of this parameter is 'nginx'. If you want to use your existing ingress controller, then set this parameter to the class name managed by that controller. | No | nginx | | |
| Ingress Host Name | Ingress host name | Yes | None | | |
| Install Service Account Name | Service Account Name that is used to create the Kubernetes configuration when installing OIRI. | No | oiri-service-account | | |
| Nginx-Ingress Type | The type of ingress you want to create to access the OIRI API and OIRI UI. This can be NodePort or LoadBalancer. This release of OIRI supports only the NodePort ingress type. | No | NodePort | | |
| Nginx-Ingress NodePort | The port number of the ingress. Make sure the port provided is available and can be used. | No | 30305 | | |
| Nginx-Ingress SSL enabled | Set this parameter to configure SSL. | Yes | | | |
| Nginx-Ingress TLS secret | This is the TLS secret in the default namespace. This is required when SSL is enabled. This should match with the name you provide while creating a TLS secret using | No (required only if SSL is enabled) | None | | |
| Nginx-Ingress Replica Count | Replica count for nginx controller. | No | 1 | | |
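For the Nginx-Ingress NodePort parameter, one quick sanity check is whether the chosen port falls inside the range that Kubernetes allocates for NodePort services, which is 30000 to 32767 unless the cluster administrator has changed it. The sketch below performs only that range check. The function name is hypothetical, and the check does not detect whether another service already uses the port.

```shell
# Succeed when the port lies in the default Kubernetes NodePort range.
# Clusters whose API server is started with a custom
# --service-node-port-range use a different range.
nodeport_in_default_range() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Example usage:
#   nodeport_in_default_range 30305 && echo "30305 is in the default range"
```

The default value of 30305 passes this check. To see which node ports are already taken, the PORT(S) column of kubectl get svc --all-namespaces lists them.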

2.15 Verifying and Updating the Wallet

2.16 Installing the OIRI Helm Chart

If you want to upgrade the Helm chart after you have updated the values in the values.yaml file, then run the updateValuesYaml.sh script from the oiri-cli container, as described in Upgrade the OIRI Image in Deploy Oracle Identity Role Intelligence on Kubernetes.

If you want to change the data load configuration before running the data load process, then see Importing Entity Data to OIRI Database.

2.17 Uninstalling the OIRI Helm Chart (Optional)

If you encounter an issue while installing the OIRI Helm chart, fix the issue, uninstall the OIRI Helm chart, and then reinstall it. If you do not uninstall the OIRI Helm chart first, the install process fails with errors.

To uninstall the OIRI Helm chart, run the following command:

$ helm uninstall oiri -n <oirinamespace>

The output is:

release "oiri" uninstalled
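When the uninstall step is scripted, it can help to check first whether the release exists, because helm uninstall returns an error for a release that is not installed. The wrapper below is a minimal sketch. The function name is hypothetical, the release name oiri is taken from the command above, and the namespace is passed as an argument.

```shell
# Uninstall the "oiri" release only if Helm reports it in the given namespace.
# Argument: the namespace in which OIRI was installed.
uninstall_oiri_if_present() {
  ns=$1
  if helm status oiri -n "$ns" >/dev/null 2>&1; then
    helm uninstall oiri -n "$ns"
  else
    echo "release \"oiri\" not found in namespace $ns; nothing to uninstall"
  fi
}

# Example usage:
#   uninstall_oiri_if_present <oirinamespace>
```

helm status exits with a nonzero status when the release does not exist, so the wrapper can be run repeatedly before a reinstall without failing.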