4 Active-Active Application Tier with an Active-Active Database Tier

In an active-active application tier topology, two or more active server instances at distributed geographic locations are deployed to handle requests concurrently and thereby improve scalability and provide high availability.

Topics include:

- Active-Active Pair Topology Architecture Description

  This supported MAA architecture consists of an active-active application infrastructure tier with WebLogic domain pairs, used in conjunction with an active-active database tier with Oracle RAC cluster pairs. Both tiers span two sites.

- Active-Active Pair Topology Design Considerations

  Consider Oracle’s best practice design recommendations for an active-active application pair tier topology with an active-active database pair tier.

Active-Active Pair Topology Architecture Description

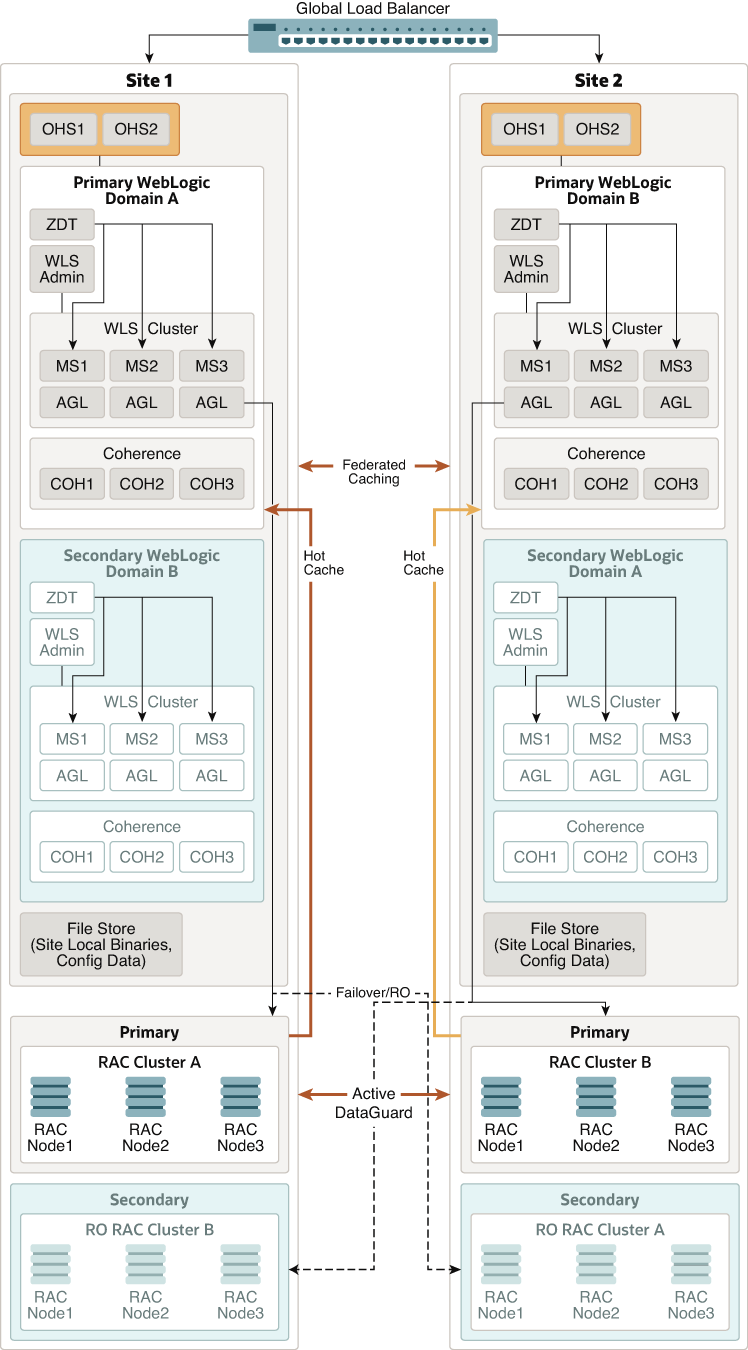

Figure 4-1 shows a recommended solution using an active-active application pair infrastructure tier with an active-active database pair tier.

Figure 4-1 Active-Active Application Pair Tier with an Active-Active Database Pair Tier Architecture Diagram

The key aspects of this sample topology include:

- A global load balancer.

- Two active instances of Oracle HTTP Server (OHS) at each site. OHS can balance requests to the WebLogic Server cluster.

- Two identical domain pairs configured in two different data centers, Site 1 and Site 2. Domains A and B are independent domains and do not have to be configured with a symmetrical topology; however, the domain pair at each site must be symmetrical. Each site contains a domain pair with one active domain and one passive domain. See Active-Active Pair Topology Design Considerations. The domains include:

  - A collection of Managed Servers (MS1, MS2, and MS3) in a WebLogic Server cluster, managed by the WebLogic Server Administration Server in the domain. In this sample, Active GridLink (AGL) connects the Managed Servers to the primary database located in the same site. (Although a generic data source or multi data source can be used, Active GridLink is preferable because it offers high availability and improved performance.)

    The Zero Downtime Patching (ZDT) arrows indicate that the Managed Servers are patched in a rolling fashion. Because both domains are active, you can orchestrate the rollout of updates separately on each site. See WebLogic Server Zero Downtime Patching.

  - A Coherence cluster (COH1, COH2, and COH3) managed by the WebLogic Server Administration Server in the domain. Coherence persistent caching is used to recover cached data if the Coherence cluster fails. See Coherence Persistence and Clusters.

    Read-Through caching or Coherence GoldenGate Hot Cache is used to update the cache from the database. Coherence Hot Cache applies any updates made on the active database to the Coherence cache in real time. See Coherence GoldenGate Hot Cache.

    Coherence Federated Caching replicates data between the active clusters. In this active-active architecture, you can use the full capabilities of this feature, as described in Coherence Federated Caching. Data that is put into one active cluster is replicated to the other active clusters. Applications at different sites have access to a local cluster instance.

- File storage for the configuration data, local binaries, logs, and so on.

- Two separate Oracle RAC database cluster pairs in the two different data centers, Site 1 and Site 2. Each site contains a primary (active) Oracle RAC database cluster and a standby (passive) Oracle RAC database cluster. On Site 1, Cluster A is the active primary cluster and Cluster B is in standby mode. On Site 2, Cluster B is the active primary cluster and Cluster A is in standby mode. The clusters can contain transaction logs, JMS stores, and application data. Data is replicated using Oracle Active Data Guard. (Although Oracle recommends using Oracle RAC database clusters because they provide the best level of high availability, they are not required. A single database or multitenant database can also be used.)
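The database roles in the last item can be summarized as a small lookup table. The following Python sketch is illustrative only and is not part of any Oracle product or API: the `DB_ROLES` layout and the function names `local_primary` and `check_layout` are hypothetical. It records which cluster is primary at each site and checks that every cluster is primary at exactly one site, which is the crossed arrangement described above.

```python
from collections import Counter

# Role of each Oracle RAC cluster at each site, taken from the sample topology.
# The dictionary layout itself is an assumption made for this sketch.
DB_ROLES = {
    "Site 1": {"Cluster A": "primary", "Cluster B": "standby"},
    "Site 2": {"Cluster A": "standby", "Cluster B": "primary"},
}


def local_primary(site: str) -> str:
    """Return the cluster that is primary at the given site."""
    primaries = [c for c, role in DB_ROLES[site].items() if role == "primary"]
    if len(primaries) != 1:
        raise ValueError(f"{site} must have exactly one primary, found {primaries}")
    return primaries[0]


def check_layout() -> None:
    """Raise ValueError unless every cluster is primary at exactly one site."""
    primaries = Counter(local_primary(site) for site in DB_ROLES)
    clusters = {cluster for roles in DB_ROLES.values() for cluster in roles}
    for cluster in sorted(clusters):
        if primaries[cluster] != 1:
            raise ValueError(
                f"{cluster} is primary at {primaries[cluster]} sites, expected 1"
            )


check_layout()
for site in DB_ROLES:
    print(site, "->", local_primary(site))
```

In this model, the Managed Servers at a site would connect to whatever `local_primary` returns for that site, which matches the statement that Active GridLink connects them to the primary database in the same site.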

Active-Active Pair Topology Design Considerations

Consider Oracle’s best practice design recommendations for an active-active application pair tier topology with an active-active database pair tier.

In addition to the design considerations described here, you should also follow the best practices recommended for all supported WebLogic Server and Coherence MAA architectures. See Common Design Considerations for High Availability and Disaster Recovery.

To take full advantage of high availability features in an active-active topology, consider the following:

- Domains A and B are independent domains and do not have to be configured with a symmetrical topology; however, the domain pair at each site must be symmetrical. That is, the domain pair must use the same domain configuration, such as domain and server names, resource names, port numbers, user accounts, load balancers and virtual server names, and the same version of the software. Host names (not static IPs) must be used to specify the listen address of the Managed Servers. You can use any existing replication technology or methods that you currently use to keep the pairs in sync.

- In this topology, network latency is normally high because the sites are connected over a WAN. If applications require session replication between sites, you must choose either database session replication or Coherence*Web. See Session Replication.

- In an active-active topology, server and service migration applies only intra-site (within a site), to Managed Servers in a cluster. See Server and Service Migration.

- JMS is supported only intra-site in this topology. JMS recovery during failover or planned maintenance is not supported across sites.

- Zero Downtime Patching is supported only intra-site (within a site) in an active-active topology. You can upgrade your WebLogic homes, Java, and applications in each site independently. Keep upgrade versions in sync to keep the domains symmetric at both sites.

- You can design applications to minimize data loss during failures by combining different high availability features. For example, you can use a combination of Coherence federated cache, Coherence Hot Cache, or Coherence Read-Through cache.

  If the Coherence data is backed up in the database and a network partition occurs, federated caching cannot replicate data between the sites. Because the Coherence clusters can continue working independently, the data on the two sites becomes inconsistent. Once communication between the sites resumes, the backed-up data is pushed from the database to Coherence through Coherence Hot Cache or Coherence Read-Through cache, and the data in the Coherence cache is eventually synchronized. See Coherence.
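The symmetry requirement in the first consideration above lends itself to a mechanical check. The following Python sketch is a minimal illustration under stated assumptions, not an Oracle tool: each member of a domain pair is described by a plain dictionary, and the key names, the sample values, and the functions `asymmetries` and `ip_literal_listen_addresses` are all hypothetical. In practice the values would have to be collected from the actual domain configuration, which is not shown here.

```python
import ipaddress

# Attributes that must match within a domain pair, per the consideration above.
# The key names are assumptions chosen for this sketch.
SYMMETRIC_KEYS = (
    "domain_name",
    "server_names",
    "resource_names",
    "port_numbers",
    "user_accounts",
    "load_balancers",
    "virtual_server_names",
    "software_version",
)


def asymmetries(domain: dict, peer: dict) -> dict:
    """Return {key: (domain_value, peer_value)} for every attribute that differs."""
    return {
        key: (domain.get(key), peer.get(key))
        for key in SYMMETRIC_KEYS
        if domain.get(key) != peer.get(key)
    }


def ip_literal_listen_addresses(domain: dict) -> list:
    """Return listen addresses given as IP literals instead of host names."""
    flagged = []
    for address in domain.get("listen_addresses", []):
        try:
            ipaddress.ip_address(address)
        except ValueError:
            continue  # not an IP literal, so it is treated as a host name
        flagged.append(address)
    return flagged


# Hypothetical sample data for the two members of one domain pair.
active_copy = {
    "domain_name": "domainA",
    "server_names": ["MS1", "MS2", "MS3"],
    "resource_names": ["AppDataSource"],
    "port_numbers": [7001, 8001],
    "user_accounts": ["weblogic"],
    "load_balancers": ["lb.example.com"],
    "virtual_server_names": ["app.example.com"],
    "software_version": "12.2.1.3.0",
    "listen_addresses": ["ms1.example.com", "ms2.example.com"],
}
passive_copy = dict(
    active_copy,
    software_version="12.2.1.4.0",  # deliberately out of sync
    listen_addresses=["10.0.0.5"],  # deliberately an IP literal
)

print(asymmetries(active_copy, passive_copy))
print(ip_literal_listen_addresses(passive_copy))
```

A check of this kind could run after each configuration change or patch rollout, so that drift between the members of a pair is noticed before a failover depends on them being identical.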