5 Active-Active Stretch Cluster with an Active-Passive Database Tier

In an active-active stretch cluster topology, cluster nodes can span data centers within a proximate geographical range, usually with guaranteed, relatively low-latency networking between the sites.

Topics include:

- Active-Active Stretch Cluster Topology Architecture Description
- Active-Active Stretch Cluster Topology Design Considerations

Active-Active Stretch Cluster Topology Architecture Description

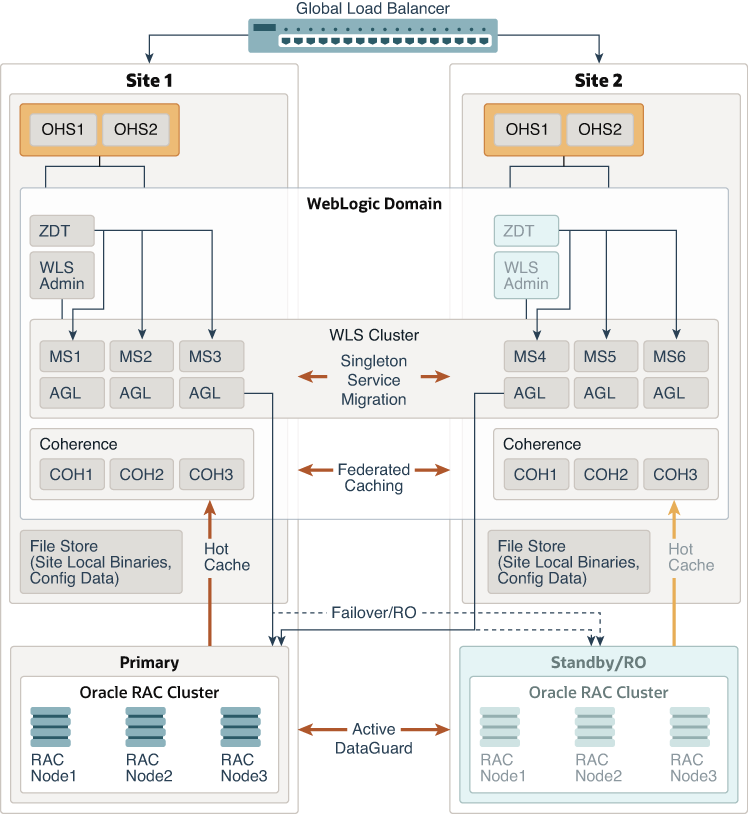

This supported MAA architecture consists of an active-active stretch cluster application infrastructure tier and an active-passive database tier, both of which span two sites. Both sites are part of a single WebLogic Server stretch cluster, and all server instances in the cluster are active. The database tier is active at the first site and on standby at the second.

Figure 5-1 shows a recommended architecture using an active-active stretch cluster application infrastructure tier with an active-passive database tier.

Figure 5-1 Active-Active Stretch Cluster with an Active-Passive Database Tier Architecture Diagram


The key aspects of this topology include:

-

A global load balancer.

-

Two active instances of Oracle HTTP Server (OHS) at each site. OHS can balance requests to the WebLogic Server cluster.

-

WebLogic Server configured as a cluster that stretches across two different data centers, Site 1 and Site 2. All servers in the cluster are active. The cluster can be either dynamic or static. See Clustering.

-

The domain includes:

-

A WebLogic Server cluster that consists of a group of Managed Servers (MS1, MS2, and MS3) at Site 1 and another group of Managed Servers (MS4, MS5, and MS6) at Site 2. The Managed Servers are managed by the WebLogic Server Administration Server at Site 1. In this example, Active GridLink (AGL) is used to connect the Managed Servers to the primary database. (Although a generic data source or multi data source can also be used, Active GridLink is preferable because it offers high availability and improved performance.)

In the diagram, the Zero Downtime Patching (ZDT) arrows represent rolling patching of the Managed Servers. Because all of the servers in the cluster are active, you can use the full capabilities of this feature as described in WebLogic Server Zero Downtime Patching.

Because all of the servers are in the same cluster, you can use WebLogic Server singleton service migration to recover transactions.

-

In whole server migration, a migratable server instance and all of its services are migrated to a different physical machine upon failure. See Whole Server Migration in Administering Clusters for Oracle WebLogic Server.

-

In service migration, if a server fails, its services are moved to a different server instance within the cluster. See Service Migration in Administering Clusters for Oracle WebLogic Server.

-

A Coherence cluster at each site (COH1, COH2, and COH3) managed by the WebLogic Server Administration Server in the domain. Coherence persistent caching is used to recover cached data if the Coherence cluster fails. Read-through caching or Coherence GoldenGate Hot Cache is used to update the cache from the database. In this architecture, you can use the full capabilities of Coherence GoldenGate Hot Cache as described in Coherence GoldenGate Hot Cache.

Coherence Federated Caching replicates cache data asynchronously across the two sites. In this architecture, you can use the full capabilities of this feature as described in Coherence Federated Caching.

-

File storage for the configuration data, local binaries, logs, domain home, Node Manager directories, shared application-specific files, and shared cluster files. However, because this is a stretch cluster, the shared cluster files (TLogs, JMS stores, and leasing tables) must all be stored in the database so that all servers in the cluster, at both sites, can share them.

-

Two separate Oracle RAC database clusters in two different data centers. The primary active Oracle RAC database cluster is at Site 1. Site 2 contains an Oracle RAC database cluster in standby (passive) read-only mode. The clusters can contain transaction logs, JMS stores, and application data. Data is replicated using Oracle Data Guard or Active Data Guard. (Oracle recommends Oracle RAC database clusters because they provide the best level of high availability, but they are not required. A single-instance database or a multitenant database can also be used.)

-

The Oracle WebLogic Server infrastructure synchronizes the Oracle WebLogic Server configuration across all nodes in the same domain. Most of this configuration usually resides under the Administration Server's domain directory. It is propagated automatically to the other nodes in the domain that host Oracle WebLogic Server instances. As a result, the administration overhead of an active-active stretch cluster is lower than that of any active-passive approach, which requires constant replication of WebLogic Server configuration changes.

Active-Active Stretch Cluster Topology Design Considerations

Consider Oracle’s best practice design recommendations in an active-active stretch cluster topology with an active-passive database tier.

In addition to the design considerations described here, you should also follow the best practices recommended for all supported WebLogic Server and Coherence MAA architectures. See Common Design Considerations for High Availability and Disaster Recovery.

To take full advantage of high availability features in an active-active stretch cluster, consider the following:

-

In a multi-data center active-active stretch cluster environment, session replication across data centers can cause serious performance degradation. Oracle recommends defining two replication groups (one for each site) to minimize the likelihood of replication across the two sites.

Note:

Replication groups are a best-effort mechanism for replicating state only to servers in the same site. They are not deterministic. If only one server is available at one site and the other servers are available at the other site, replication occurs across the metropolitan area network (MAN). Replication then continues across sites for that session, even after servers at the same site come back online.

-

A stretch cluster uses an Oracle HTTP Server (OHS) configuration based on a fixed list of servers at each site, instead of the dynamic list provided by the OHS plug-in that is used in typical single-location deployments. Using a fixed list of servers eliminates undesired routing from one site to another. The disadvantage is that OHS reacts more slowly to Oracle WebLogic Server failures.

-

An active-active stretch cluster works only in the metro latency model. Latency between the sites should not exceed 10 milliseconds round-trip time (RTT).

-

For contention and security reasons, Oracle does not recommend using shared storage across sites. Each site uses its own shared storage for JMS and Transaction Logs (TLogs), or the database is used as a persistent store instead.

Whether a database store is more suitable than shared storage depends on how critical the JMS and transaction data is. Shared storage provides a much lower level of protection than the database guarantees. When the JMS stores, TLogs, and leasing tables are in a Data Guard database, cross-site synchronization is simplified. The middle tier also depends less on a shared storage subsystem such as a NAS or a SAN.

However, storing TLogs and JMS messages in the database carries a performance penalty. The penalty increases when servers at one site must communicate with the database at the other site, and it depends on the network latency between the sites.

- Each site uses its own shared storage. If this storage holds runtime data, disk mirroring and replication from Site 1 to Site 2, and in reverse, can provide a recoverable copy of these artifacts at each site.

-

Oracle recommends storing JMS messages and Transaction Logs (TLogs) in database stores. This allows cross-site service migration for the JMS and JTA services, because the TLogs and JMS messages in the database are available to all servers in the cluster. In addition, if a complete site switchover occurs, the underlying Data Guard replication has already replicated the TLogs and JMS messages to the other site.

-

Both sites are managed by a single Administration Server that resides at one of the two sites. A single Oracle WebLogic Server Administration Console is used to configure and monitor servers running at both sites. The WebLogic Server infrastructure copies configuration changes to all the domain directories used in the domain.

-

If an Administration Server fails, the same considerations apply as for an Administration Server failure in a single data center topology.

To address node failures, use the standard failover procedures described in Failing Over or Failing Back Administration Server in High Availability Guide. That is, restart the Administration Server on another node in the same data center, pointing to the shared storage that hosted the Administration Server domain directory. Also, put backup and restore procedures in place to make regular copies of the Administration Server domain directory.

If a failure affects the entire site that hosts the Administration Server (all nodes), you need to restart the server at the other site:

1. Use the existing storage replication technology to make a copy of the Administration Server domain directory available at the failover site.
2. Restore the Administration Server directories (both the domain and applications directories) at the failover site, so that the directory structure is exactly the same as at the original site.
3. Restart Node Manager on the node where the Administration Server is restored.

Most likely, the Administration Server has failed over to a different subnet. That subnet requires a different virtual IP (VIP) address that other nodes can reach. Update host name resolution in this subnet so that this VIP maps to the original virtual host name that the Administration Server used as its listen address in Site 1.

For example, in Site 1, ADMINHOSTVHN1 maps to 10.10.10.1. In Site 2, either the local /etc/hosts file or the DNS server must be updated so that ADMINHOSTVHN1 maps to 20.20.20.1. All servers use ADMINHOSTVHN1 as the address to reach the Administration Server. If the Administration Server is front-ended with an Oracle HTTP Server and a load balancer, clients are unaffected by this change. If clients access the Administration Server listen host name directly, their DNS resolution must also be updated.

Also, if host name verification is enabled for the Administration Server, update the appropriate trust stores and keystores with new certificates. Use the instructions in Updating Self-Signed Certificates and Keystore on Standby Site in Disaster Recovery Guide.

Verify that the Administration Server is working properly by accessing the Oracle WebLogic Server Administration Console.

-

Servers that are remote from the Administration Server take longer to restart than servers collocated with it. Cross-site latency affects all of the following:

- communication with the Administration Server, which is needed to retrieve the domain configuration at startup
- initial connection pool creation
- database access

See Administration Server High Availability Topology in High Availability Guide.

-

Automatic Server or Service Migration across sites is not recommended unless a database is used for JMS and TLog persistence. Otherwise, the appropriate persistent stores must be continuously replicated between the sites.

-

Oracle recommends using Service Migration instead of Server Migration. Server Migration uses virtual IPs, and in most scenarios the virtual IPs used at one site are invalid for migration to the other. Additional intervention is required so that a listen address initially available in Site 1 can be enabled in Site 2, and vice versa. This intervention can be automated in pre-migration scripts, but the RTO is longer than for a standard automated Server Migration within a single data center. By comparison, Service Migration does not require virtual IPs, and its RTO is much better than that of Server Migration.

-

JMS and transaction recovery across sites is handled by service or server migration within a WebLogic Server stretch cluster. As explained in the Common Design Considerations: WebLogic Server section, Oracle recommends Service Migration rather than Server Migration. For server or service migration of JMS or JTA, Oracle recommends using database leasing on a highly available database. With consensus (non-database) leasing, a network partition can prevent servers in the stretch cluster from restarting. The entire domain then has to be restarted.

-

Zero Downtime Patching in an active-active stretch cluster topology orchestrates updates to all servers in the stretch cluster across both sites. In a stretch cluster, the servers at each site must have their own Oracle Home. If servers share an Oracle Home, all of them must be shut down and updated at the same time when that Oracle Home is updated. When each server has its own Oracle Home, each server can be patched individually while the other servers in the stretch cluster continue to service requests. See Zero Downtime Patching.

-

In an active-active stretch cluster topology, stretch only the WebLogic Server cluster across the two sites. For Coherence, configure a separate Coherence cluster at each site, and use federated caching to replicate data between the two active sites. A Coherence cluster that is stretched across sites is susceptible to split-brain conditions.

-

Table 5-1 lists the recommended settings for configuring database leasing in a stretch cluster topology. For details, see the descriptions of the listed MBeans in MBean Reference for Oracle WebLogic Server.

Table 5-1 Recommended Settings for Database Leasing in a Stretch Cluster

| Configuration Property | MBean/Command | Description | Recommended Setting |
|---|---|---|---|
| DatabaseLeasingBasisConnectionRetryCount | ClusterMBean | The maximum number of times that database leasing tries to obtain a valid connection from the data source. | 5 (default value is 1) |
| DatabaseLeasingBasisConnectionRetryDelay | ClusterMBean | The length of time, in milliseconds, that database leasing waits before attempting to obtain a new connection from the data source when a connection has failed. | 2000 (default value is 1000) |
| TestConnectionOnReserve | JDBCConnectionPoolParamsBean | Enables WebLogic Server to test a connection before giving it to a client. | Enabled, for the leasing data source. The servers remain in a running state during switchover. |
| -Dweblogic.cluster.jta.SingletonMasterRetryCount | Server startup command | Specifies the retry count for the singleton master to get elected and open its listen ports. The singleton master might not be immediately available on the first try to deactivate JTA. | 4 (default value is 20). Because DatabaseLeasingBasisConnectionRetryCount is set to 5, this property can be decreased to 4. This setting can reduce delays while the database server is booting. |

-

Figure 5-2 and Figure 5-3 show results from benchmark testing.

- Figure 5-2 shows how overall system throughput (both sites working together) degrades at different latencies. At a latency of around 20 milliseconds RTT, throughput decreases by almost 25%.
- Figure 5-3 shows the additional time (in milliseconds) needed to deploy SOA composites in a SOA active-active stretch cluster as the latency between sites (RTT, in milliseconds) increases. The baseline is a deployment in which all servers and the database are at the same site.

Considering this data and the performance penalties observed in many tests, Oracle recommends that latency between the two sites not exceed 10 ms RTT in active-active stretch cluster topologies.

Figure 5-2 Throughput Degradation With Latency in a Stretch Cluster

Figure 5-3 Deployment Delay Vs. Latency in a Stretch Cluster
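The following Python sketch makes the replication-group recommendation and its caveat more concrete. It models how a secondary server might be chosen for a session. This is a simplified, hypothetical model, not WebLogic Server's implementation, and it makes these assumptions:

- There is one replication group per site, named "site1" and "site2" here.
- Each primary prefers secondaries in its own site's group.
- Candidates are ranked first by preferred-group membership and then by whether they run on a different machine. This loosely follows the secondary-server ranking described in Administering Clusters for Oracle WebLogic Server.

The server and machine names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Server:
    name: str
    replication_group: str  # hypothetical: one group per site, e.g. "site1"
    machine: str
    running: bool = True


def secondary_rank(candidate: Server, primary: Server, preferred_group: str) -> int:
    """Lower is better: preferred group first, then a different machine."""
    in_preferred_group = candidate.replication_group == preferred_group
    on_other_machine = candidate.machine != primary.machine
    if in_preferred_group and on_other_machine:
        return 1
    if in_preferred_group:
        return 2
    if on_other_machine:
        return 3
    return 4


def choose_secondary(primary: Server, cluster: List[Server],
                     preferred_group: str) -> Optional[Server]:
    """Pick the best-ranked running server other than the primary (ties broken by name)."""
    candidates = [s for s in cluster if s.running and s.name != primary.name]
    if not candidates:
        return None
    return min(candidates,
               key=lambda s: (secondary_rank(s, primary, preferred_group), s.name))


def build_cluster(down=()) -> List[Server]:
    """Hypothetical stretch cluster: MS1-MS3 at Site 1, MS4-MS6 at Site 2."""
    servers = []
    for i in range(1, 7):
        group = "site1" if i <= 3 else "site2"
        servers.append(Server(f"MS{i}", group, f"host{i}", running=f"MS{i}" not in down))
    return servers


cluster = build_cluster()
print(choose_secondary(cluster[0], cluster, "site1").name)  # MS2 (same site)

cluster = build_cluster(down={"MS2", "MS3"})
print(choose_secondary(cluster[0], cluster, "site1").name)  # MS4 (cross-site)
```

When MS2 and MS3 are down, the only candidates are at Site 2, so the sketch selects MS4. This is the cross-site replication that the note on replication groups describes. The sketch models only the initial choice of secondary. It does not model how that choice persists for the session after local servers come back online.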
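The fixed-list OHS configuration described in the design considerations can be sketched as a small Python generator that renders one `<Location>` block per site. `SetHandler weblogic-handler`, `WebLogicCluster`, and `DynamicServerList` are WebLogic Server Proxy Plug-In directives. However, the context root, host names, and port below are hypothetical placeholders. In a real deployment, you would normally write the block directly into the OHS configuration (for example, mod_wl_ohs.conf) rather than generate it.

```python
from typing import List, Tuple


def ohs_location_block(context_root: str, site_servers: List[Tuple[str, int]]) -> str:
    """Render a mod_wl_ohs <Location> block that routes only to this site's servers."""
    if not site_servers:
        raise ValueError("each site needs at least one WebLogic Server endpoint")
    cluster_list = ",".join(f"{host}:{port}" for host, port in site_servers)
    return "\n".join([
        f"<Location {context_root}>",
        "    SetHandler weblogic-handler",
        f"    WebLogicCluster {cluster_list}",
        # Disable the plug-in's dynamic server list so routing uses only the fixed list.
        "    DynamicServerList OFF",
        "</Location>",
    ])


# Hypothetical host names, port, and context root; substitute your own.
SITE1 = [("site1-ms1.example.com", 8001),
         ("site1-ms2.example.com", 8001),
         ("site1-ms3.example.com", 8001)]
SITE2 = [("site2-ms4.example.com", 8001),
         ("site2-ms5.example.com", 8001),
         ("site2-ms6.example.com", 8001)]

print(ohs_location_block("/myapp", SITE1))  # for the OHS instances at Site 1
print(ohs_location_block("/myapp", SITE2))  # for the OHS instances at Site 2
```

Each site's OHS lists only its local Managed Servers, which is what prevents requests from being routed across sites.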
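The 10-millisecond RTT limit for the metro latency model can be checked against measured round-trip times. This Python sketch assumes you already have RTT samples between the sites, collected with a tool of your choice. As an assumption not stated in this guide, it compares a high percentile (the 99th by default) with the limit rather than the average, so that sustained latency spikes are not averaged away. Pass `pct=100` to use the worst sample instead.

```python
import math
from typing import Sequence

METRO_RTT_LIMIT_MS = 10.0  # RTT limit recommended in this chapter


def nearest_rank_percentile(samples: Sequence[float], pct: float) -> float:
    """Smallest sample such that at least pct percent of samples are <= it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def within_metro_budget(rtt_samples_ms: Sequence[float], pct: float = 99.0,
                        limit_ms: float = METRO_RTT_LIMIT_MS) -> bool:
    """True if the chosen percentile of measured RTTs stays within the limit."""
    if not rtt_samples_ms:
        raise ValueError("need at least one RTT sample")
    return nearest_rank_percentile(rtt_samples_ms, pct) <= limit_ms


samples = [2.0] * 99 + [12.0]                 # hypothetical measurements, in ms
print(within_metro_budget(samples))           # True: the 99th percentile is 2.0 ms
print(within_metro_budget(samples, pct=100))  # False: the worst sample is 12.0 ms
```

Which statistic to compare is a judgment call. The benchmark results in Figure 5-2 and Figure 5-3 suggest that throughput and deployment time degrade with any sustained increase in latency, not only with the peaks.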
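The host name remapping step in an Administration Server site failover can be illustrated with the example values from the failover procedure (ADMINHOSTVHN1, 10.10.10.1, and 20.20.20.1). This Python sketch only transforms the text of an /etc/hosts file. It does not write the file, which normally requires administrative privileges. It does not cover the alternative of updating the DNS server, and it drops inline comments on any line it rewrites.

```python
def remap_host(hosts_text: str, hostname: str, new_ip: str) -> str:
    """Return hosts-file text in which `hostname` resolves only to `new_ip`."""
    wanted = hostname.lower()  # host names are case-insensitive
    kept = []
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()
        names = fields[1:]
        if any(name.lower() == wanted for name in names):
            others = [name for name in names if name.lower() != wanted]
            if others:
                # Keep any other aliases on the old address; inline comments are dropped.
                kept.append(" ".join([fields[0]] + others))
            continue
        kept.append(line)
    kept.append(f"{new_ip}\t{hostname}")
    return "\n".join(kept) + "\n"


site2_hosts = "127.0.0.1\tlocalhost\n10.10.10.1\tADMINHOSTVHN1\n"
print(remap_host(site2_hosts, "ADMINHOSTVHN1", "20.20.20.1"), end="")
# 127.0.0.1	localhost
# 20.20.20.1	ADMINHOSTVHN1
```

Because all servers reach the Administration Server through ADMINHOSTVHN1, only the name resolution changes. The listen address configured in the domain stays the same.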
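The Oracle Home consideration for Zero Downtime Patching comes down to simple counting. Servers that share an Oracle Home must be shut down together, so the largest group of servers sharing one Oracle Home determines how much capacity is lost while it is patched. This Python sketch computes those groups. The server names and paths are hypothetical, and the sketch does not reflect how Zero Downtime Patching actually orchestrates a rollout.

```python
from collections import defaultdict
from typing import Dict, List


def patch_groups(oracle_home_of: Dict[str, str]) -> List[List[str]]:
    """Group servers that share an Oracle Home; each group must go down together."""
    groups = defaultdict(list)
    for server, home in oracle_home_of.items():
        groups[home].append(server)
    return [sorted(groups[home]) for home in sorted(groups)]


def min_servers_available(oracle_home_of: Dict[str, str]) -> int:
    """Servers still serving requests while the largest group is being patched."""
    largest = max(len(group) for group in patch_groups(oracle_home_of))
    return len(oracle_home_of) - largest


SERVERS = [f"MS{i}" for i in range(1, 7)]
# Hypothetical Oracle Home layouts for the six-server stretch cluster.
shared = {s: "/u01/oracle_home" for s in SERVERS}
per_site = {s: "/u01/oh_site1" if s in ("MS1", "MS2", "MS3") else "/u01/oh_site2"
            for s in SERVERS}
per_server = {s: f"/u01/oracle_home_{s.lower()}" for s in SERVERS}

print(min_servers_available(shared))      # 0: every server is down at once
print(min_servers_available(per_site))    # 3: one site is down at a time
print(min_servers_available(per_server))  # 5: one server is down at a time
```

The per-server layout keeps the most capacity online. This matches the guidance that each server with its own Oracle Home can be patched individually while the others keep servicing requests.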
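The values in Table 5-1 can be captured in a small Python sketch. The sketch also estimates how the recommended retry settings widen the window during which database leasing keeps retrying. The estimate uses a simplifying assumption (one retry delay charged per attempt), so treat it as a rough upper bound, not documented WebLogic Server behavior.

The sketch does not apply any settings. Configure the MBean attributes through the Administration Console or WLST, and add the `-D` property to each server's startup arguments. The `-Xmx4g` argument is only a hypothetical placeholder.

```python
# Recommended values from Table 5-1; defaults are noted in the comments.
CLUSTER_MBEAN_SETTINGS = {
    "DatabaseLeasingBasisConnectionRetryCount": 5,     # default 1
    "DatabaseLeasingBasisConnectionRetryDelay": 2000,  # milliseconds, default 1000
}
LEASING_DATA_SOURCE_SETTINGS = {
    "TestConnectionOnReserve": True,  # JDBCConnectionPoolParamsBean of the leasing data source
}
SINGLETON_MASTER_RETRY_COUNT = 4  # default 20


def leasing_retry_budget_ms(retry_count: int, retry_delay_ms: int) -> int:
    """Rough upper bound on time spent waiting between leasing connection attempts.

    Simplifying assumption: one retry delay is charged per attempt.
    """
    return retry_count * retry_delay_ms


def server_start_arguments(*extra: str) -> str:
    """Build a JVM argument string that includes the singleton master retry count."""
    prop = f"-Dweblogic.cluster.jta.SingletonMasterRetryCount={SINGLETON_MASTER_RETRY_COUNT}"
    return " ".join((prop,) + extra)


recommended = leasing_retry_budget_ms(
    CLUSTER_MBEAN_SETTINGS["DatabaseLeasingBasisConnectionRetryCount"],
    CLUSTER_MBEAN_SETTINGS["DatabaseLeasingBasisConnectionRetryDelay"],
)
print(recommended)                       # 10000, versus 1 * 1000 = 1000 with the defaults
print(server_start_arguments("-Xmx4g"))  # -Dweblogic.cluster.jta.SingletonMasterRetryCount=4 -Xmx4g
```

Under this assumption, the recommended values widen the leasing retry window from about 1 second to about 10 seconds. This helps explain why the table pairs a higher retry count with a lower SingletonMasterRetryCount.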