Deploy a Machine Learning Model at the Network Edge

Machine learning plays an increasingly important role in many industries. Machine learning models are built and trained by data scientists and are deployed to provide predictions or scores based on operational data. Those predictions and scores are used, for example, to improve process efficiencies, detect and react to problems or anomalies, or assess key performance indicators (KPIs) and quality indicators.

Deploying a model at the network edge, close to the data source, is worth considering when one or more of the following conditions apply:

- The model requires larger volumes of data than the bandwidth between the remote site and the central location can accommodate.

- You experience intermittent connectivity between the remote site and the central location.

- You have very low latency requirements covering the process of feeding the model runtime data, getting it to generate its score or prediction, and acting on that score or prediction.

Architecture

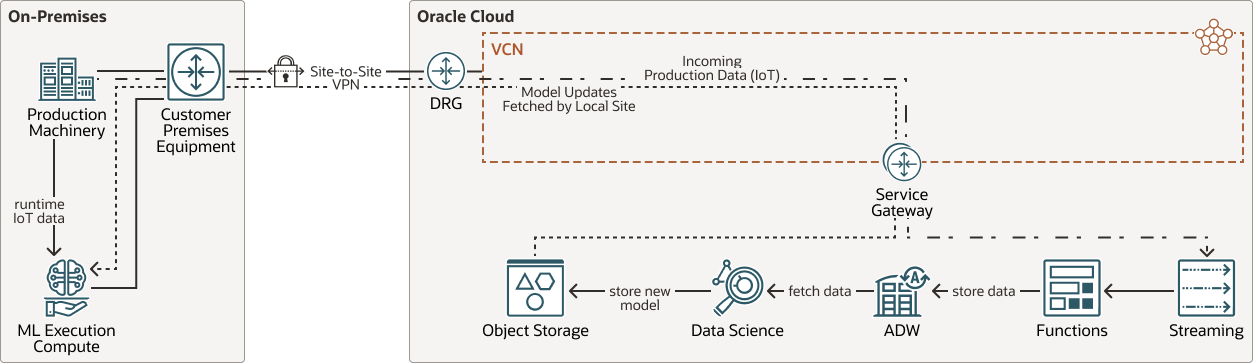

This architecture is the basis for deploying machine learning models close to data sources and production systems. It is depicted in this diagram:


- Production processes generate data, which are streamed to the streaming service on Oracle Cloud. The streaming service triggers a function to handle incoming streams. This function stores the incoming data in the autonomous data warehouse (ADW), perhaps after processing or transforming it.

- Data scientists periodically rebuild machine learning (ML) models based on the data in ADW. When these models are tested and confirmed to improve on old models, they are saved for deployment (for example, in ONNX form), and are pushed to object storage to make them available for subsequent fetching. A separate object storage bucket for each local site is recommended to ensure that the correct model is delivered to the correct site. The normal Oracle Data Science process of storing models in the model catalog, and later deploying them as model deployments is not used, because the model catalog is inaccessible outside OCI, and Oracle Data Science can only deploy to the Oracle Cloud.

- Periodically, local sites check for and fetch updates to the ML models, by checking in specific object storage buckets. When new models are available, the local sites fetch, deploy and start using them.

- In production, production data are passed to the ML runtime to generate scores, predictions, alerts, and so on.
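The periodic check-and-fetch step in the flow above can be sketched as follows. This is a minimal illustration, not the reference implementation: `ModelUpdater`, `fetch_version`, `fetch_model`, and `deploy` are hypothetical names introduced here, and the callables stand in for whatever object storage requests and runtime reload logic a site actually uses.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ModelUpdater:
    """Polls a per-site bucket and swaps in a new model when one appears.

    The three callables are hypothetical stand-ins (assumptions, not part
    of any Oracle SDK) so that the control flow can be shown on its own.
    """
    fetch_version: Callable[[], str]   # e.g. returns a version tag for the stored model
    fetch_model: Callable[[], bytes]   # downloads the serialized model (e.g. ONNX bytes)
    deploy: Callable[[bytes], None]    # hands the bytes to the local ML runtime
    current_version: Optional[str] = None

    def poll_once(self) -> bool:
        """Return True if a new model was fetched and deployed."""
        remote = self.fetch_version()
        if remote == self.current_version:
            return False               # nothing new; keep the running model
        model_bytes = self.fetch_model()
        if not model_bytes:
            return False               # ignore empty downloads
        self.deploy(model_bytes)
        self.current_version = remote  # record only after a successful deploy
        return True
```

At a real site, `poll_once` would presumably run on a timer, with the callables wrapping requests to that site's bucket over the VPN. Recording the version only after `deploy` returns means a failed deployment is retried on the next poll.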

- On-Premises Location

One or more remote production sites are connected to the Oracle Cloud using site-to-site VPN. These sites may be manufacturing facilities, where low-latency machine learning on data streams from production processes is required.

- Production Machinery

At each remote site, one or more production systems, quality control systems, manufacturing execution systems (MESs), SCADA systems, and so on generate production data, IoT streams, or both. Data from these systems are delivered to ML runtimes, which score, predict, or alert based on the ML models' responses to the data.

- ML Execution environment

At each remote site, a machine learning execution environment is operational. At its core, this consists of an ML runtime, such as the ONNX Runtime, perhaps running as a web service and providing scoring and prediction capabilities to an IoT gateway. The exact configuration of the ML execution environment depends on local circumstances and requirements.

- Tenancy

A tenancy is a secure and isolated partition that Oracle sets up within Oracle Cloud when you sign up for OCI. You can create, organize, and administer your resources in Oracle Cloud within your tenancy. A tenancy is synonymous with a company or organization. Usually, a company will have a single tenancy and reflect its organizational structure within that tenancy. A single tenancy is usually associated with a single subscription, and a single subscription usually only has one tenancy.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Service gateway

The service gateway provides access from a VCN to other services, such as Oracle Cloud Infrastructure Object Storage. The traffic from the VCN to the Oracle service travels over the Oracle network fabric and never traverses the internet. In this architecture, the service gateway provides locally peered access to Oracle Cloud services through the VPN.

- Object storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store and then retrieve data directly from the internet or from within the cloud platform. You can seamlessly scale storage without experiencing any degradation in performance or service reliability. Use standard storage for "hot" storage that you need to access quickly, immediately, and frequently. Use archive storage for "cold" storage that you retain for long periods of time and seldom or rarely access. In this architecture, Object Storage is used to store updates to the model and associated scripts, when these are produced by data scientists.

- Autonomous Data Warehouse

Oracle Autonomous Data Warehouse is a self-driving, self-securing, self-repairing database service that is optimized for data warehousing workloads. You do not need to configure or manage any hardware, or install any software. Oracle Cloud Infrastructure handles creating the database, as well as backing up, patching, upgrading, and tuning the database. In this architecture, ADW stores the raw (or processed) IoT data from the production sites for later processing by data scientists.

- Site-to-Site VPN

Site-to-Site VPN provides IPSec VPN connectivity between your on-premises network and VCNs in Oracle Cloud Infrastructure. The IPSec protocol suite encrypts IP traffic before the packets are transferred from the source to the destination and decrypts the traffic when it arrives.

- Functions

Oracle Functions is a fully managed, multitenant, highly scalable, on-demand, Functions-as-a-Service (FaaS) platform. It is powered by the Fn Project open source engine. Functions enable you to deploy your code, and either call it directly or trigger it in response to events. Oracle Functions uses Docker containers hosted in Oracle Cloud Infrastructure Registry. In this architecture, a function built around a JDBC connector stores the streamed data in ADW.

- Streaming

Oracle Cloud Infrastructure Streaming provides a fully managed, scalable, and durable storage solution for ingesting continuous, high-volume streams of data that you can consume and process in real time. You can use Streaming for ingesting high-volume data, such as application logs, operational telemetry, and web click-stream data, or for other use cases where data is produced and processed continually and sequentially in a publish-subscribe messaging model. Streaming is used in this architecture to route streams of production data to ADW for persistence. It is also possible to process and enrich these data as part of the streaming process, but this is not shown here.

- Oracle Data Science

Oracle Data Science is a fully managed platform for teams of data scientists to build, train, deploy, and manage machine learning models using Python and open source tools. It includes MLOps capabilities, such as automated pipelines, model deployments, and model monitoring; however, these capabilities are restricted to model deployments on the Oracle Cloud and are not used in this architecture. In this architecture, data scientists use Oracle Data Science to analyze the data, build models on it, and create deployable versions of those models, for example, in ONNX form.

- Oracle services network

The Oracle services network (OSN) is a conceptual network in Oracle Cloud Infrastructure that is reserved for Oracle services. These services have public IP addresses that you can reach over the internet. Hosts outside Oracle Cloud can access the OSN privately by using Oracle Cloud Infrastructure FastConnect or Site-to-Site VPN. Hosts in your VCNs can access the OSN privately through a service gateway.
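As a rough illustration of the Functions component described above, the sketch below covers only the step that precedes storage: turning a batch of stream messages into rows. It assumes the function receives a JSON array of messages whose `value` field holds base64-encoded JSON. That payload shape, the function name `decode_stream_batch`, and the sample field names are assumptions made for this example. The write to ADW is omitted.

```python
import base64
import json
from typing import Any, Dict, List


def decode_stream_batch(payload: bytes) -> List[Dict[str, Any]]:
    """Decode a batch of stream messages into rows ready for insertion.

    Assumption: payload is a JSON array of objects, each with a "value"
    field containing base64-encoded JSON. Adjust to the real message format.
    """
    rows: List[Dict[str, Any]] = []
    for message in json.loads(payload):
        raw = base64.b64decode(message["value"])
        try:
            record = json.loads(raw)
        except ValueError:
            # Malformed reading: skip it rather than fail the whole batch.
            continue
        if isinstance(record, dict):
            rows.append(record)
    return rows
```

Skipping malformed messages keeps one bad reading from blocking the batch. Whether to drop, log, or quarantine such messages is a design choice that depends on the site's data quality requirements.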

Recommendations

- VCN

A VCN with an attached dynamic routing gateway (DRG) and a service gateway is required to route traffic from the remote sites (connected by site-to-site VPN) to services running in the Oracle services network. The route table of this VCN needs a rule that sends all traffic bound for services in the chosen Oracle Cloud region through the service gateway. In this architecture, all traffic between the remote site and the Oracle services network is initiated by a request from the remote site.

- Security

Use Oracle Cloud Guard to monitor and maintain the security of your resources in Oracle Cloud Infrastructure proactively. Cloud Guard uses detector recipes that you can define to examine your resources for security weaknesses and to monitor operators and users for risky activities. When any misconfiguration or insecure activity is detected, Cloud Guard recommends corrective actions and assists with taking those actions, based on responder recipes that you can define.

- Cloud Guard

Clone and customize the default recipes provided by Oracle to create custom detector and responder recipes. These recipes enable you to specify what type of security violations generate a warning and what actions are allowed to be performed on them. For example, you might want to detect object storage buckets that have visibility set to public.

Apply Cloud Guard at the tenancy level to cover the broadest scope and to reduce the administrative burden of maintaining multiple configurations.

You can also use the managed list feature to apply certain configurations to detectors.

- Security zones

For resources that require maximum security, Oracle recommends that you use security zones. A security zone is a compartment associated with an Oracle-defined recipe of security policies that are based on best practices. For example, the resources in a security zone must not be accessible from the public internet and they must be encrypted using customer-managed keys. When you create and update resources in a security zone, Oracle Cloud Infrastructure validates the operations against the policies in the security-zone recipe, and denies operations that violate any of the policies.

- Network security groups (NSGs)

You can use NSGs to define a set of ingress and egress rules that apply to specific VNICs. We recommend using NSGs rather than security lists, because NSGs enable you to separate the VCN's subnet architecture from the security requirements of your application.

Considerations

Consider the following points when deploying this reference architecture.

- Performance

The main performance concerns are related to the volume of data generated by production processes.

The ML execution environment, where machine learning runtimes are invoked to respond to production data, must be sized to handle the volumes of data generated. This is an issue for the remote site setup and is outside the scope of this architecture.

Data generated by production processes may be sufficiently voluminous that bandwidth available for streaming to OCI may be overwhelmed. In such cases, it may be advisable to preprocess the data locally to discard irrelevant data, to aggregate data, to compress data, etc., so that bandwidth demands are reduced.

By moving ML runtimes close to the data source, ML latencies are reduced.

- Availability

If connectivity between remote sites and the Oracle Cloud is intermittent, then data could be streamed to a local Kafka installation, which then streams to Oracle Streaming. In this way, the local Kafka installation can buffer data if a network outage occurs.
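The two considerations above, reducing bandwidth through local preprocessing and buffering during outages, can be sketched together. This is a simplified in-memory illustration of the ideas, not a substitute for a Kafka installation: `aggregate`, `StoreAndForward`, and the `send` callable are hypothetical names introduced here, and a production buffer would normally persist to disk.

```python
from collections import deque
from statistics import mean
from typing import Callable, Deque, Dict, Iterable, List, Tuple


def aggregate(readings: Iterable[Tuple[float, float]], window_s: float) -> List[Dict[str, float]]:
    """Average (timestamp, value) readings into fixed windows of window_s seconds."""
    buckets: Dict[int, List[float]] = {}
    for ts, value in readings:
        buckets.setdefault(int(ts // window_s), []).append(value)
    return [
        {"window_start": idx * window_s, "mean": mean(vals), "count": len(vals)}
        for idx, vals in sorted(buckets.items())
    ]


class StoreAndForward:
    """Holds records while the uplink is down and sends them in order later."""

    def __init__(self, send: Callable[[dict], bool], max_records: int = 10_000):
        self._send = send  # hypothetical uplink call; returns False on failure
        # Bounded queue: when full, the oldest record is dropped.
        self._pending: Deque[dict] = deque(maxlen=max_records)

    def submit(self, record: dict) -> None:
        """Queue a record and try to send everything that is pending."""
        self._pending.append(record)
        self.flush()

    def flush(self) -> int:
        """Send queued records until one fails; return how many were sent."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # uplink still down; retry on the next flush
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

Sending per-window summaries instead of raw readings trades detail for bandwidth, so the window size should be chosen with the data scientists who retrain the models. A record is removed from the queue only after `send` reports success, which preserves ordering across an outage.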