Modern App Development - Event-Driven

The event-driven application pattern enables you to respond in near real time to changes in your cloud resources and to events generated by your application.

An event is any significant occurrence or change in a system, such as a newly created object in object storage or a performance alert in your application. This application pattern presents the design principles and architecture for creating scalable, secure, reliable, and highly performant event-driven applications.

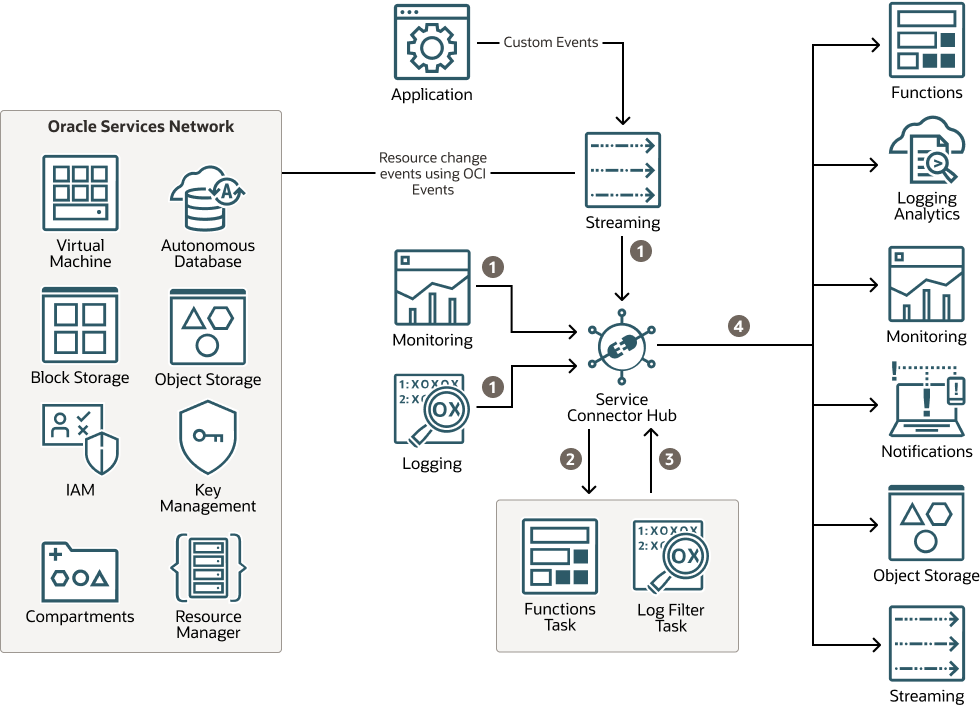

Oracle Cloud Infrastructure (OCI) provides services such as OCI Service Connector Hub and OCI Events to help you build event-driven applications. OCI Service Connector Hub enables you to create service connectors for moving data between services. A service connector specifies the source service containing the data to be moved, optional tasks, and the target service to which the data is delivered when the tasks complete. Examples of optional tasks include a function task to process data from the source and a log filter task to filter log data from the source. Supported source services for service connectors include OCI Monitoring, OCI Logging, and OCI Streaming. Examples of target services include OCI Functions, OCI Notifications, and OCI Object Storage.

You can use OCI Events to raise resource-change events and route them through streams to service connectors. Likewise, your app can raise custom events and use streams to route them to service connectors.
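The source, optional tasks, and target structure of a service connector can be pictured with a small in-memory model. This is only a conceptual sketch in Python, not the OCI SDK or API: the `ServiceConnector` class, the two task functions, and the sample records are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List

Record = dict
Task = Callable[[List[Record]], List[Record]]


@dataclass
class ServiceConnector:
    """Hypothetical model: read from a source, run optional tasks, deliver to a target."""
    source: Callable[[], Iterable[Record]]
    target: Callable[[List[Record]], None]
    tasks: List[Task] = field(default_factory=list)

    def run(self) -> int:
        batch = list(self.source())
        # Tasks run in order; each receives the output of the previous one.
        for task in self.tasks:
            batch = task(batch)
        # Delivery to the target happens only after all tasks complete.
        self.target(batch)
        return len(batch)


def log_filter_task(records: List[Record]) -> List[Record]:
    # Stand-in for a log filter task: keep only ERROR entries.
    return [r for r in records if r.get("level") == "ERROR"]


def function_task(records: List[Record]) -> List[Record]:
    # Stand-in for a function task: transform each record.
    return [{**r, "message": r["message"].upper()} for r in records]


delivered: List[Record] = []
connector = ServiceConnector(
    source=lambda: [
        {"level": "INFO", "message": "started"},
        {"level": "ERROR", "message": "disk full"},
    ],
    target=delivered.extend,
    tasks=[log_filter_task, function_task],
)
count = connector.run()
```

In this sketch the connector itself holds no business logic. It only sequences the source, tasks, and target, which mirrors the division of responsibilities described above.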

Design Principles

Use the following modern app development principles when designing the architecture for your event-driven application:

- Use low-code platforms if possible, and if not, use mature programming languages and lightweight frameworks

Describe your event data using CloudEvents, an open, industry-standard format. CloudEvents lets you describe event data in a consistent, widely used format, and the CloudEvents project provides software development kits (SDKs) for several programming languages, including Java. Events created using Oracle Cloud Infrastructure Events use the CloudEvents format.
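As a rough illustration of what a CloudEvents-style envelope looks like, the following Python sketch builds one using only the standard library. The required context attributes (`specversion`, `id`, `source`, `type`) come from the CloudEvents 1.0 specification; the helper name `make_cloud_event` and the event type, source, and payload values are hypothetical and are not taken from OCI Events.

```python
import json
import uuid
from datetime import datetime, timezone


def make_cloud_event(event_type: str, source: str, data: dict) -> dict:
    """Build a dict shaped like a CloudEvents 1.0 event (hypothetical helper)."""
    return {
        # Required CloudEvents 1.0 context attributes.
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        # Optional attributes.
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        # Application-specific payload.
        "data": data,
    }


# Example values below are made up for illustration.
event = make_cloud_event(
    event_type="com.example.orders.created",
    source="/example/orders-service",
    data={"orderId": "hypothetical-123"},
)
payload = json.dumps(event)
```

In a real application you would more likely use one of the CloudEvents SDKs than hand-build the envelope. The point here is only that every event carries the same small set of context attributes regardless of its payload.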

- Use managed services to eliminate complexity in app development and operations

Use managed services to communicate, process, and persist event data.

Use OCI Service Connector Hub to orchestrate data movement between services. Use managed services such as OCI Functions or the Oracle Container Engine for Kubernetes (OKE) to process events. Use OCI Notifications to connect service connectors to downstream services such as email, SMS, Slack, or PagerDuty.

- Instrument end-to-end monitoring and tracing

Event routers such as OCI Service Connector Hub and OCI Events emit metrics to OCI Monitoring, making it easy to monitor your application events and to build custom metrics and alarms. For end-to-end distributed tracing, build custom dashboards with logging rules (OCI Logging Analytics) as well as alarm-based monitoring (OCI Notifications) so that administrators can discover and quickly react to any issues. Additionally, an event router can provide a single-pane-of-glass view of an event's footprint.

Architecture

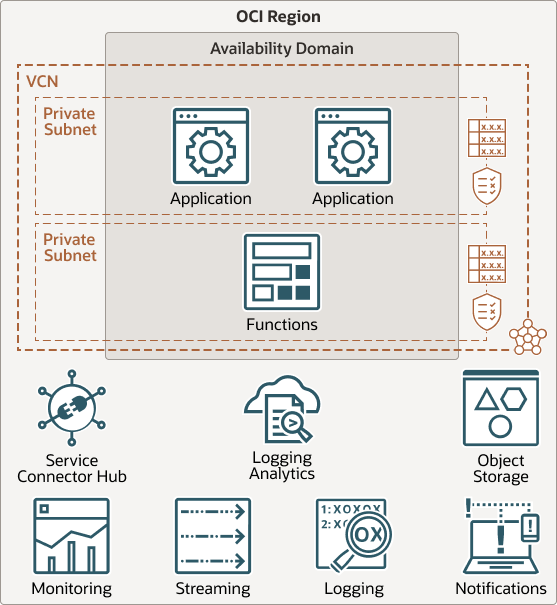

This architecture uses modern app development principles to create event-driven applications.

The architecture has the following components:

- Streaming

Oracle Cloud Infrastructure Streaming provides a fully managed, scalable, and durable storage solution for ingesting continuous, high-volume streams of data that you can consume and process in real time. You can use Streaming for ingesting high-volume data, such as application logs, operational telemetry, and web click-stream data, or for other use cases where data is produced and processed continually and sequentially in a publish-subscribe messaging model.

- Functions

Oracle Cloud Infrastructure Functions is a fully managed, multitenant, highly scalable, on-demand, Functions-as-a-Service (FaaS) platform. It is powered by the Fn Project open source engine. Functions enables you to deploy your code, and either call it directly or trigger it in response to events. Oracle Functions uses Docker images stored in Oracle Cloud Infrastructure Registry.

- Service connectors

Oracle Cloud Infrastructure Service Connector Hub is a cloud message bus platform that orchestrates data movement between services in OCI. You can use service connectors to move data from a source service to a target service. Service connectors also enable you to optionally specify a task (such as a function) to perform on the data before it is delivered to the target service.

You can use Oracle Cloud Infrastructure Service Connector Hub to quickly build a logging aggregation framework for security information and event management (SIEM) systems.

- Notifications

The Oracle Cloud Infrastructure Notifications service broadcasts messages to distributed components through a publish-subscribe pattern, delivering secure, highly reliable, low-latency, and durable messages for applications hosted on Oracle Cloud Infrastructure.
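The publish-subscribe pattern that Notifications (and Streaming) builds on can be shown with a tiny in-process broker. This is a teaching sketch in Python under simplifying assumptions (synchronous delivery, no durability or retries); the `Broker` class, its methods, and the topic name are hypothetical and do not correspond to the OCI Notifications API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str], None]


class Broker:
    """Hypothetical in-memory publish-subscribe broker."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> int:
        # Every subscriber of the topic receives its own copy of the message.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


email_inbox: List[str] = []
pager_inbox: List[str] = []

broker = Broker()
broker.subscribe("alerts", email_inbox.append)
broker.subscribe("alerts", pager_inbox.append)

fanout = broker.publish("alerts", "disk usage above 90%")
unrouted = broker.publish("no-such-topic", "ignored")
```

The publisher never references its subscribers directly, so downstream consumers can be added or removed without changing the code that raises the event.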

Example Use Case

The Alarm on Log Data use case adopts the event-driven architecture to implement alarms for log data using the Oracle Cloud Infrastructure (OCI) Service Connector Hub, OCI Logging, and OCI Monitoring services.

This use case creates a service connector and an alarm. The service connector (OCI Service Connector Hub) processes log data and moves it from OCI Logging to OCI Monitoring, and the alarm fires when the received log data triggers it. For details, see the link to the scenario in the Explore More topic.
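The logic of this use case can be approximated in a few lines of Python. This is a conceptual sketch only: the service connector is reduced to a function that counts log entries per type (standing in for metric data points), and the alarm to a simple threshold check. The function names, the `type` field, the sample log entries, and the threshold are all assumptions made for illustration, not OCI configuration.

```python
from collections import Counter
from typing import Dict, List


def logs_to_metric(log_entries: List[Dict[str, str]], dimension_key: str = "type") -> Counter:
    """Stand-in for the service connector: turn log entries into per-type counts."""
    return Counter(entry[dimension_key] for entry in log_entries)


def alarm_fires(metric: Counter, name: str, threshold: int) -> bool:
    """Stand-in for the alarm: fire when the count exceeds the threshold."""
    return metric[name] > threshold


# Hypothetical log data for one evaluation interval.
logs = [
    {"type": "login.failed", "user": "alice"},
    {"type": "login.failed", "user": "alice"},
    {"type": "login.failed", "user": "bob"},
    {"type": "login.succeeded", "user": "carol"},
]

metric = logs_to_metric(logs)
failed_login_alarm = alarm_fires(metric, "login.failed", threshold=2)
success_alarm = alarm_fires(metric, "login.succeeded", threshold=2)
```

Here `failed_login_alarm` evaluates to true because three failed logins exceed the threshold of two, while `success_alarm` stays false.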

Deploy

- Go to GitHub.

- Clone or download the repository to your local computer.

- Follow the instructions in the README document.