Learn About Automating Hadoop Data Migration to Oracle with WANdisco LiveData Migrator

Oracle Cloud Infrastructure Lakehouse provides an integrated platform of multiple Oracle cloud services working together with easy movement of data and unified governance, and offers the ability to use the best open-source and commercial tools based on your use cases and preferences.

Architecture

WANdisco LiveData Migrator automates the large-scale movement of data and metadata from existing on-premises data lakes, Spark, and Hadoop environments to Oracle Cloud Infrastructure (OCI). Using WANdisco's LiveData capabilities, migration can proceed while the source data is under active change, without production system downtime or business disruption, and the resulting migration is complete and continuous.

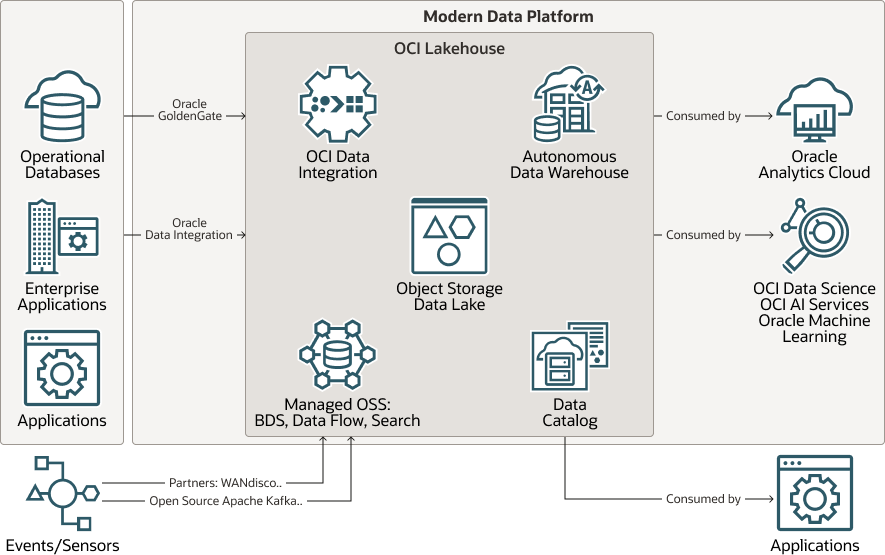

The following diagram illustrates the functional architecture of OCI's modern data platform.

- Data is collected from operational databases, enterprise applications, other applications, and external events and sensors.

- Data is transferred to Oracle Cloud Infrastructure Lakehouse through Oracle GoldenGate, Oracle Cloud Infrastructure Data Integration, partner applications such as WANdisco, and open source applications such as Apache Kafka.

- Data is consumed by Oracle Analytics Cloud, Oracle Cloud Infrastructure Data Science, Oracle Cloud Infrastructure AI Services, and Oracle Machine Learning within OCI and applications outside of OCI.

This architecture supports the following components:

- Oracle Cloud Infrastructure GoldenGate

Oracle Cloud Infrastructure GoldenGate is a fully managed service that ingests data from sources residing on premises or in any cloud. It uses GoldenGate change data capture (CDC) technology for nonintrusive, efficient capture of data and delivery to Oracle Autonomous Data Warehouse in real time and at scale, so that relevant information reaches consumers as quickly as possible.

- Integration

Oracle Integration is a fully managed service that allows you to integrate your applications, automate processes, gain insight into your business processes, and create visual applications.

- WANdisco LiveData Migrator

WANdisco LiveData Migrator automates the large-scale movement of data and metadata from existing on-premises data lakes, Spark, and Hadoop environments to OCI. LiveData Migrator doesn't require downtime; it migrates the changes made to data before, during, and after migration.

- Autonomous Data Warehouse

Oracle Autonomous Data Warehouse is a self-driving, self-securing, self-repairing database service that is optimized for data warehousing workloads. You do not need to configure or manage any hardware, or install any software. Oracle Cloud Infrastructure handles creating the database, as well as backing up, patching, upgrading, and tuning the database.

- Oracle Cloud Infrastructure AI Services

Oracle Cloud Infrastructure AI Services is a collection of services with prebuilt machine learning models that make it easier for developers to apply AI to applications and business operations. The models can be custom-trained for more accurate business results. Teams within an organization can reuse the models, datasets, and data labels across services. OCI AI Services makes it possible for developers to easily add machine learning to apps without slowing down application development.

- Oracle Machine Learning

Oracle Machine Learning provides a common framework for machine learning model management and deployment with Oracle Autonomous Database. It accelerates the creation and deployment of machine learning models for data scientists by eliminating the need to move data to dedicated machine learning systems.

- Object Storage Data Lake

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store and then retrieve data directly from the internet or from within the cloud platform. You can seamlessly scale storage without experiencing any degradation in performance or service reliability. Use standard storage for "hot" data that you need to access quickly and frequently. Use archive storage for "cold" data that you retain for long periods of time and rarely access.

A data lake is a place to store your structured and unstructured data, as well as a method for organizing large volumes of highly diverse data from diverse sources. Data lakes are becoming increasingly important as people, especially in business and technology, want to perform broad data exploration and discovery. Bringing all or most of the data together in a single place makes that simpler.

- Data catalog

Oracle Cloud Infrastructure Data Catalog is a fully managed, self-service data discovery and governance solution for your enterprise data. It provides data engineers, data scientists, data stewards, and chief data officers a single collaborative environment to manage the organization's technical, business, and operational metadata.

- Analytics

Oracle Analytics Cloud is a scalable and secure public cloud service that empowers business analysts with modern, AI-powered, self-service analytics capabilities for data preparation, visualization, enterprise reporting, augmented analysis, and natural language processing and generation. With Oracle Analytics Cloud, you also get flexible service management capabilities, including fast setup, easy scaling and patching, and automated lifecycle management.

- Oracle Cloud Infrastructure Streaming service

Oracle Cloud Infrastructure Streaming service (OSS) provides a fully managed, scalable, and durable solution for ingesting and consuming high-volume data streams in real time. Use Streaming for any use case in which data is produced and processed continually and sequentially in a publish-subscribe messaging model.
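To illustrate the publish-subscribe model mentioned for Streaming, the following is a minimal in-memory sketch, not the OCI Streaming API. The `Stream` class, its methods, and the consumer group names are hypothetical and exist only for this example. It shows the general idea that producers append messages to an ordered log while each consumer group reads sequentially from its own position.

```python
from collections import defaultdict


class Stream:
    """Hypothetical append-only log; each consumer group tracks its own offset."""

    def __init__(self):
        self._log = []                     # messages in publish order
        self._offsets = defaultdict(int)   # next unread position per group

    def publish(self, message):
        """Append a message and return its offset in the log."""
        self._log.append(message)
        return len(self._log) - 1

    def consume(self, group, limit=10):
        """Return up to `limit` unread messages for `group`, in order."""
        start = self._offsets[group]
        batch = self._log[start:start + limit]
        self._offsets[group] = start + len(batch)
        return batch


stream = Stream()
for reading in ("sensor-1:20.5", "sensor-2:19.8", "sensor-1:20.7"):
    stream.publish(reading)

# Two independent consumer groups read the same messages at their own pace.
analytics_batch = stream.consume("analytics")
archive_first = stream.consume("archive", limit=2)
archive_second = stream.consume("archive", limit=2)
```

Because each group keeps its own offset, a slow consumer does not hold back a fast one, and every group sees the messages in the order they were produced.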

About Oracle Cloud Infrastructure Lakehouse

Organizations can easily migrate existing open source data lakes, or build new ones, in Oracle Cloud Infrastructure Lakehouse with fully managed services like Oracle Big Data Service and Oracle Cloud Infrastructure Data Flow. Spark, Hive, HBase, and many more services can be easily deployed and scaled on OCI.

Oracle Big Data Service provides fully configured, secure, highly available, and dedicated Apache Hadoop and Spark clusters on demand. It offers the commonly used Hadoop components, which makes it simple for enterprises to move workloads to the cloud and ensures compatibility with on-premises solutions.

Oracle Cloud Infrastructure Data Flow is a fully managed serverless Spark service that enables you to focus on your Spark workloads with zero infrastructure concepts. It enables rapid application delivery because developers can focus on app development, not infrastructure management.

Many organizations are looking to migrate their on-premises data lakes to leverage the Oracle Cloud Infrastructure Lakehouse architecture. However, migrating a data lake from on-premises Hadoop environments to the cloud can be challenging without the right support.

About Migrating Apache Hadoop Data with LiveData Migrator

Apache Hadoop data migration is difficult because of the volume of data and amount of data changes typically occurring in these systems.

Traditional data migration approaches relied on tools designed for static data transfer, such as bulk transfer devices or open-source tools like DistCp (Distributed Copy). These approaches require either that the on-premises systems be brought down to prevent data changes during the migration, or that those responsible for the migration identify the changes and develop custom solutions to migrate the new and changed data. This adds time and risk to the data migration and, according to industry analysts, results in over 60% of data migration initiatives going over time, exceeding budget, or failing altogether.
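The kind of custom change detection described above can be sketched as a comparison of file listings. This is an illustration only, under the assumption that size and modification time are enough to detect a change; it is not how DistCp or LiveData Migrator is implemented, and `FileState`, `diff_listings`, and the sample paths are hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileState:
    size: int    # file size in bytes
    mtime: int   # modification time, seconds since epoch


def diff_listings(source, target):
    """Compare two {path: FileState} listings.

    Returns (to_copy, to_delete): paths that are new or changed on the
    source, and paths that exist only on the target.
    """
    to_copy = sorted(
        path for path, state in source.items() if target.get(path) != state
    )
    to_delete = sorted(path for path in target if path not in source)
    return to_copy, to_delete


# Listing captured when the bulk copy started.
snapshot = {
    "/data/a.parquet": FileState(100, 1000),
    "/data/b.parquet": FileState(200, 1000),
}
# After the bulk copy, the target mirrors that snapshot.
target = dict(snapshot)
# Meanwhile, the live source kept changing.
live_source = {
    "/data/a.parquet": FileState(150, 1500),  # modified during the copy
    "/data/c.parquet": FileState(300, 1600),  # created during the copy
    # /data/b.parquet was deleted during the copy
}

to_copy, to_delete = diff_listings(live_source, target)
print(to_copy)
print(to_delete)
```

Each pass of such a comparison only captures the state at one moment, so on an actively changing source the process has to be repeated until the systems converge, which is the time and risk the paragraph above refers to.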

Source systems for the migration include the following Hadoop distributions:

- Cloudera, including CDP (Cloudera Data Platform)

- CDH (Cloudera Data Hub)

- HDP (Hortonworks Data Platform) HDFS versions 2.6 and higher

The source systems can be running on Oracle Big Data Appliance or custom hardware configurations.