Learn About the CPU Inference Blueprint

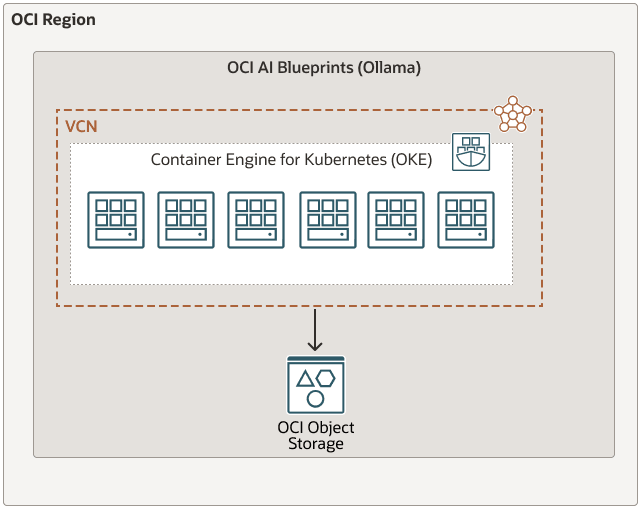

Oracle Cloud Infrastructure AI Blueprints (OCI AI Blueprints) is a streamlined, no-code solution for deploying and managing generative AI workloads on Oracle Cloud Infrastructure Kubernetes Engine (OKE).

By providing opinionated hardware recommendations, pre-packaged software stacks, and out-of-the-box observability tooling, OCI AI Blueprints helps you get your AI applications running quickly and efficiently, without wrestling with infrastructure decisions, software compatibility, or machine learning operations (MLOps) best practices.

This CPU Inference blueprint provides a framework for testing inference on CPUs using Ollama with the models it supports, such as Mistral and Gemma. Unlike GPU-dependent solutions, this blueprint is designed for environments where CPU inference is preferred or required.

The blueprint offers guidelines and configuration settings for deploying a CPU inference service, so that you can evaluate its performance and test its reliability. Ollama's lightweight architecture makes it well suited to developers who want to benchmark and optimize CPU-based inference workloads.

This blueprint explains how to run large language models on CPUs using Ollama. It includes two main deployment strategies:

- Serving pre-saved models directly from Oracle Cloud Infrastructure Object Storage

- Pulling models from Ollama and saving them to OCI Object Storage
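
With either strategy, the deployed service can be exercised through Ollama's HTTP API. The following is a minimal sketch, not part of the blueprint itself, of how a test client might send a prompt and derive generation throughput from the response. The base URL and the helper names (`build_generate_request`, `tokens_per_second`, `generate`) are assumptions made for illustration. Replace the URL with the endpoint your deployment exposes.

```python
import json
import urllib.request

# Assumed endpoint: Ollama's default local address. A deployed service
# will expose a different URL.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model, prompt):
    """Build a non-streaming request body for Ollama's /api/generate."""
    return {"model": model, "prompt": prompt, "stream": False}


def tokens_per_second(result):
    """Generation throughput from eval_count and eval_duration (nanoseconds)."""
    duration_ns = result.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return result.get("eval_count", 0) * 1e9 / duration_ns


def generate(model, prompt, base_url=OLLAMA_URL, timeout=300):
    """POST a prompt to the Ollama service and return the parsed JSON reply."""
    body = json.dumps(build_generate_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        base_url + "/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())
```

For example, `result = generate("mistral", "Why is the sky blue?")` returns a dictionary whose `response` field holds the generated text, and `tokens_per_second(result)` gives a rough throughput figure for comparing CPU shapes or models. The model name must match one that is already available to the service.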