Implement an NFS Cluster Server on OCI Using Object Storage as a Repository

When we have an ever-growing amount of critical, unstructured data that is accessed frequently right after it is created but infrequently thereafter, the best option is Oracle Cloud Infrastructure Object Storage.

However, because some applications can't use Object Storage natively, we need third-party solutions. For this architecture, we'll use:

- Rclone for reading and writing to Object Storage.

- Corosync and Pacemaker to build the cluster (active-standby) and to provide the high availability that critical applications require for resources such as Rclone, the secondary IP, the NFS service, and the mount point.

- A secondary IP that clients use to mount the network file system (NFS) shared by the cluster, and that moves between the cluster nodes for automatic failover.

This reference architecture describes a customer-inspired configuration, combining high availability and flexibility.

Architecture

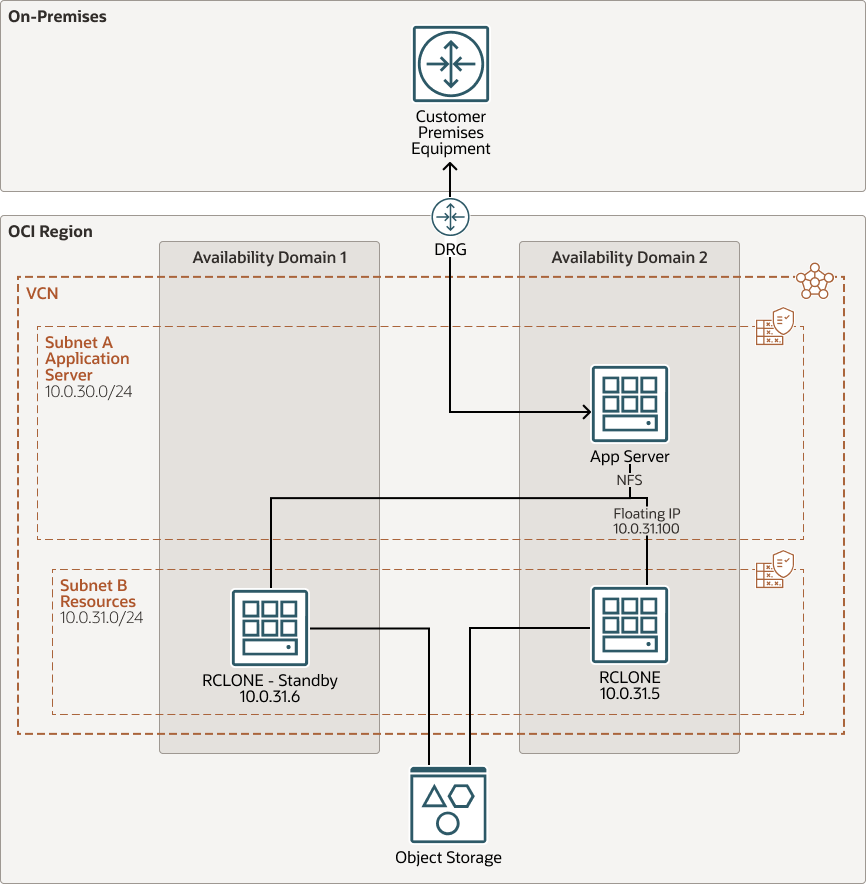

The following diagram illustrates this reference architecture.

In this scenario, to speed up switchover, we kept the Rclone and NFS services running, ran Rclone in mount mode, had the NFS service share the folder mounted by Rclone, and attached the secondary IP to only one of the nodes.



The process of switchover between nodes can be seen on OTube.

The diagram shows the following elements:

- APP server (client server): inst-i6hjc-rclone-ha

- Client-side mount point: /mnt/nfs_rclone_v2

- NFS floating IP: 172.10.0.100

- Cluster node 1, running Rclone: nfs-rclone, IP 172.10.0.287

- Cluster node 2, Rclone standby: inst-e2fc3-rclone-ha, IP 172.10.0.121

- NFS share: /mnt/nfs_rclone, mounted from Object Storage

You can use cross-region backup to create a disaster recovery environment. If you use variables in the setup, the Rclone configuration stays static.


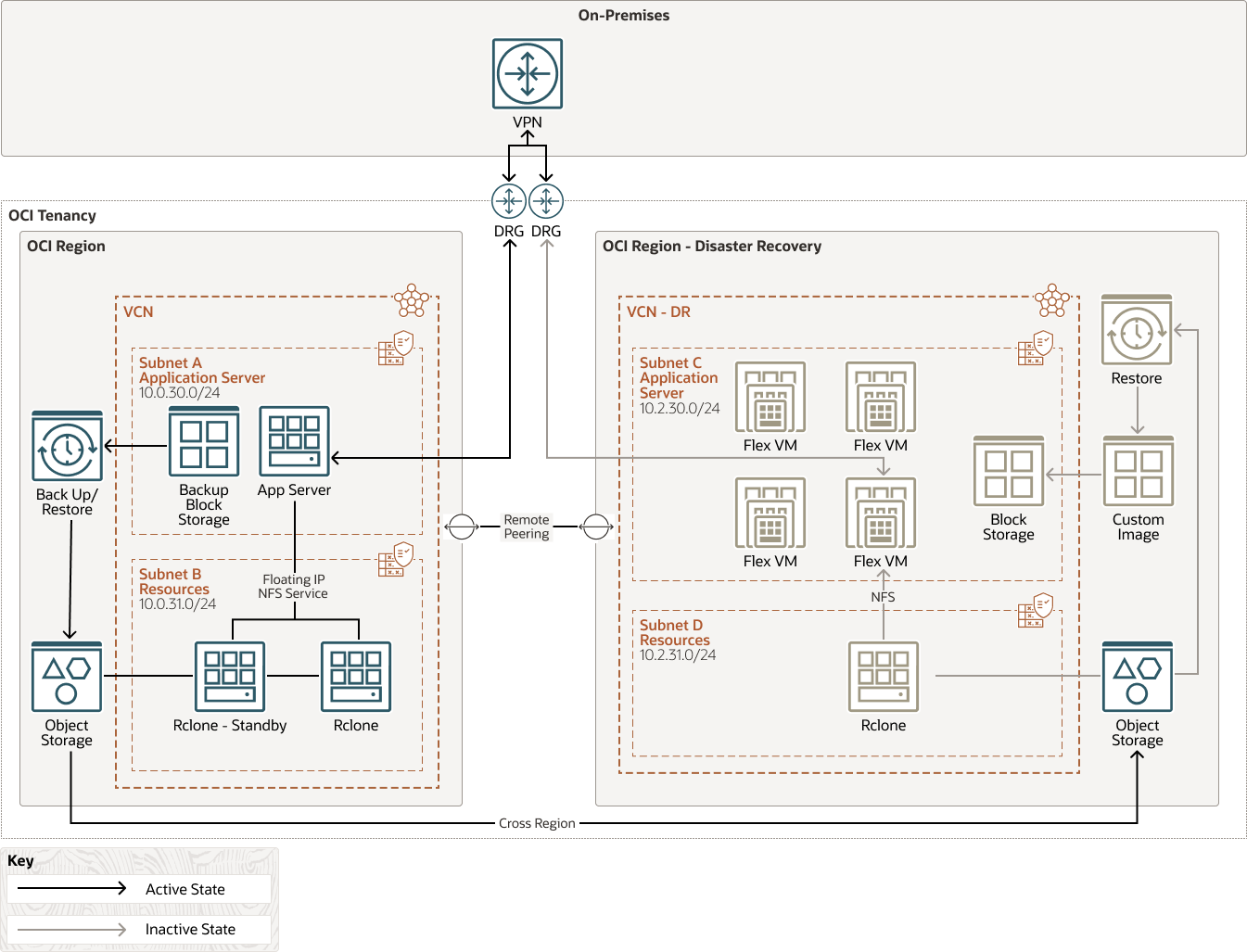

For disaster recovery:

- Use Object Storage replication for disaster recovery by creating a replica of the data in other regions. See Explore More: Using Replication.

- Create a disaster recovery solution for compute instances. See Explore More: Replicating a Volume.

This architecture has the following components:

- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domains

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Object storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store and then retrieve data directly from the internet or from within the cloud platform. You can seamlessly scale storage without experiencing any degradation in performance or service reliability. Use standard storage for "hot" storage that you need to access quickly, immediately, and frequently. Use archive storage for "cold" storage that you retain for long periods of time and seldom or rarely access.

- Rclone

Rclone is an open source command-line program for managing files on cloud storage. Here it mounts the Object Storage bucket as a file system that is then shared over NFS.

- Corosync and Pacemaker

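Corosync and Pacemaker are open source cluster services, suitable for both small and large clusters, that together provide high availability for applications. Corosync handles cluster membership and messaging; Pacemaker manages the cluster resources and moves them between nodes.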

- OCI CLI

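The OCI CLI integrates the Corosync/Pacemaker VirtualIP resource (an IPaddr2 resource) with the secondary IP on the Oracle Cloud Infrastructure VNIC, so that the secondary IP is also reassigned at the OCI level whenever the resource moves to another node.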

- Oracle Linux

Oracle Linux 8.6 instances host this environment. Other Linux distributions can also be used, as long as they support Rclone, Corosync, and Pacemaker.

- Secondary IP

A secondary private IP can be added to an instance after it launches, on either the primary or a secondary VNIC of the instance. Here, the secondary IP floats between the nodes: if a node goes down, the IP moves to another node. Corosync and Pacemaker manage this failover.
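At the OCI level, each failover comes down to one OCI CLI call. The following minimal Python sketch assumes that the OCI CLI is installed and authenticated on both nodes. It also assumes that the caller passes in the local node's VNIC OCID, for example a script that Pacemaker runs on failover. The sketch uses `oci network vnic assign-private-ip` with `--unassign-if-already-assigned`, which takes the secondary IP away from the other node's VNIC and assigns it to the local one.

```python
import subprocess
from typing import List


def build_assign_ip_command(vnic_id: str, ip_address: str) -> List[str]:
    """Build the OCI CLI call that attaches ip_address to the VNIC vnic_id.

    --unassign-if-already-assigned lets OCI remove the IP from the other
    node's VNIC first, which is what makes the secondary IP "float".
    """
    return [
        "oci", "network", "vnic", "assign-private-ip",
        "--vnic-id", vnic_id,
        "--ip-address", ip_address,
        "--unassign-if-already-assigned",
    ]


def move_floating_ip(vnic_id: str, ip_address: str) -> None:
    """Claim the floating IP for this node's VNIC.

    check=True raises CalledProcessError if the CLI call fails, so the
    caller (for example, a failover hook) can report the failure.
    """
    subprocess.run(build_assign_ip_command(vnic_id, ip_address), check=True)
```

For example, `move_floating_ip("ocid1.vnic.oc1..example", "172.10.0.100")` would claim the architecture's floating IP for the surviving node. The OCID is a hypothetical placeholder. Building the argument list separately from running it keeps the command easy to inspect and log.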

Recommendations

- Rclone

Use the same directory name on both cluster nodes. Pay attention to the NFS export configuration so that NFS clients can use the Rclone file system without problems. If you mount the directory through the Rclone graphical interface, you can also monitor it there.
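The following Python sketch shows one way to pair an Rclone mount with its NFS export. Several names are hypothetical: the Rclone remote `oci`, the bucket `nfs-bucket`, and the client subnet `172.10.0.0/24`. Adjust all of them to your environment. `--allow-other`, `--vfs-cache-mode`, and `--daemon` are standard `rclone mount` options. The export line sets `fsid=` explicitly because an Rclone mount is a FUSE file system. It has no block device or file-system UUID that the NFS server can use to identify it.

```python
from typing import List


def rclone_mount_command(remote: str, bucket: str, mount_point: str) -> List[str]:
    """Build an `rclone mount` call that mounts the bucket and backgrounds itself."""
    return [
        "rclone", "mount", f"{remote}:{bucket}", mount_point,
        "--allow-other",               # let users other than the one running rclone access the mount
        "--vfs-cache-mode", "writes",  # buffer files opened for writing on local disk first
        "--daemon",                    # return once mounted, keep serving in the background
    ]


def nfs_export_line(mount_point: str, client_cidr: str, fsid: int = 1) -> str:
    """Build an /etc/exports entry for the Rclone mount.

    FUSE mounts need an explicit fsid because the NFS server cannot
    derive an identifier for them on its own.
    """
    return f"{mount_point} {client_cidr}(rw,sync,no_subtree_check,fsid={fsid})"
```

`nfs_export_line("/mnt/nfs_rclone", "172.10.0.0/24")` returns `/mnt/nfs_rclone 172.10.0.0/24(rw,sync,no_subtree_check,fsid=1)`. Both nodes use the same directory name, so the same export line works on either node.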

If you don't manage the Rclone services as Linux cluster resources, we recommend starting them at server startup with a shell script in crontab.
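The crontab approach can be sketched in Python instead of shell. The script runs once at boot from an `@reboot` entry in root's crontab. It mounts the bucket first and then starts the NFS server. The script path `/usr/local/bin/start_rclone_nfs.py` and the remote path `oci:nfs-bucket` are hypothetical. The sketch also assumes that the NFS server runs as the `nfs-server` systemd service, which is the usual name on Oracle Linux 8.

```python
import subprocess
from typing import List, Sequence

# Hypothetical location of this startup script; use your own path.
SCRIPT_PATH = "/usr/local/bin/start_rclone_nfs.py"


def crontab_entry(script_path: str = SCRIPT_PATH) -> str:
    """Return a root crontab line that runs the script once at boot."""
    return f"@reboot /usr/bin/python3 {script_path}"


def startup_commands(remote_path: str, mount_point: str) -> List[List[str]]:
    """Commands to run at boot, in order."""
    return [
        # Mount the bucket first.
        ["rclone", "mount", remote_path, mount_point,
         "--allow-other", "--vfs-cache-mode", "writes", "--daemon"],
        # Start the NFS server only after the Rclone mount is in place,
        # so that it exports the mounted bucket.
        ["systemctl", "start", "nfs-server"],
    ]


def run_all(commands: Sequence[Sequence[str]]) -> None:
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(list(cmd), check=True)
```

When saved as the startup script, the file would end by calling `run_all(startup_commands("oci:nfs-bucket", "/mnt/nfs_rclone"))`. You would then add the line returned by `crontab_entry()` to root's crontab.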

- VCN

When you create a VCN, determine the number of CIDR blocks required and the size of each block based on the number of resources that you plan to attach to subnets in the VCN. Use CIDR blocks that are within the standard private IP address space.

Select CIDR blocks that don't overlap with any other network (in Oracle Cloud Infrastructure, your on-premises data center, or another cloud provider) to which you intend to set up private connections.

After you create a VCN, you can change, add, and remove its CIDR blocks.

When you design the subnets, consider your traffic flow and security requirements. Attach all the resources within a specific tier or role to the same subnet, which can serve as a security boundary.
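Before you create the VCN, you can check the CIDR rules above with a minimal Python sketch built on the standard `ipaddress` module. It flags overlapping blocks and blocks outside the standard (RFC 1918) private ranges. You supply the block lists (VCN, on-premises, other clouds); the values in the example are hypothetical.

```python
import ipaddress
from typing import Iterable, List, Tuple

# Standard private IPv4 address space (RFC 1918).
PRIVATE_RANGES = [ipaddress.ip_network(n)
                  for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def find_overlaps(cidrs: Iterable[str]) -> List[Tuple[str, str]]:
    """Return every pair of CIDR blocks that overlap."""
    nets = [ipaddress.ip_network(c) for c in cidrs]
    overlaps = []
    for i, a in enumerate(nets):
        for b in nets[i + 1:]:
            if a.overlaps(b):
                overlaps.append((str(a), str(b)))
    return overlaps


def non_private(cidrs: Iterable[str]) -> List[str]:
    """Return the IPv4 CIDR blocks that fall outside the RFC 1918 ranges."""
    return [c for c in cidrs
            if not any(ipaddress.ip_network(c).subnet_of(p) for p in PRIVATE_RANGES)]
```

For example, `find_overlaps(["10.0.0.0/16", "10.0.128.0/17", "192.168.0.0/24"])` returns `[("10.0.0.0/16", "10.0.128.0/17")]`. `non_private(["10.0.0.0/16", "100.64.0.0/10"])` returns `["100.64.0.0/10"]`.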

- Compute shapes

This architecture uses an Oracle Linux OS image on either an E3 or E4 Flex shape, with minimal resources, for the cluster nodes. If your application needs more network throughput, memory, or cores, you can choose a different shape.

- Oracle Linux cluster

After your Oracle Linux instances are provisioned, set up the OCI CLI as explained in the public documentation, and install and configure your Corosync/Pacemaker cluster along with its requirements (STONITH, quorum, resources, constraints, and so on). After configuring the cluster and the CLI, set up your VirtualIP resource. This link gives a quick example of how to set up a VirtualIP resource on Corosync/Pacemaker from the command line; you can do the same through the web UI.
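As a rough illustration of the command-line route, the following Python sketch builds the `pcs` call that creates an `ocf:heartbeat:IPaddr2` resource for the floating IP. Three values are assumptions: the resource name `VirtualIP`, the /24 netmask, and the 30-second monitor interval. The sketch also assumes a cluster managed with `pcs`. On OCI, IPaddr2 only configures the address inside the guest OS. The VNIC secondary IP still has to be moved with the OCI CLI, which is why the two are integrated.

```python
from typing import List


def virtual_ip_resource_command(ip: str,
                                netmask_bits: int = 24,
                                name: str = "VirtualIP",
                                monitor_interval: str = "30s") -> List[str]:
    """Build `pcs resource create` for an IPaddr2 floating-IP resource."""
    return [
        "pcs", "resource", "create", name, "ocf:heartbeat:IPaddr2",
        f"ip={ip}",                         # address to bring up on the active node
        f"cidr_netmask={netmask_bits}",     # prefix length of the subnet (assumed /24)
        "op", "monitor", f"interval={monitor_interval}",  # health-check frequency
    ]
```

`" ".join(virtual_ip_resource_command("172.10.0.100"))` gives `pcs resource create VirtualIP ocf:heartbeat:IPaddr2 ip=172.10.0.100 cidr_netmask=24 op monitor interval=30s`. You can run this command once on any cluster node.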

- Object Storage

To manage the data in the bucket after the files are uploaded, use Object Lifecycle Management (see Explore More) to change the storage tier of the objects, and use retention rules (see Explore More) to preserve the data.
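A lifecycle rule can move objects to a cheaper tier once they are no longer accessed frequently. The following Python sketch expresses such a rule as JSON. It assumes the rule fields `name`, `action`, `target`, `timeAmount`, `timeUnit`, `isEnabled`, and `objectNameFilter` from the Object Storage lifecycle policy API. The 30-day threshold and the rule name are hypothetical. Check the Object Lifecycle Management documentation for the exact schema before applying the rules, for example with `oci os object-lifecycle-policy put`.

```python
import json
from typing import Dict, List, Optional

# Actions assumed to be accepted by lifecycle rules that target objects.
VALID_ACTIONS = {"INFREQUENT_ACCESS", "ARCHIVE", "DELETE"}


def lifecycle_rule(name: str, action: str, days: int,
                   prefix: Optional[str] = None) -> Dict:
    """One lifecycle rule that applies `action` to objects older than `days` days."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    rule = {
        "name": name,
        "action": action,
        "target": "objects",
        "timeAmount": days,
        "timeUnit": "DAYS",
        "isEnabled": True,
    }
    if prefix:
        # Restrict the rule to object names that start with this prefix.
        rule["objectNameFilter"] = {"inclusionPrefixes": [prefix]}
    return rule


def lifecycle_items_json(rules: List[Dict]) -> str:
    """Serialize the rules as a JSON list of policy items."""
    return json.dumps(rules, indent=2)
```

For example, `lifecycle_items_json([lifecycle_rule("archive-after-30-days", "ARCHIVE", 30)])` produces a one-rule policy that archives objects 30 days after they were last modified.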

- Corosync and Pacemaker

Use as many variables as possible when integrating Corosync and Pacemaker with the OCI CLI, so that the script stays static and does not depend on the server it runs on.
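One way to keep the script identical on both nodes is to discover node-specific values at run time instead of hardcoding them. This sketch assumes the OCI instance metadata service (IMDS v2) endpoint `http://169.254.169.254/opc/v2/vnics/`. That endpoint requires the `Authorization: Bearer Oracle` header and returns a JSON list whose entries include `vnicId` and `privateIp`. The sketch also assumes that the first entry is the primary VNIC. The floating IP comes from a hypothetical `FLOATING_IP` environment variable set to the same value on both nodes.

```python
import json
import os
import urllib.request
from typing import Dict, Mapping, Optional

# OCI instance metadata service (IMDS v2) endpoint listing this instance's VNICs.
IMDS_VNICS_URL = "http://169.254.169.254/opc/v2/vnics/"


def fetch_vnics_json(timeout: float = 2.0) -> str:
    """Read the VNIC list from the metadata service (works only on an OCI instance)."""
    req = urllib.request.Request(IMDS_VNICS_URL,
                                 headers={"Authorization": "Bearer Oracle"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def node_settings(vnics_json: str,
                  env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Merge discovered node-specific values with cluster-wide variables."""
    if env is None:
        env = os.environ
    # Assumes the first entry in the list is the primary VNIC.
    primary = json.loads(vnics_json)[0]
    return {
        "vnic_id": primary["vnicId"],    # differs per node, so it is never hardcoded
        "node_ip": primary["privateIp"],
        # Hypothetical variable name; set the same value on both nodes.
        "floating_ip": env["FLOATING_IP"],
    }
```

On a cluster node, `node_settings(fetch_vnics_json())` returns the values needed for the OCI CLI call. The same file can then be deployed unchanged to every node.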

Explore More

Learn more about disaster recovery.

Review these additional resources:

- OCI

- Using Replication

- Replicating a Volume

- Using Object Lifecycle Management

- Using Retention Rules to Preserve Data

- Best practices framework for Oracle Cloud Infrastructure

- Overview of Object Storage

- Setting Up High Availability Clustering

- Automatic Virtual IP Failover on Oracle Cloud Infrastructure Looks Hard, But it isn't

- Restoring a Backup to a New Volume

- Other