Chapter 3 Understanding Storage

- 3.1 What is Storage Used for in Oracle VM?

- 3.2 What Types of Storage Can be Used?

- 3.3 How is Storage Created within Oracle VM?

- 3.4 How do Different Storage Types Connect?

- 3.5 What is Multipathing?

- 3.6 What are Uniform and Non-uniform Exports?

- 3.7 What are Access Groups?

- 3.8 How is Storage Used for Server Pool Clustering?

- 3.9 Where are Virtual Machine Resources Located?

- 3.10 Are there Guidelines for Configuring Storage?

- 3.11 What does a Refresh Operation do?

Since storage is such a fundamental component within an Oracle VM deployment, and many of the concepts surrounding it are not discussed elsewhere in the Oracle VM documentation, this chapter provides a detailed overview of storage infrastructure and how it is used within Oracle VM. Many of the concepts presented here are designed to assist you when configuring storage through any of the interfaces provided by Oracle VM Manager, including the web-based user interface and the Oracle VM Manager Command Line Interface. A very brief overview of storage components is provided in Section 2.6.1, “Storage”.

To get a more thorough overview of how storage is used within Oracle VM and the supported storage types, it is recommended that you refer to Section 3.1, “What is Storage Used for in Oracle VM?” and Section 3.2, “What Types of Storage Can be Used?”.

The particular way in which Oracle VM approaches storage is through plug-ins: Oracle has made storage configuration and integration as flexible and modular as possible by creating a Storage Connect plug-in for each different category and type of storage. These plug-ins are discussed in further detail in Section 3.4, “How do Different Storage Types Connect?”.

For information on managing and working with the contents of a storage repository, such as virtual machine templates, ISO files, virtual appliances, and so on, see Section 3.9, “Where are Virtual Machine Resources Located?”.

Once you have a good understanding of the Oracle VM storage concepts, please take the time to read Section 3.10, “Are there Guidelines for Configuring Storage?” before you start planning your storage infrastructure. This can help you to ensure that your storage configuration is well planned and that you avoid common pitfalls.

3.1 What is Storage Used for in Oracle VM?

Storage in Oracle VM is used for a variety of purposes. These can be grouped into two main categories:

-

Oracle VM Server clustering resources.

-

Oracle VM virtual machine resources (such as virtual appliances, templates, ISOs, disk images and configuration data).

In the first instance, it is absolutely essential that any Oracle VM Server clustering resources used within a deployment are located on shared storage. Shared storage is any storage mechanism that can be made available to all of the Oracle VM Server instances within a server pool. This is a requirement because data relating to the health and availability of components within a pool must be shared across all members of the pool. It is possible to forgo the clustering facilities provided by Oracle VM and thereby avoid any requirement for shared storage, but this is an uncommon configuration. Given the range of shared storage technologies that Oracle VM supports, it is highly recommended that you use some form of shared storage for at least this purpose.

In general, it is highly recommended that Oracle VM virtual machine resources also make use of shared storage. Shared storage makes it easy to migrate or restart virtual machines between different servers within a pool. You can use local storage, such as the physical disks attached to a particular Oracle VM Server, to provision virtual machine resources, but limitations apply if you require functionality such as live migration. Furthermore, choosing shared storage significantly reduces the overhead of managing storage within the data center for your Oracle VM deployment.

The actual provisioning of virtual machine resources is usually achieved by creating a storage repository. In Oracle VM terms, a repository is a file system that is logically structured in a particular way so that different resources can be located easily and made available for the purpose of creating, cloning or running virtual machines. If the repository is located on an NFS share, the directory structure required for the repository is created on the existing file system exposed through the share. On shared physical disks exposed through SAN hardware via iSCSI or Fibre Channel, the disk or LUN is formatted with an OCFS2 file system, and the directory structure is created on top of this. OCFS2 is used in this situation because the file system is accessed simultaneously by multiple Oracle VM Servers, something which most file systems cannot handle, but which OCFS2 is explicitly designed to handle. Since OCFS2 is not available on SPARC, iSCSI and Fibre Channel disks cannot be used to host a repository accessible to SPARC-based Oracle VM Servers. For SPARC-based servers you should always use an NFS server share to host a repository.

A repository can also be created on a local storage disk. In general, this means that the data within such a repository is only available to virtual machines running on the Oracle VM Server to which the disk is attached. However, where the Oracle VM Servers in the server pool are x86-based, the file system is formatted using OCFS2 and a facility has been provided to enable live migration of virtual machines running off local storage between the Oracle VM Servers within the pool.

For SPARC-based servers, ZFS and SAS disks are treated as local storage disks and live migration of virtual machines running on these disks is not facilitated.

3.2 What Types of Storage Can be Used?

Oracle VM is designed to allow you to use a wide variety of storage types so you can adapt your configuration to your needs. Whether you have a limited hardware setup or a full rack of servers, whether you perform an installation for testing and temporary internal use or design a production environment that requires high availability in every area, Oracle VM offers support for a suitable storage solution.

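Making use of both generic and vendor-specific Storage Connect plug-ins, Oracle VM allows you to use the following types of storage:

- Section 3.2.1, “Local Storage”

- Section 3.2.2, “Shared Network Attached Storage (NFS)”

- Section 3.2.3, “iSCSI Storage Attached Network”

- Section 3.2.4, “Fibre Channel Storage Attached Network”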

Oracle VM does not support mixing protocols to access the same storage device. Therefore, if you are using Fibre Channel to access a storage device, you must not then access the same device using the iSCSI protocol.

Usually the file system of a disk that is used as storage for an Oracle VM Server is formatted using OCFS2 (Oracle Cluster File System). The only exception to this is NFS, since by nature this file system is already designed to be accessed by multiple systems at once. The use of OCFS2 ensures that the file system can properly handle simultaneous access by multiple systems. OCFS2 is developed by Oracle and is integrated within the mainstream Linux kernel, ensuring excellent performance and full integration within an Oracle VM deployment. OCFS2 is also a fundamental component used to implement server pool clustering. This is discussed in more detail in Section 3.8, “How is Storage Used for Server Pool Clustering?”. Since OCFS2 is not available on SPARC, only NFS can be used to support SPARC-based server pool clustering or shared repository hosting.

To enable HA or live migration, you must make sure all Oracle VM Servers have access to the same storage resources. Specifically for live migration and virtual machine HA restarts, the Oracle VM Servers also must be part of the same server pool. Also note that clustered server pools require access to a shared file system where server pool information is stored and retrieved, for example in case of failure and subsequent server role changes. In x86 environments, the server pool file system can either be on an NFS share or on a LUN of a SAN server. In SPARC environments, the server pool file system can only reside on an NFS share.

3.2.1 Local Storage

Local storage consists of hard disks installed locally in your Oracle VM Server. For SPARC, this includes SAS disks and ZFS volumes.

In a default installation, Oracle VM Server detects any unused space on the installation disk and re-partitions the disk to make this space available for local storage. As long as no partition and data are present, the device is detected as a raw disk. You can use the local disks to provision logical storage volumes as disks for virtual machines or install a storage repository.

In Oracle VM Release 3.4.2, support was added for NVM Express (NVMe) devices. If you use an NVMe device as local storage in an Oracle VM deployment, Oracle VM Server detects the device and presents it through Oracle VM Manager.

- Oracle VM Server for x86

  - To use the entire NVMe device as a storage repository or for a single virtual machine physical disk, you should not partition the NVMe device.

  - To provision the NVMe device into multiple physical disks, you should partition it on the Oracle VM Server where the device is installed. If an NVMe device is partitioned then Oracle VM Manager displays each partition as a physical disk, not the entire device.

    You must partition the NVMe device outside of the Oracle VM environment. Oracle VM Manager does not provide any facility for partitioning NVMe devices.

  - NVMe devices can be discovered if no partitions exist on the device.

  - If Oracle VM Server is installed on an NVMe device, then Oracle VM Server does not discover any other partitions on that NVMe device.

- Oracle VM Server for SPARC

  - Oracle VM Manager does not display individual partitions on an NVMe device but only a single device.

    Oracle recommends that you create a storage repository on the NVMe device if you are using Oracle VM Server for SPARC. You can then create as many virtual disks as required in the storage repository. However, if you plan to create logical storage volumes for virtual machine disks, you must manually create ZFS volumes on the NVMe device. See Creating ZFS Volumes on NVMe Devices in the Oracle VM Administrator's Guide.
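Because the way an x86 NVMe device is presented depends on whether it carries partitions, it can help to check its partition table on the Oracle VM Server before discovery. The following is a minimal sketch, assuming util-linux `lsblk` is available; the function names are hypothetical and the device path in the usage line is only a placeholder. It inspects partitions and does not create them, and it does not account for an NVMe device that also holds the Oracle VM Server installation.

```shell
# Hypothetical helpers: inspect (not create) partitions on an NVMe device.
# Input is the output of: lsblk -n -r -o NAME,TYPE <device>

# Print the number of rows whose TYPE column is "part".
count_partitions() {
  awk '$2 == "part" { n++ } END { print n + 0 }'
}

# Summarize how the device is expected to be presented on x86, following the
# rules listed above (on SPARC a single device is always shown).
describe_nvme_layout() {
  local parts
  parts=$(count_partitions)
  if [ "$parts" -eq 0 ]; then
    echo "no partitions: whole device presented as one physical disk"
  else
    echo "$parts partition(s): each presented as a separate physical disk"
  fi
}
```

For example: `lsblk -n -r -o NAME,TYPE /dev/nvme0n1 | describe_nvme_layout`.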

Some important points about local storage are as follows:

-

Local storage can never be used for a server pool file system.

-

Local storage is fairly easy to set up because no special hardware for the disk subsystem is required. Since the virtualization overhead in this setup is limited, and disk access is internal within one physical server, local storage offers reasonably high performance.

-

In an Oracle VM environment, sharing a local physical disk between virtual machines is possible but not recommended.

-

If you place a storage repository on a local disk in an x86 environment, an OCFS2 file system is created. If you intend to create a storage repository on a local disk, the disk must not contain any data or meta-data. If it does, it is necessary to clean the disk manually before attempting to create a storage repository on it. This can be done using the dd command, for example:

# dd if=/dev/zero of=/dev/disk bs=1M

Where disk is the device name of the disk where you want to create the repository.

Warning: This action is destructive and data on the device where you perform this action may be rendered irretrievable.

-

In SPARC environments, a storage repository is created using the ZFS file system. If you use a local physical disk for your storage repository, a ZFS pool and file system are automatically created when you create the repository. If you use a ZFS volume to host the repository, the volume is replaced by a ZFS file system and the repository is created within the file system.

-

Oracle VM Release 3.4 uses features built into the OCFS2 file system on x86 platforms that enable you to perform live migrations of running virtual machines that have virtual disks on local storage. These live migrations make it possible to achieve nearly uninterrupted uptime for virtual machines.

However, if the virtual machines are stopped, Oracle VM Manager does not allow you to move virtual machines directly from one local storage repository to another local repository if the virtual machines use virtual disks. In this case, you must use an intermediate shared repository to move the virtual machines and the virtual disks from one Oracle VM Server to another.

For more information on live migrations and restrictions on moving virtual machines between servers with local storage, see Section 7.7, “How Can a Virtual Machine be Moved or Migrated?”.
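Because the disk-cleaning dd command described in the list above is irreversible, some administrators wrap it in a dry-run guard. The following is a minimal sketch under stated assumptions: the function name `prepare_repo_disk` and its `--execute` flag are hypothetical, and the only checks performed are that the argument is a block device and that neither it nor a partition whose name starts with it appears in /proc/mounts. It does not detect LVM, swap, or multipath membership, so verify those separately.

```shell
# Hypothetical wrapper around the dd command used to clean a local disk.
# Prints the command by default (dry run); runs it only with --execute.
# Does NOT detect LVM, swap, or multipath use of the device.
prepare_repo_disk() {
  local dev="$1" mode="${2:-}"
  if [ -z "$dev" ] || [ ! -b "$dev" ]; then
    echo "error: '$dev' is not a block device" >&2
    return 1
  fi
  # Refuse if the device, or any partition whose name starts with it, is mounted.
  if grep -q "^$dev" /proc/mounts; then
    echo "error: '$dev' (or one of its partitions) is mounted" >&2
    return 1
  fi
  if [ "$mode" = "--execute" ]; then
    # dd stops with "No space left on device" once it reaches the end of the disk.
    dd if=/dev/zero of="$dev" bs=1M
  else
    echo "dry run: dd if=/dev/zero of=$dev bs=1M"
  fi
}
```

Running `prepare_repo_disk /dev/sdb` only prints the command; `prepare_repo_disk /dev/sdb --execute` actually zeroes the disk.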

The configuration where local storage is most useful is where you have an unclustered server pool that contains a very limited number of Oracle VM Servers. By creating a storage repository (see Section 4.4, “How is a Repository Created?”) on local storage you can set up an Oracle VM virtualized environment quickly and easily on one or two Oracle VM Servers: all your resources, virtual machines and their disks are stored locally.

Nonetheless, there are disadvantages to using local storage in a production environment, such as the following:

-

Data stored across disks located on a large number of different systems adds complexity to your backup strategy and requires more overhead in terms of data center management.

-

Local storage lacks flexibility in a clustered environment with multiple Oracle VM Servers in a server pool. Other resources, such as templates and ISOs, that reside on local storage cannot be shared between Oracle VM Servers, even if they are within the same server pool.

In general, it is recommended to use attached storage with comprehensive redundancy capabilities over local storage for critical data and for running virtual machines that require high availability with robust data loss prevention.

3.2.1.1 SAS Disks

SAS (Serial Attached SCSI) disks can be used as local storage within an Oracle VM deployment. On SPARC systems, SAS disk support is limited to SAS controllers that use the mpt_sas driver. This means that internal SAS disks are supported on T3 and later systems, but not on T2 and T2+ systems.

Only local SAS storage is supported with Oracle VM Manager. Oracle VM does not support shared SAS storage (SAS SAN), meaning SAS disks that use expanders to enable a SAN-like behavior can only be accessed as local storage devices. Oracle VM Manager recognizes local SAS disks during the discovery process and adds these as Local File Systems. SAS SAN disks are ignored during the discovery process and are not accessible for use by Oracle VM Manager.

On Oracle VM Servers that are running on x86 hardware you can determine whether SAS devices are shared or local by running the following command:

# ls -l /sys/class/sas_end_device

Local SAS:

lrwxrwxrwx 1 root root 0 Dec 18 22:07 end_device-0:2 ->\
../../devices/pci0000:00/0000:00:01.0/0000:0c:00.0/host0/port-0:2/end_device-0:2/sas_end_device/end_device-0:2
lrwxrwxrwx 1 root root 0 Dec 18 22:07 end_device-0:3 ->\
../../devices/pci0000:00/0000:00:01.0/0000:0c:00.0/host0/port-0:3/end_device-0:3/sas_end_device/end_device-0:3

SAS SAN:

lrwxrwxrwx 1 root root 0 Dec 18 22:07 end_device-0:0:0 -> \
../../devices/pci0000:00/0000:00:01.0/0000:0c:00.0/host0/port-0:0/expander-0:0/port-0:0:0/end_device-0:0:0/sas_end_device/end_device-0:0:0
lrwxrwxrwx 1 root root 0 Dec 18 22:07 end_device-0:1:0 -> \
../../devices/pci0000:00/0000:00:01.0/0000:0c:00.0/host0/port-0:1/expander-0:1/port-0:1:0/end_device-0:1:0/sas_end_device/end_device-0:1:0

For SAS SAN storage, note the inclusion of the expander within the device entries.
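The expander check described above can be automated by reading each link in /sys/class/sas_end_device directly instead of eyeballing the `ls -l` output. The following is a minimal sketch; the function name is hypothetical, and it assumes that the presence of an `expander-` path component is the only distinguishing feature, exactly as in the sample output.

```shell
# Hypothetical helper: label each SAS end device as "local" or "san" by
# checking whether its sysfs link target passes through an expander.
classify_sas_end_devices() {
  local dir="${1:-/sys/class/sas_end_device}" entry target
  for entry in "$dir"/*; do
    [ -L "$entry" ] || continue   # skips the unexpanded glob when dir is empty
    target=$(readlink "$entry")
    case "$target" in
      */expander-*) echo "$(basename "$entry") san" ;;
      *)            echo "$(basename "$entry") local" ;;
    esac
  done
}
```

Run without arguments on an x86 Oracle VM Server, it prints one line per end device, such as `end_device-0:2 local`.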

3.2.2 Shared Network Attached Storage (NFS)

Network Attached Storage – typically NFS – is a commonly used file-based storage system that is very suitable for the installation of Oracle VM storage repositories. Storage repositories contain various categories of resources such as templates, virtual disk images, ISO files and virtual machine configuration files, which are all stored as files in the directory structure on the remotely located, attached file system. Since most of these resources are rarely written to but are read frequently, NFS is ideal for storing these types of resources.

With Oracle VM you discover NFS storage via the server IP or host name and typically present storage to all the servers in a server pool to allow them to share the same resources. This, along with clustering, helps to enable high availability of your environment: virtual machines can be easily migrated between host servers for the purpose of load balancing or protecting important virtual machines from going off-line due to hardware failure.

NFS storage is exposed to Oracle VM Servers in the form of shares on the NFS server which are mounted onto the Oracle VM Server's file system. Since mounting an NFS share can be done on any server in the network segment to which NFS is exposed, it is possible not only to share NFS storage between servers of the same pool but also across different server pools.

In terms of performance, NFS is slower for virtual disk I/O compared to a logical volume or a raw disk. This is due mostly to its file-based nature. For better disk performance you should consider using block-based storage, which is supported in Oracle VM in the form of iSCSI or Fibre Channel SANs.

NFS can also be used to store server pool file systems for clustered server pools. This is the only shared storage facility that is supported for this purpose for SPARC-based server pools. In x86 environments, alternate shared storage such as iSCSI or Fibre Channel is generally preferred for this purpose for performance reasons.

Unsupported NFS Server Configurations

Oracle VM does not support the following configurations as they result in errors with storage repositories:

- Multiple IP addresses or hostnames for one NFS server

  If you assign multiple IP addresses or hostnames to the same NFS server, Oracle VM Manager treats each IP address or hostname as a separate NFS server.

- DNS round-robin

  If you configure your DNS so that a single hostname resolves to multiple IP addresses (a round-robin configuration), the storage repository is mounted repeatedly on the Oracle VM Server file system.

- Nested NFS exports

  If your NFS file system has other NFS file systems residing inside its directory structure (nested NFS exports), exporting the top-level directory from the NFS server results in an error where Oracle VM Server cannot access the storage repository. In this scenario, the OVMRU_002063E No utility server message is returned for certain jobs and written to AdminServer.log. For more information about resolving errors with nested NFS exports, see Doc ID 2109556.1 in the Oracle Support Knowledge Base.
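Before presenting an NFS server to Oracle VM, you can screen its export list for the nested-export case described above. The following is a minimal heuristic sketch, not an Oracle tool: the function name is hypothetical, it only compares export paths as text, and it assumes input in the format of `showmount -e` output or a plain list of paths. It cannot see nested file systems that are mounted on the server but not listed as exports.

```shell
# Hypothetical heuristic: given export paths (first field of each line),
# print "parent -> child" for every export that lies inside another export.
# Lines that do not start with "/" (such as showmount's header) are ignored.
find_nested_exports() {
  sort -u | awk '
    $1 ~ /^\// { paths[++n] = $1 }
    END {
      for (i = 1; i <= n; i++) {
        prefix = (paths[i] == "/") ? "/" : (paths[i] "/")
        for (j = 1; j <= n; j++)
          if (i != j && index(paths[j], prefix) == 1)
            print paths[i] " -> " paths[j]
      }
    }'
}
```

For example: `showmount -e nfs-server.example.com | find_nested_exports`, where the hostname is a placeholder. Empty output means no nesting was detected among the listed exports.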

3.2.3 iSCSI Storage Attached Network

With Internet SCSI, or iSCSI, you can connect storage entities to client machines, making the disks behave as if they are locally attached disks. iSCSI enables this connectivity by transferring SCSI commands over existing IP networks between what is called an initiator (the client) and a target (the storage provider).

To establish a link with iSCSI SANs, all Oracle VM Servers can use configured network interfaces as iSCSI initiators. It is the user's responsibility to:

-

Configure the disk volumes (iSCSI LUNs) offered by the storage servers.

-

Discover the iSCSI storage through Oracle VM Manager. When discovered, unmanaged iSCSI and Fibre Channel storage is not allocated a name in Oracle VM Manager. Use the ID allocated to the storage to reference unmanaged storage devices in the Oracle VM Manager Web Interface or the Oracle VM Manager Command Line Interface.

-

Set up access groups, which are groups of iSCSI initiators, through Oracle VM Manager, in order to determine which LUNs are available to which Oracle VM Servers.

Performance-wise, an iSCSI SAN is better than file-based storage like NFS and it is often comparable to direct local disk access. Because iSCSI storage is attached from a remote server, it is perfectly suited for an x86-based clustered server pool configuration where high availability of storage and the possibility to live migrate virtual machines are important factors.

In SPARC-based environments, iSCSI LUNs are treated as local disks. Since OCFS2 is not supported under SPARC, these disks cannot be used to host a server pool cluster file system and they cannot be used to host a repository. Typically, if iSCSI is used in a SPARC environment, the LUNs are made available directly to virtual machines for storage.

Provisioning of iSCSI storage can be done through open source target creation software at no additional cost, with dedicated high-end hardware, or with anything in between. The generic iSCSI Oracle VM Storage Connect plug-in allows Oracle VM to use virtually all iSCSI storage providers. In addition, vendor-specific Oracle VM Storage Connect plug-ins exist for certain types of dedicated iSCSI storage hardware, allowing Oracle VM Manager to access additional interactive functionality otherwise only available through the management software of the storage provider. Examples are creating and deleting LUNs, extending existing LUNs and so on. Check with your storage hardware supplier whether an Oracle VM Storage Connect plug-in is available. For installation and usage instructions, consult your supplier's plug-in documentation.

Oracle VM is designed to take advantage of Oracle VM Storage Connect plug-ins to perform LUN management tasks. On a storage array, do not unmap a LUN and then remap it to a different LUN ID without rebooting the affected Oracle VM Servers. In general, remapping LUNs is risky because it can cause data corruption, since the targets have been switched outside of the affected Oracle VM Servers. If you attempt to remap a LUN to a different ID, affected Oracle VM Servers are no longer able to access the disk until they are rebooted, and the following error may appear in /var/log/messages:

Warning! Received an indication that the LUN assignments on this target have changed. The Linux SCSI layer does not automatically remap LUN assignments.
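If you suspect that a LUN has been remapped, you can search the system log on each affected Oracle VM Server for the message shown above. The following is a minimal sketch; the function name is hypothetical, and it assumes the log is at /var/log/messages unless another path is given. A match only indicates that the server needs a reboot before it can access the disk again; the function does not fix anything.

```shell
# Hypothetical helper: print any log lines reporting changed LUN assignments.
# Exit status: 0 if a match was found, 1 if none, 2 if the log cannot be read.
check_lun_remap_warning() {
  local log="${1:-/var/log/messages}"
  grep -F "LUN assignments on this target have changed" "$log"
}
```

Running `check_lun_remap_warning && echo "reboot required"` on each server gives a quick yes/no answer.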

3.2.4 Fibre Channel Storage Attached Network

Functionally, a Fibre Channel SAN is hardly different from an iSCSI SAN. Fibre Channel is actually an older technology and uses dedicated hardware instead of existing IP networks: special controllers on the SAN hardware, host bus adapters (HBAs) on the client machines, and special Fibre Channel cables and switches to interconnect the components.

Like iSCSI, a Fibre Channel SAN transfers SCSI commands between initiators and targets establishing a connectivity that is almost identical to direct disk access. However, whereas iSCSI uses TCP/IP, a Fibre Channel SAN uses Fibre Channel Protocol (FCP). The same concepts from the iSCSI SAN, as described in Section 3.2.3, “iSCSI Storage Attached Network”, apply equally to the Fibre Channel SAN. Again, generic and vendor-specific Storage Connect plug-ins exist. Your storage hardware supplier can provide proper documentation with the Oracle VM Storage Connect plug-in.

3.3 How is Storage Created within Oracle VM?

To add storage to the Oracle VM environment, you must first discover it. The discovery process connects to the storage and discovers the file systems or disks available, and configures them for use with Oracle VM.

Discovering a File Server

In Oracle VM, the term file server is used to indicate file-based storage made available to the environment from another physical server, as opposed to local storage. Describing the technology used to expose file systems, NFS shares and so on, is beyond the scope of this guide. The procedure linked to below explains how you can bring the exposed file-based storage into Oracle VM, prepare it for the installation of a storage repository, and configure the file server and discovered file systems.

Before you discover a file server, make sure that your storage server exposes a writable file system to the storage network of your server pool.

To discover a file server, use Discover File Servers on the Storage tab to display the Discover a File Server dialog box, which is used to discover the external storage mount points. For the full procedure, see Discover File Server in the Oracle VM Manager User's Guide.


Discovering a SAN Server

Make sure that your storage array server exposes raw disks (Fibre Channel SAN volumes, iSCSI targets and LUNs) to the storage network of your server pool.

To discover a storage array (SAN) server, use Discover SAN Server on the Storage tab to display the Discover SAN Server dialog box, which is used to discover the external storage elements. For the full procedure, see Discover SAN Server in the Oracle VM Manager User's Guide.


After discovering a file server and its file systems, or a storage array, it is ready to be used either for storage repositories or as server pool file systems. A server pool file system is selected during the creation of the server pool (see Section 6.8, “How are Server Pools Created?”); to create storage repositories on your file systems, see Section 4.4, “How is a Repository Created?”.

3.4 How do Different Storage Types Connect?

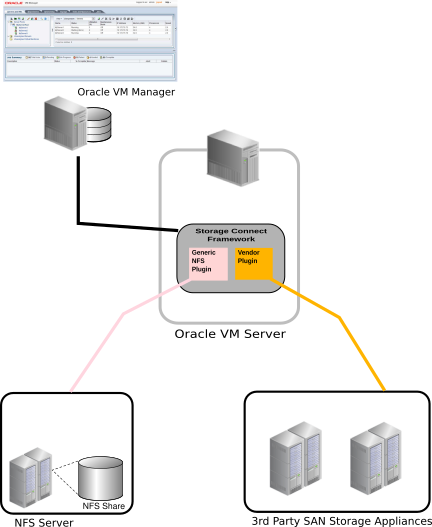

Oracle VM Manager communicates with all storage through a set of plug-ins, which are part of the Storage Connect framework. These plug-ins are not actually run from Oracle VM Manager but rather live on some or all of the Oracle VM Servers.

You can see these plug-in files in the local file system of an Oracle VM Server in the /opt/storage-connect/ directory. In the Oracle VM Manager Web Interface, you select an available plug-in when creating and configuring storage elements for use in your environment.

The Oracle VM Storage Connect framework provides a storage discovery and provisioning API that enables the provisioning and management of storage platforms directly from within Oracle VM Manager. The abstraction provided by the Storage Connect framework allows administrators to perform provisioning operations without the need to know the specific behavior of any storage platforms that are being used within the Oracle VM infrastructure. The Storage Connect API allows third party storage vendors to develop their own Oracle VM Storage Connect plug-ins that enable their storage appliances to interface with the Storage Connect framework.

Storage elements are logically divided into File Servers and SAN Servers. This distinction refers to the difference between file-based storage and block-based storage, or raw disks. Both types of storage are handled using Oracle VM Storage Connect plug-ins. The Oracle VM Storage Connect framework is able to present the capabilities of these two different types of storage facilities in a similar way, and a user would need to have only basic knowledge of storage to be able to manage either of them.

Oracle VM Storage Connect plug-ins are split up according to the functionality they offer: there are generic plug-ins and non-generic plug-ins, also referred to as vendor-specific plug-ins. Generic plug-ins offer a limited set of standard storage operations on virtually all storage hardware, such as discovering and operating on existing storage resources. We categorize these operations as 'passive' in the sense that they do not interact with the storage management but simply detect the available storage architecture and allow it to be used in the Oracle VM environment.

Vendor-specific plug-ins include a much larger set of operations, which also includes direct, active interventions on the storage hardware: clone, create LUNs, resize, and so on. To execute generic storage plug-in operations, only an access host or Fibre Channel connectivity is required (for iSCSI: typically a host name or IP address with a port number). The non-generic plug-in operations require an additional admin host, with optional administrative user name and password, granting Oracle VM Servers, with the appropriate plug-in installed, direct access to the configuration of the storage hardware.

The following generic plug-ins are included with Oracle VM Server and work with Oracle VM Manager:

-

Oracle Generic NFS Plug-in.

-

Oracle Generic SCSI Plug-in.

To install vendor-specific plug-ins, see Installing Oracle VM Storage Connect plug-ins in the Oracle VM Administrator's Guide.

3.5 What is Multipathing?

Multipathing is the technique of creating more than one physical path between the server and its storage devices. It improves fault tolerance and can enhance performance. Oracle VM supports multipath I/O out of the box: Oracle VM Servers are installed with multipathing enabled because it is a requirement for SAN disks to be discovered by Oracle VM Manager.

Any system disks (disks that contain / or /boot) are blocked by Oracle VM Manager and are not available for use in an Oracle VM environment.

Multipath configuration information is stored in /etc/multipath.conf on each Oracle VM Server and contains specific settings for Oracle VM along with an extensive set of configuration details for commonly used SAN hardware. In most cases the user should not need to modify this file and is advised not to. Examining the contents of the file may be useful to better understand how it works in Oracle VM and what may need to be configured if your SAN is not using multipathing and your LUNs are not appearing.
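When examining /etc/multipath.conf as suggested above, it can be easier to read the active settings with full-line comments and blank lines stripped out. The following is a minimal read-only sketch; the function name is hypothetical, and it assumes comments are lines starting with `#`. The standard `multipath -ll` command is a separate way to see the multipath topology currently in use.

```shell
# Hypothetical helper: print only the active lines of a multipath
# configuration file, dropping blank lines and lines starting with '#'.
# Read-only: the file itself is never modified.
show_multipath_settings() {
  local conf="${1:-/etc/multipath.conf}"
  grep -Ev '^[[:space:]]*(#|$)' "$conf"
}
```

Running `show_multipath_settings | less` on an Oracle VM Server pages through the effective configuration.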

More detail on how multipathing is configured is covered in Enabling Multipath I/O Support in the Oracle VM Administrator's Guide.

3.6 What are Uniform and Non-uniform Exports?

If all servers within a deployment have the same access to file systems exposed by the filer, Oracle VM Manager understands these exports to be uniform. In some cases, an NFS file server may be configured to export mount points with various access restrictions. In the case where some Oracle VM Servers in a deployment are not able to access exports that are available to others, Oracle VM Manager understands these exports to be non-uniform.

Since Oracle VM Manager never connects directly to any storage, but relies on Oracle VM Servers to handle the administration and the discovery of file systems exported by the NFS file server, Oracle VM Manager requires that you designate an Oracle VM Server as an administrative server. In the case of uniform exports, Oracle VM Servers designated as administrative servers may be used for the purpose of performing file system refreshes. However, in the case where exports are non-uniform, an Oracle VM Server must be designated for each unique view of the file server. These servers are called 'refresh servers' and should only be configured when an NFS file server is configured to have non-uniform exports. If you attempt to configure refresh servers for an NFS file server with uniform exports, the operation is prevented and an error message is returned. Equally, it is not possible to change the export type from non-uniform to uniform if there are any refresh servers defined for the file server. Instead, you must remove all refresh servers from the configuration for the file server before attempting to change the export type. This design helps to ensure that a configuration behaves optimally for uniform exports, while ensuring that all file systems are refreshed for non-uniform exports.

During a file system refresh for an NFS file server with uniform exports, one Oracle VM Server designated as an administrative server is selected to perform the refresh. With non-uniform exports, however, the file system refresh is performed by every Oracle VM Server designated as a refresh server, and Oracle VM Manager uses the information reported by each server to construct its view of all of the available file systems. For this reason, file system refreshes on NFS file servers configured with non-uniform exports may take longer to complete.

Non-uniform exports are not intended for servers within the same server pool. All of the servers that belong to a server pool that requires access to file systems on an NFS file server with non-uniform exports must be added to an access group. See Access Groups Perspective in the Oracle VM Manager User's Guide for more information on this.

If you need to refresh file systems for an NFS file server that has non-uniform exports, it is important to understand that for the operation to complete, each of the refresh servers must perform its own file system refresh, since each server has access to a different set of exports. If any of the refresh servers are unavailable, the job cannot complete, since not all exports can be refreshed. If you are faced with this situation, you may need to edit the NFS file server to remove a refresh server that is out of commission, and add an alternate that has access to the same set of file server exports.
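The difference between refreshing uniform and non-uniform exports, including why a single unavailable refresh server blocks the job, can be sketched in Python. This is an illustrative model only: the function names are hypothetical, and choosing the first online administrative server for uniform exports is an assumption, since the selection policy is not documented here.

```python
class RefreshError(Exception):
    """Raised when a file system refresh job cannot complete."""


def servers_to_refresh(uniform: bool, admin_servers: list[str],
                       refresh_servers: list[str], online: set[str]) -> list[str]:
    """Return the Oracle VM Servers that must run a file system refresh."""
    if uniform:
        # Any one administrative server sees every export; pick the first online one.
        for server in admin_servers:
            if server in online:
                return [server]
        raise RefreshError("no administrative server is online")
    # Non-uniform: every refresh server sees a different set of exports,
    # so all of them must take part or some exports go unrefreshed.
    offline = [s for s in refresh_servers if s not in online]
    if offline:
        raise RefreshError(f"refresh servers offline: {offline}")
    return list(refresh_servers)


def combined_view(reports: dict[str, set[str]]) -> set[str]:
    """Merge the file systems reported by each server into a single view."""
    view: set[str] = set()
    for file_systems in reports.values():
        view |= file_systems
    return view
```

Replacing an out-of-commission refresh server with an alternate that sees the same exports, as suggested above, corresponds to editing `refresh_servers` so that every entry is in `online` again.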

3.7 What are Access Groups?

Access groups provide a means to arrange and restrict access to storage to a limited set of servers. In Oracle VM there are two types of access groups that ultimately provide very similar functionality:

-

File System Access Groups define which Oracle VM Servers have access to a particular file server export or file system. This is commonly used in environments where a file server has non-uniform exports, since it is important to be able to determine which Oracle VM Servers are able to refresh the file system. This is discussed in more detail in Section 3.6, “What are Uniform and Non-uniform Exports?”.

-

SAN Access Groups define which storage initiators can be used to access physical disks exposed using some form of SAN storage, such as iSCSI or Fibre Channel. SAN access group functionality is defined by the Oracle VM Storage Connect plug-in that you are using. The generic iSCSI plug-in creates a single access group to which all available storage initiators are added at the time of discovery. Other plug-ins provide more granular control allowing you to create multiple access groups that limit access to particular physical disks according to your requirements.

In some environments you may have mixed SAN storage, where both iSCSI and Fibre Channel types are available. This can cause confusion when configuring access groups, since the storage initiators in an access group must match the type of storage that the access group is configured for. Oracle VM Manager cannot filter the storage initiators available on each Oracle VM Server by type, because access group contents are defined by the Oracle VM Storage Connect plug-in manufacturer. Therefore, pay close attention to the storage initiators that you add to an access group in an environment that supports more than one storage type. In most cases, if an access group contains storage initiators of a different type from the storage array, the Oracle VM Storage Connect plug-in generates an error.
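Because Oracle VM Manager cannot filter initiators by type, a pre-check before building an access group may help. The Python sketch below is a heuristic only, assuming the common naming formats: iSCSI initiators named with IQN ("iqn.") or EUI ("eui.") names, and Fibre Channel initiators identified by a 64-bit WWPN written as 16 hex digits, optionally colon-separated. It does not call any Oracle VM Storage Connect plug-in, and the function names are hypothetical.

```python
import re

# Heuristic: a WWPN is 8 bytes of hex, optionally "0x"-prefixed or colon-separated.
_WWPN = re.compile(r"^(?:0x)?[0-9a-f]{2}(?::?[0-9a-f]{2}){7}$", re.IGNORECASE)


def initiator_type(name: str) -> str:
    """Guess the storage type of an initiator from its name format."""
    if name.lower().startswith(("iqn.", "eui.")):
        return "iscsi"
    if _WWPN.match(name):
        return "fc"
    return "unknown"


def mismatched_initiators(storage_type: str, initiators: list[str]) -> list[str]:
    """Return initiators whose apparent type differs from the storage array type."""
    return [i for i in initiators if initiator_type(i) != storage_type]
```

Initiators reported as "unknown" are treated as mismatches, so anything the heuristic cannot classify is flagged for manual review rather than silently accepted.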

3.8 How is Storage Used for Server Pool Clustering?

To support server pool clustering, shared storage is required. On x86 hardware, this can be provided either in the form of an NFS share or a LUN on a SAN server. In either case, a disk image is created and formatted using OCFS2 (Oracle Cluster File System). On SPARC hardware, only NFS is supported to host the server pool file system.

For x86 environments, a clustered server pool always uses an OCFS2 file system to store the cluster configuration and to take advantage of OCFS2's heartbeat facility.

For SPARC environments, a clustered server pool is always hosted on an NFS share. Clustering for SPARC makes use of the same mechanisms that are available in OCFS2, but does not use the actual OCFS2 file system. Instead, clustering data is stored directly in the NFS share.
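The platform rules in the preceding paragraphs can be summarized as a small lookup. This Python sketch is illustrative only: the architecture and storage labels are made-up strings, not Oracle VM Manager identifiers.

```python
# Illustrative labels only; these are not Oracle VM Manager identifiers.
POOL_FS_STORAGE = {
    "x86": {"nfs", "san_lun"},  # a disk image formatted with OCFS2
    "sparc": {"nfs"},           # clustering data stored directly on the share
}


def describe_pool_fs(architecture: str, storage: str) -> str:
    """Describe how a clustered server pool file system would be hosted."""
    if storage not in POOL_FS_STORAGE.get(architecture, set()):
        raise ValueError(f"{storage} cannot host a server pool file system on {architecture}")
    if architecture == "x86":
        return f"OCFS2-formatted disk image on {storage}"
    return "clustering data stored directly in the NFS share"
```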

There are two types of heartbeats used within the cluster to ensure high availability:

-

The disk heartbeat: all Oracle VM Servers in the cluster write a time stamp to the server pool file system device.

-

The network heartbeat: all Oracle VM Servers communicate through the network to signal to each other that every cluster member is alive.

These heartbeat functions exist directly within the kernel and are fundamental to the clustering functionality that Oracle VM offers for server pools. It is very important to understand that the heartbeating functions can be disturbed by I/O-intensive operations on the same physical storage. For example, importing a template or cloning a virtual machine in a storage repository on the same NFS server where the server pool file system resides may cause a time-out in the heartbeat communication, which in turn leads to server fencing and reboot. To avoid unwanted rebooting, it is recommended that you choose a server pool file system location with sufficient and stable I/O bandwidth. Place server pool file systems on a separate NFS server or use a small LUN, if possible. For more information on setting up storage for a server pool file system, see Section 3.10, “Are there Guidelines for Configuring Storage?”.
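To see why I/O contention on the server pool file system can lead to fencing, consider a simplified model of the disk heartbeat. The Python sketch below is not the kernel implementation; the class name is hypothetical and `timeout_s` is an illustrative threshold, not an Oracle VM default.

```python
from dataclasses import dataclass


@dataclass
class DiskHeartbeat:
    """Simplified model of the disk heartbeat on the server pool file system."""
    timeout_s: float             # illustrative threshold, not an Oracle VM default
    last_write: dict[str, float]

    def record(self, server: str, timestamp: float) -> None:
        # Each server periodically writes a time stamp to the pool file system.
        self.last_write[server] = timestamp

    def stale_servers(self, now: float) -> list[str]:
        # A server whose write is delayed past the timeout (for example because
        # heavy I/O on the same NFS server stalls it) looks dead to its peers.
        return sorted(s for s, t in self.last_write.items() if now - t > self.timeout_s)
```

In this model, a server that is perfectly healthy but whose time stamp writes are queued behind a template import still shows up in `stale_servers`, which is the situation that a separate NFS server or a small dedicated LUN is meant to avoid.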

3.9 Where are Virtual Machine Resources Located?

A storage repository defines where Oracle VM resources may reside. Resources include virtual machine configuration files, templates for virtual machine creation, virtual appliances, ISO files (CD/DVD image files), shared and unshared virtual disks, and so on. For information on the configuration and management of storage repositories and their contents, see Chapter 4, Understanding Repositories.

3.10 Are there Guidelines for Configuring Storage?

It is important to plan your storage configuration in advance of deploying virtual infrastructure. Here are some guidelines to keep in mind:

-

Take care when adding, removing, and resizing LUNs, as this may require rebooting the physical server. Do not resize LUNs that are used as part of logical disks; instead, create a new LUN and add it to the disk group. Do not shrink LUNs that already host a repository: doing so causes the repository to become read-only and is likely to corrupt the OCFS2 file system.

-

If you resize a physical disk directly on the SAN server, you must refresh the physical disk within Oracle VM Manager to ensure that it is aware of the change and is capable of correctly determining the available disk space when performing other operations like creating a repository.

-

Avoid remapping LUNs: because the targets are switched outside of the server, remapping can cause data corruption. The Linux SCSI layer does not support dynamic remapping of LUNs from the storage array.

-

Test your configuration, especially failover, in a test environment before rolling it out to production. You may need to make changes to the multipath configuration files on Oracle VM Server.

-

Plan the size and type of storage that you are using by workload. For example:

-

Boot volumes can typically be on higher-capacity drives, as most operating systems have minimal I/O activity on the boot disk; however, some of that I/O is memory paging, which is sensitive to response times.

-

Applications can be on larger, slower drives (for example, RAID 5) unless they perform a lot of I/O. Write-intensive workloads should use RAID 10 on medium to fast drives. Ensure that log files are on different physical drives than the data they are protecting.

-

Infrastructure servers such as DNS tend to have low I/O needs. These servers can have larger, slower drives.

-

If using storage server features such as cloning and snapshots, use raw disks.

-

While it may be tempting to create a very large LUN when using logical disks, doing so can be detrimental to performance, because every virtual machine queues its I/O to the same disks. Size your repositories based on a thorough assessment of your requirements, and keep them close to the size those requirements call for. The size of a repository should be based on the sum of the following values:

-

The total amount of disk space required for all running virtual machines. For instance, if you have 6 virtual machines sized at 12 GB each, ensure that the repository has at least 72 GB of available disk space.

-

The total amount of disk space required to provision each virtual machine with a local virtual disk. Consider that for 6 virtual machines, each with a 36 GB local virtual disk, you must have at least 216 GB of disk space available to your repository.

-

The total amount of disk space required to store any templates that you might use to deploy new guest images.

-

The total amount of disk space required to store ISO images for operating systems that may get installed to new guest images.

-

The total amount of disk space that you may require in reserve to deploy an additional number of virtual machines with local virtual disks in the future. As a rule of thumb, assume that you may need to deploy an additional virtual machine for each running virtual machine, so if you intend to initially run 6 virtual machines, you may find it sensible to size your repository for 12 virtual machines.

-

Be sure to leave some disk space available to create smaller storage entities of at least 12 GB each to use as server pool file systems. The server pool file system is used to hold the server pool and cluster data, and is also used for cluster heartbeating. You create space for server pool file systems the same way as you create storage entities for storage repositories. For more information about the use and management of clusters and server pools, see Chapter 6, Understanding Server Pools and Oracle VM Servers and Servers and VMs Tab in the Oracle VM Manager User's Guide.

-

Place server pool file systems on a separate NFS server or use a small LUN, if possible. The cluster heartbeating function can be disturbed by I/O-intensive operations on the same physical storage. For example, importing a template or cloning a virtual machine in a storage repository on the same NFS server where the server pool file system resides may cause a time-out in the heartbeat communication, which in turn leads to server fencing and reboot. To avoid unwanted rebooting, it is recommended that you choose a server pool file system location with sufficient and stable I/O bandwidth.

-

Disable read and write caching on the underlying disk systems to guarantee I/O synchronization. Caching may result in data loss if the Oracle VM Server or a virtual machine fails abruptly. To disable write caching, change the applicable settings in the RAID controller BIOS. Alternatively, use the sg_wr_mode command, or use the SCSI disk class directly by running echo "write through" > /sys/class/scsi_disk/scsi-device-id/cache_type, replacing scsi-device-id with the identifier of the disk.
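The repository sizing guideline in the list above amounts to a simple sum. The Python sketch below reproduces the worked figures from that guideline (6 virtual machines at 12 GB each, each with a 36 GB local virtual disk). The template and ISO sizes used in the example call are hypothetical, and modeling the reserve as one extra virtual machine, with its own local virtual disk, per running virtual machine is an interpretation of the rule of thumb.

```python
def repository_size_gb(vm_count: int, vm_gb: float, local_disk_gb: float,
                       templates_gb: float, isos_gb: float,
                       extra_vms_per_vm: int = 1) -> float:
    """Sum the repository sizing components from the guideline list."""
    running_vms = vm_count * vm_gb             # 6 x 12 GB = 72 GB in the example
    local_disks = vm_count * local_disk_gb     # 6 x 36 GB = 216 GB in the example
    # Reserve for future VMs with local disks: by default one extra VM per
    # running VM, so 6 running VMs are sized as if there were 12.
    future_vms = vm_count * extra_vms_per_vm
    reserve = future_vms * (vm_gb + local_disk_gb)
    return running_vms + local_disks + templates_gb + isos_gb + reserve


# 20 GB of templates and 10 GB of ISO images are hypothetical figures.
print(repository_size_gb(6, 12, 36, templates_gb=20, isos_gb=10))  # 606
```

Here 72 GB for the running virtual machines, 216 GB for their local disks, 30 GB for templates and ISO images, and a 288 GB reserve for 6 more virtual machines add up to 606 GB.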

When creating exports on a file server, if you choose to restrict access to a particular set of hosts, all exports on the file server must have an identical list of permitted hosts. In Oracle VM Manager, all of the permitted hosts must be added to the file server's list of Admin Servers. See Discover File Server in the Oracle VM Manager User's Guide for more information on adding Admin Servers to your file server in Oracle VM Manager.
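Before discovering a file server with restricted exports, you might check the two requirements from the preceding paragraph. This Python sketch assumes you have already gathered each export's permitted hosts, for example from the file server's export configuration; the function name and input shape are hypothetical.

```python
def check_exports(exports: dict[str, set[str]], admin_servers: set[str]) -> list[str]:
    """Return problems found; an empty list means both rules are satisfied.

    exports maps each export path to the set of hosts permitted to mount it.
    """
    problems = []
    # Rule 1: every export must permit exactly the same list of hosts.
    if len({frozenset(hosts) for hosts in exports.values()}) > 1:
        problems.append("exports do not share an identical permitted-host list")
    # Rule 2: every permitted host must be an Admin Server in Oracle VM Manager.
    permitted = set().union(*exports.values())
    missing = sorted(permitted - admin_servers)
    if missing:
        problems.append(f"permitted hosts not registered as Admin Servers: {missing}")
    return problems
```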

3.11 What does a Refresh Operation do?

Oracle VM Manager stores information about various objects, such as the storage components and repositories within the environment. In general, if all operations are performed through Oracle VM Manager, this information is unlikely to become inconsistent. However, external modifications or manual updates may cause the information available to Oracle VM Manager to differ from the actual status of a component. For instance, if files are manually copied into a repository through the file system where the repository is located, Oracle VM Manager is unaware of the changes within the repository, and is also unaware of the resulting changes to the available and used space on that file system.

Although files can be copied manually into a repository, this should NOT be done on a regular basis. Perform all repository operations using Oracle VM Manager.

To handle these situations, Oracle VM Manager provides mechanisms to refresh information about various components. Manual refresh operations may be performed for storage at each level:

-

Refresh File Server

-

Refresh SAN Server

-

Refresh Physical Disk

-

Refresh File System

-

Refresh Repository

Each refresh operation triggers an action on one or more of the assigned admin or refresh servers to gather the required information and report it back to Oracle VM Manager. When these refresh operations are performed using the Oracle VM Manager Web Interface or the Oracle VM Manager Command Line Interface, child operations may be triggered to refresh other items of interest. For example, refreshing a repository also triggers a file system refresh for the file system where the repository is hosted, while refreshing a file server also triggers a refresh operation for every file system hosted on the file server.

When a file system is refreshed, it is temporarily mounted on the configured admin server, and its utilization information is collected and returned to Oracle VM Manager. This is a resource-intensive operation and can take some time to complete. Therefore, consider which refresh operations you actually need to perform. For instance, if you only need to check the utilization information for one file system, refresh only that file system rather than performing a full file server refresh, which would trigger many more child operations.
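The cascade of child operations described above can be modeled to compare the cost of different refresh choices. This Python sketch uses a hypothetical inventory (file servers mapped to their file systems, and repositories mapped to their hosting file system); the function name and labels are illustrative and do not correspond to Oracle VM Manager job names.

```python
def refresh_jobs(kind: str, target: str,
                 file_systems_by_server: dict[str, list[str]],
                 file_system_by_repository: dict[str, str]) -> list[str]:
    """List the refresh operations triggered, including child operations.

    Each "file system" entry stands for a temporary mount on an admin
    server, which is the expensive part of a refresh.
    """
    if kind == "file_system":
        return [f"file system {target}"]
    if kind == "repository":
        fs = file_system_by_repository[target]
        return [f"repository {target}"] + refresh_jobs(
            "file_system", fs, file_systems_by_server, file_system_by_repository)
    if kind == "file_server":
        jobs = [f"file server {target}"]
        for fs in file_systems_by_server[target]:
            jobs += refresh_jobs("file_system", fs, file_systems_by_server,
                                 file_system_by_repository)
        return jobs
    raise ValueError(f"unknown refresh kind: {kind}")


servers = {"filer1": ["fs1", "fs2", "fs3"]}
repos = {"repo1": "fs2"}
print(len(refresh_jobs("file_server", "filer1", servers, repos)))  # 4
print(len(refresh_jobs("file_system", "fs2", servers, repos)))     # 1
```

With only three file systems the difference is small, but a file server refresh grows with every file system the server hosts, while refreshing a single file system always costs one mount.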

Some information in Oracle VM Manager can be updated automatically via a periodic function that gathers file system statistics as part of the regular health monitoring functionality built into Oracle VM Manager. This service takes advantage of the fact that file systems that are already in use by Oracle VM, for hosting repositories or server pool clusters, are already mounted on Oracle VM Servers within the environment. Oracle VM Servers that are already capable of providing other health statistics can also report periodically on the utilization information for any of the file systems that they have mounted. When this feature is enabled, the information reflected by Oracle VM Manager for any of the file systems that are currently in use is more likely to be accurate and consistent and less likely to require manual refresh operations. The settings to control the automated gathering of file system statistics are described in more detail in Preferences in the Oracle VM Manager User's Guide.