11 Working with A/B Testing

Note:

A/B test functionality for this Oracle WebCenter Sites product is provided through an integration with Google Analytics. To use the A/B test functionality for this Oracle product, you must first register with Google for a Google Analytics account that will allow you to measure the performance of an “A/B” test. In addition, you acknowledge that as part of an “A/B” test, anonymous end user information (that is, information of end users who access your website for the test) will be delivered to and may be used by Google under Google’s terms that govern that information.

For example, you might use A/B Testing to explore:

- Which banner image resulted in more leads generated from the home page?

- Which featured article resulted in visitors landing on a promoted section of the website?

- Do visitors spend more time on the home page with a red banner displayed versus a blue banner?

- Does adding a testimonial increase the click-through rate?

- Which page layout resulted in more visitors downloading the featured white paper?


11.1 A/B Test Overview

Creating and running A/B tests involves the following main steps, which are described in detail throughout this chapter:

- Decide what the test will compare.

An A/B test compares two or more variants, where variant A serves as the control (base), and variant B and any additional variants (C, D, and so on) are each compared with A.

You can run multiple A/B tests at the same time.

- Decide what the test will measure.

You use a goal to specify the visitor action the test will capture and compare for the variants, and then display as A/B test results. A goal refers to a specific visitor action that you identify for tracking, and you can specify the type of goal that fits your use case. The default goals are Destination, Duration, Pages per session, and Event. For example, if you choose Destination, you can specify a page like surfing.html. The conversion occurs whenever anyone visits this page.

- In A/B Test mode in the Oracle WebCenter Sites: Contributor interface, create an A/B test and add variants to it.

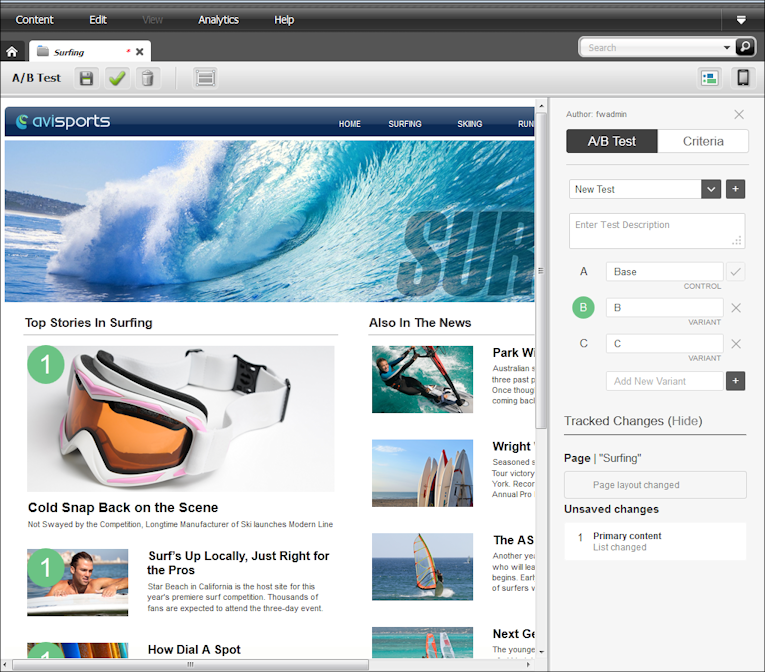

On the management system, create the test and use the WYSIWYG A/B test mode to add one or more variants to a selected web or mobile page. You can identify a variant by its color-coding, and easily save or discard its tracked changes. In the figure, the first variant (B) is displayed in green both in the A/B Test panel (B) and in each change (1) on the page. See Creating A/B Tests.

-

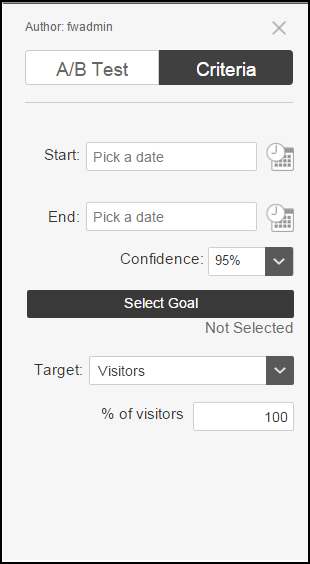

Select criteria for the A/B test.

-

Specify when and how the test will start and end. In Google Analytics it appears as a length of time. For example, if you select 14 as the start date and 28 as the end date, in Google Analytics, it appears as 2 weeks. See Specifying the Start and End of an A/B Test.

-

Specify a statistical confidence level to achieve. For example, enter 95 for a 95% confidence level in the results. See Selecting the Confidence Level of an A/B Test.

-

Click Select Goal and choose a goal in the dialog box that appears. See Specifying the Goal to Track in an A/B Test.

-

Select the visitors or segments to target for the test. You can display the test to a specified percent of the entire visitor pool or to a specified percent of one or more selected visitor segments. See Specifying Visitors or Segments to Target in A/B Tests.

Figure 11-2 A/B Test Criteria Pane

-

-

Approve the test (and its dependencies) to see the actual Experiment in Google.

- View test results in the A/B Test Report.

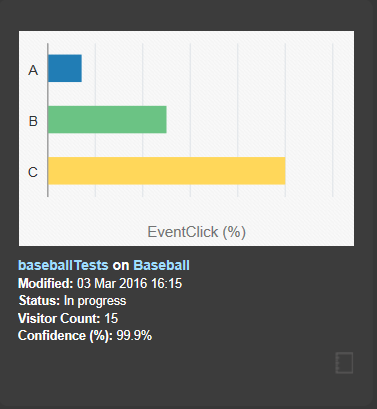

As visitors view variants and conversions take place, you can view the results for individual A/B tests on the report, which maintains the same variant color-coding you saw while creating test variants. The report enables you to see if there are measurable differences in visitor actions between variants. A cookie is left so that visitors see the same variant upon returning to the site. See Viewing A/B Test Results.

- Optionally update the site to use the winning variant.

After displaying the winning variant for a while, you might copy or create an A/B Test to include new variants, enabling you to refine iterations over time.

11.2 Before You Begin A/B Testing

Creating A/B tests requires the following prerequisites to be in place:

- The A/B test asset type WCS_ABTest must be enabled. By default it is not enabled, except in the “avisports” and “FirstSite II” sample sites. A WebCenter Sites administrator or developer enables asset types.

For more information, see Administering A/B Testing in Administering Oracle WebCenter Sites.

- The A/B code element must be included in templates on which A/B tests will be run. This code element is not included in templates by default.

A WebCenter Sites developer adds the A/B code element to templates.

For more information, see Template Assets in Developing with Oracle WebCenter Sites.

- The property abtest.delivery.enabled must be set to true on the delivery instance, that is, any instance that will deliver the A/B test variants to site visitors. The property should not be set on instances that are used only to create the A/B tests, because this will give false results. The abtest.delivery.enabled property is in the ABTest category of the wcs_properties.json file.

For more information, see A/B Test Properties in Property Files Reference for Oracle WebCenter Sites.

- Any user that will be able to “promote the winner” of an A/B test must be given the MarketingAuthor role. Any user that will be able to view A/B test reports and stop tests must be given the MarketingEditor role.

For more information on configuring users, see Configuring Users, Profiles, and Attributes in Administering Oracle WebCenter Sites.

11.3 Creating A/B Tests

Creating an A/B Test involves setting up variants of the web page to be tested, then setting up the criteria that will be applied during the test.


11.3.1 Switching In and Out of A/B Test Mode

Working with A/B tests in Contributor is similar to editing in Web view. Two key differences are that in A/B test mode you make page changes to a test layer of variants rather than to site pages themselves, and an A/B Test panel displays at right.


11.3.1.1 Switching Into A/B Test Mode

If there are no A/B tests for a page, the panel at right contains a single control showing the text “Create a test”.

If one or more A/B tests already exist for a page, the panel at right shows the A/B Test controls and an option to change to the Criteria controls:

- Use the A/B Test controls to create and edit tests for a page, and to track changes that you make to a page’s variants.

- Use the Criteria controls to set the criteria for the selected test, such as its start and end determinants, which conversion to measure, and target and confidence information.

The controls in the toolbar also change when in A/B Test mode:

- The toolbar label changes to A/B Test.

- If the test has not yet been published, a Save icon and a Change Page Layout icon are available.

- An Approve icon and a Delete icon are always available.

- There are no Edit, Preview, Checkin/Checkout, or Reports icons.

11.3.2 Creating, Copying, and Selecting A/B Tests

You can create A/B tests, make copies of them, and select existing tests.


These tasks assume that you are working in A/B Test Mode.

11.3.2.1 Creating an A/B Test for a Page

- In the list box containing either the text "Create a test" or a list of existing tests, click the + (plus) button beside the list box.

- In the Create New Test window, enter a name for the test in the top or only field.

- Click Submit.

- In the A/B Test toolbar, click the Save icon.

11.3.2.3 Selecting A/B Tests

- Open the drop-down list of existing tests and select the one you want.

After creating, copying, or selecting a test, you can work with its variants (described in Adding/Selecting and Editing A/B Test Variants) and select its criteria. The criteria you select apply to all variants of the test.

11.3.3 Setting Up A/B Test Variants


11.3.3.1 Adding/Selecting and Editing A/B Test Variants

In this procedure you define the A/B test by selecting a variant in the panel and making edits to its webpage, then use the color-coding and numbering to identify the selected variant and its changes.

To add/select and edit test variants:

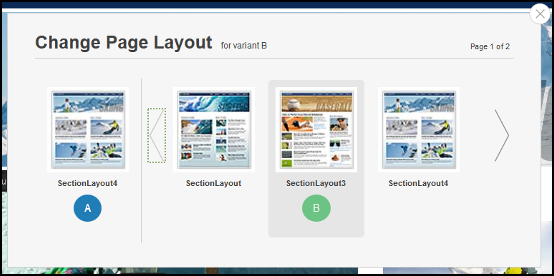

11.3.3.2 Changing the Page Layout of an A/B Test Variant

You may want a variant to use a different page layout from the control or other variants.

11.3.4 Setting Up A/B Test Criteria


11.3.4.1 Specifying the Start and End of an A/B Test

When creating a test, you can specify a start and end for it. If you do not specify a start, the test will start as soon as it is published. Once a test starts running, you can view its current results, stop it early, and promote its winner, as described in Viewing A/B Test Results and Making Site Changes Based on A/B Test Results. You cannot edit a test after it is published.

11.3.4.2 Selecting the Confidence Level of an A/B Test

When creating a test, you can select a confidence level for its results. This number determines confidence in the significance of the test results, specifically that conversion differences are caused by variant differences themselves rather than random visitor variations. The larger the number of A/B test visitors, the easier it is to determine statistical significance in variant differences.

If you set a confidence level, the A/B test will continue until the set level is reached, even beyond the set end point of the test.

For information about confidence level calculation, see How the A/B Test Confidence Level is Calculated.

To select a test confidence level:

- In A/B test view, click Criteria in the A/B Test panel (see Switching Into A/B Test Mode).

- In the Confidence field, select or enter a confidence level as a percentage. You can select values ranging from 85% to 99.9%.

- Click Save.

11.3.4.3 How the A/B Test Confidence Level is Calculated

The conversion rate refers to the number of visitors who performed the specified visitor action divided by the number of visitors to the page variant. This rate is calculated per variant.

The confidence interval is calculated using the Wald method for a binomial distribution. In the Wald Method (Figure 11-6), conversion rate is represented by p.

Figure 11-6 Wald Method of Determining Confidence Intervals for Binomial Distributions
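The figure itself is not reproduced in this text. As a reference point, the standard Wald interval for a binomial proportion, which is assumed to be what the figure shows, can be written as follows. Here p is the conversion rate of one variant, n is the number of visitors to that variant, and z is the critical value of the standard normal distribution for the chosen confidence level (for example, about 1.96 for 95%). The symbols n and z are introduced here for illustration.

```latex
\[
  p \;\pm\; z \sqrt{\frac{p\,(1 - p)}{n}},
  \qquad
  p = \frac{\text{conversions}}{\text{visitors}}
\]
```

The square-root term is the standard error of the conversion rate, so the interval narrows as the number of visitors grows.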


The results for each variant are then used to calculate the Z-Score (Figure 11-7). The Z-Score measures how accurate the results are. A common Z-Score (confidence range) used in A/B Testing is a 3% range from the final score. However, this is only a common choice, and any range can be used. The range is then determined for the conversion rate (p) by multiplying the standard error by that percentile range of the standard normal distribution.

At this point, the results must be shown to be significant; that is, the difference in conversion rates must not be attributable to random variation. The Z-Score is calculated in this way:
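The formula from the original figure is not reproduced in this text. As an assumption, a widely used form for comparing two conversion rates is the two-proportion Z-Score with unpooled standard errors. The subscripts A (control) and B (variant) and the visitor counts n are notation introduced here; the product may use a different but related form.

```latex
\[
  Z = \frac{p_B - p_A}
           {\sqrt{\dfrac{p_A\,(1 - p_A)}{n_A} + \dfrac{p_B\,(1 - p_B)}{n_B}}}
\]
```

Each term under the square root is the squared standard error of one variant's conversion rate, the same quantity that appears in the Wald interval.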

The Z-Score is the number of positive standard deviation values between the control and the test mean values. Using the previous standard confidence interval, a statistical significance of 95% is determined when the view event count is greater than 1000 and the Z-Score probability is either greater than 95% or less than 5%.
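The calculation described in this section can be sketched in Python. This is a minimal illustration under stated assumptions, not the product's implementation: the function names are hypothetical, the Z-Score uses the common two-proportion form with unpooled standard errors, and the "Z-Score probability" is taken to be the standard normal cumulative probability of the Z-Score.

```python
import math


def conversion_rate(conversions, visitors):
    """Conversions divided by visitors, calculated per variant."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def wald_interval(conversions, visitors, z=1.96):
    """Wald confidence interval for a binomial proportion.

    z=1.96 corresponds to roughly 95% confidence (an assumed default).
    """
    p = conversion_rate(conversions, visitors)
    std_err = math.sqrt(p * (1 - p) / visitors)
    return (p - z * std_err, p + z * std_err)


def z_score(control_conv, control_n, variant_conv, variant_n):
    """Two-proportion Z-Score with unpooled standard errors (assumed form)."""
    p_a = conversion_rate(control_conv, control_n)
    p_b = conversion_rate(variant_conv, variant_n)
    std_err = math.sqrt(p_a * (1 - p_a) / control_n + p_b * (1 - p_b) / variant_n)
    if std_err == 0:
        raise ValueError("standard error is zero; Z-Score is undefined")
    return (p_b - p_a) / std_err


def z_probability(z):
    """Standard normal cumulative probability of a Z-Score."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def is_significant(z, views, min_views=1000):
    """Apply the rule from the text: more than 1000 view events and a
    Z-Score probability above 95% or below 5%."""
    prob = z_probability(z)
    return views > min_views and (prob > 0.95 or prob < 0.05)
```

For example, with a control that converts 100 of 2000 visitors and a variant that converts 140 of 2000, `z_score(100, 2000, 140, 2000)` can be passed to `is_significant` together with the view event count to check the 95% rule.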

11.3.4.4 Specifying the Goal to Track in an A/B Test

Marketers are required to create and use custom goals in Google Analytics for A/B testing. These goals must be created before setting up A/B tests. You can create goals of your own, or you can use goals created by others.

To specify the goal to track:

11.3.4.5 Specifying Visitors or Segments to Target in A/B Tests

You can randomly display an A/B test to a specified percent of either:

- The entire visitor pool

- One or more selected segments

For example, you might enter 50 to target the test to 50% of all visitors or to 50% of visitors in a segment of visitors 65 years or older. (For more information about segments, see Creating Segments.)

Within the specified percent, test variants display in equal percentages to the target visitors. For example, if a test targeting 30% of visitors includes two variants (B and C) in addition to the control (A), 10% of visitors would be shown control A, 10% variant B, and 10% variant C.
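The equal split described above can be expressed as a short calculation. The following Python sketch is illustrative only; the function name `allocation` and its parameters are hypothetical and are not part of WebCenter Sites.

```python
def allocation(target_percent, labels):
    """Split the targeted share of visitors equally across the control
    and all variants.

    target_percent: percent of visitors (or of a segment) targeted by the test.
    labels: control and variant labels, for example ["A", "B", "C"].
    Returns a dict mapping each label to its percent of visitors.
    """
    if not 0 < target_percent <= 100:
        raise ValueError("target_percent must be in the range (0, 100]")
    if len(labels) < 2:
        raise ValueError("a test needs a control and at least one variant")
    share = target_percent / len(labels)
    return {label: share for label in labels}


# The example from the text: 30% of visitors, control A plus variants B and C.
shares = allocation(30, ["A", "B", "C"])
```

In this example each of A, B, and C receives 10% of visitors, and the remaining 70% are not part of the test.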

11.4 Approving and Publishing A/B Tests

After creating and editing your test's variants and specifying its criteria, the next step is to approve the test for publishing to the delivery system. The test is created in Google Analytics at this time. Until this point, nothing exists in Google Analytics. After approving the test, you can log in to Google Analytics and see the experiment and all its settings.

A few items to consider about approval:

- Approving the test approves it and its variants. As with all webpage approvals, you must approve an asset and all of its dependencies.

- The test must include a goal before you can approve it.

- A/B tests are published to a destination that you select. This destination must itself already have been published, by an administrator. Consult your administrator if you find that no published destinations are available to you.

- Upon publishing, the test will begin at the date and time you specified in the test's criteria. If that time has already passed, the test will start immediately.
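The start-time rule in the last item, combined with the earlier statement that a test with no specified start begins as soon as it is published, can be sketched as follows. The function name `effective_start` is hypothetical and is used only to illustrate the rule.

```python
from datetime import datetime


def effective_start(published_at, scheduled_start=None):
    """Return the moment the test starts running.

    - No scheduled start: the test starts when it is published.
    - Scheduled start already passed at publish time: starts immediately.
    - Otherwise: the test waits for the scheduled start.
    """
    if scheduled_start is None or scheduled_start <= published_at:
        return published_at
    return scheduled_start


published = datetime(2024, 3, 10, 9, 0)
future_start = datetime(2024, 3, 14, 0, 0)
past_start = datetime(2024, 3, 1, 0, 0)
```

With these sample values, a test scheduled for `future_start` waits until that date, while a test scheduled for `past_start`, or with no scheduled start, begins at `published`.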

To approve a test's assets for publishing and to start the test:

11.5 Using A/B Test Results

The results of your A/B tests are shown in A/B Test reports. You can use these to decide whether one of the variant pages you tested should be promoted to the active web site.


11.5.1 Viewing A/B Test Results

Once the test begins, whenever visitors view the control (A) or variant versions of the webpage, their site visit information is captured and these statistics become available in the A/B Test report. For completed tests, this report shows data that will let you compare the relative performance of the base and variant webpage designs. You can then use this information to decide whether to promote one of the variants to become the webpage that visitors see. For a full description of this report, see The A/B Test Report.

To view the A/B Test report in the Contributor interface:

11.5.2 The A/B Test Report

A/B Test reports show data that let you compare the relative performance of base and variant webpage designs. You can use this information to decide whether to promote one of the variants to become the webpage that visitors see.

Header Section

Figure 11-8 A/B Test Report: Header Section

Table 11-1 Features of the A/B Test Report Header Section

| Feature | Description |
|---|---|
| Product and report name area | Confirms that you are viewing an Oracle WebCenter Sites A/B Test Report. |
| Status area | Shows whether the report is in progress, complete, or not published. |
| Button | This is a red Stop button, a green Promote button, or a green Edit button. |

Summary Section

Figure 11-9 A/B Test Report: Summary Section

Table 11-2 Features of the A/B Test Report Summary Section

| Feature | Description |
|---|---|
| Information area | Shows the name of the A/B test, the name of the web page on which it is running, the owner of the test, and the test’s description. |
| Conversion | The type of conversion that the test is monitoring. |
| Segments | If the test includes a segmented user base, this describes the segmentation. “All” means the user base is not segmented. |
| Stop | When or how the test is intended to stop. |
| Chart | The conversion rate for each variant, displayed together so that they can be compared. |

Metrics Bar

Figure 11-10 A/B Test Report: Metrics Bar

Table 11-3 Features of the A/B Test Report Metrics Bar

| Feature | Description |
|---|---|
| Target | The percentage of all visitors, the end date, and the confidence percentage, as set up for the test. |
| Summary to date | The number of visitors served, the number of conversions, and the confidence percentage reached so far, for all variants combined. If the test allows one variant to be identified as better than the others, this is shown as the winner, when the test is complete. |

Conversions Section

Figure 11-11 A/B Test Report: Conversions Section

Table 11-4 Features of the A/B Test Report Conversions Section

| Feature | Description |
|---|---|
| Select criteria | Normally set to Device. |
| Display | Conversion information for each variant. |

Confidence Section

Figure 11-12 A/B Test Report: Confidence Section

Table 11-5 Features of the A/B Test Report Confidence Section

| Feature | Description |
|---|---|
| Chart | For each variant, the number of visitors, the number of conversions, the conversion percentage, the Z-score (see How the A/B Test Confidence Level is Calculated), and the confidence figure. |

11.6 Deleting an A/B Test

When you delete an A/B test, you will not be able to access any of the reports that were generated for it. However, the data is not destroyed and you can access EID directly and create your own reports to retrieve it.

If you attempt to delete an A/B test that is running, you will see a warning before you can complete the deletion.

Note:

The experiment that is created in Google is not deleted even if you have deleted the A/B test by performing the subsequent steps. To remove an experiment from Google, log in to the Google Analytics interface and delete it.

To delete an A/B Test:

You can return to the web page you were testing by clicking the Go Back button in the toolbar.