11 Maintaining Oracle Big Data Appliance

This chapter describes how to monitor and maintain Oracle Big Data Appliance. Some procedures use the dcli utility to execute commands in parallel on all servers.


11.1 Monitoring the Ambient Temperature of Servers

Maintaining environmental temperature conditions within design specification for a server helps to achieve maximum efficiency and targeted component service lifetimes. Temperatures outside the ambient temperature range of 21 to 23 degrees Celsius (70 to 74 degrees Fahrenheit) affect all components within Oracle Big Data Appliance, possibly causing performance problems and shortened service lifetimes.

To monitor the ambient temperature:

- Connect to an Oracle Big Data Appliance server as root.

- Set up passwordless SSH for root by entering the setup-root-ssh command, as described in "Setting Up Passwordless SSH".

- Check the current temperature:

  dcli 'ipmitool sunoem cli "show /SYS/T_AMB" | grep value'

- If any temperature reading is outside the operating range, then investigate and correct the problem. See Table 2-13.

The following is an example of the command output:

bda1node01-adm.example.com: value = 22.000 degree C
bda1node02-adm.example.com: value = 22.000 degree C
bda1node03-adm.example.com: value = 22.000 degree C
bda1node04-adm.example.com: value = 23.000 degree C
...
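Reading many lines of output by eye is error-prone on a full rack. The following is a minimal sketch, not an Oracle-supplied tool. It defines a hypothetical POSIX shell function, check_amb_temp, that reads dcli output in the format shown above and reports only the servers whose ambient temperature falls outside the 21 to 23 degree Celsius range. It assumes each line has the form host: value = N degree C. Any other non-blank line is reported as unparsed.

```shell
# check_amb_temp: hypothetical helper, not part of the appliance software.
# Reads lines such as "bda1node01-adm.example.com: value = 22.000 degree C"
# on stdin and prints each host whose reading is outside LOW..HIGH degrees
# Celsius (defaults: 21 and 23, inclusive). Blank lines are skipped; any
# other line, such as a dcli error message, is printed as unparsed.
# Exit status: 0 if every reading is in range, 1 otherwise.
check_amb_temp() {
    awk -v lo="${1:-21}" -v hi="${2:-23}" '
        BEGIN { lo += 0; hi += 0 }          # force numeric comparison
        NF == 0 { next }
        $2 == "value" && $3 == "=" {
            host = $1
            sub(/:$/, "", host)             # drop the colon added by dcli
            t = $4 + 0
            if (t < lo || t > hi) {
                printf "%s: %.1f C outside %s-%s C\n", host, t, lo, hi
                bad = 1
            }
            next
        }
        { print "unparsed: " $0; bad = 1 }
        END { exit bad }
    '
}
```

For example, after defining the function in the root shell, pipe the temperature check into it: dcli 'ipmitool sunoem cli "show /SYS/T_AMB" | grep value' | check_amb_temp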

11.2 Powering On and Off Oracle Big Data Appliance


11.2.1 Nonemergency Power Procedures

This section contains the procedures for powering on and off the components of Oracle Big Data Appliance in an orderly fashion.

See Also:

Oracle Big Data Appliance Software User's Guide for powering on and off gracefully when the software is installed and running.

11.2.1.1 Powering On Oracle Big Data Appliance

To turn on Oracle Big Data Appliance:

- Turn on all 12 breakers on both PDUs.

Oracle ILOM and the Linux operating system start automatically.

11.2.1.2 Powering Off Oracle Big Data Appliance

11.2.1.2.2 Powering Off Multiple Servers at the Same Time

Use the dcli utility to run the shutdown command on multiple servers at the same time. Do not run the dcli utility from a server that will be shut down. Set up passwordless SSH for root, as described in "Setting Up Passwordless SSH".

The following is the syntax of the command:

# dcli -l root -g group_name shutdown -hP now

In this command, group_name is a file that contains a list of servers.

The following example shuts down all Oracle Big Data Appliance servers listed in the server_group file:

# dcli -l root -g server_group shutdown -hP now
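Because dcli must not run on a server that it shuts down, it can help to build the group file so that it excludes the server you are working on. The sketch below is illustrative only. The function name make_shutdown_group and the file names are hypothetical. It assumes the source file lists one host name per line, spelled exactly the way the local server is named when it is compared.

```shell
# make_shutdown_group: hypothetical helper, not part of the appliance software.
# Usage: make_shutdown_group SOURCE_FILE GROUP_FILE [SELF]
# Copies the host names in SOURCE_FILE (first word of each line) to
# GROUP_FILE, skipping blank lines, lines starting with "#", and the host
# SELF. SELF defaults to the output of `hostname` and must be spelled exactly
# as it appears in SOURCE_FILE.
make_shutdown_group() {
    src=$1
    dst=$2
    self=${3:-$(hostname)}
    awk -v self="$self" '
        NF == 0      { next }   # blank line
        $1 ~ /^#/    { next }   # comment line
        $1 == self   { next }   # never shut down the server running dcli
        { print $1 }
    ' "$src" > "$dst"
}
```

For example, with a hypothetical file all_servers that lists every server in the rack, run make_shutdown_group all_servers server_group bda1node01 on bda1node01. Then run the dcli command above from bda1node01, and shut down bda1node01 itself last.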

11.2.2 Emergency Power-Off Considerations

In an emergency, halt power to Oracle Big Data Appliance immediately. The following emergencies may require powering off Oracle Big Data Appliance:

- Natural disasters such as earthquake, flood, hurricane, tornado, or cyclone

- Abnormal noise, smell, or smoke coming from the system

- Threat to human safety

11.2.2.1 Emergency Power-Off Procedure

To perform an emergency power-off procedure for Oracle Big Data Appliance, turn off power at the circuit breaker or pull the emergency power-off switch in the computer room. After the emergency, contact Oracle Support Services to restore power to the system.

11.2.2.2 Emergency Power-Off Switch

Emergency power-off (EPO) switches are required when computer equipment contains batteries capable of supplying more than 750 volt-amperes for more than 5 minutes. Systems that have these batteries include internal EPO hardware for connection to a site EPO switch or relay. Use of the EPO switch removes power from Oracle Big Data Appliance.

11.2.3 Cautions and Warnings

The following cautions and warnings apply to Oracle Big Data Appliance:

WARNING:

Do not touch the parts of this product that use high-voltage power. Touching them might result in serious injury.

Caution:

- Do not turn off Oracle Big Data Appliance unless there is an emergency. In that case, follow the "Emergency Power-Off Procedure".

- Keep the front and rear cabinet doors closed. Failure to do so might cause system failure or result in damage to hardware components.

- Keep the top, front, and back of the cabinets clear to allow proper airflow and prevent overheating of components.

- Use only the supplied hardware.

11.3 Adding Memory to the Servers

You can add memory to all servers in the cluster or to specific servers.


11.3.1 Adding Memory to an Oracle Server X6-2L

Oracle Big Data Appliance X6-2L ships from the factory with 256 GB of memory in each server. Eight of the 24 slots are populated with 32 GB DIMMs. Memory is expandable up to 768 GB (in the case of 32 GB DIMMs in all 24 slots).

See the Oracle® Server X6-2L Service Manual for instructions on DIMM population scenarios and rules, installation, and other information.

11.3.2 Adding Memory to an Oracle Server X5-2L, Sun Server X4-2L, or Sun Server X3-2L

Oracle Big Data Appliance X5-2 ships from the factory with 128 GB of memory in each server. Eight of the 24 slots are populated with 16 GB DIMMs. Memory is expandable up to 768 GB (in the case of 32 GB DIMMs in all 24 slots).

Oracle Big Data Appliance X4-2 and X3-2 servers ship with 64 GB of memory, with eight slots populated with 8 GB DIMMs. These appliances support 8 GB, 16 GB, and 32 GB DIMMs. You can expand the memory in a server to a maximum of 512 GB (16 x 32 GB). You can use the 8 x 32 GB Memory Kit.

You can mix DIMM sizes, but they must be installed in order from largest to smallest. You can achieve the best performance by preserving symmetry. For example, add four of the same size DIMMs, one for each memory channel, to each processor, and ensure that both processors have the same size DIMMs installed in the same order.

To add memory to an Oracle Server X5-2L, Sun Server X4-2L, or Sun Server X3-2L:

- If you are mixing DIMM sizes, then review the DIMM population rules in the Oracle Server X5-2L Service Manual at http://docs.oracle.com/cd/E41033_01/html/E48325/cnpsm.gnvje.html#scrolltoc

- Power down the server.

- Install the new DIMMs. If you are installing 16 or 32 GB DIMMs, then replace the existing 8 GB DIMMs first, and then replace the plastic fillers. You must install the largest DIMMs first, then the next largest, and so forth. You can reinstall the original DIMMs last.

  See the Oracle Server X5-2L Service Manual at http://docs.oracle.com/cd/E41033_01/html/E48325/cnpsm.ceiebfdg.html#scrolltoc

- Power on the server.
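After the server is powered on, you can confirm that the firmware reports the new DIMMs. The sketch below assumes that the standard Linux dmidecode utility is available. It also assumes that dmidecode reports each populated slot as Size: N MB or Size: N GB, because the unit depends on the dmidecode version. The function name dimm_summary is hypothetical.

```shell
# dimm_summary: hypothetical helper, not part of the appliance software.
# Reads `dmidecode -t memory` output on stdin and prints the number of
# populated DIMM slots and the total installed memory in GB.
# Only lines whose first word is exactly "Size:" are counted, so fields
# such as "Installed Size:" or "Volatile Size:" are ignored, as are empty
# slots ("Size: No Module Installed").
dimm_summary() {
    awk '
        $1 == "Size:" && $2 ~ /^[0-9]+$/ {
            if ($3 == "MB")      gb = $2 / 1024
            else if ($3 == "GB") gb = $2
            else                 next
            total += gb
            count++
        }
        END { printf "%d DIMMs, %d GB\n", count, total }
    '
}
```

For example, run dmidecode -t memory | dimm_summary as root. Based on the figures given earlier in this section, a factory-configured Oracle Server X5-2L would be expected to report 8 DIMMs, 128 GB (8 x 16 GB).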

11.3.3 Adding Memory to Sun Fire X4270 M2 Servers

Oracle Big Data Appliance ships from the factory with 48 GB of memory in each server. Six of the 18 DIMM slots are populated with 8 GB DIMMs. You can populate the empty slots with 8 GB DIMMs to bring the total memory to either 96 GB (12 x 8 GB) or 144 GB (18 x 8 GB). An upgrade to 144 GB may slightly reduce performance because of lower memory bandwidth; memory frequency drops from 1333 MHz to 800 MHz.

To add memory to a Sun Fire X4270 M2 server:

- Power down the server.

- Replace the plastic fillers with the DIMMs. See the Sun Fire X4270 M2 Server Service Manual at http://docs.oracle.com/cd/E19245-01/E21671/motherboard.html#50503715_71311

- Power on the server.

11.4 Maintaining the Physical Disks of Servers

Repair of the physical disks does not require shutting down Oracle Big Data Appliance. However, individual servers may need to be taken out of the cluster temporarily, which requires downtime for those servers.

See Also:

"Parts for Oracle Big Data Appliance Servers" for the repair procedures

11.4.1 Verifying the Server Configuration

The 12 disk drives in each Oracle Big Data Appliance server are controlled by an LSI MegaRAID SAS 9261-8i disk controller. Oracle recommends verifying the status of the RAID devices to avoid possible performance degradation or an outage. Validating the RAID devices has minimal effect on the server. The corrective actions may affect operation of the server and can range from simple reconfiguration to an outage, depending on the specific issue uncovered.

11.4.1.1 Verifying Disk Controller Configuration

Enter this command to verify the disk controller configuration:

# MegaCli64 -AdpAllInfo -a0 | grep "Device Present" -A 8

The following is an example of the output from the command. There should be 12 virtual drives, no degraded or offline drives, and 14 physical devices. The 14 devices are the controllers and the 12 disk drives.

Device Present
================
Virtual Drives : 12
Degraded : 0
Offline : 0
Physical Devices : 14
Disks : 12
Critical Disks : 0
Failed Disks : 0

If the output is different, then investigate and correct the problem.
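The expected counters listed above can be checked mechanically. The following is a minimal sketch, assuming the MegaCli64 output uses the name : value layout shown in the example. The function name check_device_present is hypothetical, and the expected values are the ones stated in this section.

```shell
# check_device_present: hypothetical helper, not part of the appliance software.
# Reads the output of
#   MegaCli64 -AdpAllInfo -a0 | grep "Device Present" -A 8
# on stdin and compares each counter with the value expected on an
# Oracle Big Data Appliance server. Prints each mismatch or missing
# counter and exits 1 if any is found.
check_device_present() {
    awk -F':' '
        BEGIN {
            want["Virtual Drives"]   = 12
            want["Degraded"]         = 0
            want["Offline"]          = 0
            want["Physical Devices"] = 14
            want["Disks"]            = 12
            want["Critical Disks"]   = 0
            want["Failed Disks"]     = 0
        }
        NF == 2 {
            key = $1
            gsub(/^[ \t]+|[ \t]+$/, "", key)   # trim the counter name
            val = $2 + 0
            if (key in want) {
                seen[key] = 1
                if (val != want[key]) {
                    printf "%s: %d (expected %d)\n", key, val, want[key]
                    bad = 1
                }
            }
        }
        END {
            for (k in want)
                if (!(k in seen)) { printf "%s: missing\n", k; bad = 1 }
            exit bad
        }
    '
}
```

For example: MegaCli64 -AdpAllInfo -a0 | grep "Device Present" -A 8 | check_device_present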

11.4.1.2 Verifying Virtual Drive Configuration

Enter this command to verify the virtual drive configuration:

# MegaCli64 -LDInfo -lAll -a0

The following is an example of the output for Virtual Drive 0. Ensure that State is Optimal.

Adapter 0 -- Virtual Drive Information:
Virtual Drive: 0 (Target Id: 0)
Name :
RAID Level : Primary-0, Secondary-0, RAID Level Qualifier-0
Size : 1.817 TB
Parity Size : 0
State : Optimal
Strip Size : 64 KB
Number Of Drives : 1
Span Depth : 1
Default Cache Policy: WriteBack, ReadAheadNone, Cached, No Write Cache if Bad BBU
Current Cache Policy: WriteBack, ReadAheadNone, Cached, No Write Cache if Bad BBU
Access Policy : Read/Write
Disk Cache Policy : Disk's Default
Encryption Type : None
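With 12 virtual drives, the full output is long. The sketch below scans it for any State line that is not Optimal. It assumes each virtual drive block starts with a Virtual Drive: N line and contains one line beginning with State, as in the example above. The function name check_vd_state is hypothetical.

```shell
# check_vd_state: hypothetical helper, not part of the appliance software.
# Usage: MegaCli64 -LDInfo -lAll -a0 | check_vd_state [EXPECTED_COUNT]
# Prints every virtual drive whose State is not "Optimal", and reports a
# mismatch if the number of State lines differs from EXPECTED_COUNT
# (default 12). Exits 1 if any problem is found.
check_vd_state() {
    awk -v want="${1:-12}" '
        BEGIN { want += 0 }
        /^Virtual Drive:/ { vd = $3 }          # remember the drive number
        /^State[ \t]*:/ {
            n++
            state = $0
            sub(/^State[ \t]*:[ \t]*/, "", state)
            sub(/[ \t]+$/, "", state)
            if (state != "Optimal") {
                printf "Virtual Drive %s: %s\n", vd, state
                bad = 1
            }
        }
        END {
            if (n != want) {
                printf "found %d virtual drives (expected %d)\n", n, want
                bad = 1
            }
            exit bad
        }
    '
}
```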

11.4.1.3 Verifying Physical Drive Configuration

Use the following command to verify the physical drive configuration:

# MegaCli64 -PDList -a0 | grep Firmware

The following is an example of the output from the command. The 12 drives should be Online, Spun Up. If the output is different, then investigate and correct the problem.

Firmware state: Online, Spun Up
Device Firmware Level: 061A
Firmware state: Online, Spun Up
Device Firmware Level: 061A
Firmware state: Online, Spun Up
Device Firmware Level: 061A
...
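The same approach works for the physical drives. The sketch below counts the Firmware state lines and reports any that do not read Online, Spun Up. It assumes one Firmware state line per drive, as in the example above. The function name check_pd_state is hypothetical, and it numbers drives by their position in the listing, not by slot.

```shell
# check_pd_state: hypothetical helper, not part of the appliance software.
# Usage: MegaCli64 -PDList -a0 | grep Firmware | check_pd_state [EXPECTED_COUNT]
# Reports each drive whose firmware state is not "Online, Spun Up" (numbered
# by its position in the listing, not by slot), and checks that
# EXPECTED_COUNT drives (default 12) were listed. "Device Firmware Level"
# lines are ignored. Exits 1 if any problem is found.
check_pd_state() {
    awk -v want="${1:-12}" '
        BEGIN { want += 0 }
        /^Firmware state:/ {
            n++
            state = $0
            sub(/^Firmware state:[ \t]*/, "", state)
            sub(/[ \t]+$/, "", state)
            if (state != "Online, Spun Up") {
                printf "drive %d: %s\n", n, state
                bad = 1
            }
        }
        END {
            if (n != want) {
                printf "found %d drives (expected %d)\n", n, want
                bad = 1
            }
            exit bad
        }
    '
}
```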

11.5 Maintaining the InfiniBand Network

The InfiniBand network connects the servers through the bondib0 interface to the InfiniBand switches in the rack. This section describes how to perform maintenance on the InfiniBand switches.


11.5.1 Backing Up and Restoring Oracle ILOM Settings

Oracle ILOM supports remote administration of the Oracle Big Data Appliance servers. This section explains how to back up and restore the Oracle ILOM configuration settings, which are set by the Mammoth utility.

See Also:

- For Sun Server X4-2L and Sun Server X3-2L servers, the Oracle Integrated Lights Out Manager 3.1 documentation library at

- For Sun Fire X4270 M2 servers, the Oracle Integrated Lights Out Manager 3.0 documentation library at

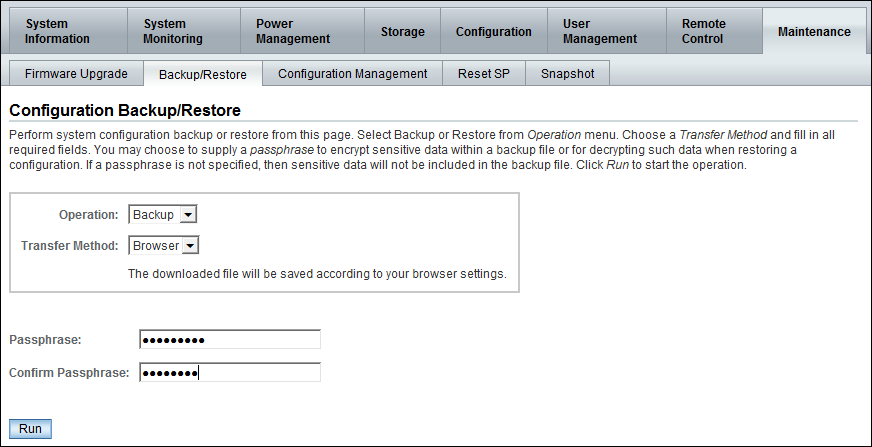

11.5.1.1 Backing Up the Oracle ILOM Configuration Settings

Figure 11-1 Oracle ILOM 3.1 Configuration Backup


11.5.2 Replacing a Failed InfiniBand Switch

Complete these steps to replace a Sun Network QDR InfiniBand Gateway switch or a Sun Datacenter InfiniBand Switch 36.

See Also:

- "In-Rack InfiniBand Switch-to-Server Cable Connections" for information about cabling

- Sun Network QDR InfiniBand Gateway Switch Installation Guide at http://docs.oracle.com/cd/E26699_01/html/E26706/gentextid-125.html#scrolltoc

- Sun Datacenter InfiniBand Switch 36 User's Guide at http://docs.oracle.com/cd/E26698_01/html/E26434/gentextid-119.html#scrolltoc

To replace a failed InfiniBand switch:

- Turn off both power supplies on the switch by removing the power plugs.

- Disconnect the cables from the switch. All InfiniBand cables have labels at both ends indicating their locations. If any cables do not have labels, then label them.

- Remove the switch from the rack.

- Install the new switch in the rack.

- Restore the switch settings using the backup file, as described in "Backing Up and Restoring Oracle ILOM Settings".

- Connect to the switch as ilom-admin and open the Fabric Management shell:

  -> show /SYS/Fabric_Mgmt

  The prompt changes from -> to FabMan@hostname->

- Disable the Subnet Manager:

  FabMan@bda1sw-02-> disablesm

- Connect the cables to the new switch, being careful to connect each cable to the correct port.

- Verify that there are no errors on any links in the fabric:

  FabMan@bda1sw-02-> ibdiagnet -c 1000 -r

- Enable the Subnet Manager:

  FabMan@bda1sw-02-> enablesm

Note:

If the replaced switch was the Sun Datacenter InfiniBand Switch 36 spine switch, then manually fail the master Subnet Manager back to the switch by disabling the Subnet Managers on the other switches until the spine switch becomes the master. Then reenable the Subnet Manager on all the other switches.

11.5.3 Verifying InfiniBand Network Operation

If any component in the InfiniBand network has required maintenance, including replacing an InfiniBand Host Channel Adapter (HCA) on a server, an InfiniBand switch, or an InfiniBand cable, or if operation of the InfiniBand network is suspected to be substandard, then verify that the InfiniBand network is operating properly. The following procedure describes how to verify network operation:

Note:

Use this procedure any time the InfiniBand network is performing below expectations.

To verify InfiniBand network operation:

- Enter the ibdiagnet command to verify InfiniBand network quality:

  # ibdiagnet -c 1000

  Investigate all errors reported by this command. It generates a small amount of network traffic and can run during a normal workload.

  See Also:

  Sun Network QDR InfiniBand Gateway Switch Command Reference at http://docs.oracle.com/cd/E26699_01/html/E26706/gentextid-28027.html#scrolltoc

- Report switch port error counters and port configuration information. The LinkDowned, RcvSwRelayErrors, XmtDiscards, and XmtWait errors are ignored by this command:

  # ibqueryerrors.pl -rR -s LinkDowned,RcvSwRelayErrors,XmtDiscards,XmtWait

  See Also:

  Linux man page for ibqueryerrors

- Check the status of the hardware:

  # bdacheckhw

  The following is an example of the output:

  SUCCESS: Correct system model : SUN FIRE X4270 M2 SERVER
  SUCCESS: Correct processor info : Intel(R) Xeon(R) CPU X5675 @ 3.07GHz
  SUCCESS: Correct number of types of CPU : 1
  SUCCESS: Correct number of CPU cores : 24
  SUCCESS: Sufficient GB of memory (>=48): 48
  SUCCESS: Correct GB of swap space : 24
  SUCCESS: Correct BIOS vendor : American Megatrends Inc.
  SUCCESS: Sufficient BIOS version (>=08080102): 08080102
  SUCCESS: Recent enough BIOS release date (>=05/23/2011) : 05/23/2011
  SUCCESS: Correct ILOM version : 3.0.16.10.a r68533
  SUCCESS: Correct number of fans : 6
  SUCCESS: Correct fan 0 status : ok
  SUCCESS: Correct fan 1 status : ok
  SUCCESS: Correct fan 2 status : ok
  SUCCESS: Correct fan 3 status : ok
  SUCCESS: Correct fan 4 status : ok
  SUCCESS: Correct fan 5 status : ok
  SUCCESS: Correct number of power supplies : 2
  INFO: Detected Santa Clara Factory, skipping power supply checks
  SUCCESS: Correct disk controller model : LSI MegaRAID SAS 9261-8i
  SUCCESS: Correct disk controller firmware version : 12.12.0-0048
  SUCCESS: Correct disk controller PCI address : 13:00.0
  SUCCESS: Correct disk controller PCI info : 0104: 1000:0079
  SUCCESS: Correct disk controller PCIe slot width : x8
  SUCCESS: Correct disk controller battery type : iBBU08
  SUCCESS: Correct disk controller battery state : Operational
  SUCCESS: Correct number of disks : 12
  SUCCESS: Correct disk 0 model : SEAGATE ST32000SSSUN2.0
  SUCCESS: Sufficient disk 0 firmware (>=61A): 61A
  SUCCESS: Correct disk 1 model : SEAGATE ST32000SSSUN2.0
  SUCCESS: Sufficient disk 1 firmware (>=61A): 61A
  ...
  SUCCESS: Correct disk 10 status : Online, Spun Up No alert
  SUCCESS: Correct disk 11 status : Online, Spun Up No alert
  SUCCESS: Correct Host Channel Adapter model : Mellanox Technologies MT26428 ConnectX VPI PCIe 2.0
  SUCCESS: Correct Host Channel Adapter firmware version : 2.9.1000
  SUCCESS: Correct Host Channel Adapter PCI address : 0d:00.0
  SUCCESS: Correct Host Channel Adapter PCI info : 0c06: 15b3:673c
  SUCCESS: Correct Host Channel Adapter PCIe slot width : x8
  SUCCESS: Big Data Appliance hardware validation checks succeeded

- Check the status of the software:

  # bdachecksw

  SUCCESS: Correct OS disk sda partition info : 1 ext3 raid 2 ext3 raid 3 linux-swap 4 ext3 primary
  SUCCESS: Correct OS disk sdb partition info : 1 ext3 raid 2 ext3 raid 3 linux-swap 4 ext3 primary
  SUCCESS: Correct data disk sdc partition info : 1 ext3 primary
  SUCCESS: Correct data disk sdd partition info : 1 ext3 primary
  SUCCESS: Correct data disk sde partition info : 1 ext3 primary
  SUCCESS: Correct data disk sdf partition info : 1 ext3 primary
  SUCCESS: Correct data disk sdg partition info : 1 ext3 primary
  SUCCESS: Correct data disk sdh partition info : 1 ext3 primary
  SUCCESS: Correct data disk sdi partition info : 1 ext3 primary
  SUCCESS: Correct data disk sdj partition info : 1 ext3 primary
  SUCCESS: Correct data disk sdk partition info : 1 ext3 primary
  SUCCESS: Correct data disk sdl partition info : 1 ext3 primary
  SUCCESS: Correct software RAID info : /dev/md2 level=raid1 num-devices=2 /dev/md0 level=raid1 num-devices=2
  SUCCESS: Correct mounted partitions : /dev/md0 /boot ext3 /dev/md2 / ext3 /dev/sda4 /u01 ext4 /dev/sdb4 /u02 ext4 /dev/sdc1 /u03 ext4 /dev/sdd1 /u04 ext4 /dev/sde1 /u05 ext4 /dev/sdf1 /u06 ext4 /dev/sdg1 /u07 ext4 /dev/sdh1 /u08 ext4 /dev/sdi1 /u09 ext4 /dev/sdj1 /u10 ext4 /dev/sdk1 /u11 ext4 /dev/sdl1 /u12 ext4
  SUCCESS: Correct swap partitions : /dev/sdb3 partition /dev/sda3 partition
  SUCCESS: Correct Linux kernel version : Linux 2.6.32-200.21.1.el5uek
  SUCCESS: Correct Java Virtual Machine version : HotSpot(TM) 64-Bit Server 1.6.0_29
  SUCCESS: Correct puppet version : 2.6.11
  SUCCESS: Correct MySQL version : 5.6
  SUCCESS: All required programs are accessible in $PATH
  SUCCESS: All required RPMs are installed and valid
  SUCCESS: Big Data Appliance software validation checks succeeded
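Because a healthy run of bdacheckhw or bdachecksw consists almost entirely of SUCCESS lines, the useful information is whatever is left over. The sketch below prints only those leftover lines. It is an assumption, not documented bdacheckhw behavior, that every line not starting with SUCCESS: or INFO: deserves attention. The function name bdacheck_problems is hypothetical. It strips terminal color codes and tolerates the host: prefix that dcli adds.

```shell
# bdacheck_problems: hypothetical helper, not part of the appliance software.
# Reads bdacheckhw or bdachecksw output on stdin (optionally prefixed with
# "host: " by dcli), removes terminal color sequences, and prints every
# non-blank line that does not report SUCCESS: or INFO:. It assumes that
# any other line needs investigation. Exit status is 1 if one is found.
bdacheck_problems() {
    awk '
        {
            line = $0
            gsub(/\033\[[0-9;]*m/, "", line)   # strip ANSI color codes
            if (line ~ /^[ \t]*$/) next
            if (line ~ /^([^ :]+: )?(SUCCESS|INFO):/) next
            print line
            bad = 1
        }
        END { exit bad }
    '
}
```

For example, run bdacheckhw | bdacheck_problems on one server, or dcli bdachecksw | bdacheck_problems across the rack.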

11.5.4 Understanding the Network Subnet Manager Master

The Subnet Manager manages all operational characteristics of the InfiniBand network, such as the ability to:

- Assign a local identifier to all ports connected to the network

- Calculate and program switch forwarding tables

- Monitor changes in the fabric

The InfiniBand network can have multiple Subnet Managers, but only one Subnet Manager is active at a time. The active Subnet Manager is the Master Subnet Manager. The other Subnet Managers are the Standby Subnet Managers. If a Master Subnet Manager is shut down or fails, then a Standby Subnet Manager automatically becomes the Master Subnet Manager.

Each Subnet Manager has a configurable priority. When multiple Subnet Managers are on the InfiniBand network, the Subnet Manager with the highest priority becomes the master Subnet Manager. On Oracle Big Data Appliance, the Subnet Managers on the leaf switches are configured as priority 5, and the Subnet Managers on the spine switches are configured as priority 8.

The following guidelines determine where the Subnet Managers run on Oracle Big Data Appliance:

- Run the Subnet Managers only on the switches in Oracle Big Data Appliance. Running a Subnet Manager on any other device is not supported.

- When the InfiniBand network consists of one, two, or three racks cabled together, all switches must run a Subnet Manager. The master Subnet Manager runs on a spine switch.

- When the InfiniBand network consists of four or more racks cabled together, only the spine switches run a Subnet Manager. The leaf switches must disable the Subnet Manager.
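The placement rules and the priority-based election described above can be stated compactly as code. The following sketch only illustrates the rules and does not query any switch. The names sm_should_run and sm_expected_master are hypothetical, and so are the switch names in the test data. Real InfiniBand fabrics break priority ties by GUID, which this sketch does not model. On a tie, it keeps the first switch listed.

```shell
# sm_should_run: hypothetical helper illustrating the placement rules.
# Usage: sm_should_run ROLE RACKS   (ROLE is "spine" or "leaf")
# Prints "enable" or "disable" for the Subnet Manager on that switch.
sm_should_run() {
    role=$1
    racks=$2
    if [ "$role" = spine ] || [ "$racks" -le 3 ]; then
        echo enable
    else
        echo disable
    fi
}

# sm_expected_master: reads "switch priority" pairs on stdin, one per line,
# for switches whose Subnet Manager is enabled, and prints the switch with
# the highest priority (8 for spine, 5 for leaf on this appliance).
# Ties keep the first switch listed; real fabrics break ties by GUID.
sm_expected_master() {
    awk 'NF >= 2 && (best == "" || $2 + 0 > max) { best = $1; max = $2 + 0 }
         END { if (best != "") print best }'
}
```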

See Also:

- Sun Network QDR InfiniBand Gateway Switch library at

- Sun Datacenter InfiniBand Switch 36 library at

11.6 Changing the Number of Connections to a Gateway Switch

If you change the number of 10 GbE connections to a Sun Network QDR InfiniBand Gateway switch, then you must run the bdaredoclientnet utility. See "bdaredoclientnet."

To re-create the VNICs in a rack:

- Verify that /opt/oracle/bda/network.json exists on all servers and correctly describes the custom network settings. This command identifies files that are missing or have different date stamps:

  dcli ls -l /opt/oracle/bda/network.json

- Connect to node01 (bottom of rack) using the administrative network. The bdaredoclientnet utility shuts down the client network, so you cannot use the client network to connect during this procedure.

- Remove passwordless SSH:

  /opt/oracle/bda/bin/remove-root-ssh

  See "Setting Up Passwordless SSH" for more information about this command.

- Change directories:

  cd /opt/oracle/bda/network

- Run the utility:

  bdaredoclientnet

  The output is similar to that shown in Example 7-2.

- Restore passwordless SSH (optional):

  /opt/oracle/bda/bin/setup-root-ssh
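The ls -l check in the first step compares only date stamps. As an alternative, you can compare file contents across the servers. The sketch below assumes that you run dcli md5sum /opt/oracle/bda/network.json 2>&1, so that each server contributes a host: checksum path line or an error line. The function name check_same_file is hypothetical. It cannot detect a server that produces no output at all, so also confirm that every server appears.

```shell
# check_same_file: hypothetical helper, not part of the appliance software.
# Reads dcli output of the form "host: checksum  path" on stdin, such as
#   dcli md5sum /opt/oracle/bda/network.json 2>&1
# and prints every host whose checksum differs from the first host's, or
# whose line has no 32-character hexadecimal checksum (for example, because
# the file is missing). Exits 1 if any host is reported.
check_same_file() {
    awk '
        {
            host = $1; sub(/:$/, "", host)
            sum = $2
            if (length(sum) != 32 || sum !~ /^[0-9a-f]+$/) {
                print host ": no checksum (" $0 ")"
                bad = 1
                next
            }
            if (ref == "") { ref = sum; refhost = host; next }
            if (sum != ref) { print host ": differs from " refhost; bad = 1 }
        }
        END { exit bad }
    '
}
```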

11.7 Changing the NTP Servers

The configuration information for Network Time Protocol (NTP) servers can be changed after the initial setup. The following procedure describes how to change the NTP configuration information for InfiniBand switches, Cisco switches, and Sun servers. Oracle recommends changing each server individually.

To update the Oracle Big Data Appliance servers:

To update the InfiniBand switches:

- Log in to the switch as the ilom-admin user.

- Follow the instructions in "Setting the Time Zone and Clock on an InfiniBand Switch".

To update the Cisco Ethernet switch:

- Use telnet to connect to the Cisco Ethernet switch.

- Delete the current setting:

  # configure terminal
  Enter configuration commands, one per line. End with CNTL/Z.
  (config)# no ntp server current_IPaddress

- Enter the new IP address, and then return to privileged EXEC mode:

  (config)# ntp server new_IPaddress
  (config)# end

- Save the current configuration:

  # copy running-config startup-config

- Exit from the session:

  # exit

See Also:

Restart Oracle Big Data Appliance after changing the servers and switches.

11.8 Monitoring the PDU Current

You can monitor the PDU current directly by configuring threshold settings on the PDUs. The configurable thresholds for each metering unit module and phase are Info Low, Pre Warning, and Alarm.

See Also:

Sun Rack II Power Distribution Units User's Guide for information about configuring and monitoring PDUs at

Table 11-1 lists the threshold values for the Oracle Big Data Appliance rack using a single-phase, low-voltage PDU.

Table 11-1 Threshold Values for Single-Phase, Low-Voltage PDU

| PDU | Module/Phase | Info Low Threshold | Pre Warning Threshold | Alarm Threshold |
|---|---|---|---|---|
| A | Module 1, phase 1 | 0 | 18 | 23 |
| A | Module 1, phase 2 | 0 | 22 | 24 |
| A | Module 1, phase 3 | 0 | 18 | 23 |
| B | Module 1, phase 1 | 0 | 18 | 23 |
| B | Module 1, phase 2 | 0 | 22 | 24 |
| B | Module 1, phase 3 | 0 | 18 | 23 |

Table 11-2 lists the threshold values for the Oracle Big Data Appliance rack using a three-phase, low-voltage PDU.

Table 11-2 Threshold Values for Three-Phase, Low-Voltage PDU

| PDU | Module/Phase | Info Low Threshold | Pre Warning Threshold | Alarm Threshold |
|---|---|---|---|---|
| A and B | Module 1, phase 1 | 0 | 32 | 40 |
| A and B | Module 1, phase 2 | 0 | 34 | 43 |
| A and B | Module 1, phase 3 | 0 | 33 | 42 |

Table 11-3 lists the threshold values for the Oracle Big Data Appliance rack using a single-phase, high-voltage PDU.

Table 11-3 Threshold Values for Single-Phase, High-Voltage PDU

| PDU | Module/Phase | Info Low Threshold | Pre Warning Threshold | Alarm Threshold |
|---|---|---|---|---|
| A | Module 1, phase 1 | 0 | 16 | 20 |
| A | Module 1, phase 2 | 0 | 20 | 21 |
| A | Module 1, phase 3 | 0 | 16 | 20 |
| B | Module 1, phase 1 | 0 | 16 | 20 |
| B | Module 1, phase 2 | 0 | 20 | 21 |
| B | Module 1, phase 3 | 0 | 16 | 20 |

Table 11-4 lists the threshold values for the Oracle Big Data Appliance rack using a three-phase, high-voltage PDU.

Table 11-4 Threshold Values for Three-Phase, High-Voltage PDU

| PDU | Module/Phase | Info Low Threshold | Pre Warning Threshold | Alarm Threshold |
|---|---|---|---|---|
| A and B | Module 1, phase 1 | 0 | 18 | 21 |
| A and B | Module 1, phase 2 | 0 | 18 | 21 |
| A and B | Module 1, phase 3 | 0 | 17 | 21 |
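To make the three thresholds concrete, the following sketch classifies a single metered reading against one row of Tables 11-1 through 11-4. It is an illustration, not PDU firmware behavior. The function name pdu_level is hypothetical. It assumes that the reading and the thresholds use the same unit (presumably amperes) and that a threshold counts as reached when the reading is greater than or equal to it. The PDU's exact comparison rules may differ.

```shell
# pdu_level: hypothetical helper, not part of the PDU firmware or software.
# Usage: pdu_level CURRENT INFO_LOW PRE_WARNING ALARM
# Prints "alarm", "pre-warning", "info-low", or "normal" for one reading,
# assuming a threshold is reached when CURRENT >= threshold (and Info Low
# when CURRENT < INFO_LOW).
pdu_level() {
    awk -v c="$1" -v lo="$2" -v pre="$3" -v alarm="$4" 'BEGIN {
        c += 0; lo += 0; pre += 0; alarm += 0   # force numeric comparison
        if (c >= alarm)      print "alarm"
        else if (c >= pre)   print "pre-warning"
        else if (c < lo)     print "info-low"
        else                 print "normal"
    }'
}
```

For example, with the Table 11-1 values for module 1, phase 2 (0, 22, 24), pdu_level 22.3 0 22 24 prints pre-warning.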