On the NAS Administrator toolbar, click the Application button to open the Application window.

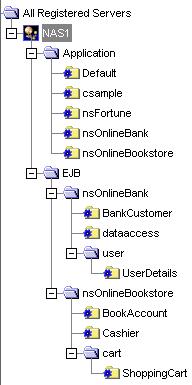

In the left pane of the Application window, select the server where you want to enable sticky load balancing.

Open the application group that contains the application component or components for which you want to enable sticky load balancing.

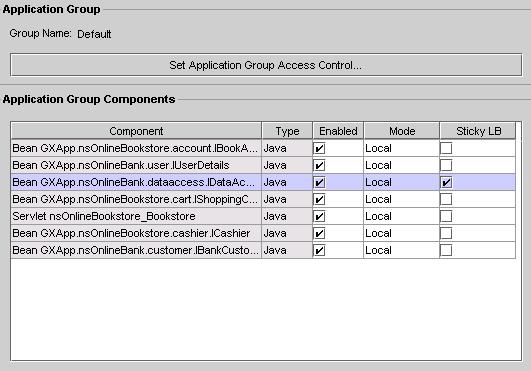

In the right pane of the Application window, select the application component for which you want to enable sticky load balancing.

In the Sticky LB column, click the checkbox for the selected application component.

Repeat steps 4 and 5 for each application component where you want to enable sticky load balancing.

Click Toggle Sticky LB to select or deselect all Sticky LB checkboxes.