This section describes the post-installation steps required for the OFSDF Application Pack.

Topics:

· OFSAA Infrastructure Patch Installation

· Verify the Log File Information

· Stop the Infrastructure Services

· Create and Deploy the EAR/WAR Files

· EAR/WAR File - Build Once and Deploy Across Multiple OFSAA Instances

· Start the Infrastructure Services

· Access the OFSAA Application

· Configure the excludeURLList.cfg File

· Configure the Big Data Processing

· Create the Application Users

· Map the Application User(s) to User Group

· Change the ICC Batch Ownership

· Add TNS entries in the TNSNAMES.ORA File

· Configure Transparent Data Encryption (TDE) and Data Redaction in OFSAA

· Implement Data Protection in OFSAA

· Configure and Use the External Engine POI Tables

NOTE

See the Post-Installation section in the Oracle Financial Services Advanced Analytical Applications Infrastructure Installation Guide Release 8.1.2.0.0 to complete these procedures.

For additional configuration information, see the Additional Configuration section.

Oracle strongly recommends installing the latest available patch set to be up-to-date with the various releases of the OFSAA product.

After the installation of OFSDF Application Pack 8.1.2.0.0:

· Apply the OFSAAI Mandatory Patch 33663417.

ATTENTION:

For the Mandatory Patch ID 33663417:

On the 10th of December 2021, Oracle released the Security Alert CVE-2021-44228 in response to the disclosure of a new vulnerability affecting Apache Log4J prior to version 2.15. The application of the 33663417 Mandatory Patch fixes the issue.

For details, see the My Oracle Support Doc ID 2827801.1.

Whenever a new, upgrade, or incremental installation release is applied, you must reapply the OFSAAI Mandatory Patch 33663417 for Log4J.

For patch download information, see the Download the Mandatory Patches section in Pre-installation (for a new installation) or in Upgrade (for an upgrade installation).

See My Oracle Support (MOS) for more information about the latest release.

This section provides information on the script to be executed after the FSDF installation.

1. Connect to the Atomic Schema.

2. Execute the DIM_MR_TIME_VERTEX_FIX_812.sql script.
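The two steps above can be scripted with SQL*Plus. The following is a minimal sketch, not the documented procedure: `ATOMIC_CONN` is a hypothetical environment variable holding the Atomic Schema connect string, and the script path is passed as an argument because this guide does not state where the script is located.

```shell
#!/usr/bin/env bash
# Sketch: run a SQL script against the Atomic Schema with SQL*Plus.
# ATOMIC_CONN (hypothetical) holds a connect string such as user/password@tns_alias.

run_atomic_script() {
  local script="$1"
  if [ ! -f "$script" ]; then
    echo "Script not found: $script" >&2
    return 1
  fi
  if [ -z "${ATOMIC_CONN:-}" ]; then
    echo "ATOMIC_CONN is not set" >&2
    return 2
  fi
  if ! command -v sqlplus >/dev/null 2>&1; then
    echo "sqlplus is not on PATH" >&2
    return 3
  fi
  # -S suppresses banners, -L attempts the logon only once;
  # "exit" on stdin ends the session after the script finishes.
  echo "exit" | sqlplus -S -L "$ATOMIC_CONN" "@$script"
}

# Example (the path is illustrative):
# run_atomic_script ./DIM_MR_TIME_VERTEX_FIX_812.sql
```

A connect string passed on the command line can be visible to other users on the host through the process list, so a wallet or an interactive logon may be preferable in shared environments.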

See the following log files for more information:

· Pack_Install.log file in the OFS_BFND_PACK/logs/ directory.

· OFS_FSDF_installation.log file and the OFS_FSDF_installation.err file in the OFS_BFND_PACK/OFS_FSDF/logs directory.

· Infrastructure installation log files in the OFS_BFND_PACK/OFS_AAI/logs/ directory.

· OFSAAInfrastucture_Install.log file in the $FIC_HOME directory.

ATTENTION:

You can ignore the "ORA-00001: unique constraint" error, and the "Object already exists" and "Table has a primary key" warnings, for the OFSA_CATALOG_OF_LEAVES table in the log file.

In case of any other errors, contact My Oracle Support (MOS).
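Reviewing several log files by hand is error-prone, so a small filter can help. The sketch below is an approximation: it lists lines containing `ORA-` codes or the word `ERROR` and drops every `ORA-00001` line, which is broader than the exception described above (that exception applies only to the OFSA_CATALOG_OF_LEAVES table). The function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: list error lines in installation logs, skipping ORA-00001 lines.
# Caveat: ORA-00001 is filtered for all objects, not only OFSA_CATALOG_OF_LEAVES,
# so review those lines separately if in doubt.

scan_install_logs() {
  # Prints "file:line:text" for each remaining ORA- or ERROR line.
  grep -n -H -E 'ORA-[0-9]+|ERROR' "$@" 2>/dev/null \
    | grep -v 'ORA-00001' \
    || true
}

# Example, using the log locations listed above:
# scan_install_logs OFS_BFND_PACK/logs/Pack_Install.log \
#   OFS_BFND_PACK/OFS_FSDF/logs/OFS_FSDF_installation.log \
#   OFS_BFND_PACK/OFS_FSDF/logs/OFS_FSDF_installation.err
```

An empty result means only that no line matched the patterns; it does not replace a manual review of the logs.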

See the Stop the Infrastructure Services section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

See the Create and Deploy the EAR/WAR Files section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

See the EAR/WAR File - Build Once and Deploy Across Multiple OFSAA Instances section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

See the Start the Infrastructure Services section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

See the Access the OFSAA Application section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

See the OFSAA Landing Page section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

See the Configure the excludeURLList.cfg File section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

This section is not applicable if you enabled Financial Services Big Data Processing during installation with the OFSDF 8.1.2.0.0 full installer. Follow the instructions in this section if you intend to enable Big Data Processing.

Topics:

· Copy the Jar Files to the OFSAA Installation Directory

· Copy the KEYTAB and KRB5 Files in OFSAAI

· Configure the Apache Livy Interface

3. Download the supported Cloudera HIVE JDBC Connectors and copy the following JAR files to the $FIC_HOME/ext/lib/ and $FIC_WEB_HOME/webroot/WEB-INF/lib/ directories. For the latest supported versions, see OFSAA Technology Matrix v8.1.2.0.0.

§ hive_service.jar

§ hive_metastore.jar

§ HiveJDBC4.jar

§ zookeeper-3.4.6.jar

§ TCLIServiceClient.jar

4. Copy the following JAR files from the <Cloudera Installation Directory>/jars/ directory, based on the CDH version, to the $FIC_HOME/ext/lib/ and $FIC_WEB_HOME/webroot/WEB-INF/lib/ directories.

§ CDH v5.13.0:

— commons-collections-3.2.2.jar

— commons-configuration-1.7.jar

— commons-io-2.4.jar

— commons-logging-1.2.jar

— hadoop-auth-2.6.0-cdh5.13.0.jar

— hadoop-common-2.6.0-cdh5.13.0.jar

— hadoop-core-2.6.0-mr1-cdh5.13.0.jar

— hive-exec-1.1.0-cdh5.13.0.jar

— httpclient-4.3.jar

— httpcore-4.3.jar

— libfb303-0.9.3.jar

— libthrift-0.9.3.jar

— slf4j-api-1.7.5.jar

— slf4j-log4j12-1.7.5.jar

§ CDH v6.3.0:

— commons-collections-3.2.2.jar

— commons-configuration2-2.1.1.jar

— commons-io-2.6.jar

— commons-logging-1.2.jar

— hadoop-auth-3.0.0-cdh6.3.0.jar

— hadoop-common-3.0.0-cdh6.3.0.jar

— hive-exec-2.1.1-cdh6.3.0.jar

— httpclient-4.5.3.jar

— httpcore-4.4.6.jar

— libfb303-0.9.3.jar

— libthrift-0.9.3.jar

— slf4j-api-1.7.25.jar

— slf4j-log4j12-1.7.25.jar
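Because each JAR must land in two directories, a helper that copies a list and reports anything missing from the source can reduce mistakes. This is a sketch under stated assumptions: `copy_jars` is an illustrative name, and `CLOUDERA_HOME` in the example is a hypothetical variable standing in for <Cloudera Installation Directory>.

```shell
#!/usr/bin/env bash
# Sketch: copy a list of JARs from a source directory into two target
# directories and report any JAR that is missing from the source.

copy_jars() {
  local src="$1" dest_app="$2" dest_web="$3"
  shift 3
  local jar missing=0
  for jar in "$@"; do
    if [ -f "$src/$jar" ]; then
      cp "$src/$jar" "$dest_app/" && cp "$src/$jar" "$dest_web/" || return 1
    else
      echo "Missing in $src: $jar" >&2
      missing=$((missing + 1))
    fi
  done
  # Succeed only when every requested JAR was found.
  [ "$missing" -eq 0 ]
}

# Example for two of the CDH v6.3.0 JARs listed above:
# copy_jars "$CLOUDERA_HOME/jars" "$FIC_HOME/ext/lib" \
#   "$FIC_WEB_HOME/webroot/WEB-INF/lib" \
#   hadoop-auth-3.0.0-cdh6.3.0.jar hadoop-common-3.0.0-cdh6.3.0.jar
```

The function returns a non-zero status if any JAR is absent, so it can gate the later EAR/WAR build in a wrapper script.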

A Keytab is a file containing pairs of Kerberos principals and encrypted keys (these are derived from the Kerberos password). The krb5.conf file contains Kerberos configuration information, including the locations of KDCs and admin servers for the Kerberos realms of interest, defaults for the current realm and for Kerberos applications, and mappings of hostnames onto Kerberos realms.

If the Authentication is configured as KERBEROS_WITH_KEYTAB for the Hive database, then you must use the Keytab file to log in to Kerberos. The Keytab and Kerberos files must be copied to $FIC_HOME/conf and $FIC_WEB_HOME/webroot/conf of the OFSAAI installation directory.

Generate the application EAR/WAR file and redeploy the application onto your configured web application server.

Restart the Web application server and the OFSAAI Application Server. For more information, see the Start the Infrastructure Services section.

Apache Livy is an Interface service that enables easy interaction with a Spark cluster over a REST interface.

Topics:

· Set metastore Path for Spark

Perform the following configuration for the Big Data mode of installation.

To configure Spark, you must configure crossJoin.

NOTE

This section is applicable only during the Stage and Results on Hive installation.

To configure crossJoin, follow these steps:

1. Open the Cloudera Manager application.

2. Navigate to SPARK2, select Configuration, and search for the spark-defaults.conf file.

For example:

/etc/spark2/conf.cloudera.spark2_on_yarn/spark-defaults.conf

3. Set the parameter spark.sql.crossJoin.enabled to true.

4. Set the parameter spark.executor.memory to 10g.

NOTE

When the Spark memory settings are set to low values, T2T execution fails. To resolve this, adjust the value of the spark.executor.memory variable.
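After the values are saved in Cloudera Manager, the deployed spark-defaults.conf file can be checked from the command line. The sketch below assumes the usual `key value` (or `key=value`) line format of that file; the function names are illustrative, and values that contain spaces or `=` are not handled.

```shell
#!/usr/bin/env bash
# Sketch: read a setting from a spark-defaults.conf style file and compare it
# with an expected value. Only simple values without spaces or "=" are handled.

spark_conf_get() {
  awk -F'[ \t=]+' -v k="$2" '
    { sub(/^[ \t]+/, "") }   # drop leading whitespace (fields are re-split)
    $1 == k { v = $2 }       # remember the last value seen for the key
    END { if (v != "") print v }
  ' "$1"
}

check_spark_setting() {
  local file="$1" key="$2" expected="$3" actual
  actual="$(spark_conf_get "$file" "$key")"
  if [ "$actual" = "$expected" ]; then
    echo "OK   $key=$actual"
  else
    echo "DIFF $key=${actual:-<unset>} (expected $expected)"
    return 1
  fi
}

# Example, using the path shown above:
# conf=/etc/spark2/conf.cloudera.spark2_on_yarn/spark-defaults.conf
# check_spark_setting "$conf" spark.sql.crossJoin.enabled true
# check_spark_setting "$conf" spark.executor.memory 10g
```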

To set the metastore path for Spark, follow these steps:

NOTE

This section is applicable during both the installation processes in Big Data (Stage and Results on Hive, and Stage on Hive and Results on RDBMS).

1. Copy the hive-site.xml file from the hive conf directory to the SPARK2 conf directory.

For example:

cp /etc/hive/conf.cloudera.hive/hive-site.xml /etc/spark2/conf.cloudera.spark2_on_yarn

2. Edit the hive-site.xml file to add the following entries in the SPARK2 conf directory:

| On hive conf | In SPARK2 conf |
|---|---|
| <property> <name>hive.metastore.warehouse.dir</name> <value>/user/hive/warehouse</value> </property> | <property> <name>spark.sql.warehouse.dir</name> <value>/user/hive/warehouse</value> </property> |

NOTE

When Cloudera is configured for a cluster, then all the nodes must adhere to this configuration.
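Since every node must carry the same entry, a quick text check of hive-site.xml in the SPARK2 conf directory can be run on each node. This sketch uses plain text matching rather than an XML parser, so it assumes the <name> and <value> elements are on the same line or on consecutive lines; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: check that a Hadoop-style XML config file contains a property with
# the expected value. Text matching only: <value> must be on the same line as
# <name> or on the line immediately after it.

has_hadoop_property() {
  local file="$1" name="$2" value="$3"
  grep -A1 -F "<name>${name}</name>" "$file" 2>/dev/null \
    | grep -q -F "<value>${value}</value>"
}

# Example, using the SPARK2 conf directory shown above:
# has_hadoop_property /etc/spark2/conf.cloudera.spark2_on_yarn/hive-site.xml \
#   spark.sql.warehouse.dir /user/hive/warehouse && echo "entry present"
```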

Configure DMT to provide Apache Livy Interface details.

NOTE

This section is applicable only during the Stage and Results on Hive installation.

Ensure that you have the appropriate User Role to access this screen. To add a New Cluster, add the appropriate role to the user:

NOTE

To add a new cluster and then register it, the user must have the DMTADMIN (Data Management Admin) role. Therefore, assign the DMTADMIN role to the user, and ensure that the DMTADMIN role is mapped to the FSDFADMIN user group.

1. From the OFSDF Home, select Data Management Framework, select Data Management Tools, select DMT Configuration, select Register Cluster, and click Add Cluster.

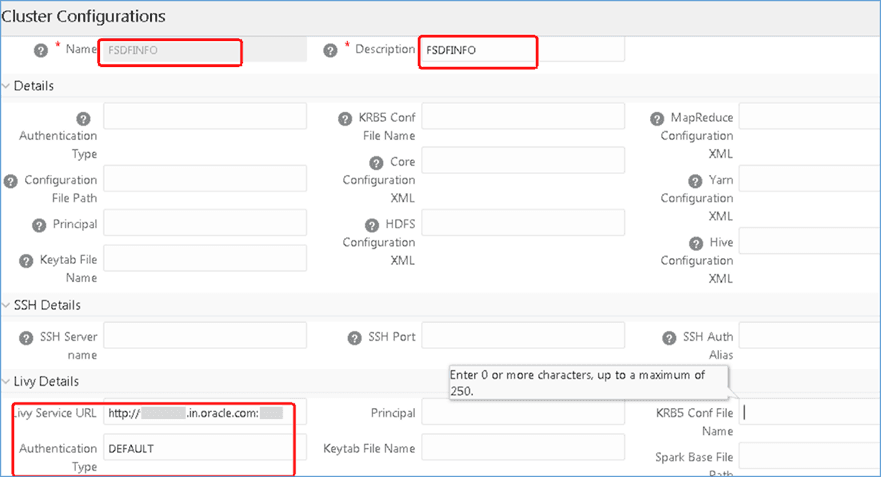

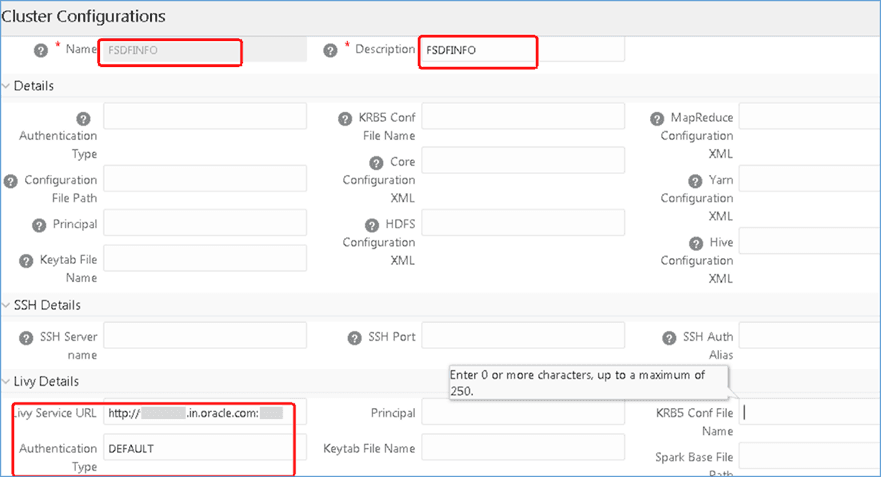

2. Enter the details as shown in the image. In the Livy Service URL field, enter the Livy Server URL of your environment.

Figure 35: Cluster Configurations

3. To populate data in the DIM_DATES table, navigate to the Batch Execution screen. Perform these steps:

a. Run the batch FSDFINFO_POP_DATES_DIM_HIVE.

SCD batches (FSDFINFO_DATA_FOUNDATION_SCD, FSDFINFO_DIM_ACCOUNT_SCD) are sequenced with wait mode Yes enabled.

NOTE

FSDFINFO_DATA_FOUNDATION_SCD and FSDFINFO_DIM_ACCOUNT_SCD batches are made sequential.

b. Click Execute Batch.

4. Monitor the status of the batch in the Batch Monitor screen of OFSAAI.

5. Execute T2T Batches in the Process Modelling Framework.

NOTE

In the Process Modelling Framework, the FSDF_SOURCED_RUN Runs are made sequential to each other.
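Before running batches that depend on the registered cluster, it can be useful to confirm that the Livy Service URL entered during cluster registration is reachable from the OFSAA host. The sketch below probes Livy's REST endpoint for listing sessions (`GET /sessions`). The host name in the example is a placeholder, and a Kerberos-secured Livy typically needs additional authentication options that are not shown here.

```shell
#!/usr/bin/env bash
# Sketch: build the Livy "list sessions" endpoint from a base URL and probe it.

livy_sessions_url() {
  # Strip one trailing slash (if any) and append /sessions.
  local base="${1%/}"
  echo "${base}/sessions"
}

check_livy() {
  # Returns 0 unless the connection fails or the server answers with an
  # HTTP error status (curl -f treats 4xx/5xx responses as failures).
  curl -sS -f -o /dev/null --max-time 10 "$(livy_sessions_url "$1")"
}

# Example (placeholder host; 8998 is Livy's default port):
# check_livy "http://livy-host.example.com:8998" && echo "Livy reachable"
```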

Create the application users in the OFSAA setup before use.

For details, see the User Administrator section in the Oracle Financial Services Analytical Applications Infrastructure User Guide Release 8.1.2.0.0.

The User UserGroup Map lets you map users to a specific user group, which in turn is mapped to a specific Information Domain and role. Every user group mapped to the Information Domain must be authorized; otherwise, it cannot be mapped to users.

The User UserGroup Map screen displays details such as User ID, Name, and the corresponding Mapped Groups. You can view and modify the existing mappings within the User UserGroup Maintenance screen.

Starting with the OFSAA 8.1 release, with the installation of the OFSDF Application Pack, preconfigured Application user groups are seeded. These user groups are unique to every OFSAA Application Pack and have application roles pre-configured.

You can access the User UserGroup Map by expanding the Identity Management section within the tree structure of the LHS menu.

| Name | Description |
|---|---|
| FSDF Admin | A user mapped to this group will have access to all the menu items for the entire FSDF Application. The exclusive menus which are available only to this group of users are Application Preference and Global Preference under Settings Menu. |
| FSDF Data Modeler | A user mapped to this group will have access only to Data Model Management and Metadata Browser Menus. |
| FSDF Analyst | A user mapped to this group will have access to Data Management Framework, Dimension Management, and Metadata Browser Menus. |
| FSDF Operator | A user mapped to this group will have access to Rule Run Framework and Operations Menus. |

All the seeded Batches in the OFSDF application are automatically assigned to the SYSADMN user during installation. To see the batches in the Batch Maintenance menu, you must execute the following query in the Config Schema of the database:

begin

AAI_OBJECT_ADMIN.TRANSFER_BATCH_OWNERSHIP ('fromUser','toUser','infodom');

end;

OR

begin

AAI_OBJECT_ADMIN.TRANSFER_BATCH_OWNERSHIP ('fromUser','toUser');

end;

Where:

· fromUser indicates the user who currently owns the batch

· toUser indicates the user to whom the ownership must be transferred

· infodom is an optional parameter. If specified, the ownership of the batches of that Infodom will be changed.

Example:

begin

AAI_OBJECT_ADMIN.TRANSFER_BATCH_OWNERSHIP ('SYSADMN','FSDFOP','OFSBFNDINFO');

end;
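To avoid quoting mistakes when typing the block by hand, the PL/SQL text can be generated from shell arguments and piped to SQL*Plus. This is a sketch only: `transfer_batch_sql` is an illustrative name, `CONFIG_CONN` in the example is a hypothetical variable holding the Config Schema connect string, and the arguments are inserted verbatim, so they must not contain quote characters.

```shell
#!/usr/bin/env bash
# Sketch: print the PL/SQL block that transfers batch ownership.
# The trailing "/" line tells SQL*Plus to execute the block.

transfer_batch_sql() {
  local from_user="$1" to_user="$2" infodom="${3:-}"
  if [ -n "$infodom" ]; then
    printf "begin\n  AAI_OBJECT_ADMIN.TRANSFER_BATCH_OWNERSHIP ('%s','%s','%s');\nend;\n/\n" \
      "$from_user" "$to_user" "$infodom"
  else
    printf "begin\n  AAI_OBJECT_ADMIN.TRANSFER_BATCH_OWNERSHIP ('%s','%s');\nend;\n/\n" \
      "$from_user" "$to_user"
  fi
}

# Example: preview the block, then run it in the Config Schema.
# transfer_batch_sql SYSADMN FSDFOP OFSBFNDINFO
# transfer_batch_sql SYSADMN FSDFOP OFSBFNDINFO | sqlplus -S -L "$CONFIG_CONN"
```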

See the Add TNS entries in the TNSNAMES.ORA File section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

See the Configure Transparent Data Encryption (TDE) and Data Redaction in OFSAA section in the OFSAAI Release 8.1.2.0.0 Installation and Configuration Guide for details.

See the Oracle Financial Services Data Foundation Application Pack Data Protection Implementation Guide Release 8.1.x for details.

In the Integration Process, if more than one OFSAA Application exists in the same environment, then the output of one OFSAA application can be consumed by another OFSAA application. For example, if the OFSDF Application Pack, OFS Capital Adequacy Application Pack (OFS CAP or BASEL), and OFS Liquidity Risk Solution Application Pack (OFS LRS) are present in one environment, then OFS LRS can consume the outputs computed by OFS CAP.

If OFS Capital Adequacy Application Pack (OFS CAP or BASEL) or OFS Liquidity Risk Solution Application Pack (OFS LRS) is already installed in the same environment as the OFSDF Application Pack, then the integration process is implicitly available.

If OFS Capital Adequacy Application Pack (OFS CAP or BASEL) or OFS Liquidity Risk Solution Application Pack (OFS LRS) is not installed in the same environment as the OFSDF Application Pack, then execute the Integration Utility to enable the Integration process provided in the OFSDF Application Pack v8.1.2.0.0 release.

To enable the Integration process, you must execute the Integration Utility. Follow these steps:

1. Navigate to the $FIC_HOME/utility/ directory.

2. Assign the 755 permission to the IntegUtil directory using the following command:

chmod -R 755 IntegUtil

3. Execute the enableIntT2T.sh file, using the following command:

./enableIntT2T.sh

4. Verify the log file EnableIntegT2TStatus_<timestamp>.log in the $FIC_HOME/utility/IntegUtil/logs/ directory.

The Integration Utility is executed successfully.

NOTE

The Integration process is enabled only after the respective application pack is installed successfully.
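The steps above can be combined into one wrapper that also prints the newest status log for review. The sketch assumes that enableIntT2T.sh is located in $FIC_HOME/utility/IntegUtil, which this guide implies but does not state explicitly; the function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: run the Integration Utility and locate its most recent status log.

latest_integ_log() {
  # ls -t lists newest first; a missing directory or no match prints nothing.
  ls -t "$1"/EnableIntegT2TStatus_*.log 2>/dev/null | head -n 1
}

run_integration_utility() {
  # Assumption: enableIntT2T.sh sits inside $FIC_HOME/utility/IntegUtil.
  (
    cd "$FIC_HOME/utility" || exit 1
    chmod -R 755 IntegUtil || exit 1
    cd IntegUtil || exit 1
    ./enableIntT2T.sh
  ) || return 1
  latest_integ_log "$FIC_HOME/utility/IntegUtil/logs"
}

# Example:
# log="$(run_integration_utility)" && less "$log"
```

The subshell keeps the caller's working directory unchanged, and the returned path is only a pointer to the log; its contents still need to be reviewed as described in step 4.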

For additional configuration information, see the Additional Configuration section.

This section provides information about configuring and using the External Engine POI (Processing Output Integration) Tables.

NOTE

Use this section only to source the External Engine Data from the OFSAA Applications to OFSDF.

The pre-requisites to configure and use the External Engine POI Tables are as follows:

1. The OFSDF Data Model Release 8.1.2.0.0 must be uploaded.

2. The POI-related OFSDF Data Model Release 8.1.2.0.1 patch (Patch ID 33549470), which corresponds to the External Engine functionality, must be uploaded. Download and extract the Data Model patch from My Oracle Support (MOS).

As a result, the POI Stage Tables become available for usage.

To begin using the External Engine functionality, follow these steps:

1. Navigate to the path $FIC_HOME/ExternalEngine_Artifacts. The ExternalEngine_Artifacts folder contains the T2T Metadata design sheets, and the Metadata and PMF Scripts required for the EXTERNAL_ENGINE_RUN Process.

2. In the files in the SQLScripts folder, replace the placeholders (for example, ##INFODOM##). Then execute the following Metadata Scripts in the Config Schema:

§ DM_T2T_FCT_IFRS_PLACED_COLLATERAL.sql

§ DM_T2T_FCT_LLFP_CRE_FACILITY_SUMMARY.sql

§ DM_T2T_FCT_LRM_MITIGANTS_SUMMARY.sql

§ DM_T2T_FCT_LRM_PLACED_COLLATERAL.sql

§ DM_T2T_FCT_COHORT_LOAN_LOSS_DETAILS.sql

§ DM_T2T_FCT_IFRS_CREDIT_LINE_DETAILS.sql

§ DM_T2T_FCT_IFRS_MITIGANTS_SUMMARY.sql

3. To create a PMF Run for the External Engine T2Ts, replace the placeholders (for example, ##INFODOM##) in the PMF Script file. Then execute the following Script in the Config Schema:

§ pmf_EXTERNAL_ENGINE_LOAD_RUN.sql

4. The External Engine PMF Process (Process Name: Financial Services Data Foundation External Engine Run; Process ID: EXTERNAL_ENGINE_RUN) is now available in the Process Modeling Framework Module of the OFSDF Application UI. In the Process Modeller Page, execute this process and verify that data is populated in the following Fact Tables:

§ FCT_IFRS_CREDIT_LINE_DETAILS

§ FCT_IFRS_MITIGANTS_SUMMARY

§ FCT_IFRS_PLACED_COLLATERAL

§ FCT_LLFP_CRE_FACILITY_SUMMARY

§ FCT_COHORT_LOAN_LOSS_DETAILS

§ FCT_LRM_MITIGANTS_SUMMARY

§ FCT_LRM_PLACED_COLLATERAL
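The placeholder replacement in steps 2 and 3 can be done with sed across all script files at once. The sketch below is illustrative: the function names are hypothetical, the SQLScripts folder is assumed to sit under $FIC_HOME/ExternalEngine_Artifacts, and only ##INFODOM## is named in this guide, so the second function lists any other ##NAME## tokens that still need a value.

```shell
#!/usr/bin/env bash
# Sketch: replace a ##PLACEHOLDER## token in every .sql file of a directory,
# then list any tokens that remain.

replace_placeholder() {
  local dir="$1" token="$2" value="$3" file tmp
  for file in "$dir"/*.sql; do
    [ -f "$file" ] || continue
    tmp="$(mktemp)" || return 1
    # "|" is the sed delimiter, so token and value must not contain "|", "&" or "\".
    if sed "s|${token}|${value}|g" "$file" > "$tmp"; then
      cat "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
    fi
    rm -f "$tmp"
  done
}

list_remaining_placeholders() {
  # Prints each distinct ##NAME## token still present in the .sql files.
  grep -o -h -E '##[A-Z0-9_]+##' "$1"/*.sql 2>/dev/null | sort -u
}

# Example (OFSBFNDINFO is used here only as a sample Infodom name):
# scripts="$FIC_HOME/ExternalEngine_Artifacts/SQLScripts"
# cp -r "$scripts" "$scripts.bak"
# replace_placeholder "$scripts" '##INFODOM##' 'OFSBFNDINFO'
# list_remaining_placeholders "$scripts"
```

Keeping a backup copy of the folder, as in the example, allows the scripts to be regenerated for a different Information Domain.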