Conversation Insights for Skills

The conversation reports for skills, which track voice and text conversations by time period and by channel, enable you to identify execution paths, determine the accuracy of your intent resolutions, and access entire conversation transcripts.

To access the session-level metrics, select Conversation from the Metric filter in the Overview report.

Voice Insights are tracked for skills routed to chat clients that have been configured for voice recognition and are running on Version 20.8 or higher of the Oracle Web, iOS, or Android SDKs.

Report Types

- Overview – Use this dashboard to quickly find out the total number of voice and text conversations by channel and by time period. The report's metrics break this total down by the number of complete, incomplete, and in-progress conversations. In addition, this report tells you how the skill completed, or failed to complete, conversations by ranking the usage of the skill's transactional and answer intents in bar charts and word clouds.

- Custom Metrics – Enables you to measure the custom dimensions that have been applied to the skill.

- Intents – Provides intent-specific data and information for the execution metrics (states, conversation duration, and most- and least-popular paths).

- Paths – Shows a visual representation of the conversation flow for an intent.

- Conversations – Displays the actual transcript of the skill-user dialog, viewed in the context of the dialog flow and the chat window.

- Retrainer – Where you use the live data and obtained insights to improve your skill through moderated self-learning.

- Export – Lets you download a CSV file of the Insights data collected by Oracle Digital Assistant. You can create a custom Insights report from the CSV.

Review the Summary Metrics and Graphs

You can adjust this view by toggling between the Voice and Text modes, or you can compare the two by enabling Compare text and voice conversations.

When you select Text, the report displays a set of common metrics. When you select Voice, the report includes additional voice-specific metrics. These metrics apply only to voice conversations, so they do not appear when you choose Compare text and voice conversations.

The Mode options depend on the presence of voice or text messages. If there are only text messages, for example, then only the Text option appears.

Common Metrics

- Total number of conversations – The total number of conversations, which comprises completed, incomplete, and in-progress conversations. Regardless of status, a conversation can consist of one or more dialog turns. Each turn is a single exchange between the user and the skill.

  Note: Conversations are not the same as metered requests. To find out more about metering, refer to Oracle PaaS and IaaS Universal Credits Service Descriptions.

- Completed conversations – Conversations that have ended by answering a user's query successfully. Conversations that conclude with an End Flow state or at a state where End flow (implicit) is selected as the transition are considered complete. In YAML-authored skills, conversations are counted as complete when the traversal through the dialog flow ends with a return transition or at a state with the insightsEndConversation property.

  Note: This property and the return transition are not available in Visual Flow Designer.

- Incomplete conversations – Conversations that users didn't complete, because they abandoned the skill, or couldn't complete because of system-level errors, timeouts, or infinite loops.

- In progress conversations – "In-flight" conversations (conversations that have neither completed nor timed out). This metric tracks multi-turn conversations. An in-progress conversation becomes a timeout after a session expires.

- Average time spent on conversations – The average length for all of the skill’s conversations.

- Total number of users and Number of unique users – User base metrics that indicate how many users a skill has and how many of these users are returning users.

Voice Metrics

These metrics are for informational purposes only; you cannot act upon them.

- Average time spent on conversations – The average length of time of the voice conversations.

- Average Real Time Factor (RTF) – The ratio of the CPU time taken to process the audio input to the duration of that audio. For example, if it takes one second of CPU time to process one second of audio, then the RTF is 1 (1/1). If it takes 500 milliseconds to process one second of audio, the RTF is 0.5 (½). Ideally, RTF should be below 1 to ensure that the processing does not lag behind the audio input. If the RTF is above 1, contact Oracle Support.

- Average Voice Latency – The delay, in milliseconds, between detecting the end of the utterance and the generation of the final result (or transcription). If you observe latency, contact Oracle Support.

- Average Audio Time – The average duration, in seconds, for all voice conversations.

- Switched Conversations – The percentage of the skill's conversations that began with voice commands, but needed to be switched to text to complete the interaction. This metric indicates that there were multiple execution paths involved in switching from voice to text.
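The Real Time Factor arithmetic described in the list above can be sketched in a few lines. This is only an illustration, not Oracle's implementation: the function names and parameters are hypothetical, and the sketch assumes that RTF is the processing (CPU) time divided by the audio duration, as the examples in the text suggest.

```python
def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Hypothetical helper: processing (CPU) time divided by audio duration."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


def keeps_up_with_audio(rtf: float) -> bool:
    """An RTF below 1 means processing finishes faster than the audio plays."""
    return rtf < 1


print(real_time_factor(1.0, 1.0))  # 1.0 (one second of CPU per second of audio)
print(real_time_factor(0.5, 1.0))  # 0.5 (500 ms of CPU per second of audio)
print(keeps_up_with_audio(0.5))    # True
```

A value of 1 or more returned by this helper corresponds to the case where processing no longer keeps ahead of the audio input.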

Incomplete Conversation Breakdown

- Timeouts – Timeouts are triggered when an in-progress conversation is idle for more than an hour, causing the session to expire.

- System-Handled Errors – System-handled errors are handled by the system, not the skill. These errors occur when the dialog flow definition is not equipped with error handling.

- Infinite Loop – Infinite loops can occur because of flaws in the dialog flow definition, such as incorrectly defined transitions.

- Canceled – The number of times that users exited a skill by explicitly canceling the conversation.
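As a rough illustration of the timeout rule in the list above, the following sketch flags an in-progress conversation as timed out once it has been idle for more than an hour. The names `IDLE_LIMIT` and `is_timed_out` are hypothetical, and the one-hour limit is taken from the description above rather than from any documented API.

```python
from datetime import datetime, timedelta

# Assumption: the idle limit is one hour, as stated in the Timeouts description.
IDLE_LIMIT = timedelta(hours=1)


def is_timed_out(last_activity: datetime, now: datetime) -> bool:
    """Hypothetical check: True when the conversation has been idle for more than an hour."""
    return now - last_activity > IDLE_LIMIT


last_message = datetime(2024, 1, 1, 9, 0)
print(is_timed_out(last_message, datetime(2024, 1, 1, 9, 45)))   # False
print(is_timed_out(last_message, datetime(2024, 1, 1, 10, 30)))  # True
```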

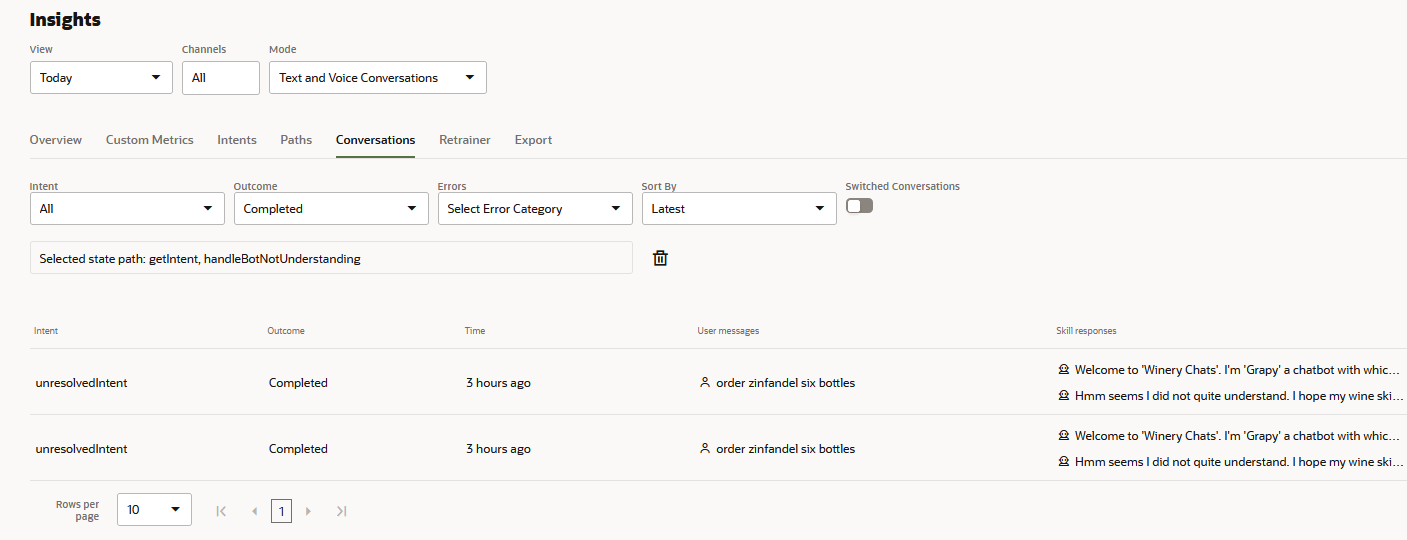

By clicking an error category in the table, or one of the arcs in the graph, you can drill down to the Conversations report to see these errors in the context of incomplete conversations. When you access the Conversations report from here, the Conversations report's Outcome and Errors filters are set to Incomplete and the selected error category. For example, if you click Infinite Loop, the Conversations report will be filtered by Incomplete and Infinite Loop. The report's Intents and Outcome filters are set to Show All and the Sort by field is set to Latest.

User Metrics

- Number of users – A running total of all types of users who have interacted with the skill: users with channel-assigned IDs that persist across sessions (the unique users), and users whose automatically assigned IDs last for only one session.

- Number of unique users – The number of users who have accessed the skill as identified by their unique user IDs. Each channel has a different method of assigning an ID to a user: users chatting with the skill through the Web channel are identified by the value defined for the userId field, for example. The Skill Tester's test channel assigns you a new user ID each time you end a chat session by clicking Reset. Once assigned, these unique IDs persist across chat sessions so that the unique user count tallied by this metric does not increase when a user revisits the skill. The count only increases when another user assigned with a unique ID is added to the user pool.

  Tip: Because the user IDs are only unique within a channel (a user with identical IDs on two different channels will be counted as two users, not one), you can get a better idea of the user base by filtering the report by channel.
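The per-channel uniqueness described above can be illustrated with a small sketch. The record layout (`channel` and `user_id` keys) and the function names are hypothetical and are not part of the Insights export format. The sketch only shows why the same ID on two channels counts as two users.

```python
def count_unique_users(sessions):
    """Count distinct (channel, user ID) pairs, since IDs are unique only within a channel."""
    return len({(s["channel"], s["user_id"]) for s in sessions})


def count_unique_users_by_channel(sessions):
    """Return the number of distinct user IDs seen on each channel."""
    users = {}
    for s in sessions:
        users.setdefault(s["channel"], set()).add(s["user_id"])
    return {channel: len(ids) for channel, ids in users.items()}


# Hypothetical session records: user u-100 appears on two channels.
sessions = [
    {"channel": "web", "user_id": "u-100"},
    {"channel": "web", "user_id": "u-100"},  # returning user, not counted again
    {"channel": "ios", "user_id": "u-100"},  # same ID, different channel
    {"channel": "web", "user_id": "u-200"},
]
print(count_unique_users(sessions))             # 3
print(count_unique_users_by_channel(sessions))  # {'web': 2, 'ios': 1}
```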

Enable New User Tracking

"purgeUserData": true in the payload of the Start Export Task POST

request.

The collection of new user data only begins on the date that this feature was shipped with Release 23.10.

Review Conversation Trends Insights

- Completed – The conversations that users have successfully completed. Conversations that conclude with an End Flow state or at a state where End flow (implicit) is selected as the transition are considered complete. In YAML-authored skills, conversations are counted as complete when the traversal through the dialog flow ends with a return transition or at a state with the insightsEndConversation property.

  Note: This property and the return transition are not available in Visual Flow Designer.

- Incomplete – Conversations that users didn't complete, because they abandoned the skill, or couldn't complete because of system-level errors, timeouts, or flaws in the skill's design.

- In Progress – "In-flight" conversations (conversations that have neither completed nor timed out). This metric tracks multi-turn conversations.

View Intent Usage

Not all conversations resolve to an intent. When No Intent displays in the Intent bar chart and word cloud, it indicates that an intent was not resolved by user input, but through a transition action, a skill-initiated conversation, or through routing from a digital assistant.

You can filter the Intents bar chart and the word cloud using the bar chart's All Intents, Answer Intents, and Transaction Intents options.

Description of the illustration all-intents.png

These options enable you to quickly break down usage. For example, for mixed skills (ones that have both transactional and answer intents), you can view usage for these two types of intents using the Answer Intents and Transaction Intents options.

Description of the illustration transactional-intents.png

The key phrases rendered in the word cloud reflect the selected option. For example, only the key phrases associated with answer intents display when you select Answer Intents.

Description of the illustration answer-intents.png

Review Intents and Retrain Using Key Phrase Clouds

The color represents the level of success for the intent resolution:

- Green represents a high average of resolving requests at, or exceeding, the Confidence Win Margin threshold within the given period.

- Yellow represents intent resolutions that, on average, don't meet the Confidence Win Margin threshold within the given period. This color is a good indication that the intent needs retraining.

- Red is reserved for unresolvedIntent. This is the collection of user requests that couldn't be matched to any intent but could potentially be incorporated into the corpus.

Beyond that, the phrase cloud gives you a more granular view of intent usage through key phrases, which are representations of actual user input, and, for English-language phrases, access to the Retrainer (the behavior differs when non-English phrases are resolved to an intent).

Review Key Phrases

By clicking an intent, you can drill down to a set of key phrases. These phrases are abstractions of the original user message that preserve its original intent. For example, the key phrase cancel my order is rendered from the original message, I want to cancel my order. Similar messages can be grouped within a single key phrase. The phrases I want to cancel my order, can you cancel my order, and cancel my order please can be grouped within the cancel my order key phrase, for example. Like the intents, size represents the prominence for the time period in question and color reflects the confidence level.

Description of the illustration key-phrases-intent.png

You can see the actual user message (or the messages grouped within a key phrase) within the context of a conversation when you click a phrase and then choose View Conversations from the context menu.

Description of the illustration view-conversations-option.png

This option opens the Conversations Report.

Description of the illustration key-phrases-conversation-report.png

Anonymized values display in the phrase cloud when you enable PII Anonymization.

Description of the illustration pii-skill-phrase-cloud.png

Retrain from the Word Cloud

This option opens the Retrainer, where you can add the actual phrase to the training corpus.

Review Native Language Phrases

The behavior of the key phrase cloud differs for skills with native language support in that you can't access the Retrainer for non-English phrases. When phrases in different languages have been resolved to an intent, languages, not key phrases, display in the cloud when you click an intent. For example, if French and English display after you click unresolvedIntent, then that means that there are phrases in both English and French that could not be resolved to any intent.

Description of the illustration ml-phrase-cloud.png

If English is among the languages, then you can drill down to the key phrase cloud by clicking English. From the key phrase cloud, you can use the context menu's View Conversations and Retrain options to drill down to the Conversations report and the Retrainer. But when you drill down from a non-English language, you drill down to the Conversations report, filtered by the intent and language. There is no direct access to the Retrainer. So going back to the unresolvedIntent example, if you clicked English, you would drill down to the key phrase cloud. If you clicked French, you'd drill down to the Conversations report, filtered by unresolvedIntent and French.

Description of the illustration ml-conversation-report.png

If you want to incorporate or reassign a phrase after reviewing it within the context of the conversation, you'll have to incorporate the phrase directly from the Retrainer by filtering on the intent, the language (and any other criteria).

Review Language Usage

For a multi-lingual skill, you can compare the usage of its supported languages through the segments of the Languages chart. Each segment represents a language currently in use.

Description of the illustration languages-chart-overview-skill.png

If you want to review the conversations represented by a language in the chart, you can click either a segment or the legend to drill down to the Conversations report, which is filtered by the selected language.

Description of the illustration conversations-report-filtered-language.png

Review User Feedback and Ratings

The average customer satisfaction score, which is proportional to the number of conversations for each of the ratings, is rendered at the center of the donut chart. The individual totals on a per-conversation basis for each number on the range are graphed as arcs of the User Rating donut chart, which vary in length according to occurrence. Clicking one of these arcs opens the Conversations report filtered by the score.
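One plausible reading of a score that is "proportional to the number of conversations for each of the ratings" is a weighted mean of the ratings. The sketch below works through that arithmetic. The function name, the one-to-five scale, and the sample counts are assumptions for illustration only. The exact formula used by the report is not stated here.

```python
def average_rating(conversations_per_rating: dict[int, int]) -> float:
    """Hypothetical weighted mean: each rating weighted by its number of conversations."""
    total = sum(conversations_per_rating.values())
    if total == 0:
        raise ValueError("no rated conversations")
    weighted = sum(rating * count for rating, count in conversations_per_rating.items())
    return weighted / total


# Made-up counts: rating -> number of conversations that received it.
ratings = {1: 2, 2: 1, 3: 0, 4: 3, 5: 4}
print(average_rating(ratings))  # 3.6, that is (2 + 2 + 0 + 12 + 20) / 10
```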

If your skill runs on a platform prior to Release 21.12, you need to switch Enable Masking off to see the user rating in the conversation transcript. To retain the actual user rating in the transcripts for skills running on Platforms 21.12 and higher (where Enable Masking is deprecated), you need to delete the NUMBER entity from the list of entities treated as PII when enabling PII anonymization.

By default, the User Feedback component's minimum threshold for determining a positive or negative reaction is set at two (Dissatisfied). If user feedback is enabled for the component, the User Feedback word cloud displays the user comments that accompany negative ratings and sizes them according to their frequency. You can see these comments in the context of the overall interaction by clicking the arc on the User Rating chart that represents a below-the-threshold rating (a one or two per the component's default settings) and then drilling down to the Conversations report, which is filtered by the selected score.

Description of the illustration conversation-report-user-feedback.png

How to Add the Feedback Component to the Dialog Flow

System.Feedback state using the next transition.) Your dialog flow can transition to a user feedback sequence of states whenever you want to gauge a user's reaction. This could be, for example, after a user has either completed or canceled a transaction.

If the state preceding the User Feedback state has a Keep Turn property, set it to True to ensure that the skill does not hand the conversation off to the user before the flow transitions on to the User Feedback state. To maintain the skill's control in YAML-authored flows, set keepTurn: true in the state before the System.Feedback state.

above, below, and cancel transitions. In the YAML dialog flows, each of these states has a return: done transition. These states accommodate the high and low range of the rating as determined by the Threshold property. You can add these states as user messages that confirm receipt of the user rating.

| Feedback Type | Message Example |
|---|---|
| above | Thank you for rating us ${system.userFeedbackRating.value} |
| below | You entered: ${system.userFeedbackText.value} We appreciate your feedback. |
| cancel | Skipped giving a rating or feedback? Maybe next time. |

systemComponent_Feedback_ keys in a resource bundle CSV file.

Using Custom Metrics to Measure User Feedback

Each of these Set Custom Metrics states corresponds to one of the states named by the User Feedback component's above, below, and cancel transition actions. So, for example, if you wanted to add a metric called Feedback Type to the Custom Metrics report, you would do the following:

- Insert Set Custom Metrics states before each of the states named by the User Feedback component's above, below, and cancel transition actions.

- For each state, enter Feedback Type as the dimension name.

- Add separate values for each state, as appropriate to the transition type (e.g., Canceled, Positive, Negative).

Now that you've instrumented the dialog flow to record feedback data, you can query the different values in the Custom Metrics report.

In YAML-based flows, you track feedback metrics by adding System.SetCustomMetrics states.

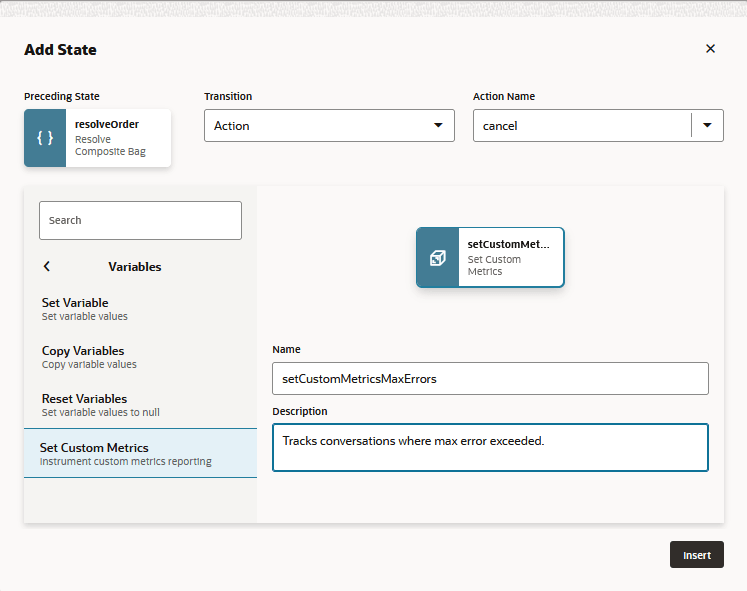

Review Custom Metrics

The Custom Metrics report gives you added perspective on the Insights data by tracking conversation data for skill-specific dimensions. The dimensions tracked by this report are created in the dialog flow definition using the Set Custom Metrics component (and the System.SetCustomMetrics component in YAML flows). Using this component, you can create dimensions to explore business and development needs that are particular to your skill. For example, you can build dimensions that report the consumption of a product or service (the most requested pizza dough or the type of expense report that's most commonly filed), or track when the skill fails users by forcing them to exit or by passing them to live agents.

Dimensions and categories appear in the report only when the conversations measured by them have occurred.

Instrument the Skill for Custom Metrics

To generate the Custom Metrics report, you need to define one or more dimensions using the Set Custom Metrics component (accessed by clicking Variables > Set Custom Metrics, or Variables > Set Insights Custom Metrics in YAML dialogs).

Description of the illustration insights-component-dialog.png

If the Custom Metrics report has no data, then it's likely that no Set Custom Metrics states have been defined, or that the transitions to these states have not been set correctly.

You can define up to six dimensions for each skill.

Creating Dimensions for Variable Values

You can track entity values by setting a next transition to a Set Custom Metrics state after a value-setting state (e.g., a Resolve Composite Bag state). The dimensions and filters in the Custom Metrics report are rendered from the dimensions and dimension values defined by the Set Custom Metrics component.

If the value-setting state references a composite bag entity, you can track the bag items using an Apache Freemarker expression to define the dimension value. For example, the value for a Pizza Size dimension can be defined as ${pizza.value.pizzaSize.value}. The individual values returned by this expression (small, medium, large) are rendered as data segments in the Custom Metrics report and can also be applied as filters. For example, the report that results from instrumenting a pizza skill breaks down pizza orders by size, type, and pizza dough. These added details supplement the metrics already reported for the Order Pizza intent.

Description of the illustration custom-metrics-example.png

Entity value-based dimensions are only recorded in the Custom Metrics report after an entity value has been set. When no value has been set, or when the value-setting state does not transition to a Set Custom Metrics state, the report's graphs note the missing data as <not set>.

Creating Dimensions that Track Skill Usage

In addition to dimensions based on variable values, you can create dimensions that track not only how users interact with the skill, but its overall effectiveness as well. You can, for example, add a dimension that tells you how often, and why, users are transferred to live agents.

Description of the illustration custom-metrics-agent-transfer-example.png

Dimensions like these inform you of the user experience. You can add the same dimension to different Set Custom Metrics states across your flows. Each of these Set Custom Metrics states defines a different category (or dimension value). For example, there are Agent Transfer dimensions in two different flows: one in the Order Pizza flow, which has the value No Agent Needed when the skill successfully completes an order, and the other in the UnresolvedIntent flow, which has the value Bad Input and tracks when the skill transfers users to a live agent because of unresolved input. The Custom Metrics report records data for these metrics when these states are included in an execution flow.

| Custom Metric States for Agent Transfer Dimension | Flow | Value | Use |
|---|---|---|---|
| setInsightsCustomMetricsNoAgent | Order Pizza (from the next transition of the Resolve Composite Bag state) | No Agent Needed | Reflects the number of successful conversations where orders were placed without assistance. |
| setInsightsCustomMaxErrors | Order Pizza (from the cancel transition of the Resolve Composite Bag state) | Max Errors | Reflects the number of conversations where users were directed to live agents because they reached the m |
| setInsightsCustomMetricsBadInput | UnresolvedIntent | Bad Input | Reflects the number of conversations where unresolved input resulted in users getting transferred to a live agent. |
| setInsightsCustomMetricsLiveAgent | Call Agent (before the Agent Initiation sequence) | Agent Requested | Reflects the number of conversations where users requested a live agent. |

Export Custom Metrics Data

| Column | Description |
|---|---|
| CREATED_ON | The date of the data export. |
| USER_ID | The ID of the skill user. |
| SESSION_ID | An identifier for the current session. This is a random GUID, which makes this ID different from the USER_ID. |
| BOT_ID | The skill ID, which is assigned to the skill when it is created. |
| CUSTOM_METRICS | A JSON array that contains an object for each custom metric dimension. name is a dimension name and value is the dimension value captured from the conversation: [{"name":"Custom Metric Name 1","value":"Custom Metric Value"},{"name":"Custom Metric Name 2","value":"Custom Metric Value"},...] For example: [{"name":"Pizza Size","value":"Large"},{"name":"Pizza Type","value":"Hot and Spicy"},{"name":"Pizza Crust","value":"regular"},{"name":"Agent Transfer","value":"No Agent Needed"}]. |
| QUERY | The user utterance or the skill response that contains a custom metric value. |
| CHOICES | The menu choices in UI components. |
| COMPONENT | The dialog component, System.SetCustomMetrics (Set Custom Metrics in visual flows), that executes the custom metrics. |
| CHANNEL | The channel that conducted the session. |
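Because the CUSTOM_METRICS column holds a JSON array of name and value pairs, an exported CSV can be summarized with a short script. The following is a minimal sketch, assuming the export has a header row with a CUSTOM_METRICS column as described in the table above. The function names and the sample rows are hypothetical, and the sample data is generated in memory rather than read from a real export.

```python
import csv
import io
import json
from collections import Counter


def tally_custom_metrics(csv_text: str) -> Counter:
    """Count (dimension name, value) pairs found in the CUSTOM_METRICS column."""
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = (row.get("CUSTOM_METRICS") or "").strip()
        if not raw:
            continue  # skip rows that carry no custom metrics
        for metric in json.loads(raw):
            counts[(metric["name"], metric["value"])] += 1
    return counts


def build_sample_export() -> str:
    """Build a tiny, made-up export so the sketch runs without a real file."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["SESSION_ID", "CHANNEL", "CUSTOM_METRICS"])
    writer.writerow(["s-1", "web", json.dumps([
        {"name": "Pizza Size", "value": "Large"},
        {"name": "Agent Transfer", "value": "No Agent Needed"},
    ])])
    writer.writerow(["s-2", "web", json.dumps([
        {"name": "Pizza Size", "value": "Large"},
    ])])
    return buffer.getvalue()


counts = tally_custom_metrics(build_sample_export())
print(counts[("Pizza Size", "Large")])                # 2
print(counts[("Agent Transfer", "No Agent Needed")])  # 1
```

Writing the sample through csv.writer keeps the embedded JSON correctly quoted, which is also how a real export would need to be read back.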

Review Intents Insights

This report returns the intents defined for a skill over a given time period, so its contents may change to reflect the intents that have been added, renamed, or removed from the skill at various points in time.

Completed Paths

You can use these statistics as indicators of the user experience. For example, you can use this report to ascertain if the time spent is appropriate to the task, or if the shortest paths still result in an attenuated user experience, one that may encourage users to drop off. Could you, for example, usher a user more quickly through the skill by slotting values with composite bag entities instead of prompts and value-setting components?

- You can trace the execution path for a selected intent by clicking View Path, which opens the Paths report filtered by completed conversations for the intent. To improve focus on the execution paths, you can filter out the states that you're not interested in.

- You can read transcripts of the completed conversations for an intent by clicking View Conversations, which opens the Conversations report filtered by completed conversations for the intent.

Incomplete Paths

System.DefaultErrorHandler. Using it, you can find out if a dialog flow state is a continual point of failure and the reasons why (errors, timeouts, or bad user input). This report doesn’t show paths or velocity for incomplete paths because they don’t apply to this user input. Instead, the bar chart ranks each intent by the number of messages that either couldn’t be resolved to any intent, or had the potential of getting resolved (meaning the system could guess an intent), but were prevented from doing so because of low confidence scores.

The Incomplete States chart doesn't render Answer Intents (static intents) in YAML-based flows because their outcomes are supported by the System.Intent component state alone, not by a series of states in a dialog flow definition.

{}). Even if the skill handles the transaction successfully, Insights will still classify the conversation as incomplete, and will chart the final state as System.DefaultErrorHandler.

- Clicking View Path opens the Paths report filtered for incomplete conversations for the selected intent. The terminal states on this path may include states defined in the dialog or an internal state that marks the end of a conversation, such as System.EndSession, System.ExpiredSession, System.MaxStatesExceededHandler, and System.DefaultErrorHandler.

- You can access transcripts of the conversations that led to the failure by clicking View Conversations. This option opens the Conversations report filtered for incomplete conversations for the selected intent. You can narrow the results further by applying a filter. For example, you can filter the report by error conditions.

unresolvedIntent

In addition to the duration and routes for task-oriented intents, the Intents report also returns the messages that couldn’t get resolved. To see these messages, click unresolvedIntent in the left navbar. Clicking an intent in the Closest Predictions bar chart updates the Unresolved Message window with the unresolved messages for that intent sorted by a probability score.

Review Path Insights

The Paths report lets you find out how many conversations flowed through the intents' execution paths for any given period. This report renders a path that's similar to a transit map where the stops can represent intents, the states defined in the dialog flow definition, and the internal states that mark the beginning and end of every conversation that is not classified as in-progress.

Description of the illustration path-report.png

You can scroll through this path to see where the values slotted from the user input propelled the conversation forward, and where it stalled because of incorrect user input, timeouts resulting from no user input, system errors, or other problems. While the last stop in a completed path is green, for incomplete paths where these problems have arisen, it’s red. Through this report, you can find out where the number of conversations remained constant through each state and pinpoint where the conversations branched because of values getting set (or not set), or dead-ended because of some other problem like a malfunctioning custom component or a timeout.

Query the Paths Report

All of the execution flows render by default after you enter your query. The green Begin arrow represents System.BeginSession, the system state that starts each conversation. The System.Intent icon

For incomplete conversations, the path may conclude with an internal state such as System.ExpiredSession, System.MaxStatesExceededHandler, or System.DefaultErrorHandler that represents the error that terminated the conversation.

If the final state in a YAML-authored flow uses an empty (or implicit) transition ({}), Insights will classify this state as a System.DefaultErrorHandler state and consider the conversation as incomplete, even if the skill handled the transaction successfully.

The report displays Null Response for any customer message that's blank (or not otherwise in plain text) or contains unexpected input. For non-text responses that are postback actions, it displays the payload of the most recent action. For example:

{"orderAction":"confirm","system.state":"orderSummary"}

Scenario: Querying the Pathing Report

Looking at the Overview report for a financial skill, you notice that there is a sudden uptick in incomplete conversations. By adding up the values represented by the orange "incomplete" segments of the stacked bar charts, you deduce that conversations are failing on the execution paths for the skill's Send Money and Balances intents.

To investigate the intent failures further, you open the pathing report and enter your first query: filter for all intents that have an incomplete outcome. The path renders with two branches: one that begins with startPayments and ends with System.DefaultErrorHandler and a second that starts with startBalances and also ends with System.DefaultErrorHandler. Clicking the final node in either path opens the details pane that notes the number of errors and displays snippets of the user messages received by the skill before these errors occurred. To see these snippets in context, you then click View Conversations in the details pane to see the transcript. In all of the conversations, the skill was forced to respond with Unexpected Error Prompt (Oops! I'm encountering a spot of trouble…) because system errors prevented it from processing the user request.

To find out more about the states leading up to these errors (and their possible roles in causing these failures), you then refer to the dialog flow definition to identify the states that begin the execution paths for each of the intents. These states are startBalances, startTxns, startPayments, startTrackSpending, and setDate.

Comparing the paths to the dialog flow definition, you notice that in both the startPayments and the startBalances flows, the last state rendered in the path precedes a state that uses a custom component. After checking the Components page, you notice that the service has been disabled, preventing the skill from retrieving the account information needed to complete conversations.

Review the Skill Conversation Insights

Using the Conversations report, you can examine the actual transcripts of the conversations to review how the user input completed the intent-related paths, or why it didn’t. You can filter the conversations by channel, by mode (Voice, Text, All), Type (intent flow or LLM flow), and by time period.

For a single intent, the Conversations report lists the different conversations that have completed. However, in YAML-authored dialog flows, complete can mean different things depending on the user message and the return transition, which ends the conversation and destroys the conversation context. For an OrderPizza intent, for example, the Conversations report might show two successfully completed conversations. Only one of them culminates in a completed order. The other conversation ends successfully as well, but instead of fulfilling an order, it handles incorrect user input.

View Conversation Transcripts

Clicking View Conversation opens the conversation in the

context of a chat window. Clicking the bar chart icon displays the voice

metrics for that interaction.

Description of the illustration view-conversation-window.png

View Voice Metrics

PII Anonymization

CURRENCY and DATE_TIME values are not anonymized, even though they contain numbers. Also, the "one" in the default prompt for a composite bag entity ("Please select one value for...") gets anonymized as a numeric value. To avoid this, add a custom prompt ("Select a value for...", for example).

- PERSON

- NUMBER

- PHONE_NUMBER

- URL

Enable Masking is deprecated in Release 21.12. Use PII anonymization instead to mask numeric values in the Insights reports and export logs. You cannot apply anonymization to conversations logged prior to the 21.12 release.

Enable PII Anonymization

- Click Settings > General.

- Switch on Enable PII Anonymization.

- Click Add Entity to select the entity values

that you want to anonymize in the Insights reports and the logs.

Note

If you want to discontinue the anonymization for a PII value, or if you don't want an anonym to be used at all, select the corresponding entity and then click Delete Entity. Once you delete an entity, the actual PII value appears throughout the Insights reports for subsequent conversations. Its anonymized form, however, will remain for prior conversations.

Anonymized values are persisted to the database only after you enable anonymization for PII values for the selected entities. They are not applied to prior conversations. Depending on the date range selected for the Insights reports or export files, the PII values might appear in both their actual and anonymized forms. You can apply anonymization to any non-anonymized PII value (including those in conversations that occurred before you enabled anonymization in the skill or digital assistant settings) when you create an export task. These anonyms apply only to the exported file and are not persisted in the database.

Note

Anonymization is permanent (the export task-applied anonymization notwithstanding). You can't recover PII values after you enable anonymization.

PII Anonymization in the Export File

Anonymization in an exported Insights file depends on whether (and when) you've enabled PII anonymization for the skill or digital assistant in Settings.

- The PII values recognized for the selected entities are replaced with anonyms. These anonyms get persisted to the database and replace the PII values in the logs and Insights reports. This anonymization is applied to the conversations that occur after – not prior to – your enabling of anonymization in Settings.

- The Enable PII anonymization for the file option for the export task is enabled by default to ensure that anonymization of the PII values recognized for the entities selected in Settings is also applied to conversations that occurred before PII anonymization had been set. The anonyms applied during the export to conversations that predate the PII anonymization exist in the export file only. The original PII values remain in the database, in the Insights logs, and in the Insights reports.

- If you switch off Enable PII anonymization for the

file, only the PII values recognized for the entities that were

selected in Settings will be anonymized. The log files will contain the anonyms

for conversations that occurred after anonymization settings have been enabled

for the skill or digital assistant. Prior conversations will appear as original,

unmodified utterances with their PII values intact. Consequently, the export

file may include both anonymized and non-anonymized conversations if part of the

export task's date range predates anonymization.

Note

If your export task includes anonymized conversations that occurred prior to Release 22.04, the anonyms applied to the pre-22.04 conversations will be changed, or re-anonymized, in the export files when you select Enable PII anonymization for the file for the export task. The anonyms in the exported file will not match either the anonyms in pre-22.04 export files or the anonyms that appear in the Insights reports.

- The Enable PII anonymization for the file option will be disabled by default for the export task so that the exported file will contain all the original unmodified utterances, including the PII values.

- If you select Enable PII anonymization for the file, the PII values will be anonymized in the exported file only for the default entities, PERSON, EMAIL, URL, and NUMBER. The PII values will remain in the database, logs, and Insights reports.

Apply the Retrainer

- time period

- language – For multi-lingual capability that's enabled through either native language support or translation services. By default, the report filters by the primary language.

- intents – Filter by matching the names of the two top-ranking intents, and by using comparison operators for their resolution-related properties, confidence and Win Margin.

- channels – Includes the Agent Channel that's created for Oracle Service Cloud integrations.

- text or voice modes – Includes switched conversations.

Update Intents with the Retrainer

- You can only add user input to the training corpus that belongs to a draft version of a skill, not a published version.

- You can’t add any user input that’s already present as an utterance in the training corpus, or that you have already added using the Retrainer.

- Because you cannot update a published skill, you

must create a draft version before you can add

new data to the corpus.

If you're reviewing a published version of the skill, select the draft version of the skill.

Tip:

Click Compare All Versions or switch off the Show Only

Latest toggle to access both the draft

and published versions of the skill.

- In the draft version of the skill, apply a filter, if needed, then click Search.

- Select the user message, then choose the target

intent from the Select Intent menu.

If your skill supports more than one native language, then

you can add it to the language-appropriate training set by

choosing from among the languages in the Select

Language menu.

Tip:

You can add utterances to an intent on an individual basis, or you can select multiple utterances and then select the target intent and, if needed, a language from the Add To menus that are located at the upper left of the table. If you want to add all of the returned requests to an intent, select Utterances (located at the upper right of the table) and then choose the intent and language from the Add To menu.

- Click Add Example.

- Retrain the skill.

- Republish the skill.

- Update the digital assistant with the new skill.

- Monitor the Overview report for changes to the metrics over time and also compare different versions of the skill to find out if new versions have actually added to the skill's overall success. Repeating the retraining process improves the skill's responsiveness for each new version. For skills integrated with Oracle Service Cloud Chat, for example, retraining should result in a downward trend in escalations, which is indicated by a downward trend in the usage of agent handoff intents.

Moderated Self-Learning

By setting the Top Confidence filter below the confidence threshold set for the skill, or through the default filter, Intent Matches unresolvedIntent, you can update your training corpus using the confidence ranking made by the intent processing framework. For example, if the unresolvedIntent search returns "someone used my credit card," you can assign it to an intent called Dispute. This is moderated self-learning – enhancing the intent resolution while preserving the integrity of the skill.

For instance, the default search criteria for the report shows you the random user input that can’t get resolved to the Confidence Level because it’s inappropriate, off-topic, or contains misspellings. By referring to the bar chart, you can assign the user input: you can strengthen the skill’s intent for handling unresolved intents by assigning the input that’s made up of gibberish, or you can add misspelled entries to the appropriate task-oriented intent (“send moneey” to a Send Money intent, for example). If your skill has a Welcome intent, for example, you can assign irreverent, off-topic messages to which your skill can return a rejoinder like, “I don’t know about that, but I can help you order some flowers.”
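Outside the Retrainer, a similar triage can be sketched against rows read from an exported Insights CSV. The sketch below is only an illustration: it assumes that unresolved input carries the value unresolvedIntent in the INTENT column, the function name `retraining_candidates` is hypothetical, and the case-insensitive duplicate check is an assumption that mirrors the rule that an utterance already in the training corpus can't be added again.

```python
def retraining_candidates(rows, known_utterances):
    """Collect unresolved user messages that are not already in the corpus.

    rows: dicts keyed by export column names (USER_UTTERANCE, INTENT).
    known_utterances: utterances already present in the training corpus.
    """
    # Assumption: duplicates are compared case-insensitively.
    seen = {u.strip().lower() for u in known_utterances}
    candidates = []
    for row in rows:
        text = row.get("USER_UTTERANCE", "").strip()
        # Assumption: unresolved input is logged with INTENT == "unresolvedIntent".
        if row.get("INTENT") != "unresolvedIntent" or not text:
            continue
        if text.lower() in seen:
            continue
        seen.add(text.lower())
        candidates.append(text)
    return candidates
```

A person still decides which intent each candidate belongs to (for example, "send moneey" to a Send Money intent), which keeps the process moderated.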

Support for Translation Services

If your skill uses a translation service, then the Retrainer displays the user

messages in the target language. However, the Retrainer does not add translated messages

to the training corpus. It instead adds them in English, the accepted language of the

training model. Clicking the icon for a message reveals the English version that can potentially be added to the corpus. For

example, clicking this icon for contester (French), reveals dispute

(English).

Create Data Manufacturing Jobs

Instead of assigning utterances to intents yourself, you can crowdsource this task

by creating Intent Annotation and Intent Validation jobs. You don't need to compile the conversation logs

into a CSV to create these jobs. Instead, you click Create then

Data Manufacturing Job.

You then choose the job type for the user input that's filtered in the Retrainer

report. For example, you can create an Intent Annotation job from a report filtered by the top

intent matching unresolvedIntent, or you can create an Intent Validation job from a report

filtered on utterances that have matched an intent.

Description of the illustration retrainer-data-manufacturing-job-dialog.png

Tip:

Using the Select utterances options, you can choose all of the results returned by the filter applied to the Retrainer for the data manufacturing job, or create a job from a subset of these results which can include a random sampling of utterances. Selecting Exclude utterances from previous jobs means that utterances selected for a previous data manufacturing job will no longer be available for subsequent jobs: the utterances included in one Intent Annotation job, for example, won't be available for a later Intent Annotation job. Use this option when you're creating multiple jobs to review a large set of results.

Create a Test Suite

As with the data manufacturing jobs that you create from the results

queried in the Retrainer report, you can also create test cases from the utterances returned by your query. You can add a

suite of these test cases to the Utterance Tester by clicking Create,

then Test Suite.

You can filter the utterances for the test suite using the Select

utterances options in the Create Test Suite dialog. You can include all of the

utterances returned by the filter applied to the Retrainer in the test suite, or a subset of

these results which can include a random sampling of the utterances. Select Include

language tag to ensure that the language that's associated with a test case

remains the same throughout testing.

Description of the illustration create-test-suite-dialog-insights.png

You can access the completed test suite by clicking Go to Test Cases in the Utterance Tester.

Review Language Usage

For a multi-lingual skill, you can compare the usage of its supported

languages through the segments of the Languages chart. Each segment

represents a language currently in use.

Description of the illustration languages-chart-overview-skill.png

If you want to review the conversations represented by a language in

the chart, you can click either a segment or the legend to drill down to the

Conversations report, which is filtered by the

selected language.

Description of the illustration conversations-report-filtered-language.png

Export Insights Data

The various Insights reports provide you with different perspectives, but if you need to view this data in another way, then you can create your own report from a CSV file of exported Insights data.

The data may be spread across a series of CSVs when the export task returns more than 1,048,000 rows. In such cases, the ZIP file will contain a series of ZIP files, each containing a CSV.

- Name: The name of the export task.

- Last Run: The date when the task was most recently run.

- Created By: The name of the user who created the task.

- Export Status: Submitted, In Progress, Failed, No Data (when there's no data to export within the date range defined for the task), or Completed, a hyperlink that lets you download the exported data as a CSV file. Hovering over the Failed status displays an explanatory message.

An export task applies to the current version of the skill.

Create an Export Task

- Open the Exports page and then click + Export.

- Enter a name for the report and then enter a date range.

- Click Enable PII anonymization for the exported

file to replace Personally Identifiable Information (PII) values

with anonyms in the exported file. These anonyms exist only in the exported file

if PII anonymization is not enabled in the skill settings. In this case, the PII values, not

their anonym equivalents, still get stored in the database and appear in the

exported Insights logs and throughout the Insights reports, including the

Conversations report, the Retrainer, and the key phrases in the word cloud. If PII anonymization has been enabled in the skill

settings, then logs and Insights reports will contain anonyms.

Note

The PII anonymization that's enabled for the skill or digital assistant settings factors into how PII values get anonymized in the export file and also contributes to the consistency of the anonymization in the export file.

- Click Export.

- When the task succeeds, click Completed to

download a ZIP of the CSV (or CSVs for large exports). The name of the

skill-level export CSV begins with

B_. File names for digital assistant-level exports begin with D_.

Description of the illustration insights-export-dialog.png
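Because a large export can arrive as a ZIP of ZIPs, each holding one CSV, a loader has to handle both layouts. The following is a minimal sketch that reads every CSV row from a downloaded export, descending into nested ZIP files when they are present. The function name `read_export_rows` is hypothetical, and the UTF-8 encoding of the CSVs is an assumption, not something the export documentation states.

```python
import csv
import io
import zipfile


def read_export_rows(zip_bytes):
    """Yield each CSV row (as a dict) from an export ZIP, including nested ZIPs."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            data = archive.read(name)
            if name.lower().endswith(".zip"):
                # Large exports: the outer ZIP holds ZIPs that each hold a CSV.
                yield from read_export_rows(data)
            elif name.lower().endswith(".csv"):
                # Assumption: the CSV is UTF-8 (utf-8-sig also tolerates a BOM).
                text = io.StringIO(data.decode("utf-8-sig"), newline="")
                yield from csv.DictReader(text)
```

To use it, read the downloaded file in binary mode and pass the bytes to the function, for example `rows = list(read_export_rows(open(path, "rb").read()))`, where `path` points to the ZIP that you downloaded.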

Review the Export Logs

- BOT_NAME contains the name of the skill or the name of the digital assistant. You can use this column to see how the dialog is routed from the digital assistant to the skills (and between the skills).

- CHANNEL_SESSION_ID stores the channel session ID. You can use that ID, in conjunction with the third column, CHANNEL_ID, to create a kind of unique identifier for the session. Because sessions can expire or get terminated, you can use this identifier to find out if the session has changed.

- TIMESTAMP indicates the chronology or sequence in which the events happened. Typically, you would sort by this column.

- USER_UTTERANCE and BOT_RESPONSE contain the actual conversation between the skill and its user. These two fields make the interleaving of the user and skill messages easily visible when you sort by the TIMESTAMP.

There may be duplicate utterances in the USER_UTTERANCE column. This can happen when user testing runs on the same instance, but more likely it's because the utterance is used in different parts of the conversation.

- You can use the COMPONENT_NAME, CURR_STATE and NEXT_STATE to debug the dialog flow.
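The session identifier and chronological sort described above can be sketched in a few lines. This example assumes that the rows are dictionaries keyed by the export column names and that TIMESTAMP values follow the layout of the sample value in the export log fields table (14-SEP-20 01.05.10.409000 PM). The format string is inferred from that one sample, and parsing month abbreviations and AM/PM this way depends on an English locale.

```python
from datetime import datetime

# Assumed layout, inferred from the sample value "14-SEP-20 01.05.10.409000 PM".
TIMESTAMP_FORMAT = "%d-%b-%y %I.%M.%S.%f %p"


def parse_timestamp(value):
    """Convert a TIMESTAMP cell into a datetime for reliable ordering."""
    return datetime.strptime(value, TIMESTAMP_FORMAT)


def session_key(row):
    """Combine CHANNEL_ID and CHANNEL_SESSION_ID into one session identifier."""
    return (row["CHANNEL_ID"], row["CHANNEL_SESSION_ID"])


def sort_chronologically(rows):
    """Return the rows ordered by when the events happened."""
    return sorted(rows, key=lambda row: parse_timestamp(row["TIMESTAMP"]))
```

Sorting on the parsed value rather than on the raw text matters because the raw strings do not sort chronologically (for example, 01 PM would sort before 11 AM).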

Filter the Exported Insights Data

Typically, you sort by the TIMESTAMP column to view the sequence of events. For other perspectives, such as the skill-user conversation, you can filter the columns by the system-generated internal states. Common filtering techniques include:

- Sorting out the skill and digital assistant conversation – When an export contains both data from a digital assistant and its registered skills, the contents of the BOT_NAME field might seem confusing, as the conversation appears to jump arbitrarily between the different skills and between the skills and the digital assistant. To see the dialog in the correct sequence (and context), sort the TIMESTAMP column in ascending order.

- Finding the conversation boundaries – Use the System.BeginSession state and one of the terminal states to find the beginning and end of a conversation. Conversations start with a System.BeginSession state. They can end with any of the following terminal states: System.EndSession, System.ExpiredSession, System.MaxStatesExceededHandler, and System.DefaultErrorHandler.

- Reviewing the actual user-skill conversation – To isolate the contents of the USER_UTTERANCE and BOT_RESPONSE columns, filter the CURR_STATE column by the system-generated states System.MsgReceived and System.MsgSent.

Note

Sometimes parts of the user-skill dialog may be repeated in the USER_UTTERANCE and BOT_RESPONSE columns. The user text is repeated when there is an automatic transition that does not require user input. The skill responses get repeated if the next state is one of the terminal states, such as System.EndSession or System.DefaultErrorHandler.

For a non-text message response, such as those from resolve entities states, the skill output consists of partial responses joined by a newline character.

- Reviewing just the dialog flow execution without the user-skill dialog – To view internal transactions or display only the non-text messages, you need to filter out the System.MsgReceived and System.MsgSent states from the CURR_STATE column (the opposite approach to viewing just the dialog).

- Identifying a session – Compare the values in the CHANNEL_SESSION_ID and SESSION_ID columns (which are next to each other).
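The filtering techniques above can also be applied in code once the rows are loaded and sorted by TIMESTAMP. The sketch below assumes that each row is a dictionary keyed by the export column names and that the boundary states appear in the CURR_STATE column. The function names are hypothetical.

```python
TERMINAL_STATES = {
    "System.EndSession",
    "System.ExpiredSession",
    "System.MaxStatesExceededHandler",
    "System.DefaultErrorHandler",
}
MESSAGE_STATES = {"System.MsgReceived", "System.MsgSent"}


def split_conversations(rows):
    """Group time-ordered rows into conversations using the boundary states."""
    conversations, current = [], []
    for row in rows:
        state = row["CURR_STATE"]
        if state == "System.BeginSession" and current:
            conversations.append(current)  # previous one never reached a terminal state
            current = []
        current.append(row)
        if state in TERMINAL_STATES:
            conversations.append(current)
            current = []
    if current:
        conversations.append(current)  # trailing conversation is still open
    return conversations


def transcript(rows):
    """Keep only the user and skill messages, in order."""
    lines = []
    for row in rows:
        if row["CURR_STATE"] == "System.MsgReceived":
            lines.append(("user", row["USER_UTTERANCE"]))
        elif row["CURR_STATE"] == "System.MsgSent":
            lines.append(("skill", row["BOT_RESPONSE"]))
    return lines


def flow_only(rows):
    """The opposite filter: drop the message rows and keep the dialog flow states."""
    return [row for row in rows if row["CURR_STATE"] not in MESSAGE_STATES]
```

Run `split_conversations` on the rows of a single session so that events from different users are not interleaved.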

The Export Log Fields

| Column Name | Description | Sample Value |
|---|---|---|
| BOT_NAME | The name of the skill | PizzaBot |
| CHANNEL_SESSION_ID | The ID for a user for the session. This value identifies a new session. A change in this value indicates that the session expired or was reset for the channel. | 2e62fb24-8585-40c7-91a9-8adf0509acd6 |
| SESSIONID | An identifier for the current session. This is a random GUID, which makes this ID different from the CHANNEL_SESSION_ID or the USER_ID. A session indicates that one or more intent execution paths have been terminated by an explicit return transition in the state definition, or by an implicit return injected by the Dialog Engine. | 00cbecbb-0c2e-4749-bfa9-c1b222182e12 |
| TIMESTAMP | The "created on" timestamp. Used for chronological ordering or sequencing of events. | 14-SEP-20 01.05.10.409000 PM |
| USER_ID | The user ID | 2880806 |
| DOMAIN_USERID | Refers to the USER_ID. | 2880806 |
| PARENT_BOT_ID | The ID of the skill or digital assistant. When a conversation is triggered by a digital assistant, this refers to the ID of the digital assistant. | 9148117F-D9B8-4E99-9CA9-3C8BA56CE7D5 |
| ENTITY_MATCHES | Identifies the composite bag item values that are matched in the first utterance that's resolved to an intent. If a user's first message is "Order a large pizza", this column will contain the match for the PizzaSize item within the composite bag entity, Pizza: ENTITY_MATCHES column, because the initial message that was resolved to the intent did not contain any item values. An empty object ( | {"Pizza":[{"entityName":"Pizza","PizzaType":["CHEESE BASIC"],"PizzaSize":["Large"]}]} |
| PHRASE | The ODA interpretation of the user input | large thin pizza |
| INTENT_LIST | A ranking of the candidate intents, expressed as a JSON object. | [{"INTENT_NAME":"OrderPizza","INTENT_SCORE":0.4063},{"INTENT_NAME":"OrderPasta","INTENT_SCORE":0.1986}] For digital assistant exports, this is a ranking of skills that were called through the digital assistant. For example: |
| BOT_RESPONSE | The responses made by the skill in response to any user utterances. | How old are you? |
| USER_UTTERANCE | The user input. | 18 |
| INTENT | The intent selected by the skill to process the conversation. This lists the top intent out of the list of intent(s) that were considered a possibility for the conversation. | OrderPizza |
| LOCALE | The user's locale | en-US |
| COMPONENT_NAME | The component (either system or custom), executed in the current state. You can use this field along with the CURR_STATE and NEXT_STATE to debug the dialog flow. There are other values in the COMPONENT_NAME column that are not components: | AgeChecker |
| CURR_STATE | The current state for the conversation, which you use to determine the source of the message. This field contains the names of the states defined in the dialog flow definition along with system-generated states. You can filter the CSV by these states, which include System.MsgReceived for user messages and System.MsgSent for messages sent by the skill or agents for customer service integrations. | checkage |
| NEXT_STATE | The next state in the execution path. The state transitions in the dialog flow definition indicate the next state in the execution path. | crust |
| Language | The language used during the session. | fr |
| SKILL_VERSION | The version of the skill | 1.2 |
| INTENT_TYPE | Whether the intent is transactional (TRANS) or an answer intent (STATIC) | STATIC |
| CHANNEL_ID | Identifies the channel on which the conversation was conducted. This field, along with CHANNEL_SESSION_ID, depicts a session. | AF5D45A0EF4C02D4E053060013AC71BD |
| ERROR_MESSAGE | The returned error message. | Session expired due to inactivity. |
| INTENT_QUERY_TEXT | The input that's sent to the intent server for classification. The content of INTENT_QUERY_TEXT and USER_UTTERANCE are the same when the user input is in one of the native languages, but it's different when the user input is in a language that's not natively supported so it's handled by a translation service. In this case, the INTENT_QUERY_TEXT is in English. | |
| TRANSLATE_ENABLED | Whether a translation service is used. | NO |
| SKILL_SESSION_ID | The session ID | 6e2ea3dc-10e2-401a-a621-85e123213d48 |
| ASR_REQUEST_ID | A unique key field that identifies each voice input, in other words, the Speech Request ID. Presence of this value indicates the input is a voice input. | cb18bc1edd1cda16ac567f26ff0ce8f0 |
| ASR_EE_DURATION | The duration for a single voice utterance within a conversation window. | 3376 |
| ASR_LATENCY | The voice latency, measured in milliseconds. While voice recognition demands a large number of computations, the memory bandwidth and battery capacity are limited. This introduces latency from the time a voice input is received to when it is transcribed. Additionally, server-based implementations also add latency due to the round trip. | 50 |
| ASR_RTF | The real time factor (RTF), a standard metric of performance in the voice recognition system. If it takes time P to process an input of duration I, the real time factor is defined as RTF = P / I, that is, the ratio of the time taken to process the audio input to the duration of that input. For example, if it takes one second of CPU time to process one second of audio, then the RTF is 1 (1/1). The RTF for 500 milliseconds to process one second of audio is .5 or ½. | 0.330567 |
| CONVERSATION_ID | The conversation ID | 906ed6bd-de6d-4f59-a2af-3b633d6c7c06 |
| CUSTOM_METRICS | A JSON array that contains an object for each custom metric dimension. name is a dimension name and value is the returned value. This column is available for Versions 22.02 and higher. | |
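Because INTENT_LIST is stored as JSON text, a custom report has to parse it before it can rank or compare the candidate intents. The following sketch parses a cell shaped like the sample value in the table and reports the score difference between the two top-ranking intents. Treating that difference as a stand-in for the win margin is an assumption made here for illustration, and the function names are hypothetical.

```python
import json


def rank_intents(intent_list_cell):
    """Parse an INTENT_LIST cell and return (name, score) pairs, best first."""
    if not intent_list_cell:
        return []
    candidates = json.loads(intent_list_cell)
    ranked = sorted(candidates, key=lambda c: c["INTENT_SCORE"], reverse=True)
    return [(c["INTENT_NAME"], c["INTENT_SCORE"]) for c in ranked]


def top_two_gap(intent_list_cell):
    """Score difference between the two top-ranking intents, or None if fewer than two."""
    ranked = rank_intents(intent_list_cell)
    if len(ranked) < 2:
        return None
    return ranked[0][1] - ranked[1][1]
```

For the sample value in the table, the top intent is OrderPizza and the gap between the two scores is roughly 0.2077 (0.4063 minus 0.1986).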

Internal States

| State Name | Description |
|---|---|
| System.MsgReceived | A message received event that's triggered to Insights when a skill receives a text message from an external source, such as a user or another skill. |
| System.MsgSent | A message sent event that's triggered to Insights when a skill responds to an external source, such as a user or another skill. For each |
| System.BeginSession | A System.BeginSession event is sent as a marker for starting the session when: |
| System.EndSession | A System.EndSession event is captured as a marker for session termination when the current state has not generated any unhandled errors and it has a return transition, which indicates that there won't be another dialog state to execute. The System.EndSession event may also be recorded when the current state has: |
| System.ExpiredSession (Error type: "systemHandled") | A session time out. The default timeout is one hour. When a conversation stops for more than one hour, the expiration of the session is triggered. The session expiration is captured as two separate events in Insights. The first event is the idle state, the state in the dialog flow where user communication stopped. The second is the internal |
| System.DefaultErrorHandler | The default error handler is executed when there is no error handling defined in the dialog flow, either globally (such as the defaultTransitions node in YAML-based flows), or at the state level with error transitions. When the dialog flow includes error transitions, a System.EndSession event is triggered. |
| System.ExpiredSessionHandler | The System.ExpiredSessionHandler event is raised if a message is sent from an external system, or user, to the skill after the session has expired. For example, this event is triggered when a user stops chatting with the skill in mid-conversation, but then sends a message after leaving the chat window open for more than one hour. |
| System.MaxStatesExceededHandler | This event is raised if there are more than 100 dialog states triggered as part of a single user message. |
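One use of these internal states in a custom report is to tally how conversations ended. The sketch below counts the terminal states found in exported rows. It assumes that the rows are dictionaries keyed by the export column names and that the terminal states appear in the CURR_STATE column. The function name `count_endings` is hypothetical.

```python
from collections import Counter

# The terminal states that can end a conversation.
ENDING_STATES = (
    "System.EndSession",
    "System.ExpiredSession",
    "System.DefaultErrorHandler",
    "System.MaxStatesExceededHandler",
)


def count_endings(rows):
    """Count how many times each terminal state occurs in the exported rows."""
    return Counter(
        row["CURR_STATE"] for row in rows if row["CURR_STATE"] in ENDING_STATES
    )
```

A rising share of System.ExpiredSession or System.DefaultErrorHandler endings relative to System.EndSession may point to conversations that users abandon or that fail on unhandled errors.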

Tutorial: Use Oracle Digital Assistant Insights

Apply Insights reporting (including the Retrainer) with this tutorial: Use Oracle Digital Assistant Insights.