This part describes network requirements and functionality for systems with a network architecture based on a physical InfiniBand fabric.

Oracle Private Cloud at Customer relies on different physical and logical networks to provide secure and reliable network connectivity for different application and management functions. This section outlines the minimum network requirements to install an Oracle Private Cloud at Customer system.

Infrastructure Management Network

All infrastructure components inside the base rack are physically connected to this Gigabit Ethernet network, which uses the 192.168.4.0/24 subnet. A single uplink connects it to an Oracle-managed switch, which integrates the management interfaces of the external ZFS storage appliance and the Oracle Advanced Support Gateway. With a second network interface, the support gateway connects to the data center network, enabling Oracle to access the infrastructure management network remotely. No customer or external access to this network is permitted.

Virtual Machine Management Private Network

A Private Virtual Interconnect (PVI), a virtual Ethernet network configured on top of the physical InfiniBand fabric, connects the management nodes and compute nodes in the 192.168.140.0/24 subnet. It is used for all network traffic inherent to Oracle VM Manager, Oracle VM Server and the Oracle VM Agents. No external access is provided.

Optical Storage Network

Four 10GbE optical connections run between the base rack and internal 10GbE switches to provide resilient connectivity between the compute nodes and the external Oracle ZFS Storage Appliance ZS7-2. This dedicated internal 10GbE network allows customer VMs to access shared storage on the external ZFS storage appliance. No external access is provided.

InfiniBand Storage Private Network

For storage connectivity between the management nodes, compute nodes, and internal and external ZFS storage appliances, a high-bandwidth IPoIB network is used. Each component is assigned an IP address in the 192.168.40.0/24 subnet. This network also fulfills the heartbeat function for the clustered Oracle VM server pool. No external access is provided.

Virtual Machine Networks

For network traffic to and from virtual machines (VMs), virtual Ethernet networks are configured on top of the physical InfiniBand fabric. Untagged traffic is supported by default; the customer can request that VLANs be added to the network configuration, along with subnets appropriate for IP address assignment at the virtual machine level. The default configuration includes one private VM network and one public VM network. Additional custom networks can be configured to increase network capacity. Contact Oracle for more information about expanding the network configuration.

External VM connectivity is provided through public VM networks, which terminate on the I/O modules installed in the Fabric Interconnects and are routed externally across the 10GbE ports. The I/O ports must be cabled to redundant external 10GbE switches, which in turn must be configured to accept the tagged VLAN traffic to and from the VMs.

Client Network

This information is currently not available in the documentation.

In addition, Oracle Private Cloud at Customer requires the following data center network services:

DNS Service

As part of the deployment process, you work together with Oracle to determine the host names and IP addresses to be used when deploying Oracle Private Cloud at Customer. The fully qualified domain names (FQDN) and IP addresses of the management nodes must be registered in the data center Domain Name System (DNS).
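As a rough illustration of the DNS requirement, the following Python sketch compares a planned set of management node records with what the data center DNS returns. The host names and addresses are hypothetical placeholders, not values from an actual deployment, and `check_dns` is an illustrative helper rather than an Oracle tool. It performs IPv4 forward lookups only.

```python
import socket


def check_dns(expected, resolve=socket.gethostbyname):
    """Compare planned FQDN -> IPv4 entries with what DNS returns.

    Returns a dict mapping each FQDN that fails the check to a short
    description of the problem. An empty dict means all entries match.
    """
    mismatches = {}
    for fqdn, planned_ip in expected.items():
        try:
            resolved_ip = resolve(fqdn)
        except OSError as error:  # socket.gaierror is a subclass of OSError
            mismatches[fqdn] = f"lookup failed: {error}"
            continue
        if resolved_ip != planned_ip:
            mismatches[fqdn] = f"resolves to {resolved_ip}, expected {planned_ip}"
    return mismatches


if __name__ == "__main__":
    # Hypothetical names and addresses, for illustration only.
    planned = {
        "mn01.example.com": "10.0.10.11",
        "mn02.example.com": "10.0.10.12",
    }
    for name, problem in check_dns(planned).items():
        print(f"{name}: {problem}")
```

The `resolve` parameter defaults to the system resolver; passing a different callable makes it possible to exercise the check without network access.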

NTP Service

At least one reliable Network Time Protocol (NTP) server is required and should be accessible on the client network. The management nodes are configured to synchronize with the NTP server. All other Oracle Private Cloud at Customer components are configured to reference the active management node for clock synchronization.

Every Oracle Private Cloud at Customer rack is shipped with all the required network equipment and cables to support the Oracle Private Cloud at Customer environment. Depending on your particular deployment, this includes InfiniBand and Ethernet cabling between the base rack and the Oracle ZFS Storage Appliance ZS7-2, as well as additional Oracle Private Cloud switches and hardware running the Oracle Advanced Support Gateway and Oracle Cloud Control Plane. Every base rack also contains pre-installed cables for all rack units where additional compute nodes can be installed during a future expansion of the environment.

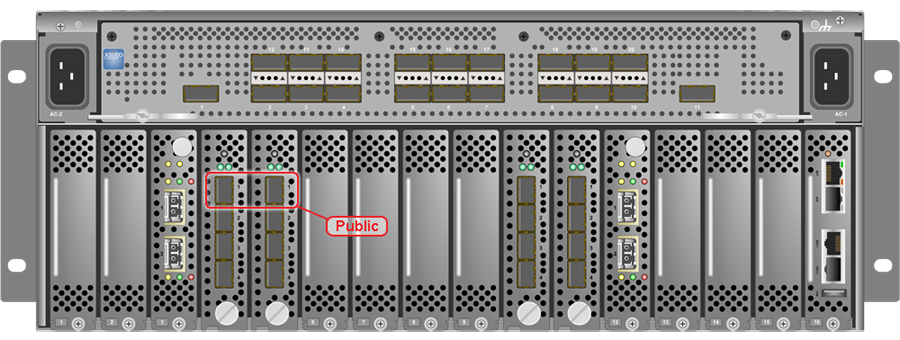

Before the Oracle Private Cloud at Customer system is powered on for the first time, the Fabric Interconnects must be properly connected to the next-level data center switches. You must connect two 10 Gigabit Ethernet (GbE) IO module ports labeled “Public” on each Fabric Interconnect to the data center public Ethernet network.

Figure 4.2 shows the location of the 10 GbE Public IO module ports on the Oracle Fabric Interconnect F1-15.

It is critical that both Fabric Interconnects have two 10GbE connections each to a pair of next-level data center switches. This configuration with four cable connections provides redundancy and load splitting at the level of the Fabric Interconnects, the 10GbE ports and the data center switches. This outbound cabling should not be crossed or meshed, because the internal connections to the pair of Fabric Interconnects are already configured that way. The cabling pattern plays a key role in maintaining service during failover scenarios involving outages of a Fabric Interconnect or other components.

The IO modules support only 10GbE transport and cannot be connected to Gigabit Ethernet switches. The Oracle Private Cloud at Customer system must be connected externally to 10GbE optical switch ports.

Do not enable Spanning Tree Protocol (STP) in the upstream switch ports connecting to the Oracle Private Cloud at Customer.

It is not possible to configure any type of link aggregation group (LAG) across the 10GbE ports: LACP, network/interface bonding or similar methods to combine multiple network connections are not supported.

To provide additional bandwidth to the environment hosted on Oracle Private Cloud at Customer, additional custom networks can be configured. Please contact your Oracle representative for more information.

During the initial software configuration of Oracle Private Cloud at Customer, the network settings of the management nodes must be reconfigured. For this purpose, you should reserve three IP addresses in the public (data center) network: one for each management node, and one to be used as virtual IP address shared by both management nodes.
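The address reservation can be sanity-checked before installation. The sketch below is a minimal example, assuming that all three addresses are taken from a single public subnet; the source does not state this, so treat it as an assumption. The function name and the sample values are hypothetical.

```python
import ipaddress


def validate_management_ips(public_cidr, node1_ip, node2_ip, virtual_ip):
    """Return a list of problems found in the reserved address plan.

    Assumes (not stated in the documentation) that both management
    nodes and the shared virtual IP belong to the same public subnet.
    """
    network = ipaddress.ip_network(public_cidr)
    plan = {
        "management node 1": ipaddress.ip_address(node1_ip),
        "management node 2": ipaddress.ip_address(node2_ip),
        "virtual IP": ipaddress.ip_address(virtual_ip),
    }
    problems = []
    for role, address in plan.items():
        if address not in network:
            problems.append(f"{role} ({address}) is outside {public_cidr}")
    if len(set(plan.values())) != len(plan):
        problems.append("the three reserved addresses must be distinct")
    return problems


if __name__ == "__main__":
    # Hypothetical public subnet and addresses, for illustration only.
    issues = validate_management_ips(
        "10.0.10.0/24", "10.0.10.11", "10.0.10.12", "10.0.10.10"
    )
    print(issues or "address plan looks consistent")
```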

Oracle Private Cloud at Customer also requires a large number of preassigned private IP addresses. To avoid network interference and conflicts, you must ensure that the data center network does not overlap with any of the infrastructure subnets of the Oracle Private Cloud at Customer default configuration. These are the subnets you should keep clear:

192.168.140.0/24

192.168.40.0/24

192.168.4.0/24
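One way to verify that a data center address plan stays clear of these subnets is an overlap check with Python's standard `ipaddress` module. This is a minimal sketch; the data center ranges in the example are hypothetical, and `find_conflicts` is an illustrative helper, not part of any Oracle tooling.

```python
import ipaddress

# Infrastructure subnets of the default configuration, as listed above.
RESERVED_SUBNETS = [
    ipaddress.ip_network("192.168.140.0/24"),
    ipaddress.ip_network("192.168.40.0/24"),
    ipaddress.ip_network("192.168.4.0/24"),
]


def find_conflicts(data_center_cidrs):
    """Return (data_center_cidr, reserved_subnet) pairs that overlap."""
    conflicts = []
    for cidr in data_center_cidrs:
        # strict=False accepts a host address with a prefix, e.g. 10.1.2.3/24.
        network = ipaddress.ip_network(cidr, strict=False)
        for reserved in RESERVED_SUBNETS:
            if network.overlaps(reserved):
                conflicts.append((cidr, str(reserved)))
    return conflicts


if __name__ == "__main__":
    # Hypothetical data center ranges, for illustration only.
    for cidr, reserved in find_conflicts(["10.20.0.0/16", "192.168.0.0/18"]):
        print(f"{cidr} overlaps reserved subnet {reserved}")
```

Note that a broad range such as 192.168.0.0/18 conflicts even though it is not identical to any reserved subnet, because it contains two of them.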