16 Migrate Virtual Machines and Block Storage to Oracle Cloud Infrastructure

Note that this migration tool doesn't help with discovering resources in your source environment. You can use Oracle Cloud Infrastructure Classic Discovery and Translation Tool to generate reports of all the resources in your Oracle Cloud Infrastructure Compute Classic account.

You can't use this tool for the following types of migration:

- Application-aware migration

- Object storage migration. Use rclone or CloudBerry to migrate data from Oracle Cloud Infrastructure Object Storage Classic to Oracle Cloud Infrastructure Object Storage.

- PaaS migration. Re-create the PaaS instances on Oracle Cloud Infrastructure and redeploy the applications.

- Oracle Database migration. Use native tools like RMAN, Data Pump, and GoldenGate or GoldenGate Cloud Service to migrate when possible. See Select a Method to Migrate Database Instances.

It is assumed that you are familiar with both Oracle Cloud Infrastructure Compute Classic and Oracle Cloud Infrastructure, and that you have access to both services.

The Terraform configurations generated by Oracle Cloud Infrastructure Classic Discovery and Translation Tool can be used to automate the setup of resources in your Oracle Cloud Infrastructure tenancy. It is assumed that you are familiar with installing and using Terraform.

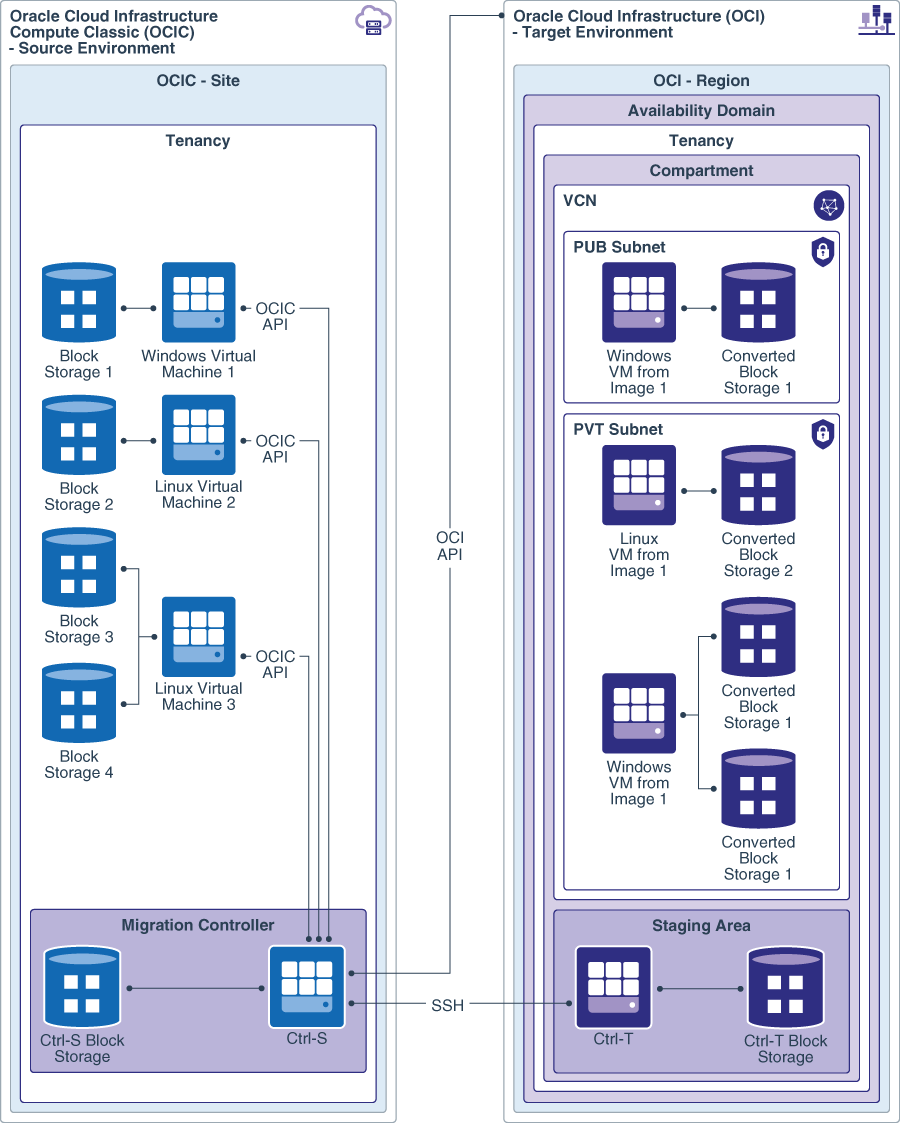

Architecture

This architecture shows resources in your Oracle Cloud Infrastructure Compute Classic account being migrated to your Oracle Cloud Infrastructure tenancy using Oracle Cloud Infrastructure Classic VM and Block Storage Migration Tool.

Description of the illustration migrate_vms_block_storage_architecture.png

Oracle Cloud Infrastructure Classic VM and Block Storage Migration Tool uses the migration controller instance in your source environment, Control-S, to migrate your VMs and block storage volumes. The Control-S instance contains tools and scripts that you can use to configure your source VMs for migration. The Control-S instance also includes scripts that set up another migration controller instance, Control-T, in the target environment. These migration controllers are used to collect information about the source environment, copy data, create images from boot volumes, and create the required images and data volumes in the target environment.

Workflow

Here's an overview of the high-level steps required to migrate your VMs and block storage from Oracle Cloud Infrastructure Compute Classic to Oracle Cloud Infrastructure.

This workflow assumes that you'll use Oracle Cloud Infrastructure Classic Discovery and Translation Tool along with Oracle Cloud Infrastructure Classic VM and Block Storage Migration Tool to automate this process.

- Create an instance in Oracle Cloud Infrastructure Compute Classic using the Oracle Cloud Infrastructure Classic Migration Tools image. If you've already created this instance earlier in your migration process, you can use the same instance for this procedure. You don't need to create it again.

- Log in to the migration controller instance, Control-S, using SSH.

- Set up and use Oracle Cloud Infrastructure Classic Discovery and Translation Tool to do the following. For more information about using this tool, see Identify and Translate Resources in Your Source Environment. For information about the commands, options, and permitted values, run the tool with the --help option. To view help on all commands and options, use: opcmigrate --full-help.

  - Run opcmigrate discover to get a list of resources in your source environment.

  - Run opcmigrate plan create to create a migration plan. You can use several options with this command to filter the output of the opcmigrate discover command.

  - Edit the migration plan to specify migration attributes for individual objects, if required.

  - Run opcmigrate generate to create Terraform configuration files. If you want to migrate your network using network topology mapping, use the --with-security-rule-union option to include security lists and security rules in the generated Terraform file.

  - Run opcmigrate instances-export --plan <plan_name> to generate a list of instances to migrate.

- Review the Terraform configuration carefully and edit it as required. When you're satisfied with the network definition, apply the Terraform plan in your Oracle Cloud Infrastructure tenancy. At this stage, the Terraform configuration creates only the networking objects. Compute instances aren't created yet, because not all the information required to launch instances is available in the configuration file yet.

- Use Oracle Cloud Infrastructure Classic VM and Block Storage Migration Tool to complete the following steps. Detailed instructions to complete these steps are provided in the following sections.

  - Set up the Control-S instance to perform the migration.

  - Use the list of instances generated by Oracle Cloud Infrastructure Classic Discovery and Translation Tool to create one or more job files. Alternatively, provide this list as input in the secret.yml file when you set up Control-S. You can also specify a list of unattached volumes that you want to migrate.

  - Use the opcmigrate migrate instance set of commands to configure the source instances, set up the migration controller instance, Control-T, in the target environment, and run the migration job.

- After volume migration is complete, launch your compute instances in the target environment using the appropriate boot volume and then attach the appropriate data volumes to each instance. You can complete this procedure either by using the Oracle Cloud Infrastructure Console or by updating the Terraform configuration with the required information.

Migration Overview

Oracle Cloud Infrastructure Classic VM and Block Storage Migration Tool performs a number of tasks to copy boot and data volumes from your Oracle Cloud Infrastructure Compute Classic account to your Oracle Cloud Infrastructure tenancy.

This tool does the following:

- Runs as a service daemon on the Control-S instance. You can start or stop the service, or view the status of the service. You can also run multiple migration jobs at the same time to migrate resources in parallel.

- Configures Control-S with details of the resources in the source environment to be migrated, based on user input or input from Oracle Cloud Infrastructure Classic Discovery and Translation Tool.

- Configures Control-S with information required to access the target Oracle Cloud Infrastructure environment, based on user input.

- Configures the source instances to collect information about the attached storage volumes. When the boot images and storage volumes have been migrated to the Oracle Cloud Infrastructure environment, this information is used to attach the storage volumes to the appropriate virtual machines at the appropriate mount points.

- Starts the migration controller VM, Control-T, in Oracle Cloud Infrastructure.

- The migration process performs the following steps for each source instance and all attached storage volumes, for both boot and data volumes. These steps are carried out in parallel for up to eight boot and data volumes at a time.

  - Creates colocated snapshots of the source storage volumes to be migrated. If you specify volumes restored from colocated snapshots, the tool creates a remote snapshot of those volumes. Creating a remote snapshot of a volume restored from a colocated snapshot can take longer than creating a colocated snapshot of a storage volume.

  - Creates new volumes from the snapshots.

  - Attaches the new storage volumes to Control-S.

  - Creates the corresponding storage volumes in Oracle Cloud Infrastructure, attached to the migration controller VM, Control-T.

  - Copies the data from all volumes attached to Control-S to volumes attached to Control-T over a secure SSH pipe.

  - Detaches the migrated volumes from Control-T, so they can be used to launch VMs or attached to new VMs as data volumes.

  - Detaches the storage volumes from Control-S in Oracle Cloud Infrastructure Compute Classic.

  - Deletes the new storage volumes that were created for migration in Oracle Cloud Infrastructure Compute Classic.

  - Deletes the colocated snapshots in Oracle Cloud Infrastructure Compute Classic.
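To make the ordering and the eight-volume limit concrete, here is a minimal Python simulation of the per-volume steps above. It is only an illustration: the step names are hypothetical labels, not commands or functions of the migration tool, and each "step" just records that it ran. Steps for a given volume run in order, while a thread pool caps the number of volumes in flight at eight.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Hypothetical labels for the per-volume steps listed above (not real tool APIs).
STEPS = [
    "create_snapshot",               # colocated, or remote for restored volumes
    "create_volume_from_snapshot",
    "attach_to_control_s",
    "create_and_attach_on_control_t",
    "copy_over_ssh",
    "detach_from_control_t",
    "detach_from_control_s",
    "delete_temporary_volume",
    "delete_snapshot",
]
MAX_PARALLEL_VOLUMES = 8  # per Control-S instance


class Tracker:
    """Records step order and the peak number of volumes in flight."""

    def __init__(self):
        self.lock = threading.Lock()
        self.log = []
        self.active = 0
        self.peak = 0

    def migrate_volume(self, name):
        with self.lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        try:
            for step in STEPS:  # steps for one volume always run in order
                with self.lock:
                    self.log.append((name, step))
        finally:
            with self.lock:
                self.active -= 1
        return name


def migrate_all(volume_names):
    """Simulate migrating every volume, at most eight at a time."""
    tracker = Tracker()
    with ThreadPoolExecutor(max_workers=MAX_PARALLEL_VOLUMES) as pool:
        done = list(pool.map(tracker.migrate_volume, volume_names))
    return done, tracker
```

Because all volumes in flight progress side by side, a run as a whole finishes roughly when the largest volume finishes copying.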

After the boot and data volumes have been migrated to the target environment, launch your VMs in Oracle Cloud Infrastructure using the migrated boot volumes and attach the migrated data volumes to the appropriate VMs.

Considerations for Migration

Before you start your migration, consider the following factors that could have an impact on your migration process.

- Perform a proof-of-concept migration with VMs running applications that are as close to your production configurations as possible.

- Quiesce applications on your source VMs and don't make any changes on the source VMs while migration is in progress.

- The maximum size of the boot volume of a VM that can be imported is approximately 1 TB, assuming the boot volume has 60% used space and a 50% compression ratio.

- A block storage volume in Oracle Cloud Infrastructure Compute Classic can have a maximum of five colocated snapshots. If a storage volume has more than five snapshots, the tool generates an error and fails.

- A single Control-S instance can migrate up to eight storage volumes at a time. To migrate a larger number of volumes, you can launch multiple Control-S instances.

- You can create and specify multiple job files on a migration controller instance. These jobs use the same source and target environments; only the list of instances and storage volumes specified for migration differs.

- Up to four migration jobs can be run in parallel. If you submit more than four jobs, the other jobs are queued until some jobs finish. Note that if you run multiple jobs in parallel, the total number of storage volumes being migrated must not exceed eight across all the currently running migration jobs.

- The steps to migrate data for all the storage volumes are carried out in parallel, so the overall time taken for the migration depends mainly on the size of the largest storage volume that needs to be migrated.

- In a single run of the migration tool, you can migrate VMs and storage volumes from a single source identity domain and site. To migrate VMs and storage volumes from a different site or identity domain, create and configure another Control-S instance.

- In a single run of the migration tool, you can migrate VMs and storage volumes to a single target tenancy, region, and availability domain. To migrate resources to a different tenancy, region, or availability domain, create and configure another Control-S instance.

- A boot volume is migrated to a specified availability domain in Oracle Cloud Infrastructure and it can be used to launch a VM in the same availability domain only. Ensure that you migrate each boot volume to the availability domain where you want to launch the VM.

- When possible, the private IP addresses of the target instances should be the same as the private IP addresses of the source instances. Take this into consideration when you set up the network in your Oracle Cloud Infrastructure tenancy, before you start migrating VMs and block volumes. In some cases, you might not be able to re-create the Oracle Cloud Infrastructure Compute Classic private IP addresses in your Oracle Cloud Infrastructure VCNs. In these cases, you might need to change the configuration of applications that depend on the original IP addresses.
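The job and volume limits above can be checked before you submit jobs. The following Python sketch groups job files, in submission order, into waves that respect both limits (at most four running jobs and at most eight volumes across them). It is a planning aid built on stated assumptions: the real tool starts a queued job as soon as capacity frees up rather than in fixed waves, the Job class is hypothetical, and the gb_per_hour copy rate is a figure you'd have to measure in your own proof-of-concept migration.

```python
from dataclasses import dataclass
from typing import List

MAX_PARALLEL_JOBS = 4     # further jobs are queued
MAX_PARALLEL_VOLUMES = 8  # across all running jobs on one Control-S


@dataclass
class Job:
    name: str
    volume_sizes_gb: List[int]  # one entry per volume in the job file


def plan_waves(jobs):
    """Group jobs, in submission order, into waves that respect both limits."""
    waves, current, volumes = [], [], 0
    for job in jobs:
        n = len(job.volume_sizes_gb)
        if n > MAX_PARALLEL_VOLUMES:
            # Simplification of this sketch: plan with jobs of at most eight volumes.
            raise ValueError(f"{job.name} has {n} volumes; split it into smaller job files")
        if current and (len(current) == MAX_PARALLEL_JOBS or volumes + n > MAX_PARALLEL_VOLUMES):
            waves.append(current)
            current, volumes = [], 0
        current.append(job)
        volumes += n
    if current:
        waves.append(current)
    return waves


def estimate_wave_hours(wave, gb_per_hour):
    """Volumes copy in parallel, so a wave takes about as long as its largest volume."""
    largest = max((size for job in wave for size in job.volume_sizes_gb), default=0)
    return largest / gb_per_hour
```

For example, jobs with 3, 2, 4, and 1 volumes fit into two waves: the first two jobs together use five volume slots, and adding the third would exceed eight.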

Map Oracle Cloud Infrastructure Compute Classic Instance Shapes to Oracle Cloud Infrastructure Shapes

While some of the instance shapes in your Oracle Cloud Infrastructure Compute Classic account correspond to similar shapes in Oracle Cloud Infrastructure, in other cases you may not find an exact equivalent.

Here are some suggestions for which shapes in the target environment are the best fit for shapes you've used in your source environment.

| Oracle Cloud Infrastructure Compute Classic Shape | OCPU/GPU | RAM (GB) | Oracle Cloud Infrastructure Shape | OCPU/GPU | RAM (GB) |
|---|---|---|---|---|---|
| oc3 | 1 | 7.5 | VM.Standard2.1 | 1 | 15 |
| oc4 | 2 | 15 | VM.Standard2.2 | 2 | 30 |
| oc5 | 4 | 30 | VM.Standard2.4 | 4 | 60 |
| oc6 | 8 | 60 | VM.Standard2.8 | 8 | 120 |
| oc7 | 16 | 120 | VM.Standard2.16 | 16 | 240 |
| oc8 | 24 | 180 | VM.Standard2.24 | 24 | 320 |
| oc9 | 32 | 240 | BM.Standard2.52 | 52 | 768 |
| oc1m | 1 | 15 | VM.Standard2.1 | 1 | 15 |
| oc2m | 2 | 30 | VM.Standard2.2 | 2 | 30 |
| oc3m | 4 | 60 | VM.Standard2.4 | 4 | 60 |
| oc4m | 8 | 120 | VM.Standard2.8 | 8 | 120 |
| oc5m | 16 | 240 | VM.Standard2.16 | 16 | 240 |
| oc8m | 24 | 360 | VM.Standard2.24 | 24 | 320 |
| oc9m | 32 | 480 | BM.Standard2.52 | 52 | 768 |
| ocio1m | 1 | 15 | VM.DenseIO2.8 | 8 | 120 |
| ocio2m | 2 | 30 | VM.DenseIO2.8 | 8 | 120 |
| ocio3m | 4 | 60 | VM.DenseIO2.8 | 8 | 120 |
| ocio4m | 8 | 120 | VM.DenseIO2.8 | 8 | 120 |
| ocio5m | 16 | 240 | VM.DenseIO2.16 | 16 | 240 |
| ocsg2-k80 | 6 / 2 | 120 | VM.GPU3.2 | 12 / 2 | 180 |
| ocsg2-m60 | 6 / 2 | 120 | VM.GPU3.2 | 12 / 2 | 180 |
| ocsg1-k80 | 3 / 1 | 60 | VM.GPU3.1 | 6 / 1 | 90 |
| ocsg1-m60 | 3 / 1 | 60 | VM.GPU3.1 | 6 / 1 | 90 |

If instances in your Oracle Cloud Infrastructure Compute Classic account have multiple virtual NICs (vNICs), then you might need to select a larger shape in Oracle Cloud Infrastructure, to ensure that the appropriate number of vNICs is supported.

| Oracle Cloud Infrastructure Compute Classic Shape | Oracle Cloud Infrastructure Shape for 1 or 2 vNICs | Oracle Cloud Infrastructure Shape for 3 or 4 vNICs | Oracle Cloud Infrastructure Shape for 5 or more vNICs |
|---|---|---|---|
| oc3 | VM.Standard2.1 | VM.Standard2.4 | VM.Standard2.8 |
| oc4 | VM.Standard2.2 | VM.Standard2.4 | VM.Standard2.8 |
| oc5 | VM.Standard2.4 | VM.Standard2.4 | VM.Standard2.8 |
| oc1m | VM.Standard2.1 | VM.Standard2.4 | VM.Standard2.8 |
| oc2m | VM.Standard2.2 | VM.Standard2.4 | VM.Standard2.8 |
| oc3m | VM.Standard2.4 | VM.Standard2.4 | VM.Standard2.8 |
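If you have many instances to map, the two tables above can be encoded as lookups. This Python sketch copies the suggestions from the tables; the function name suggest_shape is hypothetical, and for shapes that the vNIC table doesn't list it simply returns the base suggestion, which is an assumption you should verify against the vNIC limits of the target shape.

```python
# Base suggestions: Compute Classic shape -> Oracle Cloud Infrastructure shape.
BASE_SHAPES = {
    "oc3": "VM.Standard2.1", "oc4": "VM.Standard2.2", "oc5": "VM.Standard2.4",
    "oc6": "VM.Standard2.8", "oc7": "VM.Standard2.16", "oc8": "VM.Standard2.24",
    "oc9": "BM.Standard2.52",
    "oc1m": "VM.Standard2.1", "oc2m": "VM.Standard2.2", "oc3m": "VM.Standard2.4",
    "oc4m": "VM.Standard2.8", "oc5m": "VM.Standard2.16", "oc8m": "VM.Standard2.24",
    "oc9m": "BM.Standard2.52",
    "ocio1m": "VM.DenseIO2.8", "ocio2m": "VM.DenseIO2.8", "ocio3m": "VM.DenseIO2.8",
    "ocio4m": "VM.DenseIO2.8", "ocio5m": "VM.DenseIO2.16",
    "ocsg2-k80": "VM.GPU3.2", "ocsg2-m60": "VM.GPU3.2",
    "ocsg1-k80": "VM.GPU3.1", "ocsg1-m60": "VM.GPU3.1",
}

# vNIC-aware suggestions: shape -> (1-2 vNICs, 3-4 vNICs, 5 or more vNICs).
VNIC_SHAPES = {
    "oc3": ("VM.Standard2.1", "VM.Standard2.4", "VM.Standard2.8"),
    "oc4": ("VM.Standard2.2", "VM.Standard2.4", "VM.Standard2.8"),
    "oc5": ("VM.Standard2.4", "VM.Standard2.4", "VM.Standard2.8"),
    "oc1m": ("VM.Standard2.1", "VM.Standard2.4", "VM.Standard2.8"),
    "oc2m": ("VM.Standard2.2", "VM.Standard2.4", "VM.Standard2.8"),
    "oc3m": ("VM.Standard2.4", "VM.Standard2.4", "VM.Standard2.8"),
}


def suggest_shape(classic_shape, vnic_count=1):
    """Suggest a target shape; raises KeyError for shapes not in the tables."""
    if vnic_count < 1:
        raise ValueError("vnic_count must be at least 1")
    if classic_shape in VNIC_SHAPES:
        tier = 0 if vnic_count <= 2 else 1 if vnic_count <= 4 else 2
        return VNIC_SHAPES[classic_shape][tier]
    # Not in the vNIC table: fall back to the base suggestion (an assumption).
    return BASE_SHAPES[classic_shape]
```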

End-to-End Procedure

Here's an example of an end-to-end procedure for migrating instances and storage volumes using the migration tools. For more detailed information, see the relevant sections of this document.

Before you start, ensure that you have:

- The required permissions in your source and target environments.

- SSH or RDP access to the source VMs.

- The SSH key required to access the Control-S instance.

- The API PEM key for Oracle Cloud Infrastructure.

Perform the following steps to complete the migration. Note that the steps here are useful as a quick reference if you're already familiar with the migration process. If you're performing a migration for the first time, it's recommended that you follow the more detailed instructions provided in the relevant sections of this document.

| Step | More Information |
|---|---|
| Create a Control-S instance in Oracle Cloud Infrastructure Compute Classic using the Oracle Cloud Infrastructure Classic Migration Tools image. | Launch the Migration Controller Instance (Control-S) in the Source Environment |
| Log in to the Control-S instance using SSH. On the Control-S instance, copy the PEM key required for the API connection to the file | Configure the Migration Controller Instance (Control-S) |
| Use the file /home/opc/ansible/secret.yml.sample to create your secret.yml file in the same path and enter the required information. | Configure the Migration Controller Instance (Control-S) |
| If you're migrating Linux instances, create the bucket ocic-oci-sig in Oracle Cloud Infrastructure Object Storage and generate a pre-authenticated request (PAR) for writes to this bucket. Enter this PAR in the secret.yml file on the Control-S instance. | Complete the Prerequisites |
| On the Control-S instance, run: | Configure the Migration Controller Instance (Control-S) |
| Update the default profile file or create a new profile file in the directory /home/opc/.opc/profiles, to provide credentials for all the services in your Oracle Cloud Infrastructure Compute Classic account. | Set Up Your Profile |
| Discover resources in your source environment. | Generate a Summary and JSON Output |
| Generate a list of resources to be migrated. | Generate a List of Instances to Migrate |
| Edit the list of resources to be migrated and create the required job files. | Specify the Instances and Storage Volumes to be Migrated |
| Set up the VCN, subnets, and other networking components in your Oracle Cloud Infrastructure tenancy to launch your migration controller instance, Control-T, as well as for your migrated VMs and block volumes. | Create a Virtual Cloud Network in Oracle Cloud Infrastructure |
| Enable Logical Volume Manager (LVM) on Control-S, if required. | Enable Logical Volume Manager (LVM) on Control-S |
| SSH into each Linux VM that you want to migrate. Install: Ensure that all secondary attached volume mounts have Ensure that you don't specify You are required to specify the | Prepare Your Linux Source Instances for Migration |
| On the Control-S instance, create or update the /home/opc/ansible/hosts.yml file with information about the Linux VMs that you want to migrate. | Prepare Your Linux Source Instances Using Tools on Control-S |
| Use the scripts on Control-S to set up your Linux source VMs. | Prepare Your Linux Source Instances Using Tools on Control-S |
| Log in to each Windows VM that you want to migrate. Copy the file /home/opc/src/windows_migrate.ps1 from Control-S to each source instance. Run | Prepare Your Windows Source Instances for Migration |
| Launch the migration controller in the target environment. | Launch the Migration Controller Instance (Control-T) in the Target Environment |
| Start a migration job. | Start the Migration |
| Monitor the migration job. | Monitor the Migration |
| For an incremental migration or a base migration job that has the shutdown policy For the base migration phase or when your migration job that has the shutdown policy In the second or subsequent phase of incremental migration, you can use the | Resume a Migration Job |
| When the migration job is complete, use the migrated block volumes to launch instances in your Oracle Cloud Infrastructure tenancy. | Launch VMs in the Target Environment |
| Attach block volumes to your instances. Then, on the Control-S instance, run: | Attach and Mount Block Storage on Compute Instances in the Target Environment |
| Validate the target environment after the migration is complete. | Validate the Target Environment |

Plan for the Migration

It's important to plan your migration carefully, to ensure that the process is smooth and requires minimal downtime.

Before you start the migration, you should:

- Collect information about the source instances that you want to migrate.

- Ensure that you have the required SSH and PEM keys to access the source and target environments.

- Configure the source environment.

- Set up the network in the target environment.

- Collect information from the target environment, such as the tenancy, user, and compartment Oracle Cloud IDs (OCIDs).

Complete the Prerequisites

Before you begin your migration, complete the following prerequisites.

- Launch the migration controller instance, Control-S, in your Oracle Cloud Infrastructure Compute Classic account using the Oracle Cloud Infrastructure Classic Migration Tools image. For information about creating your Control-S instance, see Complete the Prerequisites and Launch the Migration Controller Instance (Control-S) in the Source Environment. If you've already created this instance earlier in your migration process, you can use the same instance for this procedure. You don't need to create it again.

- Verify that you have sufficient quota in Oracle Cloud Infrastructure to perform the migration.

  - For the migration controller instance, you'll need one VM of 1.2 shape, plus attached block storage of 50 GB or double the size of all attached volumes of the sources you intend to migrate. The minimum size of a storage volume in Oracle Cloud Infrastructure is 50 GB. Ensure that you have sufficient storage capacity for the number of storage volumes that you intend to migrate.

  - When your block volumes have been migrated, you'll need to launch the VMs that you're migrating. Ensure that you have sufficient quota to launch the required number of VMs. To check your service limits, in the Oracle Cloud Infrastructure Console, from the menu, select Governance and then click Service Limits.

- In Oracle Cloud Infrastructure, ensure that you have a virtual cloud network (VCN) with subnets and any other networking components that may be required. Consider creating a separate VCN and subnet for the migration controller instance, Control-T. This ensures that the private IP address assigned to Control-T doesn't conflict with the private IP address that you need to assign to an instance after migration. If you create the VCN using the Oracle Cloud Infrastructure Console, select the option CREATE VIRTUAL CLOUD NETWORK PLUS RELATED RESOURCES.

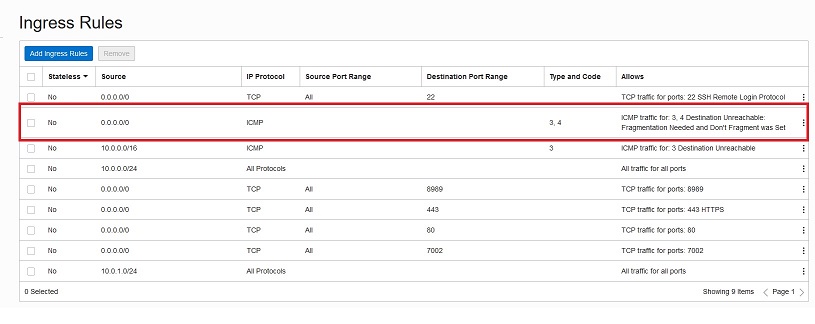

- Ensure that you have an ingress security rule in place for the Oracle Cloud Infrastructure VCN to allow ICMP traffic with type 3, code 4, and with the source 0.0.0.0/0. This is required to enable the Control-S instance to access resources in the Oracle Cloud Infrastructure tenancy. To view or add a security rule, in the Oracle Cloud Infrastructure Console:

  - Click Networking and then Virtual Cloud Networks.

  - Click the network that you want to use.

  - From the Resources list on the left, click Security Lists.

  - On the Security Lists page, click the security list you want to view or edit. The Security List Details page displays the ingress and egress rules in this security list.

- If you're migrating Linux instances:

  - Create the bucket ocic-oci-sig in Oracle Cloud Infrastructure Object Storage and generate a pre-authenticated request (PAR) for writes to this bucket. This write-only PAR allows the tool to write a signal object to the ocic-oci-sig bucket when it is launched in the target environment, indicating that it is ready to handle storage attachments for the volumes after migration. Set the expiration date far enough in the future that you can complete the migration before the PAR expires. If you need to run the migration tool multiple times, ensure that the PAR is still valid or create a new PAR for the bucket.

  - Ensure that you have yum installed on each Linux instance to be migrated, that a yum repository has been configured, and that the instance can access the yum.oracle.com repository. When you set up your Linux source instances for migration, yum is used to install the required tools.

  - Ensure that Python 2.6 or higher is installed on each Linux instance to be migrated.
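If you want to confirm that the write-only PAR works before starting a migration, you can upload a small test object through it. The following Python sketch only builds the request; it assumes a bucket-level PAR whose URL ends in /o/, to which an object name is appended, and both the helper name build_par_put and the object name par-check.txt are hypothetical choices. The test object remains in the ocic-oci-sig bucket, so you may want to delete it from the Console afterward.

```python
import urllib.parse
import urllib.request


def build_par_put(par_url, object_name, body=b"par-check"):
    """Build (but don't send) a PUT that uploads one object through a bucket PAR."""
    if not par_url.endswith("/"):
        par_url += "/"
    url = par_url + urllib.parse.quote(object_name)
    return urllib.request.Request(url, data=body, method="PUT")


# Sending requires network access and a valid, unexpired PAR. PAR_URL is a
# placeholder for the URL you generated, for example:
# with urllib.request.urlopen(build_par_put(PAR_URL, "par-check.txt")) as resp:
#     print(resp.status)
```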

Get Details of the Target Environment

Log in to the Oracle Cloud Infrastructure Console and collect the information required for the migration controller, Control-S, to connect to the target environment.

Configure the Migration Controller Instance (Control-S)

Once the Control-S instance has started, connect to the instance using SSH. All of the tools required for the migration are already on the machine, but additional configuration is required to provide details of the source and target environments.

Enable Logical Volume Manager (LVM) on Control-S

On the migration controller instance, Control-S, Logical Volume Manager (LVM) is turned off by default for all attached volumes except the boot disk. If you want to use LVM with attached volumes, you must enable it.

This step is required only if you want to use LVM volumes to store the qcow2 images of boot volumes that you are migrating. Use LVM volumes only if you don't otherwise have sufficient space for the qcow2 images and you can't use non-LVM volumes. Remember that the LVM volume must be mounted on /images.

To enable LVM for attached volumes, log in to the Control-S instance using SSH and modify the global filter in the file /etc/lvm/lvm.conf. For example, if you want to enable LVM for the volume /dev/xvdc, then add "a|^/dev/xvdc|" to the global filter before "r|.*|", as follows:

```
global_filter = ["a|^/dev/xvdb|", "a|^/dev/xvdc|", "r|.*|" ]
```

Enable LVM only for attached volumes that you want to migrate. Don't enable LVM for any other devices, as it could interfere with the migration workflow. Don't turn off the global filter.
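If you script the change to /etc/lvm/lvm.conf, the key rule is that each accept pattern goes before the reject-all entry, which must remain last. The following Python sketch only computes the new global_filter value; the helper names add_lvm_device and render are hypothetical, and editing the file itself is left to you.

```python
REJECT_ALL = "r|.*|"


def add_lvm_device(filters, device):
    """Return a new global_filter list that also accepts `device`.

    The accept pattern is inserted just before the reject-all entry, which
    must stay in place (don't turn off the global filter).
    """
    accept = f"a|^{device}|"
    if accept in filters:
        return list(filters)  # already enabled; nothing to change
    if REJECT_ALL not in filters:
        raise ValueError("global_filter has no reject-all entry")
    pos = filters.index(REJECT_ALL)
    return filters[:pos] + [accept] + filters[pos:]


def render(filters):
    """Format the list as a global_filter line for lvm.conf."""
    return "global_filter = [" + ", ".join(f'"{f}"' for f in filters) + " ]"
```

For example, adding /dev/xvdc to the filter ["a|^/dev/xvdb|", "r|.*|"] renders as the global_filter line shown above.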

Specify the Instances and Storage Volumes to be Migrated

You can specify the resources to be migrated by creating one or more job files and specifying the relevant job file when you start a migration job.

Creating multiple job files to specify the instances and storage volumes to be migrated allows you to run multiple migration jobs in parallel.

Sample Input for Specifying Resources

Job files must be created in JSON format. A job file can include a list of instances as well as a list of unattached storage volumes. However, it's recommended that you specify either a list of instances or a list of unattached storage volumes in a job file to make the objects in a migration job easier to track. Here's an example of instances and an unattached storage volume with sample values in JSON format, which you can use to create your job files.

```json
{
  "version": "1",
  "incremental": false,
  "instances": [
    {
      "name": "MULTIPART_INSTANCE_NAME_HERE",
      "os": "linux",
      "osKernelVersion": "4.1.12",
      "osSku": "",
      "attached_only": false,
      "specified_volumes_only": [],
      "shutdown_policy": "wait",
      "specified_launch_mode": "PARAVIRTUALIZED"
    },
    {
      "name": "MULTIPART_INSTANCE_NAME_HERE",
      "os": "windows",
      "osSku": "Server 2012 R2 Standard",
      "attached_only": false,
      "specified_volumes_only": [],
      "shutdown_policy": "shutdown",
      "specified_launch_mode": "EMULATED"
    }
  ],
  "volumes": [
    {
      "name": "MULTIPART_STORAGE_VOLUME_NAME_HERE",
      "os": "linux",
      "osKernelVersion": "4.1.12",
      "osSku": "",
      "specified_launch_mode": "PARAVIRTUALIZED"
    }
  ]
}
```

Generate a List of Instances for Migration Using the opcmigrate instances-export Command

You can use the output of Oracle Cloud Infrastructure Classic Discovery and Translation Tool to generate your job files. For example, to generate a list of instances in your Oracle Cloud Infrastructure Compute Classic environment, run the following commands:

```shell
opcmigrate discover
opcmigrate plan create --output migration-plan.json
opcmigrate instances-export --plan migration-plan.json --format json > instances.json
```

For more information about using these commands, see Run Oracle Cloud Infrastructure Classic Discovery and Translation Tool to Generate Reports.

From the output of this command, identify the instances that you want to migrate. Remember to add the osKernelVersion attribute for instances in the job file. This required attribute isn't included in the output generated by the opcmigrate instances-export command. Also add any other instance attributes described below, if required.
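Once you have picked the instances and their kernel versions, you can assemble job files programmatically. This Python sketch builds entries that follow the sample job file earlier in this section and splits them into several job files so that jobs can run in parallel. The helper names are hypothetical, the structure (including the empty volumes list) is copied from the sample rather than from a published schema, and the instance names and kernel versions you pass in must come from your own environment.

```python
import json


def linux_instance(name, kernel_version, shutdown_policy="wait"):
    """One Linux entry shaped like the sample job file.

    osKernelVersion isn't in the instances-export output, so pass it in yourself.
    """
    return {
        "name": name,
        "os": "linux",
        "osKernelVersion": kernel_version,
        "osSku": "",
        "attached_only": False,
        "specified_volumes_only": [],
        "shutdown_policy": shutdown_policy,
    }


def split_into_jobs(instances, per_job):
    """Chunk instance entries into job dicts (instances only, no volumes)."""
    if per_job < 1:
        raise ValueError("per_job must be at least 1")
    return [
        {
            "version": "1",
            "incremental": False,
            "instances": instances[i:i + per_job],
            "volumes": [],
        }
        for i in range(0, len(instances), per_job)
    ]


def job_to_json(job):
    """Serialize a job dict; Python booleans become JSON true/false."""
    return json.dumps(job, indent=2)
```

You could then write each returned job to its own file, for example job1.json and job2.json, and specify the relevant file when you start each migration job.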

Specify Attributes of Instances for Migration

The following information is required about instances and block volumes that you want to migrate.

name: Specify the full name of the instances that you want to migrate. Instance names have the following format:

/Compute-<account_id>/<user_email_id>/<user_specified_instance_name>/<autogenerated_instance_name>

You can find the instance names in the Oracle Cloud Infrastructure Compute Classic console.

os: Specify the operating system of the instance. For Linux instances, specify linux, and for Windows instances, specify windows.

osKernelVersion: This value is required for deciding whether an image will be imported in emulated mode or paravirtualized mode. The tool determines the best virtualization mode for an image based on the boot volume's image information and its default OS kernel version. The default virtualization modes are as follows:

- For Linux instances with a kernel version less than 3.4 in the 3.x series, images are imported in emulated mode. Linux instances with a kernel version from 3.4 to 4.x are imported in paravirtualized mode.

- For all Windows instances, the boot image is imported in emulated mode.

- For instances where the guest OS kernel version can't be determined, the boot image is imported in the emulated mode.

This value is a required attribute for Linux instances. For other instances, this value is optional. However, it is recommended that you specify this value to ensure that boot volumes are imported in the appropriate mode if you have:

- VMs created from custom images.

- Bootable volumes restored from snapshots.

- VMs created using Oracle Cloud Infrastructure Compute Classic images, where the guest OS has been updated after launching the instance.

For any instance, if you use the specified_launch_mode attribute, then the virtualization mode specified there is used and the virtualization mode derived from osKernelVersion is ignored.

osSku: For Windows instances, fill in the osSku attribute. Valid values for the osSku attribute are:

- Server 2008 R2 Enterprise
- Server 2008 R2 Standard
- Server 2008 R2 Datacenter
- Server 2012 Standard
- Server 2012 Datacenter
- Server 2012 R2 Standard
- Server 2012 R2 Datacenter
- Server 2016 Standard
- Server 2016 Datacenter

For Linux instances, leave the osSku attribute blank.

shutdown_policy: Use shutdown_policy to specify whether the instances to be migrated should be shut down during migration, and if so, how. Valid values for this attribute are ignore, shutdown, and wait (the default).

- ignore: The tool doesn't wait for the instances to shut down. It proceeds with the migration right away.

  For Windows instances that use RAID or mirrored volumes, don't set the shutdown policy to ignore. If you do, then Windows will fail to recognize the volumes due to consistency checks after migration. In this case, you must specify the RAID or mirror volumes manually. To avoid this issue, select one of the other shutdown policies.

- shutdown: The tool shuts down all instances with this policy specified before proceeding with the migration.

- wait: You must shut down all instances yourself before the migration proceeds. Use the Oracle Cloud Infrastructure Compute Classic console or any other interface to shut down the instances. When any instance in a job has the policy wait, the state of all instances and volumes in that job is set to ready after the attachment and volume information is captured. After all instances to be migrated have been shut down, use the opcmigrate migrate instance job resume command to resume the migration. See Stop and Restart the Migration Service.

Use the shutdown or the wait mode to ensure that all data has been written to the boot volume before the volume is migrated.

Note that instances must not be shut down before you start a migration job. Even if you specify the shutdown policy wait or shutdown, all instances that are part of a migration job must be running when you start the migration job.

specified_launch_mode: This attribute is optional. By default, the launch mode for an instance is determined based on the value of the osKernelVersion attribute. The default virtualization modes are as follows:

- For Linux instances with a kernel version less than 3.4 in the 3.x series, images are imported in emulated mode. Linux instances with a kernel version from 3.4 to 4.x are imported in paravirtualized mode.

- For all Windows instances, the boot image is imported in emulated mode.

- For instances where the guest OS kernel version can't be determined, the boot image is imported in the emulated mode.

Specify the launch mode for the migrated image if you want to override the default launch mode that the tool determines from the value of osKernelVersion. If the default launch mode is appropriate for an instance, you can skip this attribute.

You can specify a value for the specified_launch_mode attribute for instances or for unattached boot volumes. The valid values for this attribute are PARAVIRTUALIZED and EMULATED.

If you specify the mode as PARAVIRTUALIZED for Windows images, ensure that you download and install the required virtualization drivers on the Windows source instances. See Prepare Your Windows Source Instances for Migration.
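The default rules above amount to a small decision procedure. The following Python sketch restates them so you can predict the launch mode for a job-file entry before submitting it; default_launch_mode and effective_launch_mode are hypothetical helpers, not part of the migration tool. The rules in this section name kernel versions only up to 4.x, so the sketch assumes that later kernels are also paravirtualized, as the statement under Validate Your VMs and Block Storage in the Target Environment implies.

```python
import re
from typing import Optional

VALID_LAUNCH_MODES = ("PARAVIRTUALIZED", "EMULATED")


def default_launch_mode(os_name: str, kernel_version: Optional[str]) -> str:
    """Restate the documented defaults (hypothetical helper, not tool code)."""
    # All Windows boot images are imported in emulated mode.
    if os_name.lower() == "windows":
        return "EMULATED"
    # If the kernel version can't be parsed, fall back to emulated mode.
    match = re.match(r"\s*(\d+)\.(\d+)", kernel_version or "")
    if match is None:
        return "EMULATED"
    major, minor = int(match.group(1)), int(match.group(2))
    # Kernels older than 3.4 are emulated; 3.4 and later are paravirtualized
    # (versions after 4.x assumed to follow the same rule).
    return "EMULATED" if (major, minor) < (3, 4) else "PARAVIRTUALIZED"


def effective_launch_mode(entry: dict) -> str:
    """Apply an optional specified_launch_mode override to a job-file entry."""
    override = entry.get("specified_launch_mode")
    if override:
        if override not in VALID_LAUNCH_MODES:
            raise ValueError(f"invalid specified_launch_mode: {override!r}")
        return override
    return default_launch_mode(entry.get("os", ""), entry.get("osKernelVersion"))
```

For example, an entry with "os": "linux" and "osKernelVersion": "4.1.12" resolves to PARAVIRTUALIZED, while any Windows entry without an override resolves to EMULATED.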

Specify Attributes of Attached Boot and Data Volumes for Migration

attached_only: Specify whether you want to skip migrating the boot volume of an instance. Set the attached_only attribute to true to skip migrating the boot volume of an instance; in this case, only attached data volumes are migrated. This is useful if many instances use an identical image and you don't want to migrate identical boot volumes many times over. This is an optional attribute with the default value false, so by default the boot volume of an instance is migrated.

specified_volumes_only: Specify the attached storage volumes that you want to migrate. If you specify a list, only the volumes in the specified_volumes_only list are migrated. This is an optional attribute. If an empty list is specified (the default value), all volumes are migrated.

For example, consider an instance with a boot volume and three data volumes.

- To migrate all the block volumes, use:

  { "name": "MULTIPART_INSTANCE_NAME_HERE", "os": "linux", "osKernelVersion": "4.1.12", "osSku": "", "attached_only": "false", "specified_volumes_only": [] }

- To migrate data volumes 1 and 2, but not 3, use:

  { "name": "MULTIPART_INSTANCE_NAME_HERE", "os": "linux", "osKernelVersion": "4.1.12", "osSku": "", "attached_only": "false", "specified_volumes_only": ["/Compute-590693805/jack.jones@example.com/vol1", "/Compute-590693805/jack.jones@example.com/vol2"] }

  Here the boot volume isn't explicitly included in the list of specified_volumes_only, so it won't be migrated, even though attached_only is set to false.


When both attached_only and specified_volumes_only are used, both filters are applied. This means that only volumes satisfying both conditions are migrated. For example, consider an instance with a boot volume and three data volumes.

- To migrate the boot volume and data volumes 1 and 2, but not 3, use:

  { "name": "MULTIPART_INSTANCE_NAME_HERE", "os": "linux", "osKernelVersion": "4.1.12", "osSku": "", "attached_only": "false", "specified_volumes_only": ["/Compute-590693805/jack.jones@example.com/boot_vol", "/Compute-590693805/jack.jones@example.com/vol1", "/Compute-590693805/jack.jones@example.com/vol2"] }

- To migrate data volumes 1 and 2, but not 3 and not the boot volume, use:

  { "name": "MULTIPART_INSTANCE_NAME_HERE", "os": "linux", "osKernelVersion": "4.1.12", "osSku": "", "attached_only": "false", "specified_volumes_only": ["/Compute-590693805/jack.jones@example.com/vol1", "/Compute-590693805/jack.jones@example.com/vol2"] }

  Here the boot volume won't be migrated because it isn't included in the list of specified_volumes_only.

- To migrate all the data volumes but not the boot volume, use:

  { "name": "MULTIPART_INSTANCE_NAME_HERE", "os": "linux", "osKernelVersion": "4.1.12", "osSku": "", "attached_only": "true", "specified_volumes_only": [] }

  Here the boot volume won't be migrated because attached_only is set to true. In this case, regardless of the value of the specified_volumes_only list, the boot volume won't be migrated.
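Because both filters are applied, the migrated set is the boot volume (unless attached_only is true) plus the attached data volumes, narrowed to specified_volumes_only when that list isn't empty. A minimal Python sketch of that rule follows; volumes_to_migrate is a hypothetical helper, and it assumes volumes are identified by their complete, multipart names and that attached_only may arrive either as a boolean or as the quoted string used in the examples.

```python
from typing import Iterable, List, Optional


def _as_bool(value) -> bool:
    # The job-file examples quote the value ("false"/"true"), so accept both
    # JSON booleans and their string spellings.
    return value is True or str(value).strip().lower() == "true"


def volumes_to_migrate(
    boot_volume: str,
    data_volumes: Iterable[str],
    attached_only=False,
    specified_volumes_only: Optional[List[str]] = None,
) -> List[str]:
    """Apply the attached_only and specified_volumes_only filters together."""
    candidates = list(data_volumes)
    # attached_only=true drops the boot volume, whatever the list says.
    if not _as_bool(attached_only):
        candidates.insert(0, boot_volume)
    # An empty (default) list means "no filter"; otherwise keep listed volumes only.
    if specified_volumes_only:
        wanted = set(specified_volumes_only)
        candidates = [v for v in candidates if v in wanted]
    return candidates
```

For the instance in these examples, passing attached_only as "true" with an empty list returns the three data volumes only, and listing boot_vol, vol1, and vol2 with attached_only as "false" returns exactly those three volumes.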


Specify Attributes of Unattached Boot and Data Volumes for Migration

volumes: Specify the unattached storage volumes that you want to migrate. You can also use this list to specify storage volumes restored from colocated snapshots. Note that, while you can migrate remote snapshots by restoring those snapshots as a block volume in Oracle Cloud Infrastructure, you can't directly migrate colocated snapshots. To migrate a colocated snapshot, first restore it to a volume in your Oracle Cloud Infrastructure Compute Classic account, then migrate that volume by including it in the list of unattached volumes to be migrated. Remember that it takes longer to migrate a volume restored from a colocated snapshot than to migrate the original volume.

For unattached boot volumes, specify values for os, osKernelVersion, and osSku, if required.
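If you generate job files from a script rather than by hand, a sketch like the following could assemble the attributes described in this section. It is an illustration under assumptions: instance_entry and build_job are hypothetical names, the keys are copied from the examples above, the shutdown policy attribute and other optional keys are left out, and entries of the volumes list are passed through unchanged because their format isn't shown here. The incremental flag corresponds to the attribute described under Migrate Data Incrementally to Reduce Down Time.

```python
import json
from typing import Dict, List, Optional


def instance_entry(name: str, os_name: str = "linux", kernel_version: str = "",
                   os_sku: str = "", attached_only: bool = False,
                   specified_volumes_only: Optional[List[str]] = None) -> Dict:
    """Build one entry of the "instances" list (keys mirror the examples)."""
    return {
        "name": name,  # complete, multipart instance name
        "os": os_name,
        "osKernelVersion": kernel_version,
        "osSku": os_sku,
        # The examples quote this value, so emit "true"/"false" strings.
        "attached_only": "true" if attached_only else "false",
        "specified_volumes_only": list(specified_volumes_only or []),
    }


def build_job(instances: List[Dict], volumes: Optional[List] = None,
              incremental: bool = False) -> str:
    """Serialize a job file; "incremental" is written only when enabled."""
    job: Dict = {"version": "1"}
    if incremental:
        # Applies to attached volumes only, not to the "volumes" section.
        job["incremental"] = True
    job["instances"] = instances
    if volumes:
        # Format of unattached-volume entries isn't shown here; passed as-is.
        job["volumes"] = volumes
    return json.dumps(job, indent=2)
```

Writing the file with json.dumps rather than by string concatenation keeps quoting of the multipart names correct.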

Migrate Data Incrementally to Reduce Down Time

You can use the attribute "incremental": "true" in a job file to specify that data on all attached storage volumes in a migration job should be copied incrementally.

This attribute applies to all storage volumes attached to any instance in a job file. Incremental migration doesn't apply to unattached storage volumes defined in the "volumes" section of the job file.

Note:

This attribute is supported in recent versions of the Oracle Cloud Infrastructure Classic Migration Tools image. If you created your Control-S instance before May 2019, you won't be able to perform incremental migrations. Note also that this feature isn't supported in all sites. If you need to perform an incremental migration in a site where this feature isn't currently supported, contact Oracle Support.

Specifying that data should be copied incrementally reduces the downtime of source instances. With this option specified, the migration process is completed in two phases.

- In the first phase, Oracle Cloud Infrastructure Classic VM and Block Storage Migration Tool generates base snapshots of all the specified boot and data volumes while instances are still running.

- The tool uses these base snapshots to perform the first phase of the migration.

- At the end of the first phase, the corresponding boot and data volumes are available in the target environment with the base data. At this stage, the tool displays the job status as ready. Whenever convenient, you can proceed to the second phase of migration.

- In the second phase, source VMs must be shut down according to the specified shutdown policy.

  - With shutdown policy ignore, you must shut down the source VMs before resuming the migration job. Note that for jobs where incremental isn't explicitly specified as true, the entire migration procedure completes without shutting down the source VM. However, when an incremental migration is performed, you must shut down source instances even if the specified shutdown policy is ignore, and you must resume the migration after the VMs are shut down.

  - With shutdown policy wait, you must shut down the source VMs before resuming the migration job. The tool continues to wait until all source VMs with this policy have been shut down. If any source VMs with this policy specified are still running when you resume the migration job, the tool returns an error.

  - With shutdown policy shutdown, you can resume the migration job while VMs with this policy are still running. The tool shuts down the running VMs and resumes the migration job. If you shut down VMs with this policy specified after the first phase completes and before you resume the migration, the tool simply verifies that the VMs are shut down and resumes the migration job.

- Resume the migration job by using the opcmigrate migrate instance job resume <job_name> command.

- A second snapshot is created for each of the boot and data volumes being migrated.

- Data that has changed between the base snapshot and the second snapshot is then copied over to the target volumes. As this incremental data would typically be much smaller in size than the base data, this process can be completed much faster than the first phase of the migration.
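Conceptually, the second phase copies only the blocks that differ between the base snapshot and the second snapshot. The following Python sketch illustrates that idea on in-memory byte strings with a deliberately tiny block size; it is not how the migration tool compares snapshots, and changed_blocks and apply_delta are hypothetical names. It assumes volumes only keep or grow in size between snapshots.

```python
from typing import Dict, List

BLOCK_SIZE = 4  # Tiny, illustrative block size; real volumes use far larger blocks.


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> List[bytes]:
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def changed_blocks(base: bytes, current: bytes,
                   block_size: int = BLOCK_SIZE) -> Dict[int, bytes]:
    """Return {block_index: new_bytes} for blocks that differ from the base snapshot."""
    old = split_blocks(base, block_size)
    new = split_blocks(current, block_size)
    delta = {}
    for index, block in enumerate(new):
        # New trailing blocks and modified blocks both count as changed.
        if index >= len(old) or old[index] != block:
            delta[index] = block
    return delta


def apply_delta(target: bytes, delta: Dict[int, bytes],
                block_size: int = BLOCK_SIZE) -> bytes:
    """Overwrite changed blocks on a target that already holds the base data."""
    blocks = split_blocks(target, block_size)
    for index, block in sorted(delta.items()):
        if index < len(blocks):
            blocks[index] = block
        else:
            blocks.append(block)  # indices past the end arrive in order
    return b"".join(blocks)
```

With a base of b"AAAABBBBCCCCDDDD" and a second snapshot that changes only the second block, the delta holds one 4-byte block instead of 16 bytes, which is why the second phase usually finishes much faster than the first.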

When an incremental migration is performed, the second phase of the migration uses the associated Oracle Cloud Infrastructure Object Storage Classic account to copy data to the target volumes. You must specify the required credentials and access point in the secret.yml file.

Copying data incrementally is disabled by default. To enable it, specify it in the job file:

{

"version": "1",

"incremental": true,

"instances": [...

When specified, this approach is applied to all attached boot and data volumes in the migration job.

Caution:

When you perform an incremental migration, migrated volumes are available in the target environment at the end of the first phase. However, don't attempt to attach and use the migrated volumes until the entire migration process is completed. If you access data on the migrated volumes after the first phase of migration is completed and before the second phase of migration has started, you could corrupt the data in the migrated volume.

Prepare Your Linux Source Instances for Migration

You need to configure your source instances so that they can be re-initialized correctly in the target environment.

You can configure your instances either by using tools provided on the Control-S instance or manually using custom tooling or fleet managers. The following OS versions can be configured using the tools on Control-S:

- Oracle Linux 7.2

- Oracle Linux 6.8

- Ubuntu Server 18

For instances running other versions of Linux, the tools available on Control-S can be used to configure the instances if all dependencies, such as Python, lsblk, and the iSCSI client utilities, are satisfied.

Instances running Oracle Linux 5.11 can't be configured using these tools. However, you can still proceed with migrating these instances using this tool. After migration is completed, launch the instances in Oracle Cloud Infrastructure and attach the volumes to each instance manually. Then run the iSCSI commands to mount the volumes on each instance and update the mount point information on the instance.

To prepare your Linux source instances, start by completing the following steps:

- Ensure that you have SSH access to each Linux source instance.

- On each source instance, install the following packages by using yum or apt:

  - iscsi-initiator-utils: This is required for iscsiadm.
  - cronie
  - util-linux-ng on Oracle Linux 5.x or Oracle Linux 6.x instances, and util-linux on Oracle Linux 7.x instances: This is required for lsblk.

- Verify that all secondary attached volume mounts have _netdev and nofail specified in /etc/fstab, so that when the instance is launched on Oracle Cloud Infrastructure it can start before the required volumes are attached.

  Ensure that you don't specify _netdev and nofail in /etc/fstab for file systems that are stored in bootable storage volumes. Specify the _netdev and nofail options in /etc/fstab only for the non-bootable storage volume entries, such as /dev/xvdb and /dev/xvdc. Don't specify the _netdev and nofail options for any volume groups or other non-/dev/ entries.

- Ensure that, on each source instance, NOPASSWD is set for the user that you will connect to the instance as. If you will connect to the instance as the opc user, then verify the following entry in the /etc/sudoers file: opc ALL=(ALL) NOPASSWD: ALL

- Verify that the 70-persistent-net.rules file doesn't exist in the /etc/udev/rules.d directory. The network rules in this file can cause network devices to be renamed after migration due to changes in the MAC address of the VM, which could make the network interface unreachable. To prevent such network issues, remove or rename this file, if it exists, before you migrate the instance.

- Ensure that all kernel updates have been completed successfully and that the instance has been rebooted after the most recent kernel update. Check that the default grub boot selection matches both the latest kernel version found under /boot and the currently running kernel version.
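The /etc/fstab rules above can be checked before migration. A minimal Python sketch, assuming you pass in the devices of the non-bootable data volumes yourself (for example /dev/xvdb and /dev/xvdc); check_fstab is a hypothetical helper, not one of the tools on Control-S.

```python
from typing import Iterable, List

REQUIRED_OPTIONS = {"_netdev", "nofail"}


def check_fstab(fstab_text: str, secondary_devices: Iterable[str]) -> List[str]:
    """Report fstab entries that break the _netdev/nofail rules.

    secondary_devices lists the non-bootable data volume devices; every
    other entry (boot file systems, volume groups, UUID= entries and so on)
    must not carry these options.
    """
    secondary = set(secondary_devices)
    problems = []
    for line_no, raw in enumerate(fstab_text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        fields = line.split()
        if len(fields) < 4:
            problems.append(f"line {line_no}: malformed entry: {raw.strip()}")
            continue
        device, mount_point = fields[0], fields[1]
        options = set(fields[3].split(","))
        if device in secondary:
            missing = REQUIRED_OPTIONS - options
            if missing:
                problems.append(f"line {line_no}: {device} on {mount_point} "
                                f"is missing {','.join(sorted(missing))}")
        else:
            present = REQUIRED_OPTIONS & options
            if present:
                problems.append(f"line {line_no}: {device} on {mount_point} "
                                f"should not use {','.join(sorted(present))}")
    return problems
```

On a source instance, you might call check_fstab(open("/etc/fstab").read(), ["/dev/xvdb", "/dev/xvdc"]) and fix each reported line. Passing the device list explicitly avoids guessing which entries belong to boot volumes or volume groups.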

Next, to use the tools on the Control-S instance to complete the process, see Prepare Your Linux Source Instances Using Tools on Control-S.

To complete the process using the manual steps, see Prepare Your Linux Source Instances Manually.

Prepare Your Windows Source Instances for Migration

Use the script provided in Control-S to set up your Windows instances for migration.

The following Windows versions can be configured for migration:

- Server 2008 R2 Enterprise

- Server 2008 R2 Standard

- Server 2008 R2 Datacenter

- Server 2012 Standard

- Server 2012 Datacenter

- Server 2012 R2 Standard

- Server 2012 R2 Datacenter

- Server 2016 Standard

- Server 2016 Datacenter

For each Windows instance that you want to migrate, do the following.

Launch the Migration Controller Instance (Control-T) in the Target Environment

Use the setup script provided in Control-S to launch the migration controller in the target environment.

The command to set up Control-T creates the ocic-oci-mig bucket in Oracle Cloud Infrastructure Object Storage, with visibility set to public. If this bucket already exists, ensure that its visibility is set to public before you run the command to set up Control-T.
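If the bucket already exists, you can check and, if needed, change its visibility with the OCI Python SDK. This sketch assumes the SDK is installed and configured with a config file profile (DEFAULT here) for a user allowed to update the bucket; the bucket name is taken from the tool's error message under Troubleshooting, and ensure_bucket_public is a hypothetical helper. In the Object Storage API, a private bucket reports NoPublicAccess, while ObjectRead and ObjectReadWithoutList are public settings.

```python
PUBLIC_ACCESS_TYPES = {"ObjectRead", "ObjectReadWithoutList"}
BUCKET_NAME = "ocic-oci-mig"  # as shown in the tool's error message


def is_public(public_access_type: str) -> bool:
    """True for the public access types; a private bucket is NoPublicAccess."""
    return public_access_type in PUBLIC_ACCESS_TYPES


def ensure_bucket_public(bucket_name: str = BUCKET_NAME, profile: str = "DEFAULT") -> str:
    """Set an existing bucket to public read if needed; return its access type."""
    import oci  # imported lazily so is_public works without the SDK installed

    config = oci.config.from_file(profile_name=profile)
    client = oci.object_storage.ObjectStorageClient(config)
    namespace = client.get_namespace().data
    bucket = client.get_bucket(namespace, bucket_name).data
    if is_public(bucket.public_access_type):
        return bucket.public_access_type
    details = oci.object_storage.models.UpdateBucketDetails(public_access_type="ObjectRead")
    updated = client.update_bucket(namespace, bucket_name, details).data
    return updated.public_access_type
```

Run ensure_bucket_public() from any host with SDK credentials for the target tenancy before you set up Control-T; if the bucket doesn't exist yet, the setup command creates it.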

Migrate the Specified VMs and Block Volumes

When you've prepared the source instances and completed configuring the migration controller instances in the source and target environments, you're ready to start the migration.

Start the Migration

Use the migration script on the Control-S instance to start the migration.

Monitor the Migration

As the migration proceeds, you can see the volumes being created and listed in the Oracle Cloud Infrastructure Console.

Stop and Restart the Migration Service

To interrupt a migration job, you can stop and then restart the migration service. You can then resume interrupted jobs.

Resume a Migration Job

You might need to resume a migration job in several scenarios.

- If a migration job fails, you can resume the migration job. In this case, if boot volumes have already been uploaded, the job resumes from the image import stage. For all other cases, the job resumes from the beginning.

- If you used the default shutdown policy wait for any instance in a migration job, then the job won't begin until you have shut down all instances that have this policy specified. After you have shut down the relevant instances, you must resume the migration job.

- If you have specified an incremental migration in the job file, then after the first phase of the migration is completed, the tool waits for source instances to be shut down before proceeding with the second phase of the migration. In this case, when you are ready to proceed with the second phase, you must resume the migration job. The source instances must be shut down as per the specified shutdown policy. For instances with a shutdown policy of ignore or wait, you must shut down the instances before you resume the migration. For instances with a shutdown policy of shutdown, the tool shuts down the instances after you resume the migration job.

Note that, when an incremental migration is specified, you must resume the migration job even if the shutdown policy specified was ignore or shutdown. However, if the incremental attribute isn't explicitly specified as true in the job file, then an incremental migration isn't performed. In this case, if the shutdown policy specified was ignore or shutdown, then you don't need to resume the migration job. The job automatically proceeds to completion.

- To resume a migration job, log in to Control-S and run:

opcmigrate migrate instance job resume <job_name>
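The rules for when a job pauses, and who shuts down the source instances, can be summarized in a small function. The following Python sketch restates the scenarios above; resume_plan, job_needs_resume, and ResumePlan are hypothetical names, not part of the tool.

```python
from dataclasses import dataclass
from typing import Iterable

POLICIES = ("ignore", "shutdown", "wait")


@dataclass(frozen=True)
class ResumePlan:
    must_resume: bool     # the job pauses until you run the resume command
    you_shut_down: bool   # you must shut the source instance down yourself first


def resume_plan(policy: str, incremental: bool) -> ResumePlan:
    """Summarize the documented resume rules for one instance."""
    if policy not in POLICIES:
        raise ValueError(f"unknown shutdown policy: {policy!r}")
    if incremental:
        # Phase two always waits for a resume; only "shutdown" lets the tool
        # stop the instance for you (after you resume).
        return ResumePlan(must_resume=True, you_shut_down=(policy != "shutdown"))
    if policy == "wait":
        return ResumePlan(must_resume=True, you_shut_down=True)
    # "ignore" never shuts down; "shutdown" is handled by the tool without a pause.
    return ResumePlan(must_resume=False, you_shut_down=False)


def job_needs_resume(policies: Iterable[str], incremental: bool) -> bool:
    """A single "wait" instance is enough to pause the whole job."""
    return incremental or any(resume_plan(p, incremental).must_resume for p in policies)
```

For a non-incremental job whose instances use ignore and shutdown, job_needs_resume returns False, matching the note that such a job proceeds to completion on its own.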

You can set the --do-not-finalize option to retain information about snapshots for incremental migrations so that you can perform another incremental migration later. This lets you perform multiple incremental migrations between the base migration and the final migration, which reduces downtime compared with the two-phase migration approach. By default, the --do-not-finalize option is not set.

You can perform additional incremental migrations to reduce the down time.

For the base migration phase, or when your migration job has the shutdown policy wait, don't use the --do-not-finalize option; run the resume command without it.

In the second or subsequent phase of incremental migration, you can use the --do-not-finalize option.

Consider the following example to understand the scenarios in which you can use the --do-not-finalize option. John Doe starts a migration and sees that the base migration took 24 hours to complete. Based on this, John Doe estimates that an incremental migration will take 45 minutes. If a downtime of 45 minutes isn't acceptable, then John Doe can use the --do-not-finalize option while running the resume command in phase 2. After phase 2 of the migration is complete, John Doe estimates that another incremental migration will take 5 minutes and finds a downtime of 5 minutes acceptable. John Doe then issues the resume command without the --do-not-finalize option to finalize the migration. In this last phase, John Doe has to accept the downtime and shut down the instances.
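The decision in this example can be sketched as a function that builds the resume command. This is a hypothetical helper: next_pass_minutes is your own estimate of the next incremental pass, acceptable_minutes is a threshold you choose, and placing --do-not-finalize after the job name is an assumption about the command's argument order.

```python
from typing import List


def resume_args(job_name: str, next_pass_minutes: float, acceptable_minutes: float,
                base_phase: bool = False, uses_wait_policy: bool = False) -> List[str]:
    """Decide whether to add --do-not-finalize to the resume command."""
    args = ["opcmigrate", "migrate", "instance", "job", "resume", job_name]
    # Never use the option for the base phase or for jobs with the wait policy.
    if base_phase or uses_wait_policy:
        return args
    # Downtime still too long: run another incremental pass, keep snapshot data.
    if next_pass_minutes > acceptable_minutes:
        args.append("--do-not-finalize")
    return args
```

With an assumed 10-minute threshold, John Doe's phase 2 call resume_args("job1", 45, 10) adds --do-not-finalize, and the next call resume_args("job1", 5, 10) omits it to finalize. On Control-S, the list could be passed to subprocess.run(args, check=True).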

Abort a Migration Job

You can abort a job which is in the pending or running state, but you can't abort a job which is in the ready state.

Depending on your scenario, specify one of the following values for the scope option.

nocleanup: Use this value when you don't want the artifacts created by the migration to be cleaned up. When you resume a migration job after aborting it with this scope, the migration is resumed from the point where the abort command was issued.

partial: Use this value when you want to retain all artifacts that were successfully migrated but want the resources for partially migrated artifacts to be cleaned up. When you resume a migration job after aborting it with this scope, the tool retries migrating the artifacts that were not previously migrated successfully.

complete: Use this value when you want all the artifacts created by the migration job to be destroyed, including the artifacts that were migrated successfully. When you resume a migration job after aborting it with this scope, the tool restarts the entire migration from the beginning, because all the resources that were previously migrated are destroyed.

After starting a migration job, you might need to abort it in several scenarios.

- If you have accidentally started a migration job, you might want to abort the job completely. In this case, set the scope value as complete.

- If you want to change some configurations in the source VM or install drivers after starting a migration job, you can abort the job partially or completely, and then resume the migration after making the required changes. In this case, set the scope value as partial or complete, depending on whether you want to abort the job partially or completely.

- If you notice that the migration is proceeding slowly due to network issues, you can pause the migration and resume the job after fixing the network issues. In this case, set the scope value as nocleanup.

Let's consider the following example to further understand the differences between the scope values. In this example, the migration job consists of migrating three volumes: vol_1, vol_2, and vol_3. When you issue the abort command for this migration job, let's assume that the migration of vol_1 has completed successfully, migration of vol_2 has started and is in progress, and migration of vol_3 has not started yet and is enqueued for migration.

When you specify nocleanup as the scope, the tool stops the migration of the volumes. None of the artifacts created by the migration are cleaned up.

When you specify partial as the scope, the tool stops the migration of the volumes. Any volumes that are in progress have their corresponding temporary resources cleaned up, but any volumes that have successfully completed migration are left untouched. In this example, the temporary resources corresponding to vol_2 are cleaned up, but there is no impact on the volume created in Oracle Cloud Infrastructure corresponding to vol_1's migration. The temporary resources that are cleaned up include snapshots taken on the source volume, clones, any OCI custom images and volumes created in Oracle Cloud Infrastructure, and related attachments to the Control-S and Control-T instances. When you resume this migration job, the tool creates all the required resources to migrate vol_2 and then vol_3, because the temporary resources were cleaned up during the abort.

When you specify complete as the scope, the tool stops the migration of the volumes, and all the artifacts created by the migration job are destroyed, including any volumes that were successfully migrated to the target environment. In this example, the Oracle Cloud Infrastructure volume corresponding to vol_1's migration is deleted along with the temporary artifacts created for vol_2's migration.
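The vol_1, vol_2, vol_3 example can be restated as a function of each volume's state and the chosen scope. A minimal Python sketch with hypothetical names; it models only the outcomes described above and doesn't call the tool.

```python
from typing import Dict, List

SCOPES = ("nocleanup", "partial", "complete")


def abort_outcome(states: Dict[str, str], scope: str) -> Dict[str, List[str]]:
    """states maps volume name -> "completed", "in_progress", or "pending"."""
    if scope not in SCOPES:
        raise ValueError(f"unknown scope: {scope!r}")
    completed = [v for v, s in states.items() if s == "completed"]
    in_progress = [v for v, s in states.items() if s == "in_progress"]
    unfinished = [v for v, s in states.items() if s != "completed"]
    if scope == "nocleanup":
        # Nothing is removed; a resume continues where the abort happened.
        cleaned, still_to_migrate = [], unfinished
    elif scope == "partial":
        # Only temporary resources of in-progress volumes are removed.
        cleaned, still_to_migrate = in_progress, unfinished
    else:
        # Everything, including successfully migrated volumes, is destroyed.
        cleaned, still_to_migrate = completed + in_progress, list(states)
    return {
        "cleaned_up": cleaned,
        "still_to_migrate": still_to_migrate,
        "kept_in_target": [v for v in completed if v not in cleaned],
    }
```

For the example above, the partial scope cleans up only vol_2, keeps vol_1 in the target, and leaves vol_2 and vol_3 to migrate when the job is resumed.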

To resume a migration job after aborting it, see Resume a Migration Job.

Delete a Migration Job

If a job has completed successfully or if it has gone into an error state and you want to stop it, you can delete the job.

Launch VMs in the Target Environment

When a migration job completes, the boot volumes that were migrated in that job are available as boot volumes in your tenancy and the data volumes that were migrated are listed on the block volumes page. Next, use the boot volumes to launch compute instances in your Oracle Cloud Infrastructure tenancy.

Launch your VMs by using the Oracle Cloud Infrastructure Console or any other interface. Use the migrated boot volumes to launch the required compute instances. When your instances are ready, attach the appropriate data volumes to each instance.

Alternatively, you can complete the following steps to launch your instances using the boot volumes and attach the appropriate data volumes to each instance using Terraform.

Attach and Mount Block Storage on Compute Instances in the Target Environment

If you use the Terraform configuration generated by opcmigrate generate, then when you launch your compute instances, the appropriate storage volumes are automatically attached to each instance.

For Linux instances, after the migrated storage volumes have been successfully attached to each instance, the storage volumes must be mounted on each instance. For each migrated Linux instance:

Validate the Target Environment

After you've completed migration of your VMs and block storage, validate the setup of your target environment. Ensure that all VMs are running and can be accessed, that all storage volumes are attached and mounted, and that all network and firewall rules have been appropriately implemented.

Validate Your VMs and Block Storage in the Target Environment

After you have launched each of your migrated VMs, log in to each VM to ensure that you have access to the system and to verify that all the required block volumes are attached and mounted as expected.

Verify that your instances are launched in the emulated mode or the paravirtualized mode, as expected. Linux instances with a kernel version less than 3.4 are imported in emulated mode. Linux instances with a higher kernel version are imported in paravirtualized mode. If the guest OS kernel version couldn't be determined, the image is imported in emulated mode.

Validate Your Windows Licenses in the Target Environment

After launching your Windows VMs, check your Windows license.

Validate the Network Setup

When your instances are running, verify that network access to each instance is both permitted and restricted as intended.

Check each of the following, as applicable:

- VCNs and subnets have been created corresponding to the IP networks and the shared network in your source environment. Remember that the mapping of source environment networking to the target environment depends on whether you adopted the network topology mapping strategy or the security context mapping strategy.

- Instances are launched in the appropriate subnet.

- Appropriate security lists are applied to each subnet and appropriate security rules are created in each security list.

- Instances are accessible over the public Internet, where required.

- Instances aren't accessible over the public Internet, where such access isn't required. If you created all subnets as public subnets, ensure that instances in those subnets that should not be accessed over the public Internet don't have a public IP address.

- Instances in different subnets in the same VCN can communicate with each other as expected.

- Instances in different VCNs can't communicate with each other unless such communication has been explicitly enabled.

- If you used network topology mapping to set up your network, ensure that appropriate firewall rules are in place on instances to restrict network access, if required. The network topology mapping strategy might in some cases enable traffic that was restricted in the source environment.

- If you had IP networks connected by an IP network exchange in the source environment, ensure that there is connectivity between the corresponding VCNs and subnets in the target environment. If the IP networks were migrated to separate VCNs, ensure that local peering gateways (LPGs) have been set up to enable connectivity across those VCNs.

- If you migrated your FastConnect connection or if you set up a VPN connection, validate that those connections are working as expected.

- If you connected your Oracle Cloud Infrastructure Compute Classic network with your Oracle Cloud Infrastructure network, ensure that that connection is working as expected.

Troubleshooting

Here are a few tips for dealing with errors that might occur while installing and using Oracle Cloud Infrastructure Classic VM and Block Storage Migration Tool.

- Setting up Control-S fails with one of the following errors:

  secret.yml has insecure file permissions; please ensure they are correct by running `chmod 600 ansible/secret.yml`

  This error indicates that permissions on the file /home/opc/ansible/secret.yml aren't restricted. The secret.yml file contains sensitive information about your account. Ensure that you set the permissions appropriately using chmod 600.

  fatal: [127.0.0.1]: FAILED! => {"changed": false, "msg": "AnsibleUndefinedVariable: 'opc_profile_endpoint' is undefined"}

  This error indicates that there is an issue with the information provided in the secret.yml file. In this example, the API endpoint for Oracle Cloud Infrastructure Compute Classic isn't provided. Errors in other fields in this file are indicated similarly. Fix the error and then run the command to set up Control-S again. Whenever you make any changes in secret.yml, you have to run the command to set up Control-S again to ensure that those changes are propagated.

  INFO The control-s id is b'401 Unauthorized\n'

  This error indicates that the tool is unable to access the metadata service on Control-S. Reboot the Control-S instance and try again.

- Setting up Control-T fails with one of the following errors:

  The required information to complete authentication was not provided.

  This error indicates that there is an issue with the credentials for the Oracle Cloud Infrastructure tenancy. This information is usually provided in the secret.yml file. Check the OCIDs entered in that file and ensure that they match the OCIDs that you copied from the Oracle Cloud Infrastructure Console.

  oci.exceptions.ServiceError: {'opc-request-id': 'D4AFD39...','code': 'NotAuthorizedOrNotFound', 'message': 'subnet ocid1.subnet.oc1.phx.aaaaa... not found', 'status': 404}

  This error indicates that the subnet OCID provided in the secret.yml file is incorrect. Check the subnet OCID entered in that file and ensure that it is the appropriate OCID for the subnet that you want to use.

  oci.exceptions.ServiceError: {'opc-request-id': '3375100...', 'code': 'InvalidParameter', 'message': "Parameter 'availabilityDomain' does not match. VNIC has 'phx-ad-3' while the subnet has 'phx-ad-2'", 'status': 400}

  This error indicates that the subnet information provided in secret.yml conflicts with the availability domain, which is also specified in that file. From the Oracle Cloud Infrastructure Console, find the OCID for the subnet in the appropriate availability domain.

  no such identity: /home/opc/.ssh/private_key: No such file or directory

  This error indicates that the SSH key required to connect to the Linux source instance wasn't found in the specified path. Verify that the private key is available in the path specified in the hosts.yml file on Control-S.

- A migration job could not be submitted due to one of the following errors:

  Running into connection issues: HTTPConnectionPool(host='127.0.0.1', port=8000): Max retries exceeded with url: /api/v1/jobs (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fb4c08b7668>: Failed to establish a new connection: [Errno 111] Connection refused',))

  This error indicates issues in connecting with your Oracle Cloud Infrastructure Compute Classic account. Check the run_migration log file for more information about the issue. For example, the log file might contain logs similar to the following:

  File "/home/opc/oci-python-sdk/lib/python3.6/site-packages/mig/run_migration.py", line 135, in run_migration_svc_internal ocic_compute_client.authenticate() File "/home/opc/oci-python-sdk/lib/python3.6/site-packages/mig/retry_utils.py", line 52, in f_retry return f(*args, **kwargs) File "/home/opc/oci-python-sdk/lib/python3.6/site-packages/mig/api/ocic_compute_client.py", line 142, in wrapper _raise_exception_for_http_error(e) File "/home/opc/oci-python-sdk/lib/python3.6/site-packages/mig/api/ocic_compute_client.py", line 131, in _raise_exception_for_http_error raise clazz(message) mig.api.ocic_compute_client.ApiUnauthorizedError: Incorrect username or password. Resource: authenticate.

  This indicates an error in the user name or password specified in the secret.yml file. This information might have been changed inadvertently while editing the file after setting up Control-S.

  ERROR Migration terminated due to unexpected error. Traceback (most recent call last): File "/home/opc/oci-python-sdk/lib/python3.6/site-packages/mig/run_migration.py", line 97, in run_migration_as_daemon return run_migration_svc_internal() File "/home/opc/oci-python-sdk/lib/python3.6/site-packages/mig/run_migration.py", line 145, in run_migration_svc_internal raise Exception(msg) Exception: Cannot obtain instance with id {} in container {}. Double check your opc_profile_endpoint.

  This error indicates that there is an issue with the API endpoint specified in the secret.yml file. This can happen if you updated your secret.yml file to set up migration from another Oracle Cloud Infrastructure Compute Classic site or region after you launched your Control-S instance. Make sure that the secret.yml file has the API endpoint for the site where your Control-S instance is created.

  mig.api.ocic_compute_client.ApiForbiddenError: <!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN"> <html><head> <title>403 Forbidden</title> </head><body> <h1>Forbidden</h1> <p>You don't have permission to access /instanceIntHubAPIPre-1/a6122673-177c-475a-8869-e6519ab2a9a3 on this server.</p> </body></html>

  This error indicates that the complete, multipart instance name hasn't been provided in the list of instances to be migrated in secret.yml or in the job file. The complete, multipart instance name has the format /Compute-590693805/jack.jones@example.com/<instance_name>

- A migration job that was successfully submitted fails to complete with one of the following errors:

  Reached the limit of number of snapshots (5) per volume for <storage_volume_name>

  This error indicates that five colocated snapshots already exist for the specified storage volume, so the tool is unable to create a colocated snapshot for migration. Delete one of the existing colocated snapshots and retry the job.

  Cannot initiate the import image for {}, as the max images limit is exceeded, please delete unused ones and retry the migration job.

  This error indicates that you have already reached the limit for the number of custom images you can have in Oracle Cloud Infrastructure. Delete some of the existing custom images or request an increase in the limit.

  Failed to execute remote cmd: sudo iscsiadm -m discovery -t sendtargets -p <ip_address:port> Err: b'Permission denied (publickey,gssapi-keyex,gssapi-with-mic).\\r\\n'

  This error indicates that the SSH key generated on Control-S to connect with Control-T doesn't match the public key on Control-T. This can happen if you set up Control-T once, then set it up a second time and the second setup fails. In this case, the tool tries to use the SSH key generated for the second Control-T instance with the Control-T instance that was created first. Ensure that Control-T is set up successfully before you start a migration job.

  Not enough local space for bootvolume migration, volume size...

  This error indicates that the local file system containing /images on Control-S isn't large enough to convert the qcow2 images. Attach a larger data volume to the Control-S instance and mount it on /images. Make sure the opc user has write access on /images.

  Either the bucket named ‘ocic-oci-mig’ does not exist in the namespace <compartment_name> or you are not authorized to access it

  This error indicates that the Oracle Cloud Infrastructure user whose credentials are provided in the secret.yml file doesn't have permission to create objects in the ocic-oci-mig bucket. Ensure that this user has write permission on this bucket.
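When you work through Control-S logs, the messages above can be matched to their fixes automatically. The following Python sketch pairs substrings copied from the documented messages with the corresponding fix, and also checks that secret.yml has no group or other permission bits, which chmod 600 guarantees. suggest_fix and secret_yml_is_private are hypothetical helpers, and the short fix texts paraphrase this section.

```python
import os
import stat
from typing import Optional

# (substring of a documented message, fix described in this section)
KNOWN_ERRORS = [
    ("has insecure file permissions", "Restrict secret.yml: chmod 600 ansible/secret.yml"),
    ("AnsibleUndefinedVariable", "Fill in the missing secret.yml value, then set up Control-S again"),
    ("401 Unauthorized", "Control-S can't reach its metadata service; reboot Control-S and retry"),
    ("required information to complete authentication", "Check the OCI OCIDs in secret.yml"),
    ("NotAuthorizedOrNotFound", "Check the subnet OCID in secret.yml"),
    ("availabilityDomain' does not match", "Use a subnet in the availability domain set in secret.yml"),
    ("no such identity", "Put the SSH private key at the path given in hosts.yml"),
    ("Failed to establish a new connection", "Check the run_migration log for the underlying cause"),
    ("Incorrect username or password", "Fix the Compute Classic user name or password in secret.yml"),
    ("Double check your opc_profile_endpoint", "Use the API endpoint of the site where Control-S runs"),
    ("403 Forbidden", "Use the complete, multipart instance name"),
    ("Reached the limit of number of snapshots", "Delete a colocated snapshot of the volume and retry"),
    ("max images limit is exceeded", "Delete custom images or request a limit increase"),
    ("Permission denied (publickey", "Set up Control-T successfully before starting a job"),
    ("Not enough local space", "Mount a larger volume on /images, writable by opc"),
    ("Either the bucket named", "Grant the OCI user write access to the ocic-oci-mig bucket"),
]


def suggest_fix(log_text: str) -> Optional[str]:
    """Return the first documented fix whose message appears in log_text."""
    for needle, fix in KNOWN_ERRORS:
        if needle in log_text:
            return fix
    return None


def secret_yml_is_private(path: str) -> bool:
    """True when group and others have no permission bits on the file."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode & 0o077 == 0
```

The table is checked in order, so more specific messages should stay ahead of generic ones if you extend it.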