Configure bidirectional replication

After you set up one-way replication, only a few extra steps are needed to replicate data in the opposite direction. This quickstart example uses Autonomous AI Transaction Processing and Autonomous AI Lakehouse as its two cloud databases.

Before you begin

You must have two existing databases in the same tenancy and region to proceed with this quickstart. If you need sample data, download Archive.zip, and then follow the instructions in Lab 1, Task 3: Load the ATP schema.

Overview

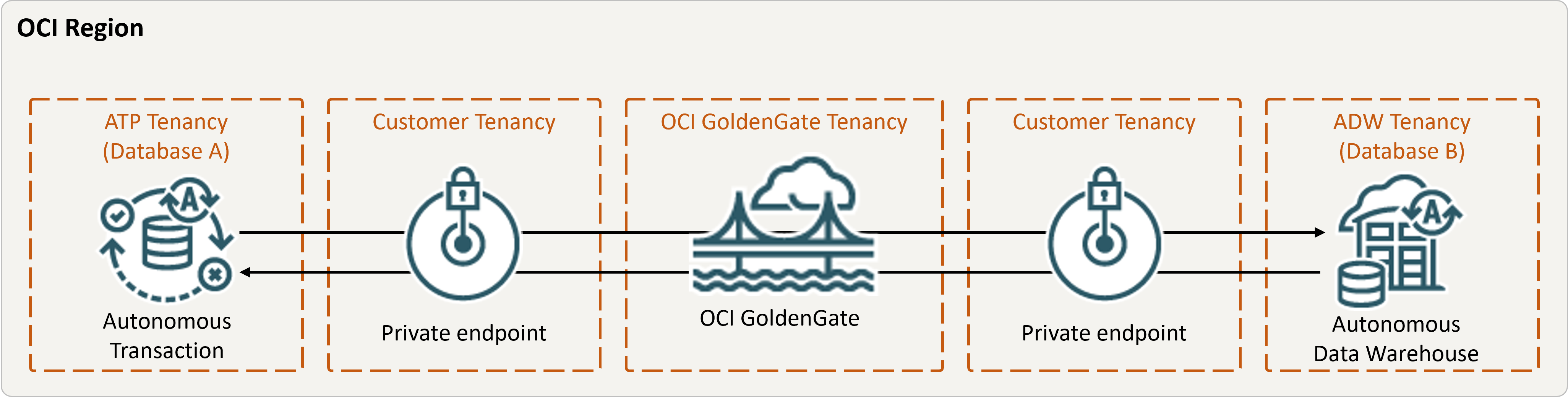

The following steps show how to instantiate a target database using Oracle Data Pump and set up bidirectional (two-way) replication between two databases in the same region.

Task 2: Add transaction information and a checkpoint table for both databases

In the OCI GoldenGate deployment console, go to the Configuration screen for the Administration Service, and then complete the following:

Task 3: Create the Integrated Extract

An Integrated Extract captures ongoing changes to the source database.

Task 4: Export data using Oracle Data Pump (ExpDP)

Use Oracle Data Pump (ExpDP) to export data from the source database to Oracle Object Store.
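As a rough illustration of the export step, the sketch below renders the text of a Data Pump export parameter file using the standard `SCHEMAS`, `DIRECTORY`, `DUMPFILE`, and `LOGFILE` parameters. The helper name `render_expdp_parfile`, the schema name `SRC_OCIGGLL` (inferred from the dump file name used later in this quickstart), the `DATA_PUMP_DIR` directory object, and the log file name are all assumptions for illustration. The object store location and credential required for an export to Oracle Object Store are not shown here and depend on your environment.

```python
def render_expdp_parfile(
    schema: str,
    dumpfile: str,
    directory: str = "DATA_PUMP_DIR",  # assumed directory object
    logfile: str = "export.log",       # assumed log file name
) -> str:
    """Return the text of a Data Pump export parameter file.

    Hypothetical helper: it only formats KEY=value lines and does not
    run expdp or connect to any database.
    """
    params = {
        "SCHEMAS": schema,
        "DIRECTORY": directory,
        "DUMPFILE": dumpfile,
        "LOGFILE": logfile,
    }
    return "\n".join(f"{key}={value}" for key, value in params.items()) + "\n"


if __name__ == "__main__":
    # Schema name is an assumption based on the dump file name.
    print(render_expdp_parfile("SRC_OCIGGLL", "SRC_OCIGGLL.dmp"), end="")
```

The resulting text could be saved to a file and passed to `expdp` through its `PARFILE` parameter, once the connection and object store settings for your databases are added.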

Task 5: Instantiate the target database using Oracle Data Pump (ImpDP)

Use Oracle Data Pump (ImpDP) to import data into the target database from the SRC_OCIGGLL.dmp that was exported from the source database.

Task 6: Add and run a Non-integrated Replicat

- Add and run a Replicat.

- Perform some changes on Database A to see them replicated to Database B.
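One simple way to confirm that changes made on Database A arrived on Database B is to compare per-table row counts from both sides. The sketch below assumes you have already collected those counts yourself (for example, with `SELECT COUNT(*)` queries against each database). The function name `find_count_mismatches` and the table names in the usage example are hypothetical and not part of the quickstart.

```python
from typing import Dict, Optional, Tuple

Counts = Dict[str, int]


def find_count_mismatches(
    source_counts: Counts, target_counts: Counts
) -> Dict[str, Tuple[Optional[int], Optional[int]]]:
    """Return {table: (source_count, target_count)} for tables that differ.

    A count of None means the table was missing from that side's snapshot.
    """
    mismatches = {}
    for table in sorted(set(source_counts) | set(target_counts)):
        src = source_counts.get(table)
        tgt = target_counts.get(table)
        if src != tgt:
            mismatches[table] = (src, tgt)
    return mismatches


if __name__ == "__main__":
    # Hypothetical snapshots taken after inserting rows on Database A.
    database_a = {"SRC_CITY": 1001, "SRC_REGION": 20}
    database_b = {"SRC_CITY": 1000, "SRC_REGION": 20}
    print(find_count_mismatches(database_a, database_b))
```

An empty result suggests the two snapshots agree. Because replication here is bidirectional, the same comparison can be repeated after making changes on Database B. Matching row counts are only a coarse check and do not prove that row contents are identical.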