1 Introducing Oracle Exadata System Software

This chapter introduces Oracle Exadata System Software.

- Overview of Oracle Exadata System Software

  Oracle Exadata Storage Server is a highly optimized storage server that runs Oracle Exadata System Software to store and access Oracle Database data.

- Key Features of Oracle Exadata System Software

  This section describes the key features of Oracle Exadata System Software.

- Oracle Exadata System Software Components

  This section provides a summary of the following Oracle Exadata System Software components.

1.1 Overview of Oracle Exadata System Software

Oracle Exadata Storage Server is a highly optimized storage server that runs Oracle Exadata System Software to store and access Oracle Database data.

With traditional storage, data is transferred to the database server for processing. In contrast, Oracle Exadata System Software provides database-aware storage services, such as the ability to offload SQL and other database processing from the database server, while remaining transparent to the SQL processing and database applications. Oracle Exadata storage servers process data at the storage level, and pass only what is needed to the database servers.

Oracle Exadata System Software is installed on both the storage servers and the database servers. Oracle Exadata System Software offloads some SQL processing from the database server to the storage servers. Oracle Exadata System Software enables function shipping between the database instance and the underlying storage, in addition to traditional data shipping. Function shipping greatly reduces the amount of data processing that must be done by the database server. Eliminating data transfers and database server workload can greatly benefit query processing operations that often become bandwidth constrained. Eliminating data transfers can also provide a significant benefit to online transaction processing (OLTP) systems that include large batch and report processing operations.
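The difference between data shipping and function shipping can be illustrated with a small Python sketch. This is a conceptual model only, not Exadata code: the sample rows and the `data_shipping` and `function_shipping` helpers are hypothetical names introduced for illustration.

```python
# Conceptual model only: contrasts data shipping with function shipping.
# All names and data here are hypothetical illustrations.

ROWS = [
    {"customer_name": f"cust{i}", "amount": i * 10, "notes": "x" * 100}
    for i in range(100)
]

def data_shipping(rows):
    """Storage returns every row; the database server filters afterwards."""
    shipped = list(rows)  # every row crosses the network
    result = [r["customer_name"] for r in shipped if r["amount"] > 900]
    return result, len(shipped)

def function_shipping(rows):
    """Storage applies the filter and projection; only matches are shipped."""
    shipped = [r["customer_name"] for r in rows if r["amount"] > 900]
    return shipped, len(shipped)

result_a, shipped_a = data_shipping(ROWS)
result_b, shipped_b = function_shipping(ROWS)
```

Both approaches return the same nine customer names, but the first ships 100 full rows to the database server while the second ships only the nine matching values.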

The hardware components of Oracle Exadata Storage Server are carefully chosen to match the needs of high-performance processing. The Oracle Exadata System Software is optimized to take maximum advantage of the hardware components. Each storage server delivers outstanding processing bandwidth for data stored on disk, often several times better than traditional solutions.

Oracle Exadata storage servers use state-of-the-art RDMA Network Fabric interconnections between servers and storage. Each RDMA Network Fabric link provides bandwidth of 40 Gb/s for InfiniBand Network Fabric or 100 Gb/s for RoCE Network Fabric. Additionally, the interconnection protocol uses direct data placement, also referred to as direct memory access (DMA), to ensure low CPU overhead by moving data directly from the wire to database buffers with no extra copies. The RDMA Network Fabric has the flexibility of a local area network (LAN) with the efficiency of a storage area network (SAN). With the RDMA Network Fabric, Oracle Exadata eliminates network bottlenecks that could reduce performance. The RDMA Network Fabric also provides a high-performance cluster interconnection for Oracle Real Application Clusters (Oracle RAC) servers.

Several storage server configurations are available, each designed to maximize a particular aspect of storage based on your requirements. Each storage server contains persistent storage media, which may be a mixture of hard disk drives (HDDs) and flash devices. High Capacity (HC) storage servers contain HDDs for primary data storage and high-performance flash memory, which is mainly used for caching purposes. Extreme Flash (EF) storage servers are geared towards maximum performance and have an all-flash storage configuration. Extended (XT) storage servers are geared towards extended-capacity applications, such as online data archiving, and contain HDDs only. HC and EF storage servers are also equipped with additional memory for Exadata RDMA Memory Cache (XRMEM cache), which supports high-performance data access using Remote Direct Memory Access (RDMA).

The Oracle Exadata architecture scales to any level of performance. To achieve higher performance or greater storage capacity, you add more storage servers (cells) to the configuration. As more storage servers are added, capacity and performance increase linearly. Data is mirrored across storage servers to ensure that the failure of a storage server does not cause loss of data or availability. The scale-out architecture achieves near infinite scalability, while lowering costs by allowing storage to be purchased incrementally on demand.

Note:

Oracle Exadata System Software must be used with Oracle Exadata storage server hardware, and only supports Oracle databases on the Oracle Exadata database servers. Information is available on My Oracle Support and on the Products page of Oracle Technology Network.


1.2 Key Features of Oracle Exadata System Software

This section describes the key features of Oracle Exadata System Software.

- Reliability, Modularity, and Cost-Effectiveness

  Oracle Exadata Storage Server employs cost-effective modular storage hardware in a scale-out architecture enabling high availability and reliability.

- Compatibility with Oracle Database

  When the minimum required versions are met, all Oracle Database features are fully supported with Oracle Exadata System Software.

- Smart Flash Technology

  The Exadata Smart Flash Cache feature of the Oracle Exadata System Software intelligently caches database objects in flash memory, replacing slow, mechanical I/O operations to disk with very rapid flash memory operations.

- Persistent Memory Accelerator and RDMA

  Persistent Memory (PMEM) Accelerator provides direct access to persistent memory using Remote Direct Memory Access (RDMA), enabling faster response times and lower read latencies.

- Exadata RDMA Memory

  Oracle Exadata System Software release 23.1.0 introduces Exadata RDMA Memory (XRMEM). XRMEM incorporates all of the Exadata capabilities that provide direct access to storage server memory using Remote Direct Memory Access (RDMA), enabling faster response times and lower read latencies.

- Centralized Storage

  You can use Oracle Exadata to consolidate your storage requirements into a central pool that can be used by multiple databases.

- I/O Resource Management (IORM)

  I/O Resource Management (IORM) and the Oracle Database Resource Manager enable multiple databases and pluggable databases to share the same storage while ensuring that I/O resources are allocated across the various databases.

- Exadata Hybrid Columnar Compression

  Exadata Hybrid Columnar Compression stores data using column organization, which brings similar values close together and enhances compression ratios.

- In-Memory Columnar Format Support

  In an Oracle Exadata environment, the data is automatically stored in In-Memory columnar format in the flash cache when it will improve performance.

- Offloading Data Search and Retrieval Processing

  Exadata Smart Scan offloads search and retrieval processing to the storage servers.

- Offloading of Incremental Backup Processing

  To optimize the performance of incremental backups, the database can offload block filtering to Oracle Exadata Storage Server.

- Fault Isolation with Quarantine

  Oracle Exadata System Software has the ability to learn from the past events to avoid errors.

- Protection Against Data Corruption

  Data corruptions, while rare, can have a catastrophic effect on a database, and therefore on a business.

- Fast File Creation

  File creation operations are offloaded to Oracle Exadata Storage Servers.

- Storage Index

  Oracle Exadata Storage Servers maintain a storage index which contains a summary of the data distribution on the disk.


1.2.1 Reliability, Modularity, and Cost-Effectiveness

Oracle Exadata Storage Server employs cost-effective modular storage hardware in a scale-out architecture enabling high availability and reliability.

Oracle Exadata Storage Server is architected to eliminate single points of failure by mirroring data, employing fault isolation technologies, and protecting against disk and other storage hardware failures. Even brownouts are limited or eliminated when failures occur.

In the Oracle Exadata Storage Server architecture, multiple storage servers provide a pool of storage to support one or more databases. Intelligent software automatically and transparently distributes data evenly across the storage servers. Oracle Exadata Storage Server supports dynamic disk replacement, and Oracle Exadata System Software provides online dynamic data redistribution, which ensures that data is balanced across the storage without interrupting database processing. Oracle Exadata Storage Server also provides data protection from disk and storage server failures.


1.2.2 Compatibility with Oracle Database

When the minimum required versions are met, all Oracle Database features are fully supported with Oracle Exadata System Software.

Oracle Exadata System Software works equally well with single-instance or Oracle Real Application Clusters (Oracle RAC) deployments of Oracle Database. Oracle Data Guard, Oracle Recovery Manager (RMAN), Oracle GoldenGate, and other database features are managed the same with Exadata storage cells as with traditional storage. This enables database administrators to use the same tools with which they are familiar.

Refer to My Oracle Support Doc ID 888828.1 for a complete list of the minimum required software versions.

1.2.3 Smart Flash Technology

The Exadata Smart Flash Cache feature of the Oracle Exadata System Software intelligently caches database objects in flash memory, replacing slow, mechanical I/O operations to disk with very rapid flash memory operations.

- Exadata Smart Flash Cache

  Exadata Smart Flash Cache stores frequently accessed data in high-performance flash storage.

- Write-Back Flash Cache

  Write-Back Flash Cache enables write I/Os directly to Exadata Smart Flash Cache.

- Exadata Smart Flash Log

  Exadata Smart Flash Log improves transaction response times and increases overall database throughput for I/O intensive workloads by accelerating performance-critical log write operations.


1.2.3.1 Exadata Smart Flash Cache

Exadata Smart Flash Cache stores frequently accessed data in high-performance flash storage.

Exadata Smart Flash Cache automatically works in conjunction with Oracle Database to intelligently optimize cache efficiency by favoring frequently accessed and high-value data. Each database I/O contains a tag that indicates the likelihood of repeated data access. This information is combined with internal statistics and other measures, such as the object size and access frequency, to determine whether to cache the data.

Just as importantly, Exadata Smart Flash Cache avoids caching data that will never be reused or will not fit into the cache. For example, a backup operation does not read data repeatedly, so backup-related I/O is not cached.

By default, caching occurs automatically and requires no user or administrator effort.

Although it is generally not required or recommended, Oracle Exadata System Software also enables administrators to override the default caching policy and keep specific table and index segments in or out of the cache.

Originally, Exadata Smart Flash Cache operated exclusively in Write-Through mode. In Write-Through mode, database writes go to disk first and subsequently populate Flash Cache. If a flash device fails with Exadata Smart Flash Cache operating in Write-Through mode, there is no data loss because the data is already on disk.


1.2.3.2 Write-Back Flash Cache

Write-Back Flash Cache enables write I/Os directly to Exadata Smart Flash Cache.

With Exadata Smart Flash Cache in Write-Back mode, database writes go to Flash Cache first and later to disk. Write-Back mode was introduced with Oracle Exadata System Software release 11.2.3.2.0.

Write-intensive applications can benefit significantly from Write-Back mode by taking advantage of the low latencies provided by flash. If your application writes intensively and you experience high I/O latency or significant free buffer waits, then you should consider using Write-Back Flash Cache.

With Exadata Smart Flash Cache in Write-Back mode, the total amount of disk I/O also reduces when the cache absorbs multiple writes to the same block before writing it to disk. The saved I/O bandwidth can be used to increase the application throughput or service other workloads.
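The write-absorption effect described above can be sketched in a few lines of Python. This is a conceptual model under simplifying assumptions, not the Exadata implementation: the `WriteBackCache` class, its methods, and the block numbers are hypothetical.

```python
# Conceptual model only: a tiny write-back cache that counts disk writes.
# The class and method names are hypothetical, not an Exadata interface.

class WriteBackCache:
    def __init__(self):
        self.dirty = {}        # block id -> latest data not yet on disk
        self.disk = {}         # block id -> data persisted to disk
        self.disk_writes = 0

    def write(self, block_id, data):
        """Absorb the write in the cache; nothing goes to disk yet."""
        self.dirty[block_id] = data

    def flush(self):
        """Write each dirty block to disk once, whatever its write count."""
        for block_id, data in self.dirty.items():
            self.disk[block_id] = data
            self.disk_writes += 1
        self.dirty.clear()

cache = WriteBackCache()
for version in range(5):
    cache.write(block_id=7, data=f"v{version}")  # five writes, same block
cache.write(block_id=8, data="v0")
cache.flush()
```

Six application writes result in only two disk writes here, because the five updates to block 7 are merged in the cache before the flush. In a write-through model, each of the six writes would reach disk.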

However, if a flash device fails while using Write-Back mode, data that is not yet persistent to disk is lost and must be recovered from a mirror copy. For this reason, Write-Back mode is recommended in conjunction with using high redundancy (triple mirroring) to protect the database files.

The contents of the Write-Back Flash Cache are persisted across reboots, eliminating any warm-up time needed to populate the cache.


1.2.3.3 Exadata Smart Flash Log

Exadata Smart Flash Log improves transaction response times and increases overall database throughput for I/O intensive workloads by accelerating performance-critical log write operations.

The time to commit user transactions is very sensitive to the latency of log write operations. In addition, many performance-critical database algorithms, such as space management and index splits, are very sensitive to log write latency.

Although the disk controller has a large battery-backed DRAM cache that can accept writes very quickly, some write operations to disk can still be slow during busy periods when the disk controller cache is occasionally filled with blocks that have not been written to disk. Noticeable performance issues can arise even with relatively few slow redo log write operations.

Exadata Smart Flash Log reduces the average latency for performance-sensitive redo log write I/O operations, thereby eliminating performance bottlenecks that may occur due to slow redo log writes. Exadata Smart Flash Log removes latency spikes by simultaneously performing redo log writes to two media devices. The redo write is acknowledged as soon as the first write to either media device completes.
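The effect of acknowledging the first of two simultaneous writes can be shown with a short Python sketch. The latency figures below are invented for illustration, and the `ack_latency` helper is a hypothetical name; the sketch models only the "first completion wins" rule described above.

```python
# Conceptual model only: acknowledge a redo write when the faster of two
# devices completes. The latency figures are made-up illustrative values.

disk_latency_ms = [0.4, 0.5, 12.0, 0.4, 0.5]   # one outlier on disk
flash_latency_ms = [0.6, 0.5, 0.6, 9.0, 0.6]   # one outlier on flash

def ack_latency(first_device_ms, second_device_ms):
    """The write is acknowledged as soon as either device finishes."""
    return min(first_device_ms, second_device_ms)

acked_ms = [ack_latency(d, f) for d, f in zip(disk_latency_ms, flash_latency_ms)]
worst_single_device = max(max(disk_latency_ms), max(flash_latency_ms))
worst_acked = max(acked_ms)
```

With these sample values, the worst latency on a single device is 12.0 ms, while the worst acknowledged latency is 0.6 ms, because the two devices do not stall on the same write.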

Prior to Oracle Exadata System Software release 20.1, Exadata Smart Flash Log performs simultaneous writes to disk and flash storage. With this configuration, Exadata Smart Flash Log improves average log write latency and increases overall database throughput. However, because all log writes must eventually persist to disk, this configuration is limited by the overall disk throughput, and provides little relief for applications that are constrained by disk throughput.

Oracle Exadata System Software release 20.1 adds a further optimization, known as Smart Flash Log Write-Back, that uses Exadata Smart Flash Cache in Write-Back mode instead of disk storage. With this configuration, Exadata Smart Flash Log improves average log write latency and overall log write throughput to eliminate logging bottlenecks for demanding throughput-intensive applications.


1.2.4 Persistent Memory Accelerator and RDMA

Persistent Memory (PMEM) Accelerator provides direct access to persistent memory using Remote Direct Memory Access (RDMA), enabling faster response times and lower read latencies.

Note:

PMEM is only available in Exadata X8M-2 and X9M-2 storage server models with High Capacity (HC) or Extreme Flash (EF) storage.

Starting with Oracle Exadata System Software release 19.3.0, workloads that require ultra-low response times, such as stock trades and IoT devices, can take advantage of PMEM and RDMA in the form of a PMEM Cache and PMEM Log.

When database clients read from the PMEM cache, the client software performs an RDMA read of the cached data, which bypasses the storage server software and delivers results much faster than Exadata Smart Flash Cache.

PMEM cache works in conjunction with Exadata Smart Flash Cache. The following table describes the supported caching mode combinations when PMEM Cache is configured:

| PMEM Cache Mode | Flash Cache Mode | Supported Configuration? |
|---|---|---|
| Write-Through | Write-Through | Yes. This is the default configuration for High Capacity (HC) servers with Normal Redundancy. |
| Write-Through | Write-Back | Yes. This is the default configuration for HC servers with High Redundancy. This is also the default configuration for Extreme Flash (EF) servers. |
| Write-Back | Write-Back | Yes. However, the best practice recommendation is to configure PMEM cache in write-through mode. This configuration provides the best performance and availability. Note: Commencing with Oracle Exadata System Software release 23.1.0, PMEM cache only operates in write-through mode. |
| Write-Back | Write-Through | No. Without the backing of Write-Back Flash Cache, write-intensive workloads can overload the PMEM Cache in Write-Back mode. |

If PMEM Cache is not configured, Exadata Smart Flash Cache is supported in both Write-Back and Write-Through modes.
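The supported combinations in the preceding table can be captured as a simple lookup, for example in a script that sanity-checks a planned configuration. This is only a sketch: the `is_supported` function and the lowercase mode strings are hypothetical conventions, and the lookup reflects releases in which write-back PMEM cache is still available.

```python
# Encodes the support matrix from the preceding table as a lookup.
# The function name and mode strings are hypothetical conventions.

SUPPORTED = {
    ("write-through", "write-through"): True,
    ("write-through", "write-back"): True,
    ("write-back", "write-back"): True,   # supported, but not best practice
    ("write-back", "write-through"): False,
}

def is_supported(pmem_cache_mode, flash_cache_mode):
    """Return True if the PMEM cache / flash cache mode pair is supported."""
    key = (pmem_cache_mode.lower(), flash_cache_mode.lower())
    if key not in SUPPORTED:
        raise ValueError(f"unknown mode combination: {key}")
    return SUPPORTED[key]
```

For example, `is_supported("Write-Back", "Write-Through")` returns `False`, matching the last row of the table.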

Redo log writes are critical database operations and need to complete in a timely manner to prevent load spikes or stalls. Exadata Smart Flash Log is designed to prevent redo write latency outliers. PMEM Log helps to further reduce redo log write latency by using PMEM and RDMA.

With PMEM Log, database clients send redo log I/O buffers directly to PMEM on the storage servers using RDMA, thereby reducing transport latency. The cell server (cellsrv) then writes the redo to Exadata Smart Flash Log (if enabled) and disk at a later time.

Reduced redo log write latency improves OLTP performance, resulting in higher transaction throughput. In cases where PMEM Log is bypassed, Exadata Smart Flash Log can still be used.


1.2.5 Exadata RDMA Memory

Oracle Exadata System Software release 23.1.0 introduces Exadata RDMA Memory (XRMEM). XRMEM incorporates all of the Exadata capabilities that provide direct access to storage server memory using Remote Direct Memory Access (RDMA), enabling faster response times and lower read latencies.

XRMEM encompasses previous Exadata data and commit accelerators based on persistent memory (PMEM), which is only available in Exadata X8M and X9M storage server models. Starting with Exadata X10M, XRMEM enables the benefits of RDMA without needing specialized persistent memory and is primed to leverage developments in memory and storage hardware.

On Exadata X10M systems, workloads requiring ultra-low response times, such as stock trades and IoT devices, can take advantage of XRMEM cache. When database clients read from XRMEM cache, the client software performs an RDMA read of the cached data, which bypasses the storage server software and networking layers, eliminates expensive CPU interrupts and context switches, and delivers results much faster than Exadata Smart Flash Cache. In this case, XRMEM cache operates only in write-through mode, with database writes saved to persistent storage.

On Exadata X10M, XRMEM cache is available on High Capacity (HC) and Extreme Flash (EF) storage servers, and uses the high-performance dynamic random-access memory (DRAM) available on the servers. In this environment, XRMEM cache functions automatically, requiring no separate configuration or ongoing administration.

On existing Exadata X8M and X9M systems with Oracle Exadata System Software release 23.1.0, the persistent memory data accelerator, also known as PMEM cache (or PMEMCACHE), is now called the XRMEM cache (or XRMEMCACHE). Likewise, the persistent memory commit accelerator, also known as PMEM log (or PMEMLOG), is now XRMEM log (or XRMEMLOG). However, the CellCLI commands to manage PMEMCACHE and PMEMLOG resources are still available for backward compatibility.

Oracle Exadata System Software release 23.1.0 does not implement XRMEMLOG on Exadata X10M systems.


1.2.6 Centralized Storage

You can use Oracle Exadata to consolidate your storage requirements into a central pool that can be used by multiple databases.

Oracle Exadata evenly distributes the data and I/O load for every database across available disks in the Exadata storage servers. Every database can use all of the available disks to achieve superior I/O rates. Oracle Exadata provides higher efficiency and performance at a lower cost while also lowering your storage administration overhead.


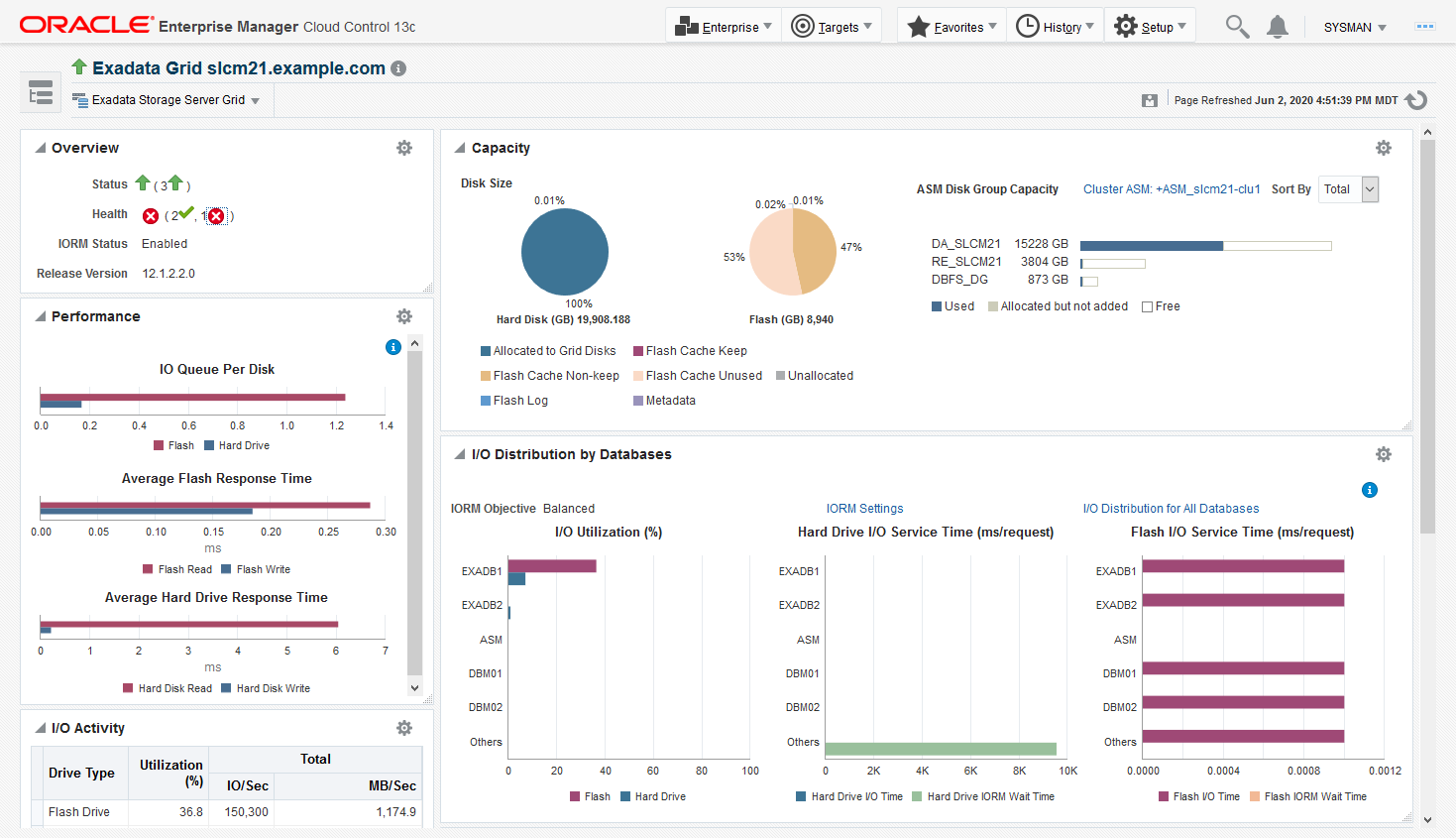

1.2.7 I/O Resource Management (IORM)

I/O Resource Management (IORM) and the Oracle Database Resource Manager enable multiple databases and pluggable databases to share the same storage while ensuring that I/O resources are allocated across the various databases.

Oracle Exadata System Software works with IORM and Oracle Database Resource Manager to ensure that customer-defined policies are met, even when multiple databases share the same set of storage servers. As a result, one database cannot monopolize the I/O bandwidth and degrade the performance of the other databases.

IORM enables storage cells to service I/O resources among multiple applications and users across all databases in accordance with sharing and prioritization levels established by the administrator. This improves the coexistence of online transaction processing (OLTP) and reporting workloads, because latency-sensitive OLTP applications can be given a larger share of disk and flash I/O bandwidth than throughput-sensitive batch applications. Oracle Database Resource Manager enables the administrator to control processor utilization on the database host on a per-application basis. Combining IORM and Oracle Database Resource Manager enables the administrator to establish more accurate policies.
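The idea of giving latency-sensitive workloads a larger share of I/O bandwidth can be illustrated with a proportional-share calculation. This sketch is not the IORM algorithm: the `allocate_bandwidth` function, the database names, the share values, and the 1000 MB/s total are all hypothetical.

```python
# Conceptual model only: proportional sharing of I/O bandwidth.
# This is not the IORM algorithm; names and numbers are hypothetical.

def allocate_bandwidth(total_mbps, shares):
    """Split total bandwidth across databases in proportion to their shares."""
    total_shares = sum(shares.values())
    return {db: total_mbps * s / total_shares for db, s in shares.items()}

shares = {"oltp_db": 6, "reporting_db": 3, "batch_db": 1}
allocation = allocate_bandwidth(1000, shares)
```

With these sample shares, the OLTP database is entitled to 600 MB/s, reporting to 300 MB/s, and batch to 100 MB/s when all three compete for the same storage.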

IORM also manages the space utilization for Exadata Smart Flash Cache and Exadata RDMA Memory Cache (XRMEM cache). Critical OLTP workloads can be guaranteed space in Exadata Smart Flash Cache or XRMEM cache to provide consistent performance.

IORM for a database or pluggable database (PDB) is implemented and managed from the Oracle Database Resource Manager. Oracle Database Resource Manager in the database instance communicates with the IORM software in the storage cell to manage user-defined service-level targets. Database resource plans are administered from the database, while interdatabase plans are administered on the storage cell.


1.2.8 Exadata Hybrid Columnar Compression

Exadata Hybrid Columnar Compression stores data using column organization, which brings similar values close together and enhances compression ratios.

Using Exadata Hybrid Columnar Compression, data is organized into sets of rows called compression units. Within a compression unit, data is organized by column and then compressed. Each row is self-contained within a compression unit.

Database operations work transparently against compressed objects, so no application changes are required. The database compresses data manipulated by any SQL operation, although compression levels are higher for direct path loads.

You can specify the following types of Exadata Hybrid Columnar Compression, depending on your requirements:

- Warehouse compression: This type of compression is optimized for query performance, and is intended for data warehouse applications.

- Archive compression: This type of compression is optimized for maximum compression levels, and is intended for historic data and data that does not change.

Assume that you apply Exadata Hybrid Columnar Compression to a daily_sales table. At the end of every day, the table is populated with items and the number sold, with the item ID and date forming a composite primary key. A row subset is shown in the following table.

Table 1-1 Sample Table daily_sales

| Item_ID | Date | Num_Sold | Shipped_From | Restock |
|---|---|---|---|---|
| 1000 | 01-JUN-07 | 2 | WAREHOUSE1 | Y |
| 1001 | 01-JUN-07 | 0 | WAREHOUSE3 | N |
| 1002 | 01-JUN-07 | 1 | WAREHOUSE3 | N |
| 1003 | 01-JUN-07 | 0 | WAREHOUSE2 | N |
| 1004 | 01-JUN-07 | 2 | WAREHOUSE1 | N |
| 1005 | 01-JUN-07 | 1 | WAREHOUSE2 | N |

The database stores a set of rows in an internal structure called a compression unit. For example, assume that the rows in the previous table are stored in one unit. Exadata Hybrid Columnar Compression stores each unique value from column 4 with metadata that maps the values to the rows. Conceptually, the compressed value can be represented as:

WAREHOUSE1WAREHOUSE3WAREHOUSE2

The database then compresses the repeated word WAREHOUSE in this value by storing it once and replacing each occurrence with a reference. If the reference is smaller than the original word, then the database achieves compression. The compression benefit is particularly evident for the Date column, which contains only one unique value.
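The following Python sketch illustrates the idea using the sample rows of daily_sales. It pivots the rows into columns and stores each distinct value once with a per-row reference. This is a conceptual model only and does not represent the actual compression unit format; the `dictionary_encode` helper is a hypothetical name.

```python
# Conceptual model only: pivot rows into columns and dictionary-encode them.
# This is not the on-disk format; it only shows why column organization helps.

rows = [
    (1000, "01-JUN-07", 2, "WAREHOUSE1", "Y"),
    (1001, "01-JUN-07", 0, "WAREHOUSE3", "N"),
    (1002, "01-JUN-07", 1, "WAREHOUSE3", "N"),
    (1003, "01-JUN-07", 0, "WAREHOUSE2", "N"),
    (1004, "01-JUN-07", 2, "WAREHOUSE1", "N"),
    (1005, "01-JUN-07", 1, "WAREHOUSE2", "N"),
]
names = ["Item_ID", "Date", "Num_Sold", "Shipped_From", "Restock"]

def dictionary_encode(values):
    """Store each distinct value once, plus one small reference per row."""
    dictionary = list(dict.fromkeys(values))       # distinct, first-seen order
    references = [dictionary.index(v) for v in values]
    return dictionary, references

columns = dict(zip(names, zip(*rows)))             # row-major -> column-major
encoded = {name: dictionary_encode(col) for name, col in columns.items()}
```

Here `encoded["Shipped_From"]` holds the three distinct warehouse names and the references `[0, 1, 1, 2, 0, 2]`, while `encoded["Date"]` holds a single value referenced six times.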

As shown in the following illustration, each compression unit can span multiple data blocks. The values for a particular column may or may not span multiple blocks.

Exadata Hybrid Columnar Compression has implications for row locking. When an update occurs for a row in an uncompressed data block, only the updated row is locked. In contrast, the database must lock all rows in the compression unit if an update is made to any row in the unit. Updates to rows using Exadata Hybrid Columnar Compression cause rowids to change.

Note:

When tables use Exadata Hybrid Columnar Compression, Oracle DML locks larger blocks of data (compression units) which may reduce concurrency.

Oracle Database supports four methods of table compression.

Table 1-2 Table Compression Methods

| Table Compression Method | Compression Level | CPU Overhead | Applications |
|---|---|---|---|
| Basic compression | High | Minimal | DSS |
| OLTP compression | High | Minimal | OLTP, DSS |
| Warehouse compression | Higher (compression level depends on compression level specified (LOW or HIGH)) | Higher (CPU overhead depends on compression level specified (LOW or HIGH)) | DSS |
| Archive compression | Highest (compression level depends on compression level specified (LOW or HIGH)) | Highest (CPU overhead depends on compression level specified (LOW or HIGH)) | Archiving |

Warehouse compression and archive compression achieve the highest compression levels because they use Exadata Hybrid Columnar Compression technology. Exadata Hybrid Columnar Compression technology uses a modified form of columnar storage instead of row-major storage. This enables the database to store similar data together, which improves the effectiveness of compression algorithms. Because Exadata Hybrid Columnar Compression requires high CPU overhead for DML, use it only for data that is updated infrequently.

The higher compression levels of Exadata Hybrid Columnar Compression are achieved only with data that is direct-path inserted. Conventional inserts and updates are supported, but result in a less compressed format, and reduced compression level.

The following table lists characteristics of each table compression method.

Table 1-3 Table Compression Characteristics

| Table Compression Method | CREATE/ALTER TABLE Syntax | Direct-Path Insert | DML |
|---|---|---|---|
| Basic compression | | Yes | Yes. Note: Inserted and updated rows are uncompressed. |
| OLTP compression | | Yes | Yes |
| Warehouse compression | | Yes | Yes. High CPU overhead. Note: Inserted and updated rows go to a block with a less compressed format and have lower compression level. |
| Archive compression | | Yes | Yes. Note: Inserted and updated rows are uncompressed. Inserted and updated rows go to a block with a less compressed format and have lower compression level. |

The COMPRESS FOR QUERY HIGH option is the default data warehouse compression mode. It provides good compression and performance. The COMPRESS FOR QUERY LOW option should be used in environments where load performance is critical. It loads faster than data compressed with the COMPRESS FOR QUERY HIGH option.

The COMPRESS FOR ARCHIVE LOW option is the default archive compression mode. It provides a high compression level and good query performance. It is ideal for infrequently-accessed data. The COMPRESS FOR ARCHIVE HIGH option should be used for data that is rarely accessed.

A compression advisor, provided by the DBMS_COMPRESSION package, helps you determine the expected compression level for a particular table with a particular compression method.

You specify table compression with the COMPRESS clause of the CREATE TABLE command. You can enable compression for an existing table by using these clauses in an ALTER TABLE statement. In this case, only data that is inserted or updated is compressed after compression is enabled. Similarly, you can disable table compression for an existing compressed table with the ALTER TABLE...NOCOMPRESS command. In this case, all data that was already compressed remains compressed, and new data is inserted uncompressed.


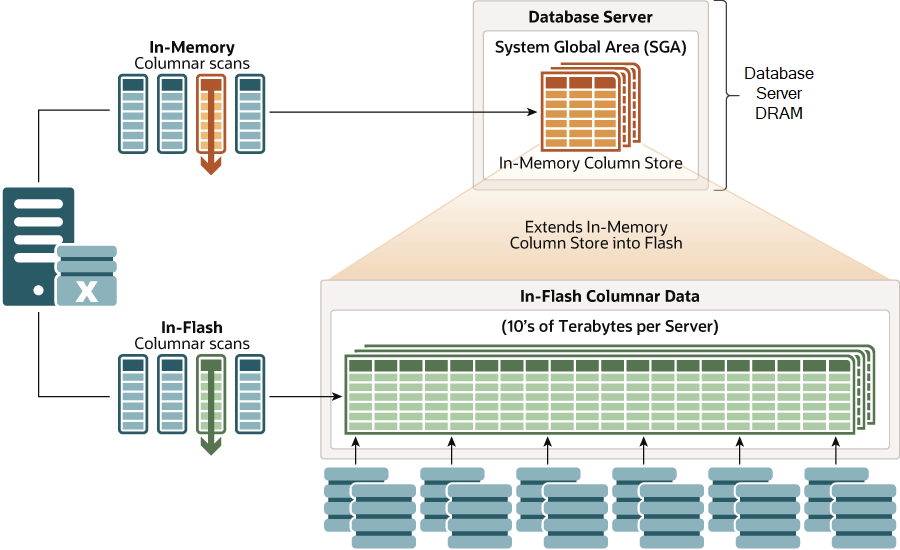

1.2.9 In-Memory Columnar Format Support

In an Oracle Exadata environment, the data is automatically stored in In-Memory columnar format in the flash cache when it will improve performance.

Oracle Exadata supports all of the In-Memory optimizations, such as accessing only the compressed columns required, SIMD vector processing, storage indexes, and so on.

If you set the INMEMORY_SIZE database initialization parameter to a non-zero value (requires the Oracle Database In-Memory option), then objects accessed using a Smart Scan are brought into the flash cache and are automatically converted into the In-Memory columnar format. The data is converted initially into a columnar cache format, which is different from Oracle Database In-Memory’s columnar format. The data is rewritten in the background into Oracle Database In-Memory columnar format. As a result, all subsequent accesses to the data benefit from all of the In-Memory optimizations when that data is retrieved from the flash cache.

Any write to an in-memory table does not invalidate the entire columnar cache of that table. It only invalidates the columnar cache unit of the disk region in which the block resides. For subsequent scans after a table update, a large part of the table is still in the columnar cache. The scans can still make use of the columnar cache, except for the units in which the writes were made. For those units, the query uses the original block version to get the data. After a sufficient number of scans, the invalidated columnar cache units are automatically repopulated in the columnar format.
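The per-unit invalidation behavior described above can be modeled with a short Python sketch. The unit size of four blocks, the `ColumnarCache` class, and its methods are hypothetical and chosen only to make the behavior visible; they do not reflect actual Exadata internals.

```python
# Conceptual model only: per-unit invalidation of a columnar cache.
# Unit size, class, and method names are hypothetical.

BLOCKS_PER_UNIT = 4

class ColumnarCache:
    def __init__(self, total_blocks):
        units = (total_blocks + BLOCKS_PER_UNIT - 1) // BLOCKS_PER_UNIT
        self.valid = [True] * units   # one flag per columnar cache unit

    def write_block(self, block_no):
        """A write invalidates only the unit that contains the block."""
        self.valid[block_no // BLOCKS_PER_UNIT] = False

    def scan_plan(self):
        """Per unit, say whether a scan reads columnar data or row blocks."""
        return ["columnar" if ok else "row blocks" for ok in self.valid]

cache = ColumnarCache(total_blocks=16)
cache.write_block(5)
plan = cache.scan_plan()
```

After a single write to block 5, only the second of the four units falls back to the original row blocks; the other three units are still served from the columnar cache.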

A new segment-level attribute, CELLMEMORY, has also been introduced to help control which objects should not be populated into flash using the In-Memory columnar format and which type of compression should be used. Just like the INMEMORY attribute, you can specify different compression levels as sub-clauses to the CELLMEMORY attribute. However, not all of the INMEMORY compression levels are available; only MEMCOMPRESS FOR QUERY LOW and MEMCOMPRESS FOR CAPACITY LOW (the default) are supported. You specify the CELLMEMORY attribute using a SQL command, such as the following:

ALTER TABLE trades CELLMEMORY MEMCOMPRESS FOR QUERY LOW

The PRIORITY sub-clause available with Oracle Database In-Memory is not available on Oracle Exadata because the process of populating the flash cache on Exadata storage servers is different from populating DRAM in the In-Memory column store on Oracle Database servers.

1.2.10 Offloading Data Search and Retrieval Processing

Exadata Smart Scan offloads search and retrieval processing to the storage servers.

Smart Scan performs selected Oracle Database functions inside Oracle Exadata Storage Server. This capability improves query performance by minimizing the amount of database server I/O, which reduces the amount of I/O-related communication between the database servers and storage servers. Furthermore, the database server CPU saved by Smart Scan is available to boost overall system throughput.

Smart Scan automatically optimizes full table scans, fast full index scans, and fast full bitmap index scans that use the Direct Path Read mechanism, namely parallel operations and large sequential scans. The primary functions performed by Smart Scan inside Oracle Exadata Storage Server are:

- Predicate Filtering

  Rather than transporting all rows to the database server for predicate evaluation, Smart Scan predicate filtering ensures that the database server receives only the rows matching the query criteria. The supported conditional operators include =, !=, <, >, <=, >=, IS [NOT] NULL, LIKE, [NOT] BETWEEN, [NOT] IN, EXISTS, IS OF type, NOT, AND, and OR. In addition, Exadata Storage Server evaluates most common SQL functions during predicate filtering.

- Column Filtering

  Rather than transporting entire rows to the database server, Smart Scan column filtering ensures that the database server receives only the requested columns. For tables with many columns, or columns containing LOBs, the I/O bandwidth saved by column filtering can be very substantial.

For example, consider a simple query:

SQL> SELECT customer_name FROM calls WHERE amount > 200;

In this case, Smart Scan offloads predicate filtering (WHERE amount > 200) and column filtering (SELECT customer_name) to the Exadata storage servers. The effect can be dramatic depending on the table size, structure, and contents. For example, if the table contains 1 TB of data, but the query result is only 2 MB, then only 2 MB of data is transported from the storage servers to the database server.
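As a rough illustration of the two filtering steps, the following sketch applies a row predicate and a column projection before any data is "shipped". It is a conceptual model only: the `smart_scan` function and the sample rows are hypothetical and do not represent how Exadata implements offload.

```python
def smart_scan(rows, predicate, columns):
    """Keep only matching rows, then keep only the requested columns."""
    return [
        {col: row[col] for col in columns}  # column filtering
        for row in rows
        if predicate(row)                   # predicate filtering
    ]


# Hypothetical contents of the calls table.
calls = [
    {"customer_name": "Ann", "amount": 250, "notes": "x" * 100},
    {"customer_name": "Bob", "amount": 120, "notes": "y" * 100},
    {"customer_name": "Cy", "amount": 300, "notes": "z" * 100},
]

# Equivalent in spirit to: SELECT customer_name FROM calls WHERE amount > 200
shipped = smart_scan(calls, lambda row: row["amount"] > 200, ["customer_name"])
```

Only two single-column rows are returned here, while the wide `notes` column and the non-matching row never leave the storage side of the model.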

The following diagram illustrates how Smart Scan avoids unnecessary data transfer between the storage servers and the database server.

Figure 1-2 Offloading Data Search and Retrieval

In addition to offloading predicate filtering and column filtering, Smart Scan enables:

- Optimized join processing for star schemas (between large tables and small lookup tables). This is implemented using Bloom Filters, which provide a very efficient probabilistic method to determine whether an element is a member of a set.

- Optimized scans on encrypted tablespaces and encrypted columns. For encrypted tablespaces, Exadata Storage Server can decrypt blocks and return the decrypted blocks to Oracle Database, or it can perform row and column filtering on encrypted data. Significant CPU savings occur within the database server by offloading the CPU-intensive decryption task to Exadata cells.

- Optimized scans on compressed data. Smart Scan works in conjunction with Hybrid Columnar Compression so that column projection, row filtering, and decompression run in the Exadata Storage Servers to save CPU cycles on the database servers.

- Offloading of scoring functions, such as PREDICTION_PROBABILITY, for data mining models. This accelerates analysis while reducing database server CPU consumption and the I/O load between the database server and storage servers.
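The Bloom filter mentioned in the first item of the list above can be sketched generically as follows. This is a textbook Bloom filter, not Exadata's implementation; the sizes, the SHA-256-based hashing, and the `filter_fact_rows` helper are assumptions chosen for illustration.

```python
import hashlib


class BloomFilter:
    """Probabilistic set: no false negatives, occasional false positives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for simplicity

    def _positions(self, item):
        # Derive num_hashes bit positions by salting one hash function.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item):
        return all(self.bits[pos] for pos in self._positions(item))


def filter_fact_rows(fact_rows, dim_keys, key):
    """Drop fact rows whose join key is definitely absent from the lookup table."""
    bloom = BloomFilter()
    for dim_key in dim_keys:
        bloom.add(dim_key)
    return [row for row in fact_rows if bloom.might_contain(row[key])]
```

Because a Bloom filter can report false positives, the rows that survive this step would still need the real join; the benefit is that most non-matching rows are discarded early.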

1.2.11 Offloading of Incremental Backup Processing

To optimize the performance of incremental backups, the database can offload block filtering to Oracle Exadata Storage Server.

This optimization is only possible when taking backups using Oracle Recovery Manager (RMAN). The offload processing is done transparently without user intervention. During offload processing, Oracle Exadata System Software filters out the blocks that are not required for the incremental backup in progress. Therefore, only the blocks that are required for the backup are sent to the database, making backups significantly faster.

1.2.12 Fault Isolation with Quarantine

Oracle Exadata System Software can learn from past events to avoid errors.

When a faulty SQL statement caused a crash of the server in the past, Oracle Exadata System Software quarantines the SQL statement so that when the faulty SQL statement occurs again, Oracle Exadata System Software does not allow the SQL statement to perform Smart Scan. This reduces the chance of server software crashes, and improves storage availability. The following types of quarantine are available:

- SQL Plan: Created when Oracle Exadata System Software crashes while performing Smart Scan for a SQL statement. As a result, the SQL Plan for the SQL statement is quarantined, and Smart Scan is disabled for the SQL plan.

- Disk Region: Created when Oracle Exadata System Software crashes while performing Smart Scan of a disk region. As a result, the 1 MB disk region being scanned is quarantined and Smart Scan is disabled for the disk region.

- Database: Created when Oracle Exadata System Software detects that a particular database causes instability to a cell. Instability detection is based on the number of SQL Plan Quarantines for a database. Smart Scan is disabled for the database.

- Cell Offload: Created when Oracle Exadata System Software detects some offload feature has caused instability to a cell. Instability detection is based on the number of Database Quarantines for a cell. Smart Scan is disabled for all databases.

- Intra-Database Plan: Created when Oracle Exadata System Software crashes while processing an intra-database resource plan. Consequently, the intra-database resource plan is quarantined and not enforced. Other intra-database resource plans in the same database are still enforced. Intra-database resource plans in other databases are not affected.

- Inter-Database Plan: Created when Oracle Exadata System Software crashes while processing an inter-database resource plan. Consequently, the inter-database resource plan is quarantined and not enforced. Other inter-database resource plans are still enforced.

- I/O Resource Management (IORM): Created when Oracle Exadata System Software crashes in the I/O processing code path. IORM is effectively disabled by setting the IORM objective to basic and all resource plans are ignored.

- Cell-to-Cell Offload: See "Quarantine Manager Support for Cell-to-Cell Offload Operations".

When a quarantine is created, alerts notify administrators of what was quarantined, why the quarantine was created, when and how the quarantine can be dropped manually, and when the quarantine is dropped automatically. All quarantines are automatically removed when a cell is patched or upgraded.

CellCLI commands are used to manually manipulate quarantines. For instance, the administrator can manually create a quarantine, drop a quarantine, change attributes of a quarantine, and list quarantines.

1.2.12.1 Quarantine Manager Support for Cell-to-Cell Offload Operations

Commencing with Oracle Exadata System Software 12.2.1.1.0, quarantine manager support is provided for cell-to-cell offload operations that support ASM rebalance and high throughput writes. If Exadata detects a crash during these operations, the offending operation is quarantined, and Exadata falls back to using non-offloaded operations.

These types of quarantines are most likely caused by incompatible versions of CELLSRV. If such quarantines occur on your system, contact Oracle Support Services.

To identify these types of quarantine, run the LIST QUARANTINE DETAIL command and check the value of the quarantineType attribute. Values associated with these quarantines are ASM_OFFLOAD_REBALANCE and HIGH_THROUGHPUT_WRITE.

Following are some examples of the output produced by the LIST QUARANTINE command.

For ASM rebalance:

CellCLI> list quarantine detail

name: 2

asmClusterId: b6063030c0ffef8dffcc99bd18b91a62

cellsrvChecksum: 9f98483ef351a1352d567ebb1ca8aeab

clientPID: 10308

comment: None

crashReason: ORA-600[CacheGet::process:C2C_OFFLOAD_CACHEGET_CRASH]

creationTime: 2016-06-23T22:33:30-07:00

dbUniqueID: 0

dbUniqueName: UnknownDBName

incidentID: 1

quarantineMode: "FULL Quarantine"

quarantinePlan: SYSTEM

quarantineReason: Crash

quarantineType: ASM_OFFLOAD_REBALANCE

remoteHostName: slc10vwt

rpmVersion: OSS_MAIN_LINUX.X64_160623

For high throughput writes originating from a non-container database:

CellCLI> list quarantine detail

name: 10

asmClusterId: b6063030c0ffef8dffcc99bd18b91a62

cellsrvChecksum: 9f98483ef351a1352d567ebb1ca8aeab

clientPID: 8377

comment: None

crashReason: ORA-600[CacheGet::process:C2C_OFFLOAD_CACHEGET_CRASH]

creationTime: 2016-06-23T23:47:01-07:00

conDbUniqueID: 0

conDbUniqueName: UnknownDBName

dbUniqueID: 4263312973

dbUniqueName: WRITES

incidentID: 25

quarantineMode: "FULL Quarantine"

quarantinePlan: SYSTEM

quarantineReason: Crash

quarantineType: HIGH_THROUGHPUT_WRITE

remoteHostName: slc10vwt

rpmVersion: OSS_MAIN_LINUX.X64_160623

For high throughput writes originating from a container database (CDB):

CellCLI> list quarantine detail

name: 10

asmClusterId: eff096e82317ff87bfb2ee163731f7f7

cellsrvChecksum: 9f98483ef351a1352d567ebb1ca8aeab

clientPID: 17206

comment: None

crashReason: ORA-600[CacheGet::process:C2C_OFFLOAD_CACHEGET_CRASH]

creationTime: 2016-06-24T12:59:06-07:00

conDbUniqueID: 4263312973

conDbUniqueName: WRITES

dbUniqueID: 0

dbUniqueName: UnknownDBName

incidentID: 25

quarantineMode: "FULL Quarantine"

quarantinePlan: SYSTEM

quarantineReason: Crash

quarantineType: HIGH_THROUGHPUT_WRITE

remoteHostName: slc10vwt

rpmVersion: OSS_MAIN_LINUX.X64_160623
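If you capture this output as text, a short script can pick out the cell-to-cell offload quarantines. The sketch below assumes the `attribute: value` layout shown in the examples above and that each record starts with its `name` attribute; the function names are hypothetical, and this is not an Oracle-supplied tool.

```python
C2C_QUARANTINE_TYPES = {"ASM_OFFLOAD_REBALANCE", "HIGH_THROUGHPUT_WRITE"}


def parse_quarantines(text):
    """Parse 'attribute: value' lines into one dict per quarantine."""
    records, current = [], None
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("CellCLI>"):
            continue  # skip blank lines and the echoed command
        key, sep, value = line.partition(":")  # split at the first colon only
        if not sep:
            continue
        key, value = key.strip(), value.strip().strip('"')
        if key == "name":  # assumption: every record begins with 'name'
            current = {}
            records.append(current)
        if current is not None:
            current[key] = value
    return records


def cell_to_cell_quarantines(records):
    """Return only quarantines created for cell-to-cell offload operations."""
    return [r for r in records if r.get("quarantineType") in C2C_QUARANTINE_TYPES]
```

Splitting at the first colon only keeps values such as `creationTime` and `crashReason`, which themselves contain colons, intact.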

1.2.13 Protection Against Data Corruption

Data corruptions, while rare, can have a catastrophic effect on a database, and therefore on a business.

Oracle Exadata System Software takes data protection to the next level by protecting business data, not just the physical bits.

The key approach to detecting and preventing corrupted data is block checking in which the storage subsystem validates the Oracle block contents. Oracle Database validates and adds protection information to the database blocks, while Oracle Exadata System Software detects corruptions introduced into the I/O path between the database and storage. The Storage Server stops corrupted data from being written to disk. This eliminates a large class of failures that the database industry had previously been unable to prevent.

Unlike other implementations of corruption checking, checks with Oracle Exadata System Software operate completely transparently. No parameters need to be set at the database or storage tier. These checks transparently handle all cases, including Oracle Automatic Storage Management (Oracle ASM) disk rebalance operations and disk failures.

1.2.14 Fast File Creation

File creation operations are offloaded to Oracle Exadata Storage Servers.

Operations such as CREATE TABLESPACE, which can create one or more files, have a significant increase in speed due to file creation offload.

File resize operations are also offloaded to the storage servers, which are important for auto-extensible files.

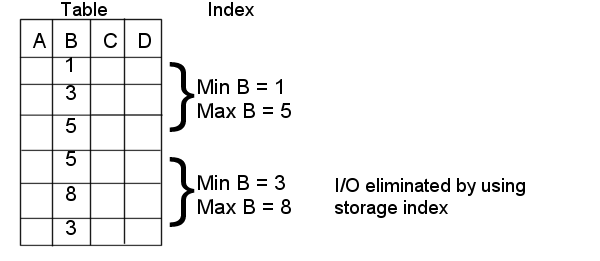

1.2.15 Storage Index

Oracle Exadata Storage Servers maintain a storage index which contains a summary of the data distribution on the disk.

The storage index is maintained automatically, and is transparent to Oracle Database. It is a collection of in-memory region indexes. Prior to Oracle Exadata System Software release 12.2.1.1.0, each region index stores summaries for up to eight columns. From release 12.2.1.1.0 onward, each region index can store summaries for up to 24 columns, although the maximum of 24 may not be achieved if set summaries are used. There is one region index for each 1 MB of disk space. Storage indexes work with any non-linguistic data type, and work with linguistic data types similar to non-linguistic indexes.

Each region index maintains the minimum and maximum values of the columns of the table. The minimum and maximum values are used to eliminate unnecessary I/O, also known as I/O filtering. The cell physical IO bytes saved by storage index statistic, available in the V$SYSSTAT and V$SESSTAT views, shows the number of bytes of I/O saved using the storage index. The content stored in one region index is independent of the other region indexes. This makes them highly scalable, and avoids latch contention.

Queries using the following comparisons are improved by the storage index:

- Equality (=)

- Inequality (<, !=, or >)

- Less than or equal (<=)

- Greater than or equal (>=)

- IS NULL

- IS NOT NULL

Oracle Exadata System Software automatically builds storage indexes after a query with a comparison predicate that is greater than the maximum or less than the minimum value for the column in a region, and would have benefited if a storage index had been present. Oracle Exadata System Software automatically learns which storage indexes would have benefited a query, and then creates the storage index automatically so that subsequent similar queries benefit.

In Oracle Exadata System Software release 12.2.1.1.0 and later, when data has been stored using the in-memory format columnar cache, Oracle Exadata Database Machine stores these columns compressed using dictionary encoding. For columns with fewer than 200 distinct values, the storage index creates a very compact in-memory representation of the dictionary and uses this compact representation to filter disk reads based on equality predicates. This feature is called set membership. A more limited filtering ability extends up to 400 distinct values.

For example, suppose a region of disk holds a list of customers in the United States and Canada. When you run a query looking for customers in Mexico, Oracle Exadata Storage Server can use the new set membership capability to improve the performance of the query by filtering out disk regions that do not contain customers from Mexico. In Oracle Exadata System Software releases earlier than 12.2.1.1.0, which do not have the set membership capability, a regular storage index would be unable to filter those disk regions.
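The customer example can be sketched as a per-region set summary. This is a conceptual model only: the function names are hypothetical, a Python set stands in for the compact dictionary representation, and the more limited filtering between 200 and 400 distinct values is not modeled.

```python
MAX_DISTINCT = 200  # set summaries apply to columns with fewer than 200 distinct values


def build_set_summary(values, max_distinct=MAX_DISTINCT):
    """Return the set of distinct values for a region, or None if too many."""
    distinct = set(values)
    return distinct if len(distinct) < max_distinct else None


def region_may_match(summary, wanted):
    """Without a summary the region must be read; otherwise test membership."""
    return True if summary is None else wanted in summary


# A region holding customers in the United States and Canada.
region_summary = build_set_summary(["United States", "Canada", "Canada"])
must_read = region_may_match(region_summary, "Mexico")
```

Here `must_read` is `False`, so the region can be skipped for an equality predicate on Mexico. A plain minimum/maximum summary could not skip it, because "Mexico" sorts between "Canada" and "United States".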

Note:

The effectiveness of storage indexes can be improved by ordering the rows based on columns that frequently appear in WHERE query clauses.

Note:

The storage index is maintained during write operations to uncompressed blocks and OLTP compressed blocks. Write operations to Exadata Hybrid Columnar Compression compressed blocks or encrypted tablespaces invalidate a region index, and only the storage index on a specific region. The storage index for Exadata Hybrid Columnar Compression is rebuilt on subsequent scans.

Example 1-1 Elimination of Disk I/O with Storage Index

The following figure shows a table and region indexes. The values in the table range from one to eight. One region index stores the minimum of 1 and the maximum of 5. The other region index stores the minimum of 3 and the maximum of 8.

For a query such as SELECT * FROM TABLE WHERE B<2, only the first set of rows match. Disk I/O is eliminated because the minimum and maximum of the second set of rows do not match the WHERE clause of the query.
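The pruning decision in this example can be sketched with the two region indexes described above. The `RegionIndex` class and `regions_to_read` function are hypothetical names for illustration, and only a few comparison operators are modeled.

```python
from dataclasses import dataclass


@dataclass
class RegionIndex:
    """Minimum and maximum of one column within one disk region."""
    minimum: int
    maximum: int


def regions_to_read(region_indexes, op, value):
    """Return positions of regions that could hold rows where column <op> value."""
    checks = {
        "<": lambda r: r.minimum < value,
        "<=": lambda r: r.minimum <= value,
        ">": lambda r: r.maximum > value,
        ">=": lambda r: r.maximum >= value,
        "=": lambda r: r.minimum <= value <= r.maximum,
    }
    may_match = checks[op]
    return [i for i, region in enumerate(region_indexes) if may_match(region)]


# Region indexes from the example: (min 1, max 5) and (min 3, max 8).
regions = [RegionIndex(1, 5), RegionIndex(3, 8)]
needed = regions_to_read(regions, "<", 2)  # WHERE B < 2
```

For `B < 2`, `needed` contains only the first region, so the I/O for the second region is skipped entirely.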

Example 1-2 Partition Pruning-like Benefits with Storage Index

In the following figure, there is a table named Orders with the columns Order_Number, Order_Date, Ship_Date, and Order_Item. The table is range partitioned by Order_Date column.

The following query looks for orders placed since January 1, 2015:

SELECT count(*) FROM Orders WHERE Order_Date >= to_date('2015-01-01', 'YYYY-MM-DD')

Because the table is partitioned on the Order_Date column, the preceding query avoids scanning unnecessary partitions of the table. Queries on Ship_Date do not benefit from Order_Date partitioning, but Ship_Date and Order_Number are highly correlated with Order_Date. Storage indexes take advantage of ordering created by partitioning or sorted loading, and can use it with the other columns in the table. This provides partition pruning-like performance for queries on the Ship_Date and Order_Number columns.

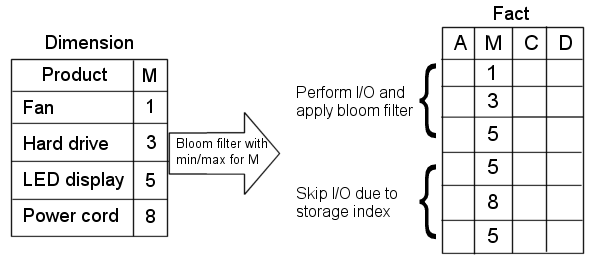

Example 1-3 Improved Join Performance Using Storage Index

Using storage index allows table joins to skip unnecessary I/O operations. For example, the following query would perform an I/O operation and apply a Bloom filter to only the first block of the fact table.

SELECT count(*) FROM fact, dim WHERE fact.m=dim.m AND dim.product='Hard drive'

The I/O for the second block of the fact table is completely eliminated by storage index as its minimum/maximum range (5,8) is not present in the Bloom filter.

1.3 Oracle Exadata System Software Components

This section provides a summary of the following Oracle Exadata System Software components.

- About Oracle Exadata System Software

  Unique software algorithms in Oracle Exadata System Software implement database intelligence in storage, PCI-based flash, and RDMA Network Fabric networking to deliver higher performance and capacity at lower costs than other platforms.

- About Cell Management

  Each cell in the Oracle Exadata Storage Server grid is individually managed with Cell Control Command-Line Interface (CellCLI).

- About Storage Server Security

  Security for Exadata Storage Servers is enforced by identifying which clients can access storage servers and grid disks.

- About Oracle Automatic Storage Management

  Oracle Automatic Storage Management (Oracle ASM) is the cluster volume manager and file system used to manage Oracle Exadata Storage Server resources.

- Maintaining High Performance During Storage Interruptions

- About Database Server Software

  Oracle software is installed on the Exadata database servers.

- About Oracle Enterprise Manager for Oracle Exadata

  Oracle Enterprise Manager provides a complete target that enables you to monitor Oracle Exadata, including configuration and performance, in a graphical user interface (GUI).

1.3.1 About Oracle Exadata System Software

Unique software algorithms in Oracle Exadata System Software implement database intelligence in storage, PCI-based flash, and RDMA Network Fabric networking to deliver higher performance and capacity at lower costs than other platforms.

Oracle Exadata Storage Server is a network-accessible storage device that contains Oracle Exadata System Software. Exadata software communicates with Oracle Database using a specialized iDB protocol, and provides both standard I/O functionality, such as block-oriented reads and writes, and advanced I/O functionality, including predicate offload and I/O Resource Management (IORM).

Each storage server has physical disks. The physical disk is an actual device within the storage server that constitutes a hard disk drive (HDD) or flash device. Each physical disk has a logical representation in the operating system (OS) known as a Logical Unit Number (LUN). Typically, there is a one-to-one relationship between physical disks and LUNs on all Exadata Storage Server models. However, on Exadata X10M Extreme Flash (EF) storage servers only, each of the four 30.72 TB capacity-optimized flash devices is configured with 2 LUNs, resulting in 8 LUNs on Exadata X10M EF storage servers.

A cell disk is an Oracle Exadata System Software abstraction built on the top of a LUN. After a cell disk is created from the LUN, it is managed by Oracle Exadata System Software and can be further subdivided into multiple grid disks.

Each grid disk is directly exposed to Oracle ASM for use in an Oracle ASM disk group. This level of virtualization enables multiple Oracle ASM clusters and multiple databases to share the same physical disk. This sharing provides optimal use of disk capacity and bandwidth.

Various metrics and statistics collected on the cell disk level enable you to evaluate the performance and capacity of storage servers. IORM schedules the cell disk access in accordance with user-defined policies.

The following image illustrates the main data storage components in a storage server (also called a cell).

- Oracle Exadata Storage Server includes physical disks, which can be hard disk drives (HDDs) or flash devices.

- Each physical disk is typically represented as a LUN in the cell OS (except for Exadata X10M EF storage servers only, where each capacity-optimized flash device contains 2 LUNs).

- The cell disk is a higher level of abstraction that represents the data storage area on each LUN that is managed by Oracle Exadata System Software. A LUN may contain only one cell disk.

- Each cell disk can contain multiple grid disks, which are directly available to Oracle ASM.
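The relationship between LUNs, cell disks, and grid disks summarized in the list above can be modeled in a few lines. This is a sketch of the hierarchy only: the class names, disk names, and sizes are hypothetical, and real cell disks and grid disks are created with CellCLI rather than with code like this.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GridDisk:
    """Slice of a cell disk that is exposed directly to Oracle ASM."""
    name: str
    size_gb: int


@dataclass
class CellDisk:
    """Abstraction built on exactly one LUN; subdivided into grid disks."""
    name: str
    lun: str
    size_gb: int
    grid_disks: List[GridDisk] = field(default_factory=list)

    def free_gb(self) -> int:
        return self.size_gb - sum(g.size_gb for g in self.grid_disks)

    def create_grid_disk(self, name: str, size_gb: int) -> GridDisk:
        if size_gb > self.free_gb():
            raise ValueError(f"{self.name}: only {self.free_gb()} GB free")
        grid_disk = GridDisk(name, size_gb)
        self.grid_disks.append(grid_disk)
        return grid_disk


# Hypothetical names and sizes, for illustration only.
cell_disk = CellDisk(name="CD_00_cell01", lun="0_0", size_gb=1000)
cell_disk.create_grid_disk("DATA_CD_00_cell01", 700)
cell_disk.create_grid_disk("RECO_CD_00_cell01", 200)
```

Carving one cell disk into several grid disks is what lets different Oracle ASM disk groups, and therefore different databases, share the same physical disk.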

Figure 1-3 Oracle Exadata Data Storage Components

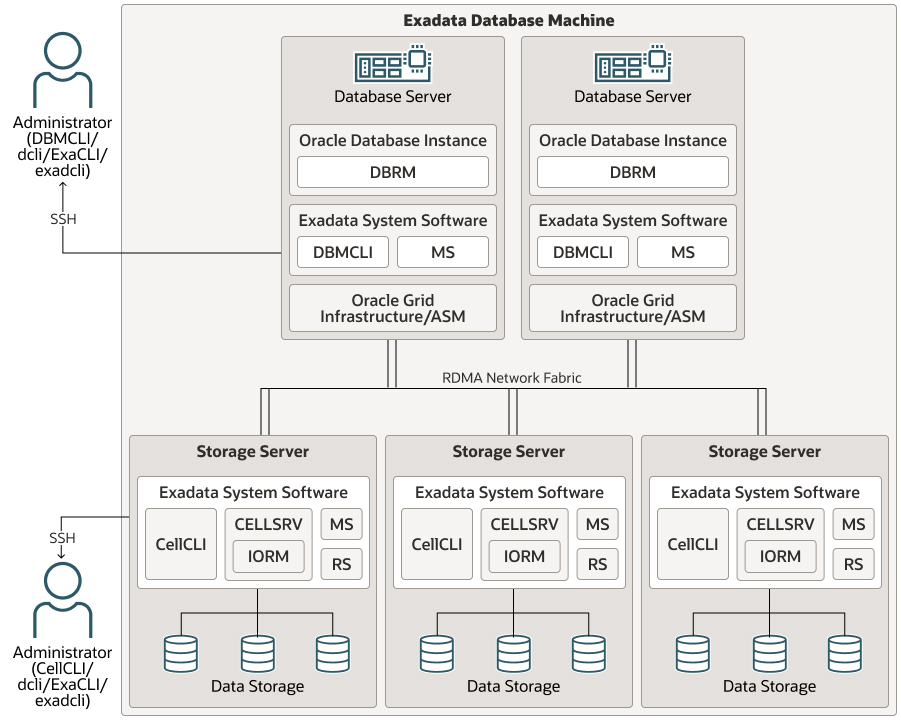

The following image illustrates the major software components in Oracle Exadata.

Figure 1-4 Software Components in Oracle Exadata

The figure illustrates the following environment:

- Single-instance or Oracle RAC databases access Oracle Exadata Storage Servers using the RDMA Network Fabric. Each database server runs the Oracle Database and Oracle Grid Infrastructure software. Resources are managed within each database instance by Oracle Database Resource Manager (DBRM).

- The database servers also include Oracle Exadata System Software components, such as Management Server (MS) and a command-line management interface (DBMCLI).

- Storage servers contain physical data storage devices, along with cell-based utilities and processes from Oracle Exadata System Software, including:

  - Cell Server (CELLSRV) is the primary Exadata storage server software component and provides the majority of Exadata storage services. It serves simple block requests, such as database buffer cache reads, and facilitates Smart Scan requests, such as table scans with projections and filters. CELLSRV implements Exadata I/O Resource Management (IORM), which works in conjunction with Oracle Database resource management to meter out I/O bandwidth to the various databases and consumer groups that are issuing I/Os. CELLSRV also collects numerous statistics relating to its operations. CELLSRV is a multithreaded server and typically uses the largest portion of CPU resources on a storage server.

  - Offload server (CELLOFLSRV <version>) is a helper process to CELLSRV that processes offload requests from a specific Oracle Database version. The offload servers enable a storage server to support all offload operations from multiple Oracle Database versions. The offload servers automatically run in conjunction with CELLSRV, and they require no additional configuration or maintenance.

  - Management Server (MS) provides standalone Oracle Exadata System Software management and configuration functions. MS works in cooperation with and processes most of the commands from the Cell Control Command-Line Interface (CellCLI), which is the primary user interface to administer, manage, and query server status. MS is also responsible for sending alerts, and it collects some statistics in addition to those collected by CELLSRV.

  - Restart Server (RS) monitors the CELLSRV, offload server, and MS processes and restarts them, if necessary.

- The CellCLI utility is the primary management interface for Oracle Exadata System Software on each storage server, while each database server contains a similar utility called DBMCLI. The dcli utility enables administrators to perform management operations across multiple servers, while ExaCLI and exadcli facilitate remote management of Exadata servers, either individually or as a group.

1.3.2 About Cell Management

Each cell in the Oracle Exadata Storage Server grid is individually managed with Cell Control Command-Line Interface (CellCLI).

The CellCLI utility provides a command-line interface to the cell management functions, such as cell initial configuration, cell disk and grid disk creation, and performance monitoring. The CellCLI utility runs on the cell, and is accessible from a client computer that has network access to the storage cell or is directly connected to the cell. The CellCLI utility communicates with Management Server to administer the storage cell.

To access the cell, you should either use Secure Shell (SSH) access, or local access, for example, through a KVM (keyboard, video or visual display unit, mouse) switch. SSH allows remote access, but local access might be necessary during the initial configuration when the cell is not yet configured for the network. With local access, you have access to the cell operating system shell prompt and use various tools, such as the CellCLI utility, to administer the cell.

You can run the same CellCLI commands remotely on multiple cells with the dcli utility.

To manage a cell remotely from a compute node, you can use the ExaCLI utility. ExaCLI enables you to run most CellCLI commands on a cell. This is necessary if you do not have direct access to a cell to run CellCLI, or if SSH service on the cell has been disabled. To run commands on multiple cells remotely, you can use the exadcli utility.

See Also:

- Using the ExaCLI Utility in Oracle Exadata Database Machine Maintenance Guide, for additional information about managing cells remotely

- Using the exadcli Utility in Oracle Exadata Database Machine Maintenance Guide, for additional information about managing multiple cells remotely

1.3.3 About Storage Server Security

Security for Exadata Storage Servers is enforced by identifying which clients can access storage servers and grid disks.

Clients include Oracle ASM instances, database instances, and clusters. When creating or modifying grid disks, you can configure the Oracle ASM owner and the database clients that are allowed to use those grid disks.

1.3.4 About Oracle Automatic Storage Management

Oracle Automatic Storage Management (Oracle ASM) is the cluster volume manager and file system used to manage Oracle Exadata Storage Server resources.

Oracle ASM provides enhanced storage management by:

- Striping database files evenly across all available storage cells and disks for optimal performance.

- Using mirroring and failure groups to avoid any single point of failure.

- Enabling dynamic add and drop capability for non-intrusive cell and disk allocation, deallocation, and reallocation.

- Enabling multiple databases to share storage cells and disks.

The following topics provide a brief overview of Oracle ASM:

- Oracle ASM Disk Groups

  An Oracle Automatic Storage Management (Oracle ASM) disk group is the primary storage abstraction within Oracle ASM, and is composed of one or more grid disks.

- Oracle ASM Failure Group

  An Oracle ASM failure group is a subset of disks in an Oracle ASM disk group that can fail together because they share the same hardware.

- Maximum Availability with Oracle ASM

  Oracle recommends high redundancy Oracle ASM disk groups, and file placement configuration which can be automatically deployed using Oracle Exadata Deployment Assistant.

1.3.4.1 Oracle ASM Disk Groups

An Oracle Automatic Storage Management (Oracle ASM) disk group is the primary storage abstraction within Oracle ASM, and is composed of one or more grid disks.

Oracle Exadata Storage Server grid disks appear to Oracle ASM as individual disks available for membership in Oracle ASM disk groups. Whenever possible, grid disk names should correspond closely with Oracle ASM disk group names to assist in problem diagnosis between Oracle ASM and Oracle Exadata System Software.

Typically, Oracle Exadata is configured with the following Oracle ASM disk groups:

- DATA is the primary data disk group.

- RECO is the primary recovery disk group, which contains the Oracle Database Fast Recovery Area (FRA).

- SPARSE is an optionally configured sparse disk group that is required to support Exadata snapshots.

- XTND is the default name for the disk group that is used to collect storage from Exadata XT (Extended) storage servers.

- DBFS is the system disk group that is typically configured on systems prior to Exadata X7. The DBFS disk group is primarily used to store the shared Oracle Clusterware files (Oracle Cluster Registry and voting disks) and provide some space to host Oracle Database File System (DBFS). This disk group is not configured on Exadata X7 and later systems.

To take advantage of Oracle Exadata System Software features, such as predicate processing offload, the disk groups must contain only Oracle Exadata Storage Server grid disks, the tables must be fully inside these disk groups, and each disk group should have the cell.smart_scan_capable attribute set to TRUE.

Note:

The Oracle Database and Oracle Grid Infrastructure software must be release 12.1.0.2.0 BP3 or later when using sparse grid disks.

1.3.4.2 Oracle ASM Failure Group

An Oracle ASM failure group is a subset of disks in an Oracle ASM disk group that can fail together because they share the same hardware.

Oracle ASM considers failure groups when making redundancy decisions.

For Oracle Exadata Storage Servers, all grid disks, which consist of the Oracle ASM disk group members and candidates, can effectively fail together if the storage cell fails. Because of this scenario, all Oracle ASM grid disks sourced from a given storage cell should be assigned to a single failure group representing the cell.

For example, if all grid disks from two storage cells, A and B, are added to a single Oracle ASM disk group with normal redundancy, then all grid disks on storage cell A are designated as one failure group, and all grid disks on storage cell B are designated as another failure group. This enables Oracle Exadata System Software and Oracle ASM to tolerate the failure of either storage cell.

Failure groups for Oracle Exadata Storage Server grid disks are set by default so that the disks on a single cell are in the same failure group, making correct failure group configuration simple for Oracle Exadata Storage Servers.

You can define the redundancy level for an Oracle ASM disk group when creating a disk group. An Oracle ASM disk group can be specified with normal or high redundancy. Normal redundancy double mirrors the extents, and high redundancy triple mirrors the extents. Oracle ASM normal redundancy tolerates the failure of a single cell or any set of disks in a single cell. Oracle ASM high redundancy tolerates the failure of two cells or any set of disks in two cells. Base your redundancy setting on your desired protection level. When choosing the redundancy level, ensure the post-failure I/O capacity is sufficient to meet the redundancy requirements and performance service levels. Oracle recommends using three cells for normal redundancy. This ensures the ability to restore full redundancy after cell failure. Consider the following:

- If a cell or disk fails, then Oracle ASM automatically redistributes the cell or disk contents across the remaining disks in the disk group as long as there is enough space to hold the data. For an existing disk group using Oracle ASM redundancy, the USABLE_FILE_MB and REQUIRED_MIRROR_FREE_MB columns in the V$ASM_DISKGROUP view give the amount of usable space and the space required to restore redundancy, respectively.

- If a cell or disk fails, then the remaining disks should be able to generate the IOPS necessary to sustain the performance service level agreement.
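The space consideration can be sketched as a simple check. The following Python example is a simplified model, not Oracle ASM's actual accounting: it assumes one failure group per cell, treats capacity and usage as plain per-cell totals in MB, and uses a hypothetical function name. It also shows why three cells are recommended for normal redundancy: with only two cells, the single survivor cannot hold two copies in separate failure groups.

```python
# Number of extent copies kept at each redundancy level.
COPIES = {"normal": 2, "high": 3}


def can_restore_redundancy(capacity_mb, used_mb, failed_cell, redundancy="normal"):
    """Return True if full redundancy could be restored after one cell fails.

    Simplified model (assumption): redundancy can be restored when enough
    failure groups survive and their combined free space can absorb the
    failed cell's contents.
    """
    survivors = [cell for cell in capacity_mb if cell != failed_cell]
    if len(survivors) < COPIES[redundancy]:
        return False
    free_mb = sum(capacity_mb[cell] - used_mb[cell] for cell in survivors)
    return free_mb >= used_mb[failed_cell]


# Hypothetical three-cell configuration, 100 MB per cell for readability.
capacity = {"cell1": 100, "cell2": 100, "cell3": 100}
lightly_used = {"cell1": 40, "cell2": 40, "cell3": 40}
heavily_used = {"cell1": 70, "cell2": 70, "cell3": 70}

ok_light = can_restore_redundancy(capacity, lightly_used, "cell1")
ok_heavy = can_restore_redundancy(capacity, heavily_used, "cell1")
ok_two_cells = can_restore_redundancy(
    {"cell1": 100, "cell2": 100}, {"cell1": 10, "cell2": 10}, "cell1"
)
```

In a real system, rely on the V$ASM_DISKGROUP view rather than a model like this one.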

After a disk group is created, the redundancy level of the disk group cannot be changed. To change the redundancy of a disk group, you must create another disk group with the appropriate redundancy, and then move the files.

Each Exadata Cell is a failure group. A normal redundancy disk group must contain at least two failure groups. Oracle ASM automatically stores two copies of the file extents, with the mirrored extents placed in different failure groups. A high redundancy disk group must contain at least three failure groups. Oracle ASM automatically stores three copies of the file extents, with each file extent in separate failure groups.
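To illustrate why copies in separate failure groups tolerate cell failures, the following Python sketch places extent copies and then tests which failures leave every extent readable. The round-robin placement is an assumption made for this example only; it is not the algorithm that Oracle ASM uses. The function and cell names are hypothetical.

```python
from itertools import combinations

# Number of extent copies kept at each redundancy level.
COPIES = {"normal": 2, "high": 3}


def place_extent(extent_id, failure_groups, redundancy):
    """Pick distinct failure groups for the copies of one extent.

    Round-robin placement is an illustrative assumption, not ASM's method.
    """
    copies = COPIES[redundancy]
    if len(failure_groups) < copies:
        raise ValueError(f"{redundancy} redundancy needs at least {copies} failure groups")
    start = extent_id % len(failure_groups)
    return [failure_groups[(start + i) % len(failure_groups)] for i in range(copies)]


def survives(placements, failed_groups):
    """True if every extent keeps at least one copy outside the failed groups."""
    failed = set(failed_groups)
    return all(any(group not in failed for group in copies) for copies in placements)


cells = ["cell1", "cell2", "cell3"]
normal = [place_extent(i, cells, "normal") for i in range(6)]
high = [place_extent(i, cells, "high") for i in range(6)]

normal_single = all(survives(normal, [cell]) for cell in cells)
normal_double = all(survives(normal, pair) for pair in combinations(cells, 2))
high_double = all(survives(high, pair) for pair in combinations(cells, 2))
```

In this model, normal redundancy survives any single cell failure but not every double failure, while high redundancy survives any two cell failures.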

System reliability can diminish if your environment has an insufficient number of failure groups. A small number of failure groups, or failure groups of uneven capacity, can lead to allocation problems that prevent full use of all available storage.


1.3.4.3 Maximum Availability with Oracle ASM

Oracle recommends high redundancy Oracle ASM disk groups and a file placement configuration that can be deployed automatically using Oracle Exadata Deployment Assistant (OEDA).

High redundancy can be configured for DATA, RECO, or any other Oracle ASM disk group with a minimum of 3 storage cells. Starting with Exadata Software release 12.1.2.3.0, the voting disks can reside in a high redundancy disk group, and additional quorum disks (essentially equivalent to voting disks) can reside on database servers if there are fewer than 5 Exadata storage cells.

Maximum availability architecture (MAA) best practice uses two main Oracle ASM disk groups: DATA and RECO. The disk groups are organized as follows:

- The disk groups are striped across all disks and Oracle Exadata Storage Servers to maximize I/O bandwidth and performance, and to simplify management.

- The DATA and RECO disk groups are configured for high (3-way) redundancy.

The benefits of high redundancy disk groups are illustrated by the following outage scenarios:

- Double partner disk failure: Protection against loss of the database and Oracle ASM disk group due to a disk failure followed by a second partner disk failure.

- Disk failure when Oracle Exadata Storage Server is offline: Protection against loss of the database and Oracle ASM disk group when a storage server is offline and one of the storage server's partner disks fails. The storage server may be offline because of Exadata storage planned maintenance, such as Exadata rolling storage server patching.

- Disk failure followed by disk sector corruption: Protection against data loss and I/O errors when latent disk sector corruptions exist and a partner storage disk is unavailable either due to planned maintenance or disk failure.

If the voting disk resides in a high redundancy disk group that is part of the default Exadata high redundancy deployment, the cluster and database will remain available for the above failure scenarios. If the voting disk resides on a normal redundancy disk group, then the database cluster will fail and the database has to be restarted. You can eliminate that risk by moving the voting disks to a high redundancy disk group and creating additional quorum disks on database servers.

Oracle recommends high redundancy for ALL (DATA and RECO) disk groups because it provides maximum application availability against storage failures and operational simplicity during a storage outage. In contrast, if all disk groups were configured with normal redundancy and two partner disks fail, all clusters and databases on Exadata will fail and you will lose all your data (normal redundancy does not tolerate double partner disk failures). Other than better storage protection, the major difference between high redundancy and normal redundancy is the amount of usable storage and write I/Os. High redundancy requires more space, and has three write I/Os instead of two. The additional write I/O normally has negligible impact with Exadata smart write-back flash cache.
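The space and write I/O trade-off can be expressed as simple arithmetic. The following Python sketch is a rough approximation under stated assumptions: it divides raw capacity by the number of mirror copies and ignores the free space that must be reserved for restoring redundancy after a failure. The function name and the 300 TB figure are hypothetical.

```python
def redundancy_cost(raw_tb, redundancy):
    """Approximate usable capacity and physical writes per logical write.

    Assumption: usable space is raw space divided by the copy count,
    with no allowance for space reserved to restore redundancy.
    """
    copies = {"normal": 2, "high": 3}[redundancy]
    return {"usable_tb": raw_tb / copies, "writes_per_logical_write": copies}


normal_cost = redundancy_cost(300, "normal")
high_cost = redundancy_cost(300, "high")
```

For the same 300 TB of raw storage, this model gives 150 TB usable with two writes under normal redundancy and 100 TB usable with three writes under high redundancy.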

The following table describes that redundancy option, as well as others, and the relative availability trade-offs. The table assumes that the voting disks reside in a high redundancy disk group. For existing high redundancy disk group configurations, refer to Oracle Exadata Database Machine Maintenance Guide to migrate the voting disks to a high redundancy disk group.

| Redundancy Option | Availability Implications | Recommendation |
|---|---|---|
| High redundancy for ALL (DATA and RECO) | Zero application downtime and zero data loss for the preceding storage outage scenarios if voting disks reside in high redundancy disk group. If voting disks currently reside in normal redundancy disk group, refer to Oracle Exadata Database Machine Maintenance Guide to migrate them to high redundancy disk group. | Use this option for best storage protection and operational simplicity for mission-critical applications. Requires more space for higher redundancy. |
| High redundancy for DATA only | Zero application downtime and zero data loss for preceding storage outage scenarios. This option requires an alternative archive destination. | Use this option for best storage protection for DATA with slightly higher operational complexity. More available space than high redundancy for ALL. Refer to My Oracle Support note 2059780.1 for details. |
| High redundancy for RECO only | Zero data loss for the preceding storage outage scenarios. | Use this option when longer recovery times are acceptable for the preceding storage outage scenarios. Recovery options include the following: |
| Normal Redundancy for ALL (DATA and RECO). Note: Cross-disk mirror isolation by using ASM disk group content type limits an outage to a single disk group when two disk partners are lost in a normal redundancy group that share physical disks and storage servers. | The preceding storage outage scenarios resulted in failure of all Oracle ASM disk groups. However, using cross-disk group mirror isolation the outage is limited to one disk group. Note: This option is not available for eighth or quarter racks. | Oracle recommends a minimum of high redundancy for DATA only. Use the Normal Redundancy for ALL option when the primary database is protected by an Oracle Data Guard standby database deployed on a separate Oracle Exadata Database Machine or when the Exadata Database Machine is servicing only development or test databases. Oracle Data Guard provides real-time data protection and fast failover for storage failures. If Oracle Data Guard is not available and the DATA or RECO disk groups are lost, then leverage recovery options described in My Oracle Support note 1339373.1. |

The optimal file placement for an MAA setup is:

- Oracle Database files — DATA disk group

- Flashback log files, archived redo files, and backup files — RECO disk group

- Redo log files — First high redundancy disk group. If no high redundancy disk group exists, then redo log files are multiplexed across the DATA and RECO disk groups.

- Control files — First high redundancy disk group. If no high redundancy disk group exists, then use one control file in the DATA disk group. The backup control files should reside in the RECO disk group, and RMAN CONFIGURE CONTROLFILE AUTOBACKUP ON should be set.

- Server parameter files (SPFILE) — First high redundancy disk group. If no high redundancy disk group exists, then SPFILE should reside in the DATA disk group. SPFILE backups should reside in the RECO disk group.

- Oracle Cluster Registry (OCR) and voting disks for Oracle Exadata Database Machine Full Rack and Oracle Exadata Database Machine Half Rack — First high redundancy disk group. If no high redundancy disk group exists, then the files should reside in the DATA disk group.

- Voting disks for Oracle Exadata Database Machine Quarter Rack or Eighth Rack — First high redundancy disk group, otherwise in normal redundancy disk group. If there are fewer than 5 Exadata storage cells with high redundancy disk group, additional quorum disks will be stored on Exadata database servers during OEDA deployment. Refer to Oracle Exadata Database Machine Maintenance Guide to migrate voting disks to high redundancy disk group for existing high redundancy disk group configurations.

- Temporary files — First normal redundancy disk group. If the high redundancy for ALL option is used, then use the first high redundancy disk group.

- Staging and non-database files — DBFS disk group or ACFS volume

- Oracle software (including audit and diagnostic destinations) — Exadata database server local file system locations configured during OEDA deployment
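Several of the placement rules above depend on whether a high redundancy disk group exists. The following Python sketch encodes a subset of those rules as a lookup. It is illustrative only: the `place_file` function and the file type labels are hypothetical, and "first" is interpreted as the first matching entry in the order the disk groups are listed, which is an assumption.

```python
def place_file(file_type, disk_groups):
    """Return the disk groups that should hold a file type.

    disk_groups maps a disk group name to "normal" or "high", listed in
    order. Hypothetical helper covering only some of the rules above.
    """
    if not disk_groups:
        raise ValueError("at least one disk group is required")
    first_high = next((g for g, r in disk_groups.items() if r == "high"), None)
    first_normal = next((g for g, r in disk_groups.items() if r == "normal"), None)

    if file_type == "datafile":
        return ["DATA"]
    if file_type in ("flashback_log", "archived_redo", "backup"):
        return ["RECO"]
    if file_type == "redo_log":
        # Without a high redundancy group, multiplex across DATA and RECO.
        return [first_high] if first_high else ["DATA", "RECO"]
    if file_type in ("control_file", "spfile"):
        return [first_high] if first_high else ["DATA"]
    if file_type == "temp_file":
        # Prefer a normal redundancy group; fall back when all are high.
        return [first_normal] if first_normal else [first_high]
    raise ValueError(f"no rule for file type: {file_type}")


all_normal = {"DATA": "normal", "RECO": "normal"}
data_high = {"DATA": "high", "RECO": "normal"}
all_high = {"DATA": "high", "RECO": "high"}

redo_all_normal = place_file("redo_log", all_normal)
redo_data_high = place_file("redo_log", data_high)
temp_data_high = place_file("temp_file", data_high)
temp_all_high = place_file("temp_file", all_high)
```

OEDA applies the actual placement during deployment, so a lookup like this is useful only for reasoning about the rules.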

Related Topics

- Database High Availability Checklist

- Configuration Prerequisites and Operational Steps for Higher Availability for a RECO disk group or Fast Recovery Area Failure (My Oracle Support Doc ID 2059780.1)

- Operational Steps for Recovery after Losing a Disk Group in an Exadata Environment (My Oracle Support Doc ID 1339373.1)


1.3.5 Maintaining High Performance During Storage Interruptions

Exadata is engineered to deliver high performance by intelligently managing data across multiple storage tiers and caches. Early Exadata models feature high-performance low-latency flash storage, while later models deliver higher performance and extremely low latency by adding persistent memory (PMEM) or Exadata RDMA Memory (XRMEM). During normal operations, Exadata intelligently manages the storage tiers to ensure that the most relevant data uses the storage location with the highest performance and lowest latency. However, special features also ensure that high performance and low I/O latency are maintained when storage interruptions occur, regardless of whether the event is planned or unplanned.

One of the most common types of unplanned storage events is the failure of a flash device or hard disk, which can come in a variety of forms, including outright hardware failure, predictive failure, confinement, and so on. Other unplanned storage events can arise from different hardware or software component failures, such as an Operating System kernel crash that results in a cell reboot. The most common type of planned storage event is a storage server software update. Storage server software updates are performed in a rolling manner, where one cell is updated and re-synchronized before moving on to the next cell.

Whatever the storage interruption, I/O latency is primarily impacted by two factors:

- Additional I/O load on the system required to deal with the interruption.

- Cache misses caused by the interruption.

Exadata deals with these impacts using a suite of measures as follows:

Managing the I/O load associated with a storage interruption

- When storage events occur, Exadata automatically orchestrates the appropriate response to efficiently maintain or restore data redundancy. By using the right approach for each situation, Exadata minimizes the impact of the I/O load required to maintain redundancy:

  - When a hard drive fails, Exadata automatically drops the disk from Oracle ASM. This action automatically triggers an ASM rebalance operation, which restores redundancy to the ASM disk group. The same occurs when failure impacts ASM disks that reside on a flash drive.

  - If a hard drive displays poor performance or enters a predictive failure state, Exadata provides the option to proactively drop the disk and perform a rebalance to maintain redundancy before the drive is replaced.

  - When using Exadata Smart Flash Cache in write-back mode, Exadata automatically maintains metadata that describes the cache contents. When a flash drive failure impacts the cache, Exadata automatically repopulates the cache after the device is replaced. Known as resilvering, this process repopulates the cache by reading from surviving mirrors using highly efficient cell-to-cell direct data transfers over the RDMA network fabric.

  - When storage comes back online after a short-term interruption, Exadata automatically instructs ASM to perform a resync operation to restore redundancy. A resync operation uses a bitmap that tracks storage extent changes. For short-term interruptions, such as those associated with software updates or cell reboots, a resync is a very efficient way to restore redundancy by copying just the changed data.

-

-