41.3 Performance Tuning Applicable to ALM

The following paragraphs provide tips to manage the performance of your ALM environment.

Enhance Performance with Large Payment Schedule Metadata

ALM engine takes a huge time to internally process when there is a huge volume of payment schedule metadata. As per the current design Engine queries a payment schedule table for each instrument record which would have a performance impact once instrument records grow in size. As a resolution to this issue, the engine would cache all payment schedule data at once instead and query the payment schedule table every time an instrument record is read.

In the 8.0.5.0.32 version, a new accessible table called "FSI_ALM_PROCESS_COMMON_CONFIGS " was incorporated to optimize the performance of the ALM process. This enhancement is specifically designed to efficiently handle a significant volume of payment schedules with a multiprocessing feature enabled through the process tuning screen.

This feature can be activated by manually populating the required details into the “FSI_ALM_PROCESS_COMMON_CONFIGS” table.

This feature becomes imperative under the circumstances where the ALM process is configured to operate according to the TP (Transfer Pricing) rule along with the methodology selected as the "Cash Flow Method." In the situation where the ALM process is failing with ORA errors - specifically the ORA-03146 error indicating an invalid buffer length for a TTC field, or the ORA-03106 error signifying a critical two-task communication protocol error, it becomes imperative to activate this feature.

Note:

The required payment scheduled data is getting cached for the performance enhancement. Hence it is imperative to allocate an adequate amount of heap memory, commensurate with the volume of payment schedule data being considered for the given process. If there is sufficient heap memory is available, then process can be executed seamlessly. If require more heap memory, then an additional 120MB of heap memory is necessary to allocate for each one million units of payment schedule data. The increments are usually handled in multiples of 128 MB for heap.You can configure the Infrastructure Application Memory settings as follows, and restart all OFSAA server after setting below changes and before execution of the process:

- Locate the .profile file.

- Edit below 2 X_ARGS fields in this file for customizing memory settings and garbage

collector settings depends on the hardware configuration.

X_ARGS_RLEXE="-Xms2g -Xmx2g -XX:+UseAdaptiveSizePolicy - XX:MaxPermSize=1024M -XX:+UseParallelOldGC -XX:+DisableExplicitGC" X_ARGS_RNEXE="-Xms2g -Xmx2g -XX:+UseAdaptiveSizePolicy - XX:MaxPermSize=1024M -XX:+UseParallelOldGC -XX:+DisableExplicitGC"

Note:

Found the above variables setting to Increase the memory on below Oracle reference documents.

https://docs.oracle.com/cd/E99053_01/PDF/8.1.0.x/8.1.0.0.0/LRS_PerfTun/3 _OFSAAI_Application_Performance_Tuning.htm

https://docs.oracle.com/en/industries/financial-services/ofs-analyticalapplications/analytical-applications-infrastructure/812/aaing/configureinfrastructure-server-memory.html#GUID-112C32F6-F947-4C0F-B051- 92C24DAD7F40

Doc ID 2349295.1

Invalid Key Processing Data

Key processing dimensions considered for ALM processing, you cannot use default values like Missing

(0) or Others (-1) in the corresponding instrument tables, otherwise, the Engine will exhibit undefined behavior or lead to hung state during process execution.

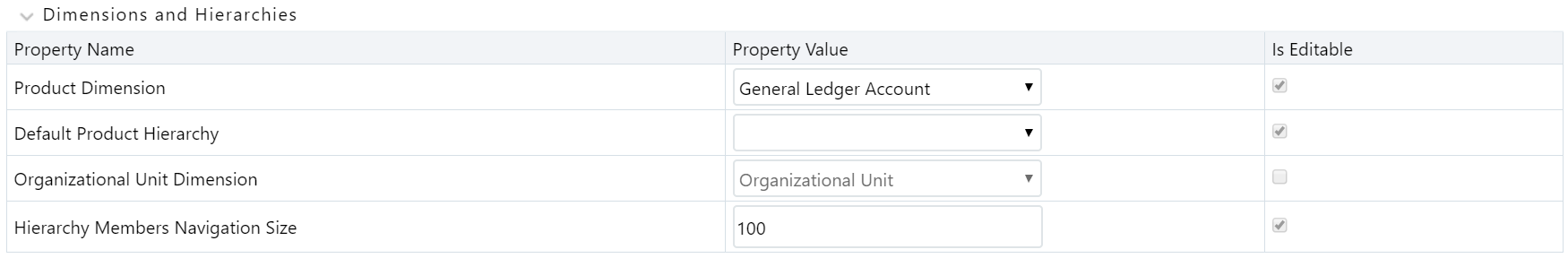

For example, if General Ledger Account is selected as Product Dimension and Organizational Unit as Organizational Unit Dimension, the Instrument Table Dimensions should contain valid values (that is, Non 0 or -1) for the process to execute successfully. Including these values will cause the Engine to hang during the Source data fetching and processing

Figure 41-15 Example of Invalid Processing Data

Process Cash Flow Clean up

If you observe a huge amount of data present in FSI_O_PROCESS_CASH_FLOWS

for your current process ID, then you can decide to delete cash flows from this table

for that process ID before rerunning the process.

Validate Node Map

An ALM process is an assumption driven execution. Based on process configuration, it reads business assumptions from Prepayment, Discount Rate, Product Characteristic, Pricing Margin rules, and soon. In general, these assumptions can be defined at either node or leaf level.

Based on the configuration, the assumptions defined at node level can be inherited by

itschildren which means the same definition is applied to all of its active children .

As part of this process, it is necessary to execute the ALM Validate Node Map

(VALIDATE_NODE_MAP_ALM) package.

By default, the ALM Engine executes this package whenever a process is executed or re-executed. Execution may take a long time when assumptions are defined for a large number of dimension members or are based on a very large hierarchy. In general, ensure that when defining your dimension member pool and hierarchies to keep the number of members to a minimum.

If no changes are made to the underlying hierarchies, execution of this package can be

skipped (after it has been run at least once). Users can indicate when to skip execution

of this procedure by adding the ALM Process ID or IDs as members of the Simple Dimension

Process Identifier Code through the dimension management user interface. You

can add a list of Process IDs for which the VALIDATE_NODE_MAP_ALM

package execution can be excluded by adding multiple members. During the ALM process

execution, the ALM Engine queries this underlying metadata table. If the process ID is

present, the ALM engine will not re-execute the VALIDATE_NODE_MAP_ALM

package. You should remove these dimension entries after any changes are made to

hierarchies so the procedure can run and refresh the node map.

Operating System-Level Tuning

The operating system level performance tuning is related to Semaphores and shared memory settings.

Semaphores

Linux Semaphore settings must be set to optimal under the SEMMSL configuration value.

Shared Memory

The ALM Engine consumes shared memory during any ALM process execution. It is crucial to

check the availability of current shared memory and tune it accordingly. Shared memory

kernel parameters must be set to optimal in the (ofsrm.ini)

configuration file.

An out of shared memory error may be reported in ALM Engine log files during process

execution. In such cases, verify and update shared memory size by updating the

SharedMemory parameter that can be found in the ofsrm.ini file which is located in the

$FIC_DB_HOME/conf directory. The default value of this parameter is

16500 KB.

Database Level Tuning

Database Level performance tuning describes a group of activities used to optimize and standardize the performance of a database. This can be improved using the following options:

Simple View vs Materialized View

If ALM processes a huge volume of source records; a significant number of hits to the

viewscreated on REV registration tables (such as, REV_TAB_COLUMNS_V)

can result in generating many I/O and cluster waits. These waits can spend too much

process execution time querying these views only. If this occurs, change the view to a

materialized view. However, you will need to maintain the changes post-installation

during any future upgrade or install.

Cursor Sharing

Cursor handling within the ALM Engine can be implemented using the CursorSharingMode entry in the ofsrm.ini file as mentioned below. It is observed that setting this value to FORCE results in the best performance.

- Update the CursorSharingMode entry in the ofsrm.ini file (File Location: $FIC_DB_HOME/conf) with the cursor sharing parameter (EXACT, FORCE, and SIMILAR).

- The engine reads the value against CursorSharingMode and sets

CURSOR_SHAREat that Database Session level accordingly. - The cursor level setting is applicable only for the process/session. The Engine

executes thefol- lowing statement if a value set against the

CursorSharingMode.

ALTER SESSION SET CURSOR_SHARING= <Input Value in upper case><Input Values> can be: EXACT, FORCE, SIMILAR

- If no value is supplied, then the Engine will not alter cursor sharing for that session orprocess.

Process Cash Flows Indexes Optimization

While persisting cash flows, the existing unique index on

FSI_O_PROCESS_CASH_FLOWS can be dropped and an index on

RESULT_SYS_ID should be created before running an ALM

process. Dropping the index on FSI_O_PROCESS_CASH_FLOWS is recommended

when outputting cash flows for a large number of instrument records. After the process

completes, the index should be added back.

Recommendations for Performance Improvement

While configuring an ALM process, you can choose to output detailed cash flows (either

all or partial). This data is stored in the FSI_O_PROCESS_CASH_FLOWS

table. When a process is a re-run either for the same As Of Date or for a different

date, existing data for that process is first deleted before new calculations begin.

When the volume of data is high this can impact overall performance. The following

recommendations will help improve this:

- Create a non-unique index on column

RESULT_SYS_IDof the tableFSI_O_PROCESS_CASH_- FLOWS. The index is expected to make DELETE of existing data faster when a process is a re- run. FSI_O_PROCESS_CASH_FLOWScan be partitioned byRESULT_SYS_ID. Automatic List Parti- tioning can be used so that partitions be added automatically when a new process is defined andexecuted.- Before executing an ALM process, existing data for that process can be removed

from

FSI_O_PROCESS_CASH_FLOWSby using DELETE of TRUNCATE PARTITION. This will ensure the engine does not spend time doing that activity.

Some other server and database level settings that can improve performance are:

REDOLog sizeof the database can be increased. This will reduce log switch waits and improve overall time for analyticaloperations.CURSOR_SHARINGparameter of the database can be set to FORCE. By doing this the database will try to convert SQL queries generated by cash flow engine to use bind variables which will reduce hard parses and can improve performance.

These suggestions are guidelines. Consult with your database administrators and other system personnel before implementing, taking your specific infrastructure and other requirementsinto consideration.