6 Load to Production Environment

After completing your conversion of data and validating all the resultant data, the last step is to promote the data into your production environment, also known as "lift and shift". This process will need to be performed in coordination with the Oracle Cloud Operations team. Log an SR to schedule with that team.

The extract of data from the non-production environment to the production environment is a full export of the schema and a full overwrite of the production schema. Therefore, you should ensure all data you do not want promoted from the schema has been removed, such as any data in the integration queue tables or any tran data records generated as part of PO or customer order conversion.

Integration queue tables, used for RIB publication, may have data if triggers were not disabled prior to running the conversion processes and if so will need to be cleaned up. As well, details on the publication tables also needs to be set up correctly for future integration. It is assumed that this would normally occur on the final day of cutover after completing multiple trial, or mock, conversions. In addition to clearing the integration queue tables, whether you disabled the triggers or not, the published indicator must be marked as Y (published) in the integration publishing tables to ensure future integration flows as expected.

To do both of these tasks this, once data conversion is complete and before the lift and shift of the converted data into your production environment, execute the INIT_PUBLISHING task in the System Administration screen. This task will clean up MFQUEUE tables if triggers were not disabled and initialize Merchandising publishing tables for the converted data. Details on how to do this can be found in the "Task Execution Engine" section of this document. See the "Publication Tables" for a list of tables to validate that records exist and that the flags are properly set.

For the actual lift and shift there is a standard set of tables that are excluded, such as those related to data filtering and security (roles, privileges, and duties), materialized views, temporary tables, spreadsheet template configuration, and Sales Audit rules and totals. If necessary, you can coordinate with the Oracle Cloud Operations team to add other tables to this list as well, such as if you are performing a phased conversion by functional area.

Prior to executing the lift and shift, the production schema will be backed up and RIB, Golden Gate, and Merchandising solutions will be brought down. After the import is completed, the Oracle Cloud Operations team will execute a series of standard clean-up activities in your production environment. These include

-

Creating an export file for re-syncing your target DAS environment via Golden Gate

-

Clearing any integration queues and re-enabling triggers and constraints

-

Resetting the sequences in production

-

Validating the correct business date is set

-

Bringing back up the RIB, Merchandising solutions, and Golden Gate

You will then be able to start your validation of the production environment.

Monitoring and Troubleshooting

As you are loading and validating data through this tool, the progress can be monitored using the reports visible in the user interface. Additionally, the outcome of the validation processes is available in the UI, including the status of the process, the start and end times, and the records processed successfully and in error. For further troubleshooting read-only access has also been provided to the Data Conversion schema through the Merchandising APEX Data Viewer link.

Contextual Reports

Additionally, to better analyze the errors, two contextual reports are available in each of the screens described above.

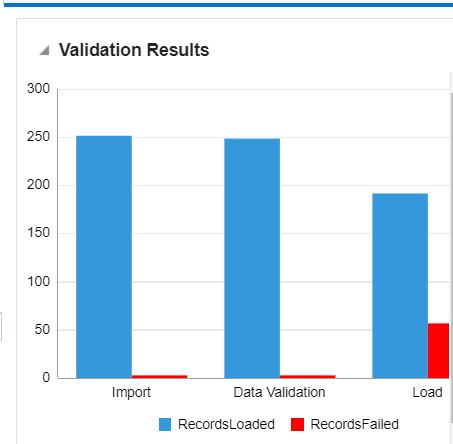

Figure 6-1 Validation Results

This summarizes validation results for the current process ID across all three steps in the loading process.

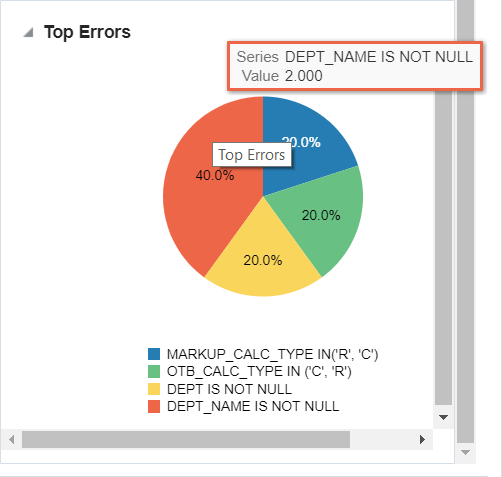

Figure 6-2 Top Errors

This shows the top errors encountered for the process ID being viewed. For example, if you are in the Import from File screen, it will show you the top errors from the file import; whereas in the Data Validation screen, it will show you the top errors from the data validation against the process ID. This may help highlight commonly occurring errors that require corrections on the legacy side or in your transformation programs.

User Interface Monitoring

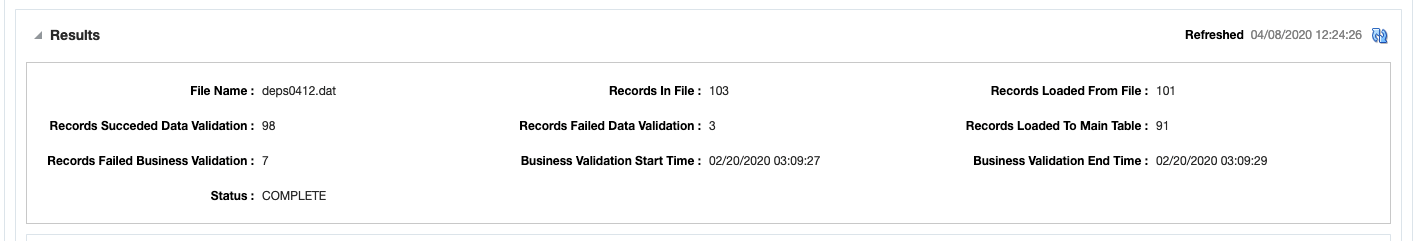

At each stage along the import and validation process, the Data Conversion tool screens provide statistics for the processing of the upload, including start and end times, number of errors, and number of records successfully processed. Also, for Business Validation and Mass Upload, if you have configured a certain data entity to run using threads or chunks, you will be able to monitor the completion by chunk during business validation by clicking the Refresh icon button (![]() ) in the results section.

) in the results section.

Database Monitoring

For larger processes that may take longer to complete due to the volume of data being processes, read-only access has been provided to the data conversion schema to support:

-

Monitoring completion by chunk

-

Debugging validation errors

To monitor database sessions query V_DC_SESSION_INFO view through the APEX Data Viewer by prefixing the data conversion schema.

The key tables that are available in the Data Conversion schema for troubleshooting and debugging are described in the table belowFoot 1.

| Table Name | Description |

|---|---|

|

DC_MASTER_PROCESS_TRACKER |

Used in data conversion and contains the processes associated to a mass upload process. It shows the relationship between each of the individual processes spawned in the mass upload. |

|

DC_PROCESS_TRACKER |

Contains information on the conversion process against a process ID. The information in this table is similar to what you see in the various screens, including start and end times for each phase of the process, and the count of records processed successfully and in error by phase. |

|

DC_IMPORT_STATUS |

Used during the Import from File process to calculate the file size and monitor progress. |

|

DC_FILE_ERRORS |

Used to hold the errors encountered during Import from File. In addition to the error messages, it also indicates the Merchandising table and column names for which the error applies. This is the same information displayed in the table in the Import from File screen. |

|

DC_FILE_ERROR_DATA |

Used to hold the rejected data during Import from File. Note: the data may be split across multiple columns for a single row in this table due to size. |

|

DC_VALIDATION_ERROR |

Holds the errors encountered in the Data Validation stage. In addition to the error messages, it also indicates the Merchandising table and column names for which the error applies. This is the same information displayed in the table in the Data Validation screen. |

|

SVC_ADMIN_UPLD_ER |

Contains errors encountered while uploading data to Merchandising tables from Data Conversion tool during the Business Validation stage. In addition to the error messages, it also indicates the Merchandising table and column names for which the error applies. This is the same information displayed in the errors table in the Business Validation screen. |

|

DC_MOCKS |

Used to hold data on a mock cleanup cycle, including the start and end date, and status (STARTING, STARTED and COMPLETED). |

|

DC_MOCK_EXCEPTION |

If any errors are encountered when performing the clean-up cycle, they will be shown in this table. |

Other tables in the data model should be considered more metadata for the solution and, although visible in the database, are likely not useful for troubleshooting. Some details on these tables below.

| Table Name | Description |

|---|---|

|

DC_ENTITY |

This table is used in data conversion for holding information on the entities supported in the Data Conversion tool |

|

DC_ENTITY_DETAIL |

This table provides the details of the staging tables into which data from the input files would be loaded |

|

DC_ENTITY_GROUP |

This table is used in data conversion for holding the entity groupings |

|

DC_ENTITY_GROUP_LIST |

This table is used in data conversion for holding the entity group to entity mappings |

|

DC_MERCH_SEED_TABLES |

This table holds information on the tables seeded during installation. |

|

DC_SEQ_MAPPING |

Contains the sequences used by the base Merchandising tables. These sequences would be automatically ramped up to keep in line with the data loaded in the Data Conversion tool. |

|

DC_SEQUENCE_BKP |

Used to back up the sequence values at the end of each mock cycle. |

|

DC_SYSTEM_OPTIONS |

Holds the system level parameters for the Data Conversion tool. Configuration changes to this by end users is not supported. |

|

RTK_ERRORS |

Contains one row for each error message used by the Data Conversion tool in your primary language. This table is populated during installation of the tool and cannot be modified. |

|

RTK_ERRORS_TL |

Contains translations of the error messages used by the Data Conversion tool into other languages applicable for your users. This table is populated during installation of the tool and cannot be modified. |

|

CORESVC_ITEM_ERR |

This is a temporary table used when loading item data during business validation. The errors are then moved to the SVC_ADMIN_UPLD_ER table. |

|

CORESVC_COSTCHG_CHUNKS |

This is a temporary table that contains information about chunks that are used to process cost change data. |

|

CORESVC_COSTCHG_ERR |

This is a temporary table used when loading cost change data during business validation. The errors are then moved to the SVC_ADMIN_UPLD_ER table. |

|

CORESVC_PO_CHUNKS |

This is a temporary table that holds the chunking information for the PO tables. |

|

CORESVC_PO_ERR |

This is a temporary table used when loading purchase order data during business validation. The errors are then moved to the SVC_ADMIN_UPLD_ER table. |

For more information on the columns for each of these tables as well as the staging tables used during the import and validation processes, please see the data model which can be viewed on My Oracle Support under ID 2619103.1.

Footnote Legend

Footnote 1:To query the tables in the Merchandising schema, you'll need to use the RDC01 qualifier on the table, as synonyms were not created for these objects (for example, RDC01_DC.DC_MOCKS).