6 Using the Flume Handler

Learn how to use the Flume Handler to stream change capture data to a Flume source.

6.1 Overview

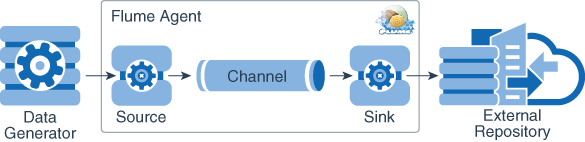

Apache Flume is a data ingestion mechanism for collecting, aggregating, and transporting large amounts of streaming data from various sources to a centralized data store.

The Flume Handler is designed to stream change capture data from an Oracle GoldenGate trail to a Flume source. Apache Flume is an open source application for which the primary purpose is streaming data into Big Data applications.

Data Generator

A data generator is any data feed that creates data to be collected by the Flume Agent.

Flume Agent

The Flume agent is a JVM daemon process that receives events from data generator clients or other agents and forwards them to a destination sink or agent. The Flume agent consists of:

- Source: The source component receives data from the data generators and transfers it to one or more channels in the form of Flume events.

- Channel: A channel is a transient store that receives Flume events from the source and buffers them until they are consumed by sinks.

- Sink: A sink consumes Flume events from the channels and delivers them to the destination. The destination of the sink may be another agent or an external repository.
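
The source, channel, and sink roles described above can be pictured with a minimal in-memory sketch. This is not Flume code: the `Channel` class and the `source_receive` and `sink_drain` functions are hypothetical names chosen for illustration, and the event is modeled simply as a dictionary with headers and a body.

```python
from collections import deque


class Channel:
    """Transient buffer between a source and a sink (in-memory stand-in)."""

    def __init__(self):
        self._events = deque()

    def put(self, event):
        self._events.append(event)

    def take(self):
        # Return the oldest buffered event, or None when the buffer is empty.
        return self._events.popleft() if self._events else None


def source_receive(channel, payload, headers=None):
    """Wrap incoming data as an event (headers + body) and pass it to the channel."""
    channel.put({"headers": dict(headers or {}), "body": payload})


def sink_drain(channel, destination):
    """Consume buffered events and deliver them to the destination list."""
    delivered = 0
    while True:
        event = channel.take()
        if event is None:
            break
        destination.append(event)
        delivered += 1
    return delivered
```

In this sketch the channel decouples the two sides: the source can keep adding events while the sink drains them at its own pace.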

6.2 Setting Up and Running the Flume Handler

Instructions for configuring the Flume Handler components and running the handler are described in this section.

To run the Flume Handler, a Flume Agent configured with an Avro or Thrift Flume source must be up and running. Oracle GoldenGate can be collocated with Flume or located on a different machine. If located on a different machine, the host and port of the Flume source must be reachable with a network connection. For instructions on how to configure and start a Flume Agent process, see the Flume User Guide https://flume.apache.org/releases/content/1.6.0/FlumeUserGuide.pdf.

6.2.1 Classpath Configuration

For the Flume Handler to connect to the Flume source and run, the Flume Agent configuration file and the Flume client JARs must be configured in the gg.classpath configuration variable. The Flume Handler uses the contents of the Flume Agent configuration file to resolve the host, port, and source type for the connection to the Flume source. The Flume client libraries do not ship with Oracle GoldenGate for Big Data. The Flume client library versions must match the version of Flume to which the Flume Handler is connecting. For a list of the required Flume client JAR files by version, see Flume Handler Client Dependencies.

The Oracle GoldenGate property gg.classpath must be set to include the following default locations:

- The default location of the core-site.xml file is Flume_Home/conf.

- The default location of the Flume client JARs is Flume_Home/lib/*.

The gg.classpath must be configured exactly as shown here. The path to the directory containing the Flume Agent configuration file must not have a wildcard appended; including a wildcard in that path makes the file inaccessible. Conversely, the path to the dependency JARs must include the * wildcard character so that all of the JAR files in that directory are included in the classpath. Do not use *.jar. The following is an example of a correctly configured gg.classpath variable:

gg.classpath=dirprm/:/var/lib/flume/lib/*
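
The wildcard rules above can be checked mechanically before starting Replicat. The following is a minimal sketch only: `check_gg_classpath` is a hypothetical helper, not part of Oracle GoldenGate, and it assumes a colon-separated classpath as in the example.

```python
def check_gg_classpath(value):
    """Return a list of warnings for a gg.classpath value (illustrative rules only)."""
    problems = []
    # Split on ':' and ignore empty entries such as a trailing separator.
    entries = [entry for entry in value.split(":") if entry]
    for entry in entries:
        if entry.endswith("*.jar"):
            problems.append(f"{entry}: use '*' rather than '*.jar'")
    if not any(entry.endswith("/*") for entry in entries):
        problems.append("no entry ends with '/*' to pick up the Flume client JARs")
    if not any("*" not in entry for entry in entries):
        problems.append("no wildcard-free entry for the configuration file directory")
    return problems
```

For the example value `dirprm/:/var/lib/flume/lib/*` the function returns an empty list, while appending a wildcard to `dirprm/` or writing `lib/*.jar` produces a warning.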

If the Flume Handler and Flume are not collocated, then the Flume Agent configuration file and the Flume client libraries must be copied to the machine hosting the Flume Handler process.

6.2.2 Flume Handler Configuration

The following are the configurable values for the Flume Handler. These properties are located in the Java Adapter properties file (not in the Replicat properties file).

To enable the selection of the Flume Handler, you must first configure the handler type by specifying gg.handler.name.type=flume and the other Flume properties as follows:

| Property Name | Property Value | Required / Optional | Default | Description |
|---|---|---|---|---|
| gg.handlerlist | | Yes | | List of handlers. Only one is allowed with grouping properties |
| gg.handler.name.type | flume | Yes | | Type of handler to use. |
| gg.handler.name.format | Formatter class or short code | No | delimitedtext | The formatter to be used. You can also write a custom formatter and include the fully qualified class name here. |
| gg.handler.name.RpcClientPropertiesFile | Any choice of filename | No | | |
| gg.handler.name.mode | op or tx | No | | Operation mode (op) or Transaction Mode (tx). Java Adapter grouping options can be used only in tx mode. |
| | A custom implementation fully qualified class name | No | | Class to be used to define what header properties are to be added to a flume event. |
| gg.handler.name.EventMapsTo | op or tx | No | | Defines whether each flume event represents an operation or a transaction. |
| gg.handler.name.PropagateSchema | true or false | No | | Defines if the Flume handler publishes schema events. |
| gg.handler.name.includeTokens | true or false | No | | When set to true, token data from the source trail files is included in the output. When set to false, the token data is excluded from the output. |

6.2.3 Review a Sample Configuration

gg.handlerlist=flumehandler

gg.handler.flumehandler.type=flume

gg.handler.flumehandler.RpcClientPropertiesFile=custom-flume-rpc.properties

gg.handler.flumehandler.format=avro_op

gg.handler.flumehandler.mode=tx

gg.handler.flumehandler.EventMapsTo=tx

gg.handler.flumehandler.PropagateSchema=true

gg.handler.flumehandler.includeTokens=false

6.3 Data Mapping of Operations to Flume Events

This section explains how operation data from the Oracle GoldenGate trail file is mapped by the Flume Handler into Flume Events based on different configurations. A Flume Event is a unit of data that flows through a Flume agent. The event flows from source to channel to sink and is represented by an implementation of the event interface. An event carries a payload (byte array) that is accompanied by an optional set of headers (string attributes).

Topics:

- Operation Mode

- Transaction Mode and EventMapsTo Operation

- Transaction Mode and EventMapsTo Transaction

6.3.1 Operation Mode

The configuration for the Flume Handler in the Oracle GoldenGate Java configuration file is as follows:

gg.handler.{name}.mode=op

The data for each operation from an Oracle GoldenGate trail file maps into a single Flume Event. Each event is immediately flushed to Flume. Each Flume Event has the following headers:

- TABLE_NAME: The table name for the operation

- SCHEMA_NAME: The catalog name (if available) and the schema name of the operation

- SCHEMA_HASH: The hash code of the Avro schema (only applicable for Avro Row and Avro Operation formatters)

6.3.2 Transaction Mode and EventMapsTo Operation

The configuration for the Flume Handler in the Oracle GoldenGate Java configuration file is as follows:

gg.handler.flume_handler_name.mode=tx

gg.handler.flume_handler_name.EventMapsTo=op

The data for each operation from Oracle GoldenGate trail file maps into a single Flume Event. Events are flushed to Flume when the transaction is committed. Each Flume Event has the following headers:

- TABLE_NAME: The table name for the operation

- SCHEMA_NAME: The catalog name (if available) and the schema name of the operation

- SCHEMA_HASH: The hash code of the Avro schema (only applicable for Avro Row and Avro Operation formatters)

We recommend that you use this mode when formatting data as Avro or delimited text. It is important to understand that configuring Replicat batching functionality increases the number of operations that are processed in a transaction.

6.3.3 Transaction Mode and EventMapsTo Transaction

The configuration for the Flume Handler in the Oracle GoldenGate Java configuration file is as follows.

gg.handler.flume_handler_name.mode=tx

gg.handler.flume_handler_name.EventMapsTo=tx

The data for all operations for a transaction from the source trail file are concatenated and mapped into a single Flume Event. The event is flushed when the transaction is committed. Each Flume Event has the following headers:

- GG_TRANID: The transaction ID of the transaction

- OP_COUNT: The number of operations contained in this Flume payload event

We recommend that you use this mode only when using self-describing formats such as JSON or XML. It is important to understand that configuring Replicat batching functionality increases the number of operations that are processed in a transaction.
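
The three mappings described in this section can be compared side by side with a small sketch. It is an illustration under stated assumptions, not the handler's implementation: `map_to_flume_events` is a hypothetical function, each operation is modeled as a dictionary with `table`, `schema`, and `payload` keys, flush timing is not modeled, and the SCHEMA_HASH header is omitted.

```python
def map_to_flume_events(tran_id, operations, mode="op", event_maps_to="op"):
    """Map one transaction's operations to a list of (headers, body) events.

    operations: list of dicts with 'table', 'schema' and 'payload' (bytes).
    """
    if mode == "tx" and event_maps_to == "tx":
        # All operations of the transaction are concatenated into one event.
        body = b"".join(op["payload"] for op in operations)
        headers = {"GG_TRANID": tran_id, "OP_COUNT": str(len(operations))}
        return [(headers, body)]
    # mode=op, or mode=tx with EventMapsTo=op: one event per operation.
    return [
        ({"TABLE_NAME": op["table"], "SCHEMA_NAME": op["schema"]}, op["payload"])
        for op in operations
    ]
```

With two operations in a transaction, the first two configurations yield two events carrying table headers, while `mode=tx` with `EventMapsTo=tx` yields a single event whose OP_COUNT header is 2.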

6.4 Performance Considerations

Consider the following options for enhanced performance:

- Set Replicat-based grouping

- Set the transaction mode with gg.handler.flume_handler_name.EventMapsTo=tx

- Increase the maximum heap size of the JVM in the Oracle GoldenGate Java properties file (the maximum heap size of the Flume Handler may affect performance)

6.5 Metadata Change Events

The Flume Handler is adaptive to metadata change events. To handle metadata change events, the source trail files must contain metadata. However, this functionality depends on the source replicated database and the upstream Oracle GoldenGate Capture process to capture and replicate DDL events. This feature is not available for all database implementations in Oracle GoldenGate. To determine whether DDL replication is supported, see the Oracle GoldenGate installation and configuration guide for the appropriate database.

Whenever a metadata change occurs at the source, the Flume Handler notifies the associated formatter of the metadata change event. Any cached schema that the formatter is holding for that table will be deleted. The next time that the associated formatter encounters an operation for that table the schema is regenerated.
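
The invalidate-then-regenerate behavior described above follows a common caching pattern, sketched below. `SchemaCache` and its `generate` callable are hypothetical names used for illustration; the formatter's actual classes are not shown in this document.

```python
class SchemaCache:
    """Caches one generated schema per table; a metadata change evicts the entry."""

    def __init__(self, generate):
        self._generate = generate  # hypothetical callable: table name -> schema
        self._schemas = {}
        self.generated = 0  # counts how many times a schema was (re)built

    def schema_for(self, table):
        # Generate lazily, the first time an operation for the table is seen.
        if table not in self._schemas:
            self._schemas[table] = self._generate(table)
            self.generated += 1
        return self._schemas[table]

    def on_metadata_change(self, table):
        # Drop the cached schema so the next operation triggers regeneration.
        self._schemas.pop(table, None)
```

Repeated operations on the same table reuse the cached schema, and only the first operation after a metadata change pays the regeneration cost.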

6.6 Example Flume Source Configuration

6.6.1 Avro Flume Source

The following is a sample configuration for an Avro Flume source from the Flume Agent configuration file:

client.type = default

hosts = h1

hosts.h1 = host_ip:host_port

batch-size = 100

connect-timeout = 20000

request-timeout = 20000

6.6.2 Thrift Flume Source

The following is a sample configuration for a Thrift Flume source from the Flume Agent configuration file:

client.type = thrift

hosts = h1

hosts.h1 = host_ip:host_port

6.7 Advanced Features

You may choose to implement the following advanced features of the Flume Handler:

Topics:

- Schema Propagation

- Security

- Fail Over Functionality

- Load Balancing Functionality

- Configuring for Apply Apache Flume File Roll Sink

6.7.1 Schema Propagation

The Flume Handler can propagate schemas to Flume. This feature is currently only supported for the Avro Row and Operation formatters. To enable this feature, set the following property:

gg.handler.name.propagateSchema=true

The Avro Row or Operation Formatters generate Avro schemas on a just-in-time basis. Avro schemas are generated the first time an operation for a table is encountered. A metadata change event results in the schema reference for a table being cleared, and a new schema is generated the next time an operation is encountered for that table.

When schema propagation is enabled, the Flume Handler propagates schemas in an Avro Event when they are encountered.

Default Flume Schema Event headers for Avro include the following information:

- SCHEMA_EVENT: true

- GENERIC_WRAPPER: true or false

- TABLE_NAME: The table name as seen in the trail

- SCHEMA_NAME: The catalog name (if available) and the schema name

- SCHEMA_HASH: The hash code of the Avro schema

6.7.2 Security

Kerberos authentication for the Oracle GoldenGate for Big Data Flume Handler connection to the Flume source is possible. This feature is supported only in Flume 1.6.0 and later using the Thrift Flume source. You can enable it by changing the configuration of the Flume source in the Flume Agent configuration file.

The following is an example of the Flume source configuration from the Flume Agent configuration file that shows how to enable Kerberos authentication. You must provide the Kerberos principal names of the client and the server. The path to a Kerberos keytab file must be provided so that the password of the client principal can be resolved at runtime. For information on how to administer Kerberos, on Kerberos principals and their associated passwords, and about the creation of a Kerberos keytab file, see the Kerberos documentation.

client.type = thrift

hosts = h1

hosts.h1 = host_ip:host_port

kerberos = true

client-principal = flumeclient/client.example.org@EXAMPLE.ORG

client-keytab = /tmp/flumeclient.keytab

server-principal = flume/server.example.org@EXAMPLE.ORG

6.7.3 Fail Over Functionality

It is possible to configure the Flume Handler so that it fails over when the primary Flume source becomes unavailable. This feature is currently supported only in Flume 1.6.0 and later using the Avro Flume source. It is enabled with Flume source configuration in the Flume Agent configuration file. The following is a sample configuration for enabling fail over functionality:

client.type = default_failover

hosts = h1 h2 h3

hosts.h1 = host_ip1:host_port1

hosts.h2 = host_ip2:host_port2

hosts.h3 = host_ip3:host_port3

max-attempts = 3

batch-size = 100

connect-timeout = 20000

request-timeout = 20000
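
The general idea behind a fail over configuration with an ordered host list and a `max-attempts` limit can be sketched as follows. This is a simplified illustration and not the algorithm used by the Flume client library: `send_with_failover` and its `send` callable are hypothetical names.

```python
def send_with_failover(hosts, send, max_attempts=3):
    """Try hosts in order until one accepts the batch or attempts run out.

    hosts: list of 'host:port' strings.
    send:  callable(host) that raises ConnectionError on failure.
    Returns the host that accepted the batch.
    """
    attempts = 0
    last_error = None
    for host in hosts:
        if attempts >= max_attempts:
            break
        attempts += 1
        try:
            send(host)
            return host
        except ConnectionError as exc:
            last_error = exc  # remember the failure and move to the next host
    raise ConnectionError(
        f"no host accepted the batch after {attempts} attempts"
    ) from last_error
```

If the first host is unavailable, the batch goes to the second host in the list; only when every attempt fails does the caller see an error.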

6.7.4 Load Balancing Functionality

You can configure the Flume Handler so that produced Flume events are load-balanced across multiple Flume sources. This feature is currently supported only in Flume 1.6.0 and later using the Avro Flume source. You can enable it by changing the Flume source configuration in the Flume Agent configuration file. The following is a sample configuration for enabling load balancing functionality:

client.type = default_loadbalance

hosts = h1 h2 h3

hosts.h1 = host_ip1:host_port1

hosts.h2 = host_ip2:host_port2

hosts.h3 = host_ip3:host_port3

backoff = false

maxBackoff = 0

host-selector = round_robin

batch-size = 100

connect-timeout = 20000

request-timeout = 20000
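
The `round_robin` host selector named in the sample distributes work across the configured hosts in rotation. The sketch below shows only that rotation; `round_robin_assign` is a hypothetical helper, and backoff handling and batching are not modeled.

```python
from itertools import cycle


def round_robin_assign(hosts, events):
    """Pair each event with the next host in a repeating round-robin order."""
    selector = cycle(hosts)
    return [(next(selector), event) for event in events]
```

With three hosts and five events, the fourth event wraps around to the first host again.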

6.7.5 Configuring for Apply Apache Flume File Roll Sink

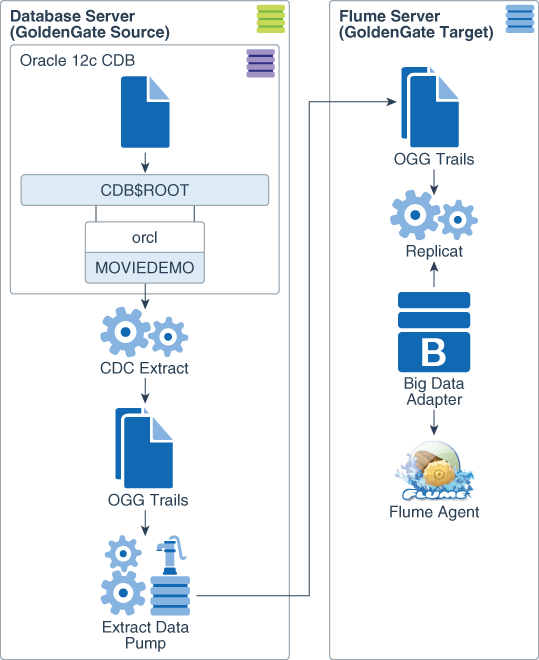

For an integration deployment that includes Apache Flume, you begin by setting up Oracle GoldenGate for Extract and Replicat.

- Install Oracle GoldenGate for the source Oracle Database.

- Alter the database settings to enable Extract.

- Create a GoldenGate change Extract to retrieve near real-time transactional data from the redo files.

The Oracle GoldenGate architecture to configure in this example is:

Configuring the Apache Flume Handler

To set up the Apache Flume Handler for Replicat, you need to configure three files:

- Replicat parameter file.

- Oracle GoldenGate Big Data properties file.

- Your Apache Flume properties file.

Put all three files in the Oracle GoldenGate configuration file default directory, dirprm.

Replicat Parameter File

A sample Replicat parameter file:

REPLICAT rflume

TARGETDB LIBFILE libggjava.so SET property=dirprm/flume_frs.properties

REPORTCOUNT EVERY 20 MINUTES, RATE

GROUPTRANSOPS 100

MAP orcl.moviedemo.movie TARGET orcl.moviedemo.movie;

The TARGETDB LIBFILE libggjava.so SET property=dirprm/flume_frs.properties parameter serves as a trigger to initialize the Java module. The SET clause specifies the location and name of a Java properties file for the Java module. The Java properties file location may be specified as either an absolute path, or path relative to the Replicat executable location. In this configuration, you use a relative path for the Oracle GoldenGate instance.

The GROUPTRANSOPS 100 parameter controls the number of SQL operations that are contained in a Replicat transaction. The default setting is 1000, and is the best practice setting for a production environment. However, if the test server is very small and you don’t want to overwhelm the flume channel, you can reduce the number of operations the Replicat will apply in a transaction.
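
The effect of the group size on how many operations reach Flume in each Replicat transaction can be approximated with simple chunking. This is a deliberately simplified sketch: `group_operations` is a hypothetical helper, and it ignores source transaction boundaries, which the real parameter takes into account.

```python
def group_operations(operations, group_size=1000):
    """Split a list of operations into consecutive groups of at most group_size."""
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    return [
        operations[start:start + group_size]
        for start in range(0, len(operations), group_size)
    ]
```

For 250 operations and a group size of 100, the sketch yields groups of 100, 100, and 50 operations, so a smaller group size means smaller bursts into the Flume channel.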

Properties File

A sample properties file:

## RFLUME properties for Apache Flume Sink apply

##

gg.handlerlist=flumehandler

gg.handler.flumehandler.type=flume

gg.handler.flumehandler.RpcClientPropertiesFile=custom-flume-rpc.properties

gg.handler.flumehandler.format=delimitedtext

gg.handler.flumehandler.format.fieldDelimiter=|

gg.handler.flumehandler.mode=tx

gg.handler.flumehandler.EventMapsTo=tx

gg.handler.flumehandler.PropagateSchema=true

gg.handler.flumehandler.includeTokens=false

##

goldengate.userexit.timestamp=utc

goldengate.userexit.writers=javawriter

javawriter.stats.display=TRUE

javawriter.stats.full=TRUE

##

gg.log=log4j

gg.log.level=INFO

##

gg.report.time=30sec

##

gg.classpath=dirprm/:/home/oracle/apache-flume-1.8.0/lib/*:

##

javawriter.bootoptions=-Xmx512m -Xms32m -Djava.class.path=ggjava/ggjava.jar

| Property | Description |
|---|---|
| gg.handlerlist=flumehandler | Defines the name of the handler configuration properties in this file. |
| gg.handler.flumehandler.type=flume | Defines the type of this handler. |
| gg.handler.flumehandler.RpcClientPropertiesFile=custom-flume-rpc.properties | Specifies the name of the Flume Agent configuration file. This file defines the Flume connection information for Replicat to perform remote procedure calls. |
| gg.handler.flumehandler.format=delimitedtext | Sets the format of the output. |
| gg.handler.flumehandler.format.fieldDelimiter=\| | The default delimiter is an unprintable character. This property changes the delimiter to a vertical bar. |
| gg.handler.flumehandler.mode=tx | Sets the operating mode of the Java Adapter. In tx mode, output is written in transactional groups defined by the Replicat GROUPTRANSOPS property. |
| gg.handler.flumehandler.EventMapsTo=tx | Defines whether each Flume event represents an operation or a transaction, based upon the setting of gg.handler.flumehandler.mode. |
| gg.handler.flumehandler.PropagateSchema=true | Defines if the Flume handler publishes schema events. |
| gg.handler.flumehandler.includeTokens=false | When set to true, token data from the source trail files is included in the output. When set to false, the token data from the source trail files is excluded from the output. |
| gg.classpath=dirprm/:/home/oracle/apache-flume-1.8.0/lib/*: | Specifies user-defined Java classes and packages used by the Java Virtual Machine to connect to Flume and run. The classpath setting must include the /home/oracle/apache-flume-1.8.0/lib directory, with a wildcard used to load all of the JARs. Note: The Flume client library versions must match the version of Flume to which the Flume Handler is connecting. |
| javawriter.bootoptions=-Xmx512m -Xms32m -Djava.class.path=ggjava/ggjava.jar | Sets the JVM runtime memory allocation and the location of the Oracle GoldenGate Java Adapter dependencies file, ggjava.jar. |

Flume Agent Configuration File

A sample Flume Agent configuration file:

client.type=default

hosts=h1

hosts.h1=localhost:41414

batch-size=100

connect-timeout=20000

request-timeout=20000

This file contains the settings Oracle GoldenGate uses to connect to the Flume Agent. The Oracle GoldenGate Big Data Adapter attempts an Avro connection to a Flume Agent running on the local machine and listening for connections on port 41414. Data is sent to Flume in batches of 100 events. Connections and requests to the Flume Agent time out and fail if there is no response within 20000 ms.
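
To double-check which host and port a file like the sample above resolves to, the properties can be parsed in a few lines. This is a minimal sketch: `parse_rpc_client_properties` is a hypothetical helper that handles only simple `key=value` lines and the `hosts` / `hosts.<alias>` convention shown in the sample.

```python
def parse_rpc_client_properties(text):
    """Parse key=value lines and resolve each host alias to (host, port)."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    endpoints = {}
    for alias in props.get("hosts", "").split():
        # Each alias listed in 'hosts' has a matching 'hosts.<alias>' entry.
        host, _, port = props[f"hosts.{alias}"].rpartition(":")
        endpoints[alias] = (host, int(port))
    return props, endpoints
```

Applied to the sample file, the `h1` alias resolves to `localhost` and port 41414, and the timeouts are available as the string values of `connect-timeout` and `request-timeout`.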

Flume Agent Configuration

The Oracle GoldenGate Big Data Adapter is configured to connect to a Flume Agent on the local machine, listening on port 41414. To configure the Flume Agent, create the file /home/oracle/flume/flume_frs.conf with these properties:

## Flume Agent Settings

##

oggfrs.channels = memoryChannel

oggfrs.sources = rpcSource

oggfrs.sinks = fr1

#

oggfrs.channels.memoryChannel.type = memory

#

oggfrs.sources.rpcSource.channels = memoryChannel

oggfrs.sources.rpcSource.type = avro

oggfrs.sources.rpcSource.bind = 0.0.0.0

oggfrs.sources.rpcSource.port = 41414

#

oggfrs.sinks.fr1.type = file_roll

oggfrs.sinks.fr1.channel = memoryChannel

oggfrs.sinks.fr1.sink.directory = /u01/flumeOut/moviedemo/movie

#

# Create a new file every 120 seconds, default is 30

oggfrs.sinks.fr1.sink.rollInterval = 120

In this configuration file, a single Flume Agent is created, named oggfrs. The oggfrs Agent consists of an Avro RPC source that listens on local host, port 41414, a channel that buffers event data in memory, and a sink that writes events to files in a directory of the local file system. The sink is set up to close the existing file and create a new one every 120 seconds. The sink directory is set to include the Oracle source schema and table name, because the file roll sink does not provide a mechanism for naming the output files.

The file roll sink names the files based upon the current timestamp when the file is created. The Flume source code sets the file name as follows:

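import java.io.File;
import java.util.concurrent.atomic.AtomicInteger;

public class PathManager
{
    // Timestamp (ms since epoch) taken once, when the PathManager is created.
    private long seriesTimestamp;
    // Output directory; not assigned in this excerpt.
    private File baseDirectory;
    // Counter appended to the timestamp; incremented for each new file.
    private AtomicInteger fileIndex;
    private File currentFile;

    public PathManager()
    {
        seriesTimestamp = System.currentTimeMillis();
        fileIndex = new AtomicInteger();
    }

    // Builds the next file name as <seriesTimestamp>-<index>.
    public File nextFile()
    {
        currentFile = new File(baseDirectory, seriesTimestamp + "-"
            + fileIndex.incrementAndGet());
        return currentFile;
    }

    // Returns the current file, creating the first one on demand.
    public File getCurrentFile()
    {
        if (currentFile == null)
        {
            return nextFile();
        }
        return currentFile;
    }
}

For more information on setting up Apache Flume Agents, see the Apache Flume Documentation.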
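
Because the file roll sink names each file as a millisecond timestamp followed by a running index, a file name can be decoded back into the time the series started and the position of the file in that series. The helpers below are a hypothetical sketch of that naming scheme, not part of Flume.

```python
from datetime import datetime, timedelta, timezone


def roll_file_name(series_timestamp_ms, index):
    """Build a name in the '<seriesTimestamp>-<index>' form used by the sink."""
    return f"{series_timestamp_ms}-{index}"


def parse_roll_file_name(name):
    """Split '<seriesTimestamp>-<index>' into a UTC datetime and an integer index."""
    millis_text, _, index_text = name.rpartition("-")
    millis = int(millis_text)
    # Integer arithmetic avoids floating-point rounding of the milliseconds.
    started = datetime.fromtimestamp(millis // 1000, tz=timezone.utc)
    started += timedelta(milliseconds=millis % 1000)
    return started, int(index_text)
```

Note that the timestamp part identifies when the sink's series began, not when an individual file was rolled; only the index changes from file to file.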

Starting the Flume Agent

- In the /home/oracle/flume directory, create a shell script called flume_frs.sh to start the agent, with the following command:

flume-ng agent --conf conf --conf-file flume_frs.conf --name oggfrs -Dflume.root.logger=INFO,console

- Make the script executable using chmod +x flume_frs.sh.

- Create the output directory /u01/flumeOut/moviedemo/movie.

- Execute the script to start the agent. Upon a successful agent start up, messages similar to the following appear:

16/10/17 10:22:28 INFO node.AbstractConfigurationProvider: Creating channels

16/10/17 10:22:28 INFO channel.DefaultChannelFactory: Creating instance of channel memoryChannel type memory

16/10/17 10:22:28 INFO node.AbstractConfigurationProvider: Created channel memoryChannel

16/10/17 10:22:28 INFO source.DefaultSourceFactory: Creating instance of source rpcSource, type avro

16/10/17 10:22:28 INFO sink.DefaultSinkFactory: Creating instance of sink: fr1, type: file_roll

16/10/17 10:22:28 INFO node.AbstractConfigurationProvider: Channel memoryChannel connected to [rpcSource, fr1]

16/10/17 10:22:28 INFO node.Application: Starting new configuration:{ sourceRunners:{rpcSource=EventDrivenSourceRunner: { source:Avro source rpcSource: { bindAddress: 0.0.0.0, port: 41414 } }} sinkRunners:{fr1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@7fde4dbb counterGroup:{ name:null counters:{} }} channels:{memoryChannel=org.apache.flume.channel.MemoryChannel{name: memoryChannel}}

16/10/17 10:22:28 INFO node.Application: Starting Channel memoryChannel

16/10/17 10:22:28 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: memoryChannel: Successfully registered new MBean.

16/10/17 10:22:28 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: memoryChannel started

16/10/17 10:22:28 INFO node.Application: Starting Sink fr1

16/10/17 10:22:28 INFO sink.RollingFileSink: Starting org.apache.flume.sink.RollingFileSink{name:fr1, channel:memoryChannel}...

16/10/17 10:22:28 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SINK, name: fr1: Successfully registered new MBean.

16/10/17 10:22:28 INFO instrumentation.MonitoredCounterGroup: Component type: SINK, name: fr1 started

16/10/17 10:22:28 INFO node.Application: Starting Source rpcSource

16/10/17 10:22:28 INFO sink.RollingFileSink: RollingFileSink fr1 started.

16/10/17 10:22:28 INFO source.AvroSource: Starting Avro source rpcSource: { bindAddress: 0.0.0.0, port: 41414 }...

16/10/17 10:22:28 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SOURCE, name: rpcSource: Successfully registered new MBean.

16/10/17 10:22:28 INFO instrumentation.MonitoredCounterGroup: Component type: SOURCE, name: rpcSource started

16/10/17 10:22:28 INFO source.AvroSource: Avro source rpcSource started.

The output directory shows three files produced by the file roll sink:

$ pwd

/u01/ogg-bd/flumeOut/moviedemo/movie

$ ls -l

total 0

-rw-r--r--. 1 oracle oinstall 0 Oct 17 10:22 1476714148291-1

-rw-r--r--. 1 oracle oinstall 0 Oct 17 10:24 1476714148291-2

-rw-r--r--. 1 oracle oinstall 0 Oct 17 10:26 1476714148291-3

Note: The file roll sink creates new files at the interval specified in the Flume Agent configuration file, even when there is no Oracle GoldenGate activity.

Starting Oracle GoldenGate and Testing

- To start and test your configuration, select the target Oracle GoldenGate instance, and then make sure the Oracle GoldenGate Manager is in the RUNNING state.

GGSCI (bigdatalite.localdomain) 2> status manager

Manager is running (IP port bigdatalite.localdomain.7810, Process ID 10507).

GGSCI (bigdatalite.localdomain) 3>

- Change directory to select the source Oracle GoldenGate instance, start GGSCI, and then start the Extract and Extract Data Pump (if they are not already running).

- In the Oracle GoldenGate target, start the rflume Replicat. Executing the command status rflume should show it in the RUNNING state.

GGSCI (bigdatalite.localdomain) 5> start rflume

Sending START request to MANAGER ...

REPLICAT RFLUME starting

GGSCI (bigdatalite.localdomain) 6> status rflume

REPLICAT RFLUME: RUNNING

The Flume Agent shows the Replicat connect to the Flume Source:

16/10/17 12:06:17 INFO ipc.NettyServer: [id: 0x8008c345, /127.0.0.1:25167 => /127.0.0.1:41414] OPEN

16/10/17 12:06:17 INFO ipc.NettyServer: [id: 0x8008c345, /127.0.0.1:25167 => /127.0.0.1:41414] BOUND: /127.0.0.1:41414

16/10/17 12:06:17 INFO ipc.NettyServer: [id: 0x8008c345, /127.0.0.1:25167 => /127.0.0.1:41414] CONNECTED: /127.0.0.1:25167

- To verify that data stored into the Oracle database flows through to the Flume File Roll Sink, generate some data using either sqlplus or SQL Developer:

INSERT INTO "MOVIEDEMO"."MOVIE" (MOVIE_ID, TITLE, YEAR, BUDGET, GROSS, PLOT_SUMMARY) VALUES ('1173971','Jack Reacher: Never Go Back', '2016','96000000','0','Jack Reacher must uncover the truth behind a major government conspiracy in order to clear his name. On the run as a fugitive from the law, Reacher uncovers a potential secret from his past that could change his life forever.');

INSERT INTO "MOVIEDEMO"."MOVIE" (MOVIE_ID, TITLE, YEAR, BUDGET, GROSS, PLOT_SUMMARY) VALUES ('1173972','Boo! A Madea Holloween', '2016','0','0','Madea winds up in the middle of mayhem when she spends a haunted Halloween fending off killers, paranormal poltergeists, ghosts, ghouls and zombies while keeping a watchful eye on a group of misbehaving teens.');

INSERT INTO "MOVIEDEMO"."MOVIE" (MOVIE_ID, TITLE, YEAR, BUDGET, GROSS, PLOT_SUMMARY) VALUES ('1173973','Like Stars on Earth', '2007','0','1204660','An eight-year-old boy is thought to be lazy and a trouble-maker, until the new art teacher has the patience and compassion to discover the real problem behind his struggles in school.');

commit;

- Execute the GGSCI view report rflume command on the Oracle GoldenGate target. The output shows that data was captured and sent to the Oracle GoldenGate Replicat:

2016-10-17 12:36:33 INFO OGG-06505 MAP resolved (entry orcl.moviedemo.movie): MAP "ORCL"."MOVIEDEMO"."MOVIE" TARGET orcl.moviedemo.movie.

2016-10-17 12:36:33 INFO OGG-02756 The definition for table ORCL.MOVIEDEMO.MOVIE is obtained from the trail file.

2016-10-17 12:36:33 INFO OGG-06511 Using following columns in default map by name: MOVIE_ID, TITLE, YEAR, BUDGET, GROSS, PLOT_SUMMARY.

2016-10-17 12:36:33 INFO OGG-06510 Using the following key columns for target table orcl.moviedemo.movie: MOVIE_ID.

GGSCI (bigdatalite.localdomain) 11>

A file containing data can be found in the Flume file roll sink output directory:

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:15 1476720205641-7

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:17 1476720205641-8

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:19 1476720205641-9

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:21 1476720205641-10

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:23 1476720205641-11

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:25 1476720205641-12

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:27 1476720205641-13

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:29 1476720205641-14

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:31 1476720205641-15

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:33 1476720205641-16

-rw-r--r--. 1 oracle oinstall 1723 Oct 17 12:36 1476720205641-17

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:37 1476720205641-18

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:39 1476720205641-19

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:41 1476720205641-20

-rw-r--r--. 1 oracle oinstall 0 Oct 17 12:43 1476720205641-21

[oracle@bigdatalite movie]$

The non-empty file in the file list is a delimited text file, so you can print the content to your display using the Unix and Linux command, cat:

cat 1476720205641-17

I|ORCL.MOVIEDEMO.MOVIE|2016-10-17 16:27:14.000950|2016-10-18T10:49:14.338000|00000000040000002985|1173981|Jack Reacher: Never Go Back|2016|96000000|0|Jack Reacher must uncover the truth behind a major government conspiracy in order to clear his name. On the run as a fugitive from the law, Reacher uncovers a potential secret from his past that could change his life forever.

I|ORCL.MOVIEDEMO.MOVIE|2016-10-17 16:27:14.000950|2016-10-18T10:49:14.339000|00000000040000003417|1173982|Boo! A Madea Holloween|2016|0|0|Madea winds up in the middle of mayhem when she spends a haunted Halloween fending off killers, paranormal poltergeists, ghosts, ghouls and zombies while keeping a watchful eye on a group of misbehaving teens.

I|ORCL.MOVIEDEMO.MOVIE|2016-10-17 16:27:14.000950|2016-10-18T10:49:14.339001|00000000040000003789|1173983|Like Stars on Earth|2007|0|1204660|An eight-year-old boy is thought to be lazy and a trouble-maker, until the new art teacher has the patience and compassion to discover the real problem behind his struggles in school.

[oracle@bigdatalite movie]$

Data queued in Apache Flume is now ready for further processing.
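
A downstream consumer could split records like the ones shown above on the vertical bar. The sketch below is an assumption-laden illustration: `parse_delimited_record` is a hypothetical helper, the meaning of the five leading fields (operation type, table, two timestamps, position) is inferred from the sample output rather than from a format specification, and a plain split does not handle delimiter characters embedded in column values.

```python
def parse_delimited_record(line, delimiter="|"):
    """Split one delimited text record into leading metadata and column values."""
    parts = line.rstrip("\n").split(delimiter)
    if len(parts) < 5:
        raise ValueError("record has fewer fields than expected")
    # Field meanings below are inferred from the sample output only.
    op_type, table, op_ts, current_ts, position = parts[:5]
    return {
        "op_type": op_type,
        "table": table,
        "op_ts": op_ts,
        "current_ts": current_ts,
        "position": position,
        "columns": parts[5:],
    }
```

For the third sample record, the `columns` list starts with the MOVIE_ID value and contains the six column values of the MOVIE table.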

6.8 Troubleshooting the Flume Handler

Topics:

- Java Classpath

- Flume Flow Control Issues

- Flume Agent Configuration File Not Found

- Flume Connection Exception

- Other Failures

6.8.1 Java Classpath

Issues with the Java classpath are common. A ClassNotFoundException in the Oracle GoldenGate Java log4j log file indicates a classpath problem. You can use the Java log4j log file to troubleshoot this issue. Setting the log level to DEBUG logs each of the JARs referenced in gg.classpath to the log file. This way, you can make sure that all of the required dependency JARs are resolved. For more information, see Classpath Configuration.

6.8.2 Flume Flow Control Issues

In some situations, the Flume Handler may write to the Flume source faster than the Flume sink can dispatch messages. When this happens, the Flume Handler works for a while, but when Flume can no longer accept messages, the Flume Handler abends. The cause that is logged in the Oracle GoldenGate Java log file is likely to be an EventDeliveryException, indicating that the Flume Handler was unable to send an event. Check the Flume log for the exact cause of the problem. You may be able to reconfigure the Flume channel to increase capacity, or increase the Java heap size if the Flume Agent is experiencing an OutOfMemoryError. This may not solve the problem. If the Flume Handler can push data to the Flume source faster than messages are dispatched by the Flume sink, then any change may only extend the period the Flume Handler can run before failing.
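
A back-of-the-envelope calculation shows why a larger channel only delays the failure when the sink is slower than the handler. The function below is a hypothetical sketch that assumes steady event rates; the rates and capacities in the usage note are made-up numbers for illustration.

```python
def seconds_until_channel_full(capacity, in_rate, out_rate, queued=0):
    """Time until a bounded channel overflows, given steady event rates per second.

    Returns None when the sink keeps up (out_rate >= in_rate).
    """
    if in_rate <= out_rate:
        return None
    # The backlog grows by (in_rate - out_rate) events every second.
    return (capacity - queued) / (in_rate - out_rate)
```

With a hypothetical inflow of 500 events per second and an outflow of 400, a channel holding 10000 events fills in 100 seconds; doubling the capacity to 20000 only doubles that to 200 seconds.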

6.8.3 Flume Agent Configuration File Not Found

If the Flume Agent configuration file is not in the classpath, the Flume Handler abends at startup. The result is usually a ConfigException that reports the issue as an error loading the Flume producer properties. Check the gg.handler.name.RpcClientPropertiesFile configuration property to make sure that the name of the Flume Agent properties file is correct. Check the Oracle GoldenGate gg.classpath property to make sure that the classpath contains the directory containing the Flume Agent properties file. Also, check the classpath to ensure that the path to the Flume Agent properties file does not end with a wildcard (*) character.
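
The checks described above can be approximated outside of Oracle GoldenGate by searching the classpath directories for the properties file. This is a sketch under assumptions: `find_on_classpath` is a hypothetical helper, it uses the platform path separator, and it mirrors the rule that wildcard entries do not make a properties file visible.

```python
import os


def find_on_classpath(file_name, classpath):
    """Return the path of file_name in the first wildcard-free classpath
    directory that contains it, or None if it is not found."""
    for entry in classpath.split(os.pathsep):
        if not entry or "*" in entry:
            continue  # wildcard entries only pick up JAR files, so skip them
        candidate = os.path.join(entry, file_name)
        if os.path.isfile(candidate):
            return candidate
    return None
```

A result of `None` for the file named in the RpcClientPropertiesFile property suggests that either the file name or the classpath entry needs to be corrected.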

6.8.4 Flume Connection Exception

The Flume Handler terminates abnormally at start up if it cannot connect to the Flume source. The root cause of this problem is likely to be reported as an IOException in the Oracle GoldenGate Java log4j file, indicating a problem connecting to Flume at a given host and port. Make sure that the following are both true:

- The Flume Agent process is running.

- The Flume Agent configuration file that the Flume Handler is accessing contains the correct host and port.
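
A quick way to confirm both conditions from the machine that hosts the Flume Handler is to attempt a plain TCP connection to the configured host and port. The helper below is a hypothetical sketch; a successful connection shows only that something is listening there, not that it is an Avro or Thrift Flume source.

```python
import socket


def flume_source_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For the sample configuration in this chapter, the call would be `flume_source_reachable("localhost", 41414)`.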

6.8.5 Other Failures

Review the contents of the Oracle GoldenGate Java log4j file to identify any other issues.
