CHAPTER 2

Sun Blade T6300 Server Module Diagnostics

This chapter describes the diagnostics that are available for monitoring and troubleshooting the Sun Blade T6300 server module.

This chapter is intended for technicians, service personnel, and system administrators who service and repair computer systems.

The following topics are covered:

There are a variety of diagnostic tools, commands, and indicators you can use to monitor and troubleshoot a Sun Blade T6300 server module:

- An application that exercises the system, provides hardware validation, and discloses possible faulty components with recommendations for repair.


The LEDs, ALOM, Solaris OS PSH, and many of the log files and console messages are integrated. For example, when the Solaris software detects a fault, it displays the fault, logs it, and passes the information to ALOM CMT, where the fault is also logged. Depending on the fault, one or more LEDs might illuminate.

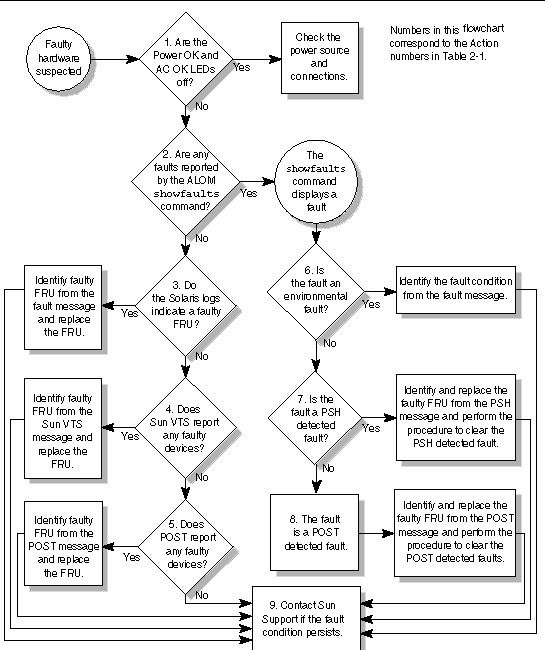

The diagnostic flowchart in FIGURE 2-1 and TABLE 2-1 describe an approach for using the server module diagnostics to identify a faulty field-replaceable unit (FRU). The diagnostics you use, and the order in which you use them, depend on the nature of the problem you are troubleshooting, so you might perform some actions and not others.

Use this flowchart to understand what diagnostics are available to troubleshoot faulty hardware, and use TABLE 2-1 to find more information about each diagnostic in this chapter.

The OK LED is located on the front of the chassis. If the LED is not lit, check that the blade is properly plugged in and that the chassis has power.

The showfaults command displays the following kinds of faults:

Faulty FRUs are identified in fault messages using the FRU name. For a list of FRU names, see TABLE 1-3.

The Solaris message buffer and log files record system events and provide information about faults.

See Section 2.6, Collecting Information From Solaris OS Files and Commands.

SunVTS can exercise and diagnose FRUs. To run SunVTS, the server module must be running the Solaris OS.

POST performs basic tests of the server module components and reports faulty FRUs.

If the showfaults command reports a temperature or voltage fault, the fault is an environmental fault. Environmental faults can be caused by faulty FRUs (power supply, fan, or blower) or by environmental conditions such as high ambient temperature or blocked airflow.

See Section 2.3.2, Displaying System Faults, and the Sun Blade 6000 Modular System Service Manual, 820-0051.

If the fault message displays the following text, the fault was detected by the Solaris Predictive Self-Healing software:

Host detected fault

If the fault is a PSH detected fault, identify the faulty FRU from the fault message and replace it. After the FRU is replaced, perform the procedure to clear PSH detected faults.

See Section 2.5, Using the Solaris Predictive Self-Healing Feature; Section 4.2, Common Procedures for Parts Replacement; Section 2.5.2, Clearing PSH Detected Faults; and Section 2.5.3, Clearing the PSH Fault From the ALOM CMT Logs.

POST performs basic tests of the server module components and reports faulty FRUs. When POST detects a faulty FRU, it logs the fault and, if possible, takes the FRU offline. POST detected FRUs display the following text in the fault message:

FRU-name deemed faulty and disabled

In this case, replace the FRU and run the procedure to clear POST detected faults.

The majority of hardware faults are detected by the server module diagnostics. In rare cases, a problem might require additional troubleshooting. If you are unable to determine the cause of the problem, contact Sun for support.

Sun Support information:

This section describes how the memory is configured and how the server module deals with memory faults.

The Sun Blade T6300 server module has eight slots that hold DDR-2 memory DIMMs in the following DIMM sizes:

The Sun Blade T6300 server module performs best if all eight connectors are populated with eight DIMMs. This configuration also enables the system to continue operating even when a DIMM fails, or if an entire channel fails.

Due to interleaving rules for the CPU, the system will operate at the lowest capacity of all the DIMMs installed. Therefore, it is ideal to install eight identical DIMMs (not four DIMMs of one capacity and four DIMMs of another capacity).

Caution - The following DIMM rules must be followed. The server module might not operate correctly if the DIMM rules are not followed. Always use DIMMs that have been qualified by Sun.

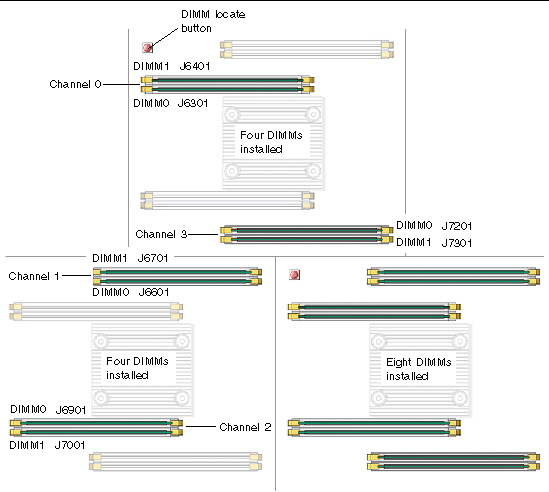

DIMMs are installed in groups of four, with four DIMMs of the same capacity (FIGURE 2-2).

If the DIMMs are not properly configured, the system issues a message and the system does not boot.
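The grouping rule above can be sketched as a small check. This is only an illustration, not the firmware's actual validation logic: the function names are hypothetical, the capacities (1 and 2, in gigabytes) are example values, and the usable-capacity calculation assumes that "operates at the lowest capacity" means every DIMM is treated as if it had the smallest installed size.

```python
from typing import List


def check_dimm_groups(groups: List[List[int]]) -> List[str]:
    """Return rule violations for a proposed layout; an empty list means it passes.

    Each inner list holds the capacities (in GB) of one group of DIMMs.
    """
    problems = []
    if len(groups) not in (1, 2):
        problems.append("expected one or two groups of four DIMMs")
    for index, group in enumerate(groups):
        if len(group) != 4:
            problems.append(f"group {index}: {len(group)} DIMMs installed, expected 4")
        elif len(set(group)) != 1:
            problems.append(f"group {index}: mixed capacities {sorted(set(group))}")
    return problems


def usable_capacity_gb(groups: List[List[int]]) -> int:
    """Assumption: every DIMM counts as the smallest installed capacity."""
    sizes = [size for group in groups for size in group]
    return min(sizes) * len(sizes) if sizes else 0
```

Under these assumptions, two groups of four identical DIMMs pass the check, while a layout that mixes capacities across the two groups passes the grouping rule but loses the extra capacity of the larger DIMMs.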

See Section 5.2, Installing DIMMs, for DIMM installation instructions.

The Sun Blade T6300 server module uses advanced ECC technology, also called chipkill, that corrects up to 4 bits in error on nibble boundaries, as long as they are all in the same DRAM. If a DRAM fails, the DIMM continues to function.

The following server module features manage memory faults independently:

If a memory fault is detected, POST displays the fault with the FRU name of the faulty DIMMs, logs the fault, and disables the faulty DIMMs by placing them in the ASR blacklist. For a given memory fault, POST disables half of the physical memory in the system. When this occurs, you must replace the faulty DIMMs based on the fault message and enable the disabled DIMMs with the ALOM CMT enablecomponent command.

If you suspect that the server module has a memory problem, follow the flowchart (see FIGURE 2-1). Run the ALOM CMT showfaults command. The showfaults command lists memory faults and the specific DIMMs that are associated with each fault. Once you have identified which DIMMs to replace, see Chapter 4 for DIMM removal and replacement instructions. You must perform the instructions in that chapter to clear the faults and enable the replaced DIMMs.

The Sun Blade T6300 server module has LEDs on the front panel and the hard drives. The behavior of the LEDs on your server module conforms to the American National Standards Institute (ANSI) Status Indicator Standard (SIS). These standard LED behaviors are described in TABLE 2-2.

The front panel LEDs and buttons are located in the center of the server module (FIGURE 2-3, TABLE 2-2, TABLE 2-3, and TABLE 2-4).

The LEDs have assigned meanings, described in TABLE 2-3.

For information about Ethernet LEDs see the Sun Blade 6000 Modular System Service Manual, 820-0051, at:

http://www.sun.com/documentation/

The Sun Advanced Lights Out Manager (ALOM) CMT is a service processor in the Sun Blade T6300 server module that enables you to remotely manage and administer your server module.

ALOM CMT enables you to run remote diagnostics such as power-on self-test (POST), that would otherwise require physical proximity to the server module serial port. You can also configure ALOM CMT to send email alerts of hardware failures, hardware warnings, and other events related to the server module or to ALOM.

The ALOM CMT circuitry runs independently of the server module, using the server module standby power. Therefore, ALOM CMT firmware and software continue to function when the server module operating system goes offline or when the server module is powered off.

Note - Refer to the Advanced Lights Out Management (ALOM) CMT v1.3 Guide, 819-7981, for comprehensive ALOM CMT information.

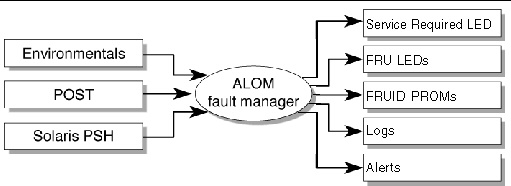

Faults detected by ALOM CMT, POST, and the Solaris Predictive Self-healing (PSH) technology are forwarded to ALOM CMT for fault handling (FIGURE 2-4).

In the event of a system fault, ALOM CMT ensures that the Service Action Required LED is lit, FRU ID PROMs are updated, the fault is logged, and alerts are displayed. Faulty FRUs are identified in fault messages using the FRU name. For a list of FRU names, see Appendix A.

ALOM CMT sends alerts to all ALOM CMT users who are logged in, sends the alert through email to a configured email address, and writes the event to the ALOM CMT event log.

ALOM CMT can detect when a fault is no longer present and clears the fault in several ways:

ALOM CMT can detect the removal of a FRU, in many cases even if the FRU is removed while ALOM CMT is powered off. This enables ALOM CMT to know that a fault, diagnosed to a specific FRU, has been repaired. The ALOM CMT clearfault command enables you to manually clear certain types of faults without a FRU replacement or if ALOM CMT was unable to automatically detect the FRU replacement.

ALOM CMT does not automatically detect hard drive replacement.

Many environmental faults can automatically recover. For example, a temperature that is exceeding a threshold might return to normal limits. An unplugged power supply can be plugged in. The recovery of environmental faults is automatically detected. Recovery events are reported using one of two forms:

Environmental faults can be repaired through hot removal of the faulty FRU. FRU removal is automatically detected by the environmental monitoring and all faults associated with the removed FRU are cleared. The message for that case, and the alert sent for all FRU removals is:

fru at location has been removed.

There is no ALOM CMT command to manually repair an environmental fault.

ALOM CMT does not handle hard drive faults. Use the Solaris message files to view hard drive faults. See Section 2.6, Collecting Information From Solaris OS Files and Commands.

This section describes the ALOM CMT commands that are commonly used for service-related activities.

Before you can run ALOM CMT commands, you must connect to the ALOM. There are several ways to connect to the service processor:

Note - Refer to the Advanced Lights Out Management (ALOM) CMT v1.3 Guide, 819-7981, for instructions on configuring and connecting to ALOM.

TABLE 2-5 describes the typical ALOM CMT commands for servicing a Sun Blade T6300 server module. For descriptions of all ALOM CMT commands, issue the help command or refer to the Advanced Lights Out Management (ALOM) CMT v1.3 Guide, 819-7981.

Displays a list of all ALOM CMT commands with syntax and descriptions. Specifying a command name as an option displays help for that command.

Takes the host server from the OS to either kmdb or OpenBoot PROM (equivalent to a Stop-A command), depending on the Solaris mode that was booted. The -y option skips the confirmation question. The -c option executes a console command after completion of the break command.

Manually clears host-detected faults. The UUID is the unique fault ID of the fault to be cleared.

Connects you to the host system. The -f option forces the console to have read and write capabilities.

Displays the contents of the system's console buffer. The following options enable you to specify how the output is displayed:

Enables control of the firmware during system initialization with the following options:

Performs a poweroff followed by poweron. The -f option forces an immediate poweroff; otherwise, the command attempts a graceful shutdown.

Powers off the host server. The -y option enables you to skip the confirmation question. The -f option forces an immediate shutdown.

Powers on the host server. The -c option executes a console command after completion of the poweron command.

Indicates if it is OK to perform a hot-swap of a power supply. This command does not perform any action, but provides a warning if the power supply should not be removed because the other power supply is not enabled.

Pauses the service processor tasks and illuminates the white locator LED, indicating that it is safe to remove the blade.

Turns off the locator LED and restores the service processor state.

Generates a hardware reset on the host server. The -y option enables you to skip the confirmation question. The -c option executes a console command after completion of the reset command.

Reboots the service processor. The -y option enables you to skip the confirmation question.

Sets the virtual keyswitch. The -y option enables you to skip the confirmation question when setting the keyswitch to stby.

Displays the environmental status of the host server. This information includes system temperatures, power supply, front panel LED, hard drive, fan, voltage, and current sensor status. See Section 2.3.3, Displaying the Environmental Status.

Displays current system faults. See Section 2.3.2, Displaying System Faults.

Displays information about the FRUs in the server.

Displays the current state of the Locator LED as either on or off.

showlogs [-b lines | -e lines | -v] [-g lines] [-p logtype[r|p]]

Displays the history of all events logged in the ALOM CMT event buffers (in RAM or the persistent buffers).

Displays information about the host system's hardware configuration, the system serial number, and whether the hardware is providing service.

Note - See TABLE 2-8 for the ALOM CMT ASR commands.

The ALOM CMT showfaults command displays the following kinds of faults:

Use the showfaults command for the following reasons:

At the sc> prompt, type the showfaults command.


The following showfaults command examples show the different kinds of output from the showfaults command:

The showenvironment command displays a snapshot of the server module environmental status. This command displays system temperatures, hard drive status, power supply and fan status, front panel LED status, and voltage and current sensor readings. The output uses a format similar to that of the Solaris OS command prtdiag(1M).

At the sc> prompt, type the showenvironment command.


The output differs according to your system's model and configuration.

Note - Some environmental information might not be available when the server module is in standby mode.

The showfru command displays information about the FRUs in the server module. Use this command to see information about an individual FRU, or for all the FRUs.

Note - By default, the output of the showfru command for all FRUs is very long.

At the sc> prompt, enter the showfru command.


In the following example, the showfru command is used to get information about the motherboard (MB).

Power-on self-test (POST) is a group of PROM-based tests that run when the server module is powered on or reset. POST checks the basic integrity of the critical hardware components in the server module (CPU, memory, and I/O buses).

If POST detects a faulty component, the component is disabled automatically, preventing faulty hardware from potentially harming any software. If the system is capable of running without the disabled component, the system boots when POST is complete. For example, if POST deems one of the processor cores faulty, the core is disabled, and the system boots and runs using the remaining cores.

Note - Devices can be manually enabled or disabled using ASR commands (see Section 2.7, Managing Components With Automatic System Recovery Commands).

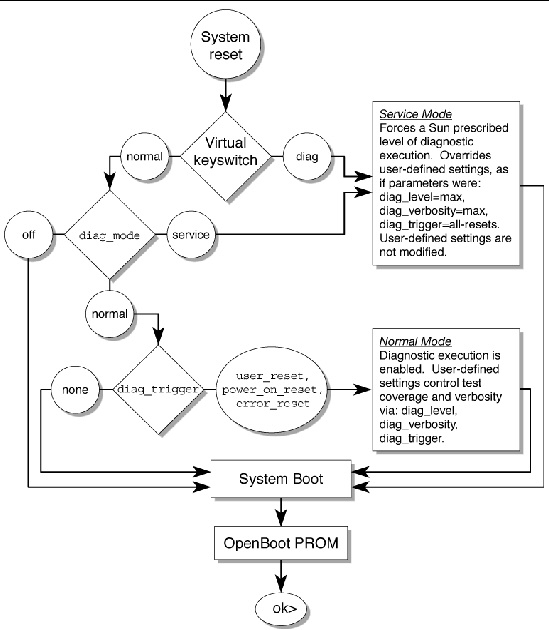

The server module can be configured for normal, extensive, or no POST execution. You can also control the level of tests that run, the amount of POST output that is displayed, and which reset events trigger POST by using ALOM CMT variables.

TABLE 2-6 lists the ALOM CMT variables used to configure POST and FIGURE 2-5 shows how the variables work together.

setkeyswitch

The system can power on and run POST (based on the other parameter settings). For details see FIGURE 2-5. This parameter overrides all other commands.

The system can power on and run POST, but no flash updates can be made.

Runs POST with preset values for diag_level and diag_verbosity.

If diag_mode = normal, runs all the minimum tests plus extensive CPU and memory tests.

POST output displays functional tests with a banner and pinwheel.

POST displays all test, informational, and some debugging messages.

TABLE 2-7 shows typical combinations of ALOM CMT variables and associated POST modes.

setkeyswitch

This is the default POST configuration. This configuration tests the system thoroughly, and suppresses some of the detailed POST output.

POST does not run, resulting in quick system initialization, but this is not a suggested configuration.

POST runs the full spectrum of tests with the maximum output displayed.

POST runs the full spectrum of tests with the maximum output displayed.

1. Access the ALOM CMT sc> prompt:

At the console, issue the #. key sequence:

2. At the ALOM CMT sc> prompt, use the setsc command to set the POST parameter:

The setkeyswitch parameter is a command that sets the virtual keyswitch, so it does not use the setsc command. Example:

You can use POST to test and verify server module hardware.

POST tests critical hardware components to verify functionality before the system boots and accesses software. If POST detects an error, the faulty component is disabled automatically, preventing faulty hardware from potentially harming software.

Under normal operating conditions, the server module is usually configured to run POST in maximum mode for all power-on or error-generated resets.

You can use POST as an initial diagnostic tool for the system hardware. In this case, configure POST to run in diagnostic service mode for maximum test coverage and verbose output.

This procedure describes how to run POST when you want maximum testing, as in the case when you are troubleshooting a system.

1. Switch from the system console prompt to the ALOM CMT sc> prompt by issuing the #. escape sequence.

2. Set the virtual keyswitch to diag so that POST will run in service mode.

3. Reset the system so that POST runs.

There are several ways to initiate a reset. The following example uses the powercycle command. For other methods, refer to the Sun Blade T6300 Server Module Administration Guide, 820-0277.

4. Switch to the system console to view the POST output:

Example of POST output with some output omitted:

5. Perform further investigation if needed.

When POST is finished running, and if no faults were detected, the system will boot.

If POST detects a faulty device, the fault is displayed and the fault information is passed to ALOM CMT for fault handling. Faulty FRUs are identified in fault messages using the FRU name. For a list of FRU names, see Appendix A.

a. Interpret the POST messages:

POST error messages use the following syntax:

c:s > ERROR: TEST = failing-test

c:s > H/W under test = FRU

c:s > Repair Instructions: Replace items in order listed by H/W under test above

c:s > MSG = test-error-message

c:s > END_ERROR

In this syntax, c = the core number, s = the strand number.

Warning and informational messages use the following syntax:

The following example shows a POST error message.

In this example, POST is reporting a memory error at DIMM location MB/CMP0/CH2/R0/D0. This error was detected by POST running on core 7, strand 2.

b. Run the showfaults command to obtain additional fault information.

The fault is captured by ALOM, where the fault is logged, the Service Action Required LED is lit, and the faulty component is disabled.

    ok #.
    sc> showfaults -v
       ID Time            FRU               Fault
        1 APR 24 12:47:27 MB/CMP0/CH2/R0/D0 MB/CMP0/CH2/R0/D0 deemed faulty and disabled

In this example, MB/CMP0/CH2/R0/D0 is disabled. The system can boot using memory that was not disabled until the faulty component is replaced.

Note - You can use ASR commands to display and control disabled components. See Section 2.7, Managing Components With Automatic System Recovery Commands.
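The POST error message syntax described in this procedure is regular enough to parse mechanically, which can help when scanning a long console capture. The following is a minimal sketch, not a Sun-provided tool: the function names are hypothetical, and the test name and message text in the sample are invented for illustration. Only the line layout and the FRU name come from the text above.

```python
import re
from typing import Dict, List, Optional

# Every POST line starts with "core:strand >", per the syntax shown above.
LINE = re.compile(r"^(?P<core>\d+):(?P<strand>\d+)\s*>\s*(?P<body>.*)$")


def _value(body: str) -> str:
    """Return the text after the first '=' in a line body, or '' if absent."""
    return body.split("=", 1)[1].strip() if "=" in body else ""


def parse_post_errors(text: str) -> List[Dict[str, object]]:
    """Collect one record per ERROR ... END_ERROR block."""
    errors: List[Dict[str, object]] = []
    current: Optional[Dict[str, object]] = None
    for raw in text.splitlines():
        match = LINE.match(raw.strip())
        if not match:
            continue
        body = match.group("body").strip()
        if body.startswith("ERROR:"):
            current = {
                "core": int(match.group("core")),
                "strand": int(match.group("strand")),
                "test": _value(body),
            }
        elif current is None:
            continue  # ignore lines outside an error block
        elif body.startswith("H/W under test"):
            current["fru"] = _value(body)
        elif body.startswith("MSG"):
            current["msg"] = _value(body)
        elif body.startswith("END_ERROR"):
            errors.append(current)
            current = None
    return errors


# Illustrative input only: the test name and MSG text are made up.
SAMPLE = """\
7:2 > ERROR: TEST = Example Memory Test
7:2 > H/W under test = MB/CMP0/CH2/R0/D0
7:2 > Repair Instructions: Replace items in order listed by H/W under test above
7:2 > MSG = example failure text
7:2 > END_ERROR
"""
```

Calling `parse_post_errors(SAMPLE)` yields a single record whose core is 7, strand is 2, and FRU is MB/CMP0/CH2/R0/D0, which is the information you would carry forward to the showfaults step.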

In most cases, when POST detects a faulty component, POST logs the fault and automatically takes the failed component out of operation by placing the component in the ASR blacklist (see Section 2.7, Managing Components With Automatic System Recovery Commands).

After the faulty FRU is replaced, you must clear the fault by removing the component from the ASR blacklist.

1. At the ALOM CMT prompt, use the showfaults command to identify POST detected faults.

POST detected faults are distinguished from other kinds of faults by the text:

deemed faulty and disabled, and no UUID number is reported.

    sc> showfaults -v
       ID Time            FRU               Fault
        1 APR 24 12:47:27 MB/CMP0/CH2/R0/D0 MB/CMP0/CH2/R0/D0 deemed faulty and disabled

If no fault is reported, you do not need to do anything else. Do not perform the subsequent steps.

2. Use the enablecomponent command to clear the fault and remove the component from the ASR blacklist.

Use the FRU name that was reported in the fault in the previous step.

The fault is cleared and should not show up when you run the showfaults command. Additionally, the Service Action Required LED is no longer on.

3. Reboot the server module. You must reboot the server module for the enablecomponent command to take effect.

4. At the ALOM CMT prompt, use the showfaults command to verify that no faults are reported.

    sc> showfaults
    Last POST run: THU MAR 09 16:52:44 2006
    POST status: Passed all devices
    No failures found in System
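When several components are blacklisted, it can be convenient to derive the enablecomponent commands from captured showfaults output rather than retyping FRU names. The sketch below assumes you have saved the output as text. The function names are hypothetical, and the code relies only on the "deemed faulty and disabled" marker described in this procedure.

```python
import re
from typing import List

# POST detected faults carry this fixed text, preceded by the FRU name.
POST_FAULT = re.compile(r"(?P<fru>\S+) deemed faulty and disabled")


def post_faulted_frus(showfaults_output: str) -> List[str]:
    """Return the FRU names of POST detected faults, in order, without repeats."""
    frus: List[str] = []
    for line in showfaults_output.splitlines():
        match = POST_FAULT.search(line)
        if match and match.group("fru") not in frus:
            frus.append(match.group("fru"))
    return frus


def enable_commands(frus: List[str]) -> List[str]:
    """Build the commands to type at the sc> prompt after the FRUs are replaced."""
    return [f"enablecomponent {fru}" for fru in frus]


CAPTURED = """\
sc> showfaults -v
   ID Time            FRU               Fault
    1 APR 24 12:47:27 MB/CMP0/CH2/R0/D0 MB/CMP0/CH2/R0/D0 deemed faulty and disabled
"""
```

For the captured output shown, `enable_commands(post_faulted_frus(CAPTURED))` produces one command for MB/CMP0/CH2/R0/D0. The commands still have to be entered at the sc> prompt, and the server module rebooted, as the procedure describes.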

The Solaris Predictive Self-Healing (PSH) technology enables the Sun Blade T6300 server module to diagnose problems while the Solaris OS is running, and mitigate many problems before they negatively affect operations.

The Solaris OS uses the fault manager daemon, fmd(1M), which starts at boot time and runs in the background to monitor the system. If a component generates an error, the daemon handles the error by correlating it with data from previous errors and other related information to diagnose the problem. Once the problem is diagnosed, the fault manager daemon assigns it a Universally Unique Identifier (UUID) that distinguishes the problem across any set of systems. When possible, the fault manager daemon initiates steps to self-heal the failed component and take the component offline. The daemon also logs the fault to the syslogd daemon and provides a fault notification with a message ID (MSGID). You can use the message ID to get additional information about the problem from Sun's knowledge article database.

The Predictive Self-Healing technology covers the following Sun Blade T6300 server module components:

The PSH console message provides the following information:

If the Solaris PSH facility has detected a faulty component, use the fmdump command to identify the fault. Faulty FRUs are identified in fault messages using the FRU name. For a list of FRU names, see Appendix A.

Note - Additional Predictive Self-Healing information is available at: http://www.sun.com/msg

The fmdump command displays the list of faults detected by the Solaris PSH facility. Use this command for the following reasons:

If you already have a fault message ID, go to Step 2 to obtain more information about the fault from the Sun Predictive Self-Healing Knowledge Article web site.

Note - Faults detected by the Solaris PSH facility are also reported through ALOM CMT alerts. In addition to the PSH fmdump command, the ALOM CMT showfaults command also provides information about faults and displays fault UUIDs. See Section 2.3.2, Displaying System Faults.

1. Check the event log using the fmdump command with -v for verbose output:

In this example, a fault is displayed, indicating the following details:

2. Use the Sun message ID to obtain more information about this type of fault.

a. In a browser, go to the Predictive Self-Healing Knowledge Article web site: http://www.sun.com/msg

b. Enter the message ID in the SUNW-MSG-ID field, and press Lookup.

In this example, the message ID SUN4U-8000-6H returns the following information for corrective action:

c. Follow the suggested actions to repair the fault.

When the Solaris PSH facility detects faults, the faults are logged and displayed on the console. After the fault condition is corrected, for example by replacing a faulty FRU, you must clear the fault.

Note - If you are dealing with faulty DIMMs, do not follow this procedure. Instead, perform the procedure in Section 4.3.3, Replacing a DIMM.

1. After replacing a faulty FRU, boot the system.

2. At the ALOM CMT prompt, use the showfaults command to identify PSH detected faults.

PSH detected faults are distinguished from other kinds of faults by the text:

Host detected fault.

    sc> showfaults -v
       ID Time            FRU               Fault
        0 SEP 09 11:09:26 MB/CMP0/CH0/R0/D0 Host detected fault, MSGID: SUN4U-8000-2S
          UUID: 7ee0e46b-ea64-6565-e684-e996963f7b86

If no fault is reported, you do not need to do anything else. Do not perform the subsequent step.

3. Clear the fault from all persistent fault records.

In some cases, even though the fault is cleared, some persistent fault information remains and results in erroneous fault messages at boot time. To ensure that these messages are not displayed, perform the following command:

When the Solaris PSH facility detects faults, the faults are also logged by the ALOM CMT service processor. After the fault condition is corrected, for example by replacing a faulty FRU, you must clear the fault from the ALOM CMT logs.

Note - If you are dealing with faulty DIMMs, do not follow this procedure. Instead, perform the procedure in Section 4.3.3, Replacing a DIMM.

1. After replacing a faulty FRU, at the ALOM CMT prompt, use the showfaults command to identify PSH detected faults.

PSH detected faults are distinguished from other kinds of faults by the text:

Host detected fault.

       ID FRU               Fault
        0 MB                Host detected fault, MSGID: SUNW-TEST07
          UUID: 7ee0e46b-ea64-6565-e684-e996963f7b86

If no fault is reported, you do not need to do anything else. Do not perform the subsequent steps.

2. Run the clearfault command with the UUID provided in the showfaults output:

    sc> clearfault 7ee0e46b-ea64-6565-e684-e996963f7b86
    Clearing fault from all indicted FRUs...
    Fault cleared.
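Copying a 36-character UUID by hand is error-prone, so the pairing of showfaults output with clearfault commands can be sketched in a few lines. This is an illustrative helper with hypothetical function names, assuming the showfaults output has been captured as text. It matches only the "Host detected fault" layout shown in the examples above and flattens whitespace so that a UUID wrapped onto its own line is still found.

```python
import re
from typing import List, Tuple

# Layout taken from the showfaults examples: MSGID first, then the UUID.
HOST_FAULT = re.compile(
    r"Host detected fault, MSGID: (?P<msgid>\S+)\s+UUID: (?P<uuid>[0-9a-fA-F-]{36})"
)


def psh_faults(showfaults_output: str) -> List[Tuple[str, str]]:
    """Return (message ID, UUID) pairs for PSH detected faults."""
    flattened = " ".join(showfaults_output.split())
    return [(m.group("msgid"), m.group("uuid")) for m in HOST_FAULT.finditer(flattened)]


def clearfault_commands(showfaults_output: str) -> List[str]:
    """Build one clearfault command per PSH detected fault."""
    return [f"clearfault {uuid}" for _, uuid in psh_faults(showfaults_output)]


CAPTURED = """\
sc> showfaults -v
   ID Time            FRU               Fault
    0 SEP 09 11:09:26 MB/CMP0/CH0/R0/D0 Host detected fault, MSGID: SUN4U-8000-2S
      UUID: 7ee0e46b-ea64-6565-e684-e996963f7b86
"""
```

The message ID returned alongside each UUID is the value you would look up on the knowledge article site before deciding whether the fault is ready to be cleared.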

With the Solaris OS running on the Sun Blade T6300 server module, you have all the Solaris OS files and commands available for collecting information and for troubleshooting.

If POST, ALOM, or the Solaris PSH features did not indicate the source of a fault, check the message buffer and log files for fault notifications. Hard drive faults are usually captured by the Solaris message files.

Use the dmesg command to view the most recent system messages. To view the system messages log file, view the contents of the /var/adm/messages file.

The dmesg command displays the most recent messages generated by the system.

The error logging daemon, syslogd, automatically records various system warnings, errors, and faults in message files. These messages can alert you to system problems such as a device that is about to fail.

The /var/adm directory contains several message files. The most recent messages are in the /var/adm/messages file. After a period of time (usually every ten days), a new messages file is automatically created. The original contents of the messages file are rotated to a file named messages.1. Over a period of time, the messages are further rotated to messages.2 and messages.3, and then deleted.
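Because of this rotation, reading the logs in chronological order means starting with the highest-numbered file and ending with messages itself. The sketch below illustrates that ordering. The function names are hypothetical, and the default of three rotated generations simply mirrors the messages.1 through messages.3 files described above.

```python
from pathlib import Path
from typing import List


def message_files_oldest_first(directory: str = "/var/adm", generations: int = 3) -> List[Path]:
    """Return the existing message files ordered from oldest to newest."""
    base = Path(directory)
    # messages.3 holds the oldest entries; the unnumbered file holds the newest.
    names = [f"messages.{n}" for n in range(generations, 0, -1)] + ["messages"]
    return [base / name for name in names if (base / name).is_file()]


def read_all_messages(directory: str = "/var/adm") -> List[str]:
    """Concatenate every message file into one chronological list of lines."""
    lines: List[str] = []
    for path in message_files_oldest_first(directory):
        lines.extend(path.read_text(errors="replace").splitlines())
    return lines
```

Files that have not been created yet are skipped, so the same call works on a freshly installed system that has only the messages file.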

2. Issue the following command:

3. If you want to view all logged messages, issue the following command:

The Automatic System Recovery (ASR) feature enables the server module to automatically unconfigure failed components to remove them from operation until they can be replaced. In the Sun Blade T6300 server module, the following components are managed by the ASR feature:

The database that contains the list of disabled components is called the ASR blacklist (asr-db).

In most cases, POST automatically disables a component when it is faulty. After the cause of the fault is repaired (FRU replacement, loose connector reseated, and so on), you must remove the component from the ASR blacklist.

The ASR commands (TABLE 2-8) enable you to view, and manually add or remove components from the ASR blacklist. These commands are run from the ALOM CMT sc> prompt.

showcomponent: Displays the system components (asrkeys) and reports their status.

enablecomponent asrkey: Removes a component from the asr-db blacklist, where asrkey is the component to enable.

disablecomponent asrkey: Adds a component to the asr-db blacklist, where asrkey is the component to disable.

Note - The components (asrkeys) vary from system to system, depending on how many cores and how much memory are present. Use the showcomponent command to see the asrkeys on a given system.

The showcomponent command displays the system components (asrkeys) and reports their status.

1. At the sc> prompt, enter the showcomponent command.

Example with no disabled components:

Example showing a disabled component:

The disablecomponent command disables a component by adding it to the ASR blacklist.

1. At the sc> prompt, enter the disablecomponent command.

2. After receiving confirmation that the disablecomponent command is complete, reset the server module so that the ASR command takes effect.

The enablecomponent command enables a disabled component by removing it from the ASR blacklist.

1. At the sc> prompt, enter the enablecomponent command.

2. After receiving confirmation that the enablecomponent command is complete, reset the server module so that the ASR command takes effect.
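The reason both procedures end with a reset is easier to see in a toy model: the commands edit the blacklist immediately, but the running system keeps its current configuration until the next reset. The class below is purely illustrative and is not how ALOM CMT is implemented. The class name, the attribute names, and the use of a DIMM FRU name as an asrkey are assumptions made for the example.

```python
from typing import Dict, Iterable, Set


class AsrBlacklistModel:
    """Toy model: blacklist edits are staged and applied on the next reset."""

    def __init__(self, asrkeys: Iterable[str]) -> None:
        self.asrkeys: Set[str] = set(asrkeys)
        self.blacklist: Set[str] = set()     # what the next reset will honor
        self.disabled_now: Set[str] = set()  # what the running system is using

    def disablecomponent(self, asrkey: str) -> None:
        self._check(asrkey)
        self.blacklist.add(asrkey)

    def enablecomponent(self, asrkey: str) -> None:
        self._check(asrkey)
        self.blacklist.discard(asrkey)

    def reset(self) -> None:
        """Apply the staged blacklist, as a server module reset would."""
        self.disabled_now = set(self.blacklist)

    def showcomponent(self) -> Dict[str, str]:
        """Report each asrkey with its blacklist status."""
        return {
            key: ("disabled" if key in self.blacklist else "enabled")
            for key in sorted(self.asrkeys)
        }

    def _check(self, asrkey: str) -> None:
        if asrkey not in self.asrkeys:
            raise KeyError(f"unknown asrkey: {asrkey}")
```

In this model, a component removed from the blacklist reports as enabled right away, yet it stays out of service until `reset()` runs, which mirrors the requirement to reset the server module after each ASR command.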

Sometimes a system exhibits a problem that cannot be isolated definitively to a particular hardware or software component. In such cases, it might be useful to run a diagnostic tool that stresses the system by continuously running a comprehensive battery of tests. Sun provides the SunVTS software for this purpose.

This procedure assumes that the Solaris OS is running on the Sun Blade T6300 server module, and that you have access to the Solaris command line.

1. Check for the presence of SunVTS packages using the pkginfo command.

TABLE 2-9 lists some SunVTS packages:

If SunVTS is not installed, you can obtain the installation packages from the following resources:

The SunVTS 6.3 software, and future compatible versions, are supported on the Sun Blade T6300 server module.

SunVTS installation instructions are described in the SunVTS 6.3 User's Guide, 820-0080.

Before you begin, the Solaris OS must be running. You must verify that SunVTS validation test software is installed on your system. See Section 2.8.1, Checking SunVTS Software Installation.

The SunVTS installation process requires that you specify one of two security schemes to use when running SunVTS. The security scheme you choose must be properly configured in the Solaris OS for you to run SunVTS.

SunVTS software features both character-based and graphics-based interfaces.

For more information about the character-based SunVTS TTY interface, and specifically for instructions on accessing it by TIP or telnet commands, refer to the SunVTS 6.3 User's Guide.

Copyright © 2007, Sun Microsystems, Inc. All Rights Reserved.