Shadow Migration

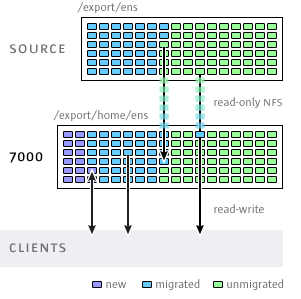

Figure 14-1 Shadow Migration

Shadow migration uses interposition, but is integrated into the ZFSSA and does not
require a separate physical machine. When shares are created, they can optionally
"shadow" an existing directory, either locally or over NFS. In this scenario,
downtime is scheduled once: the source ZFSSA X is placed into read-only mode,
a share is created with the shadow property set, and clients are updated to point to
the new share on the Sun Storage 7000 ZFSSA. Clients can then access the ZFSSA in
read-write mode.

Once the shadow property is set, data is transparently migrated in the background
from the source to the local ZFSSA. If a client requests a file that has not yet
been migrated, the ZFSSA automatically migrates that file to the local server
before responding to the request. This may add latency to some initial client
requests, but once a file has been migrated, all accesses are local to the ZFSSA
and have native performance. The current working set for a filesystem is often much
smaller than its total size, so once this working set has been migrated, there is
no perceived impact on performance, regardless of the total size of the data on the
source.
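
The on-demand behavior described above can be illustrated with a toy in-memory model. This is only a sketch under stated assumptions: `ShadowShare`, `read`, and `migrate_next` are hypothetical names, and plain dictionaries stand in for the source and the local pool. It does not reflect how the ZFSSA is actually implemented.

```python
class ShadowShare:
    """Toy model of a share that shadows a read-only source (hypothetical)."""

    def __init__(self, source):
        self.source = dict(source)  # path -> contents on the read-only source
        self.local = {}             # files already migrated to local storage
        self.remote_fetches = 0     # number of times the source was contacted

    def _migrate(self, path):
        # Copy one file from the source into local storage.
        self.local[path] = self.source[path]
        self.remote_fetches += 1

    def read(self, path):
        # A client read of an unmigrated file triggers its migration first;
        # later reads of the same file are served locally.
        if path not in self.local:
            self._migrate(path)
        return self.local[path]

    def migrate_next(self):
        # One step of background migration; returns False when nothing is left.
        for path in self.source:
            if path not in self.local:
                self._migrate(path)
                return True
        return False


share = ShadowShare({"a.txt": b"alpha", "b.txt": b"beta", "c.txt": b"gamma"})
share.read("b.txt")          # first access: fetched from the source
share.read("b.txt")          # second access: served from local storage
while share.migrate_next():  # background migration finishes the rest
    pass
```

In this model each file is fetched from the source at most once, whether a client read or the background pass reaches it first, which is why only the first access to a file pays the extra latency.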

The downside to shadow migration is that it requires a commitment before the data
has finished migrating, though this is the case with any interposition method.
During the migration, portions of the data exist in two locations, which means that
backups are more complicated, and snapshots may be incomplete and/or exist only on
one host. Because of this, it is extremely important that any migration between two
hosts first be tested thoroughly to make sure that identity management and access
controls are set up correctly. This test need not cover the entire data migration,
but it should verify that files and directories that are not world-readable are
migrated correctly, that ACLs (if any) are preserved, and that identities are
properly represented on the new system.
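
One way to spot-check a small test migration is to compare permission bits and ownership between the source tree and the migrated tree as seen from a client. The following is a minimal sketch, not a ZFSSA tool: `compare_metadata` is a hypothetical helper, both trees are assumed to be mounted on the machine running the script, and ACLs are not examined because the Python standard library has no portable interface for them.

```python
import os
import stat


def compare_metadata(src_root, dst_root):
    """Return (relative_path, problem) tuples for entries that do not match."""
    problems = []
    for dirpath, dirnames, filenames in os.walk(src_root):
        for name in dirnames + filenames:
            src = os.path.join(dirpath, name)
            rel = os.path.relpath(src, src_root)
            dst = os.path.join(dst_root, rel)
            if not os.path.lexists(dst):
                problems.append((rel, "missing on destination"))
                continue
            s, d = os.lstat(src), os.lstat(dst)
            # Compare permission bits only, ignoring the file-type bits.
            if stat.S_IMODE(s.st_mode) != stat.S_IMODE(d.st_mode):
                problems.append((rel, "mode differs"))
            # Numeric IDs must match for identities to be represented the same.
            if (s.st_uid, s.st_gid) != (d.st_uid, d.st_gid):
                problems.append((rel, "owner or group differs"))
    return problems
```

An empty result for a directory containing files that are not world-readable suggests that modes and numeric owners survived the migration; ACL preservation and name-to-ID mapping still need to be checked separately.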

Shadow migration is implemented using on-disk data within the filesystem, so there
is no external database and no data stored locally outside the storage pool. If a
pool is failed over in a cluster, or both system disks fail and a new head node is
required, all data necessary to continue shadow migration without interruption will
be kept with the storage pool.