17.1 Introducing the Multi-Data Center

Large organizations using Access Manager 11g typically deploy their applications across multiple data centers to distribute load and to provide disaster recovery. When Access Manager is deployed in multiple data centers and single sign-on (SSO) is configured between them, user session details are transferred between the data centers transparently.

The scope of a data center comprises protected applications, WebGate agents, Access Manager servers, and other infrastructure entities, including identity stores and databases.

Note:

Access Manager 11g supports scenarios where applications are distributed across two or more data centers.

The Multi-Data Center approach supported by Access Manager is a Master-Clone deployment in which the first data center is specified as the Master and one or more Clone data centers mirror it. (Master and Clone data centers can also be referred to as Supplier and Consumer data centers.) A Master Data Center is duplicated using Test-to-Production (T2P) tools to create one or more cloned Data Centers. See Administering Oracle Fusion Middleware for information on T2P.

During setup of the Multi-Data Center, session adoption policies are configured to determine where a request is sent if the Master Data Center is down. After setup, you designate how data is replicated from the Master to the Clone(s): either with the Automated Policy Sync (APS) Replication Service or manually. See Configuring Multi-Data Centers and Synchronizing Data In A Multi-Data Center for details on the setup and synchronization process.

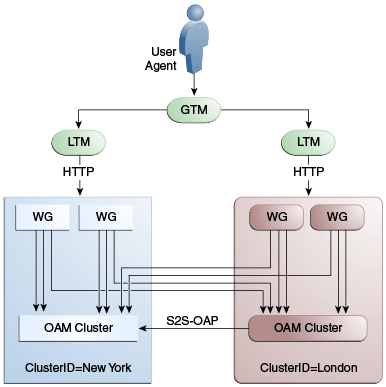

A data center may include applications, data stores, load balancers, and similar components. Each data center includes a full Access Manager installation. The WebLogic Server domain in which an Access Manager instance is installed does not span data centers. Additionally, data centers maintain user-to-data-center affinity. Figure 17-1 illustrates the Multi-Data Center system architecture.

Figure 17-1 Multi-Data Center System Architecture


Note:

Global load balancers are configured to route HTTP traffic to the geographically closest data center. No load balancers are used to manage Oracle Access Protocol traffic.

All applications are protected by WebGate agents configured against Access Manager clusters in the respective local data centers. Every WebGate has a primary server and one or more secondary servers; WebGate agents in each data center have Access Manager server nodes from the same data center in the primary list and nodes from other data centers in the secondary list. Thus, it is possible for a user request to be routed to a different data center when:

-

A local data center goes down.

-

There is a load spike causing redistribution of traffic.

-

Certain applications are deployed in only one data center.

-

WebGates are configured to load balance within one data center but to fail over across data centers.
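The primary/secondary behavior described above can be pictured with a small model. This is a hypothetical Python sketch, not WebGate's actual selection algorithm: the class, method, and server names are invented for illustration, and round-robin is only an assumed way of balancing load within the local data center.

```python
from __future__ import annotations

from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class WebGateServerList:
    """Hypothetical model of one WebGate's Access Manager server lists."""
    primary: list[str]      # OAM servers in the WebGate's own data center
    secondary: list[str]    # OAM servers in the other data centers
    _next: int = field(default=0, init=False, repr=False)

    def pick(self, is_up: Callable[[str], bool]) -> str | None:
        """Round-robin across healthy primary servers; fail over to secondaries."""
        healthy = [s for s in self.primary if is_up(s)]
        if healthy:
            server = healthy[self._next % len(healthy)]
            self._next += 1
            return server
        # Local data center unavailable: use the first healthy remote server.
        for s in self.secondary:
            if is_up(s):
                return s
        return None


wg = WebGateServerList(primary=["oam-ny-1", "oam-ny-2"], secondary=["oam-ldn-1"])
print([wg.pick(lambda s: True) for _ in range(3)])    # ['oam-ny-1', 'oam-ny-2', 'oam-ny-1']
print(wg.pick(lambda s: not s.startswith("oam-ny")))  # oam-ldn-1
```

Requests stay in New York while any New York server is healthy and cross to London only when none is, which is the "load balance locally, fail over remotely" pattern.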

The following sections describe how the Multi-Data Center solution works.

17.1.1 Understanding Cookies for Multi-Data Center

Multi-Data Center deployments use enhanced versions of the SSO cookies OAM_ID, OAMAuthn, and OAM_GITO.

The following sections describe each of these cookies.

17.1.1.1 OAM_ID Cookie

The OAM_ID cookie is the SSO cookie for Access Manager and holds the attributes required to enable the MDC behavior across all data centers.

If a subsequent request from a user in the same SSO session is routed to a different data center in the MDC topology, session adoption is triggered according to the configured session adoption policies. ‘Session adoption’ refers to a data center creating a local user session when it receives a valid authentication cookie (OAM_ID) indicating that a session for the user exists in another data center in the topology. (Session adoption may or may not involve re-authenticating the user.)

When a user session is created in a data center, the OAM_ID cookie will be augmented or updated with the following:

-

The clusterid of the data center

-

The sessionid

-

The latest_visited_clusterid

In MDC deployments, OAM_ID is a host-scoped cookie. Its domain parameter is set to a virtual host name that is the same (a singleton) across all data centers. The global load balancer maps this virtual host name to the Access Manager servers in one data center according to its traffic routing rules (for example, geographical affinity). The OAM_ID cookie is not accessible to any application other than the Access Manager servers.
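The way OAM_ID is augmented as a user moves between data centers can be sketched as follows. This is a hypothetical Python model of the decoded attributes only: the real cookie is opaque, the class and method names are invented, and keeping one clusterid:sessionid pair per visited data center is an assumption based on the cookie being "augmented or updated".

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class OamIdState:
    """Hypothetical decoded view of the MDC attributes carried in OAM_ID.

    Assumes one clusterid:sessionid pair is kept per data center visited;
    the real cookie is opaque and its encoding is not modeled here.
    """
    sessions: dict[str, str] = field(default_factory=dict)  # clusterid -> sessionid
    latest_visited_clusterid: str | None = None

    def record_session(self, clusterid: str, sessionid: str) -> None:
        """Augment or update the cookie when a data center creates a session."""
        self.sessions[clusterid] = sessionid
        self.latest_visited_clusterid = clusterid

    def remote_sessions(self, local_clusterid: str) -> dict[str, str]:
        """Sessions held in other data centers, i.e. candidates for adoption."""
        return {c: s for c, s in self.sessions.items() if c != local_clusterid}


oam_id = OamIdState()
oam_id.record_session("NYDC", "s-100")  # user authenticates in New York
oam_id.record_session("LDC", "s-200")   # session adopted in London
print(oam_id.latest_visited_clusterid)  # LDC
print(oam_id.remote_sessions("LDC"))    # {'NYDC': 's-100'}
```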

17.1.1.2 OAMAuthn / ObSSO WebGate Cookies

OAMAuthn is the WebGate cookie for 11g WebGates, and ObSSO is the WebGate cookie for 10g WebGates. After successful authentication and authorization, the user is granted access to the protected resource, and the browser holds a valid WebGate cookie containing the clusterid:sessionid of the authenticating Data Center.

If authentication and the subsequent authorization take place in different Data Centers, the Data Center authorizing the user request triggers session adoption by retrieving the session's originating clusterid from the WebGate cookie. (Because WebGate cookies are host-scoped, WebGates need to have the same host name in each data center.) After adopting the session, the current Data Center creates a new session with the synced session details.

Note:

The WebGate cookie cannot be updated during authorization, so the newly created sessionid cannot be persisted for later authorization requests. Instead, the remote sessionid and the local sessionid are linked through session indexing. During a subsequent authorization call to a Data Center, a new session is created when all of the following are true:

-

MDC is enabled.

-

A session matching the sessionid in the WebGate cookie is not present in the local Data Center.

-

There is no session with a Session Index that matches the sessionid in the WebGate cookie.

-

A valid session exists in the remote Data Center (based on the MDC SessionSync Policy).

In these instances, a new session is created in the local Data Center with a Session Index that refers to the sessionid in the WebGate cookie.
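The four conditions above can be read as a lookup order on the local session store. The following is a hypothetical Python sketch, not Access Manager's implementation: the class names, the `authorize` method, and the `remote_session_user` callback (a stand-in for the remote check made under the MDC SessionSync Policy) are all invented for illustration.

```python
from __future__ import annotations

import uuid
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Session:
    sessionid: str
    user: str
    session_index: str | None = None  # remote sessionid this session was adopted from


@dataclass
class LocalSessionStore:
    """Hypothetical per-data-center session store illustrating session indexing."""
    mdc_enabled: bool = True
    by_id: dict[str, Session] = field(default_factory=dict)
    by_index: dict[str, Session] = field(default_factory=dict)

    def authorize(self, cookie_sessionid: str,
                  remote_session_user: Callable[[str], str | None]) -> Session | None:
        """Resolve the sessionid from the WebGate cookie to a local session.

        remote_session_user returns the user of a valid remote session,
        or None if the remote data center has no valid session.
        """
        if cookie_sessionid in self.by_id:     # session lives in this data center
            return self.by_id[cookie_sessionid]
        if cookie_sessionid in self.by_index:  # already adopted: found via Session Index
            return self.by_index[cookie_sessionid]
        if not self.mdc_enabled:
            return None
        user = remote_session_user(cookie_sessionid)
        if user is None:                       # no valid remote session
            return None
        # All conditions met: create a local session indexed by the remote sessionid.
        local = Session(sessionid=uuid.uuid4().hex, user=user,
                        session_index=cookie_sessionid)
        self.by_id[local.sessionid] = local
        self.by_index[cookie_sessionid] = local
        return local


london = LocalSessionStore()
first = london.authorize("ny-123", lambda sid: "alice")
again = london.authorize("ny-123", lambda sid: "alice")
print(first is again, first.session_index)  # True ny-123
```

Because the WebGate cookie still carries the remote sessionid, the second call finds the adopted session through its Session Index instead of creating another one.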

17.1.1.3 OAM_GITO (Global Inactivity Time Out) Cookie

OAM_GITO is a domain cookie set in the authorization response.

The session details from authentication are recorded in the OAM_ID cookie. If authorization hops to a different Data Center, session adoption creates a new session in the Data Center servicing the authorization request and sets that session's index to the incoming sessionid. Because subsequent authentication requests see only the clusterid:sessionid mapping in the OAM_ID cookie, a hop to a different Data Center during authorization would go unnoticed at authentication time. The OAM_GITO cookie, which also enables timeout tracking across WebGate agents, closes this gap.

During authorization, the OAM_GITO cookie is set as a domain cookie. On subsequent authentication requests, its contents are read to determine the latest session information and the inactivity (idle) timeout values. The OAM_GITO cookie contains the following data:

-

Data Center Identifier

-

Session Identifier

-

User Identifier

-

Last Access Time

-

Token Creation Time
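The five OAM_GITO fields and their role in idle-timeout tracking can be modeled roughly as follows. This is a hypothetical Python sketch: field names, the use of epoch seconds, and the `idle_timeout` parameter are assumptions for illustration, and the cookie's real encoding is not modeled.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass(frozen=True)
class OamGito:
    """Hypothetical decoded view of the OAM_GITO fields listed above."""
    datacenter_id: str
    session_id: str
    user_id: str
    last_access_time: int     # assumed: epoch seconds
    token_creation_time: int  # assumed: epoch seconds

    def touched(self, now: int) -> OamGito:
        """Value re-issued on an authorization response with a fresh access time."""
        return replace(self, last_access_time=now)

    def is_idle_expired(self, now: int, idle_timeout: int) -> bool:
        """True if the user has been inactive for longer than idle_timeout seconds."""
        return now - self.last_access_time > idle_timeout


gito = OamGito("LDC", "s-200", "alice", last_access_time=1_000, token_creation_time=900)
print(gito.is_idle_expired(now=4_000, idle_timeout=3_600))  # False
print(gito.touched(now=4_000).last_access_time)             # 4000
```

Since every WebGate under the common domain sees the same OAM_GITO value, any of them can apply the same inactivity check, which is what makes the timeout "global".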

Note:

For the OAM_GITO cookie, all WebGates and Access Manager servers should share a common domain hierarchy. For example, if the server domain is .us.example.com then all WebGates must have (at least) .example.com as a common domain hierarchy; this enables the OAM_GITO cookie to be set with the .example.com domain.
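One way to check the common-domain requirement during planning is to compute the longest domain suffix shared by all WebGate and Access Manager host names. The helper below is a hypothetical Python sketch; it simplifies by treating any suffix of two or more labels as usable and ignores public-suffix rules (such as co.uk).

```python
from __future__ import annotations


def common_cookie_domain(hosts: list[str]) -> str | None:
    """Longest domain suffix shared by all hosts, formatted as a cookie domain.

    Returns None if the hosts share fewer than two labels (for example,
    only "com"), since that cannot serve as a cookie domain.
    Simplification: public-suffix rules are not checked.
    """
    label_lists = [h.lower().strip(".").split(".") for h in hosts]
    common: list[str] = []
    # Walk the labels right to left (com, example, us, ...) in lockstep.
    for labels in zip(*(reversed(ls) for ls in label_lists)):
        if all(label == labels[0] for label in labels):
            common.append(labels[0])
        else:
            break
    if len(common) < 2:
        return None
    return "." + ".".join(reversed(common))


print(common_cookie_domain(["oam.us.example.com", "wg1.eu.example.com"]))  # .example.com
```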

17.1.2 Session Adoption During Authorization

Multi-Data Center session adoption is supported during the authorization flow. After successful authentication, the OAMAuthn cookie will be augmented with the cluster ID details of the Data Center where authentication has taken place.

During authorization, if the request is routed to a different Data Center, the Access Manager runtime determines whether this is a Multi-Data Center scenario by looking for a valid remote session. If it finds one, the Multi-Data Center session adoption process is triggered according to the session adoption policy.

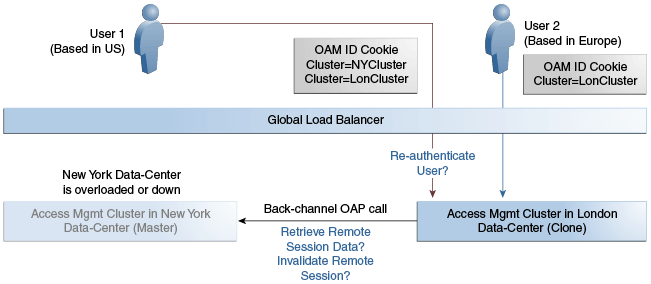

The session adoption policy can be configured so that the clone Access Manager cluster makes a back-end request for session details to the master Access Manager cluster using the Oracle Access Protocol (OAP). The session adoption policy can also be configured to invalidate the previous session so that the user has a session in only one data center at a time. After session adoption, a new session is created in the Data Center servicing the authorization request. See Multi-Data Center Deployments.

Note:

Because the OAMAuthn cookie cannot be updated during authorization, the newly created session's Session Index is set to the incoming session ID. See Session Indexing.

17.1.3 Session Indexing

During an authorization call to a data center, a new session with a Session Index can be created in the local data center. The Session Index refers to the session identifier in the OAMAuthn cookie.

This will occur if all of the following conditions are met:

-

A session matching the session ID in the OAMAuthn cookie is not present in the local Data Center.

-

MDC is enabled.

-

There is no session whose Session Index matches the session ID in the OAMAuthn cookie.

-

A valid session exists in the remote Data Center, based on the MDC SessionSync Policy.

17.1.4 Supported Multi-Data Center Topologies

Access Manager supports three Multi-Data Center topologies: Active-Active, Active-Passive, and Active-Hot Standby.

The following sections contain details on these modes.

17.1.4.1 The MDC Active-Active Mode

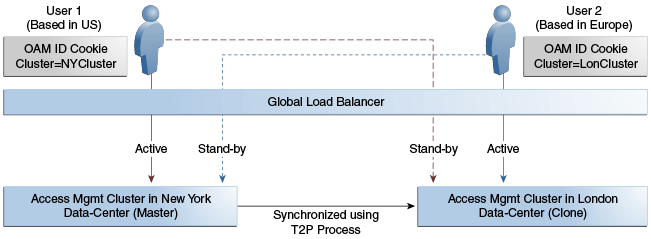

In an Active-Active topology, the Master and Clone data centers are exact replicas and are active at the same time.

They serve different sets of users based on defined criteria (geography, for example). A load balancer routes traffic to the appropriate Data Center. Figure 17-2 illustrates a Multi-Data Center setup in Active-Active mode during normal operations.

Figure 17-2 Active-Active Deployment Mode


In Figure 17-2, the New York Data Center is designated as the Master and all policy and configuration changes are restricted to it. The London Data Center is designated as a Clone and uses T2P tooling and utilities to periodically synchronize data with the New York Data Center. The global load balancer is configured to route users in different geographical locations (US and Europe) to the appropriate data centers (New York or London) based on proximity to the data center (as opposed to proximity of the application being accessed). For example, all requests from US-based User 1 will be routed to the New York Data Center (NYDC) and all requests from Europe-based User 2 will be routed to the London Data Center (LDC).

Note:

The Access Manager clusters in Figure 17-2 are independent and are not part of the same Oracle WebLogic domain. Spanning a WebLogic domain across data centers is not recommended.

In this example, if NYDC were overloaded with requests, the global load balancer would start routing User 1 requests to the clone Access Manager cluster in LDC. From the user's OAM_ID cookie, the clone Access Manager cluster can tell that there is a valid session in the master cluster, so it creates a new session without prompting the user to authenticate again. Further, the session adoption policy can be configured so that the clone Access Manager cluster makes a back-end request for session details to the master Access Manager cluster using the Oracle Access Protocol (OAP). The session adoption policy can also be configured to invalidate the remote session (the session in NYDC) so that the user has a session in only one data center at a time.

Figure 17-3 illustrates how a user might be rerouted if the Master cluster is overloaded or down. If the Master Access Manager cluster goes completely down, the clone Access Manager cluster tries to obtain User 1's session details from it; because the master cluster is unreachable, User 1 is forced to re-authenticate and establish a new session in the clone Access Manager cluster. In this case, any information stored in the previous session is lost.
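The clone cluster's choices in this scenario can be summarized as a small decision function. This is a hypothetical Python sketch: `AdoptionPolicy`, its two flags, and `adopt_session` are invented names mirroring the two policy options described above (back-end OAP fetch and remote invalidation), not Access Manager configuration keys, and the behavior when no back-end fetch is configured is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class AdoptionPolicy:
    """Hypothetical flags for the two policy options described in the text."""
    fetch_remote_details: bool = False  # back-end OAP request to the master cluster
    invalidate_remote: bool = False     # user keeps a session in only one data center


@dataclass
class AdoptionResult:
    reauthenticate: bool
    session_data: dict = field(default_factory=dict)
    invalidate_remote: bool = False


def adopt_session(policy: AdoptionPolicy, master_reachable: bool,
                  master_session_data: dict) -> AdoptionResult:
    """Decide what a clone cluster does when OAM_ID points at a master session.

    master_session_data stands in for what the OAP back-end call would return.
    """
    if policy.fetch_remote_details and not master_reachable:
        # Master is down: details cannot be fetched, so the user logs in again
        # and anything stored in the previous session is lost.
        return AdoptionResult(reauthenticate=True)
    # Assumption: without a back-end fetch, no remote session attributes are copied.
    data = dict(master_session_data) if policy.fetch_remote_details else {}
    return AdoptionResult(
        reauthenticate=False,
        session_data=data,
        invalidate_remote=policy.invalidate_remote and master_reachable,
    )


policy = AdoptionPolicy(fetch_remote_details=True, invalidate_remote=True)
print(adopt_session(policy, master_reachable=False, master_session_data={"k": "v"}).reauthenticate)  # True
```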

Note:

In an Active-Active topology with agent failover, an agent has the Access Manager servers in one Data Center configured as primary and the Access Manager servers in the other Data Centers configured as secondary, to support failover.

For more details on an Active-Active topology, see Active-Active Multi-Data Center Topology Deployment.

17.1.4.2 The MDC Active-Passive Mode

In an Active-Passive topology, the primary Data Center is operational and the clone Data Center is passive: its services are not started. If the primary Data Center fails, the clone does not have to be brought up immediately, but it can be brought up within a reasonable amount of time.

This use case does not require an MDC setup, although policy data is kept in sync.

17.1.4.3 The MDC Active-Hot Standby Mode

In an Active-Hot Standby topology, one of the Data Centers is in hot standby mode. Traffic is not routed to the Hot Standby Data Center unless the Active Data Center goes down.

Use this mode when you do not need additional data centers to handle day-to-day traffic but want one kept ready. To deploy in Active-Hot Standby Mode, follow the Active-Active Mode steps but do not route traffic to the data center defined as Hot Standby. The Hot Standby data center continues to sync data but is used only when the load balancer or an administrator directs traffic to it.