The implementation of server pool clustering within Oracle VM differs depending on whether the Oracle VM Servers used in the server pool are based on an x86 or SPARC architecture. However, the behavior of a server pool cluster and the way in which it is configured within Oracle VM Manager are largely the same regardless of the platform used. This means that server pool clustering is handled automatically by Oracle VM Manager as soon as you enable it for a server pool, as long as the requirements for clustering are met.

In this section we discuss how server pool clusters work for each of the different architectures, and describe the requirements for clustering to be enabled.

Oracle VM works in concert with Oracle OCFS2 to provide shared access to server pool resources residing in an OCFS2 file system. This shared access feature is crucial in the implementation of high availability (HA) for virtual machines running on x86 Oracle VM Servers that belong to a server pool with clustering enabled.

OCFS2 is a cluster file system developed by Oracle for Linux, which allows multiple nodes (Oracle VM Servers) to access the same disk at the same time. OCFS2, which provides both performance and HA, is used in many applications that are cluster-aware or that have a need for shared file system facilities. With Oracle VM, OCFS2 ensures that Oracle VM Servers belonging to the same server pool access and modify resources in the shared repositories in a controlled manner.

The OCFS2 software includes the core file system, which offers the standard file system interfaces and behavioral semantics and also includes a component which supports the shared disk cluster feature. The shared disk component resides mostly in the kernel and is referred to as the O2CB cluster stack. It includes:

- A disk heartbeat to detect live servers.
- A network heartbeat for communication between the nodes.
- A Distributed Lock Manager (DLM) which allows shared disk resources to be locked and released by the servers in the cluster.

OCFS2 also offers several tools to examine and troubleshoot the OCFS2 components. For detailed information on OCFS2, see the OCFS2 documentation at:

http://oss.oracle.com/projects/ocfs2/documentation/

Oracle VM decouples storage repositories and clusters so that if a storage repository is taken off-line, the cluster is still available. A loss of one heartbeat device does not force an Oracle VM Server to self-fence.

When you create a server pool, you have a choice to activate the cluster function which offers these benefits:

- Shared access to the resources in the repositories accessible by all Oracle VM Servers in the cluster.
- Protection of virtual machines in the event of a failure of any Oracle VM Server in the server pool.

You can choose to configure the server pool cluster and enable HA in a server pool, when you create or edit a server pool within Oracle VM Manager. See Create Server Pool and Edit Server Pool in the Oracle VM Manager User's Guide for more information on creating and editing a server pool.

During server pool creation, the server pool file system specified for the new server pool is accessed and formatted as an OCFS2 file system. This formatting creates several management areas on the file system including a region for the global disk heartbeat. Oracle VM formats the server pool file system as an OCFS2 file system whether the file system is accessed by the Oracle VM Servers as an NFS share, an FC LUN or an iSCSI LUN. See Section 3.8, “How is Storage Used for Server Pool Clustering?” for more information on how storage is used for the cluster file system and the requirements for a stable cluster heartbeat function.

As Oracle VM Servers are added to a newly created server pool, Oracle VM:

- Creates the cluster configuration file and the cluster time-out file.
- Pushes the configuration files to all Oracle VM Servers in the server pool.
- Starts the cluster.

Cluster timeout can only be configured during server pool creation. The cluster timeout determines how long a server should be unavailable within the cluster before failover occurs. Setting this value too low can cause false positives, where failover may occur due to a brief network outage or a sudden load spike. Setting the cluster timeout to a higher value can mean that a server is unavailable for a lengthier period before failover occurs. The maximum value for the cluster timeout is 300 seconds, which means that in the event that a server becomes unavailable, failover may not occur until 5 minutes later. The recommended approach to setting timeout values is to use the functionality provided within the Oracle VM Manager when creating or editing a server pool. See Create Server Pool and Edit Server Pool in the Oracle VM Manager User's Guide for more information on setting the timeout value.

On each Oracle VM Server in the cluster, the cluster configuration file is located at /etc/ocfs2/cluster.conf, and the cluster time-out file is located at /etc/sysconfig/o2cb. Note that it is imperative that every node in the cluster has the same o2cb parameters set. Do not attempt to configure different parameters for different servers within a cluster, or the heartbeat device may not be mounted. Cluster heartbeat parameters within the cluster timeout file are derived from the cluster timeout value defined for a server pool during server pool creation. The following algorithms are used to set the listed o2cb parameters accordingly:

o2cb_heartbeat_threshold = (timeout/2) + 1

o2cb_idle_timeout_ms = (timeout/2) * 1000
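
The two formulas above can be worked through with a short sketch. The function name `o2cb_parameters` is hypothetical, and the sketch assumes that `timeout/2` is an integer division, since the text does not say how odd timeout values are rounded. The upper bound of 300 seconds is the maximum cluster timeout stated earlier.

```python
MAX_CLUSTER_TIMEOUT_S = 300  # maximum cluster timeout stated in the text


def o2cb_parameters(timeout_seconds: int) -> dict:
    """Derive the o2cb heartbeat parameters from a cluster timeout in seconds.

    Assumption: timeout/2 is treated as integer division; the source does
    not specify the rounding behavior for odd values.
    """
    if not 0 < timeout_seconds <= MAX_CLUSTER_TIMEOUT_S:
        raise ValueError("cluster timeout must be between 1 and 300 seconds")
    half = timeout_seconds // 2
    return {
        "o2cb_heartbeat_threshold": half + 1,
        "o2cb_idle_timeout_ms": half * 1000,
    }


if __name__ == "__main__":
    # Example: a cluster timeout of 120 seconds.
    print(o2cb_parameters(120))
```

For a timeout of 120 seconds this gives a heartbeat threshold of 61 and an idle timeout of 60000 ms. For the maximum timeout of 300 seconds the values are 151 and 150000 ms.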

Starting the cluster activates several services and processes on each of the Oracle VM Servers in the cluster. The most important processes and services are discussed in Table 6.1, “Cluster services”.

Table 6.1 Cluster services

| Service | Description |
|---|---|
| o2net | The o2net process creates TCP/IP intra-cluster node communication channels on port 7777 and sends regular keep-alive packets to each node in the cluster to verify that the nodes are alive. The intra-cluster node communication uses the network with the Cluster Heartbeat role. By default, this is the Server Management network. You can however create a separate network for this function. See Section 5.6, “How are Network Functions Separated in Oracle VM?” for information about the Cluster Heartbeat role. Make sure the firewall on each Oracle VM Server in the cluster allows network traffic on the heartbeat network. By default, the firewall is disabled on Oracle VM Servers after installation. |
| o2hb-diskid | The server pool cluster also employs a disk heartbeat check. The o2hb process is responsible for the global disk heartbeat component of the cluster. The heartbeat feature uses a file in the hidden region of the server pool file system. Each pool member writes to its own block of this region every two seconds, indicating it is alive. It also reads the region to maintain a map of live nodes. If a pool member stops writing to its block, then the Oracle VM Server is considered dead. The Oracle VM Server is then fenced. As a result, that Oracle VM Server is temporarily removed from the active server pool. This fencing process allows the active Oracle VM Servers in the pool to access the resources of the fenced Oracle VM Server. When the fenced server comes back online, it rejoins the server pool. However, this fencing process is internal behavior that does not result in noticeable changes to the server pool configuration. For instance, the Oracle VM Manager Web Interface still displays a fenced Oracle VM Server as a member of the server pool. |
| o2cb | The o2cb service is central to cluster operations. When an Oracle VM Server boots, the o2cb service starts automatically. This service must be up for the mount of shared repositories to succeed. |
| ocfs2 | The OCFS2 service is responsible for the file system operations. This service also starts automatically. |
| ocfs2_dlm and ocfs2_dlmfs | The DLM modules (ocfs2_dlm, ocfs2_dlmfs) and processes (user_dlm, dlm_thread, dlm_wq, dlm_reco_thread, and so on) are part of the Distributed Lock Manager. OCFS2 uses a DLM to track and manage locks on resources across the cluster. It is called distributed because each Oracle VM Server in the cluster only maintains lock information for the resources it is interested in. If an Oracle VM Server dies while holding locks for resources in the cluster, for example, a lock on a virtual machine, the remaining Oracle VM Servers in the server pool gather information to reconstruct the lock state maintained by the dead Oracle VM Server. |
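
The disk heartbeat described for o2hb can be pictured with a toy model: each pool member updates its own block, and every member reads the whole region to build a map of live nodes. The sketch below is an illustration only and is not how o2hb is implemented. The class name `HeartbeatMonitor`, the use of a counter as block content, and the meaning given to `threshold` (the number of consecutive unchanged reads after which a node is treated as dead) are all assumptions.

```python
from dataclasses import dataclass, field

HEARTBEAT_INTERVAL_S = 2  # each pool member writes to its block every two seconds


@dataclass
class HeartbeatMonitor:
    """Toy model of one node reading the shared heartbeat region."""

    threshold: int  # assumed: unchanged reads tolerated before a node is dead
    last_seen: dict = field(default_factory=dict)  # node -> last counter read
    misses: dict = field(default_factory=dict)     # node -> unchanged reads so far

    def scan(self, region: dict) -> set:
        """Read every block in the region and return the nodes considered alive."""
        alive = set()
        for node, counter in region.items():
            if self.last_seen.get(node) != counter:
                self.misses[node] = 0  # block changed, so the node wrote to it
            else:
                self.misses[node] = self.misses.get(node, 0) + 1
            self.last_seen[node] = counter
            if self.misses[node] < self.threshold:
                alive.add(node)
        return alive


if __name__ == "__main__":
    # Hypothetical node names; "ovs2" stops writing after the first scan.
    region = {"ovs1": 0, "ovs2": 0}
    monitor = HeartbeatMonitor(threshold=3)
    for tick in range(4):
        region["ovs1"] = tick  # ovs1 keeps updating its block
        print(tick * HEARTBEAT_INTERVAL_S, "s:", sorted(monitor.scan(region)))
```

In this model a node that resumes writing to its block is counted as alive again on the next scan, which mirrors the statement that a fenced server rejoins the pool when it comes back online.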

Do not manually modify the cluster configuration files, or start and stop the cluster services. Oracle VM Manager automatically starts the cluster on Oracle VM Servers that belong to a server pool. Manually configuring or operating the cluster may lead to cluster failure.

When you create a repository on a physical disk, an OCFS2 file system is created on the physical disk. This occurs for local repositories as well. The resources in the repositories, for example, virtual machine configuration files, virtual disks, ISO files, templates and virtual appliances, can then be shared safely across the server pool. When a server pool member stops or dies, the resources owned by the departing server are recovered, and the change in status of the server pool members is propagated to all the remaining Oracle VM Servers in the server pool.

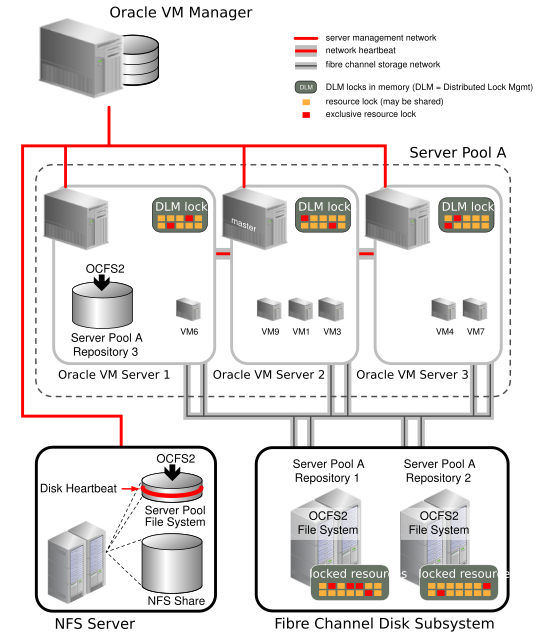

Figure 6.1, “Server Pool clustering with OCFS2 features” illustrates server pool clustering, the disk and network heartbeats, and the use of the DLM feature to lock resources across the cluster.

Figure 6.1, “Server Pool clustering with OCFS2 features” represents a server pool with three Oracle VM Servers. The server pool file system associated with this server pool resides on an NFS share. During server pool creation, the NFS share is accessed, a disk image is created on the NFS share and the disk image is formatted as an OCFS2 file system. This technique allows all Oracle VM Server pool file systems to be accessed in the same manner, using OCFS2, whether the underlying storage element is an NFS share, an iSCSI LUN or a Fibre Channel LUN.

The network heartbeat, which is illustrated as a private network connection between the Oracle VM Servers, is configured before creating the first server pool in your Oracle VM environment. After the server pool is created, the Oracle VM Servers are added to the server pool. At that time, the cluster configuration is created, and the cluster state changes from off-line to heartbeating. Finally, the server pool file system is mounted on all Oracle VM Servers in the cluster and the cluster state changes from heartbeating to DLM ready. As seen in Figure 6.1, “Server Pool clustering with OCFS2 features”, the heartbeat region is global to all Oracle VM Servers in the cluster, and resides on the server pool file system. Using the network heartbeat, the Oracle VM Servers establish communication channels with other Oracle VM Servers in the cluster, and send keep-alive packets to detect any interruption on the channels.
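
When checking that the firewall allows traffic on the heartbeat network, a read-only TCP connection test against the o2net port (7777, as listed in Table 6.1) can be useful. The following is a minimal sketch using only the Python standard library. It does not start, stop, or configure any cluster service. The function name `can_reach` and the host names in the usage example are hypothetical.

```python
import socket

O2NET_PORT = 7777  # port used by o2net according to Table 6.1


def can_reach(host: str, port: int = O2NET_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    # Hypothetical heartbeat-network host names of the other pool members.
    for peer in ("ovs2.example.com", "ovs3.example.com"):
        state = "reachable" if can_reach(peer) else "NOT reachable"
        print(f"{peer}:{O2NET_PORT} is {state}")
```

A successful connection only shows that the port is open from the machine where the check runs. It says nothing about the health of the cluster itself.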

For each newly added repository on a physical storage element, an OCFS2 file system is created on the repository, and the repository is usually presented to all Oracle VM Servers in the pool. Figure 6.1, “Server Pool clustering with OCFS2 features” shows that Repository 1 and Repository 2 are accessible by all of the Oracle VM Servers in the pool. While this is the usual configuration, it is also feasible that a repository is accessible by only one Oracle VM Server in the pool. This is indicated in the figure by Repository 3, which is accessible by Oracle VM Server 1 only. Any virtual machine whose resources reside on this repository cannot take advantage of the high availability feature afforded by the server pool.

Note that repositories built on NFS shares are not formatted as OCFS2 file systems. See Section 3.9, “Where are Virtual Machine Resources Located?” for more information on repositories.

Figure 6.1, “Server Pool clustering with OCFS2 features” shows several virtual machines with resources in shared Repositories 1 and 2. As virtual machines are created, started, stopped, or migrated, the resources for these virtual machines are locked by the Oracle VM Servers needing these resources. Each Oracle VM Server ends up managing a subset of all the locked resources in the server pool. A resource may have several locks against it. An exclusive lock is requested when anticipating a write to the resource while several read-only locks can exist at the same time on the same resource. Lock state is kept in memory on each Oracle VM Server as shown in the diagram. The distributed lock manager (DLM) information kept in memory is exposed to user space in the synthetic file system called dlmfs, mounted under /dlm. If an Oracle VM Server fails, its locks are recovered by the other Oracle VM Servers in the cluster and virtual machines running on the failed Oracle VM Server are restarted on another Oracle VM Server in the cluster. If an Oracle VM Server is no longer communicating with the cluster via the heartbeat, it can be forcibly removed from the cluster. This is called fencing. An Oracle VM Server can also fence itself if it realizes that it is no longer part of the cluster. The Oracle VM Server uses a machine reset to fence. This is the quickest way for the Oracle VM Server to rejoin the cluster.
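
The locking rule described above, where several read-only locks may coexist on a resource but an exclusive lock excludes all others, can be sketched as follows. This is a single-process toy model, not the OCFS2 DLM, which is distributed and supports more than these two modes. The names `LockMode` and `Resource`, and the server and file names in the example, are hypothetical.

```python
from enum import Enum


class LockMode(Enum):
    READ = "read-only"
    EXCLUSIVE = "exclusive"


class Resource:
    """A lockable resource, for example a virtual machine configuration file."""

    def __init__(self, name: str):
        self.name = name
        self.holders = {}  # server name -> LockMode currently held

    def try_lock(self, server: str, mode: LockMode) -> bool:
        """Grant the lock if it is compatible with locks held by other servers."""
        others = [m for s, m in self.holders.items() if s != server]
        if mode is LockMode.EXCLUSIVE and others:
            return False  # a writer needs the resource to itself
        if mode is LockMode.READ and LockMode.EXCLUSIVE in others:
            return False  # readers must wait for the writer
        self.holders[server] = mode
        return True

    def release(self, server: str) -> None:
        """Drop the lock held by a server; also models recovery of a failed server."""
        self.holders.pop(server, None)


if __name__ == "__main__":
    disk = Resource("vm1.img")
    print(disk.try_lock("ovs1", LockMode.READ))       # True
    print(disk.try_lock("ovs2", LockMode.READ))       # True
    print(disk.try_lock("ovs3", LockMode.EXCLUSIVE))  # False
```

Calling `release` for a failed server stands in for the recovery step, after which the surviving servers can acquire the locks it held.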

There are some situations where removing an Oracle VM Server from a server pool may generate an error. Typical examples include the situation where an OCFS2-based repository is still presented to the Oracle VM Server at the time that you attempt to remove it from the server pool, or if the Oracle VM Server has lost access to the server pool file system or the heartbeat function is failing for that Oracle VM Server. The following list describes steps that can be taken to handle these situations.

Make sure that there are no repositories presented to the server when you attempt to remove it from the server pool. If this is the cause of the problem, the error that is displayed usually indicates that there are still OCFS2 file systems present. See Present or Unpresent Repository in the Oracle VM Manager User's Guide for more information.

If a pool file system is causing the remove operation to fail, other processes might be working on the pool file system during the unmount. Try removing the Oracle VM Server at a later time.

In a case where you try to remove a server from a clustered server pool on a newly installed instance of Oracle VM Manager, it is possible that the file server has not been refreshed since the server pool was discovered in your environment. Try refreshing all storage and all file systems on your storage before attempting to remove the Oracle VM Server.

In the situation where the Oracle VM Server cannot be removed from the server pool because the server has lost network connectivity with the rest of the server pool, or the storage where the server pool file system is located, a critical event is usually generated for the server in question. Try acknowledging any critical events that have been generated for the Oracle VM Server in question. See Events Perspective in the Oracle VM Manager User's Guide for more information. Once these events have been acknowledged you can try to remove the server from the server pool again. In most cases, the removal of the server from the server pool succeeds after critical events have been acknowledged, although some warnings may be generated during the removal process. Once the server has been removed from the server pool, you should resolve any networking or storage access issues that the server may be experiencing.

If the server is still experiencing trouble accessing storage and all critical events have been acknowledged and you are still unable to remove it from the server pool, try to reboot the server to allow it to rejoin the cluster properly before attempting to remove it again.

If the server pool file system has become corrupt for some reason, or a server still contains remnants of an old stale cluster, it may be necessary to completely erase the server pool and reconstruct it from scratch. This usually involves performing a series of manual steps on each Oracle VM Server in the cluster and should be attempted with the assistance of Oracle Support.

Since the Oracle OCFS2 file system caters for Linux and not for Solaris, SPARC server pools are unable to use this file system to implement clustering functionality. Therefore, clustering for SPARC server pools cannot be implemented on physical disks and is limited to using NFS storage to host the cluster file system. Clustering for SPARC server pools relies on an additional package, which must be installed in the control domain for each Oracle VM Server in the server pool. This package contains a distributed lock manager (DLM) that is used to facilitate the cluster. Installation of this package is described in more detail in Installing the Distributed Lock Manager (DLM) Package in the Oracle VM Installation and Upgrade Guide.

The DLM package used to achieve clustering for SPARC server pools is a port of the tools that are used within OCFS2 on Linux, but excludes the actual OCFS2 file system itself. The DLM package includes:

- A disk heartbeat to detect live servers.
- A network heartbeat for communication between the nodes.
- A Distributed Lock Manager (DLM) which allows shared disk resources to be locked and released by the servers in the cluster.

The only major difference between clustering on SPARC and on x86 is that there are limitations to the types of shared disks that SPARC infrastructure can use to host the cluster file system. Without OCFS2, clustering depends on a file system that is already built to facilitate shared access and this is why only NFS is supported for this purpose. Unlike in x86 environments, when NFS is used to host a SPARC server pool file system, an OCFS2 disk image is not created on the NFS share. Instead, the cluster data is simply stored directly on the NFS file system itself.

With this information in mind, the description provided in Section 6.9.1, “Clustering for x86 Server Pools” largely applies equally to clustering on SPARC, although the implementation does not use OCFS2.

A final point to bear in mind is that clustering for SPARC server pools is only supported where a single control domain has been configured on all of the Oracle VM Servers in the server pool. If you have decided to make use of multiple service domains, you must configure an unclustered server pool. See Section 6.10, “Unclustered Server Pools” for more information.