Overview

THIS FEATURE IS NO LONGER SUPPORTED.

The Oracle Private Cloud Appliance Cloud Native Environment automates the provisioning of Oracle VM infrastructure and Kubernetes components to provide an integrated solution for Oracle Private Cloud Appliance. Oracle Private Cloud Appliance administrators define and build Kubernetes clusters and later scale them up or down depending on the Kubernetes administrator's needs.

Oracle Private Cloud Appliance automates deployment, scaling and management of Kubernetes application containers. To use the Oracle Private Cloud Appliance Cloud Native Environment 1.2, follow the steps in this tutorial.

If you are currently using Oracle Private Cloud Appliance Cloud Native Environment 1.0, note that the new version of the environment, 1.2, requires new clusters to be constructed. Clusters built with 1.0 and clusters built with 1.2 can run simultaneously in an environment; however, mixed virtual appliance templates within a cluster are not supported. Clusters constructed with the 1.0 virtual appliance cannot be upgraded in place to the Kubernetes version shipped with the 1.2 virtual appliance.

If you have an application deployed on the 1.0 virtual appliance, consider a step-by-step application migration:

- Start a test cluster on the 1.2 virtual appliance that uses Kubernetes 1.18

- Test application compatibility between Kubernetes 1.17 (deployed with the 1.0 virtual appliance) and the updated Kubernetes version

- Migrate the application to the updated cluster

For instructions on using the prior version, Oracle Private Cloud Appliance Cloud Native Environment 1.0, see 2.13 Kubernetes Engine.

Guidelines and Limitations

This section describes the guidelines and limitations for Kubernetes on Oracle Private Cloud Appliance Cloud Native Environment.

Kubernetes clusters built with the Oracle Private Cloud Appliance Cloud Native Environment service always contain three master nodes and a load balancer.

A Kubernetes cluster requires a static, floating, IPv4 address for the load balancer (regardless of whether the virtual machines will use DHCP or static addresses for the cluster nodes).

Only IPv4 clusters are supported.

The DHCP Kubernetes cluster type can be used only if a DHCP server exists on the external network used for the Kubernetes cluster.

If an external network with a DHCP server is used, the load balancer must still have a static address that cannot be allocated by the DHCP server and all nodes must be able to obtain a DHCP address.

Mixed virtual appliance templates within a cluster are not supported.

If an external network with static host names and IP addresses is used, all host names must resolve to a single IP address.

A maximum of 255 Kubernetes clusters can be created per Oracle Private Cloud Appliance. The number of started Kubernetes clusters is limited by the memory and storage of the compute nodes provisioned to the tenancies.

There is a maximum of 16 worker nodes per Kubernetes cluster, though up to 255 node pools that define the shape of the worker nodes can be created per Kubernetes cluster.

Before provisioning Kubernetes clusters, an Oracle Private Cloud Appliance administrator should understand the Virtual Router Redundancy Protocol (VRRP) and determine whether it is already in use on the external network that will host the Kubernetes cluster(s). For more information, see Virtual Routing Redundancy Protocol.

When deprovisioning an Oracle Private Cloud Appliance compute node running a Kubernetes cluster, follow this procedure: “Deprovisioning and Replacing a Compute Node”

A mirrored storage pool should be used with repositories hosted on the internal Oracle ZFS Storage Appliance. Without mirrored storage, etcd leadership changes are more frequent in the Kubernetes cluster.

The provisioned Kubernetes environment does not include an Ingress controller or load balancer for deployed applications; these should be added to the environment after deployment.

The provisioned Kubernetes environment does not include CSI drivers for storage expansion. NFS volume mounts can be used with the internal Oracle ZFS Storage Appliance; see Add nfs-exception.

The Kubernetes cluster created in the tenancy is not backed up by the PCA backup process. A Kubernetes cluster administrator should implement an etcd backup strategy for active clusters. Refer to the etcd documentation for how to back up the etcd cluster: https://kubernetes.io/docs/tasks/administer-cluster/configure-upgrade-etcd/

Perform a manual backup of a Kubernetes cluster configuration after performing any changes (CRUD) on Kubernetes cluster configuration to ensure a consistent backup.

The time it takes to start a Kubernetes cluster after defining it can vary widely depending on the size of the Kubernetes cluster and on the usage of Oracle VM management operations during the building of the cluster. If Oracle VM is not heavily used at the same time as the Kubernetes cluster start, a default Kubernetes cluster that builds 3 master nodes and 3 worker nodes takes approximately 45 minutes. For each worker node added (or removed), adjust the build time by approximately 5 minutes, depending on Oracle VM management usage and overlapping cluster operations.

Additionally, the more compute nodes there are in an Oracle Private Cloud Appliance, the longer it takes to build a cluster, because start and stop times increase as Network/VLAN interfaces are created and added to, or removed from, all of the nodes.
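As a rough worked example of the timing guidance above, the following sketch turns the stated figures (about 45 minutes for 3 master and 3 worker nodes, and about 5 minutes per worker node added or removed) into an estimate. The function name and the strictly linear model are illustrative assumptions; real build times also depend on Oracle VM load and on the number of compute nodes.

```python
# Figures taken from the guidelines above; the linear model is an assumption.
MAX_WORKERS = 16          # maximum worker nodes per Kubernetes cluster
BASELINE_MINUTES = 45     # approximate build time for 3 masters + 3 workers
BASELINE_WORKERS = 3
MINUTES_PER_WORKER = 5    # approximate adjustment per worker added or removed


def estimate_build_minutes(workers: int) -> int:
    """Return a rough build-time estimate in minutes (hypothetical helper)."""
    if not 0 <= workers <= MAX_WORKERS:
        raise ValueError(f"workers must be between 0 and {MAX_WORKERS}")
    return BASELINE_MINUTES + (workers - BASELINE_WORKERS) * MINUTES_PER_WORKER


for count in (1, 3, 8, 16):
    print(count, "workers: about", estimate_build_minutes(count), "minutes")
```

Treat the result as a lower bound for planning; a busy Oracle VM Manager or a large rack can extend the build considerably.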

Download the Oracle Private Cloud Appliance Cloud Native Environment VM Virtual Appliance

To prepare your Oracle Private Cloud Appliance for a cluster environment, download the Oracle Private Cloud Appliance Cloud Native Environment VM Virtual Appliance, then create the K8s_Private network for Kubernetes, as shown in the next section.

The virtual appliance templates behave much like other virtual appliances in the Oracle VM environment. The Kubernetes virtual appliance requires 50 GB of space per virtual machine created from it. The virtual appliance must be added to each repository that will be used to host Kubernetes clusters or cluster node pools.
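To make the space requirement concrete, the sketch below multiplies the 50 GB per virtual machine stated above by the number of cluster nodes. It assumes that only the three master nodes and the worker nodes consume appliance disk space and that all of them live in one repository; the helper name is hypothetical.

```python
GB_PER_VM = 50      # space required per virtual machine, as stated above
MASTER_NODES = 3    # clusters always contain three master nodes


def repository_space_gb(worker_nodes: int) -> int:
    """Approximate repository space for one cluster, in GB (illustrative)."""
    if worker_nodes < 0:
        raise ValueError("worker_nodes cannot be negative")
    return (MASTER_NODES + worker_nodes) * GB_PER_VM


print("Default cluster (3 workers):", repository_space_gb(3), "GB")
print("Largest cluster (16 workers):", repository_space_gb(16), "GB")
```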

Download the Oracle Private Cloud Appliance Cloud Native Environment from Oracle Support to a reachable HTTP server. Search for "Oracle Private Cloud Appliance Cloud Native Environment" to locate the file.

Tip: To stage a simple HTTP server with Python 2, see https://docs.python.org/2/library/simplehttpserver.html. For example, change directory to where you downloaded the virtual appliance and issue this command:

python -m SimpleHTTPServer 8000

Enter this URL in the next step:

http://<your-client-IP-address>:8000/<the-downloaded-filename>
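The SimpleHTTPServer module in the tip exists only in Python 2; in Python 3 the same functionality lives in the standard http.server module. The following is a minimal sketch of an equivalent staging server, assuming Python 3.7 or later. The function names, the default port, and the example host address are illustrative choices, not part of the Oracle procedure.

```python
import functools
import http.server


def make_server(directory, port=8000, bind=""):
    """Create (but do not start) an HTTP server that serves `directory`."""
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    return http.server.ThreadingHTTPServer((bind, port), handler)


def appliance_url(host, port, filename):
    """Build the URL to enter in the Import Virtual Appliances dialog."""
    return f"http://{host}:{port}/{filename}"


# Example values only: replace the host with your client IP address.
print(appliance_url("192.0.2.10", 8000, "pca-k8s-1-2-0.ova"))

# To serve the current directory until interrupted, you could run:
#   make_server(".").serve_forever()
```

Stop the server once the import into every repository has finished, since it exposes the whole directory to the network.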

From the Oracle VM Manager Repositories tab, select the desired repository, then choose Virtual Appliances.


Click Import Virtual Appliances then:

Enter the URL for the virtual appliance you just downloaded.

The Oracle Private Cloud Appliance Cloud Native Environment VM Virtual Appliance is here: (input URL)

Enter the hostname or IP address of the proxy server.


If Oracle VM changed the name of the virtual appliance (it may have appended characters), rename it back to its base name (pca-k8s-1-0-0.ova, or pca-k8s-1-2-0.ova). For details, see version information.

Repeat for each repository where you want to build Kubernetes cluster nodes.

Check the README file or MOS note related to the downloaded virtual appliance. A script may need to be run on the Oracle Private Cloud Appliance management nodes to update the valid virtual appliance names. For example, downloading the pca-k8s-1-2-0.ova to an Oracle Private Cloud Appliance 2.4.3 will require a script to be run or the pca-k8s-1-2-0.ova will not be recognized by create kube-cluster.

Create the K8s_Private Network

The K8s_Private network is required to provide a way for the cluster to communicate internally. Once configured, the K8s_Private network should require minimal management.

Using SSH and an account with superuser privileges, log into the active management node.

Note: The default root password is Welcome1. For security reasons, you must set a new password at your earliest convenience.

Launch the Oracle Private Cloud Appliance command line interface.

    # pca-admin
    Welcome to PCA! Release: 2.4.3
    PCA>

Create the private network as follows:

PCA> create network K8S_Private rack_internal_network

Add the private network to each tenant group that will be used for Kubernetes clusters.

PCA> add network-to-tenant-group K8S_Private tenant_group1

Note: Depending upon the number of compute nodes available in Oracle Private Cloud Appliance, the add network-to-tenant-group command may take 10 or more minutes. You should perform cluster creation only after the K8S_Private network is assigned to all compute nodes.

Verify the network was created.

PCA> list network

Create a Kubernetes Cluster on a DHCP Network

This section describes how to create a Kubernetes cluster definition for a 3 master node, 3 worker node cluster when a DHCP server is available on the external network used for the Kubernetes cluster nodes. The DHCP server and network must not allow multiple allocations of the same address or cluster creation could fail. A single static address is required for the load balancer on the same network (an address that the DHCP server does not control and will not be used by other users on the network).
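The load balancer requirement above (a static IPv4 address on the cluster network that the DHCP server does not hand out) can be checked before running create kube-cluster. The sketch below is one way to do that with Python's standard ipaddress module; the function name, the network range, and the DHCP pool boundaries are hypothetical values that you would replace with your own.

```python
import ipaddress


def load_balancer_address_ok(lb_ip, network_cidr, dhcp_start, dhcp_end):
    """Return True if lb_ip is IPv4, inside the network, and outside the DHCP pool."""
    try:
        address = ipaddress.IPv4Address(lb_ip)
    except ipaddress.AddressValueError:
        return False  # not a valid IPv4 address (only IPv4 clusters are supported)
    network = ipaddress.IPv4Network(network_cidr)
    pool_start = ipaddress.IPv4Address(dhcp_start)
    pool_end = ipaddress.IPv4Address(dhcp_end)
    in_dhcp_pool = pool_start <= address <= pool_end
    return address in network and not in_dhcp_pool


# Hypothetical network layout used only for illustration.
print(load_balancer_address_ok("172.16.0.157", "172.16.0.0/15",
                               "172.16.1.0", "172.16.1.255"))
```

This check cannot tell whether another user on the network already holds the address, so confirm that separately.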


From the Oracle Private Cloud Appliance command line interface, specify a name for the cluster, a server pool (tenancy), the external network that has the DHCP server on it, the static load balancer IP address, the storage repository, and, optionally, the virtual appliance name.

    PCA> create kube-cluster cluster-1 Rack1_ServerPool vm_public_vlan load_balancer_ipaddress Rack1-Repository
    Kubernetes cluster configuration (cluster-1) created
    Status: Success

Note: vm_public_vlan or any external network you use for your cluster must be up and reachable to successfully create a cluster. This network must be assigned to all compute nodes in the storage pool you use, otherwise clusters will not start.

Verify the cluster definition was created correctly.

    PCA> show kube-cluster cluster-1
    ----------------------------------------
    Cluster              cluster-1
    Tenant_Group         Rack1_ServerPool
    Tenant_Group_ID      0004fb000020000001398d8312a2bc3b
    State                CONFIGURED
    Sub_State            VALID
    Ops_Required         None
    Load_Balancer        100.80.151.119
    External_Network     vm_public_vlan
    External_Network_ID  1096679b1e
    Repository           Rack1-Repository
    Repository_ID        0004fb000030000005398d83dd67126791
    Assembly             PCA_K8s_va.ova
    Assembly_ID          11af134854_PCA_K8s_OVM_OL71
    Masters              3
    Workers              3
    ----------------------------------------
    Status: Success

To add worker nodes to the cluster definition, specify the cluster name, and the quantity of nodes in the worker pool, or the names of the nodes in the worker pool. See “set kube-worker-pool”.

Start the cluster. This step builds the cluster from the cluster configuration you just created. Depending on the size of the cluster definition, this process can take from 30 minutes to several hours. A master node pool is defined with 3 master nodes and cannot be changed. However, worker nodes may be added to the DHCP cluster definition.

PCA> start kube-cluster cluster-1

Follow the progress of the build using the show kube-cluster command.

    PCA> show kube-cluster cluster-1
    ----------------------------------------
    Cluster              cluster-1
    Tenant_Group         Rack1_ServerPool
    Tenant_Group_ID      0004fb000020000001398d8312a2bc3b
    State                AVAILABLE
    Sub_State            None
    Ops_Required         None
    Load_Balancer        172.16.0.157
    Vrrp_ID              236
    External_Network     default_external
    Cluster_Network_Type dhcp
    Gateway              None
    Netmask              None
    Name_Servers         None
    Search_Domains       None
    Repository           Rack2-Repository
    Assembly             PCA_K8s_va.ova
    Masters              3
    Workers              3
    Cluster_Start_Time   2020-06-14 06:11:32.765239
    Cluster_Stop_Time    None
    Job_ID               None
    Job_State            None
    Error_Code           None
    Error_Message        None
    ----------------------------------------
    Status: Success

For more information on cluster states, see Section 4.2.52, “start kube-cluster”.

Once the cluster is started, collect the node pool information for the cluster.

Save this information; you will use it to hand the clusters off to Kubernetes later.

    PCA> list node-pool --filter-column=Cluster --filter=cluster-1

    Cluster     Node_Pool   Tenant_Group       CPUs   Memory   Nodes
    -------     ---------   ------------       ----   ------   -----
    cluster-1   master      Rack1_ServerPool   4      16384    3
    cluster-1   worker      Rack1_ServerPool   2      8192     2
    ----------------
    2 rows displayed

    Status: Success

Once the cluster is in the AVAILABLE state, consider performing a manual backup to capture the new cluster state. See “backup”.
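If you capture the output of show kube-cluster in a file or a script, the State field can be checked programmatically rather than by eye. The sketch below assumes the CLI prints one field per line as a name followed by a value, which is how the examples in this section are laid out; the function names are hypothetical and the parser is not part of the Oracle tooling.

```python
def parse_show_output(text):
    """Parse 'Field value' lines from captured show kube-cluster output."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, separator rules, and the echoed prompt line.
        if not line or line.startswith("-") or line.startswith("PCA>"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            fields[parts[0].rstrip(":")] = parts[1]
    return fields


def is_available(fields):
    """Return True once the cluster reports the AVAILABLE state."""
    return fields.get("State") == "AVAILABLE"


sample = """PCA> show kube-cluster cluster-1
----------------------------------------
Cluster              cluster-1
State                AVAILABLE
Masters              3
Workers              3
----------------------------------------
Status: Success"""
print(is_available(parse_show_output(sample)))
```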

Create a Kubernetes Cluster on a Static Network

This section describes how to create a Kubernetes cluster with 3 master nodes and 3 worker nodes on a static network. Before getting started, ensure that you have the following information for the external VM network that will be used:

- The external network name that will be used (the example uses default_external as the external network)

- A static IP address for the load balancer to use

- A host name and the associated IP address for the ‘primordial’ master node

- Two more host names for the additional master nodes (these must resolve to valid IP addresses in the DNS server)

- An additional host name for the worker node (this must resolve to a valid IP address in the DNS server)

- The network mask and gateway on the external network

- The IP address(es) of the DNS server and the search domain(s) for the network
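Because every static host name must resolve to a single IP address, it can help to verify name resolution before defining the cluster. The following is a minimal sketch using Python's standard socket module; the function name and the host names in the example are hypothetical, and the resolver argument exists only so that the check can be exercised without a live DNS server.

```python
import socket


def resolves_to_single_ipv4(hostname, resolver=socket.gethostbyname_ex):
    """Return True if hostname resolves to exactly one IPv4 address."""
    try:
        _name, _aliases, addresses = resolver(hostname)
    except OSError:
        return False  # resolution failed (socket.gaierror is an OSError)
    return len(set(addresses)) == 1


# Hypothetical node names; replace with the names planned for your cluster.
for host in ("cluster-master-0", "cluster-master-1", "cluster-master-2"):
    print(host, "ok" if resolves_to_single_ipv4(host) else "check DNS")
```

Run the check from a machine that uses the same DNS servers and search domains that you plan to pass to set kube-dns.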

Next, several commands must be run to configure the network parameters.

From the Oracle Private Cloud Appliance command line interface, specify a name for the cluster, a server pool, an external network, the load balancer IP address, the storage repository, and optionally a virtual appliance.

    PCA> create kube-cluster cluster-2 Rack1_ServerPool default_external load-balancer_ipaddress Rack1-Repository
    Kubernetes cluster configuration (cluster-2) created
    Status: Success

Set the network type to static for the cluster. Specify the cluster name, network type, netmask, and gateway. See “set kube-network”.

Note: The network you use for your cluster must be up and reachable to successfully create a cluster. This network must be assigned to all compute nodes in the storage pool you use, otherwise clusters will not start.

PCA> set kube-network cluster-2 static netmask gateway_IP

Set the DNS server for the cluster. Specify the cluster name, DNS name server address(es), and search domains. See “set kube-dns”.

PCA> set kube-dns cluster-2 dns_IP_1,dns_IP_2 mycompany.com

Verify the cluster definition was created correctly.

    PCA> show kube-cluster cluster-2
    ----------------------------------------
    Cluster              Static
    Tenant_Group         Rack1_ServerPool
    State                AVAILABLE
    Sub_State            None
    Ops_Required         None
    Load_Balancer        172.16.0.220
    Vrrp_ID              152
    External_Network     default_external
    Cluster_Network_Type static
    Gateway              172.16.0.1
    Netmask              255.254.0.0
    Name_Servers         144.20.190.70
    Search_Domains       ie.company.com,us.voip.companyus.com
    Repository           Rack1-Repository
    Assembly             OVM_OL7U7_x86_64_PVHVM.ova
    Masters              0
    Workers              0
    Cluster_Start_Time   2020-07-06 23:53:17.717562
    Cluster_Stop_Time    None
    Job_ID               None
    Job_State            None
    Error_Code           None
    Error_Message        None
    ----------------------------------------
    Status: Success

To add worker nodes to the cluster definition, specify the cluster name, then list the names of the nodes you want in the worker pool. See “set kube-worker-pool”.

PCA> set kube-worker-pool cluster-2 worker-node-vm7 worker-node-vm8 worker-node9

To add the master pool to the cluster definition, specify the cluster name, list the primary master node with its name and IP address, then list the names of the other nodes you want in the master pool. See “set kube-master-pool”.

PCA> set kube-master-pool cluster-2 cluster-master-0,192.168.0.10 cluster-master-1 cluster-master-2

Start the cluster. This step builds the cluster from the cluster configuration you just created. Depending on the size of the cluster definition this process can take from 30 minutes to several hours.

    PCA> start kube-cluster cluster-2
    Status: Success

Follow the progress of the build using the show kube-cluster command.

PCA> show kube-cluster cluster-2

For more information on cluster states, see “start kube-cluster”.

Once the cluster is started, collect the node pool information for the cluster.

Save this information; you will use it to hand the clusters off to Kubernetes later.

    PCA> list node-pool --filter-column=Cluster --filter=cluster-2

    Cluster     Node_Pool   Tenant_Group       CPUs   Memory   Nodes
    -------     ---------   ------------       ----   ------   -----
    cluster-2   master      Rack1_ServerPool   4      16384    3
    cluster-2   worker      Rack1_ServerPool   2      8192     2
    ----------------
    2 rows displayed

    Status: Success

Consider performing a manual backup to capture the new cluster state. See “backup”.

Use the Kubernetes Dashboard

This section describes how to use the Kubernetes dashboard that is deployed with your Kubernetes cluster during the start kube-cluster operation.

Install kubectl on your local machine. You do not need to be root user for this task.

Follow the directions to Install and Set Up kubectl from kubernetes.io.

Create the .kube subdirectory on your local machine.

# mkdir -p $HOME/.kube

Copy the cluster configuration from the master to your local machine.

# scp root@<load-balancer-ip>:~/.kube/config ~/.kube/config

Set your Kubernetes configuration file location.

# export KUBECONFIG=~/.kube/config

Confirm the nodes in the cluster are up and running.

# kubectl get nodes

Create default user roles for the Kubernetes dashboard, using dashboard-rbac.yaml.

# kubectl apply -f dashboard-rbac.yaml

Note: There are multiple ways to create user roles to access the Kubernetes dashboard. The following example is one way to do so. Use a method that is most appropriate for your Kubernetes environment.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kubernetes-dashboard

For more information on the Kubernetes dashboard, see https://docs.oracle.com/en/operating-systems/olcne/orchestration/dashboard.html.

Note: You can ignore the Deploying the Dashboard UI step; the Dashboard UI is deployed by default on Oracle Private Cloud Appliance.

For more information on kubectl, see https://docs.oracle.com/en/operating-systems/olcne/orchestration/kubectl-setup-master.html.

Once an account is created, get a login token for the Kubernetes dashboard.

This example shows creating a login token for the user account admin-user, as cited in the example above.

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Follow the Getting a Bearer Token directions at the Kubernetes dashboard GitHub site.

Start the proxy on your host.

# kubectl proxy

Open the Kubernetes dashboard.

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/login

You should see your cluster in the dashboard. A Kubernetes administrator can now manage the cluster through the dashboard just as with any other Kubernetes cluster. For more information, see the Oracle Linux Cloud Native Container Orchestration documentation.

If needed, you can configure internet access for worker nodes. This can ease deployment of applications that have dependencies outside of your corporate network.
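The node check and token lookup in this section can also be scripted against kubectl's JSON output. The sketch below assumes you have saved the output of kubectl get nodes -o json and of kubectl -n kubernetes-dashboard get secret with -o json for the admin-user secret; the function names are hypothetical. It relies on two properties of the Kubernetes API: each node reports a condition of type Ready, and values in a Secret's data map are base64-encoded.

```python
import base64


def all_nodes_ready(node_list):
    """Return True if every node in a 'kubectl get nodes -o json' result is Ready."""
    nodes = node_list.get("items", [])
    if not nodes:
        return False
    for node in nodes:
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            return False
    return True


def decode_token(secret):
    """Decode the bearer token stored in a service account token Secret."""
    return base64.b64decode(secret["data"]["token"]).decode("utf-8")


# Tiny hand-written documents standing in for real kubectl output.
nodes = {"items": [{"status": {"conditions": [{"type": "Ready", "status": "True"}]}}]}
secret = {"data": {"token": base64.b64encode(b"example-token").decode("ascii")}}
print(all_nodes_ready(nodes), decode_token(secret))
```

Treat the decoded token as a credential: with the cluster-admin binding shown earlier it grants full control of the cluster.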

Managing Kubernetes Clusters

This section describes some of the common changes you, as a PCA administrator, might make to an existing cluster. Once you have made changes to a cluster, perform a manual backup of your Oracle Private Cloud Appliance to save the new configuration (see “backup”). Recall that the Kubernetes administrator is responsible for their cluster backups and should refer to Kubernetes documentation for individual cluster access.

| Task | Description |
|---|---|
| Stop a cluster | PCA> stop kube-cluster (see “stop kube-cluster”) |
| Add a node pool | PCA> add node-pool (see “add node-pool”) |
| Remove a node pool | PCA> remove node-pool (see “remove node-pool”) |
| Add a node pool node | PCA> add node-pool-node (see “add node-pool-node”) |
| Remove a node pool node | PCA> remove node-pool-node |
| Change the profile of the VMs that are part of the default node pool for masters or workers | PCA> set kube-vm-shape (see “set kube-vm-shape”) |

The commands in the table above have help text and examples available from the Oracle Private Cloud Appliance. To access the help text, type help command-name. For example, to get the help text for add node-pool, type help add node-pool.

Stop a Cluster

Stopping a cluster terminates and deletes all virtual machines that make up the cluster, but leaves the base cluster configuration in place so that a PCA administrator can reconstruct the cluster later. The virtual machines are first stopped, then terminated; any information on the virtual machines is lost. Restarting the cluster starts new virtual machines and resets the configuration to the beginning.

To stop a cluster, you must empty all nodes from the cluster node pools other than the base master and worker node pools, then delete the extra node pools once they are emptied. This section describes the order to stop a running cluster, then to delete the configuration information afterward.

Note that the cluster configuration does not have to be deleted after stopping a cluster. The stopped cluster retains information about the master and worker node pools from when the cluster was stopped. Assuming no other clusters are built that would conflict with the addresses in the stopped cluster, the cluster configuration could be used to start the cluster again with the contents reset to the original state.

Stopping a Kubernetes cluster or node terminates the virtual machines and deletes the system disks. All locally written data and modification on the virtual machine local system disk will be lost.

From the Oracle Private Cloud Appliance command line interface, remove the worker nodes.

PCA> remove node-pool-node MyCluster node-pool-0 hostname

Repeat for each worker node in the node pool, until the node pool is empty.

Remove the node pool.

PCA> remove node-pool MyCluster node-pool-0

Repeat for each node pool in the cluster, until the cluster is empty.

Stop the cluster once the non-master and non-worker node pools are removed.

PCA> stop kube-cluster MyCluster

Note: The --force option can be used on the stop kube-cluster command. This option attempts to stop all workers regardless of their node pool, remove the node pools (other than master and worker), and leave the cluster in a stopped state.

Delete the cluster.

PCA> delete kube-cluster MyCluster

Consider performing a manual backup to capture the new cluster state. See “backup”.
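The ordering described in this section (empty each extra node pool, remove the pool, then stop the cluster) is easy to get wrong when a cluster has several additional node pools. The sketch below only generates the command sequence as text for review; it does not talk to the appliance. The function name and the pool and host names are hypothetical, while the command forms follow the examples above.

```python
def stop_cluster_commands(cluster, extra_pools):
    """Return PCA CLI commands, in order, to empty extra node pools and stop a cluster.

    extra_pools maps each additional node pool name (not the base master
    or worker pool) to the list of host names it contains.
    """
    commands = []
    for pool, hosts in extra_pools.items():
        for host in hosts:
            commands.append(f"remove node-pool-node {cluster} {pool} {host}")
        # A pool can be removed only after all of its nodes are gone.
        commands.append(f"remove node-pool {cluster} {pool}")
    commands.append(f"stop kube-cluster {cluster}")
    return commands


for command in stop_cluster_commands("MyCluster", {"node-pool-0": ["host-a", "host-b"]}):
    print("PCA>", command)
```

Deleting the cluster configuration is left out on purpose, because a stopped cluster can be started again from its retained definition.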

Monitor Cluster Status

There are two parts to a Kubernetes cluster status: the status of the virtual machines used in the Kubernetes cluster, and the status of Kubernetes itself.

To monitor the virtual machines that host the Kubernetes cluster, get the list of those virtual machines using the Oracle Private Cloud Appliance command line. Once you have the list, log in to Oracle VM to look more deeply at each VM, its run state, and other relevant information.

An Oracle Private Cloud Appliance administrator has access to the Oracle VM health, but does not have access to the Kubernetes runtime health. To view the status of Kubernetes, the Kubernetes administrator should use the Kubernetes dashboard (see “Use the Kubernetes Dashboard”) and various kubectl commands. See Overview of kubectl.

Resize Kubernetes Virtual Machine Disk Space

Log in to Oracle VM Manager.

For details, see “Logging in to the Oracle VM Manager Web UI”.

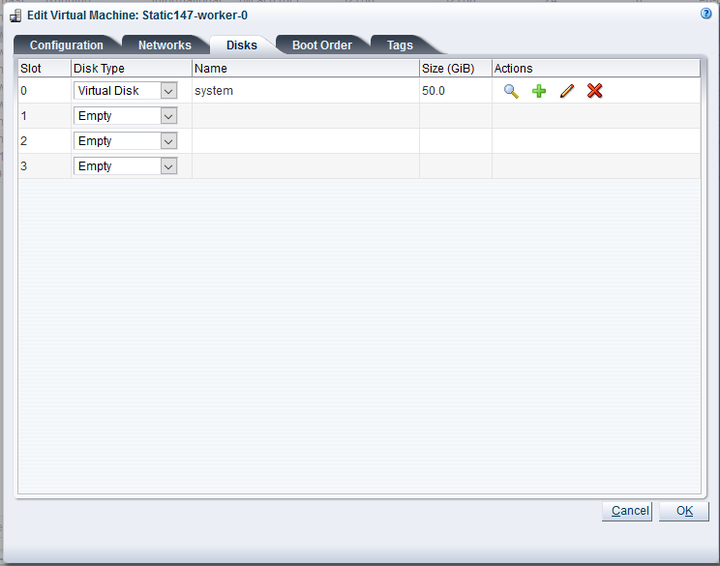

Select the Kubernetes virtual machine you wish to change, click Edit, select the Disks tab, and edit the desired disk size.

For details, see Edit the Virtual Machine

Log in to the Kubernetes virtual machine you just edited and check the amount of disk space.

    [root@dhcp1-m-1 ~]# df -kh
    Filesystem      Size  Used Avail Use% Mounted on
    devtmpfs         16G     0   16G   0% /dev
    tmpfs            16G     0   16G   0% /dev/shm
    tmpfs            16G   18M   16G   1% /run
    tmpfs            16G     0   16G   0% /sys/fs/cgroup
    /dev/xvda3       46G  5.8G   39G  14% /
    /dev/xvda1      497M   90M  407M  19% /boot

This example shows increasing the size of /dev/xvda3 from 46G to 496G.

Run fdisk to partition the disk space.

    [root@dhcp1-m-1 ~]# fdisk /dev/xvda
    Welcome to fdisk (util-linux 2.23.2).

    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.

    Command (m for help): d
    Partition number (1-3, default 3): 3
    Partition 3 is deleted

    Command (m for help): n
    Partition type:
       p   primary (2 primary, 0 extended, 2 free)
       e   extended
    Select (default p): p
    Partition number (3,4, default 3):
    First sector (9414656-1048575999, default 9414656):
    Using default value 9414656
    Last sector, +sectors or +size{K,M,G} (9414656-1048575999, default 1048575999):
    Using default value 1048575999
    Partition 3 of type Linux and of size 495.5 GiB is set

    Command (m for help): w
    The partition table has been altered!

    Calling ioctl() to re-read partition table.

    WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
    The kernel still uses the old table. The new table will be used at
    the next reboot or after you run partprobe(8) or kpartx(8)
    Syncing disks.

Use the partprobe command to make the kernel aware of the new partition.

    [root@dhcp1-m-1 ~]# partprobe

Use the btrfs command to resize the partition to max.

    [root@dhcp1-m-1 ~]# btrfs filesystem resize max /
    Resize '/' of 'max'

Verify the size of the new partition.

    [root@dhcp1-m-1 ~]# df -kh
    Filesystem      Size  Used Avail Use% Mounted on
    devtmpfs         16G     0   16G   0% /dev
    tmpfs            16G     0   16G   0% /dev/shm
    tmpfs            16G   18M   16G   1% /run
    tmpfs            16G     0   16G   0% /sys/fs/cgroup
    /dev/xvda3      496G  5.7G  489G   2% /
    /dev/xvda1      497M   90M  407M  19% /boot
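As a worked check of the fdisk figures above, the partition size can be derived from the first and last sector numbers. The sketch assumes 512-byte sectors, which the transcript does not state explicitly but which is consistent with the 495.5 GiB that fdisk reports; the function name is hypothetical.

```python
SECTOR_BYTES = 512  # assumption: 512-byte logical sectors


def partition_size_gib(first_sector, last_sector, sector_bytes=SECTOR_BYTES):
    """Return the size in GiB of a partition spanning the given sectors (inclusive)."""
    sector_count = last_sector - first_sector + 1
    return sector_count * sector_bytes / 2**30


# Sector range taken from the fdisk session above.
size = partition_size_gib(9414656, 1048575999)
print(f"{size:.1f} GiB")
```

The same arithmetic lets you confirm, before writing the partition table, that the new partition starts at the same first sector as the one you deleted, which is what preserves the existing file system.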

Maintain the Operating Systems on the Kubernetes Virtual Machines

When an Oracle Private Cloud Appliance administrator adds worker nodes or re-adds master nodes, the individual node becomes available with the Oracle Linux version, Kubernetes version, and the default settings that were a part of the Kubernetes virtual appliance.

Kubernetes administrators should do the following on the new node(s):

- Update the root password, or change the node to use passwordless authorization

- Update the proxies that are used by CRI-O to obtain new container images

- Possibly update the Oracle Linux distribution components

Many of these operations can be performed efficiently using Ansible playbooks that are applied when a new node is added.

The Kubernetes virtual appliance is based on Oracle Linux 7 Update 8. Administrators can update these images once they are running, keeping in mind that new nodes start with the original Oracle Linux 7 Update 8.

Because the Kubernetes virtual appliances use Oracle Linux, administrators can follow the instructions at https://docs.oracle.com/en/operating-systems/oracle-linux/7/relnotes7.8/ol7-preface.html to put selected updates on their runtime nodes (such as updating the kernel or individual packages for security updates). Administrators should do as few updates on the runtime nodes as possible and look for Oracle guidance through My Oracle Support notes for specific suggestions for maintaining the Kubernetes virtual appliance.

Oracle Private Cloud Appliance Cloud Native Environment Version Matrix

This section describes the Oracle Private Cloud Appliance Cloud Native Environment release version, the name of the .ova file for that release, and the version of Kubernetes each version supports.

| Oracle Private Cloud Appliance Cloud Native Environment Version | Virtual Appliance Kubernetes Version | OVA Name |
|---|---|---|
| 1.2 | 1.18 | pca-k8s-1-2-0.ova |
| 1.0 | 1.17 | pca-k8s-1-0-0.ova |

Want to Learn More?

Using the Oracle Private Cloud Appliance Cloud Native Environment Release 1.2

F38620-02

February 2022

Copyright © 2022, Oracle and/or its affiliates.

This tutorial provides instructions to use the Oracle Private Cloud Appliance Cloud Native Environment