
One of the most important tasks when deploying an API Gateway system is confirming that it is fit for purpose. Enterprise software systems are critical to the success of the business, and system downtime can have serious consequences for any organization. The most important factors to consider when architecting an API Gateway deployment are as follows:

- What type of API Gateway policies will run on the system?
- What type of message traffic will the API Gateway be required to process?
- What is the expected distribution of the traffic?
- How important is the system, and does it need to be always available?
- How can changes be made to the system without incurring an outage?
- How should you plan for outages and disaster recovery?

The functional characteristics of a policy run by an API Gateway can have a large effect on overall system throughput and latency. The processing power a policy demands varies with its purpose. The following guidelines apply in terms of processing power:

- Threat analysis and transport-based authentication tasks are relatively undemanding.
- XML processing, such as XML Schema validation and WS-Security username/password authentication, is slightly more intensive.
- Calling out to third-party systems is expensive due to network latency.
- Cryptographic operations such as encryption and signing are processor intensive.

The key point is that API Gateway policy performance depends on the underlying requirements, and customers should test their policies before deploying them into a production environment.

Architects and policy developers should adhere to the following guidelines when developing API Gateway policies:

- Decide what type of policy you need to process your message traffic. Think in terms of functional requirements instead of technologies. Oracle can help you to map the technologies to the requirements. Example functional requirements include the following:
  - Only trusted clients should be allowed to send messages into the network.
  - An evidential audit trail should be kept.
- Think about what you already have in your architecture that could help to achieve these aims. Examples include LDAP directories, databases that already have replication strategies in place, and network monitoring tools.
- Create a policy to match these requirements and test its performance. Oracle provides an integrated performance testing tool (Oracle API Gateway Explorer) to help you with this process.
- Use the Oracle API Gateway Manager console to help identify the bottlenecks in your system. If part of the solution is slowing the overall system, try to find alternatives to meet your requirements.
- View information on historic network usage in Oracle API Gateway Analytics.
- Test the performance capability of the backend services.

Supplier A is creating a service that will accept Purchase Order (PO) documents from customers. The PO documents are formatted using XML. The functional requirements are:

- The service should not accept anything that will damage the PO system.
- Incoming messages must be authenticated against a customer database to make sure they come from a valid customer account.
- The supplier already has an LDAP directory and would like to use it to store the customer accounts.
- The supplier must be able to prove that the message came from the customer.

These requirements can be met by a policy that checks for threatening content and verifies the XML Signature, including checking the signer's certificate against the LDAP directory. For details on how to develop API Gateway policies using Policy Studio, see the API Gateway User Guide.
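To make the shape of such a policy concrete, the following Python sketch models it as an ordered chain of checks that stops at the first failure, with the cheap threat checks running before the more expensive steps. Everything here is hypothetical and does not reflect how Policy Studio filters are implemented: the size limit, the DTD rule, and the `customer` attribute are assumptions, and a Python set stands in for the LDAP directory. XML Signature verification itself is not shown.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Optional

# A check returns None when the message passes, or an error string when it fails.
Check = Callable[[bytes], Optional[str]]

MAX_BYTES = 1_000_000  # assumed size limit, for illustration only


def threatening_content(msg: bytes) -> Optional[str]:
    """Cheap threat checks: size limit, no DTDs (entity expansion), well-formed XML."""
    if len(msg) > MAX_BYTES:
        return "message too large"
    if b"<!DOCTYPE" in msg.upper():
        return "DTD not allowed"
    try:
        ET.fromstring(msg)
    except ET.ParseError:
        return "malformed XML"
    return None


def make_customer_lookup(directory: set[str]) -> Check:
    """Stand-in for the LDAP step: the PO's customer attribute must be a known account."""
    def check(msg: bytes) -> Optional[str]:
        customer = ET.fromstring(msg).get("customer")  # hypothetical attribute name
        if customer not in directory:
            return f"unknown customer {customer!r}"
        return None
    return check


def run_policy(msg: bytes, checks: list[Check]) -> Optional[str]:
    """Run checks in order and stop at the first failure."""
    for check in checks:
        error = check(msg)
        if error is not None:
            return error
    return None


policy = [threatening_content, make_customer_lookup({"acme"})]
print(run_policy(b'<PurchaseOrder customer="acme"/>', policy))  # None
print(run_policy(b'<PurchaseOrder customer="evil"/>', policy))  # unknown customer 'evil'
```

Ordering the checks by cost means malformed or hostile messages are rejected before any directory lookup or cryptographic work is spent on them.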

In the real world, messages do not arrive in a continuous stream of fixed-size messages as they do in a lab-based performance test. Message traffic distribution has a major impact on system performance. Some of the questions that need to be answered are as follows:

- Is the traffic smooth or does it arrive in bursts?
- Are the messages all of the same size? If not, what is the size distribution?
- Is the traffic spread out over 24 hours or only during the work day?
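One way to answer these questions from recorded traffic is to compute a peak-to-mean ratio as a measure of burstiness, and percentiles for message size. The Python sketch below does this on made-up sample data; the hourly interval, the nearest-rank percentile method, and the sample values are assumptions for illustration.

```python
import math
import statistics


def peak_to_mean(counts_per_interval: list[int]) -> float:
    """Busiest interval divided by the average; 1.0 means perfectly smooth traffic."""
    return max(counts_per_interval) / statistics.mean(counts_per_interval)


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical samples: messages per hour over four hours, and message sizes in KB.
hourly = [10, 10, 10, 50]
sizes_kb = list(range(1, 101))
print(peak_to_mean(hourly))      # 2.5: the peak hour carries 2.5 times the average load
print(percentile(sizes_kb, 95))  # 95
```

A high peak-to-mean ratio indicates that sizing for the average rate would under-provision the system during bursts.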

You should adhere to the following guidelines when analyzing message traffic:

- Take traffic distribution into account when calculating performance requirements.
- If traffic bursts cause problems for service producers, consider using the API Gateway to smooth the traffic. There are a number of techniques for doing this.
- Take message size distribution into account when running performance tests.
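A token bucket is one common, generic technique for smoothing bursts: messages are admitted at an average rate, with a bounded burst allowance. The following Python sketch illustrates that technique only and is not the API Gateway's own implementation; the rate and capacity values are assumptions, and the injectable clock exists only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Admit messages at `rate` per second on average, allowing bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def try_acquire(self) -> bool:
        """Take one token if available; False means the message should wait or be queued."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


fake_now = [0.0]
bucket = TokenBucket(rate=10, capacity=5, clock=lambda: fake_now[0])
print([bucket.try_acquire() for _ in range(7)])  # five admitted, then two refused
```

Holding refused messages in a queue and retrying them, rather than rejecting them, is what turns this limiter into a smoother for the backend.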

The API Gateway scales well both horizontally and vertically. Customers can scale horizontally by adding more API Gateways to a cluster and load balancing across it using a standard load balancer. API Gateways being load balanced run the same configuration to virtualize the same APIs and execute the same policies. If multiple API Gateway groups are deployed, load balancing should be across groups also.

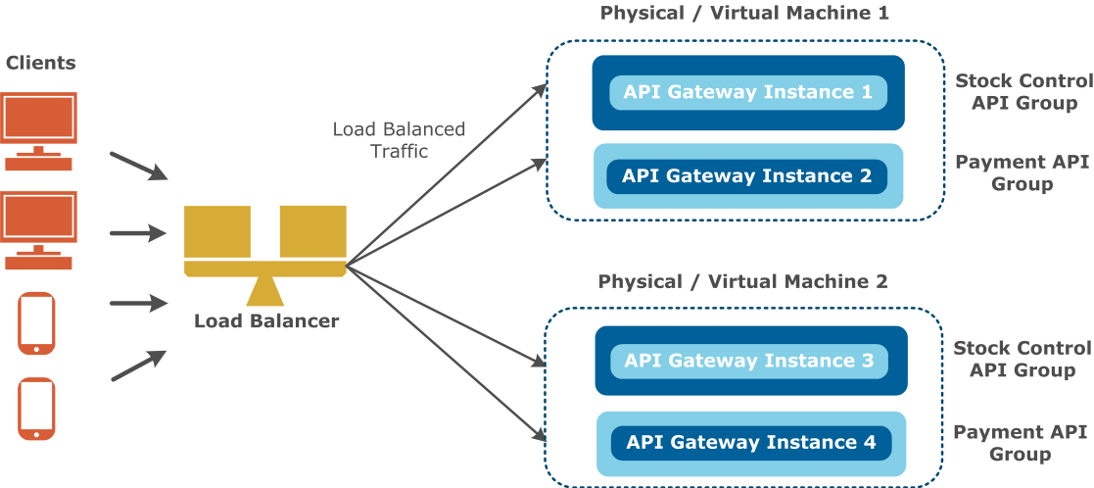

For example, the following diagram shows load balancing across two groups of API Gateways deployed on two hosts:

The API Gateway imposes no special requirements on load balancers. Load balancers can distribute load based on a number of characteristics, such as response time or system load. The execution of API Gateway policies is stateless, and the route a message takes through a particular system has no bearing on its processing. Some items, such as caches and counters, are held in a distributed cache, which is updated on a per-message basis. As a result, API Gateways can operate successfully in both sticky and non-sticky modes. For more details on caching, see the API Gateway User Guide.
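Because policy execution is stateless, a load balancer can choose a target independently for every request. The Python sketch below shows a generic least-response-time choice among healthy API Gateways; the `GatewayNode` type, its fields, and the selection rule are hypothetical and only illustrate the idea, not any particular load balancer's algorithm.

```python
from dataclasses import dataclass


@dataclass
class GatewayNode:
    name: str
    healthy: bool
    avg_response_ms: float


def pick_node(nodes: list[GatewayNode]) -> GatewayNode:
    """Non-sticky routing: any healthy node can take the request, so pick the fastest."""
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        raise RuntimeError("no healthy API Gateway available")
    return min(candidates, key=lambda n: n.avg_response_ms)


pool = [GatewayNode("gw1", True, 40.0), GatewayNode("gw2", True, 25.0),
        GatewayNode("gw3", False, 5.0)]
print(pick_node(pool).name)  # gw2 (gw3 is faster but unhealthy)
```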

The distributed state raises a number of questions in terms of active/active and active/passive clustering. For example, if the counter and cache state is important, you must design your overall system so that at least one API Gateway is active at all times. For a resilient HA system, a minimum of two active API Gateways at any one time, with a third and fourth in passive mode, is recommended.

The API Gateway ensures zero downtime by deploying configuration in a rolling fashion. While each API Gateway instance in the cluster or group takes a few seconds to update its configuration, it stops serving new requests, but all existing in-flight requests are honored. Meanwhile, the rest of the cluster or group can still receive new requests, and the load balancer ensures that new requests are sent to those nodes. For more details on deploying API Gateway configuration, see the Deployment and Promotion Guide.
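The rolling pattern can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the API Gateway's deployment mechanism: the `Node` type is hypothetical, and setting `version` stands in for loading the new configuration. What it shows is that, with more than one node, at least one node is always accepting new requests.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    version: str
    accepting: bool = True


def rolling_deploy(nodes: list[Node], new_version: str, log: list[str]) -> None:
    """Update one node at a time so the rest of the group keeps taking new requests."""
    for node in nodes:
        node.accepting = False  # stop taking new requests (real in-flight requests still finish)
        serving = [n.name for n in nodes if n.accepting]
        log.append(f"updating {node.name}; still serving: {serving}")
        node.version = new_version  # stand-in for loading the new configuration
        node.accepting = True  # rejoin the load-balanced pool


group = [Node("gw1", "v1"), Node("gw2", "v1"), Node("gw3", "v1")]
events: list[str] = []
rolling_deploy(group, "v2", events)
print("\n".join(events))
```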

Oracle recommends the following guidelines for load balancing:

- Use the Admin Node Manager and API Gateway groups to maintain the same policies on load-balanced API Gateways. For more details, see the API Gateway Concepts Guide.
- Configure alerts to identify when API Gateways and backend services are approaching maximum capacity and need to be scaled.
- Use the API Gateway Manager console to see which parts of the system are processing the most traffic.
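An alert rule of this kind can be as simple as comparing current load with the capacity measured in performance testing. The Python sketch below flags any component at or above an assumed 80% threshold; the threshold, the component names, and the load figures are all hypothetical.

```python
def capacity_alerts(load: dict[str, tuple[float, float]], threshold: float = 0.8) -> list[str]:
    """Return components whose current load is at or above `threshold` of tested capacity.

    `load` maps a component name to (current tps, tested maximum tps).
    """
    return sorted(name for name, (current, maximum) in load.items()
                  if current >= threshold * maximum)


# Hypothetical readings for two API Gateways and one backend service.
readings = {"gw1": (850.0, 1000.0), "gw2": (400.0, 1000.0), "po-backend": (300.0, 350.0)}
print(capacity_alerts(readings))  # ['gw1', 'po-backend']
```

Including backend services alongside the API Gateways matters: here the backend reaches its limit well before the gateways do.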

Secure Sockets Layer (SSL) connections can be terminated at the load balancer or at the API Gateway. These options are described as follows:

- SSL connection is terminated at the load balancer:
  - The SSL certificate and associated private key are deployed on the load balancer, and not on the API Gateway. The subject name in the SSL certificate is the fully qualified domain name (FQDN) of the server (for example, `oracle.com`).
  - The traffic between the load balancer and the API Gateway can be in the clear or over a new SSL connection. The disadvantage of a new SSL connection is that it puts additional processing load on the load balancer (SSL termination and SSL establishment).
  - If mutual (two-way) SSL is used, the load balancer can insert the client certificate into an HTTP header. For example, the F5 load balancer can insert the entire client certificate in `.pem` format as a multi-line HTTP header named `XClient-Cert` into the incoming HTTP request. It sends this header to the API Gateway, which uses it for validation and authentication.
- Load balancer is configured for SSL pass-through, and all traffic is passed to the API Gateway:
  - With SSL pass-through, the traffic is encrypted, so the load balancer cannot make any Layer 7 decisions (for example, if the API Gateway returns HTTP 500, route to the HA API Gateway). To avoid this problem, you can configure the API Gateway to close external ports on defined error conditions. In this way, the load balancer is alerted to switch to the HA API Gateway.
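From the load balancer's side, a closed port is easy to detect with a plain TCP connection attempt, which works even though the traffic itself is encrypted. The Python sketch below shows that kind of TCP-level (Layer 4) health check and the resulting failover choice; the function names and the one-second timeout are assumptions, and real load balancers provide their own health monitors.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """TCP-level health check: can a connection be opened at all?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def choose_target(primary: tuple[str, int], standby: tuple[str, int]) -> tuple[str, int]:
    """Route to the primary while its port is open; otherwise fail over to the HA standby."""
    return primary if port_open(*primary) else standby
```

Closing the port turns an application-level error, which the load balancer cannot see inside the encrypted stream, into a connection-level signal it can act on.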

Note: The API Gateway can also optionally use a cryptographic accelerator for SSL termination. For more details, see the API Gateway User Guide.

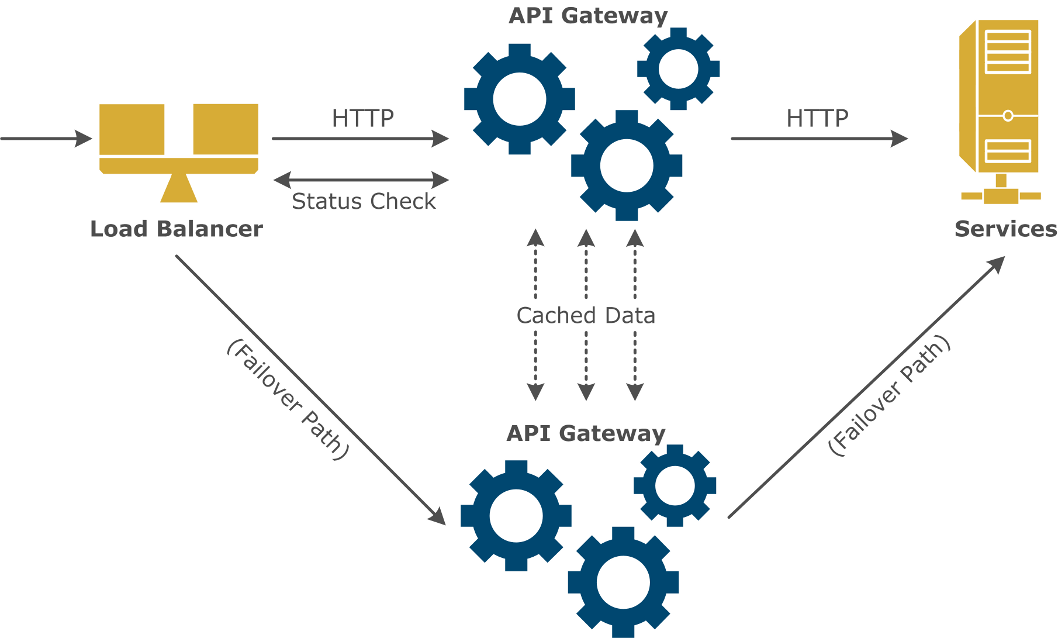

API Gateways are used in high-value systems, and customers typically deploy them in High Availability (HA) mode to protect their investments. The API Gateway architecture supports this as follows:

- The Admin Node Manager is the central administration server responsible for performing all management operations across an API Gateway domain. It provides policy synchronization by ensuring that all API Gateways in an HA cluster have the same policy versions and configuration. For more details on the Admin Node Manager and API Gateway group-based architecture, see the API Gateway Concepts Guide.
- API Gateway instances are stateless by nature. No session data is created, and therefore there is no need to replicate session state across API Gateways. However, API Gateways can maintain cached data, which can be replicated using a peer-to-peer relationship across a cluster of API Gateways. For more details, see the API Gateway User Guide.
- API Gateway instances are usually deployed behind standard load balancers, which periodically query the state of the API Gateway. If a problem occurs, the load balancer redirects traffic to the hot stand-by machine.
- If an event or alert is triggered, the issue can be identified using API Gateway Manager or API Gateway Analytics, and the active API Gateway can then be repaired.

High Availability can be maintained using hot, warm, or cold stand-by systems. These are described as follows:

- Cold stand-by: The system is turned off.
- Warm (passive) stand-by: The system is operational but does not hold current state.
- Hot (active) stand-by: The system is fully operational and holds the current system state.

Oracle recommends the following guidelines for HA stand-by systems:

- For maximum availability, use an API Gateway in hot stand-by for each production API Gateway.
- Use API Gateways to protect against malicious attacks that undermine availability.
- Limit traffic to backend services to protect against message flooding. This is particularly important with legacy systems that have been recently service-enabled. Legacy systems may not have been designed for the traffic patterns to which they are now subjected.
- Monitor the network infrastructure carefully to identify issues early. You can do this using API Gateway Manager and API Gateway Analytics. Interfaces are also provided to standard monitoring tools such as syslog and Simple Network Management Protocol (SNMP).

Most customers have a requirement to keep a mirrored backup and disaster recovery site with full capacity to be able to recover from any major incidents. These systems are typically kept in a separate physical location on cold stand-by until the need arises for them to be brought into action.

The following guidelines apply to backup and recovery:

- The backup and disaster site must be a full replica of the production site (for example, with the same number of API Gateway instances).
- The Admin Node Manager helps get backup and recovery sites up and running quickly by synchronizing the API Gateway policies in the backup solution.
- Remember to also include any third-party systems in backup and recovery solutions.

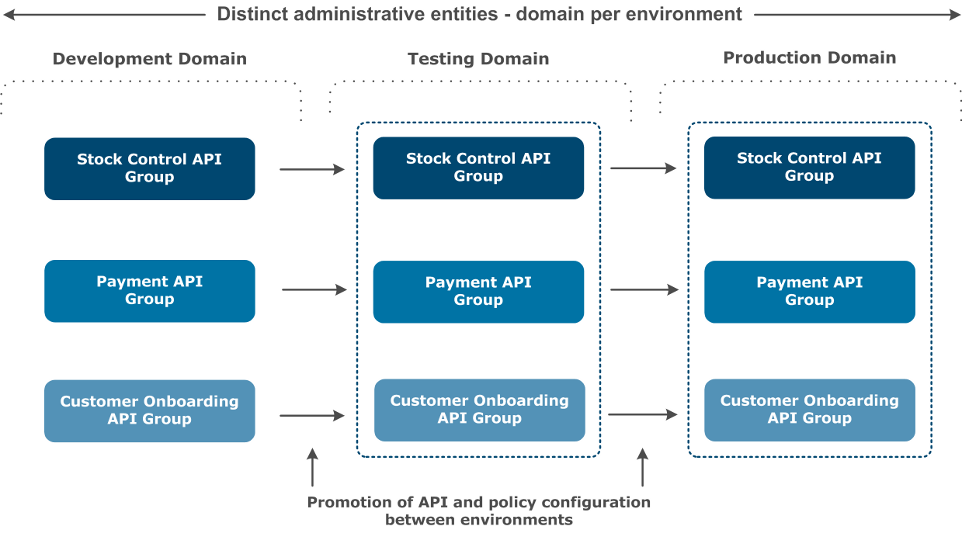

The most common reason for system downtime is change. Customers successfully alleviate this problem through effective change management as part of a mature software development lifecycle. A software development lifecycle controls change by gradually pushing it through a series of stages until it reaches production.

Each customer will have their own approach to staging depending on the value of the service and the importance of the data. Staging can be broken into a number of different milestones. Each milestone is intended to isolate a specific type of issue that could lead to system downtime. For example:

-

The development stage is where the policy and service are created.

-

Functional testing makes sure the system works as intended.

-

Performance testing makes sure the system meets performance requirements.

-

System testing makes sure the changes to the system do not adversely affect other parts.

In some cases, each stage is managed by a different group. The number of API Gateways depends on the number of stages and requirements of each of these stages. The following diagram shows a typical environment topology that includes separate API Gateway domains for each environment:

The following guidelines apply to development staging and testing:

- Use API Gateway configuration packages (`.fed`, `.pol`, and `.env`) to control the migration of policies from development through to production.
- Keep an audit trail of all system changes.
- Have a plan in place to roll back quickly in the event of a problem occurring.
- Test all systems and policy updates before promoting them to production.
- Test High Availability and resiliency before going into production.
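As a rough illustration of the audit-trail and rollback guidelines, the Python sketch below records a hash, author, note, and timestamp for every package promoted to an environment, and implements rollback as a new, logged deployment of the previous package. The `DeploymentHistory` class is hypothetical and is not part of the API Gateway tooling; the package bytes simply stand in for the contents of a configuration package.

```python
import hashlib
from datetime import datetime, timezone


class DeploymentHistory:
    """Hypothetical audit log of configuration packages promoted to one environment."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def deploy(self, package: bytes, who: str, note: str) -> str:
        """Record the deployment and return the package's SHA-256 digest."""
        digest = hashlib.sha256(package).hexdigest()
        self.entries.append({
            "sha256": digest,
            "package": package,
            "who": who,
            "note": note,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return digest

    def current(self) -> bytes:
        return self.entries[-1]["package"]

    def rollback(self, who: str) -> str:
        """Redeploy the package recorded just before the current entry; this is logged too."""
        if len(self.entries) < 2:
            raise RuntimeError("nothing to roll back to")
        return self.deploy(self.entries[-2]["package"], who, "rollback")
```

Logging the rollback as its own entry keeps the trail complete. Note that rolling back twice in a row would redeploy the version that was just rolled back, so a real process needs a clearer notion of the last known good package.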

For more details, see the API Gateway Deployment and Promotion Guide.

The API Gateway platform is SSL-enabled by default, so you do not need to SSL-enable each API Gateway component. However, in a production environment, you must take additional precautions to ensure that the API Gateway environment is secure from external and internal threats.

The following guidelines apply to securing the API Gateway:

- You must change all default passwords (for example, change the password for Policy Studio and API Gateway Manager from `admin/changeme`). For more details, see Manage Admin users.
- The default X.509 certificates used to secure API Gateway components are self-signed (for example, the certificate used by the API Gateway Manager on port `8090`). You can replace these self-signed certificates with certificates issued by a Certificate Authority (CA). For more details, see Manage certificates and keys.
- By default, API Gateway management ports bind to all interfaces (IP address set to `*`). You can change these ports or restrict them to bind to specific IP addresses instead. For more details, see the chapter on "Configuring HTTP Services" in the API Gateway User Guide.
- By default, API Gateway configuration is unencrypted. You can specify a passphrase to encrypt API Gateway instance configuration. For more details, see Configure an API Gateway encryption passphrase.
- You can configure user access at the following levels:
  - Policy Studio, API Gateway Manager, and Configuration Studio users: see Manage Admin users.
  - API Gateway users: see Manage API Gateway users.
  - Role-based access: see Configure Role-Based Access Control (RBAC).

The following is an example formula for deciding how big your API Gateway deployment needs to be. This example is intended for illustration purposes only:

$$
\text{numberOfGateways} = \left\lceil \frac{\text{requiredThroughput}}{\text{testedPerformance}} \right\rceil \times \text{factorOfSafety} \times \text{HA} \times (1 + \text{numDR}) + \text{staging} + \text{eng}
$$

This formula is described as follows:

- `requiredThroughput` is the peak load in transactions per second, and `testedPerformance` is the throughput one API Gateway achieved for the policy in performance testing.
- `factorOfSafety` typically has a value of 2.
- High Availability (`HA`) typically has a value of 2.
- The ceiling operation means that the number is rounded up. It is very important that the deployment is not under-provisioned by accident.
- Typically customers have a single backup and disaster recovery site (`numDR` = 1).
- There is a large variation in performance between API Gateways depending on the policy deployed, so it is important to performance test policies before final capacity planning.
- `staging` is the number of stage testing licenses. Customers with very demanding applications should have a mirror of their production system. This is the only way to be certain.
- `eng` is the number of engineering (development) licenses.

For example, a software system is being designed that will connect an automobile manufacturer's purchasing system with a supplier. The system is designed to meet the most stringent load and availability requirements. An API Gateway system is being used to verify the integrity of the purchase orders.

The system as a whole is required to process 10,000,000 transactions per day. Each of these transactions will result in 8 individual request/responses. 90% of the load occurs between 9 a.m. and 5 p.m. The customer wishes to have a factor of safety of 2 for load spikes.

Overall throughput = (8 x 10,000,000 x 0.9) / (8 x 60 x 60) = 2,500 transactions per second (tps), where 8 x 60 x 60 is the number of seconds in the 8-hour working day.
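The arithmetic can be checked with a short Python function. Like the calculation above, it assumes that the busy-window load is spread evenly across the 8 hours; the function and parameter names are hypothetical, and the percentage is passed as an integer to keep the arithmetic exact.

```python
def peak_tps(transactions_per_day: int, requests_per_transaction: int,
             busy_share_percent: int, busy_hours: int) -> float:
    """Requests per second in the busy window, assuming load is even within it."""
    busy_requests = transactions_per_day * requests_per_transaction * busy_share_percent / 100
    return busy_requests / (busy_hours * 60 * 60)


print(peak_tps(10_000_000, 8, 90, 8))  # 2500.0
```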

The development process for the example system should be as follows:

- A Signature Verification policy is developed by one of the system engineers using a software license on his PC. When he is satisfied with the policy, he pushes it forward to the test environment.
- Test engineers deploy the policy to the interoperability testing environment. They make sure that all of the systems work correctly together, and test the system as a whole. The API Gateway must show it can process the signature and integrate with the service backend and PKI systems. When the system as a whole is working correctly, the testing moves to system testing.
- System testing will prove that the system as a whole is reliable and has the capacity to meet the performance requirements. It will also make sure that the system interoperates with monitoring software and behaves properly during failover and recovery. During system test the API Gateway is found to be capable of processing 1,000 messages per second for this policy.

The customer will have the following infrastructure:

- 1 backup and disaster site, which is a full replica of the production site
- A full HA system on hot standby
- 2 API Gateways for stage testing
- A factor of safety on the load of 2

The number of API Gateways should be as follows:

((ceiling(2500/1000)*2)*2)*(1+1)+2+1 = 27
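The same calculation can be written as a small Python function. The worked example rounds up first, ceiling(2500/1000) = 3, and then multiplies by the factor of safety; multiplying by the factor of safety before rounding, ceiling(2500 x 2 / 1000) = 5, gives a smaller deployment. The sketch computes both so the difference is visible; the function name and the `round_first` parameter are hypothetical.

```python
import math


def number_of_gateways(required_tps: float, tested_tps: float, safety: int,
                       ha: int, num_dr: int, staging: int, eng: int,
                       round_first: bool = True) -> int:
    """Total API Gateways: live x HA x (1 + DR sites) + staging + engineering."""
    if round_first:
        live = math.ceil(required_tps / tested_tps) * safety  # ordering used in the worked example
    else:
        live = math.ceil(required_tps * safety / tested_tps)  # safety factor applied before rounding
    return live * ha * (1 + num_dr) + staging + eng


print(number_of_gateways(2500, 1000, safety=2, ha=2, num_dr=1, staging=2, eng=1))  # 27
print(number_of_gateways(2500, 1000, safety=2, ha=2, num_dr=1, staging=2, eng=1,
                         round_first=False))  # 23
```

Rounding first is the more conservative choice, which fits the guidance that the deployment must never be under-provisioned by accident.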

The resulting architecture provisioned should be 27 API Gateways made up of the following:

- 12 production licenses (6 live, 6 standby)
- 12 backup and disaster recovery licenses
- 2 staging licenses
- 1 engineering license