5 Preparing Storage

Each enterprise deployment requires shared storage. In addition, each host requires some private storage.

Private storage can be considered either local disk or disk space on the Storage Area Network (SAN), which is made exclusively available to a host. The advantage of using private storage on a SAN (as opposed to local disk) is that you have the built-in storage redundancy and fast backup technologies available within the SAN itself, which makes management easier.

An Exalogic appliance comes with a ZFS Storage appliance built in. This chapter explains the best practices to set up and configure the ZFS storage for a typical enterprise deployment.

The advantage of using local disk is that access is generally faster. This holds in physical deployments, where direct access to the underlying local flash disk is available. In a virtual deployment, the local disk is simply a virtual disk on ZFS storage.

This section contains the following topics:

- ZFS Concepts

  This section provides conceptual information for setting up and configuring the ZFS storage for a typical enterprise deployment.

- About the Default Storage Configuration

  After commissioning an Oracle Exalogic Appliance, the storage will have the following default characteristics.

- Overview of Enterprise Deployment Storage

  This topic provides information on enterprise deployment storage, which includes sharing requirements, read/write requirements, and artifact characteristics.

- Understanding the Enterprise Deployment Directory Structure

  Each Oracle Fusion Middleware enterprise deployment is based on a similar directory structure. This directory structure has been designed to separate binaries, configuration, and runtime information.

- Shared Storage Concepts

  This section describes shared storage concepts.

- Enterprise Deployment Storage Design Considerations

  This topic provides information on storage design considerations. For storage requirements specific to your deployment type, refer to the appropriate product enterprise deployment guide.

- Preparing Exalogic Storage for an Enterprise Deployment

  Prepare storage for the physical Exalogic deployment by creating users and groups in NIS, and then creating projects and the shares within them using the storage appliance BUI.


5.1 ZFS Concepts

This section provides conceptual information for setting up and configuring the ZFS storage for a typical enterprise deployment.

- About Storage Pools

  Physical disks inside the ZFS storage are allocated to storage pools.

- About Projects

  A project defines a common administrative control point for managing shares. All shares within a project can share common settings and can be backed up as a logical unit.

- About Shares

  Shares are file systems and LUNs that are exported over supported data protocols to clients of the appliance.

5.1.1 About Storage Pools

Physical disks inside the ZFS storage are allocated to storage pools.

Space within a storage pool is shared between all shares.

5.1.2 About Projects

A project defines a common administrative control point for managing shares. All shares within a project can share common settings and can be backed up as a logical unit.

Note:

On Exalogic, the use of LUNs or iSCSI is not supported. The only supported use of ZFS is through NFS shares.

5.1.3 About Shares

Shares are file systems and LUNs that are exported over supported data protocols to clients of the appliance.

File systems export a file-based hierarchy and can be accessed using NFS over IPoIB in the case of Exalogic machines. The project/share tuple is a unique identifier for a share within a pool. Multiple projects can contain shares with the same name, but a single project cannot contain shares with the same name. A single project can contain both file systems and LUNs, and they share the same namespace.

File systems can grow or shrink dynamically as needed, though it is also possible to enforce space restrictions on a per-share basis. All shares within a project can be backed up as a logical unit.

5.2 About the Default Storage Configuration

After commissioning an Oracle Exalogic Appliance, the storage will have the following default characteristics.

- Storage disks on the Sun ZFS Storage 7320 appliance are allocated to a single storage pool, such as exalogic, by default. Every compute node in an Oracle Exalogic machine can access both of the server heads of the storage appliance. The storage pool uses one of the server heads, which are also referred to as controllers. The server heads use an active-passive cluster configuration. Exalogic compute nodes access the host name or IP address of a server head and the mount point based on the distribution of the storage pool.

- If the active server head fails, the passive server head imports the storage pool and starts to offer services. From the compute nodes, the user may experience a pause in service until the working server head starts servicing the storage pool. However, this delay might not affect client activity; it can affect disk I/O only.

- By default, data is mirrored, which yields a highly reliable and high-performing system. The default storage configuration is done at the time of manufacturing, and it includes the following shares:

  - Two exclusive NFS shares for each of the Exalogic compute nodes: one for crash dumps and another for general purposes. In this scenario, you can implement access control for these shares based on your requirements.

  - Two common NFS shares to be accessed by all compute nodes: one for patches and another for general purposes.

5.3 Overview of Enterprise Deployment Storage

This topic provides information on enterprise deployment storage, which includes sharing requirements, read/write requirements, and artifact characteristics.

When deciding how to prepare shared storage, consider the following:

Sharing Requirements

- Shared: The artifact resides on shared storage and can be simultaneously viewed and accessed by all client machines.

- Available: The artifact resides on shared storage, but only one client machine can view and access the artifact at a time. If this machine fails, another client machine can then access the artifact.

- Not-shared: The artifact can reside on local storage and never needs to be accessible by other machines.

Read/Write Requirements

- Read-Only: This artifact is rarely altered but only read at runtime.

- Read-Write: This artifact is both read and written to at runtime.

Artifact Characteristics

With the above requirements in mind, the artifacts deployed in a typical enterprise deployment can be classified as follows:

| Artifact Type | Sharing | Read/Write |
|---|---|---|
| Binaries - Application Tier | Shared | Read-Only |
| Binaries - Web Tier | Private | Read-Only |
| Managed Server Domain Home | Private | Read-Write |
| Admin Server Domain Home | Available | Read-Write |
| Runtime Files | Available | Read-Write |
| Node Manager Configuration | Private | Read-Write |
| Application Specific Files | Shared | Read-Write |

5.4 Understanding the Enterprise Deployment Directory Structure

Each Oracle Fusion Middleware enterprise deployment is based on a similar directory structure. This directory structure has been designed to separate binaries, configuration, and runtime information.

Binaries are defined as the Oracle software installation. Binaries include such things as the JDK, WebLogic Server, and the Oracle Fusion Middleware software being used (for example, Oracle Identity and Access Management or WebCenter).

The Runtime Directories are written at runtime and hold information such as JMS queue files. The Server Domain Home directories are read from and written to at runtime.

This section contains the following topics:

- Shared Binaries

All machines use the same set of binaries to run processes. Binaries are installed once and then only modified during patches and upgrades. - Private or Shared Managed Server Domain Homes

Managed server domain homes are used to run managed servers on the local host machine. - A Domain Home for the Administration Server

The Domain Home from which the Administration Server runs is contained in a separate volume that can be mounted on any machine. - Shared Runtime Files

Files and directories that might need to be available to all members of a cluster are separated into their own directories. - Local Node Manager Directory

Each client machine will have its own Node Manager and Node Manager configuration directories. - Shared Application Files

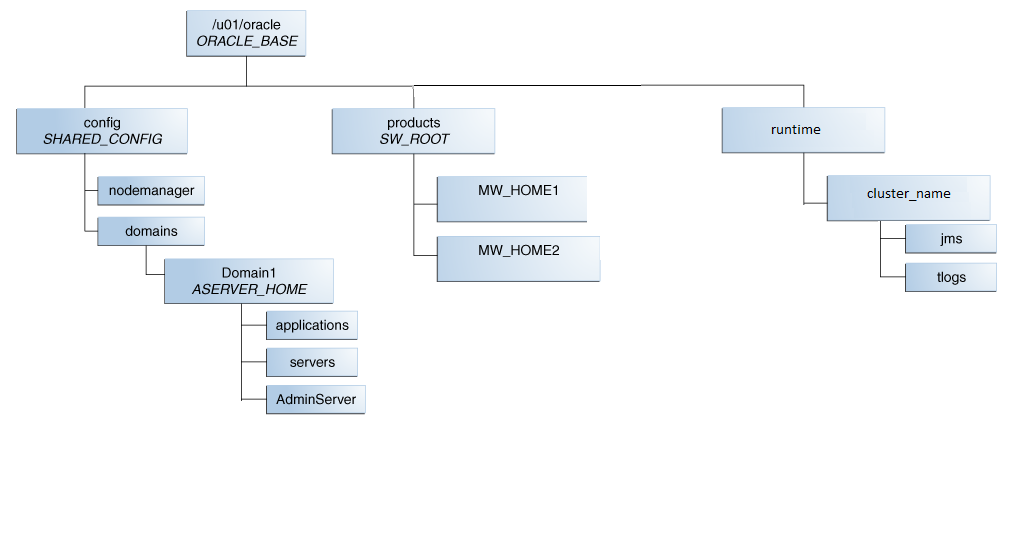

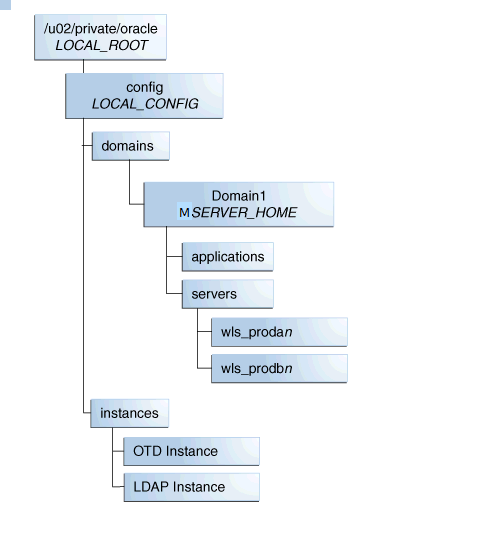

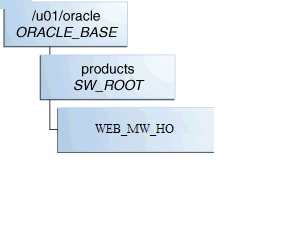

Any files that must be both shared and accessed concurrently (read/write) will be application dependent. - Diagrams of the Typical Enterprise Deployment Directory Structure

The following diagrams show the recommended use of shared and local storage for a typical enterprise deployment:

5.4.1 Shared Binaries

All machines use the same set of binaries to run processes. Binaries are installed once and then only modified during patches and upgrades.

For maximum availability and protection, it is recommended that redundant binaries are created (that is, two different shares are created that mirror each other). One share will be mounted to odd-numbered hosts, and one share will be mounted to even-numbered hosts. This is so that corruption in one set of binaries does not impact the entire system.
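The odd/even split described above can be sketched as a small shell helper. This is only an illustration under stated assumptions: the function name `pick_binaries_share` and the share names `shared_binaries_1` and `shared_binaries_2` are hypothetical, and the sketch assumes that host names end in a number (for example, `host1` and `host2`).

```shell
#!/bin/sh
# Hypothetical helper: choose which of two redundant binaries shares a host mounts.
# Assumption: host names end in a digit. The share names are illustrative only.
pick_binaries_share() {
  case "$1" in
    *[13579]) echo "shared_binaries_1" ;;   # odd-numbered hosts
    *[02468]) echo "shared_binaries_2" ;;   # even-numbered hosts
    *) echo "host name does not end in a number: $1" >&2; return 1 ;;
  esac
}

pick_binaries_share host1   # prints shared_binaries_1
pick_binaries_share host2   # prints shared_binaries_2
```

Because only the last digit of the host name is inspected, the helper also works for names with leading zeros, such as `host08`.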

5.4.2 Private or Shared Managed Server Domain Homes

Managed server domain homes are used to run managed servers on the local host machine.

For performance reasons, it is recommended to have these domain homes reside on private storage attached to the host machine.

5.4.3 A Domain Home for the Administration Server

The Domain Home from which the Administration Server runs is contained in a separate volume that can be mounted on any machine.

This allows the Administration Server to be readily available if the machine that was hosting it has failed or is otherwise unavailable.

5.4.4 Shared Runtime Files

Files and directories that might need to be available to all members of a cluster are separated into their own directories.

These will include JMS files, transaction logs, and other artifacts that belong to only one member machine of a cluster but might need to be available to other machines in case of failover.

5.4.5 Local Node Manager Directory

Each client machine will have its own Node Manager and Node Manager configuration directories.

Because a Node Manager is associated with one physical machine, these directories can optionally reside on private storage.

5.4.6 Shared Application Files

Any files that must be both shared and accessed concurrently (read/write) will be application dependent.

No files that are part of the Oracle Fusion Middleware WebLogic Infrastructure have this requirement, but some Fusion Middleware products (such as Oracle WebCenter Content) and custom applications might require shared files on disk.

5.4.7 Diagrams of the Typical Enterprise Deployment Directory Structure

The following diagrams show the recommended use of shared and local storage for a typical enterprise deployment:

Figure 5-1 Recommended Shared Storage Directory Structure

Figure 5-2 Recommended Private Storage Directory Structure

Figure 5-3 Recommended Directory Structure for Private Binary Storage


Private binary storage is generally only required when the Web Tier is on a separate host (for example, virtual deployments).

5.5 Shared Storage Concepts

This section describes shared storage concepts.

This section contains the following topics:

- Shared Storage Protocols and Devices

  ZFS can present storage either as block storage or as NFS shares. This document will describe setting up NFS (Network File System). NFS version 3 (v3) and NFS version 4 (v4) are supported by ZFS.

- NFS Version 3

  NFS version 3 (v3) is perhaps the most common standard for UNIX clients that require a remote, shared file system.

- NFS Version 4

  NFS version 4 (v4) has several advantages over NFS v3. One advantage is support for an authentication protocol, such as NIS, to map user permissions instead of relying on UIDs. The other advantage is support for the expiration of file locks through leasing.

5.5.1 Shared Storage Protocols and Devices

ZFS can present storage either as block storage or as NFS shares. This document will describe setting up NFS (Network File System). NFS version 3 (v3) and NFS version 4 (v4) are supported by ZFS.

A shared file system is both a file system and a set of protocols used to manage multiple concurrent access. The most common of these protocols is NFS for UNIX clients. These solutions consist of a back-end file system, such as ZFS or EXT3, along with server processes (such as NFSD) to manage and mediate access.

5.5.2 NFS Version 3

NFS version 3 (v3) is perhaps the most common standard for UNIX clients that require a remote, shared file system.

Permissions between the client machine and the storage server are mapped by using the UID of the users. NFS v3 can be configured and exported on most devices. The client machine can then mount the remote device locally with a command such as the following:

$ mount -t nfs storage_machine:/export/nfsshare /locmntddir -o rw,bg,hard,nointr,tcp,vers=3,timeo=300,rsize=32768,wsize=32768

This command mounts the remote file system at /locmntddir. Most of the values specified above are defaults, so an NFS v3 device can also be mounted as:

$ mount -t nfs storage_machine:/export/nfsshare /locmntddir -o nointr,timeo=300

The right values of rsize, wsize, and timeo will depend on attributes of the network between the client machine and the storage server. For example, a higher timeout value will help to minimize timeout errors.

The attributes noac and actimeo are not used here. These are used to disable attribute caching and may be necessary when objects such as Oracle data files are placed on NFS. They are not necessary, however, for most WebLogic applications or specific Fusion Middleware applications, such as SOA, WebCenter, or BI. Enabling these parameters will carry performance penalties as well.

Other options include noatime, which will provide a small performance boost on read-intensive applications by eliminating the requirement for access-time writes.
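To make a mount like the one above persist across reboots, the same options are commonly recorded in /etc/fstab on Linux clients. The following is a minimal sketch, not a required step: the function name `nfs3_fstab_line` is hypothetical, and the server, export, and mount point values are the placeholders already used in this section. It prints a standard six-field fstab entry (device, mount point, file system type, options, dump flag, fsck pass).

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab entry for an NFS v3 share.
# The option string is copied from the mount command shown in this section.
nfs3_fstab_line() {
  server=$1
  export_path=$2
  mount_point=$3
  opts="rw,bg,hard,nointr,tcp,vers=3,timeo=300,rsize=32768,wsize=32768"
  # Dump flag and fsck pass are 0 because neither applies to a network file system.
  printf '%s:%s %s nfs %s 0 0\n' "$server" "$export_path" "$mount_point" "$opts"
}

nfs3_fstab_line storage_machine /export/nfsshare /locmntddir
```

Review the printed line before appending it to /etc/fstab, and adjust rsize, wsize, and timeo to suit your network as discussed above.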

5.5.3 NFS Version 4

NFS version 4 (v4) has several advantages over NFS v3. One advantage is support for an authentication protocol, such as NIS, to map user permissions instead of relying on UIDs. The other advantage is support for the expiration of file locks through leasing.

After a specified time, the storage server releases unclaimed file locks. The default lease expiration time is storage server dependent. Typical values are 45 or 120 seconds. In Sun ZFS systems, the default is 90 seconds. In a failover scenario, where a server has failed and another server must claim or access the files owned by the failed server, it is able to do so after the lease expiration time. This value should be set lower than the failover time so that the new server can successfully acquire locks on the files. For example, this should be set lower than the time for server migration in a cluster using JMS file based persistence.

This differs from NFS v3 where locks are held indefinitely. The locks held by a process that has died have to be released manually in NFS v3.
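The relationship between the lease expiration time and the failover time can be checked with simple arithmetic. The sketch below is illustrative only: the function name `lease_allows_failover` is hypothetical, 90 seconds is the Sun ZFS default mentioned above, and 120 seconds is an assumed server migration time, not a recommended value.

```shell
#!/bin/sh
# Hypothetical check: does the NFS v4 lease expire before failover completes?
# Both arguments are in seconds.
lease_allows_failover() {
  lease_secs=$1
  failover_secs=$2
  [ "$lease_secs" -lt "$failover_secs" ]
}

# 90 is the Sun ZFS default lease time; 120 is an assumed migration time.
if lease_allows_failover 90 120; then
  echo "ok: locks are released before the new server needs them"
else
  echo "warning: lower the lease time or lengthen the failover time"
fi
```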

An NFS v4 file system can be mounted on Linux similarly to NFS v3:

$ mount -t nfs4 storage_machine:/export/nfsv4share /locmntddir -o nointr,timeo=300
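After mounting, it can be useful to confirm which NFS version a mount point actually uses. On Linux, /proc/mounts reports the file system type as `nfs` for v3 mounts and `nfs4` for v4 mounts. The following sketch assumes a Linux client; the function name `list_nfs_mounts` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list NFS mount points and their file system type.
# Reads a file in /proc/mounts format: device, mount point, type, options, ...
list_nfs_mounts() {
  mounts_file=${1:-/proc/mounts}
  awk '$3 == "nfs" || $3 == "nfs4" { print $2, $3 }' "$mounts_file"
}

# Only query the live mount table where it exists (Linux).
if [ -r /proc/mounts ]; then
  list_nfs_mounts
fi
```

Each output line contains a mount point followed by its type, so a v4 mount at /locmntddir would be reported as `/locmntddir nfs4`.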

5.6 Enterprise Deployment Storage Design Considerations

This topic provides information on storage design considerations. For storage requirements specific to your deployment type, refer to the appropriate product enterprise deployment guide.

Based on the typical directory structures described in Diagrams of the Typical Enterprise Deployment Directory Structure, storage can be classified as follows:

Table 5-1 Summary of Enterprise Deployment Storage Design Considerations

| Project | Shares | Mount point |
|---|---|---|
| product_binaries | shared_binaries | |
| | webhost1_local_binaries | |
| | webhost2_local_binaries | |
| product_config | shared_config | |
| | host1_local_config | |
| | host2_local_config | |
| Runtime | shared-runtime | |

Using this model, all binaries can be set to read-only after installation at the product level. Configuration information can still be read/write.

Backups can be taken at the project level. Disaster recovery can also replicate content at the project level, so binaries are replicated only when necessary, while configuration and JMS queues are replicated more frequently.

5.7 Preparing Exalogic Storage for an Enterprise Deployment

Prepare storage for the physical Exalogic deployment by creating users and groups in NIS, and then creating projects and the shares within them using the storage appliance BUI.

This section contains the following topics:

- Prerequisite Storage Appliance Configuration Tasks

  This topic provides the prerequisites for the storage appliance.

- Creating Users and Groups in NIS

  This step is optional. If you want to use the onboard NIS servers, you can create users and groups using the steps in this section.

- Creating Projects Using the Storage Appliance Browser User Interface (BUI)

  To configure the appliance for the recommended directory structure, you create a custom project using the Sun ZFS Storage 7320 appliance Browser User Interface (BUI).

- Creating the Shares in a Project Using the BUI

  After you have created projects, the next step is to create the required shares within the project.

- Allowing Local Root Access to Shares

  To allow compute nodes or virtual machines to access ZFS shares, you must add an NFS exception that permits the access.

5.7.1 Prerequisite Storage Appliance Configuration Tasks

This topic provides the prerequisites for the storage appliance.

The instructions in this guide assume that the Sun ZFS Storage 7320 appliance is already set up and initially configured. Specifically, it is assumed you have reviewed the following sections in the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

The examples below are for the typical enterprise deployment. Refer to the specific product Enterprise Deployment Guide for the list of shares you need to create for the product you are installing.

5.7.2 Creating Users and Groups in NIS

This step is optional. If you want to use the onboard NIS servers, you can create users and groups using the steps in this section.

First, determine the name of your NIS server by logging into the Storage BUI. For example:

5.7.3 Creating Projects Using the Storage Appliance Browser User Interface (BUI)

To configure the appliance for the recommended directory structure, you create a custom project using the Sun ZFS Storage 7320 appliance Browser User Interface (BUI).

After you set up and configure the Sun ZFS Storage 7320 appliance, the appliance has a set of default projects and shares. For more information, see "Default Storage Configuration" in the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

The instructions in this section describe the specific steps for creating a new project for the enterprise deployment. For more general information about creating a custom project using the BUI, see "Creating Custom Projects" in the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

To create a new custom project on the Sun ZFS Storage 7320 appliance:

5.7.4 Creating the Shares in a Project Using the BUI

After you have created projects, the next step is to create the required shares within the project.

For more general information about creating custom shares using the BUI, see "Creating Custom Shares" in the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

To create each share, use the following instructions, replacing the name and privileges, as described in Table 5-1:

5.7.5 Allowing Local Root Access to Shares

To allow compute nodes or virtual machines to access ZFS shares, you must add an NFS exception that permits the access.

You can create exceptions at either the individual share level or the project level. Additionally, if you want to run commands or traverse directories on the share as the root user, you can enable an option that allows root access.

To keep things simple, in this example you create the exception at the project level.

To create an exception for NFS at the project level: