1 Overview of TimesTen Scaleout

The following sections describe the features and components of Oracle TimesTen In-Memory Database in grid mode (TimesTen Scaleout).

Note:

For an overview of Oracle TimesTen In-Memory Database in classic mode (TimesTen Classic), see "Overview for the Oracle TimesTen In-Memory Database" in the Oracle TimesTen In-Memory Database Introduction.

Introducing TimesTen Scaleout

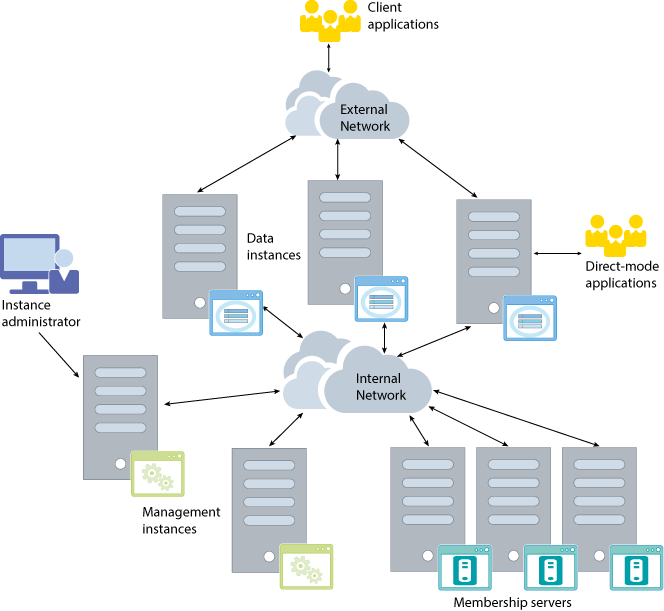

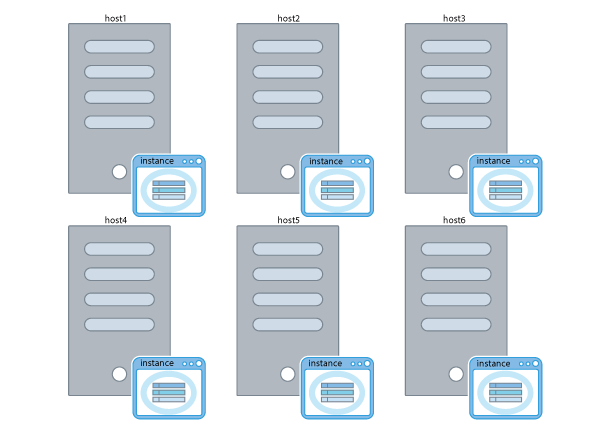

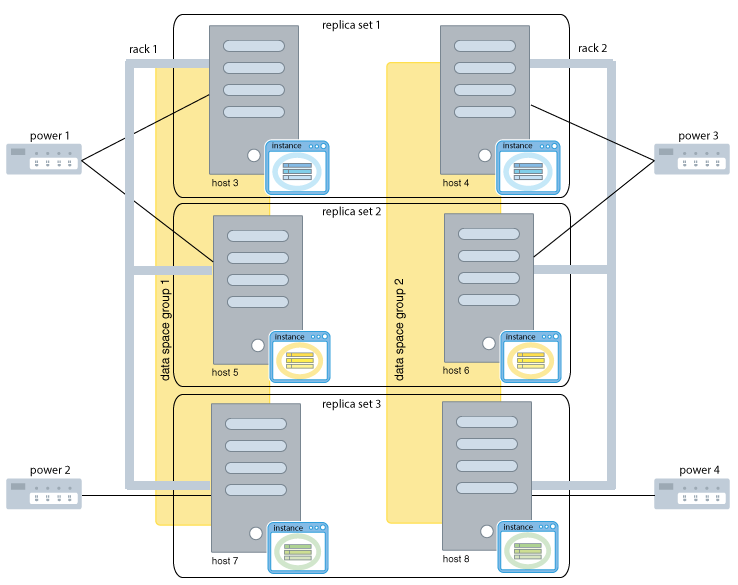

TimesTen Scaleout delivers high performance, fault tolerance, and scalability within a highly available in-memory database that provides persistence and recoverability. As shown in Figure 1-1, TimesTen Scaleout delivers these features by distributing the data of a database across a grid of multiple instances running on one or more hosts.

Note:

TimesTen Scaleout identifies physical or virtual systems as hosts. Each host represents a different system. You determine the name that TimesTen Scaleout uses as the identifier for each host.

Figure 1-1 A grid distributes data across many instances over multiple hosts


TimesTen Scaleout enables you to:

- Create a grid that is a set of interconnected instances installed on one or more hosts.
- Create one or more in-memory, SQL relational, ACID-compliant databases.
- Distribute the data of each database across the instances in the grid in a highly available manner using a shared-nothing architecture.
- Connect applications to your database with full access to all the data, regardless of how the data is distributed across the database.
- Maintain one or more copies of your data. Maintaining more than one copy protects you from data loss in the event of a single failure.
- Add instances to or remove instances from your grid to:
  - Expand or shrink the storage capacity of your database as necessary.
  - Expand or shrink the computing resources of your database to meet the performance requirements of your applications.

TimesTen Scaleout features

TimesTen Scaleout provides key capabilities, such as:

In-memory database

A database in TimesTen is a memory-optimized relational database that empowers applications with the responsiveness and high throughput required by today's enterprises and industries. Databases fit entirely in physical memory (RAM) and provide standard SQL interfaces.

TimesTen is designed with the knowledge that all data resides in memory. As a result, access to data is simpler and more direct, resulting in a shorter code path and simpler algorithms and internal data structures. Thus, TimesTen delivers performance by optimizing data residency at run time. By managing data in memory and optimizing data structures and access algorithms accordingly, database operations execute with maximum efficiency, achieving dramatic gains in responsiveness and throughput.

Performance

TimesTen Scaleout achieves high performance by distributing the data of each database across instances in the grid in a shared-nothing architecture. TimesTen Scaleout spreads the work for the database across those instances, which process it in parallel to compute the results of your SQL statements faster.

Persistence and durability

Databases in TimesTen are persistent across power failures and crashes. TimesTen accomplishes this by periodically saving to a file system:

- All data through checkpoint files.
- Changes made by transactions through transaction log files.

In TimesTen Scaleout, the data in your database is distributed into elements. Each element keeps its own checkpoint and transaction log files. As a result, the data stored in each element is independently durable. Each instance in a grid manages one element of a database. In the event of a failure, an instance can automatically recover the data stored in its element from the checkpoint and transaction log files while the remaining instances continue to service applications.
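The recovery idea can be illustrated with a toy model. The sketch below is a hypothetical simplification, not TimesTen's on-disk format or recovery code: it assumes an element's checkpoint is a snapshot of rows tagged with the last log sequence number (LSN) it reflects, and that the transaction log is a list of `(lsn, key, value)` records where `value=None` marks a delete. Recovery starts from the checkpoint and replays only the log records written after it.

```python
from dataclasses import dataclass, field


# Hypothetical model of one element's durable files (not TimesTen's real format).
@dataclass
class ElementFiles:
    checkpoint: dict            # snapshot of rows as of checkpoint_lsn
    checkpoint_lsn: int         # last log record already reflected in the checkpoint
    log: list = field(default_factory=list)  # (lsn, key, value); value None = delete


def recover(files: ElementFiles) -> dict:
    """Rebuild the element's in-memory rows from its checkpoint plus newer log records."""
    rows = dict(files.checkpoint)  # start from the checkpoint snapshot
    for lsn, key, value in sorted(files.log, key=lambda rec: rec[0]):
        if lsn <= files.checkpoint_lsn:
            continue  # change is already contained in the checkpoint
        if value is None:
            rows.pop(key, None)  # replay a delete
        else:
            rows[key] = value  # replay an insert or update
    return rows


files = ElementFiles(
    checkpoint={1: "a", 2: "b"},
    checkpoint_lsn=10,
    log=[(9, 1, "old"), (11, 2, "B"), (12, 3, "c"), (13, 1, None)],
)
print(recover(files))  # {2: 'B', 3: 'c'}
```

Because each element has its own files in this model, each element can replay them independently, which mirrors why one instance can recover while the others keep serving applications.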

TimesTen Scaleout also enables you to keep multiple copies of your data to increase durability and fault tolerance.

You can change the durability settings of a database according to your performance and data durability needs. For example, you can choose whether data is flushed to the file system with every commit or periodically in batches, which lets the database operate at a higher performance level.

SQL and PL/SQL functionality

Applications use SQL and PL/SQL to access data in a database. Any developer familiar with SQL can be immediately productive developing applications with TimesTen Scaleout.

For more information on SQL, see Chapter 7, "Using SQL in TimesTen Scaleout" in this guide and the Oracle TimesTen In-Memory Database SQL Reference. For more information on PL/SQL, see Table 1-9 and the Oracle TimesTen In-Memory Database PL/SQL Developer's Guide.

Transactions

TimesTen Scaleout supports transactions that provide atomic, consistent, isolated and durable (ACID) access to data.

For more information, see Chapter 6, "Understanding Distributed Transactions in TimesTen Scaleout" in this guide and "Transaction Management" in the Oracle TimesTen In-Memory Database Operations Guide.

Scalability

TimesTen Scaleout enables you to transparently distribute the data of a database across multiple instances, which are located on separate hosts, to dramatically increase availability, performance, storage capacity, processing capacity, and durability. When TimesTen Scaleout distributes the data of your database across multiple instances, it uses the in-memory resources provided by the hosts running those instances.

TimesTen Scaleout enables you to add or remove instances in order to control both performance and the storage capacity of your database. Adding instances expands the memory capacity of your database. It also improves the throughput of your workload by providing the additional computing resources of the hosts running those instances. If your business needs change, then removing instances (and their hosts) enables you to meet your targets with fewer resources.

Data transparency

While TimesTen Scaleout distributes your data across multiple instances, applications do not need to know how data is distributed. When an application connects to any instance in the grid, it has access to all of the data of the database without having to know where specific data is located.

Knowledge about the distribution of data is never required in TimesTen Scaleout, but it can be used to tune the performance of your application. You can use this knowledge to exploit locality where possible. See "Using pseudocolumns" for more information.

High availability and fault tolerance

TimesTen Scaleout automatically recovers from most transient failures, such as a congested network. TimesTen Scaleout recovers from software failures by recovering from checkpoint and transaction log files. Permanent failures, such as hardware failures, may require intervention by the user.

TimesTen Scaleout provides high availability and fault tolerance when you have multiple copies of data located across separate hosts. TimesTen Scaleout provides a feature called K-safety (k) where the value you set for k during the creation of the grid defines the number of copies of your data that will exist in the grid. This feature ensures that your database continues to operate in spite of various faults, as long as a single copy of the data is accessible.

- To have only a single copy of the data, set k to 1. This setting is not recommended for production environments.
- To have two copies of the data, set k to 2. A grid can be fault tolerant with this setting. Thus, if one copy fails, another copy of the data exists. Ensure you locate each copy of the data on distinct physical hardware for maximum data safety.

TimesTen Scaleout provides fault tolerance for both software and hardware failures:

- Software failures are often transient. When one copy of the data is unavailable due to a software error, SQL statements are automatically redirected to the other copy of the data (if possible). In the meantime, TimesTen Scaleout synchronizes the data on the failed system with the rest of the database. TimesTen Scaleout does not require any user intervention to recover as long as the instances are still running.
- Hardware failures may eventually require user intervention. In some cases, all that is required is to restart the host.

TimesTen Scaleout provides a membership service to help resolve failures in a consistent manner. The membership service provides a consistent list of instances that are up. This is useful if a network error splits the hosts into two separate groups that cannot communicate with each other.
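This section does not say how the membership service decides which side of a network split keeps a valid view. A common approach for services built from an odd number of servers (the membership service consists of three or more membership servers) is a majority quorum. The sketch below assumes that rule purely for illustration; `has_quorum` is a hypothetical name, not a TimesTen API.

```python
def has_quorum(reachable: set, all_servers: set) -> bool:
    """Assumed rule: a partition may act only if it reaches a strict majority of servers."""
    return len(reachable & all_servers) > len(all_servers) // 2


servers = {"ms1", "ms2", "ms3"}
# A network error splits the hosts: one side still reaches ms1 and ms2, the other only ms3.
print(has_quorum({"ms1", "ms2"}, servers))  # True
print(has_quorum({"ms3"}, servers))         # False
```

Because two disjoint partitions cannot both contain a strict majority, at most one side can hold an authoritative list of the instances that are up under this assumed rule.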

Centralized management

You do not need to log on to every host within a grid in order to perform management activities. Instead, you conduct all management activity from a single instance using the ttGridAdmin utility. The ttGridAdmin utility is the main utility you use to define, deploy, and check on the status of each database.

You can also use the ttGridRollout utility or the Oracle SQL Developer GUI (both of which use the ttGridAdmin utility under the covers to execute all requests) to facilitate creating, deploying, and managing your grid:

- If you are creating a grid for the first time, you can use the ttGridRollout utility to define and deploy your grid. After creation, use either the ttGridAdmin utility or Oracle SQL Developer to manage your grid.
- You can create and manage any grid using Oracle SQL Developer, which is a graphical user interface (GUI) tool that gives database developers a convenient way to create, manage, and explore a grid and its components. You can also browse, create, edit, and drop particular database objects; run SQL statements and scripts; manipulate and export data; and view and create reports. See the Oracle SQL Developer Oracle TimesTen In-Memory Database Support User's Guide for more information.

TimesTen Scaleout architecture

One OS user creates and manages a grid. This user is called the instance administrator. See "Instance administrator" in the Oracle TimesTen In-Memory Database Installation, Migration, and Upgrade Guide for details on the instance administrator. TimesTen Scaleout enables the instance administrator to:

- Configure whether the grid creates one or two copies of your data by using K-safety.
- Create one or two management instances through which the grid is managed.
- Create multiple data instances in which data is contained and managed.
- Set up a membership service to track which data instances are operational at any moment. The membership service consists of three or more membership servers.
- Create one or more databases.
- Create one or more repositories to store backups for your databases.

A database consists of multiple elements, where each element stores a portion of data from its database. Each data instance contains one element of each database. If you create multiple databases in the grid, then each data instance contains multiple elements (one from each database).

For each database you create, you decide which elements participate in data distribution. Usually, all elements participate, but when you bring online new data instances, you decide when the elements of those new data instances begin to participate in database operations. You need to explicitly add elements to the distribution map of the database for them to participate in database operations. Likewise, you need to remove elements from the distribution map (which stops them from participating in database operations) before you can remove their data instances from the grid.

When you add an element to the distribution map, TimesTen Scaleout automatically places it into a replica set. Each replica set contains the same number of elements as the value set for K-safety. Elements in the same replica set hold the same data.

The following sections provide a more detailed description of these components and their responsibilities within a grid:

Instances

A grid uses instances to manage, contain, and distribute one or more copies of your data. An instance is a running copy of the TimesTen software. When you create an instance on a host, you associate it with a TimesTen installation. An installation can be used by a single instance or shared by multiple instances. Each instance normally resides on its own host to provide maximum data availability and as a safeguard against data loss should one host fail.

Each instance has an associated instance administrator (who created the instance) and an instance home. The instance home is the location for the instance on your host. The same instance administrator manages all instances in the grid.

TimesTen Scaleout supports two types of instances:

Management instances

Management instances control a grid and maintain the model, which is the central configuration of a grid. To ensure that the administrator can easily control a grid, all management activity is executed through a single management instance using the ttGridAdmin utility.

Note:

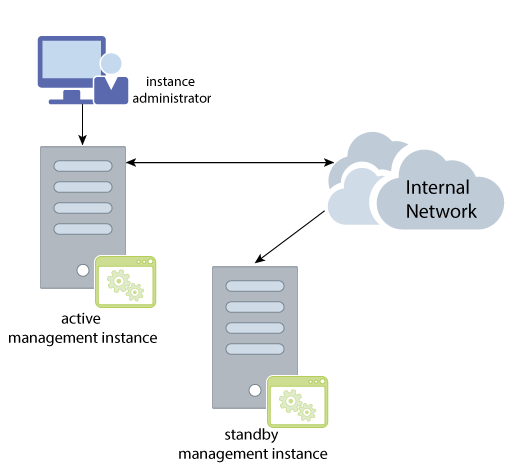

See "Central configuration of the grid" for more details on the model.

TimesTen Scaleout enables you to create two management instances to provide high availability and to guard against a single management instance failure that could impede grid management. Consider having two management instances a best practice for a production environment. Once you create them, TimesTen Scaleout configures both management instances in an active standby configuration. You always execute all management operations through the active management instance. The standby management instance exists purely as a safeguard against failure of the active management instance.

If you create two management instances, as shown in Figure 1-3, then all information used by the active management instance is automatically replicated to the standby management instance. Thus, if the active management instance fails, you can promote the standby management instance to become the new active management instance through which you continue to manage the grid.

Note:

See "Managing failover for the management instances" for details on how TimesTen Scaleout replicates information for the management instances.

Figure 1-3 Administrator manages the grid through management instances


Consider that:

- You can manage a grid through a single management instance without a standby management instance. However, this configuration is not recommended for production environments.
- If both management instances fail, databases in the grid continue to operate. Some management operations are unavailable until you restart at least one of the management instances.

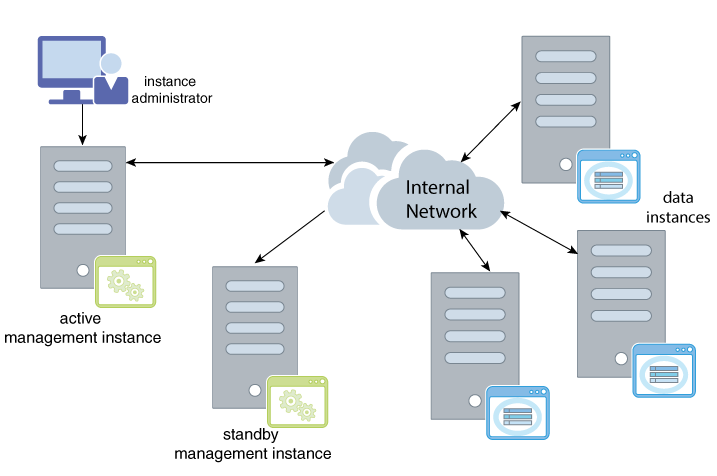

Data instances

Data instances store one element per database in the grid. Data instances execute SQL statements and PL/SQL blocks. A grid distributes the data within each database across data instances. You manage all data instances through the active management instance, as shown in Figure 1-4.

Figure 1-4 Management instances manage a grid of multiple data instances


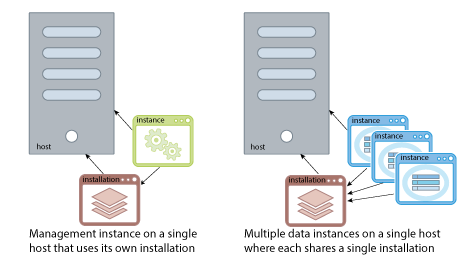

Installations

Instances need an installation of a TimesTen distribution to operate. An installation is read-only and can be used locally or shared across multiple instances. You create the installation of the initial management instance by extracting a TimesTen distribution in any location on the system defined as the host of the management instance. TimesTen Scaleout can locally create any subsequent installation on the rest of the hosts in the grid and associate the new installations with the instances run by those hosts. All instances that run on the same host may share the same installation.

As long as an installation can be accessed by multiple hosts, that installation can be shared by instances on those hosts. However, sharing an installation on a shared file server, such as NFS, between multiple instances on separate hosts may reduce availability. If the shared network storage or the network connecting all of the hosts to the NFS server fails or has performance issues, then all instances sharing that installation are impacted. Thus, while sharing an installation on a shared file server across instances may be a valid option for a development environment, you may want to evaluate whether this is advisable for a production environment.

K-safety

You configure your grid to create either single or multiple copies of the data of each database within your grid. TimesTen Scaleout uses its implementation of K-safety (k) to manage one or multiple copies of your data. You specify the number of copies you want of your data by setting k to 1 or 2 when you create the grid.

You improve data availability and fault tolerance when you specify that the grid creates two copies of data located across separate hosts.

- If you set k to 1, TimesTen Scaleout stores a single copy of the data (which is not recommended for production environments). When k is set to 1, the following may occur if one or more elements fail:
  - Any data contained in the element is unavailable until the element recovers.
  - Any data contained in the element is lost if the element does not recover.

  Even though there is only a single copy of the data, the data is still distributed across separate elements to increase capacity and data accessibility.
- If you set k to 2, then TimesTen Scaleout stores two copies of the data. A grid can tolerate multiple faults when you have two copies of the data, as long as the faults do not take down both elements of the same replica set.

  If one element fails, the second copy of the data is accessed to provide the requested data. K-safety keeps your data available as long as one of the copies of the data is available. Where possible, locate each copy of the data on distinct physical hardware for maximum data safety.

The following sections describe how multiple copies are managed and organized.

Understanding replica sets

Each element of a database is automatically placed into a replica set depending on the value of k, where:

- If you set k to 1, then each replica set contains a single element.
- If you set k to 2, then each replica set contains two elements (where each element is an exact copy of the other element in the replica set).

Thus, each replica set contains the same number of elements as the value set for k.

When k is set to 2, any change made to the data in one element is also made to the other element in the replica set to keep the data consistent on both elements in the replica set at all times. Because of the transparency capabilities of TimesTen Scaleout, you can initiate transactions on any element, even if the requested data is not contained in that element or if the requested data spans multiple replica sets. If an element fails, then the other element in the replica set is accessed to provide the requested data. All data in the database is available as long as one element in each replica set is functioning.
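The availability rule in the last sentence can be stated as a small check. The sketch below is illustrative only: replica sets are modeled as lists of hypothetical element names, and `failed` is the set of elements that are currently down.

```python
def all_data_available(replica_sets: list, failed: set) -> bool:
    """All data is available iff every replica set still has at least one live element."""
    return all(any(element not in failed for element in rs) for rs in replica_sets)


def unavailable_sets(replica_sets: list, failed: set) -> list:
    """Return the indexes of replica sets whose data is currently unavailable."""
    return [i for i, rs in enumerate(replica_sets) if all(e in failed for e in rs)]


# k = 2: three replica sets, each holding two identical elements.
k2 = [["e1", "e4"], ["e2", "e5"], ["e3", "e6"]]
print(all_data_available(k2, {"e1", "e5"}))  # True: one copy of each set survives
print(unavailable_sets(k2, {"e1", "e4"}))    # [0]: both copies of set 0 are down

# k = 1: any single failure makes part of the data unavailable.
k1 = [["e1"], ["e2"], ["e3"]]
print(all_data_available(k1, {"e2"}))        # False
```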

Understanding data spaces

Each database consists of a set of elements, where each element stores a portion of data from its database. The grid organizes the elements for each database into data spaces.

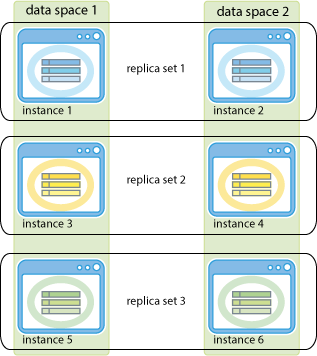

Each database consists of either one or two data spaces. When k is set to 2, the elements within each replica set are assigned to separate data spaces.

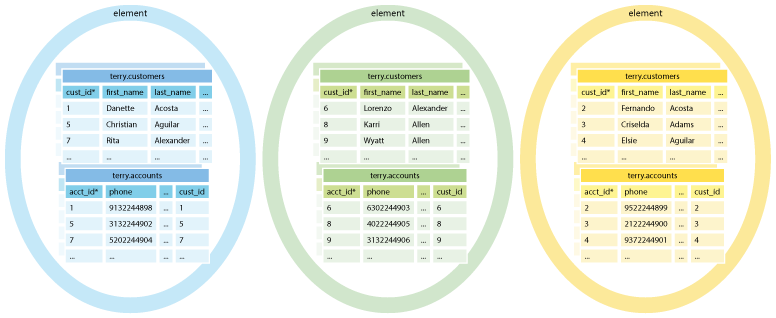

Figure 1-5 shows how two copies of the data are organized within two data spaces, where each data space contains the elements that make up a single copy of the data of the database. One copy is contained within data space 1 and the second copy is contained in data space 2. There are three replica sets and the elements of each replica set are assigned to a separate data space. Thus, each element in data space 1 is identical to its partner element in data space 2.

Figure 1-5 Two copies, each in own data space


As your needs grow or diminish, you may add replica sets to or remove replica sets from a grid. When you add data instances, the grid automatically creates elements for each database. However, the data is not automatically redistributed when you add or remove a data instance. You decide when it is appropriate to assign an element to a replica set and redistribute the data across all the elements in each data space.

Assigning hosts to data space groups

You decide how the data is physically located by assigning hosts into data space groups that represent the physical organization of your grid. As discussed in "Understanding data spaces", copies of the data are organized logically into data spaces. Each data space should use separate physical resources. Shared physical resources can include the same rack, the same power supply, or the same storage. Be aware that if all elements in a single replica set are stored on hosts that share a physical component, then data stored in that replica set becomes unavailable if that shared physical component fails.

TimesTen Scaleout requires you to assign all hosts that will run data instances into data space groups. When using K-safety, there are k copies of the data and the same number of data space groups (which are numbered from 1 to k). You should assign hosts that share the same physical resources into the same data space group. The elements in data instances running on hosts that are assigned to the same data space group are in the same data space. Each data space contains a full copy of all data in the database.

If you ensure that the hosts in one data space group do not share physical resources with the hosts in another data space group, then hosts in separate data space groups are less likely to fail simultaneously. This makes it likely that all data in the database remains available, even if a single hardware failure takes down multiple hosts. For example, you may ensure that all of the hosts in one data space group are plugged into a power supply that is separate from the power supply for the hosts in another data space group. In that case, pulling one plug cannot power down both hosts of a single replica set, which would make the data in that replica set unavailable.
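The following sketch shows how this separation can be checked. It assumes, hypothetically, that replica set i is formed from the i-th host of each data space group and that each host draws power from exactly one supply. TimesTen Scaleout forms replica sets itself, so treat the pairing rule and the function names as illustrations only.

```python
def form_replica_sets(groups: dict) -> list:
    """Pair the i-th host of every data space group into replica set i (assumed rule)."""
    if len({len(hosts) for hosts in groups.values()}) != 1:
        raise ValueError("every data space group needs the same number of hosts")
    ordered = [groups[g] for g in sorted(groups)]  # data space group 1, 2, ...
    return [tuple(hosts) for hosts in zip(*ordered)]


def shared_power_violations(replica_sets: list, power_of: dict) -> list:
    """Replica sets in which two hosts share a power supply (one plug takes both down)."""
    return [rs for rs in replica_sets if len({power_of[h] for h in rs}) < len(rs)]


groups = {1: ["h1", "h2", "h3"], 2: ["h4", "h5", "h6"]}  # k = 2
power = {"h1": "psuA", "h2": "psuA", "h3": "psuA",
         "h4": "psuB", "h5": "psuB", "h6": "psuB"}
sets = form_replica_sets(groups)
print(sets)                                  # [('h1', 'h4'), ('h2', 'h5'), ('h3', 'h6')]
print(shared_power_violations(sets, power))  # []

power["h5"] = "psuA"                         # miswire h5 onto group 1's supply
print(shared_power_violations(sets, power))  # [('h2', 'h5')]
```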

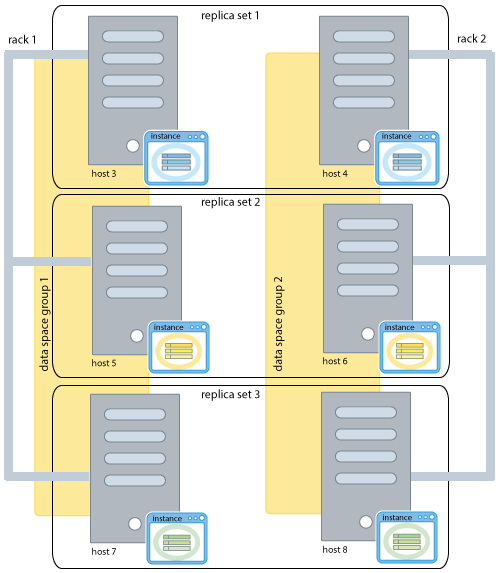

Figure 1-6 shows a grid configured with k set to 2, so the grid contains two data space groups. There are two racks, each with two power sources and three hosts. Three hosts have been assigned to each data space group. TimesTen Scaleout creates replica sets such that one element in each replica set is in each data space group.

Figure 1-6 Hosts organized into data space groups


The process for assigning hosts to a data space group includes deciding how you will physically separate the hosts supporting the data spaces.

- If the physical topology of your grid is simple, you can assign each host to a data space group yourself.
- If the physical topology of your grid is complex, TimesTen Scaleout can use physical groups to recommend an appropriate data space group for each host based on the physical resources shared among those hosts.

Describe your physical topology with physical groups

If you have a complex configuration where analyzing the physical dependencies makes it difficult to assign multiple hosts to separate data space groups, you can ask TimesTen Scaleout to recommend how to assign your hosts to data space groups. To do this, TimesTen Scaleout needs to know the physical topology of your grid: which hosts are co-located or use the same resources. You describe this topology with physical groups, where each host is identified with its physical dependencies. A physical group informs TimesTen Scaleout which hosts share the same physical resources. Hosts grouped into the same physical group are likely to fail together.

Note:

Associating your hosts with physical groups is optional.

For example, multiple hosts can be grouped if they:

- Use the same power supply.
- Reside in the same rack consisting of several shelves.
- Reside on the same shelf.

Once all of your hosts are assigned to physical groups, TimesTen Scaleout can suggest which hosts should be assigned to each data space group to minimize your risk. See "Description of the physical topography of the grid" for more details on assigning hosts to a physical group.
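TimesTen Scaleout's own recommendation logic is not described here. As a rough illustration of the idea, the hypothetical greedy sketch below keeps every physical group inside a single data space group, so hosts that are likely to fail together land in the same data space, and balances host counts across the k data space groups. It treats physical groups as flat, whereas real topologies such as racks containing shelves are nested.

```python
def recommend_data_space_groups(physical_groups: dict, k: int) -> dict:
    """Greedy sketch: place each whole physical group in the least-loaded data space group."""
    groups = {g: [] for g in range(1, k + 1)}
    # Largest physical groups first; ties broken by name for a deterministic result.
    for name in sorted(physical_groups, key=lambda n: (-len(physical_groups[n]), n)):
        target = min(groups, key=lambda g: (len(groups[g]), g))
        groups[target].extend(physical_groups[name])
    return groups


racks = {"rack1": ["h1", "h2", "h3"], "rack2": ["h4", "h5", "h6"]}
print(recommend_data_space_groups(racks, k=2))
# {1: ['h1', 'h2', 'h3'], 2: ['h4', 'h5', 'h6']}
```

When physical groups differ in size, a greedy choice like this can leave data space groups with different numbers of hosts. Because every replica set needs one element in each data space group, such a result would need manual adjustment.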

Data distribution

You can create one or more databases within a grid. Each database is independent, with separate users, schemas, tables, persistence, and data distribution. TimesTen Scaleout manages the distribution of the data according to the defined distribution map and the distribution scheme for each table.

Defining the distribution map for a database

You decide on the number of data instances in a grid, which dictates the maximum number of elements and replica sets for any one database. Each data instance hosts one element of each database in the grid. Thus, the data instances in a grid can manage one or more databases simultaneously. If you create multiple databases in the grid, then each data instance will contain multiple elements (one element from each database).

Each database consists of multiple replica sets, where each replica set stores a portion of data from its database. You define which elements of the available data instances store data of the database with a distribution map. Once the distribution map is defined and applied, TimesTen Scaleout automatically assigns each element to a replica set and distributes the data to its corresponding replica set, where each element communicates with other elements of different replica sets to provide a single database image. The details of how data is distributed may vary for each table of a database based on the distribution scheme of the table.

Note:

TimesTen Scaleout stores the composition of the distribution map, or how the data instances are associated with one another, in a partition table that is managed by the ttGridAdmin utility.

Once you add the elements of the data instances that will manage and contain the data of each database to the distribution map, you can explicitly request that the data be distributed across the resulting replica sets.

As the needs of your business change, you can increase the capacity of a database by increasing the number of replica sets in the grid. You can accomplish this by:

- Adding new hosts to the grid. The number of hosts you add must be proportional to the number of replica sets you want to add and the value of K-safety. For example, if you want to add another replica set to a database in a grid with k set to 2, you need to add a host for data space group 1 and another for data space group 2.
- Creating an installation to support data instances on each new host.
- Creating a data instance on each new host.
- Adding the elements of the new data instances to the distribution map of each database whose capacity you want to increase. TimesTen Scaleout automatically creates new replica sets as appropriate.
- Redistributing the data across all replica sets.

When you add data instances to or remove data instances from the grid, the grid does not automatically redistribute the data stored in the database across the replica sets of those new or remaining data instances. Instead, you decide when it is appropriate to redistribute the data across the data instances. Redistribution can negatively impact your operational database, so you should redistribute in small increments to minimize the impact. The larger the number of data instances that you have, the smaller the impact of incrementally adding or removing a single replica set.
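A back-of-the-envelope calculation shows why larger grids absorb changes more easily. Assuming rows are spread evenly across replica sets, and that when growing the grid rows only move into the new replica sets, the new sets must end up holding added/(current + added) of the data, so at least that fraction has to move. When shrinking, the removed sets' share, removed/current, must move elsewhere. The function names are hypothetical.

```python
from fractions import Fraction


def hosts_needed(new_replica_sets: int, k: int) -> int:
    """Each new replica set needs one new host in each of the k data space groups."""
    return new_replica_sets * k


def min_fraction_moved_on_add(current_sets: int, added_sets: int = 1) -> Fraction:
    """Lower bound on the share of data that moves when replica sets are added."""
    return Fraction(added_sets, current_sets + added_sets)


def fraction_moved_on_remove(current_sets: int, removed_sets: int = 1) -> Fraction:
    """Share of data held by the removed replica sets, which must move elsewhere."""
    return Fraction(removed_sets, current_sets)


print(hosts_needed(1, k=2))          # 2
print(min_fraction_moved_on_add(3))  # 1/4
print(min_fraction_moved_on_add(9))  # 1/10
print(fraction_moved_on_remove(10))  # 1/10
```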

If you need to replace a data instance with a new data instance in the same data space group, this action does not require a redistribution of all data.

To reduce your capacity, remove the data instances that manage a replica set from the distribution map and redistribute the data across the remaining data instances in the grid.

Defining the distribution scheme for tables

TimesTen Scaleout distributes the data in a database across replica sets. All tables in a database are present in every replica set. You define the distribution scheme for each table in a database in the CREATE TABLE statement. The distribution scheme describes how the rows of the table are distributed across the grid.

How the data is distributed is defined by one of the following distribution schemes specified during table creation.

-

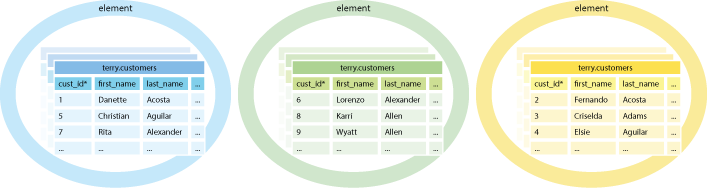

Hash: The data is distributed based on the hash of the primary key or a composite of multiple columns that are specified by the user. A given row is present in a replica set chosen by the grid. Rows are evenly distributed across the replica sets. This is the default method as it is appropriate for most tables.

See Figure 1-7 for an example of a table,

terry.customers, with a hash distribution scheme. Each element belongs to a different replica set. -

Reference: Distributes the data of a child table based on the location of the parent table that is identified by the foreign key. That is, a given row of a child table is present in the same replica set as its parent table. This distribution scheme optimizes the performance of joins by distributing related data within a single replica set. Thus, this distribution scheme is appropriate for tables that are logically children of a single parent table as parent and child tables are often referenced together in queries.

See Figure 1-8 for an example of a child table,

accounts, with a reference distribution scheme to a parent table,customers. Each element belongs to a different replica set.Figure 1-8 Table with reference distribution


-

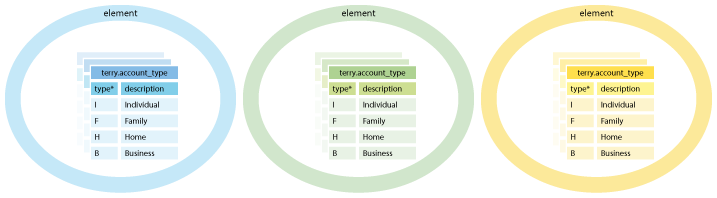

Duplicate: Distributes full identical copies of data to all the elements of a database. That is, all rows are present in every element. This distribution scheme optimizes the performance of reads by storing identical data in every data instance. This distribution scheme is appropriate for tables that are relatively small, frequently read, and infrequently modified.

See Figure 1-9 for an example of a table,

account_type, with a duplicate distribution scheme. Each element belongs to a different replica set.Figure 1-9 Table with duplicate distribution

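To make the three schemes concrete, the sketch below places rows from the tables named above into replica sets. It is a conceptual model only: the row layouts, the `cust_id` column, the number of replica sets, and the use of `zlib.crc32` as the hash function are all assumptions, not TimesTen Scaleout internals. In the database itself, you choose the scheme in the CREATE TABLE statement.

```python
import zlib
from collections import defaultdict

N_REPLICA_SETS = 3  # hypothetical grid size


def hash_home(key) -> int:
    """Hash scheme: a stable hash of the distribution key picks the replica set."""
    return zlib.crc32(repr(key).encode("utf-8")) % N_REPLICA_SETS


def distribute(customers, accounts, account_types):
    replica_sets = [defaultdict(list) for _ in range(N_REPLICA_SETS)]
    home_of_customer = {}
    for cust in customers:  # customers: hash distribution on cust_id
        rs = hash_home(cust["cust_id"])
        home_of_customer[cust["cust_id"]] = rs
        replica_sets[rs]["customers"].append(cust)
    for acct in accounts:  # accounts: reference distribution, follows its parent row
        rs = home_of_customer[acct["cust_id"]]
        replica_sets[rs]["accounts"].append(acct)
    for rs in replica_sets:  # account_type: duplicate distribution, full copy everywhere
        rs["account_type"].extend(account_types)
    return replica_sets


customers = [{"cust_id": i, "name": f"cust{i}"} for i in range(1, 7)]
accounts = [{"acct_id": 100 + i, "cust_id": (i % 6) + 1} for i in range(12)]
account_types = [{"type": "checking"}, {"type": "savings"}]

for i, rs in enumerate(distribute(customers, accounts, account_types)):
    ids = sorted(c["cust_id"] for c in rs["customers"])
    print(i, ids, len(rs["accounts"]), len(rs["account_type"]))
```

Because each account row is stored with its customer in this model, a join between customers and accounts on `cust_id` can be answered within one replica set, which is the benefit described for the reference scheme.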

Backups

TimesTen Scaleout enables you to create backups of the databases in your grid and restore them to the same grid or another grid with a similar topology. TimesTen Scaleout also enables you to export your databases to a grid with a different topology. You define a repository as a location for your database backups, exports, and collections of log files. Multiple grids may use the same repository.

Internal and external networks

For most production environments, TimesTen Scaleout requires a single private internal network and at least one external network.

- Internal network: Instances in a grid communicate with each other over a single internal network using the TCP protocol. In addition, instances communicate with membership servers through this network. Membership servers use this network to communicate among themselves.
- External networks: Applications use the external network to connect to data instances to access a database. Applications do not need external network access to management instances or membership servers.

See "Network requirements" for more information.

Central configuration of the grid

TimesTen Scaleout maintains a single central configuration of the grid. This configuration is called the model. The model represents the logical topology of a grid. The model contains a set of objects that represent components of a grid, such as installations, hosts, database definitions, and instances.

You can have several different versions of the model. Each time you apply changes to the model, the grid saves the model as a version. Only one version of the model can be active in the grid at any given time.

-

The latest version of the model is the version in which you are making changes that have not yet been applied. While you are modifying a model, this version describes a future desired structure of the grid that only becomes the current model when you apply it.

-

The current version of the model (the model that was most recently applied) always describes the current structure of the grid.

-

Previous model versions describe what the grid structure used to be.
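The version lifecycle can be pictured with a small Python sketch. The `GridModel` class, its dictionary layout, and the version numbers it returns are hypothetical illustrations, not how ttGridAdmin stores the model: edits go only to the latest version, and applying freezes a copy as the current version and moves the old current version into the history.

```python
import copy


class GridModel:
    """Toy model of the grid configuration's version history (hypothetical)."""

    def __init__(self):
        self.latest = {"hosts": set(), "installations": set(), "instances": set()}
        self.current = None   # the most recently applied version
        self.previous = []    # versions that were current in the past

    def add(self, kind, name):
        # Changes only touch the latest version; the grid is unaffected until apply().
        self.latest[kind].add(name)

    def apply(self):
        if self.current is not None:
            self.previous.append(self.current)
        # Freeze a copy as the new current version and keep editing the latest version.
        self.current = copy.deepcopy(self.latest)
        return len(self.previous) + 1   # hypothetical version number of the new current model


model = GridModel()
model.add("hosts", "host1")
print(model.current)                    # None: nothing applied yet
print(model.apply())                    # 1
model.add("hosts", "host2")
print(sorted(model.current["hosts"]))   # ['host1']: host2 exists only in the latest version
print(model.apply())                    # 2
print(len(model.previous))              # 1
```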

To create the desired structure for your grid:

-

You design the desired structure of your grid by adding grid components (such as installations, hosts, and instances) to, or removing them from, the latest model.

-

Once you complete the desired structure of a model, you apply the model to cause these changes to take effect. This version of the model becomes the current version of the model.

-

After you apply the model, TimesTen Scaleout attempts to implement the current model in the operational grid.

It is not guaranteed that all components of the current model are running. For example, if your grid has 10 hosts configured, but only 6 of them are running at the moment, the definition of all 10 is still in the model.
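Because the current model describes the desired grid rather than its running state, comparing the two is a set difference. This sketch reproduces the example of 10 configured hosts with 6 running; the host names and the `not_running` helper are hypothetical, and in practice the running state would come from the grid itself.

```python
def not_running(model_hosts, running_hosts):
    """Return hosts that are defined in the current model but are not running."""
    return set(model_hosts) - set(running_hosts)


# Hypothetical state: 10 hosts in the current model, 6 of them running.
model_hosts = [f"host{i}" for i in range(1, 11)]
running_hosts = [f"host{i}" for i in range(1, 7)]

missing = not_running(model_hosts, running_hosts)
print(len(model_hosts))                           # 10: all remain defined in the model
print(sorted(missing, key=lambda h: int(h[4:])))  # ['host7', 'host8', 'host9', 'host10']
```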

Every time you use the ttGridAdmin utility to add a grid component, such as an installation, host or instance, you add model objects corresponding to these grid components to the model. Each model object specifies the attributes and relationships of the grid component.

Some model objects have relationships to other model objects. Figure 1-10 shows how these relationships are stored between model objects. The host, installation, and instance model objects are related as follows:

-

The installation model object points to the host model object on which it is installed.

-

Both the management instance model object and the data instance model object point to an installation model object of the installation that the instance will use and a host model object on which the instance is installed.

Figure 1-10 shows two different types of relationships between the hosts, installations, and instances that are stored within the model:

-

You install a single installation on a host with one data instance, where the data instance points to the installation and to the host on which it exists.

-

You create multiple data instances on a single host where they all share a single installation. Each data instance points to the same host and the same installation. The installation points to the host on which it is installed. To increase availability, avoid using multiple data instances on a single host.
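The pointers between model objects can be sketched with Python dataclasses. The class and field names are hypothetical and only mirror the relationships described above. The second layout uses a made-up host name, `hostX`, to show several data instances sharing one installation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str


@dataclass(frozen=True)
class Installation:
    name: str
    host: Host                  # an installation points to the host it is installed on


@dataclass(frozen=True)
class Instance:
    name: str
    kind: str                   # "data" or "management"
    installation: Installation  # the installation the instance uses
    host: Host                  # the host the instance is created on


# Layout 1: one installation and one data instance on a host.
host3 = Host("host3")
inst1 = Installation("installation1", host3)
data1 = Instance("instance1", "data", inst1, host3)

# Layout 2: several data instances on one host share one installation (less available).
hostx = Host("hostX")
shared = Installation("installation1", hostx)
data_a = Instance("instance1", "data", shared, hostx)
data_b = Instance("instance2", "data", shared, hostx)


def is_consistent(instance):
    # In both layouts, the instance and its installation point to the same host.
    return instance.installation.host == instance.host


print(all(is_consistent(i) for i in (data1, data_a, data_b)))  # True
print(data_a.installation is data_b.installation)              # True
```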

Any time you add or remove model objects, these changes do not affect the grid until you explicitly apply them. After you apply the changes, TimesTen Scaleout implements the current model in the operational grid. For example, if you add a new installation model object and a new data instance model object to the latest version of the model, applying the changes performs all of the operations necessary to create and initialize both the installation and the data instance on that host.
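One way to picture what applying the model does is to diff the latest version against the current one and derive the operations needed. In this hypothetical sketch, the `pending_operations` helper, the `host/name` keys, and the ordering rule (create installations before the instances that use them, remove instances before their installations) are assumptions for illustration, not documented TimesTen behavior.

```python
def pending_operations(current, latest):
    """List the create/remove operations implied by applying `latest` over `current`."""
    ops = []
    # Assumed ordering: create installations before the instances that use them.
    for kind in ("installations", "instances"):
        for name in sorted(latest[kind] - current[kind]):
            ops.append(("create", kind, name))
    # Assumed ordering: remove instances before the installations they use.
    for kind in ("instances", "installations"):
        for name in sorted(current[kind] - latest[kind]):
            ops.append(("remove", kind, name))
    return ops


current = {"installations": {"host3/installation1"}, "instances": {"host3/instance1"}}
latest = {
    "installations": {"host3/installation1", "host4/installation1"},
    "instances": {"host3/instance1", "host4/instance1"},
}

for op in pending_operations(current, latest):
    print(op)
# ('create', 'installations', 'host4/installation1')
# ('create', 'instances', 'host4/instance1')
```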

Planning your grid

Before you configure a grid and database in TimesTen Scaleout, gather the information necessary for creating a grid:

-

Define the network parameters of each host and membership server

-

Define the locations for the installation directory and instance home of each instance

-

Ensure you have all the information you need to deploy a grid

Determine the number of hosts and membership servers

You need to determine how many hosts and membership servers you are going to use based on these considerations:

-

Membership servers: In a production environment, you need an odd number of membership servers, at least three, to ensure a majority quorum if one or more membership servers fail. You should ensure that:

-

Each membership server uses physical resources (such as power, network nodes, and storage) that are independent of those used by the other membership servers.

-

Membership servers do not run on the same system as hosts with data instances.

-

Management instances: You need two management instances to ensure some measure of availability for the configuration and management capabilities of your grid. Ensure that the hosts with management instances use physical resources (such as power, network nodes, and storage) that are independent of each other.

-

Data instances: You determine the number of hosts you require for data instances based on the level of K-safety and the number of replica sets. For example, if you set k to 2 and you decide to have three replica sets, you need six data instances. Also, the level of K-safety determines how many data space groups, or independent physical locations, you must have for your hosts. Ensure that the hosts with data instances assigned to data space group 1 use physical resources that are independent of those used by the hosts with data instances assigned to data space group 2.
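The sizing rules above reduce to simple arithmetic, sketched here in Python with hypothetical helper names. A majority quorum of n membership servers survives (n - 1) // 2 failures, which is why an even count adds cost without adding tolerance. The data instance count is k times the number of replica sets, and the host total reproduces the 11 hosts of Figure 1-11 when the repository shares a host with a membership server.

```python
def tolerated_failures(membership_servers):
    # A majority quorum stays available while more than half of the servers are up.
    return (membership_servers - 1) // 2


def data_instances_needed(k, replica_sets):
    # Each replica set holds k copies of its data, one per data instance.
    return k * replica_sets


def total_hosts(membership_servers, management_instances, data_instances,
                repository_colocated=True):
    # A co-located repository does not need a host of its own.
    repository_hosts = 0 if repository_colocated else 1
    return membership_servers + repository_hosts + management_instances + data_instances


for n in (3, 4, 5):
    print(n, tolerated_failures(n))   # 3 1, then 4 1, then 5 2

data = data_instances_needed(k=2, replica_sets=3)
print(data)                           # 6
print(total_hosts(3, 2, data))        # 11, as in Figure 1-11
```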

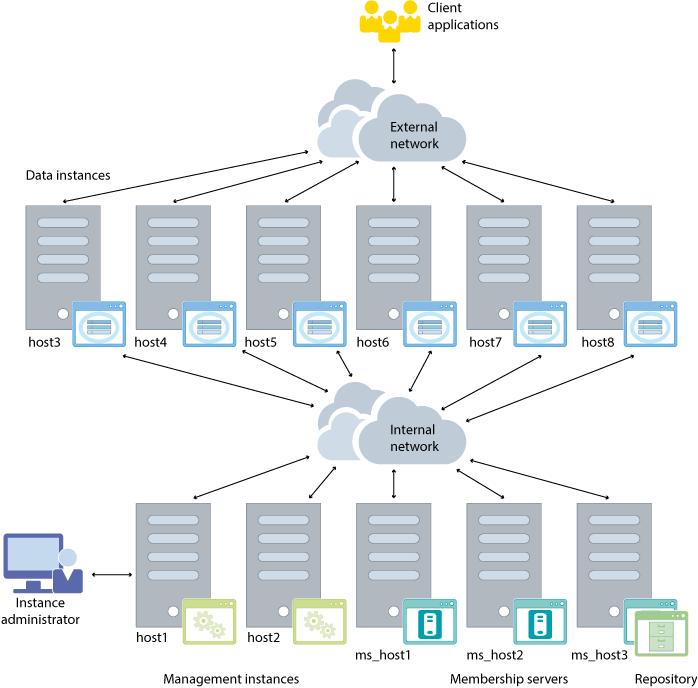

Figure 1-11 shows an example of a setup of three membership servers, one repository, two management instances, and six data instances. The example co-locates a membership server with the repository, for a total of 11 hosts.

Figure 1-12 shows how the hosts with data instances in this example are organized into two data space groups for a grid with k set to 2. The hosts of each data space group share a rack.

Figure 1-12 Example of hosts organized into data space groups


See Table 1-1 for an example of how you might assign the hosts with data instances into data space groups based on the physical resources they share.

Table 1-1 Systems and their roles

| Host name | Membership server | Repository | Management instance | Data instance | Data space group | Physical resources |
|---|---|---|---|---|---|---|
| ms_host1 | Yes | Yes | N/A | N/A | N/A | Rack 1 |
| ms_host2 | Yes | N/A | N/A | N/A | N/A | Rack 2 |
| ms_host3 | Yes | N/A | N/A | N/A | N/A | Rack 2 |
| host1 | N/A | N/A | Yes | N/A | N/A | Rack 1 |
| host2 | N/A | N/A | Yes | N/A | N/A | Rack 2 |
| host3 | N/A | N/A | N/A | Yes | 1 | Rack 1 |
| host4 | N/A | N/A | N/A | Yes | 2 | Rack 2 |
| host5 | N/A | N/A | N/A | Yes | 1 | Rack 1 |
| host6 | N/A | N/A | N/A | Yes | 2 | Rack 2 |
| host7 | N/A | N/A | N/A | Yes | 1 | Rack 1 |
| host8 | N/A | N/A | N/A | Yes | 2 | Rack 2 |
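The placement rule behind Table 1-1 can be checked mechanically. This sketch transcribes the data instance rows of the table and reports any physical resource shared by hosts from different data space groups. The helper names are hypothetical, and a real deployment would track more than a rack label (power, network, and storage).

```python
# (host, data space group, physical resource) for the data instances in Table 1-1.
data_hosts = [
    ("host3", 1, "Rack 1"), ("host4", 2, "Rack 2"),
    ("host5", 1, "Rack 1"), ("host6", 2, "Rack 2"),
    ("host7", 1, "Rack 1"), ("host8", 2, "Rack 2"),
]


def shared_resources(assignments):
    """Return resources used by hosts from more than one data space group."""
    groups_by_resource = {}
    for _host, group, resource in assignments:
        groups_by_resource.setdefault(resource, set()).add(group)
    return sorted(r for r, groups in groups_by_resource.items() if len(groups) > 1)


def hosts_per_group(assignments):
    """Count the hosts assigned to each data space group."""
    counts = {}
    for _host, group, _resource in assignments:
        counts[group] = counts.get(group, 0) + 1
    return counts


print(shared_resources(data_hosts))  # []: no rack mixes the two data space groups
print(hosts_per_group(data_hosts))   # {1: 3, 2: 3}: one host per replica set in each group
```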

Define the network parameters of each host and membership server

Ensure that you know the network addresses and TCP/IP ports that you expect each host and membership server to use. See "Network requirements" for details on using internal and external networks in your grid.

See Table 1-2 for an example of the internal and external addresses of the topology described in Table 1-1.

Table 1-2 Internal and external addresses

| Host name | Internal address | External address |
|---|---|---|
| ms_host1 | | N/A |
| ms_host2 | | N/A |
| ms_host3 | | N/A |
| host1 | | N/A |
| host2 | | N/A |
| host3 | | |
| host4 | | |
| host5 | | |
| host6 | | |
| host7 | | |
| host8 | | |

Note:

All systems must be part of the same private network. It is recommended that you create an external network for applications outside of your private network to connect to your database.

You need to consider which TCP/IP ports each instance will use, especially if your setup is behind a firewall. You must define the TCP/IP ports for the following:

-

Membership servers: You must define three port numbers (client, peer, and leader) for each membership server. See Table 3-1, "zoo.cfg configuration parameters" for details on these port numbers.

-

Management instances: There are three port numbers (daemon, server, and management) for each management instance. TimesTen Scaleout sets the default values for the daemon, server, and management ports if you do not specify them.

-

Data instances: There are two port numbers (daemon and server) for each data instance. TimesTen Scaleout sets the default values for the daemon and server ports if you do not specify them.

If a firewall is in place, you must open on the internal network all the ports mentioned above, except the server ports assigned to each instance, plus the local ephemeral ports. The server ports assigned to each instance must be open on the external network.

See Table 1-3 for an example of the TCP/IP ports assigned to each membership server or instance. The example uses the default values for each port.

| Host name | Membership server (client/peer/leader) | Management instance (daemon/server/management) | Data instance (daemon/server) |
|---|---|---|---|
| ms_host1 | 2181 / 2888 / 3888 | N/A | N/A |
| ms_host2 | 2181 / 2888 / 3888 | N/A | N/A |
| ms_host3 | 2181 / 2888 / 3888 | N/A | N/A |
| host1 | N/A | 6624 / 6625 / 3754 | N/A |
| host2 | N/A | 6624 / 6625 / 3754 | N/A |
| host3 | N/A | N/A | 6624 / 6625 |
| host4 | N/A | N/A | 6624 / 6625 |
| host5 | N/A | N/A | 6624 / 6625 |
| host6 | N/A | N/A | 6624 / 6625 |
| host7 | N/A | N/A | 6624 / 6625 |
| host8 | N/A | N/A | 6624 / 6625 |
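The firewall rule in this section can be expressed as a small Python sketch that splits each component's ports between the two networks, using the default values from Table 1-3. The dictionaries and the `firewall_ports` helper are hypothetical. The local ephemeral port range, which must also be open on the internal network, is left out because it depends on the operating system configuration.

```python
# Default ports per component type, as listed in Table 1-3.
MEMBERSHIP_SERVER = {"client": 2181, "peer": 2888, "leader": 3888}
MANAGEMENT_INSTANCE = {"daemon": 6624, "server": 6625, "management": 3754}
DATA_INSTANCE = {"daemon": 6624, "server": 6625}


def firewall_ports(ports):
    """Return (internal, external) port sets for one component.

    Internal network: every listed port except the instance server port
    (the local ephemeral port range is not modeled here).
    External network: the instance server port only.
    """
    internal = {port for name, port in ports.items() if name != "server"}
    external = {port for name, port in ports.items() if name == "server"}
    return internal, external


for label, ports in [("ms_host1", MEMBERSHIP_SERVER),
                     ("host1", MANAGEMENT_INSTANCE),
                     ("host3", DATA_INSTANCE)]:
    internal, external = firewall_ports(ports)
    print(label, sorted(internal), sorted(external))
# ms_host1 [2181, 2888, 3888] []
# host1 [3754, 6624] [6625]
# host3 [6624] [6625]
```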

Define the locations for the installation directory and instance home of each instance

You must define the locations for the installation directory and the instance home that you expect your grid to use. Defining these locations includes defining the names that TimesTen Scaleout uses to identify them. Consider the following when defining these locations:

-

In the case of the instance home, TimesTen Scaleout adds the instance name to the defined location. For example, if you define /grid as the location for an instance named instance1, the full path for the instance home of that instance becomes /grid/instance1.

-

A similar behavior applies for installation objects. Instead of adding the installation name, TimesTen Scaleout adds the release version to the defined location. For example, if you define /grid as the location of the installation, the full path for the installation becomes /grid/tt18.1.4.1.0.

TimesTen Scaleout creates the locations you define for the installation directory and instance home if they do not exist already.
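The naming rule can be sketched with Python's `pathlib`. The helper names are hypothetical, and the `tt` prefix is inferred from the /grid/tt18.1.4.1.0 example above rather than from a documented rule.

```python
from pathlib import PurePosixPath


def instance_home(location, instance_name):
    # The instance name is appended to the location you define.
    return str(PurePosixPath(location) / instance_name)


def installation_dir(location, release):
    # The release version (prefixed with "tt", per the example) is appended instead of a name.
    return str(PurePosixPath(location) / f"tt{release}")


print(instance_home("/grid", "instance1"))      # /grid/instance1
print(installation_dir("/grid", "18.1.4.1.0"))  # /grid/tt18.1.4.1.0
```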

See Table 1-4 for an example of the locations for the membership server installation. You must create these locations on their respective systems prior to installing the membership server.

Table 1-4 installation of the membership servers

| Host name | Installation location |
|---|---|
| ms_host1 | |
| ms_host2 | |
| ms_host3 | |

See Table 1-5 for an example of the installation directory and instance home locations for the management instances.

Table 1-5 installation directory and instance home of the management instances

| Host name | Installation name | Installation directory | Instance name | Instance home |
|---|---|---|---|---|
| host1 | installation1 | | instance1 | |
| host2 | installation1 | | instance1 | |

See Table 1-6 for an example of the installation directory and instance home locations for the data instances.

Table 1-6 installation directory and instance home of the data instances

| Host name | Installation name | Installation directory | Instance name | Instance home |
|---|---|---|---|---|
| host3 | installation1 | | instance1 | |
| host4 | installation1 | | instance1 | |
| host5 | installation1 | | instance1 | |
| host6 | installation1 | | instance1 | |
| host7 | installation1 | | instance1 | |
| host8 | installation1 | | instance1 | |

See Table 1-7 for an example of the location for the repository.

Ensure you have all the information you need to deploy a grid

To verify that you have all the information you need before you start deploying your grid, answer the questionnaire provided in Table 1-8.

| Question | Source of information |
|---|---|
| What will your K-safety setting be? | |
| How many membership servers will you have? | "Determine the number of hosts and membership servers" and Chapter 3, "Setting Up the Membership Service" |
| How many management instances will you have? | |
| How many replica sets will you have? | |
| Where will you store your database backups? | "Backups" and "Define the locations for the installation directory and instance home of each instance" |
| How many hosts are you going to use for your grid? | |
| Which of those hosts are going to run management instances? | |
| Which of those hosts are going to run data instances? | |
| What will be the data space group assignments of each host with a data instance? | |
| How will you organize your hosts and membership servers across independent physical resources? | |
| Will you use a single network or separate internal and external networks for your grid? | |
| What is the DNS name or IP address of each host and membership server? | "Define the network parameters of each host and membership server" |
| Which TCP/IP ports will you use for each instance? | "Define the network parameters of each host and membership server" |
| What will be the location for the installation files of each membership server? | "Define the locations for the installation directory and instance home of each instance" |
| What will be the locations for the installation directory and instance home of each instance? | "Define the locations for the installation directory and instance home of each instance" |

Database connections

You can access a database either with a direct connection from a data instance or a client/server connection over an external network.

-

Direct connection: An application connects directly to a data instance of a database that it specifies.

An application using a direct connection runs on the same system as the database. A direct connection provides extremely fast performance as no inter-process communication (IPC) of any kind is required. However, if the specified data instance is down, the connection is not forwarded to another data instance and an error is returned.

-

Client/server connection: An application using a client/server connection may run on a host with a data instance or on any host with access to the external network. Client applications are automatically connected to a working data instance.

All exchanges between client and server are sent over a TCP/IP connection. If the client and server reside on separate hosts in the internal network, they communicate by using sockets and TCP/IP.

If a data instance fails, TimesTen Scaleout automatically reconnects to another working data instance. You can configure options to control this process, if necessary.

Note:

If desired, you can specify that a client/server connection connects to a specific data instance.

If your workload only requests data from the local element, then a direct connection is the best method for your application, as it provides faster access than a client/server connection. However, if your application may need to switch to whichever data instance is available, or retrieves data from multiple elements, then a client/server connection may provide better throughput.
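The difference in failure behavior can be sketched in Python. This is a toy model, not a TimesTen driver API: `direct_connect`, `client_server_connect`, and the instance names are hypothetical, and the real client decides where to reconnect according to its own configurable options. A direct connection fails if its one named data instance is down, while a client/server connection moves on to any working data instance.

```python
class InstanceUnavailable(Exception):
    """Raised when no suitable data instance can accept the connection."""


def direct_connect(instance, up):
    # A direct connection targets one data instance and is never forwarded elsewhere.
    if instance not in up:
        raise InstanceUnavailable(f"{instance} is down")
    return instance


def client_server_connect(instances, up):
    # A client/server connection is routed to the first working data instance found.
    for instance in instances:
        if instance in up:
            return instance
    raise InstanceUnavailable("no data instance is available")


instances = ["instance_a", "instance_b", "instance_c"]
up = {"instance_b", "instance_c"}   # instance_a has failed

try:
    direct_connect("instance_a", up)
except InstanceUnavailable as err:
    print("direct:", err)           # direct: instance_a is down
print("client/server:", client_server_connect(instances, up))  # client/server: instance_b
```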

Comparison between TimesTen Scaleout and TimesTen Classic

The term TimesTen alone, without TimesTen Scaleout or TimesTen Classic, typically applies to both single-instance and multiple-instance environments, such as in references to TimesTen utilities, releases, distributions, installations, actions taken by the database, and functionality within the database.

-

TimesTen Scaleout refers to TimesTen In-Memory Database in grid mode. TimesTen Scaleout is a multiple-instance environment that contains distributed databases.

-

TimesTen Classic refers to TimesTen In-Memory Database in classic mode. TimesTen Classic is a single-instance environment with databases as in previous releases.

-

The Oracle TimesTen Application-Tier Database Cache (TimesTen Cache) product combines the responsiveness of TimesTen Classic with the ability to cache subsets of an Oracle database for improved response time in the application tier.

-

TimesTen Scaleout supports most of the features of TimesTen Classic, but it does not support any of the features of TimesTen Cache. The following table lists the features of these products that TimesTen Scaleout does not support or supports only partially:

Note:

For more information about TimesTen Classic features, see the Oracle TimesTen In-Memory Database Operations Guide.

Table 1-9 TimesTen Classic features that are unsupported in TimesTen Scaleout

| TimesTen Classic feature | Supported in TimesTen Scaleout (Y/N) | Description |
|---|---|---|
| Cache Connect option for caching data from the Oracle database | N | None of the features documented in the Oracle TimesTen Application-Tier Database Cache User's Guide are supported for TimesTen Scaleout. However, TimesTen Scaleout provides facilities for loading data from an Oracle Database. |
| Replication: both the active standby pair and classic replication schemes | N | Data protection and fault tolerance can be provided through the K-safety feature of TimesTen Scaleout. Thus, none of the features documented in the Oracle TimesTen In-Memory Database Replication Guide are supported for TimesTen Scaleout. See "K-safety" for more details. |
| Bitmap indexes | N | |
| LOB support | N | TimesTen Scaleout does not support LOB columns in tables. |
| Column-based compression | N | Column-based compression within tables |
| Aging policy for tables | N | |
| RAM policy | N | TimesTen Scaleout supports manually loading and unloading the database through the |
| X/Open XA standard and the Java Transaction API (JTA) | N | |
| TimesTen Classic Transaction Log API (XLA) and the JMS/XLA Java API | N | |
| Oracle Clusterware | N | |
| Index Advisor | N | |
| Online upgrade | N | |
| PL/SQL | Y | While PL/SQL anonymous blocks are fully supported, user-created stored procedures, packages, and functions are not supported. You can, however, call procedures and invoke functions from TimesTen-provided packages from your anonymous blocks. TimesTen Scaleout does not support SQL statements that create, alter, or drop functions, packages, or procedures. |
| SQL statements | Y | TimesTen Scaleout does not support: TimesTen Scaleout partially supports: |

How supported TimesTen features are documented in this book

Throughout the Oracle TimesTen In-Memory Database Scaleout User's Guide, the TimesTen Classic features that are included within TimesTen Scaleout are documented as follows:

-

If the feature is supported completely as it is within TimesTen Classic, this book provides a small section describing the feature with a cross-reference to the description in other TimesTen books, such as the Oracle TimesTen In-Memory Database Operations Guide, Oracle TimesTen In-Memory Database SQL Reference and Oracle TimesTen In-Memory Database Reference.

-

If the feature is used as a base with additional support provided for the unique requirements of TimesTen Scaleout, then the new addition is described and a cross-reference link is provided to the feature in other TimesTen books, such as the Oracle TimesTen In-Memory Database Operations Guide, Oracle TimesTen In-Memory Database SQL Reference and Oracle TimesTen In-Memory Database Reference.

-

If the feature is not supported, no cross-reference is provided in this book.