Configure Tasks

This section covers the creation and configuration of tasks.

About Tasks

Tasks are short, functional blocks of code that you can piece together into a flow as part of a job or promote to jobs themselves.

Tasks are the primary building blocks of all workflows in Oracle AI Data Platform Workbench. The type of task determines the type of code it uses. As part of a job, you connect tasks to determine their sequence and priority when the job is run.

| Task type | Description |
|---|---|
| Notebook task | A task that has been saved to a notebook you can access |
| Python task | A task that uses a snippet of Python code |
| If/else condition | A task that uses if/else conditions |
| Nested job task | A task that uses an existing job and its tasks as a nested task |

When a job has more than one task, you can create task dependencies so that the success or failure of one task triggers subsequent tasks in sequence. Dependencies are available only in jobs that have more than one task. See Create a Notebook Task.

Tasks can run in parallel with one another. To do this, make two or more tasks dependent on the success or failure of the same task in the workflow. Those dependent tasks then run at the same time.

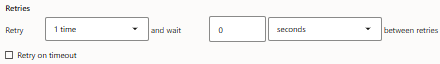
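The relationship between dependencies and parallel execution can be sketched in plain Python. This is an illustration only, not the AI Data Platform Workbench API: the task names and the `execution_waves` helper are hypothetical, and the sketch ignores the success/failure condition on each dependency. It groups tasks into "waves", where every task in a wave depends only on earlier waves and can therefore run at the same time.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def execution_waves(dependencies):
    """Group tasks into waves; tasks in the same wave can run in parallel.

    dependencies maps each task name to the set of tasks it depends on.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        # Every task whose dependencies have all completed is ready now.
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical job: two tasks depend on the same upstream task.
job = {
    "load_data": set(),
    "clean_data": {"load_data"},
    "build_report": {"load_data"},
    "notify": {"clean_data", "build_report"},
}

waves = execution_waves(job)
print(waves)
# [['load_data'], ['build_report', 'clean_data'], ['notify']]
```

Here `clean_data` and `build_report` land in the same wave because both depend only on `load_data`, which mirrors the parallel pattern described above.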

Tasks can fail due to transient issues, such as network disruptions, resource unavailability, or temporary service failures. In these cases, AI Data Platform Workbench automatically retries the task based on the retry policies you configure when you create the task. As part of these policies, you define:

- Retry Count: The maximum number of retry attempts.

- Retry Interval: The wait time between retries.

In addition to standard task retries, AI Data Platform Workbench also supports Retry on timeout. If a task exceeds its execution time limit due to resource constraints or slow processing and you want to retry only for these scenarios, you can choose to automatically trigger a retry. These retry policies enhance workflow resilience, ensuring tasks have a higher chance of successful execution without manual intervention.
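As a rough mental model of these retry policies, the following sketch shows how a retry count, a retry interval, and a retry-on-timeout option could interact. It is not how AI Data Platform Workbench implements retries: `run_with_retries`, `TaskTimeout`, and `flaky_task` are hypothetical names, and the sketch assumes that timeouts are retried only when the retry-on-timeout option is enabled.

```python
import time


class TaskTimeout(Exception):
    """Hypothetical signal that a task exceeded its execution time limit."""


def run_with_retries(task, retry_count, retry_interval,
                     retry_on_timeout=False, sleep=time.sleep):
    """Call task() and retry failed attempts according to a simple policy.

    retry_count: maximum number of retry attempts after the first run.
    retry_interval: seconds to wait between attempts.
    retry_on_timeout: assumption: timeouts are retried only when this is set.
    """
    retries_used = 0
    while True:
        try:
            return task()
        except TaskTimeout:
            # A timeout is re-raised unless timeout retries are enabled.
            if not retry_on_timeout or retries_used >= retry_count:
                raise
        except Exception:
            # Any other failure is retried until the retry count is exhausted.
            if retries_used >= retry_count:
                raise
        retries_used += 1
        sleep(retry_interval)


# Hypothetical task that fails twice with a transient error, then succeeds.
attempts = []


def flaky_task():
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("transient network disruption")
    return "succeeded"


result = run_with_retries(flaky_task, retry_count=3, retry_interval=0)
print(result, "after", len(attempts), "attempts")  # succeeded after 3 attempts
```

With a retry count of 3, the task is attempted at most four times in total: the initial run plus three retries.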

When and How to Use Compute Logs

Check your compute logs if your task fails with resource-related or system-related errors, such as out-of-memory errors or CPU usage exceeding limits.

Review Spark logs if you see long wait times, unexpected retries, or job performance bottlenecks. These logs provide insight into the driver and worker nodes of the compute cluster backing your task and can help identify the source of possible problems.

For guidance on how to check your logs, see Monitor a Specific Job Run.

You must have the appropriate compute-level RBAC permissions to view metadata and logs for the compute instance associated with the job. If you are unable to view compute logs, contact your administrator to obtain these permissions. For more information, see About Permissions.

Create a Notebook Task

You can create tasks from notebooks you have built in AI Data Platform Workbench.

Create a Nested Job Task

You can use an existing workflow job and its tasks as a nested task within another workflow.

Create an If/Else Task

You can create a task that uses if/else conditions based on catalog data to determine if the task triggers.

Modify a Task

You can change the existing attributes of a task, such as its name, type, and parameters, to alter how it functions in your job.

- On the Home page, click Workflow.

- Click the job whose tasks you want to configure.

- In the Tasks tab, click the task you want to edit.

- In the Task Details pane on the right, modify the task attributes as needed. Changes are saved automatically.