33 Testing APIs and Mobile Backends

Use Case: End-to-End Testing

In this scenario, FixItFast (FiF) is a company that supplies maintenance services for large in-house appliances. To help facilitate speed and quality of service, FiF has requested a set of mobile apps for communicating and coordinating service requests. These mobile apps will be used by customers, customer representatives, and technicians.

Eric, the enterprise architect at FiF, has been assigned the task of designing a convenient mobile interface for reporting household appliance issues. The interface must tie in with the existing repair dispatch service and an appliance manual service. Although the MCS UI makes ad hoc testing easy, Eric puts strong emphasis on well-documented, traceable, repeatable tests that can be easily automated. To encourage his team to pursue these goals, he puts significant effort into defining tests that address business rules, security, scalability, and performance when he designs the overall end-to-end mobile solution.

Using Eric’s specifications, Mia, the mobile app developer, designs the endpoints for the custom API. One of the apps that uses the API is for customers, another is for customer reps, and another is for the FiF technicians. Therefore, Mia must configure the appropriate security for each endpoint. Some endpoints can be used by only one role, and others can be used by two or more roles. She uses Eric’s test designs to create the mock data for the API. She then uses the test UI to verify her design, being sure to include security tests for any roles that she has associated with the endpoints.

As soon as the custom API is created with its mock data, Mia creates a mobile backend and associates it with the API. She then uses a REST testing library to implement the test suite that Eric designed for the API, and she performs the initial regression tests, which, at this point, use the API’s mock data. From this point forward, Mia will monitor the backend’s diagnostics page so that she can address runtime issues early, especially those caused by reconfiguring storage, realms, API roles, security policies, or service policies.

Before he starts implementing the code for the custom API, Jeff, the service developer, creates the connectors for the web services that feed data to the API, such as the repair dispatch service and the appliance manual service. Jeff uses the test UI for ad hoc verification that the connectors work as expected. He also wants to implement formal tests for those connectors; these tests only check that the connectors are in a good state. To build the tests, Jeff creates a custom API for each connector. For each of his test APIs, he creates a Node.js module that serves as a pass-through for the HTTP requests. He then creates connector tests and adds them to the test suite to ensure that the connectors return the expected results. If issues arise, the tests can help determine whether the problem stems from the web service or from the code that uses the connector. After the connectors are moved to a runtime environment, the tests will be used to ensure that the web services used by the connectors still work as expected.

It’s Jeff’s job to implement the code for the custom API that Mia designed. Before and during custom code implementation, Jeff uses the test UI to perform ad hoc testing. He also creates white box tests to cover code paths in depth. After each upload of the implementation zip file, Jeff makes it a practice to run the original black box tests and the new white box tests to ensure the integrity of the whole implementation. Jeff also makes the tests available to the quality assurance team.

Amanda, the mobile cloud administrator, creates pre- and post-publish checklists for the mobile backend, the associated APIs, the custom code implementation, and the connectors that the APIs depend on. These checklists use the API, connector, and mobile backend tests. Amanda is concerned not only with verifying functionality but also with verifying other specified characteristics, such as timing. She also creates checklists to be performed before and after moving to production, keeping in mind that differences in environment security policies, service policies, and user management configurations can introduce new issues.

Last, Amanda schedules automated tests to monitor for unexpected failures, such as those caused by larger-than-expected requests or a connector that uses a third-party web service that has become unavailable. These tests, along with the live performance data that the Administration page provides, are part of Amanda’s toolbox for ensuring a healthy system.

How Can I Test an API?

The Oracle Mobile Cloud Service (MCS) UI has test pages for testing the endpoints of the platform, connector, and custom APIs. You also can use HTTP tools such as cURL to test the platform and custom APIs remotely.

Testing a Platform or Custom API from the UI

Every platform and custom API has a test page that you can use to send a request to any of the API endpoints and view the response.

Testing a Connector API from the UI

Every connector has a test page that you can use to send a request to any of the API's endpoints and view its response. This test page enables you to determine if the connector is configured correctly. You can also use it to troubleshoot custom code that uses the connector. If the custom code response is not what you expected, then you can compare it with the test page response to determine whether your code introduced a bug.

To learn how to test a connector from the UI, see Testing the REST Connector API.

Testing Platform and Custom APIs Remotely

The MCS test pages are great for ad hoc testing, and the MCS Custom Code Test Tools help with iterative testing and debugging of custom code. However, for formal testing, you need to set up tests that access the platform and custom APIs remotely. By formalizing the tests, you ensure that the entire test suite is exercised on every run and that the expected results are documented. In addition, you can set up the tests to run automatically. Another reason to run the tests remotely is that the MCS test UI automates some actions or performs them differently, so UI tests might not be true end-to-end simulations of external requests. For example, when you test a custom API from the MCS UI, some of the authentication setup is done automatically. Last, you must test remotely if you need to add or modify a header that the UI doesn’t provide a field for.
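As a minimal sketch of a remote test, the following Python code builds (but doesn't send) a request for a custom API endpoint using only the standard library. It assumes HTTP Basic authentication, the `/mobile/custom/{api}/{endpoint}` URI pattern, and an `Oracle-Mobile-Backend-ID` header carrying the mobile backend ID; treat all three as assumptions to confirm against your mobile backend's settings and the authentication type you use. The base URL, API name, backend ID, and credentials are hypothetical placeholders.

```python
import base64
import urllib.request


def build_custom_api_request(base_url: str, api_name: str, endpoint: str,
                             backend_id: str, username: str, password: str,
                             method: str = "GET") -> urllib.request.Request:
    """Build a request for a custom API endpoint using HTTP Basic auth.

    The URI pattern and the backend ID header name are assumptions for
    illustration; confirm them for your environment.
    """
    url = f"{base_url.rstrip('/')}/mobile/custom/{api_name}/{endpoint.lstrip('/')}"
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    headers = {
        "Authorization": f"Basic {token}",
        "Oracle-Mobile-Backend-ID": backend_id,  # assumed header name
        "Accept": "application/json",
    }
    return urllib.request.Request(url, headers=headers, method=method)


# Hypothetical values for illustration only.
req = build_custom_api_request(
    "https://mcs.example.com", "fixitfast", "incidents",
    backend_id="hypothetical-backend-id", username="tech1", password="secret")
print(req.full_url)      # https://mcs.example.com/mobile/custom/fixitfast/incidents
print(req.get_method())  # GET
# To run the test for real, pass req to urllib.request.urlopen() and assert on the status.
```

Building the request separately from sending it keeps each test case inspectable and makes it easy to reuse the same request in both the mock-data and the implemented-API runs.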

The way you remotely access a platform or custom API endpoint depends on the type of authentication that you want to use. See:

When you create a custom API, MCS creates a mock implementation that you can use for testing before you implement the custom code. You also can use the mock implementation to configure a response for a mobile application test case. After you have uploaded an implementation, you can switch to the mock implementation for testing purposes by making it the default. For more information, see Testing with Mock Data.

If your request is in a test suite, then you can put the name of the test suite in the Oracle-Mobile-Diagnostic-Session-ID header. The name appears as the app session ID in the log messages. This lets you filter the log data on the Logs page by entering the test suite name in the Search text box. Also, when you are viewing a message’s details, you can click the app session ID in the message to view all the messages with that ID. For more information about using the Oracle-Mobile-Diagnostic-Session-ID header, see How Client SDK Headers Enable Device and Session Diagnostics.
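A minimal sketch of tagging a whole suite, assuming your harness builds each request with Python's `urllib.request`: the hypothetical helper below sets the `Oracle-Mobile-Diagnostic-Session-ID` header on every request, so the suite name (here the hypothetical `appliance-incidents-regression`) shows up as the app session ID in the logs.

```python
import urllib.request

DIAGNOSTIC_HEADER = "Oracle-Mobile-Diagnostic-Session-ID"


def tag_with_suite(requests: list[urllib.request.Request], suite_name: str) -> None:
    """Set the diagnostic session header on every request in a test suite."""
    for req in requests:
        req.add_header(DIAGNOSTIC_HEADER, suite_name)


# Hypothetical endpoints for illustration only.
suite = [
    urllib.request.Request("https://mcs.example.com/mobile/custom/fixitfast/incidents"),
    urllib.request.Request("https://mcs.example.com/mobile/custom/fixitfast/technicians"),
]
tag_with_suite(suite, "appliance-incidents-regression")

# urllib stores header names via str.capitalize(); HTTP header names are
# case-insensitive, so the server still receives the same header.
print(suite[0].get_header(DIAGNOSTIC_HEADER.capitalize()))  # appliance-incidents-regression
```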

Troubleshooting Unexpected Test Results

When a test fails for a request, examine the response’s HTTP status code and the returned data to identify the issue. Status codes in the 200 range indicate success. Status codes in the 400 range indicate a client error, where the calling client has done something that the server doesn’t expect or won’t allow. Depending on the 4xx error, the fix might be to correct custom code, give a user the necessary privileges, or reconfigure the server to allow requests of that type. Status codes in the 500 range indicate that the server encountered a problem that it couldn’t resolve; for example, the error might require reconfiguring server settings. Here are some common standard HTTP error codes and their meanings:

| Status Code | Description |
|---|---|
| 400 Bad Request | General error when fulfilling the request would cause an invalid state, such as missing data or a validation error. |
| 401 Unauthorized | Error due to a missing or invalid authentication token. |
| 403 Forbidden | Error because the user isn’t authorized or the resource is unavailable. |
| 404 Not Found | Error because the resource wasn’t found. |
| 405 Method Not Allowed | Error because, although the requested URL exists, the HTTP method isn’t applicable. |
| 500 Internal Server Error | General error when an exception is thrown on the server side. |
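When a test harness reports failures, it can help to map each status code onto the categories described above. The following Python sketch uses the standard library's `http.HTTPStatus` for reason phrases; the hint text paraphrases the table, and the function names `classify` and `describe_failure` are hypothetical.

```python
from http import HTTPStatus

# Hints paraphrase the status code table; they're starting points, not diagnoses.
HINTS = {
    400: "Invalid state, such as missing data or a validation error.",
    401: "Missing or invalid authentication token.",
    403: "User isn't authorized, or the resource is unavailable.",
    404: "Resource not found.",
    405: "URL exists, but the HTTP method isn't applicable.",
    500: "Exception thrown on the server side.",
}


def classify(status: int) -> str:
    """Return the broad class of an HTTP status code."""
    if 200 <= status < 300:
        return "success"
    if 300 <= status < 400:
        return "redirection"
    if 400 <= status < 500:
        return "client error"
    if 500 <= status < 600:
        return "server error"
    return "other"


def describe_failure(status: int) -> str:
    """Build a one-line failure message for a test report."""
    try:
        phrase = HTTPStatus(status).phrase
    except ValueError:  # status code not known to the standard library
        phrase = "Unknown"
    hint = HINTS.get(status, "Check the response body and the related log messages.")
    return f"{status} {phrase} ({classify(status)}): {hint}"


print(describe_failure(405))
# 405 Method Not Allowed (client error): URL exists, but the HTTP method isn't applicable.
```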

To pinpoint where the error occurred, open the mobile backend, click Diagnostics, and then click Requests. Next, find the request, click View related log entries in the Related column, and then select Log Messages Related by API Request. To see a message’s details, click the time stamp. From the Message Details dialog, you can click the up and down arrows to see all the related log messages. Note that if a request doesn’t contain enough information for MCS to determine the associated backend, then the related log messages appear only in the Logs page that is available from the Administration page.

If you are a mobile cloud administrator, you can view the log from the Administration page. Open the side menu, click Administration, and then click Request History.

For details about how to use the diagnostic logs, see Viewing Log Messages.

If you don’t see any messages that help identify the source of the problem, you can switch to a finer logging level. Click Log Level on the Logs page, change the log level for the mobile backend, and then rerun the test. If you’re troubleshooting custom code, you can add your own log messages to the custom code, as described in Inserting Logging Into Custom Code, to help identify the code that’s causing the problem.

When troubleshooting an unexpected result, consider that the cause might be a rerouting of the call to the mobile backend, as described in Making Changes After a Backend is Published (Rerouting). If the mobile backend was rerouted, check whether the following conditions are met:

- If the API was accessed using social identity, then the provider access token in the Authorization header must be the access token of the provider of the target mobile backend (that is, the mobile backend to which the original mobile backend was rerouted).
- If the API was accessed by a mobile user, then the user must be a member of the realm that is associated with the target mobile backend (the mobile backend to which the original mobile backend was rerouted).
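If your tests might run against a rerouted backend, a pre-check can flag the two conditions above before you dig into the logs. The following Python sketch is illustrative only: `TargetBackend`, `TestCaller`, and `rerouting_problems` are hypothetical names, MCS doesn't expose these objects, and you would fill them in from your own test configuration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TargetBackend:
    """Hypothetical model of the mobile backend that the rerouted call reaches."""
    name: str
    realm: str
    social_provider: Optional[str] = None  # provider used for social identity


@dataclass
class TestCaller:
    """Hypothetical model of how the test request was authenticated."""
    uses_social_identity: bool = False
    token_provider: Optional[str] = None  # provider that issued the access token
    is_mobile_user: bool = False
    user_realms: set[str] = field(default_factory=set)  # realms the user belongs to


def rerouting_problems(caller: TestCaller, target: TargetBackend) -> list[str]:
    """Return a message for each rerouting condition that isn't met."""
    problems = []
    if caller.uses_social_identity and caller.token_provider != target.social_provider:
        problems.append(
            f"Access token is from {caller.token_provider!r}, "
            f"but {target.name} uses {target.social_provider!r}.")
    if caller.is_mobile_user and target.realm not in caller.user_realms:
        problems.append(f"User isn't a member of realm {target.realm!r} used by {target.name}.")
    return problems


# Hypothetical names for illustration only.
target = TargetBackend("FiF_Customer_v2", realm="FiFRealm2", social_provider="ExampleProvider")
caller = TestCaller(is_mobile_user=True, user_realms={"FiFRealm1"})
print(rerouting_problems(caller, target))
```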

Tip:

If, in a request, you set the Oracle-Mobile-Diagnostic-Session-ID header to an identifier for the test suite, that value is displayed in the message detail as the app session ID. If you click the app session ID in a message detail, then you can click the up and down arrows to view all the messages for that ID. You can also enter the ID in the Search field to display only the log messages with that ID. For more information about using the Oracle-Mobile-Diagnostic-Session-ID header, see How Client SDK Headers Enable Device and Session Diagnostics.

These topics contain information about troubleshooting specific APIs:

Monitoring Runtime Issues and System Health

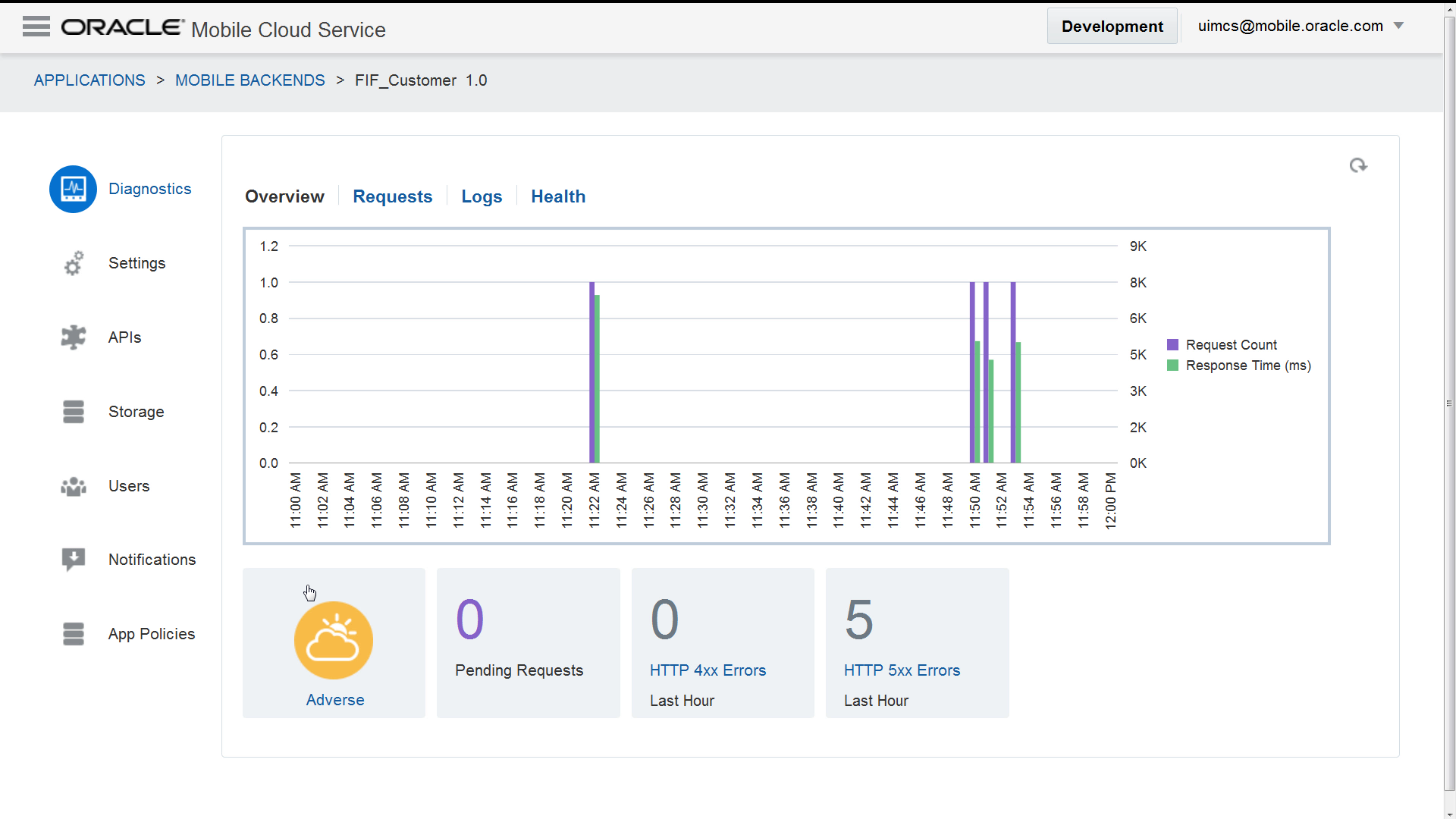

In addition to monitoring the results of white box and black box testing, you, as a mobile app developer or a mobile cloud administrator, will want to see live performance data to immediately address runtime issues, such as poor response times.

MCS provides two types of high-level monitoring consoles that display traffic-light indicators of overall environment health, timelines that plot requests and responses, and counters of requests that result in HTTP 4xx and HTTP 5xx errors.

-

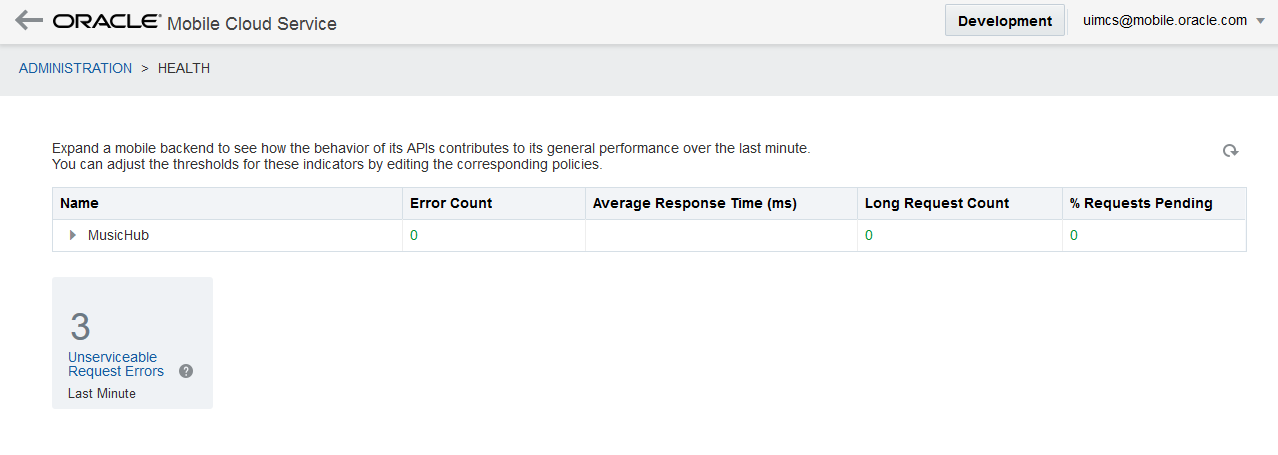

The Health page, which is available from the Administration page, lets mobile cloud administrators monitor and troubleshoot the overall system health.

-

The mobile backend Diagnostics page lets mobile developers monitor a mobile backend's health and troubleshoot issues with its associated mobile apps.

For in-depth information about these views and how you can use them, see Monitoring a Selected Backend, Viewing API Performance, and Viewing Status Codes for API Calls and Outbound Connector Calls.

Monitoring Overall Health

Green, amber, and red traffic-light indicators depict the overall health of an environment for the last minute. MCS bases this view on the health metrics for that environment. When the number of errors or the current request or response times exceed configured performance thresholds, the console changes the indicator from green (normal) to amber (adverse) or red (severe). For example, if the error count in the last minute is greater than 0, then the indicator is amber. If there are more than 9 errors in the last minute, then the indicator is red. To learn about the thresholds and how they affect the color of the indicators, see What Do the Health Indicator Thresholds Mean?
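To make the example thresholds concrete, the following Python sketch maps an error count for the last minute to an indicator color. It considers only the error count and hard-codes the example values from the text (more than 0 errors is amber, more than 9 is red); the real indicator also weighs request and response times, and the thresholds are configurable, so treat these constants and the function name as hypothetical.

```python
from enum import Enum


class Health(Enum):
    GREEN = "normal"
    AMBER = "adverse"
    RED = "severe"


# Example thresholds from the text; in MCS they're configurable, so these are placeholders.
AMBER_IF_ERRORS_OVER = 0
RED_IF_ERRORS_OVER = 9


def error_indicator(errors_last_minute: int) -> Health:
    """Return the indicator color implied by the error count alone."""
    if errors_last_minute > RED_IF_ERRORS_OVER:
        return Health.RED
    if errors_last_minute > AMBER_IF_ERRORS_OVER:
        return Health.AMBER
    return Health.GREEN


for count in (0, 3, 10):
    print(count, error_indicator(count).name)
# 0 GREEN
# 3 AMBER
# 10 RED
```

Checking the more severe threshold first keeps the comparisons simple: any count that passes the red check is never reported as amber.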

To see more detailed metrics for the last minute, such as the number of errors, the average duration of requests, the number of pending requests, and the number of long-running requests, hover over the indicator.

Monitoring Server Load and Request Backlog

To help monitor the server load and request backlog, the timeline plots the request counts and response times that occurred for the last hour, and the view displays the count of pending requests. To investigate a performance issue, click Requests or Request History to drill down to the request log.

Monitoring Mobile Backend and API Health

To see the error count, average response time, long request count, and percentage of pending requests for a mobile backend, click the traffic-light indicator to view the Health page. (The Health page shows all active mobile backends, whereas the Diagnostics view for a mobile backend shows just that backend.) To see the same information for the mobile backend's APIs and their endpoints, expand the entry for the mobile backend.

To investigate the cause of an error, click Logs to drill down to the error log. To investigate a performance issue, click Requests or Request History to drill down to the request log.