Chapter 1 Concept, Architecture and Life Cycle of Oracle Private Cloud Appliance

This chapter describes what Oracle Private Cloud Appliance is, which hardware and software it consists of, and how it is deployed as a virtualization platform.

1.1 What is Oracle Private Cloud Appliance

Responding to the Cloud Challenges

Cloud architectures and virtualization solutions have become highly sophisticated and complex to implement. They require a skill set that no single administrator has had to acquire in traditional data centers: system hardware, operating systems, network administration, storage management, applications. Without expertise in every single one of those domains, an administrator cannot take full advantage of the features and benefits of virtualization technology. This often leads to poor implementations with sub-optimal performance and reliability, which impairs the flexibility of a business.

Aside from the risks created by technical complexity and lack of expertise, companies also suffer from an inability to deploy new infrastructure quickly enough to suit their business needs. The administration involved in the deployment of new systems, and the time and effort to configure these systems, can amount to weeks. Provisioning new applications into flexible virtualized environments, in a fraction of the time required for physical deployments, generates substantial financial benefits.

Fast Deployment of Converged Infrastructure

Oracle Private Cloud Appliance is an offering that industry analysts refer to as a Converged Infrastructure Appliance: an infrastructure solution in the form of a hardware appliance that comes from the factory pre-configured. It enables the operation of the entire system as a single unit, not a series of individual servers, network hardware and storage providers. Installation, configuration, high availability, expansion and upgrading are automated and orchestrated to an optimal degree. Within a few hours after power-on, the appliance is ready to create virtual servers. Virtual servers are commonly deployed from virtual appliances, in the form of Oracle VM templates (individual pre-configured VMs) and assemblies (interconnected groups of pre-configured VMs).

Modular Implementation of a Complete Stack

With Oracle Private Cloud Appliance, Oracle offers a unique full stack of hardware, software, virtualization technology and rapid application deployment through virtual appliances. All this is packaged in a single modular and extensible product. The minimum configuration consists of a base rack with infrastructure components, a pair of management nodes, and two compute nodes. This configuration can be extended by one compute node at a time. All rack units, whether populated or not, are pre-cabled and pre-configured at the factory in order to facilitate the installation of expansion compute nodes on-site at a later time.

Ease of Use

The primary value proposition of Oracle Private Cloud Appliance is the integration of components and resources for the purpose of ease of use and rapid deployment. It should be considered a general-purpose solution in the sense that it supports the widest variety of operating systems, including Windows, and any application they might host. Customers can attach their existing storage or connect new storage solutions from Oracle or third parties.

1.2 Hardware Components

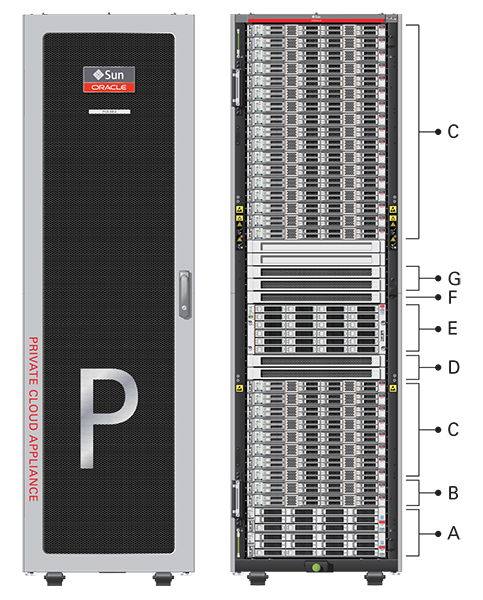

The current Oracle Private Cloud Appliance hardware platform, with factory-installed Controller Software Release 2.4.x, consists of an Oracle Rack Cabinet 1242 base, populated with the hardware components identified in Figure 1.1. Previous generations of hardware components continue to be supported by the latest Controller Software, as described below.

| Item | Quantity | Description |
|---|---|---|
| A | 2 | Oracle ZFS Storage Appliance ZS7-2 controller server |
| B | 2 | Oracle Server X8-2, used as management nodes |
| C | 2-25 | Oracle Server X8-2, used as virtualization compute nodes (Due to the power requirements of the Oracle Server X8-2, if the appliance is equipped with 22kVA PDUs, the maximum number of compute nodes is 22. With 15kVA PDUs the maximum is 13 compute nodes.) |
| D | 2 | Cisco Nexus 9336C-FX2 Switch, used as leaf/data switches |
| E | 1 | Oracle ZFS Storage Appliance ZS7-2 disk shelf |
| F | 1 | Cisco Nexus 9348GC-FXP Switch |
| G | 2 | Cisco Nexus 9336C-FX2 Switch, used as spine switches |
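The PDU-dependent limits in row C can be expressed as a simple planning check. The sketch below is a hypothetical helper, not part of the Controller Software; it only encodes the numbers stated in the table (a minimum of two compute nodes, 22 nodes with 22kVA PDUs, 13 nodes with 15kVA PDUs) and refuses any other PDU rating rather than guessing a limit.

```python
# Hypothetical planning helper; the limits come from row C of the table above.
MIN_COMPUTE_NODES = 2
HARDWARE_MAX_COMPUTE_NODES = 25  # rack capacity for compute nodes

# PDU rating in kVA -> maximum number of Oracle Server X8-2 compute nodes
PDU_COMPUTE_NODE_LIMITS = {22: 22, 15: 13}


def max_compute_nodes(pdu_kva: int) -> int:
    """Return the documented compute node maximum for a given PDU rating."""
    if pdu_kva not in PDU_COMPUTE_NODE_LIMITS:
        # No limit is documented for other ratings, so refuse instead of guessing.
        raise ValueError(f"no documented limit for {pdu_kva}kVA PDUs")
    return min(HARDWARE_MAX_COMPUTE_NODES, PDU_COMPUTE_NODE_LIMITS[pdu_kva])


def is_valid_node_count(count: int, pdu_kva: int) -> bool:
    """Check a planned compute node count against the minimum and the PDU limit."""
    return MIN_COMPUTE_NODES <= count <= max_compute_nodes(pdu_kva)


print(max_compute_nodes(15))        # 13
print(is_valid_node_count(20, 22))  # True
print(is_valid_node_count(14, 15))  # False
```

With either documented PDU rating, the power budget, not the rack space, is the binding limit for X8-2 compute nodes.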

Support for Previous Generations of Hardware Components

The latest version of the Oracle Private Cloud Appliance Controller Software continues to support all earlier configurations of the hardware platform. These may include the following components:

| Component Type | Component Name and Minimum Software Version |
|---|---|
| Management Nodes | |
| Compute Nodes | |
| Storage Appliance | |
| InfiniBand Network Hardware | |
| Internal Management Switch | |

1.2.1 Management Nodes

At the heart of each Oracle Private Cloud Appliance installation is a pair of management nodes. They are installed in rack units 5 and 6 and form a cluster in active/standby configuration for high availability: both servers are capable of running the same services and have equal access to the system configuration, but one operates as the active node while the other is ready to take over the active functions in case a failure occurs. The active management node runs the full set of services required, while the standby management node runs a subset of services until it is promoted to the active role. The active role is determined at boot through OCFS2 Distributed Lock Management on an iSCSI LUN, which both management nodes share on the ZFS Storage Appliance installed inside the rack. Because rack units are numbered from the bottom up, and the bottom four are occupied by components of the ZFS Storage Appliance, the active management node is typically the server in rack unit 5. It is the only server in the entire appliance that the administrator must power on to bring the system online.
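The role election can be pictured as two nodes racing for one exclusive lock on shared storage: whichever node obtains the lock becomes active, and the other stays on standby until the lock is released. The Python sketch below is a conceptual model only; it does not use the OCFS2 Distributed Lock Manager, and the class, function and node names (`SharedLock`, `elect`, `mn-u5`, `mn-u6`) are hypothetical.

```python
import threading
from typing import Dict, List, Optional


class SharedLock:
    """In-memory stand-in for the cluster lock on the shared iSCSI LUN (conceptual model)."""

    def __init__(self) -> None:
        self._guard = threading.Lock()      # protects holder against concurrent callers
        self.holder: Optional[str] = None   # node that currently owns the lock

    def try_acquire(self, node: str) -> bool:
        """Non-blocking attempt; True if the node now holds (or already held) the lock."""
        with self._guard:
            if self.holder is None:
                self.holder = node
            return self.holder == node

    def release(self, node: str) -> None:
        """Release the lock, for example when the holder fails or shuts down."""
        with self._guard:
            if self.holder == node:
                self.holder = None


def elect(lock: SharedLock, nodes: List[str]) -> Dict[str, str]:
    """Each node tries the lock at boot: the winner is active, the rest are standby."""
    return {node: "active" if lock.try_acquire(node) else "standby" for node in nodes}


lock = SharedLock()
print(elect(lock, ["mn-u5", "mn-u6"]))  # {'mn-u5': 'active', 'mn-u6': 'standby'}

# Simulated failure of the active node: its lock is released and the standby takes over.
lock.release("mn-u5")
print(lock.try_acquire("mn-u6"))        # True
```

In this model the first node to try the lock wins, which is consistent with the server in rack unit 5, the one the administrator powers on, typically ending up active.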

For details about how high availability is achieved with Oracle Private Cloud Appliance, refer to Section 1.5, “High Availability”.

When you power on the Oracle Private Cloud Appliance for the first time, you can change the factory default IP configuration of the management node cluster, so that it can be easily reached from your data center network. The management nodes share a Virtual IP, where the management web interface can be accessed. This Virtual IP is assigned to whichever server has the active role at any given time. During system initialization, after the management cluster is set up successfully, the active management node loads Oracle VM and its associated MySQL database, as well as a number of Oracle Linux services, including network, sshd, ntpd, iscsi initiator and dhcpd, to orchestrate the provisioning of all system components. During provisioning, all networking and storage are configured, and all compute nodes are discovered, installed and added to an Oracle VM server pool. All provisioning configurations are preloaded at the factory and should not be modified by the customer.

For details about the provisioning process, refer to Section 1.4, “Provisioning and Orchestration”.

1.2.2 Compute Nodes

The compute nodes in the Oracle Private Cloud Appliance constitute the virtualization platform. The compute nodes provide the processing power and memory capacity for the virtual servers they host. The entire provisioning process is orchestrated by the management nodes: compute nodes are installed with Oracle VM Server 3.4.x and additional packages for Software Defined Networking. When provisioning is complete, the Oracle Private Cloud Appliance Controller Software expects all compute nodes in the same rack to be part of the same Oracle VM server pool.

For hardware configuration details of the compute nodes, refer to Server Components in the Introduction to Oracle Private Cloud Appliance Installation section of the Oracle Private Cloud Appliance Installation Guide.

The Oracle Private Cloud Appliance Dashboard allows the administrator to monitor the health and status of the compute nodes, as well as all other rack components, and perform certain system operations. The virtual infrastructure is configured and managed with Oracle VM Manager.

The Oracle Private Cloud Appliance offers modular compute capacity that can be increased according to business needs. The minimum configuration of the base rack contains just two compute nodes, but it can be expanded by one node at a time up to 25 compute nodes. Apart from the hardware installation, adding compute nodes requires no intervention by the administrator. New nodes are discovered, powered on, installed and provisioned automatically by the active management node. The additional compute nodes are integrated into the existing configuration and, as a result, the Oracle VM server pool offers increased capacity for more or larger virtual machines.
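The automatic expansion workflow amounts to a fixed sequence of steps per new node, ending with the node joining the existing server pool. The following sketch models that sequence; `NodeState`, `ServerPool` and `provision_new_node` are hypothetical names, the node names are placeholders, and the loop body only stands in for the work the active management node actually performs (discovery, power-on, installation of Oracle VM Server and SDN packages, provisioning).

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List

MAX_COMPUTE_NODES = 25  # upper bound for compute nodes in one rack


class NodeState(Enum):
    DISCOVERED = 1
    POWERED_ON = 2
    INSTALLED = 3     # Oracle VM Server plus SDN packages
    PROVISIONED = 4


@dataclass
class ServerPool:
    members: List[str] = field(default_factory=list)


def provision_new_node(pool: ServerPool, node: str) -> List[NodeState]:
    """Walk one newly cabled compute node through the steps, then add it to the pool."""
    if len(pool.members) >= MAX_COMPUTE_NODES:
        raise RuntimeError("the rack already holds the maximum number of compute nodes")
    if node in pool.members:
        raise ValueError(f"{node} is already a member of the server pool")
    completed = []
    for state in NodeState:          # Enum iteration follows definition order
        # Placeholder: the real orchestration performs and verifies each step here.
        completed.append(state)
    pool.members.append(node)        # the pool now offers the extra capacity
    return completed


pool = ServerPool(["cn01", "cn02"])  # minimum configuration: two compute nodes
provision_new_node(pool, "cn03")     # expansion by one node at a time
print(len(pool.members))             # 3
```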

As a further expansion option, the Oracle Server X8-2 compute nodes can be ordered with pre-installed fibre channel cards, or equipped with fibre channel cards after installation. Once these compute nodes are integrated in the Oracle Private Cloud Appliance environment, the fibre channel HBAs can connect to standard FC switches and storage hardware in your data center. External FC storage configuration is managed through Oracle VM Manager. For more information, refer to the Fibre Channel Storage Attached Network section of the Oracle VM Concepts Guide.

When using expansion nodes containing fibre channel cards in a system with InfiniBand-based network architecture, the vHBAs must be disabled on those compute nodes.

Because of the diversity of possible virtualization scenarios it is difficult to quantify the compute capacity as a number of virtual machines. For sizing guidelines, refer to the chapter entitled Configuration Maximums in the Oracle Private Cloud Appliance Release Notes.

1.2.3 Storage Appliance

The Oracle Private Cloud Appliance Controller Software continues to provide support for previous generations of the ZFS Storage Appliance installed in the base rack. However, there are functional differences between the Oracle ZFS Storage Appliance ZS7-2, which is part of systems with an Ethernet-based network architecture, and the previous models of the ZFS Storage Appliance, which are part of systems with an InfiniBand-based network architecture. For clarity, this section describes the different storage appliances separately.

1.2.3.1 Oracle ZFS Storage Appliance ZS7-2

The Oracle ZFS Storage Appliance ZS7-2, which consists of two controller servers installed at the bottom of the appliance rack and a disk shelf about halfway up, fulfills the role of 'system disk' for the entire appliance. It is crucial in providing storage space for the Oracle Private Cloud Appliance software.

A portion of the disk space, 3TB by default, is made available for customer use and is sufficient for an Oracle VM storage repository with several virtual machines, templates and assemblies. The remainder of the approximately 100TB of total disk space can also be configured as a storage repository for virtual machine resources. Further capacity extension with external storage is also possible.

The hardware configuration of the Oracle ZFS Storage Appliance ZS7-2 is as follows:

- Two clustered storage heads with two 14TB hard disks each
- One fully populated disk chassis with twenty 14TB hard disks
- Four cache disks installed in the disk shelf: 2x 200GB SSD and 2x 7.68TB SSD
- RAID-1 configuration, for optimum data protection, with a total usable space of approximately 100TB
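A quick calculation shows what two-way mirroring (RAID-1) means for the shelf capacity. The sketch assumes that only the twenty 14TB disks in the disk shelf hold pool data (the disks in the storage heads are left out), and it computes an upper bound only: the documented usable space of approximately 100TB is lower, and the source does not itemize the difference.

```python
def mirrored_capacity_tb(disk_count: int, disk_size_tb: float) -> float:
    """Two-way mirroring (RAID-1) stores every block twice, so half the raw space remains."""
    return disk_count * disk_size_tb / 2


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


raw_tb = 20 * 14                          # twenty 14TB disks in the shelf
mirrored_tb = mirrored_capacity_tb(20, 14)
print(raw_tb, mirrored_tb)                # 280 140.0
print(round(tb_to_tib(mirrored_tb), 1))   # 127.3
```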

The storage appliance is connected to the management subnet (192.168.4.0/24) and the storage subnet (192.168.40.0/24). Both heads form a cluster in active-passive configuration to guarantee continuation of service in the event that one storage head should fail. The storage heads share a single IP in the storage subnet, but both have an individual management IP address for convenient maintenance access. The RAID-1 storage pool contains two projects, named OVCA and OVM.

The OVCA project contains all LUNs and file systems used by the Oracle Private Cloud Appliance software:

- LUNs:
  - Locks (12GB) – to be used exclusively for cluster locking on the two management nodes
  - Manager (200GB) – to be used exclusively as an additional file system on both management nodes
- File systems:
  - MGMT_ROOT – to be used for storage of all files specific to the Oracle Private Cloud Appliance
  - Database – placeholder file system for databases
  - Incoming (20GB) – to be used for FTP file transfers, primarily for Oracle Private Cloud Appliance component backups
  - Templates – placeholder file system for future use
  - User – placeholder file system for future use
  - Yum – to be used for system package updates

The OVM project contains all LUNs and file systems used by Oracle VM:

- LUNs:
  - iscsi_repository1 (3TB) – to be used as an Oracle VM storage repository
  - iscsi_serverpool1 (12GB) – to be used as the server pool file system for the Oracle VM clustered server pool
- File systems:
  - nfs_repository1 (3TB) – used by kdump; not available for customer use
  - nfs_serverpool1 (12GB) – to be used as the server pool file system for the Oracle VM clustered server pool in case NFS is preferred over iSCSI

If the internal ZFS Storage Appliance contains customer-created LUNs, make sure they are not mapped to the default initiator group. See “Customer Created LUNs Are Mapped to the Wrong Initiator Group” within the Known Limitations and Workarounds section of the Oracle Private Cloud Appliance Release Notes.
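Before adding customer LUNs, an administrator might want to script the check that this note recommends. The sketch below works on a plain list of (LUN name, initiator group) pairs; how that list is exported from the appliance is outside its scope, and the `LunMapping` type, the `"default"` group label and the customer LUN names are hypothetical. It treats every LUN not named in the OVCA and OVM lists above as customer-created.

```python
from dataclasses import dataclass
from typing import List

# LUNs created by the appliance software, as listed for the OVCA and OVM projects above.
SYSTEM_LUNS = {"Locks", "Manager", "iscsi_repository1", "iscsi_serverpool1"}
DEFAULT_INITIATOR_GROUP = "default"  # hypothetical label for the default group


@dataclass
class LunMapping:
    lun: str
    initiator_group: str


def misplaced_customer_luns(mappings: List[LunMapping]) -> List[str]:
    """Return customer-created LUNs that are mapped to the default initiator group."""
    return [
        m.lun
        for m in mappings
        if m.lun not in SYSTEM_LUNS and m.initiator_group == DEFAULT_INITIATOR_GROUP
    ]


mappings = [
    LunMapping("iscsi_repository1", "default"),  # system LUN: ignored by this check
    LunMapping("customer_db01", "default"),      # customer LUN in the default group: flagged
    LunMapping("customer_app01", "app-hosts"),   # customer LUN in its own group: fine
]
print(misplaced_customer_luns(mappings))  # ['customer_db01']
```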

In addition to offering storage, the ZFS storage appliance also runs the xinetd and tftpd services. These complement the Oracle Linux services on the active management node in order to orchestrate the provisioning of all Oracle Private Cloud Appliance system components.

In addition to the backup capabilities described in Section 1.6, “Oracle Private Cloud Appliance Backup”, you can use native ZFS features to back up the internal ZFS Storage Appliance. Oracle ZFS Storage Appliance supports snapshot-based replication of projects and shares from a source appliance to a replication target, to a different pool on the same appliance, or to an NFS server for offline replication. You can configure replication to be executed manually, on a schedule, or continuously. For more information, see Remote Replication in the Oracle® ZFS Storage Appliance Administration Guide, Release OS8.8.x.

Do not configure the internal ZFS appliance as a storage device for external hosts.

1.2.3.2 Oracle ZFS Storage Appliance ZS5-ES and Earlier Models

The Oracle ZFS Storage Appliance ZS5-ES installed at the bottom of the appliance rack should be considered a 'system disk' for the entire appliance. Its main purpose is to provide storage space for the Oracle Private Cloud Appliance software. A portion of the disk space is made available for customer use and is sufficient for an Oracle VM storage repository with a limited number of virtual machines, templates and assemblies.

The hardware configuration of the Oracle ZFS Storage Appliance ZS5-ES is as follows:

- Two clustered storage heads with two 3.2TB SSDs each, used exclusively for cache
- One fully populated disk chassis with twenty 1.2TB 10000 RPM SAS hard disks
- RAID-Z2 configuration, for best balance between performance and data protection, with a total usable space of approximately 15TB

Oracle Private Cloud Appliance base racks shipped prior to software release 2.3.3 use a Sun ZFS Storage Appliance 7320 or Oracle ZFS Storage Appliance ZS3-ES. Those systems may be upgraded to a newer software stack, which continues to provide support for each Oracle Private Cloud Appliance storage configuration. The newer storage appliance offers the same functionality and configuration, with modernized hardware and thus better performance.

The storage appliance is connected to the management subnet (192.168.4.0/24) and the InfiniBand (IPoIB) storage subnet (192.168.40.0/24). Both heads form a cluster in active-passive configuration to guarantee continuation of service in the event that one storage head should fail. The storage heads share a single IP in the storage subnet, but both have an individual management IP address for convenient maintenance access. The RAID-Z2 storage pool contains two projects, named OVCA and OVM.

The OVCA project contains all LUNs and file systems used by the Oracle Private Cloud Appliance software:

- LUNs:
  - Locks (12GB) – to be used exclusively for cluster locking on the two management nodes
  - Manager (200GB) – to be used exclusively as an additional file system on both management nodes
- File systems:
  - MGMT_ROOT – to be used for storage of all files specific to the Oracle Private Cloud Appliance
  - Database – placeholder file system for databases
  - Incoming (20GB) – to be used for FTP file transfers, primarily for Oracle Private Cloud Appliance component backups
  - Templates – placeholder file system for future use
  - User – placeholder file system for future use
  - Yum – to be used for system package updates

The OVM project contains all LUNs and file systems used by Oracle VM:

- LUNs:
  - iscsi_repository1 (300GB) – to be used as an Oracle VM storage repository
  - iscsi_serverpool1 (12GB) – to be used as the server pool file system for the Oracle VM clustered server pool
- File systems:
  - nfs_repository1 (300GB) – to be used as an Oracle VM storage repository in case NFS is preferred over iSCSI
  - nfs_serverpool1 (12GB) – to be used as the server pool file system for the Oracle VM clustered server pool in case NFS is preferred over iSCSI

If the internal ZFS Storage Appliance contains customer-created LUNs, make sure they are not mapped to the default initiator group. See “Customer Created LUNs Are Mapped to the Wrong Initiator Group” within the Known Limitations and Workarounds section of the Oracle Private Cloud Appliance Release Notes.

In addition to offering storage, the ZFS storage appliance also runs the xinetd and tftpd services. These complement the Oracle Linux services on the active management node in order to orchestrate the provisioning of all Oracle Private Cloud Appliance system components.

1.2.4 Network Infrastructure

For network connectivity, Oracle Private Cloud Appliance relies on a physical layer that provides the necessary high-availability, bandwidth and speed. On top of this, several different virtual networks are optimally configured for different types of data traffic. Only the internal administration network is truly physical; the appliance data connectivity uses Software Defined Networking (SDN). The appliance rack contains redundant network hardware components, which are pre-cabled at the factory to help ensure continuity of service in case a failure should occur.

Depending on the exact hardware configuration of your appliance, the physical network layer is either high-speed Ethernet or InfiniBand. In this section, both network architectures are described separately in more detail.

1.2.4.1 Ethernet-Based Network Architecture

Oracle Private Cloud Appliance with Ethernet-based network architecture relies on redundant physical high-speed Ethernet connectivity.

Administration Network

The administration network provides internal access to the management interfaces of all appliance components. These have Ethernet connections to the Cisco Nexus 9348GC-FXP Switch, and all have a predefined IP address in the 192.168.4.0/24 range. In addition, all management and compute nodes have a second IP address in this range, which is used for Oracle Integrated Lights Out Manager (ILOM) connectivity.

While the appliance is initializing, the data network is not accessible, which means that the internal administration network is temporarily the only way to connect to the system. Therefore, the administrator should connect a workstation to the reserved Ethernet port 48 in the Cisco Nexus 9348GC-FXP Switch, and assign the fixed IP address 192.168.4.254 to the workstation. From this workstation, the administrator opens a browser connection to the web server on the active management node at https://192.168.4.216, in order to monitor the initialization process and perform the initial configuration steps when the appliance is powered on for the first time.

Data Network

The appliance data connectivity is built on redundant Cisco Nexus 9336C-FX2 Switches in a leaf-spine design. In this two-layer design, the leaf switches interconnect the rack hardware components, while the spine switches form the backbone of the network and perform routing tasks. Each leaf switch is connected to all the spine switches, which are also interconnected. The main benefits of this network architecture are extensibility and path optimization. An Oracle Private Cloud Appliance rack contains two leaf and two spine switches.
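The leaf-spine wiring described above can be modeled as a small graph, which makes the path-redundancy argument concrete: the two leaf switches are joined through every spine, so losing one spine still leaves a path. The switch names are hypothetical, and the model deliberately leaves out the direct leaf interlinks and individual port counts.

```python
from itertools import combinations, product
from typing import FrozenSet, List, Set

LEAVES = ["leaf1", "leaf2"]    # hypothetical names for the two leaf switches
SPINES = ["spine1", "spine2"]  # hypothetical names for the two spine switches

Link = FrozenSet[str]


def build_links() -> Set[Link]:
    """Every leaf connects to every spine; the spines are also interconnected."""
    links = {frozenset(pair) for pair in product(LEAVES, SPINES)}
    links |= {frozenset(pair) for pair in combinations(SPINES, 2)}
    return links


def leaf_paths(links: Set[Link], a: str, b: str) -> List[str]:
    """Spines through which leaf a reaches leaf b in two hops (a -> spine -> b)."""
    return [s for s in SPINES if frozenset((a, s)) in links and frozenset((s, b)) in links]


links = build_links()
print(len(links))                           # 5
print(leaf_paths(links, "leaf1", "leaf2"))  # ['spine1', 'spine2']

# Simulate losing spine1: drop all of its links and check that a path survives.
degraded = {link for link in links if "spine1" not in link}
print(leaf_paths(degraded, "leaf1", "leaf2"))  # ['spine2']
```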

The Cisco Nexus 9336C-FX2 Switch offers a maximum throughput of 100Gbit per port. The spine switches use 5 interlinks (500Gbit); the leaf switches use 2 interlinks (200Gbit) and 2x2 crosslinks to the spines. Each compute node is connected to both leaf switches in the rack, through the bond1 interface that consists of two 100Gbit Ethernet ports in link aggregation mode. The two storage controllers are connected to the spine switches using 4x40Gbit connections.
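The link figures above are products of link count and per-link speed. The sketch below tabulates them; reading "2x2 crosslinks" as two links from each leaf to each of the two spines (4 x 100Gbit per leaf) is an interpretation the text does not spell out, and all values are nominal line rates rather than measured throughput.

```python
from typing import Dict

PORT_GBIT = 100  # maximum per-port throughput of the Cisco Nexus 9336C-FX2


def aggregate_gbit(links: int, gbit_per_link: int) -> int:
    """Nominal aggregate bandwidth of a group of parallel links."""
    return links * gbit_per_link


BANDWIDTH: Dict[str, int] = {
    "spine interlinks": aggregate_gbit(5, PORT_GBIT),            # 500
    "leaf interlinks": aggregate_gbit(2, PORT_GBIT),             # 200
    "leaf-to-spine per leaf": aggregate_gbit(2 * 2, PORT_GBIT),  # 400, assumed reading of 2x2
    "compute node bond1": aggregate_gbit(2, 100),                # 200 per compute node
    "storage controllers (total)": aggregate_gbit(4, 40),        # 160
}

for name, gbit in BANDWIDTH.items():
    print(f"{name}: {gbit}Gbit")
```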

For external connectivity, 5 ports are reserved on each spine switch. Four ports are available for custom network configurations; one port is required for the default uplink. This default external uplink requires that port 5 on both spine switches is split using a QSFP+-to-SFP+ four-way splitter or breakout cable. Two of those four 10GbE SFP+ breakout ports per spine switch, ports 5/1 and 5/2, must be connected to a pair of next-level data center switches, also called top-of-rack or ToR switches.

Software Defined Networking

While the physical data network described above allows the data packets to be transferred, the true connectivity is implemented through Software Defined Networking (SDN). Using VxLAN encapsulation and VLAN tagging, thousands of virtual networks can be deployed, providing segregated data exchange. Traffic can be internal between resources within the appliance environment, or external to network storage, applications, or other resources in the data center or on the internet. SDN maintains the traffic separation of hard-wired connections, and adds better performance and dynamic (re-)allocation. From the perspective of the customer network, the use of VxLANs in Oracle Private Cloud Appliance is transparent: encapsulation and de-encapsulation take place internally, without modifying inbound or outbound data packets. In other words, this design extends customer networking, tagged or untagged, into the virtualized environment hosted by the appliance.
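To make the encapsulation step concrete, the sketch below builds and parses the generic 8-byte VXLAN header defined in RFC 7348. It illustrates the standard technique only and makes no claim about how the appliance's SDN layer is implemented; the VNI value and the placeholder frame are arbitrary. The 24-bit VNI field is what allows far more than thousands of segments (up to 2^24, about 16.7 million), compared with 4094 usable IDs in a 12-bit VLAN tag.

```python
import struct
from typing import Tuple

VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid (RFC 7348)
VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags(8) + reserved(24) + VNI(24) + reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prepend the VXLAN header; the inner Ethernet frame is carried unchanged.
    The outer Ethernet/IP/UDP headers (UDP port VXLAN_UDP_PORT) are omitted here."""
    return vxlan_header(vni) + inner_frame


def decapsulate(payload: bytes) -> Tuple[int, bytes]:
    """Return (VNI, original inner frame) from a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VNI-valid flag not set")
    return vni_word >> 8, payload[8:]


frame = b"\x00" * 14 + b"hello"   # placeholder inner frame
vni, inner = decapsulate(encapsulate(5000, frame))
print(vni, inner == frame)         # 5000 True
print(vxlan_header(1).hex())       # 0800000000000100
```

Because the inner frame comes back byte-for-byte identical after decapsulation, the overlay is transparent to the networks on either side, which is the property the paragraph above describes.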

During the initialization process of the Oracle Private Cloud Appliance, several essential default networks are configured:

- The Internal Storage Network is a redundant 40Gbit Ethernet connection from the spine switches to the ZFS storage appliance. All four storage controller interfaces are bonded using LACP into one datalink. Management and compute nodes can reach the internal storage over the 192.168.40.0/21 subnet on VLAN 3093. This network also fulfills the heartbeat function for the clustered Oracle VM server pool.
- The Internal Management Network provides connectivity between the management nodes and compute nodes in the subnet 192.168.32.0/21 on VLAN 3092. It is used for all network traffic inherent to Oracle VM Manager, Oracle VM Server and the Oracle VM Agents.
- The Internal Underlay Network provides the infrastructure layer for data traffic between compute nodes. It uses the subnet 192.168.64.0/21 on VLAN 3091. On top of the internal underlay network, internal VxLAN overlay networks are built to enable virtual machine connectivity where only internal access is required. One such internal VxLAN is configured in advance: the default internal VM network, to which all compute nodes are connected with their vx2 interface. Untagged traffic is supported by default over this network. Customers can add VLANs of their choice to the Oracle VM network configuration, and define the subnet(s) appropriate for IP address assignment at the virtual machine level.
- The External Underlay Network provides the infrastructure layer for data traffic between Oracle Private Cloud Appliance and the data center network. It uses the subnet 192.168.72.0/21 on VLAN 3090. On top of the external underlay network, VxLAN overlay networks with external access are built to enable public connectivity for the physical nodes and all the virtual machines they host. One such public VxLAN is configured in advance: the default external network, to which all compute nodes and management nodes are connected with their vx13040 interface. Both tagged and untagged traffic are supported by default over this network. Customers can add VLANs of their choice to the Oracle VM network configuration, and define the subnet(s) appropriate for IP address assignment at the virtual machine level. The default external network also provides access to the management nodes from the data center network and allows the management nodes to run a number of system services. The management node external network settings are configurable through the Network Settings tab in the Oracle Private Cloud Appliance Dashboard. If this network is a VLAN, its ID or tag must be configured in the Network Setup tab of the Dashboard.
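The four default networks can be summarized as a lookup table, which is handy for working out which network an internal address belongs to when reading logs or configuration. The sketch uses Python's standard `ipaddress` module; `DEFAULT_NETWORKS` and `classify` are hypothetical names, and the table only covers the networks listed above (not, for example, the 192.168.4.0/24 administration network).

```python
import ipaddress
from itertools import combinations
from typing import Optional, Tuple

# (name, subnet, VLAN ID), taken from the list of default networks above
DEFAULT_NETWORKS = [
    ("Internal Storage Network", ipaddress.ip_network("192.168.40.0/21"), 3093),
    ("Internal Management Network", ipaddress.ip_network("192.168.32.0/21"), 3092),
    ("Internal Underlay Network", ipaddress.ip_network("192.168.64.0/21"), 3091),
    ("External Underlay Network", ipaddress.ip_network("192.168.72.0/21"), 3090),
]


def classify(address: str) -> Optional[Tuple[str, int]]:
    """Return (network name, VLAN ID) for an address, or None if it is in none of them."""
    ip = ipaddress.ip_address(address)
    for name, subnet, vlan in DEFAULT_NETWORKS:
        if ip in subnet:
            return name, vlan
    return None


# Sanity check: the default subnets must not overlap.
assert not any(a[1].overlaps(b[1]) for a, b in combinations(DEFAULT_NETWORKS, 2))

print(classify("192.168.45.10"))  # ('Internal Storage Network', 3093)
print(classify("192.168.4.216"))  # None
```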

For the appliance default networking to be configured successfully, the default external uplink must be in place before the initialization of the appliance begins. At the end of the initialization process, the administrator assigns three reserved IP addresses from the data center (public) network range to the management node cluster of the Oracle Private Cloud Appliance: one for each management node, and an additional Virtual IP shared by the clustered nodes. From this point forward, the Virtual IP is used to connect to the active management node's web server, which hosts both the Oracle Private Cloud Appliance Dashboard and the Oracle VM Manager web interface.
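The address assignment at the end of initialization follows a fixed pattern: three distinct addresses from the data center network, one per management node plus the shared Virtual IP. The sketch validates such a set before it is entered; the function name and role labels are hypothetical, and 203.0.113.0/24 is a documentation range (RFC 5737) used here as a placeholder for your own network.

```python
import ipaddress
from typing import Dict, List


def management_cluster_addresses(network: str, reserved: List[str]) -> Dict[str, str]:
    """Validate three reserved addresses and map them to the management node cluster."""
    net = ipaddress.ip_network(network)
    ips = [ipaddress.ip_address(a) for a in reserved]
    if len(ips) != 3 or len(set(ips)) != 3:
        raise ValueError("exactly three distinct addresses are required")
    for ip in ips:
        # Reject addresses outside the network and its network/broadcast addresses.
        if ip not in net or ip in (net.network_address, net.broadcast_address):
            raise ValueError(f"{ip} is not a usable host address in {net}")
    roles = ["management node 1", "management node 2", "virtual ip"]
    return {role: str(ip) for role, ip in zip(roles, ips)}


assignment = management_cluster_addresses(
    "203.0.113.0/24", ["203.0.113.11", "203.0.113.12", "203.0.113.10"]
)
print(assignment)
```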

It is critical that both spine Cisco Nexus 9336C-FX2 Switches have two 10GbE connections each to a pair of next-level data center switches. For this purpose, a 4-way breakout cable must be attached to port 5 of each spine switch, and 10GbE breakout ports 5/1 and 5/2 must be used as uplinks. Note that ports 5/3 and 5/4 remain unused.

This outbound cabling between the spine switches and the data center network should be crossed or meshed, to ensure optimal continuity of service.
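The uplink rules in the last two paragraphs lend themselves to a simple pre-flight check of a planned cable map. The sketch below reads "crossed or meshed" as "each spine switch reaches both data center switches", which is an interpretation; the switch names and the dictionary format are hypothetical, and only the port numbers (5/1 and 5/2 used, 5/3 and 5/4 unused) come from the text.

```python
from typing import Dict, List, Tuple

Cable = Tuple[str, str]  # (spine switch, breakout port)

UPLINK_PORTS = ("5/1", "5/2")
UNUSED_PORTS = ("5/3", "5/4")


def check_uplinks(cables: Dict[Cable, str], spines: List[str], tors: List[str]) -> List[str]:
    """Return a list of problems with the planned spine-to-ToR uplink cabling."""
    problems = []
    for spine in spines:
        for port in UPLINK_PORTS:
            if (spine, port) not in cables:
                problems.append(f"{spine} {port}: uplink missing")
        reached = {tor for (s, _), tor in cables.items() if s == spine}
        if reached != set(tors):
            problems.append(f"{spine}: does not reach both data center switches")
    for (spine, port) in cables:
        if port in UNUSED_PORTS:
            problems.append(f"{spine} {port}: should remain unused")
    return problems


meshed = {
    ("spine1", "5/1"): "tor-a", ("spine1", "5/2"): "tor-b",
    ("spine2", "5/1"): "tor-a", ("spine2", "5/2"): "tor-b",
}
print(check_uplinks(meshed, ["spine1", "spine2"], ["tor-a", "tor-b"]))  # []
```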

1.2.4.2 InfiniBand-Based Network Architecture

Oracle Private Cloud Appliance with InfiniBand-based network architecture relies on a physical InfiniBand network fabric, with additional Ethernet connectivity for internal management communication.

Ethernet

The Ethernet network relies on two interconnected Oracle Switch ES1-24 switches, to which all other rack components are connected with CAT6 Ethernet cables. This network serves as the appliance management network, in which every component has a predefined IP address in the 192.168.4.0/24 range. In addition, all management and compute nodes have a second IP address in this range, which is used for Oracle Integrated Lights Out Manager (ILOM) connectivity.

While the appliance is initializing, the InfiniBand fabric is not accessible, which means that the management network is the only way to connect to the system. Therefore, the administrator should connect a workstation to the available Ethernet port 19 in one of the Oracle Switch ES1-24 switches, and assign the fixed IP address 192.168.4.254 to the workstation. From this workstation, the administrator opens a browser connection to the web server on the active management node at http://192.168.4.216, in order to monitor the initialization process and perform the initial configuration steps when the appliance is powered on for the first time.

InfiniBand

The Oracle Private Cloud Appliance rack contains two NM2-36P Sun Datacenter InfiniBand Expansion Switches. These redundant switches have redundant cable connections to both InfiniBand ports in each management node, compute node and storage head. Both InfiniBand switches, in turn, have redundant cable connections to both Fabric Interconnects in the rack. All these components combine to form a physical InfiniBand backplane with a 40Gbit (Quad Data Rate) bandwidth.

When the appliance initialization is complete, all necessary Oracle Private Cloud Appliance software packages, including host drivers and InfiniBand kernel modules, have been installed and configured on each component. At this point, the system is capable of using software defined networking (SDN) configured on top of the physical InfiniBand fabric. SDN is implemented through the Fabric Interconnects.

Fabric Interconnect

All Oracle Private Cloud Appliance network connectivity is managed through the Fabric Interconnects. Data is transferred across the physical InfiniBand fabric, but connectivity is implemented in the form of Software Defined Networks (SDN), which are sometimes referred to as 'clouds'. The physical InfiniBand backplane is capable of hosting thousands of virtual networks. These Private Virtual Interconnects (PVI) dynamically connect virtual machines and bare metal servers to networks, storage and other virtual machines, while maintaining the traffic separation of hard-wired connections and surpassing their performance.

During the initialization process of the Oracle Private Cloud Appliance, five essential networks, four of which are SDNs, are configured: a storage network, an Oracle VM management network, a management Ethernet network, and two virtual machine networks. Tagged and untagged virtual machine traffic is supported. VLANs can be constructed using virtual interfaces on top of the existing bond interfaces of the compute nodes.

- The storage network, technically not software-defined, is a bonded IPoIB connection between the management nodes and the ZFS storage appliance, and uses the 192.168.40.0/24 subnet. This network also fulfills the heartbeat function for the clustered Oracle VM server pool. DHCP ensures that compute nodes are assigned an IP address in this subnet.

- The Oracle VM management network is a PVI that connects the management nodes and compute nodes in the 192.168.140.0/24 subnet. It is used for all network traffic inherent to Oracle VM Manager, Oracle VM Server and the Oracle VM Agents.

- The management Ethernet network is a bonded Ethernet connection between the management nodes. The primary function of this network is to provide access to the management nodes from the data center network, and enable the management nodes to run a number of system services. Since all compute nodes are also connected to this network, Oracle VM can use it for virtual machine connectivity, with access to and from the data center network. The management node external network settings are configurable through the Network Settings tab in the Oracle Private Cloud Appliance Dashboard. If this network is a VLAN, its ID or tag must be configured in the Network Setup tab of the Dashboard.

- The public virtual machine network is a bonded Ethernet connection between the compute nodes. Oracle VM uses this network for virtual machine connectivity, where external access is required. Untagged traffic is supported by default over this network. Customers can add their own VLANs to the Oracle VM network configuration, and define the subnet(s) appropriate for IP address assignment at the virtual machine level. For external connectivity, the next-level data center switches must be configured to accept your tagged VLAN traffic.

- The private virtual machine network is a bonded Ethernet connection between the compute nodes. Oracle VM uses this network for virtual machine connectivity, where only internal access is required. Untagged traffic is supported by default over this network. Customers can add VLANs of their choice to the Oracle VM network configuration, and define the subnet(s) appropriate for IP address assignment at the virtual machine level.
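The internal address ranges are easy to confuse: the appliance administration network (192.168.4.0/24, see Section 1.4.2) differs from the storage network (192.168.40.0/24) by a single digit. The following minimal Python sketch, using only the standard ipaddress module, classifies an address against the subnets named in this chapter. The function name and the "external" label are illustrative assumptions, not part of the appliance software.

```python
import ipaddress

# Internal subnets named in this chapter. The dictionary is an illustrative
# lookup table for this sketch, not an appliance configuration file.
INTERNAL_SUBNETS = {
    "appliance administration network": ipaddress.ip_network("192.168.4.0/24"),
    "storage network": ipaddress.ip_network("192.168.40.0/24"),
    "Oracle VM management network": ipaddress.ip_network("192.168.140.0/24"),
}


def classify_address(address: str) -> str:
    """Return the name of the internal subnet that contains the address."""
    ip = ipaddress.ip_address(address)
    for name, network in INTERNAL_SUBNETS.items():
        if ip in network:
            return name
    # Anything else (for example a data center address) is reported as external.
    return "external"


if __name__ == "__main__":
    for addr in ("192.168.4.7", "192.168.40.20", "192.168.140.3", "10.0.0.5"):
        print(f"{addr:>15} -> {classify_address(addr)}")
```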

Finally, the Fabric Interconnects also manage the physical public network connectivity of the Oracle Private Cloud Appliance. Two 10GbE ports on each Fabric Interconnect must be connected to redundant next-level data center switches. At the end of the initialization process, the administrator assigns three reserved IP addresses from the data center (public) network range to the management node cluster of the Oracle Private Cloud Appliance: one for each management node, and an additional Virtual IP shared by the clustered nodes. From this point forward, the Virtual IP is used to connect to the active management node's web server, which hosts both the Oracle Private Cloud Appliance Dashboard and the Oracle VM Manager web interface.
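Before entering the three reserved addresses, it can help to check the plan offline. The sketch below is a hypothetical pre-check, not a Dashboard feature: it assumes the three addresses must all fall inside the data center subnet you supply and must be distinct (one per management node plus the shared Virtual IP). The subnet 203.0.113.0/24 is a documentation range used only as a placeholder.

```python
from __future__ import annotations

import ipaddress


def check_management_ips(subnet: str, node1: str, node2: str, vip: str) -> list[str]:
    """Return a list of problems; an empty list means the plan looks consistent."""
    network = ipaddress.ip_network(subnet)
    planned = {
        "management node 1": ipaddress.ip_address(node1),
        "management node 2": ipaddress.ip_address(node2),
        "virtual IP": ipaddress.ip_address(vip),
    }
    problems = []
    for role, ip in planned.items():
        if ip not in network:
            problems.append(f"{role} address {ip} is outside {network}")
    # One address per node plus a shared Virtual IP: all three must differ.
    if len(set(planned.values())) != len(planned):
        problems.append("the three reserved addresses must be distinct")
    return problems


if __name__ == "__main__":
    # 203.0.113.0/24 is a documentation range, used here as a placeholder.
    print(check_management_ips("203.0.113.0/24", "203.0.113.10", "203.0.113.11", "203.0.113.12"))
```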

It is critical that both Fabric Interconnects have two 10GbE connections each to a pair of next-level data center switches. This configuration with four cable connections provides redundancy and load splitting at the level of the Fabric Interconnects, the 10GbE ports and the data center switches. This outbound cabling should not be crossed or meshed, because the internal connections to the pair of Fabric Interconnects are already configured that way. The cabling pattern plays a key role in the continuation of service during failover scenarios involving outages of the Fabric Interconnects or other components.

1.3 Software Components

This section describes the main software components the Oracle Private Cloud Appliance uses for operation and configuration.

1.3.1 Oracle Private Cloud Appliance Dashboard

The Oracle Private Cloud Appliance provides its own web-based graphical user interface that can be used to perform a variety of administrative tasks specific to the appliance. The Oracle Private Cloud Appliance Dashboard is an Oracle JET application that is available through the active management node.

Use the Dashboard to perform the following tasks:

- Appliance system monitoring and component identification

- Initial configuration of management node networking data

- Resetting the global password for Oracle Private Cloud Appliance configuration components

The Oracle Private Cloud Appliance Dashboard is described in detail in Chapter 2, Monitoring and Managing Oracle Private Cloud Appliance.

1.3.2 Password Manager (Wallet)

All components of the Oracle Private Cloud Appliance have administrator accounts with a default password. After applying your data center network settings through the Oracle Private Cloud Appliance Dashboard, it is recommended that you modify the default appliance password. The Authentication tab allows you to set a new password, which is applied to the main system configuration components. You can set a new password for all listed components at once or for a selection only.

Passwords for all accounts on all components are stored in a global Wallet, secured with 512-bit encryption. To update the password entries, you use either the Oracle Private Cloud Appliance Dashboard or the Command Line Interface. For details, see Section 2.9, “Authentication”.

1.3.3 Oracle VM Manager

All virtual machine management tasks are performed within Oracle VM Manager, a WebLogic application that is installed on each of the management nodes. It provides a web-based management user interface and a command line interface that allow you to manage your Oracle VM infrastructure within the Oracle Private Cloud Appliance.

Oracle VM Manager consists of the following software components:

- Oracle VM Manager application: provided as an Oracle WebLogic Server domain and container.

- Oracle WebLogic Server 12c: including Application Development Framework (ADF) Release 12c, used to host and run the Oracle VM Manager application.

- MySQL 5.6 Enterprise Edition Server: for the exclusive use of the Oracle VM Manager application as a management repository and installed on the Database file system hosted on the ZFS storage appliance.

Administration of virtual machines is performed using the Oracle VM Manager web user interface, as described in Chapter 5, Managing the Oracle VM Virtual Infrastructure. While it is possible to use the command line interface provided with Oracle VM Manager, this is considered an advanced activity that should only be performed with a thorough understanding of the limitations of Oracle VM Manager running in the context of an Oracle Private Cloud Appliance.

1.3.4 Operating Systems

Hardware components of the Oracle Private Cloud Appliance run their own operating systems:

- Management Nodes: Oracle Linux 6 with UEK R4

- Compute Nodes: Oracle VM Server 3.4.6

- Oracle ZFS Storage Appliance: Oracle Solaris 11

All other components run a particular revision of their respective firmware. All operating software has been selected and developed to work together as part of the Oracle Private Cloud Appliance. When an update is released, the appropriate versions of all software components are bundled. When a new software release is activated, all component operating software is updated accordingly. You should not attempt to update individual components unless Oracle explicitly instructs you to.

1.3.5 Databases

The Oracle Private Cloud Appliance uses a number of databases to track system states, to handle configuration and provisioning, and to serve as the Oracle VM Manager management repository. All databases are stored on the ZFS storage appliance and are exported via an NFS file system. The databases are accessible to each management node to ensure high availability.

Databases must never be edited manually. The appliance configuration depends on them, so manipulations are likely to break functionality.

The following table lists the different databases used by the Oracle Private Cloud Appliance.

| Item | Description | Type | Location |
|---|---|---|---|
| Oracle Private Cloud Appliance Node Database | Contains information on every compute node and management node in the rack, including the state used to drive the provisioning of compute nodes and data required to handle software updates. | BerkeleyDB | |
| Oracle Private Cloud Appliance Inventory Database | Contains information on all hardware components appearing in the management network. | BerkeleyDB | |
| Oracle Private Cloud Appliance Netbundle Database | Predefines Ethernet and bond device names for all possible networks that can be configured throughout the system, and which are allocated dynamically. | BerkeleyDB | |
| Oracle Private Cloud Appliance DHCP Database | Contains information on the assignment of DHCP addresses to newly detected compute nodes. | BerkeleyDB | |
| Cisco Data Network Database | Contains information on the networks configured for traffic through the spine switches, and the interfaces participating in the networks. Used only on systems with Ethernet-based network architecture. | BerkeleyDB | |
| Cisco Management Switch Ports Database | Defines the factory-configured map of Cisco Nexus 9348GC-FXP Switch ports to the rack unit or element to which each port is connected. It is used to map switch ports to machine names. Used only on systems with Ethernet-based network architecture. | BerkeleyDB | |
| Oracle Fabric Interconnect Database | Contains IP and host name data for the Oracle Fabric Interconnect F1-15s. Used only on systems with InfiniBand-based network architecture. | BerkeleyDB | |
| Oracle Switch ES1-24 Ports Database | Defines the factory-configured map of Oracle Switch ES1-24 ports to the rack unit or element to which each port is connected. It is used to map Oracle Switch ES1-24 ports to machine names. Used only on systems with InfiniBand-based network architecture. | BerkeleyDB | |
| Oracle Private Cloud Appliance Mini Database | A multi-purpose database used to map compute node hardware profiles to on-board disk size information. It also contains valid hardware configurations that servers must comply with in order to be accepted as an Oracle Private Cloud Appliance component. Entries contain a sync ID for more convenient usage within the Command Line Interface (CLI). | BerkeleyDB | |
| Oracle Private Cloud Appliance Monitor Database | Records fault counts detected through the ILOMs of all active components identified in the Inventory Database. | BerkeleyDB | |
| Oracle Private Cloud Appliance Setup Database | Contains the data set by the Oracle Private Cloud Appliance Dashboard setup facility. The data in this database is automatically applied by both the active and standby management nodes when a change is detected. | BerkeleyDB | |
| Oracle Private Cloud Appliance Task Database | Contains state data for all of the asynchronous tasks that have been dispatched within the Oracle Private Cloud Appliance. | BerkeleyDB | |
| Oracle Private Cloud Appliance Synchronization Databases | Contain data and configuration settings for the synchronization service to apply and maintain across rack components. Errors from failed attempts to synchronize configuration parameters across appliance components can be reviewed in the […]. Synchronization databases are not present by default. They are created when the first synchronization task of a given type is received. | BerkeleyDB | |
| Oracle Private Cloud Appliance Update Database | Used to track the two-node coordinated management node update process. Note: Database schema changes and wallet changes between different releases of the controller software are written to a file. This ensures that these critical changes are applied early in the software update process, before any other appliance components are brought back up. | BerkeleyDB | |
| Oracle Private Cloud Appliance Tenant Database | Contains details about all tenant groups: default and custom. These details include the unique tenant group ID, file system ID, member compute nodes, status information, etc. | BerkeleyDB | |
| Oracle VM Manager Database | Used on each management node as the management database for Oracle VM Manager. It contains all configuration details of the Oracle VM environment (including servers, pools, storage and networking), as well as the virtualized systems hosted by the environment. | MySQL Database | |

1.3.6 Oracle Private Cloud Appliance Management Software

The Oracle Private Cloud Appliance includes software that is designed for the provisioning, management and maintenance of all of the components within the appliance. The controller software, which handles orchestration and automation of tasks across various hardware components, is not intended for human interaction. Its appliance administration functions are exposed through the browser interface and command line interface, which are described in detail in this guide.

All configuration and management tasks must be performed using the Oracle Private Cloud Appliance Dashboard and the Command Line Interface. Do not attempt to run any processes directly without explicit instruction from an Oracle Support representative. Attempting to do so may render your appliance unusable.

Besides the Dashboard and CLI, this software also includes a number of Python applications that run on the active management node. These applications are found in /usr/sbin on each management node; some of them are listed below:

- pca-backup: the script responsible for performing backups of the appliance configuration as described in Section 1.6, “Oracle Private Cloud Appliance Backup”

- pca-check-master: a script that verifies which of the two management nodes currently has the active role

- ovca-daemon: the core provisioning and management daemon for the Oracle Private Cloud Appliance

- pca-dhcpd: a helper script to assist the DHCP daemon with the registration of compute nodes

- pca-diag: a tool to collect diagnostic information from your Oracle Private Cloud Appliance, as described in Section 1.3.7, “Oracle Private Cloud Appliance Diagnostics Tool”

- pca-factory-init: the appliance initialization script used to set the appliance to its factory configuration. This script does not function as a reset; it is only used for initial rack setup.

- pca-redirect: a daemon that redirects HTTP or HTTPS requests to the Oracle Private Cloud Appliance Dashboard described in Section 1.3.1, “Oracle Private Cloud Appliance Dashboard”

- ovca-remote-rpc: a script for remote procedure calls directly to the Oracle VM Server Agent. Currently it is only used by the management node to monitor the heartbeat of the Oracle VM Server Agent.

- ovca-rpc: a script that allows the Oracle Private Cloud Appliance software components to communicate directly with the underlying management scripts running on the management node

Many of these applications use a specific Oracle Private Cloud Appliance library that is installed in /usr/lib/python2.6/site-packages/ovca/ on each management node.

1.3.7 Oracle Private Cloud Appliance Diagnostics Tool

The Oracle Private Cloud Appliance includes a tool that can be run to collect

diagnostic data: logs and other types of files that can help to

troubleshoot hardware and software problems. This tool is

located in /usr/sbin/ on each management

and compute node, and is named pca-diag. The

data it retrieves, depends on the selected command line

arguments:

- pca-diag: When you enter this command without any additional arguments, the tool retrieves a basic set of files that provide insights into the current health status of the Oracle Private Cloud Appliance. You can run this command on all management and compute nodes. All collected data is stored in /tmp, compressed into a single tarball (ovcadiag_<node-hostname>_<ID>_<date>_<time>.tar.bz2).

- pca-diag version: When you enter this command, version information for the current Oracle Private Cloud Appliance software stack is displayed. The version argument cannot be combined with any other argument.

- pca-diag ilom: When you enter this command, diagnostic data is retrieved, by means of ipmitool, through the host's ILOM. The data set includes details about the host's operating system, processes, health status, hardware and software configuration, as well as a number of files specific to the Oracle Private Cloud Appliance configuration. You can run this command on all management and compute nodes. All collected data is stored in /tmp, compressed into a single tarball (ovcadiag_<node-hostname>_<ID>_<date>_<time>.tar.bz2).

- pca-diag vmpinfo: Caution: When using the vmpinfo argument, the command must be run from the active management node. When you enter this command, the Oracle VM diagnostic data collection mechanism is activated. The vmpinfo3 script collects logs and configuration details from the Oracle VM Manager, and logs and sosreport information from each Oracle VM Server or compute node. All collected data is stored in /tmp, compressed into two tarballs: ovcadiag_<node-hostname>_<ID>_<date>_<time>.tar.bz2 and vmpinfo3-<version>-<date>-<time>.tar.gz. To collect diagnostic information for a subset of the Oracle VM Servers in the environment, you run the command with an additional servers parameter: pca-diag vmpinfo servers='ovcacn07r1,ovcacn08r1,ovcacn09r1'
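When collecting archives from several nodes, it can be convenient to sort them by host and date. The minimal Python sketch below splits a file name that follows the ovcadiag_<node-hostname>_<ID>_<date>_<time>.tar.bz2 template into its fields. The exact date and time formats are not specified in this guide, so the sketch assumes each field is simply a run of characters without underscores; the sample file name is hypothetical.

```python
import re
from typing import Dict, Optional

# Mirrors the documented template ovcadiag_<node-hostname>_<ID>_<date>_<time>.tar.bz2.
# The date and time formats are not specified, so each field is assumed to be a
# run of characters without underscores.
OVCADIAG_NAME = re.compile(
    r"^ovcadiag_(?P<host>[^_]+)_(?P<id>[^_]+)_(?P<date>[^_]+)_(?P<time>[^_]+)\.tar\.bz2$"
)


def parse_ovcadiag_name(filename: str) -> Optional[Dict[str, str]]:
    """Split a pca-diag tarball name into its fields, or return None."""
    match = OVCADIAG_NAME.match(filename)
    return match.groupdict() if match else None


if __name__ == "__main__":
    # Hypothetical file name, for illustration only.
    print(parse_ovcadiag_name("ovcadiag_ovcacn07r1_0042_2019-06-18_14-22-05.tar.bz2"))
```

The vmpinfo3 tarball uses a different, hyphen-separated template and is deliberately not matched by this pattern.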

Diagnostic collection with pca-diag is possible from the command line of any node in the system. Only the active management node allows you to use all of the command line arguments. Although vmpinfo is not available on the compute nodes, running pca-diag directly on a compute node can help retrieve important diagnostic information regarding Oracle VM Server that cannot be captured with vmpinfo.
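If you script diagnostic collection, the rules above can be checked before pca-diag is invoked. The following Python sketch is a hypothetical wrapper, not part of pca-diag: it encodes only the constraints stated in this section (version stands alone, vmpinfo requires the active management node) plus one assumption of its own, that the servers parameter is only meaningful together with vmpinfo. It returns an argument list suitable for subprocess.run.

```python
from typing import List, Optional, Sequence

# Rules taken from the description above; this wrapper is hypothetical and is
# not part of pca-diag itself.
KNOWN_ARGUMENTS = {"version", "ilom", "vmpinfo"}


def build_pca_diag_argv(
    arguments: Sequence[str] = (),
    servers: Optional[Sequence[str]] = None,
    on_active_management_node: bool = False,
) -> List[str]:
    """Validate the requested arguments and return an argv list for pca-diag."""
    args = list(arguments)
    unknown = set(args) - KNOWN_ARGUMENTS
    if unknown:
        raise ValueError(f"unknown argument(s): {sorted(unknown)}")
    if "version" in args and len(args) > 1:
        raise ValueError("'version' cannot be combined with any other argument")
    if "vmpinfo" in args and not on_active_management_node:
        raise ValueError("'vmpinfo' must be run from the active management node")
    if servers:
        if "vmpinfo" not in args:
            raise ValueError("'servers' only applies to 'vmpinfo'")  # assumption
        # One argv element; the shell quotes in the documented example are not
        # needed when no shell is involved.
        args.append("servers=" + ",".join(servers))
    return ["pca-diag", *args]
```

For example, build_pca_diag_argv(["vmpinfo"], servers=["ovcacn07r1", "ovcacn08r1"], on_active_management_node=True) yields an argument list that could be passed to subprocess.run(..., check=True) on the active management node. Passing a list rather than a shell string avoids quoting issues with the comma-separated server names.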

The pca-diag tool is typically run by multiple users with different roles. System administrators or field service engineers may use it as part of their standard operating procedures, or Oracle Support teams may request that the tool be run in a specific manner as part of an effort to diagnose and resolve reported hardware or software issues. For additional information and instructions, also refer to the section “Data Collection for Service and Support” in the Oracle Private Cloud Appliance Release Notes.

1.4 Provisioning and Orchestration

As a converged infrastructure solution, the Oracle Private Cloud Appliance is built to eliminate many of the intricacies of optimizing the system configuration. Hardware components are installed and cabled at the factory. Configuration settings and installation software are preloaded onto the system. Once the appliance is connected to the data center power source and public network, the provisioning process between the administrator pressing the power button of the first management node and the appliance reaching its Deployment Readiness state is entirely orchestrated by the active management node. This section explains what happens as the Oracle Private Cloud Appliance is initialized and all nodes are provisioned.

1.4.1 Appliance Management Initialization

Boot Sequence and Health Checks

When power is applied to the first management node, it takes approximately five minutes for the server to boot. While the Oracle Linux 6 operating system is loading, an Apache web server is started, which serves a static welcome page the administrator can browse to from the workstation connected to the appliance management network.

The necessary Oracle Linux services are started as the server comes up to runlevel 3 (multi-user mode with networking). At this point, the management node executes a series of system health checks. It verifies that all expected infrastructure components are present on the appliance administration network and in the correct predefined location, identified by the rack unit number and fixed IP address. Next, the management node probes the ZFS storage appliance for a management NFS export and a management iSCSI LUN with an OCFS2 file system. The storage and its access groups have been configured at the factory. If the health checks reveal no problems, the ocfs2 and o2cb services are started up automatically.
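The logic of these health checks can be summarized as "compare what is found against a fixed expectation, and only start the cluster stack if nothing is wrong". The Python sketch below models that idea under stated assumptions: the Component type, the component names, rack units and IP addresses are hypothetical, and the storage probes are reduced to two booleans. It is an illustration, not the management node's actual check code.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Component:
    name: str       # hypothetical component identifier
    rack_unit: int  # predefined rack unit number
    ip: str         # fixed IP address on the administration network


def run_health_checks(
    expected: Iterable[Component],
    discovered: Iterable[Component],
    nfs_export_found: bool,
    ocfs2_lun_found: bool,
) -> Tuple[List[str], List[str]]:
    """Return (problems, services_to_start) for the checks described above."""
    found = {c.name: c for c in discovered}
    problems = []
    for comp in expected:
        actual = found.get(comp.name)
        if actual is None:
            problems.append(f"{comp.name}: not present on the administration network")
        elif (actual.rack_unit, actual.ip) != (comp.rack_unit, comp.ip):
            problems.append(
                f"{comp.name}: expected U{comp.rack_unit}/{comp.ip}, "
                f"found U{actual.rack_unit}/{actual.ip}"
            )
    if not nfs_export_found:
        problems.append("ZFS storage appliance: management NFS export not found")
    if not ocfs2_lun_found:
        problems.append("ZFS storage appliance: management iSCSI LUN with OCFS2 not found")
    # The cluster stack is only started when every check has passed.
    services = ["ocfs2", "o2cb"] if not problems else []
    return problems, services
```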

Management Cluster Setup

When the OCFS2 file system on the shared iSCSI LUN is ready, and the o2cb services have started successfully, the management nodes can join the cluster. In the meantime, the first management node has also started the second management node, which will come up with an identical configuration. Both management nodes eventually join the cluster, but the first management node will take an exclusive lock on the shared OCFS2 file system using Distributed Lock Management (DLM). The second management node remains in permanent standby and takes over the lock only in case the first management node goes down or otherwise releases its lock.
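The mutual exclusion described above can be illustrated on a single Linux host with an exclusive, non-blocking file lock. The Python sketch below uses fcntl.flock on a local file as a stand-in for DLM on the shared OCFS2 file system; it shows only the "first holder wins, the other waits until release" behavior and says nothing about how OCFS2 or DLM are implemented. It is Unix-only, and the lock file name is hypothetical.

```python
import fcntl
import os
import tempfile
from typing import IO, Optional


def try_take_lock(path: str) -> Optional[IO[str]]:
    """Try to take an exclusive, non-blocking lock on path.

    Returns the open file (keep it open to hold the lock) or None if another
    holder already owns the lock.
    """
    handle = open(path, "a")
    try:
        fcntl.flock(handle, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        handle.close()
        return None
    return handle


if __name__ == "__main__":
    lock_path = os.path.join(tempfile.mkdtemp(), "mgmt-cluster.lock")
    first = try_take_lock(lock_path)    # the "first management node" wins
    second = try_take_lock(lock_path)   # the "second" one stays in standby
    print("first holds lock:", first is not None, "| second holds lock:", second is not None)
    first.close()                       # releasing the lock (node goes down)
    second = try_take_lock(lock_path)   # standby now takes over
    print("second holds lock after release:", second is not None)
    second.close()
```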

With mutual exclusion established between both members of the management cluster, the active management node continues to load the remaining Oracle Private Cloud Appliance services, including dhcpd, Oracle VM Manager and the Oracle Private Cloud Appliance databases. The virtual IP address of the management cluster is also brought online, and the Oracle Private Cloud Appliance Dashboard is activated. The static Apache web server now redirects to the Dashboard at the virtual IP, where the administrator can access a live view of the appliance rack component status.

Once the dhcpd service is started, the system state changes to Provision Readiness, which means it is ready to discover non-infrastructure components.

1.4.2 Compute Node Discovery and Provisioning

Node Manager

To discover compute nodes, the Node Manager on the active management node uses a DHCP server and the node database. The node database is a BerkeleyDB database, located on the management NFS share, containing the state and configuration details of each node in the system, including MAC addresses, IP addresses and host names. The discovery process of a node begins with a DHCP request from the ILOM. Most discovery and provisioning actions are synchronous and occur sequentially, while time-consuming installation and configuration processes are launched in parallel and asynchronously. The DHCP server hands out pre-assigned IP addresses on the appliance administration network (192.168.4.0/24). When the Node Manager has verified that a node has a valid service tag for use with Oracle Private Cloud Appliance, it launches a series of provisioning tasks. All required software resources have been loaded onto the ZFS storage appliance at the factory.
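The discovery step can be pictured as a lookup keyed by MAC address. In the Python sketch below, the node database is modeled as a plain dictionary; the MAC address, the IP address, the field names and the "rejected" state are hypothetical, while "new" is the state named in Section 1.4.3 for nodes entering provisioning. The real node database is a BerkeleyDB that must never be edited manually; this sketch only illustrates the flow.

```python
import ipaddress
from typing import Dict, Optional

ADMIN_NETWORK = ipaddress.ip_network("192.168.4.0/24")


def handle_ilom_dhcp_request(
    node_db: Dict[str, dict], mac: str, has_valid_service_tag: bool
) -> Optional[str]:
    """Answer a DHCP request from an ILOM with the node's pre-assigned address."""
    entry = node_db.get(mac.lower())
    if entry is None:
        return None  # unknown MAC: nothing pre-assigned in this sketch
    ip = ipaddress.ip_address(entry["ip"])
    if ip not in ADMIN_NETWORK:
        raise ValueError(f"{entry['hostname']}: {ip} is not on {ADMIN_NETWORK}")
    # Only nodes with a valid service tag are queued for provisioning.
    entry["state"] = "new" if has_valid_service_tag else "rejected"
    return str(ip)


if __name__ == "__main__":
    # Hypothetical entry; MAC and IP are placeholders.
    db = {"00:10:e0:00:00:07": {"hostname": "ovcacn07r1", "ip": "192.168.4.7", "state": None}}
    print(handle_ilom_dhcp_request(db, "00:10:E0:00:00:07", True), db)
```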

Provisioning Tasks

The provisioning process is tracked in the node database by means of status changes. The next provisioning task can only be started if the node status indicates that the previous task has completed successfully. For each valid node, the Node Manager begins by building a PXE configuration and forces the node to boot using Oracle Private Cloud Appliance runtime services. After the hardware RAID-1 configuration is applied, the node is restarted to perform a kickstart installation of Oracle VM Server. Crucial kernel modules and host drivers are added to the installation. At the end of the installation process, the network configuration files are updated to allow all necessary network interfaces to be brought up.

Once the internal management network exists, the compute node is rebooted one last time to reconfigure the Oracle VM Agent to communicate over this network. At this point, the node is ready for Oracle VM Manager discovery.
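The rule that a task may only start once the previous one has completed successfully is a simple sequential pipeline with a recorded status. The Python sketch below models it; the task names paraphrase the steps described above and are hypothetical, as are the status strings. The run_task callback stands in for whatever actually performs each step.

```python
from typing import Callable, Dict, List

# Hypothetical task names paraphrasing the steps described above.
PROVISIONING_TASKS: List[str] = [
    "build_pxe_config",       # PXE boot into the appliance runtime services
    "apply_raid1",            # hardware RAID-1 configuration
    "kickstart_ovm_server",   # kickstart installation of Oracle VM Server
    "update_network_config",  # bring up all required network interfaces
    "reconfigure_ovm_agent",  # final reboot, agent uses the management network
]


def provision(node: Dict[str, str], run_task: Callable[[str], bool]) -> str:
    """Run the tasks in order, recording status; stop at the first failure."""
    for task in PROVISIONING_TASKS:
        node["status"] = f"{task}:running"
        if not run_task(task):
            node["status"] = f"{task}:failed"
            return node["status"]
        node["status"] = f"{task}:done"
    node["status"] = "ready_for_ovmm_discovery"
    return node["status"]
```

Because the status is written before and after every step, a failed node is left in a state that names the step that failed, which is what allows provisioning to be tracked per node.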

As the Oracle VM environment grows and contains more and more virtual machines and many different VLANs connecting them, the number of management operations and registered events increases rapidly. In a system with this much activity the provisioning of a compute node takes significantly longer, because the provisioning tasks run through the same management node where Oracle VM Manager is active. There is no impact on functionality, but the provisioning tasks can take several hours to complete. It is recommended to perform compute node provisioning at a time when system activity is at its lowest.

1.4.3 Server Pool Readiness

Oracle VM Server Pool

When the Node Manager detects a fully installed compute node that is ready to join the Oracle VM environment, it issues the necessary Oracle VM CLI commands to add the new node to the Oracle VM server pool. With the discovery of the first node, the system also configures the clustered Oracle VM server pool with the appropriate networking and access to the shared storage. For every compute node added to Oracle VM Manager, the IPMI configuration is stored to enable convenient remote power-on/off.

Oracle Private Cloud Appliance expects that all compute nodes in one rack initially belong to a single clustered server pool with High Availability (HA) and Distributed Resource Scheduling (DRS) enabled. When all compute nodes have joined the Oracle VM server pool, the appliance is in Ready state, meaning virtual machines (VMs) can be deployed.
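The Ready condition can be expressed as a set comparison: every compute node must be a member of the server pool. The Python sketch below is a hypothetical status check, not an appliance API. It reports which nodes are still missing and, as an assumption of this sketch, flags HA or DRS being disabled as warnings rather than as a condition for the Ready state.

```python
from typing import Iterable, List, Set, Tuple


def server_pool_status(
    compute_nodes: Iterable[str],
    pool_members: Set[str],
    ha_enabled: bool,
    drs_enabled: bool,
) -> Tuple[str, List[str]]:
    """Return (state, warnings) for the single clustered server pool."""
    nodes = set(compute_nodes)
    missing = sorted(nodes - set(pool_members))
    if not nodes:
        state = "no compute nodes provisioned"
    elif missing:
        state = "waiting for: " + ", ".join(missing)
    else:
        state = "Ready"
    # HA and DRS are expected to be enabled; report them without blocking Ready.
    warnings = [
        f"{feature} is not enabled on the server pool"
        for feature, enabled in (("HA", ha_enabled), ("DRS", drs_enabled))
        if not enabled
    ]
    return state, warnings
```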

Expansion Compute Nodes

When an expansion compute node is installed, its presence is detected based on the DHCP request from its ILOM. If the new server is identified as an Oracle Private Cloud Appliance node, an entry is added in the node database with "new" state. This triggers the initialization and provisioning process. New compute nodes are integrated seamlessly to expand the capacity of the running system, without the need for manual reconfiguration by an administrator.

Synchronization Service

As part of the provisioning process, a number of configuration settings are applied, either globally or at individual component level. Some are visible to the administrator, and some are entirely internal to the system. Throughout the life cycle of the appliance, software updates, capacity extensions and configuration changes will occur at different points in time. For example, an expansion compute node may have different hardware, firmware, software, configuration and passwords compared to the servers already in use in the environment, and it comes with factory default settings that do not match those of the running system. A synchronization service, implemented on the management nodes, can set and maintain configurable parameters across heterogeneous sets of components within an Oracle Private Cloud Appliance environment. It facilitates the integration of new system components in case of capacity expansion or servicing, and allows the administrator to streamline the process when manual intervention is required. The CLI provides an interface to the exposed functionality of the synchronization service.
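At its core, keeping parameters consistent across heterogeneous components means comparing each component's current settings with the desired values and changing only what differs. The Python sketch below computes such a plan. It is an illustration of that idea only; the parameter names, values and the expansion node name are hypothetical, and the actual synchronization service is driven through the CLI, not through code like this.

```python
from typing import Any, Dict


def plan_sync(
    desired: Dict[str, Any], components: Dict[str, Dict[str, Any]]
) -> Dict[str, Dict[str, Any]]:
    """Return, per component, only the parameters that must change."""
    plan = {}
    for name, current in components.items():
        changes = {key: value for key, value in desired.items() if current.get(key) != value}
        if changes:
            plan[name] = changes
    return plan


if __name__ == "__main__":
    desired = {"ntp_server": "192.0.2.10", "timezone": "UTC"}  # hypothetical parameters
    components = {
        "ovcacn07r1": {"ntp_server": "192.0.2.10", "timezone": "UTC"},
        "new-expansion-node": {"ntp_server": "factory-default", "timezone": "UTC"},
    }
    print(plan_sync(desired, components))
```

Computing a plan first, instead of rewriting every setting, leaves components that are already in sync untouched and makes the remaining differences visible to the administrator.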

1.5 High Availability

The Oracle Private Cloud Appliance is designed for high availability at every level of its component make-up.

Management Node Failover

During the factory installation of an Oracle Private Cloud Appliance, the management nodes are configured as a cluster. The cluster relies on an OCFS2 file system exported as an iSCSI LUN from the ZFS storage appliance to perform the heartbeat function and to store a lock file that each management node attempts to take control of. The management node that has control over the lock file automatically becomes the active node in the cluster.

When the Oracle Private Cloud Appliance is first initialized, the o2cb service is started on each management node. This service is the default cluster stack for the OCFS2 file system. It includes a node manager that keeps track of the nodes in the cluster, a heartbeat agent to detect live nodes, a network agent for intra-cluster node communication and a distributed lock manager to keep track of lock resources. All these components are in-kernel.

Additionally, the ovca service is started on each management node. The management node that obtains control over the cluster lock, and is thereby promoted to the active management node, runs the full complement of Oracle Private Cloud Appliance services. This process also configures the Virtual IP that is used to access the active management node, so that it is 'up' on the active management node and 'down' on the standby management node. This ensures that, when attempting to connect to the Virtual IP address that you configured for the management nodes, you are always accessing the active management node.

In the case where the active management node fails, the cluster detects the failure and the lock is released. Since the standby management node is constantly polling for control over the lock file, it detects when it has control of this file and the ovca service brings up all of the required Oracle Private Cloud Appliance services. On the standby management node, the Virtual IP is configured on the appropriate interface as it is promoted to the active role.

When the management node that failed comes back online, it no longer has control of the cluster lock file. It is automatically put into standby mode, and the Virtual IP is removed from the management interface. This means that one of the two management nodes in the rack is always available through the same IP address and is always correctly configured. The management node failover process takes up to 5 minutes to complete.
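The failover behavior described above follows one rule: the Virtual IP always follows the cluster lock. The Python sketch below models that rule with two hypothetical nodes, mn1 and mn2. It captures only the role and Virtual IP bookkeeping (including the fact that a recovered node stays in standby), not the heartbeat detection or the five-minute service startup.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ManagementNode:
    name: str
    alive: bool = True
    role: str = "standby"
    vip_up: bool = False


def assign_lock(nodes: List[ManagementNode], owner: Optional[str]) -> None:
    """Give the cluster lock to `owner`; the Virtual IP follows the lock."""
    for node in nodes:
        is_owner = node.name == owner
        node.role = "active" if is_owner else "standby"
        node.vip_up = is_owner


def fail_over(nodes: List[ManagementNode], owner: str) -> Optional[str]:
    """Return the lock owner after checking whether the current owner is alive."""
    current = next(node for node in nodes if node.name == owner)
    if current.alive:
        return owner  # nothing to do: the lock is still held
    # The lock is released; the first live standby that polls for it takes it.
    for node in nodes:
        if node.alive and node.name != owner:
            assign_lock(nodes, node.name)
            return node.name
    assign_lock(nodes, None)  # no live node left to take the lock
    return None


if __name__ == "__main__":
    mn = [ManagementNode("mn1"), ManagementNode("mn2")]
    assign_lock(mn, "mn1")
    mn[0].alive = False            # active node fails
    owner = fail_over(mn, "mn1")   # standby is promoted
    mn[0].alive = True             # failed node comes back ...
    owner = fail_over(mn, owner)   # ... but stays in standby
    print(owner, [(n.name, n.role, n.vip_up) for n in mn])
```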

Oracle VM Management Database Failover

The Oracle VM Manager database files are located on a shared file system exposed by the ZFS storage appliance. The active management node runs the MySQL database server, which accesses the database files on the shared storage. In the event that the management node fails, the standby management node is promoted and the MySQL database server on the promoted node is started so that the service can resume as normal. The database contents are available to the newly running MySQL database server.

Compute Node Failover

High availability (HA) of compute nodes within the Oracle Private Cloud Appliance is enabled through the clustered server pool that is created automatically in Oracle VM Manager during the compute node provisioning process. Since the server pool is configured as a cluster using an underlying OCFS2 file system, HA-enabled virtual machines running on any compute node can be migrated and restarted automatically on an alternate compute node in the event of failure.

The Oracle VM Concepts Guide provides good background information about the principles of high availability. Refer to the section How does High Availability (HA) Work?.

Storage Redundancy

Further redundancy is provided through the use of the ZFS storage appliance to host storage. This component is configured with RAID-1 providing integrated redundancy and excellent data loss protection. Furthermore, the storage appliance includes two storage heads or controllers that are interconnected in a clustered configuration. The pair of controllers operate in an active-passive configuration (all data pools and data interfaces owned by only one head in the cluster), meaning continuation of service is guaranteed in the event that one storage head should fail. The storage heads share a single IP in the storage subnet, but both have an individual management IP address for convenient maintenance access.

Network Redundancy

All of the customer-usable networking within the Oracle Private Cloud Appliance is configured for redundancy. Only the internal administrative Ethernet network, which is used for initialization and ILOM connectivity, is not redundant. There are two of each switch type to ensure that there is no single point of failure. Networking cabling and interfaces are equally duplicated and switches are interconnected as described in Section 1.2.4, “Network Infrastructure”.

1.6 Oracle Private Cloud Appliance Backup

The configuration of all components within Oracle Private Cloud Appliance is automatically backed up and stored on the ZFS storage appliance as a set of archives. Backups are named with a time stamp for when the backup is run.

During initialization, a crontab entry is created on each management node to perform a global backup twice in every 24 hours. The first backup runs at 09h00 and the second at 21h00. Only the active management node actually runs the backup process when it is triggered.
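In standard cron notation, a schedule of 09h00 and 21h00 every day corresponds to the time fields 0 9,21 * * *; the actual crontab entry on the management nodes is not reproduced here. The Python sketch below computes when the next scheduled backup will run, which can be useful when planning maintenance around it. The function name is hypothetical.

```python
from datetime import datetime, timedelta

BACKUP_HOURS = (9, 21)  # 09h00 and 21h00, as described above


def next_backup(now: datetime) -> datetime:
    """Return the next scheduled backup time strictly after `now`."""
    for hour in BACKUP_HOURS:
        candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
        if candidate > now:
            return candidate
    # Past 21h00: the next run is 09h00 on the following day.
    tomorrow = now + timedelta(days=1)
    return tomorrow.replace(hour=BACKUP_HOURS[0], minute=0, second=0, microsecond=0)
```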

To trigger a backup outside of the default schedule, use the Command Line Interface. For details, refer to Section 4.2.8, “backup”.

Backups are stored on the MGMT_ROOT file system on the ZFS storage appliance and are accessible on each management node at /nfs/shared_storage/backups. When the backup process is triggered, it creates a temporary directory named with the time stamp for the current backup process. The entire directory is archived in a *.tar.bz2 file when the process is complete. Within this directory several subdirectories are also created:

- data_net_switch: used only on systems with Ethernet-based network architecture; contains the configuration data of the spine and leaf switches.
- mgmt_net_switch: used only on systems with Ethernet-based network architecture; contains the management switch configuration data.
- nm2: used only on systems with InfiniBand-based network architecture; contains the NM2-36P Sun Datacenter InfiniBand Expansion Switch configuration data.
- opus: used only on systems with InfiniBand-based network architecture; contains the Oracle Switch ES1-24 configuration data.
- ovca: contains all of the configuration information relevant to the deployment of the management nodes, such as the password wallet, the network configuration of the management nodes, configuration databases for the Oracle Private Cloud Appliance services, and DHCP configuration.
- ovmm: contains the most recent backup of the Oracle VM Manager database, the actual source data files for the current database, and the UUID information for the Oracle VM Manager installation. Note that the actual backup process for the Oracle VM Manager database is handled automatically from within Oracle VM Manager. Manual backup and restore are described in detail in the section "Backing up and Restoring Oracle VM Manager" in the Oracle VM Manager Administration Guide.
- ovmm_upgrade: contains essential information for each upgrade attempt. When an upgrade is initiated, a time-stamped subdirectory is created to store the preinstall.log file with the output from the pre-upgrade checks. Backups of any other files modified during the pre-upgrade process are also saved in this directory.
- xsigo: used only on systems with InfiniBand-based network architecture; contains the configuration data for the Fabric Interconnects.
- zfssa: contains all of the configuration information for the ZFS storage appliance.
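The backup procedure described above (a time-stamped working directory, one subdirectory per component, and a final *.tar.bz2 archive) can be sketched with Python's standard `tarfile` module. This is an illustration under stated assumptions: `create_backup` and `STAMP_FORMAT` are hypothetical, the subdirectories are left empty instead of being filled with real configuration data, and removing the temporary directory after archiving is assumed rather than documented.

```python
import shutil
import tarfile
import tempfile
from datetime import datetime
from pathlib import Path

# Subdirectories listed above. Which switch subdirectories appear depends on
# the network architecture (Ethernet-based or InfiniBand-based).
COMMON_SUBDIRS = ["ovca", "ovmm", "ovmm_upgrade", "zfssa"]
ETHERNET_SUBDIRS = ["data_net_switch", "mgmt_net_switch"]
INFINIBAND_SUBDIRS = ["nm2", "opus", "xsigo"]

# Hypothetical time-stamp format; the appliance's actual naming may differ.
STAMP_FORMAT = "%Y_%m_%d-%H.%M.%S"


def create_backup(backup_root: Path, now: datetime, ethernet: bool = True) -> Path:
    """Create a time-stamped working directory, archive it as .tar.bz2, return the archive path."""
    stamp = now.strftime(STAMP_FORMAT)
    workdir = backup_root / stamp
    subdirs = COMMON_SUBDIRS + (ETHERNET_SUBDIRS if ethernet else INFINIBAND_SUBDIRS)
    for name in subdirs:
        # Placeholder: a real collector would copy the component's configuration here.
        (workdir / name).mkdir(parents=True, exist_ok=True)
    archive = backup_root / f"{stamp}.tar.bz2"
    with tarfile.open(archive, "w:bz2") as tar:
        tar.add(workdir, arcname=stamp)  # adds the directory recursively
    # Assumption: the temporary directory is removed once it has been archived.
    shutil.rmtree(workdir)
    return archive


if __name__ == "__main__":
    # Demo in a scratch directory rather than /nfs/shared_storage/backups.
    with tempfile.TemporaryDirectory() as tmp:
        print(create_backup(Path(tmp), datetime.now()))
```

Keeping one subdirectory per component inside the archive means a single component can be extracted and restored without unpacking the others.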

The backup process collects data for each component in the appliance and ensures that it is stored in a way that makes it easy to restore that component to operation in the case of failure [1].

Taking regular backups is standard operating procedure for any production system. The internal backup mechanism cannot protect against full system failure, site outage or disaster. Therefore, you should consider implementing a backup strategy to copy key system data to external storage. This requires what is often referred to as a bastion host: a dedicated system configured with specific internal and external connections.
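As a sketch of the export step, the following Python function copies any archive from the internal backup directory that is not yet present on external storage. It assumes that the bastion host can read /nfs/shared_storage/backups (for example over an NFS mount) and write to an external destination; the function name `export_new_archives` and the destination path are hypothetical, and the Oracle Private Cloud Appliance Backup Guide remains the authoritative reference for the supported procedure.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List

# Internal backup location on the management nodes (from the text above).
BACKUP_DIR = Path("/nfs/shared_storage/backups")
# Hypothetical external target reachable from the bastion host.
EXTERNAL_DIR = Path("/mnt/external_backups")


def export_new_archives(source: Path, destination: Path) -> List[Path]:
    """Copy every *.tar.bz2 archive in `source` that is not yet in `destination`."""
    destination.mkdir(parents=True, exist_ok=True)
    copied = []
    for archive in sorted(source.glob("*.tar.bz2")):
        target = destination / archive.name
        if not target.exists():
            shutil.copy2(archive, target)  # copy2 also preserves file timestamps
            copied.append(target)
    return copied


if __name__ == "__main__":
    # On a real bastion host: export_new_archives(BACKUP_DIR, EXTERNAL_DIR)
    # Demo against temporary directories instead of the real paths:
    with tempfile.TemporaryDirectory() as src, tempfile.TemporaryDirectory() as dst:
        (Path(src) / "example.tar.bz2").write_bytes(b"")
        print(export_new_archives(Path(src), Path(dst)))
```

Copying only archives that are missing at the destination makes the job safe to run repeatedly, for example on the same 09h00/21h00 cadence as the internal backups.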

For a detailed description of the backup contents, and for guidelines to export internal backups outside the appliance, refer to the Oracle technical paper entitled Oracle Private Cloud Appliance Backup Guide.

1.7 Oracle Private Cloud Appliance Upgrader

Together with Oracle Private Cloud Appliance Controller Software Release 2.3.4, a new independent upgrade tool was introduced: the Oracle Private Cloud Appliance Upgrader. It is provided as a separate application, with its own release and update schedule. It retains the phased approach, in which management nodes, compute nodes and other rack components are updated in separate procedures, while grouping and automating sets of tasks that were previously executed as scripted or manual steps. The new design has better error handling and protection against terminal crashes, SSH timeouts or inadvertent user termination. It is intended to reduce complexity and improve the overall upgrade experience.

The Oracle Private Cloud Appliance Upgrader was built as a modular framework. Each module consists of pre-checks, an execution phase such as upgrade or install, and post-checks. Besides the standard interactive mode, modules also provide a silent mode for programmatic use, and a verify-only mode to run the pre-checks without starting the execution phase.
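The module structure described above (pre-checks, an execution phase, post-checks, and three run modes) can be illustrated with a small Python sketch. The class, enum, and mode names are hypothetical and do not correspond to the Upgrader's actual code or command-line options; the sketch only shows how verify-only mode stops after the pre-checks and how silent mode skips the confirmation prompt that interactive mode presents.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class Mode(Enum):
    INTERACTIVE = "interactive"  # asks the administrator before executing
    SILENT = "silent"            # no prompts, for programmatic use
    VERIFY_ONLY = "verify"       # run pre-checks, skip the execution phase


Check = Callable[[], bool]


@dataclass
class Module:
    name: str
    pre_checks: List[Check]
    execute: Callable[[], None]
    post_checks: List[Check] = field(default_factory=list)

    def run(self, mode: Mode, confirm: Callable[[str], bool] = lambda msg: True) -> str:
        """Run pre-checks, then (depending on mode) the execution phase and post-checks."""
        if not all(check() for check in self.pre_checks):
            return "pre-checks failed"
        if mode is Mode.VERIFY_ONLY:
            return "pre-checks passed"
        # Interactive mode asks before executing; silent mode proceeds without prompting.
        if mode is Mode.INTERACTIVE and not confirm(f"Proceed with {self.name}?"):
            return "cancelled"
        self.execute()
        if not all(check() for check in self.post_checks):
            return "post-checks failed"
        return "completed"
```

Separating the checks from the execution phase is what makes a verify-only mode cheap to offer: it is the same module, simply stopped before anything is changed.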

The first module developed within the Oracle Private Cloud Appliance Upgrader framework is the management node upgrade. With a single command, it guides the administrator through the pre-upgrade validation steps (now included in the pre-checks of the Upgrader), software image deployment, Oracle Private Cloud Appliance Controller Software update, Oracle Linux operating system Yum update, and Oracle VM Manager upgrade.
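The ordering of the management node upgrade can be summarized as a sequence of phases. This is a hypothetical Python sketch: the phase callables are placeholders, and the stop-at-first-failure behavior is an assumption used for illustration, not a description of how the Upgrader handles errors internally.

```python
from typing import Callable, List, Tuple

# Phases of the management node upgrade, in the order given above.
PHASES = [
    "pre-upgrade validation (pre-checks)",
    "software image deployment",
    "Controller Software update",
    "Oracle Linux Yum update",
    "Oracle VM Manager upgrade",
]


def run_phases(steps: List[Tuple[str, Callable[[], bool]]]) -> Tuple[List[str], str]:
    """Run steps in order and stop at the first failure.

    Returns (names of completed phases, name of the failed phase or "" if none failed).
    """
    completed: List[str] = []
    for name, step in steps:
        if not step():
            return completed, name
        completed.append(name)
    return completed, ""
```

Reporting which phase failed, together with the phases already completed, tells the administrator where to resume after correcting the problem.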

For software update instructions, see Chapter 3, Updating Oracle Private Cloud Appliance.

For specific Oracle Private Cloud Appliance Upgrader details, see Section 3.2, “Using the Oracle Private Cloud Appliance Upgrader”.