Oracle OpenStack for Oracle Linux uses groups to associate the target nodes with OpenStack services. Target nodes in the same group run the same OpenStack services. The default groups are:

control: Contains the control-related services, such as glance, keystone, ndbcluster, nova, and rabbitmq.

compute: Contains the hypervisor part of the compute services, such as nova-compute.

database: Contains the data part of the database services.

network: Contains the shared network services, such as neutron-server, neutron-agents, and neutron-plugins.

storage: Contains the storage part of storage services, such as cinder and swift.

A node can belong to more than one group and can run multiple OpenStack services.
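To illustrate how group membership determines which services a node runs, the following Python sketch models the default groups as a mapping from group name to services. The service lists contain only the examples named above and are not complete. The node names (`node1`, `node2`) and the `services_for_node` helper are hypothetical and are not part of Oracle OpenStack for Oracle Linux.

```python
from typing import Dict, List, Set

# Default groups with the example services named above (illustrative, not complete).
DEFAULT_GROUPS: Dict[str, List[str]] = {
    "control": ["glance", "keystone", "ndbcluster", "nova", "rabbitmq"],
    "compute": ["nova-compute"],
    "database": [],  # the data part of the database services; none named here
    "network": ["neutron-server", "neutron-agents", "neutron-plugins"],
    "storage": ["cinder", "swift"],
}


def services_for_node(layout: Dict[str, Set[str]], node: str) -> Set[str]:
    """Hypothetical helper: union of the services of every group the node belongs to."""
    services: Set[str] = set()
    for group in layout.get(node, set()):
        services.update(DEFAULT_GROUPS.get(group, []))
    return services


# Hypothetical layout: node1 belongs to two groups, node2 to one.
layout = {
    "node1": {"control", "network"},
    "node2": {"compute"},
}

print(sorted(services_for_node(layout, "node2")))  # ['nova-compute']
```

Because `node1` belongs to two groups, it runs the union of both groups' services.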

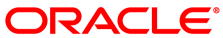

The minimum supported deployment of OpenStack contains at least three nodes (as shown in Figure 2.1):

Two controller nodes, each of which belongs to the control, database, network, and storage groups.

One or more nodes belonging to the compute group.

Single-node deployments (sometimes referred to as all-in-one deployments) are not supported.
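The minimum deployment can be expressed as a node-to-group mapping. The following Python sketch checks a layout against the minimum described above: at least two nodes that each belong to the control, database, network, and storage groups, plus at least one compute node that is separate from them. The node names and the `meets_minimum` function are hypothetical; this illustrates the documented minimum and is not a tool shipped with the product.

```python
from typing import Dict, Set

# Groups that each controller node belongs to in the minimum deployment.
CONTROLLER_GROUPS: Set[str] = {"control", "database", "network", "storage"}


def meets_minimum(layout: Dict[str, Set[str]]) -> bool:
    """Hypothetical helper: True if the layout has the documented minimum shape.

    - at least two nodes that belong to all four controller groups
    - at least one compute node that is not also in the control group
    """
    controllers = [n for n, groups in layout.items() if CONTROLLER_GROUPS <= groups]
    computes = [
        n for n, groups in layout.items()
        if "compute" in groups and "control" not in groups
    ]
    return len(controllers) >= 2 and len(computes) >= 1


# The three-node layout from Figure 2.1 (node names are hypothetical).
minimum = {
    "ctrl1": {"control", "database", "network", "storage"},
    "ctrl2": {"control", "database", "network", "storage"},
    "comp1": {"compute"},
}

# An all-in-one layout: one node in every group, which is not supported.
all_in_one = {"aio": {"control", "database", "network", "storage", "compute"}}

print(meets_minimum(minimum))     # True
print(meets_minimum(all_in_one))  # False
```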

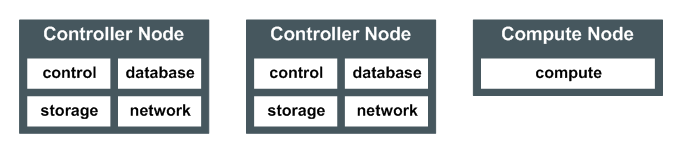

As your scaling and performance requirements change, you can increase the number of nodes and move groups onto separate nodes to spread the workload, as shown in Figure 2.2:

As your deployment expands, note the following rules for deployment:

The nodes in the compute group must not be assigned to the control group.

The control group must contain at least two nodes.

The number of nodes in the database group must always be a multiple of two.

Each group must contain at least two nodes to enable high availability.
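The deployment rules above lend themselves to an automated check. The following Python sketch validates a hypothetical node-to-group mapping and returns one message per rule that the layout breaks. The `check_rules` function, its `require_ha` parameter, and the node names are assumptions for illustration only; the sketch simply encodes the four rules as listed.

```python
from collections import defaultdict
from typing import Dict, List, Set


def check_rules(layout: Dict[str, Set[str]], require_ha: bool = True) -> List[str]:
    """Hypothetical helper: return the rule violations for a node-to-group layout."""
    # Invert the layout into group -> member nodes.
    members: Dict[str, Set[str]] = defaultdict(set)
    for node, groups in layout.items():
        for group in groups:
            members[group].add(node)

    problems: List[str] = []

    # Rule 1: nodes in the compute group must not be in the control group.
    overlap = members["compute"] & members["control"]
    if overlap:
        problems.append(f"compute nodes also in control group: {sorted(overlap)}")

    # Rule 2: the control group must contain at least two nodes.
    if len(members["control"]) < 2:
        problems.append("control group has fewer than two nodes")

    # Rule 3: the number of database nodes must be a multiple of two.
    if len(members["database"]) % 2 != 0:
        problems.append("database group size is not a multiple of two")

    # Rule 4: every non-empty group needs at least two nodes for high availability.
    if require_ha:
        for group, nodes in sorted(members.items()):
            if 0 < len(nodes) < 2:
                problems.append(f"group {group!r} has fewer than two nodes (no HA)")

    return problems


layout = {
    "ctrl1": {"control", "database", "network", "storage"},
    "ctrl2": {"control", "database", "network", "storage"},
    "comp1": {"compute"},
    "comp2": {"compute"},
}
print(check_rules(layout))  # []

bad = {
    "node1": {"control", "compute", "database"},
    "node2": {"database"},
    "node3": {"database"},
}
for problem in check_rules(bad):
    print(problem)
```

Passing `require_ha=False` skips only the last rule, which is one way to check a layout such as the three-node minimum deployment, where the compute group has a single node.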

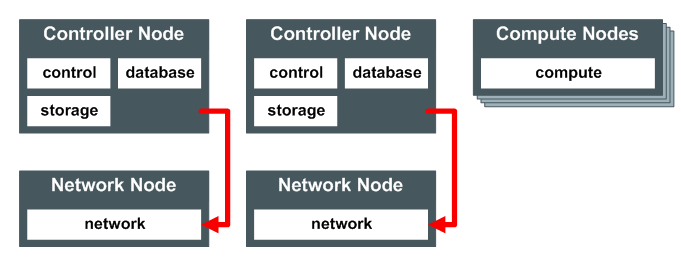

There is no limit on the number of nodes in a deployment. Figure 2.3 shows a fully expanded deployment using the default groups. Each group has at least two nodes to maintain high availability, and the number of database nodes is a multiple of two.

You are not restricted to using the default groups. You can change the services a group runs, or configure your own groups. If you configure your own groups, make sure they follow the deployment rules listed above.