Determine the Data Storage Requirements

Before you can migrate data from a source system to a target system, you need to determine the amount of storage required to hold the data. The assessment approach differs based on the source system configuration, but these are typical determinations:

- Total storage capacity and topology – Used to configure equivalent storage on the target system.

- Data management type (or file system type) – Used to determine which commands you can use to gather storage capacity (described in this section). It is also used to determine which commands to use for the migration (see Migrating Data with Oracle Solaris Commands).

- Disk capacity used by the data – Used to calculate the amount of space needed in the shared storage that is used during the migration (see Prepare Shared Storage).

To keep track of the storage information, you can record it in a worksheet.

- Identify the storage on the source system.

You can use the format utility to list the disks and the disk names that are used in other commands.

    root@Source# format
    AVAILABLE DISK SELECTIONS:
           0. c0t0d0 <SUN146G cyl 14087 alt 2 hd 24 sec 848>
              /pci@0,600000/pci@0/scsi@1/sd@0,0
           1. c0t1d0 <SEAGATE-ST960004SSUN600G-0115 cyl 64986 alt 2 hd 27 sec 668>
              /pci@0,600000/pci@0/scsi@1/sd@1,0
           2. c1t0d0 <SUN600G cyl 64986 alt 2 hd 27 sec 668>
              /pci@24,600000/pci@0/scsi@1/sd@0,0
           3. c1t1d0 <SUN600G cyl 64986 alt 2 hd 27 sec 668>
              /pci@24,600000/pci@0/scsi@1/sd@1,0
           4. c2t600144F0E635D8C700005AC7C46F0017d0 <SUN-ZFSStorage7420-1.0 cyl 8124 alt 2 hd 254 sec 254>
              /scsi_vhci/ssd@g600144f0e635d8c700005ac7c46f0017
           5. c2t600144F0E635D8C700005AC7C4920018d0 <SUN-ZFSStorage7420-1.0 cyl 8124 alt 2 hd 254 sec 254>
              /scsi_vhci/ssd@g600144f0e635d8c700005ac7c4920018
           6. c2t600144F0E635D8C700005AC56A6A0012d0 <SUN-ZFS Storage 7420-1.0-300.00GB>
              /scsi_vhci/ssd@g600144f0e635d8c700005ac56a6a0012
           7. c2t600144F0E635D8C700005AC56A460011d0 <SUN-ZFS Storage 7420-1.0-300.00GB>
              /scsi_vhci/ssd@g600144f0e635d8c700005ac56a460011
           8. c2t600144F0E635D8C700005AC56AB30013d0 <SUN-ZFS Storage 7420-1.0-1200.00GB>
              /scsi_vhci/ssd@g600144f0e635d8c700005ac56ab30013
           9. c2t600144F0E635D8C700005AC56ADA0014d0 <SUN-ZFS Storage 7420-1.0-1200.00GB>
              /scsi_vhci/ssd@g600144f0e635d8c700005ac56ada0014
          10. c2t600144F0E635D8C700005AC56B2E0016d0 <SUN-ZFS Storage 7420-1.0-200.00GB>
              /scsi_vhci/ssd@g600144f0e635d8c700005ac56b2e0016
          11. c2t600144F0E635D8C700005AC56B080015d0 <SUN-ZFS Storage 7420-1.0-200.00GB>
              /scsi_vhci/ssd@g600144f0e635d8c700005ac56b080015
    CTRL-C

- Determine the file system types used by the application data.

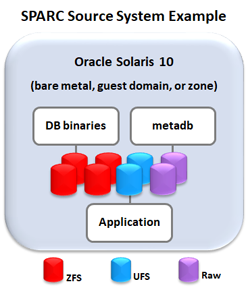

The application data might use a combination of file system or data management types. For example, the application might be on UFS, the database binaries on ZFS, and the metadb data on a raw file system.

These commands list the file system types.

- fstyp /dev/rdsk/device_name (where device_name is the cNtNdNsN name, such as c2t600144F0E635D8C700005AC7C46F0017d0s6)

- mount -v

Refer to the fstyp(1M) man page and the mount(1M) man page.

You can also use any available commands and utilities that are provided by your data application.

- Identify the amount of storage used by the application data.

The commands used to gather storage usage depend on the file system type. The following table lists Oracle Solaris commands that are used to gather storage usage and topology information. Some examples are shown after the table.

| Data Management Type | Space Assessment Commands | Man Page |
|---|---|---|
| UFS | df -h | |
| | mount | |
| | prtvtoc /dev/rdsk/device_name | |
| SVM | df -h | |
| | metastat | |
| | metastat -p | |
| | metaset | |
| | mount | |
| ZFS | df -h | Also see: Understanding How ZFS Calculates Used Space (Doc ID 1369456.1) from https://support.oracle.com |
| | mount | |
| | zpool list | |
| ASM and raw disks | asmcmd | |
| | ASMCMD> lsdg | |
| | ASMCMD> lsdsk -p -G DATA | |
| | iostat -En device_name | |
| | prtvtoc /dev/rdsk/device_name | |
| | sqlplus / as sysasm | |
| | SQL> select path, name from v$asm_disk; | |

Examples

- Identifying the amount of storage used by the ZFS file systems.

Sum the sizes under the SIZE column to determine the total amount of storage allocated.

The values in the ALLOC column are used to calculate the amount of storage that is needed in shared storage and on the target system.

    root@SourceGlobal# zpool list
    NAME               SIZE  ALLOC   FREE  CAP  HEALTH  ALTROOT
    dbzone             298G  16.4G   282G   5%  ONLINE  -
    dbzone_db_binary   149G  22.2G   127G  14%  ONLINE  -
    rpool              556G   199G   357G  35%  ONLINE  -
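The column sums described for the zpool list output can be sketched in shell. This is a minimal sketch, not a definitive procedure: the function name sum_zpool is hypothetical, the here-document stands in for live zpool list output, and the script assumes every value carries a K, M, G, or T suffix. It uses an awk function, so on Oracle Solaris the legacy awk might need to be replaced with nawk or /usr/xpg4/bin/awk.

```shell
#!/bin/sh
# Sum the SIZE and ALLOC columns of `zpool list` output, reported in GB.
# Assumption: each value ends in K, M, G, or T (as in the example output).

sum_zpool() {
    awk '
        # Convert a value such as "16.4G" to gigabytes.
        function to_gb(v,    n, u) {
            u = substr(v, length(v), 1)
            n = substr(v, 1, length(v) - 1) + 0
            if (u == "T") return n * 1024
            if (u == "G") return n
            if (u == "M") return n / 1024
            if (u == "K") return n / (1024 * 1024)
            return 0    # unrecognized suffix: not counted
        }
        NR > 1 { size += to_gb($2); alloc += to_gb($3) }   # skip the header row
        END { printf "SIZE total: %.1fG  ALLOC total: %.1fG\n", size, alloc }
    '
}

# The pool values are copied from the example output above.
totals=$(sum_zpool <<'EOF'
NAME SIZE ALLOC FREE CAP HEALTH ALTROOT
dbzone 298G 16.4G 282G 5% ONLINE -
dbzone_db_binary 149G 22.2G 127G 14% ONLINE -
rpool 556G 199G 357G 35% ONLINE -
EOF
)
echo "$totals"
```

On a live system, the same function could read the command output directly, for example zpool list | sum_zpool.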

- Identifying the amount of storage used by the UFS file systems.

In this example, the database zone logs are on UFS file systems on disks 4 and 5.

The values in the used column are used to calculate the amount of storage that is needed in shared storage and on the target system.

    root@Source# df -h -F ufs
    Filesystem             size   used  avail capacity  Mounted on
    /logs/archivelogs      197G    13G   182G     7%    /logs/archivelogs
    /logs/redologs         9.8G   1.2G   8.6G    13%    /logs/redologs
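Totaling the used column can be sketched the same way. The following is a minimal sketch under stated assumptions: the function name sum_used is hypothetical, the here-document reproduces the df output shown in this example, each file system is assumed to occupy a single output line, and each value is assumed to end in K, M, G, or T.

```shell
#!/bin/sh
# Total the "used" column (field 3) of `df -h` output, reported in GB.

sum_used() {
    awk '
        NR > 1 {                                      # skip the header row
            unit = substr($3, length($3))             # trailing unit letter
            num  = substr($3, 1, length($3) - 1) + 0  # numeric part
            if (unit == "K")      num = num / (1024 * 1024)
            else if (unit == "M") num = num / 1024
            else if (unit == "T") num = num * 1024
            total += num
        }
        END { printf "%.1fG\n", total }
    '
}

# Values copied from the UFS example above.
used_total=$(sum_used <<'EOF'
Filesystem size used avail capacity Mounted on
/logs/archivelogs 197G 13G 182G 7% /logs/archivelogs
/logs/redologs 9.8G 1.2G 8.6G 13% /logs/redologs
EOF
)
echo "UFS used total: $used_total"
```

For these two file systems the total is 13G plus 1.2G, which is the figure to carry into the shared storage and target sizing.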

- Identifying the amount of storage used by ASM raw disks.

In this example, the ASM raw disks are on slice 0 of disks 10 and 11 (as numbered by the format utility).

The capacities in GB can be extracted from the disk partition table using the following commands.

In this example, each ASM raw disk is approximately 199 GB.

    root@Source# echo $((`prtvtoc /dev/rdsk/c2t600144F0E635D8C700005AC56B2E0016d0s2 | grep " 0 " | awk '{ print $5 }'`*512/1024/1024/1024))
    199
    root@Source# echo $((`prtvtoc /dev/rdsk/c2t600144F0E635D8C700005AC56B080015d0s2 | grep " 0 " | awk '{ print $5 }'`*512/1024/1024/1024))
    199
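The arithmetic in the prtvtoc commands multiplies the sector count of slice 0 by 512 bytes and divides by 1024 three times to reach GB. The sketch below separates those two steps. The function names are hypothetical, and the sample partition table is invented for illustration (its sector counts are not from the source system). It selects slice 0 by matching the first field exactly, because a pattern such as " 0 " can also match other lines, for example a slice whose first sector is 0.

```shell
#!/bin/sh
# Convert a sector count to whole GB, assuming 512-byte sectors.
sectors_to_gb() {
    echo $(( $1 * 512 / 1024 / 1024 / 1024 ))
}

# Print the sector count (field 5) of the line whose partition number
# (field 1) is exactly 0. Comment lines start with "*" and never match.
slice0_sectors() {
    awk '$1 == "0" { print $5 }'
}

# Hypothetical prtvtoc-style output; the numbers are invented.
sample='*                          First     Sector    Last
* Partition  Tag  Flags    Sector     Count    Sector  Mount Directory
       0      4    00        256 417792000 417792255
       8     11    00  417792256     16384 417808639'

sectors=$(printf '%s\n' "$sample" | slice0_sectors)
gb=$(sectors_to_gb "$sectors")
echo "$sectors sectors = $gb GB"
```

On a live system, the sample text would be replaced by the output of prtvtoc for the disk in question. The integer division truncates, so the result is rounded down to whole GB.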

- Listing the SVM metadevice state database (metadb) configuration.

In this example, two internal disks (c0t0d0s4 and c1t0d0s4) are used for redundancy of the metadevice state database.

    root@Source# metadb
            flags           first blk       block count
         a m  pc luo        16              8192            /dev/dsk/c0t0d0s4
         a    pc luo        8208            8192            /dev/dsk/c0t0d0s4
         a    pc luo        16400           8192            /dev/dsk/c0t0d0s4
         a    pc luo        16              8192            /dev/dsk/c1t0d0s4
         a    pc luo        8208            8192            /dev/dsk/c1t0d0s4
         a    pc luo        16400           8192            /dev/dsk/c1t0d0s4

- Displaying disk capacities.

You can use the iostat -En command with each disk name that was provided in the previous step. For example:

iostat -En disk_name

Repeat the iostat command for each disk.

Note that the sizes shown represent the raw whole disk capacity including a reserved area. The actual usable capacity is less.

This example shows that the target system is configured to provide the exact same virtual disks and capacities that the guest domain had on the source system.

    root@Target# iostat -En c0t600144F09F2C0BFD00005BE4C424000Cd0 | grep -i size
    Size: 1.07GB <1073741824 bytes>
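When comparing sizes across tools, the byte count inside the angle brackets is the least ambiguous figure. In the line shown, 1.07GB is consistent with decimal units (10^9 bytes), while the same 1073741824 bytes is exactly 1 GB when divided by 1024 three times, as the prtvtoc calculation does. The following minimal sketch extracts the byte count and prints both forms. The variable names are hypothetical, and the Size line is copied from the example rather than read from a live system.

```shell
#!/bin/sh
# Extract the byte count from an `iostat -En` Size line and show it in
# decimal GB (10^9 bytes) and binary GiB (2^30 bytes).

size_line='Size: 1.07GB <1073741824 bytes>'

# Keep only the digits between "<" and " bytes>".
bytes=$(printf '%s\n' "$size_line" |
    sed -n 's/.*<\([0-9][0-9]*\) bytes>.*/\1/p')

summary=$(awk -v b="$bytes" 'BEGIN {
    printf "%.2f GB (10^9 bytes), %.2f GiB (2^30 bytes)",
        b / 1000000000, b / (1024 * 1024 * 1024)
}')
echo "$summary"
```

Recording the raw byte count in the worksheet avoids mismatches caused by mixing the two unit conventions.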


- On the target system, use similar commands to ensure there is equal or greater storage available for the incoming data.

If needed, configure virtual storage for the incoming data.

To configure a LUN for a guest domain, see Configure the Target's Virtual Storage (Logical Domain Virtualization).

To configure a LUN for a zone, see Configure the Target's Virtual Storage (Zone Virtualization).
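The check that the target offers equal or greater storage can be expressed as a simple comparison once both figures are in the same unit. This is a minimal sketch: the function name fits is hypothetical, the required figure is the sum of the ALLOC values from the zpool list example, and the available figure is an invented placeholder for a value measured on the target.

```shell
#!/bin/sh
# Succeed when the available capacity covers the required capacity.
# Both arguments are plain numbers in the same unit (GB here).
fits() {
    awk -v need="$1" -v have="$2" 'BEGIN { exit !(have + 0 >= need + 0) }'
}

required_gb=237.6    # 16.4 + 22.2 + 199, the ALLOC values from the example
available_gb=500     # hypothetical capacity measured on the target

if fits "$required_gb" "$available_gb"; then
    verdict="sufficient"
else
    verdict="insufficient"
fi
echo "Target storage is $verdict: need ${required_gb}G, have ${available_gb}G"
```

awk performs the comparison because shell arithmetic handles integers only, and the capacity figures gathered earlier include fractional values.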