Trunk Aggregations

Trunk aggregations are based on the IEEE 802.3ad standard and work by spreading multiple flows of traffic across a set of aggregated ports. IEEE 802.3ad requires switch configuration, and it requires switch-vendor proprietary extensions to work across multiple switches. In a trunk aggregation, the clients configured over the aggregation get the consolidated bandwidth of the underlying links, because each network port is associated with every datalink configured over the aggregation. When you create a link aggregation, it is created in trunk mode by default.

You might use a trunk aggregation in the following situations:

- For systems in the network that run applications with distributed heavy traffic, you can dedicate a trunk aggregation to that application's traffic to take advantage of the increased bandwidth.

- For sites with limited IP address space that require large amounts of bandwidth, you need only one IP address for the trunk aggregation of datalinks.

- For sites that need to hide any internal datalinks, the IP address of the trunk aggregation hides these datalinks from external applications.

- For applications that need a reliable network connection, trunk aggregation protects network connections against link failure.

Trunk aggregation supports the following features:

- Using a switch

- Using a switch with the Link Aggregation Control Protocol (LACP)

- Back-to-back trunk aggregation configuration

- Aggregation policies and load balancing

The following sections describe the features of trunk aggregations.

Using a Switch

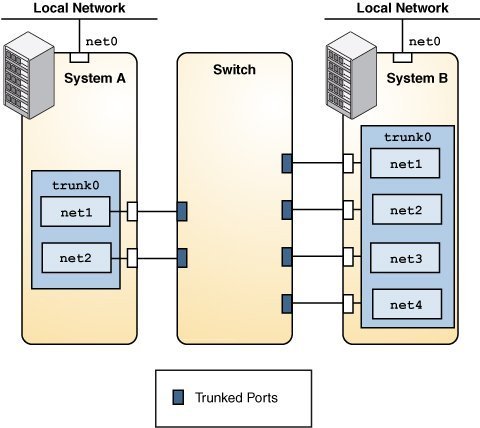

Systems that are configured with trunk aggregations might use an external switch to connect to other systems. The following figure depicts a local network with two systems where each system has a trunk aggregation configured.

Figure 2 Trunk Aggregation Using a Switch

The two systems are connected by a switch. System A has a trunk aggregation that consists of two datalinks, net1 and net2. These datalinks are connected to the switch through aggregated ports. System B has a trunk aggregation of four datalinks, net1 through net4. These datalinks are also connected to the aggregated ports on the switch. In this trunk aggregation topology, the switch must support the IEEE 802.3ad standard and the switch ports must be configured for aggregation. See the switch manufacturer's documentation to configure the switch.
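As a rough illustration of the system side of this topology, the following Python sketch assembles the argument list for a `dladm create-aggr` invocation, using the `-l` option once per underlying datalink. The helper name `create_aggr_command` and the aggregation name `aggr0` are assumptions made for this example and do not come from the figure. The sketch only builds the command line. It does not run `dladm` and it does not configure the switch ports.

```python
def create_aggr_command(links, aggr_name):
    """Build the argv list for `dladm create-aggr` (illustrative helper only)."""
    if not links:
        raise ValueError("an aggregation needs at least one underlying datalink")
    argv = ["dladm", "create-aggr"]
    for link in links:
        # Each underlying datalink is named with its own -l option.
        argv += ["-l", link]
    # The aggregation name comes last; "aggr0" below is a hypothetical name.
    argv.append(aggr_name)
    return argv


# System A aggregates net1 and net2; System B aggregates net1 through net4.
system_a = create_aggr_command(["net1", "net2"], "aggr0")
system_b = create_aggr_command(["net1", "net2", "net3", "net4"], "aggr0")

print(" ".join(system_a))
print(" ".join(system_b))
```

Because the default mode is trunk, no mode option is needed in this sketch.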

Back-to-Back Trunk Aggregation Configuration

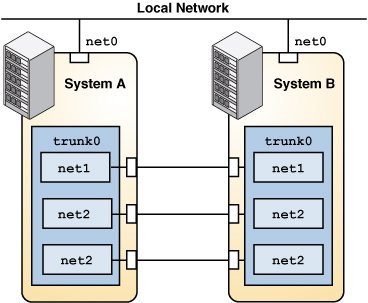

Trunk aggregations support back-to-back configuration. Instead of using a switch, two systems are directly connected to run parallel aggregations, as shown in the following figure.

Figure 3 Back-to-Back Trunk Aggregation Configuration

The illustration shows trunk aggregation trunk0 on System A directly connected to trunk aggregation trunk0 on System B through the corresponding links between their underlying datalinks. This setup gives Systems A and B redundancy, high availability, and high-speed communication between the two systems. Each system also has net0 configured for traffic flow within the local network.

The most common application of a back-to-back trunk aggregation is the configuration of mirrored database servers in large deployments such as data centers. Both servers must be updated together and therefore require significant bandwidth, high-speed traffic flow, and reliability.

Using a Switch With the Link Aggregation Control Protocol

If your trunk aggregation setup includes a switch and the switch supports LACP, you can enable LACP for the switch and the system. Oracle Switch ES1-24 supports LACP. For more information about configuring LACP on this switch, see Sun Ethernet Fabric Operating System, LA Administration Guide. If you are using any other switch, see the switch manufacturer's documentation to configure the switch.

LACP enables a more reliable detection method of datalink failures. Without LACP, a link aggregation relies only on the link state reported by the device driver to detect the failure of an aggregated datalink. With LACP, LACPDUs are exchanged at regular intervals to ensure that the aggregated datalinks can send and receive traffic. LACP also detects some misconfiguration cases, for example, when the grouping of datalinks does not match between the two peers.
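The difference between the two detection methods can be sketched in Python as follows. This is a simplified model, not the system's implementation: the function name `link_usable` is hypothetical, and the timeout values are the short (3 seconds) and long (90 seconds) timeouts defined by IEEE 802.3ad, which are assumed here to apply.

```python
# Timeouts after which a peer is considered lost, per IEEE 802.3ad.
# Whether a given system uses exactly these values is an assumption.
LACP_TIMEOUT_SECONDS = {"short": 3.0, "long": 90.0}


def link_usable(driver_link_up, seconds_since_last_lacpdu=None, timer="short"):
    """Decide whether an aggregated datalink should carry traffic.

    seconds_since_last_lacpdu is None when LACP is not in use, in which
    case only the link state reported by the device driver is considered.
    """
    if not driver_link_up:
        return False
    if seconds_since_last_lacpdu is None:
        # Without LACP, a link that reports "up" is trusted as-is.
        return True
    # With LACP, the peer must also have been heard from recently.
    return seconds_since_last_lacpdu < LACP_TIMEOUT_SECONDS[timer]


# The driver reports "up", but no LACPDU has arrived for 5 seconds.
print(link_usable(True))                     # driver state only
print(link_usable(True, 5.0, timer="short"))  # LACP with short timer
```

In this model, a link whose driver still reports it as up is taken out of service once the peer stops sending LACPDUs, which is the kind of failure that link state alone cannot reveal.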

LACP exchanges special frames called Link Aggregation Control Protocol Data Units (LACPDUs) between the aggregation and the switch if LACP is enabled on the system. LACP uses these LACPDUs to maintain the state of the aggregated datalinks.

Use the dladm create-aggr command to configure an aggregation's LACP to one of the following three modes:

- off – The default mode for aggregations. The system does not generate LACPDUs.

- active – The system generates LACPDUs at specified intervals.

- passive – The system generates an LACPDU only when it receives an LACPDU from the switch. When both the aggregation and the switch are configured in passive mode, they do not exchange LACPDUs.

For information about how to configure LACP, see How to Create a Link Aggregation.
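The interaction between the three LACP modes on the two peers can be summarized with a small Python sketch. It is a simplified model of the mode descriptions in this section, and the function name `lacpdus_exchanged` is hypothetical.

```python
LACP_MODES = ("off", "active", "passive")


def lacpdus_exchanged(system_mode, switch_mode):
    """Return True if the two peers end up exchanging LACPDUs."""
    for mode in (system_mode, switch_mode):
        if mode not in LACP_MODES:
            raise ValueError(f"unknown LACP mode: {mode!r}")
    # A peer in off mode never generates LACPDUs, so there is no exchange.
    if "off" in (system_mode, switch_mode):
        return False
    # A passive peer only answers, so at least one side must be active.
    return "active" in (system_mode, switch_mode)


for system_mode in LACP_MODES:
    for switch_mode in LACP_MODES:
        result = lacpdus_exchanged(system_mode, switch_mode)
        print(f"system={system_mode:8} switch={switch_mode:8} exchange={result}")
```

On the system side, the mode is passed to `dladm create-aggr` with the `-L` option, for example `-L active`.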

Defining Aggregation Policies for Load Balancing

You can define a policy for the outgoing traffic that specifies how to distribute load across the available links of an aggregation, thus establishing load balancing. You can use the following load specifiers to enforce various load balancing policies:

- L2 – Determines the outgoing link by using the MAC (L2) header of each packet

- L3 – Determines the outgoing link by using the IP (L3) header of each packet

- L4 – Determines the outgoing link by using the TCP, UDP, or other ULP (L4) header of each packet

Any combination of these policies is also valid. The default policy is L4.
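The following Python sketch shows one way that a policy could map packets to outgoing links: the header fields named by the policy are hashed, and the hash selects a link. This section does not describe the actual hash function, so CRC32 is used here purely as a stand-in. The `Packet` class, the `select_link` function, and the comma-separated form for combined policies (for example `L2,L3`) are assumptions made for this illustration.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int


# Header fields that each load specifier looks at in this sketch.
POLICY_FIELDS = {
    "L2": ("src_mac", "dst_mac"),
    "L3": ("src_ip", "dst_ip"),
    "L4": ("src_port", "dst_port"),
}


def select_link(packet, links, policy="L4"):
    """Pick an outgoing link by hashing the fields the policy names."""
    fields = []
    for specifier in policy.split(","):
        if specifier not in POLICY_FIELDS:
            raise ValueError(f"unknown load specifier: {specifier!r}")
        fields.extend(POLICY_FIELDS[specifier])
    key = "|".join(str(getattr(packet, name)) for name in fields).encode()
    # CRC32 is a placeholder hash, not the one a real system uses.
    return links[zlib.crc32(key) % len(links)]


links = ["net1", "net2"]
flow_a = Packet("00:11:22:33:44:55", "66:77:88:99:aa:bb", "10.0.0.1", "10.0.0.2", 40000, 80)
flow_b = Packet("00:11:22:33:44:55", "66:77:88:99:aa:bb", "10.0.0.1", "10.0.0.2", 40001, 80)

# Under L2, both flows share the same MAC pair and always use the same link.
print(select_link(flow_a, links, "L2"), select_link(flow_b, links, "L2"))
# Under L4, the differing source ports can place the flows on different links.
print(select_link(flow_a, links, "L4"), select_link(flow_b, links, "L4"))
```

Because the selection depends only on header fields, all packets of a given flow leave on the same link, while distinct flows can be spread across the aggregation. A policy that hashes more varied fields, such as L4, tends to spread traffic between the same two hosts more evenly than L2.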