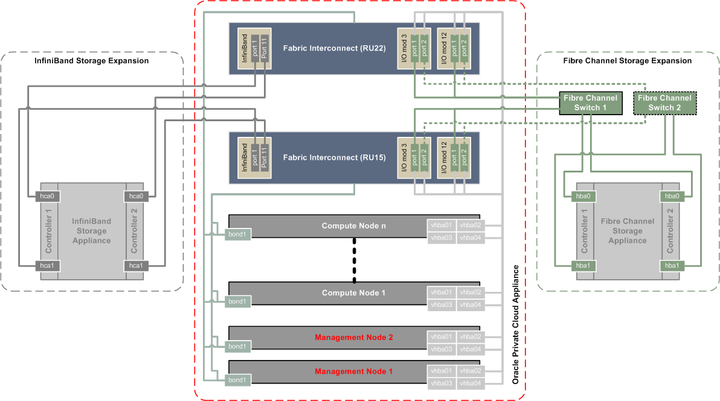

This section shows how external storage is connected to Oracle PCA.

All nodes in the Oracle PCA rack are connected to the IPoIB storage network (192.168.40.0/24) through their `bond1` interface, which consists of two virtual InfiniBand interfaces. For Fibre Channel storage connectivity, all compute nodes have 4 virtual HBAs that terminate on the Fibre Channel I/O modules of the Fabric Interconnects. The underlying physical connection of all storage traffic consists of redundant InfiniBand cabling. All external storage connectivity is managed by the Fabric Interconnects.

External InfiniBand storage, shown on the left-hand side of the diagram, is connected directly to InfiniBand ports 1 and 11 of the Fabric Interconnects.

External Fibre Channel storage, shown on the right-hand side of the diagram, is connected to I/O modules 3 and 12 of the Fabric Interconnects, not directly but through one or two external Fibre Channel switches.

The minimum configuration uses 1 port per FC I/O module for a total of 4 physical connections to one external Fibre Channel switch. In this configuration both HBAs of both storage controllers are connected to the same Fibre Channel switch.

The full HA configuration uses both ports in each FC I/O module for a total of 8 physical connections: 4 per external Fibre Channel switch. In this configuration the HBAs of both storage controllers are cross-cabled to the Fibre Channel switches: one HBA is connected to switch 1 and the other HBA is connected to switch 2. The additional connections for the HA configuration are marked with dotted lines in the diagram.
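The connection totals for the two configurations follow from simple multiplication. The sketch below reproduces that arithmetic; it assumes two Fabric Interconnects with two FC I/O modules each (slots 3 and 12), and the constant and function names are illustrative only, not part of any Oracle PCA tooling.

```python
# Assumed topology: 2 Fabric Interconnects, each with FC I/O modules in slots 3 and 12.
FABRIC_INTERCONNECTS = 2
FC_IO_MODULES_PER_INTERCONNECT = 2


def physical_connections(ports_per_module: int) -> int:
    """Total physical FC connections for a given number of ports used per I/O module."""
    return FABRIC_INTERCONNECTS * FC_IO_MODULES_PER_INTERCONNECT * ports_per_module


# Minimum configuration: 1 port per module, all cabled to a single FC switch.
minimum_total = physical_connections(1)

# Full HA configuration: both ports per module, split evenly over 2 FC switches.
full_ha_total = physical_connections(2)
full_ha_per_switch = full_ha_total // 2

print(minimum_total, full_ha_total, full_ha_per_switch)
```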

The vHBAs in the servers and the Fibre Channel ports in the I/O modules of the Fabric Interconnects are connected according to a round-robin scheme for high availability. In a field installation the compute nodes are provisioned in random order, so it is impossible to predict which nodes are connected to which I/O ports. However, the software does ensure that half the compute nodes follow the connection scheme of management node 1, while the other half follow the connection scheme of management node 2.

The connection scheme presented in Table C.1 shows that the four vHBAs in each server node are virtually cross-cabled to the Fabric Interconnects and their Fibre Channel I/O modules. To find out exactly how a particular compute node connects to the external FC storage, run the CLI command `list wwpn-info`.

Table C.1 Fibre Channel vHBA, I/O Port and Storage Cloud Mapping

| vHBA | Fabric Interconnect | I/O Port | Storage Cloud |
|--------|---------------------|-------------|---------------|
| vhba01 | ovcasw22r1 RU22 | 3/1 or 12/1 | A |
| vhba02 | ovcasw22r1 RU22 | 3/2 or 12/2 | B |
| vhba03 | ovcasw15r1 RU15 | 3/1 or 12/1 | C |
| vhba04 | ovcasw15r1 RU15 | 3/2 or 12/2 | D |
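For scripting or documentation purposes, the mapping in Table C.1 can be captured as a small lookup structure. The following is only a sketch: the `VhbaMapping` type, the `VHBA_MAP` dictionary and the helper function are hypothetical names introduced for illustration. The structure lists both candidate I/O ports for each vHBA, because the port actually in use on a given compute node can only be determined with `list wwpn-info`.

```python
from typing import Dict, List, NamedTuple, Tuple


class VhbaMapping(NamedTuple):
    """One row of Table C.1 (hypothetical helper type)."""
    fabric_interconnect: str
    rack_unit: int
    candidate_ports: Tuple[str, str]  # actual port depends on the node's connection scheme
    storage_cloud: str


# Values copied from Table C.1.
VHBA_MAP: Dict[str, VhbaMapping] = {
    "vhba01": VhbaMapping("ovcasw22r1", 22, ("3/1", "12/1"), "A"),
    "vhba02": VhbaMapping("ovcasw22r1", 22, ("3/2", "12/2"), "B"),
    "vhba03": VhbaMapping("ovcasw15r1", 15, ("3/1", "12/1"), "C"),
    "vhba04": VhbaMapping("ovcasw15r1", 15, ("3/2", "12/2"), "D"),
}


def vhbas_on_interconnect(name: str) -> List[str]:
    """Return the vHBAs that terminate on the named Fabric Interconnect."""
    return sorted(v for v, m in VHBA_MAP.items() if m.fabric_interconnect == name)


for vhba, mapping in VHBA_MAP.items():
    ports = " or ".join(mapping.candidate_ports)
    print(f"{vhba}: {mapping.fabric_interconnect} port {ports}, cloud {mapping.storage_cloud}")
```

Each Fabric Interconnect carries two of the four vHBAs and each vHBA belongs to its own storage cloud, which reflects the virtual cross-cabling described above.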