Datalink Multipathing Aggregations

Datalink multipathing (DLMP) aggregation is a type of link aggregation that does not require switch configuration. It also supports link-based failure detection and probe-based failure detection to ensure continuous availability of the network to send and receive traffic. DLMP aggregations can be created from the following components:

- Physical NICs
- VNICs
- Single root I/O virtualization (SR-IOV) VNICs on the aggregation of SR-IOV NICs
- IP over InfiniBand (IPoIB) VNICs on the aggregation of IPoIB partition datalinks

DLMP aggregations can span multiple switches. As a Layer 2 technology, DLMP aggregations integrate well with other Oracle Solaris virtualization technologies.

How DLMP Aggregation Works

In a trunk aggregation, each port is associated with every configured datalink over the aggregation. In a DLMP aggregation, a port is associated with any of the aggregation's configured datalinks.

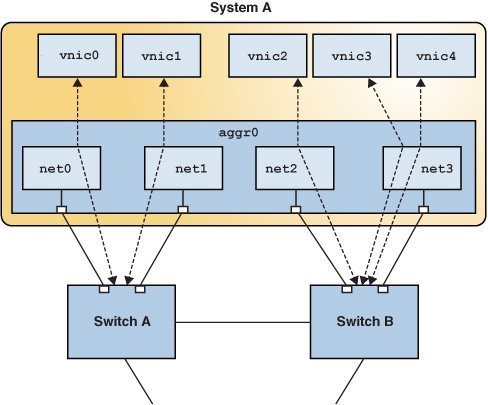

The following figure shows how a DLMP aggregation works.

Figure 4 DLMP Aggregation

System A's link aggregation aggr0 consists of four underlying links. The aggregation is connected to both Switch A and Switch B through which the system accesses the wider network.

VNICs are associated with aggregated ports through the underlying links. Thus, vnic0 through vnic3 are associated with the aggregated ports through the underlying links net0 through net3.

If the number of VNICs equals the number of underlying links, then each VNIC has its own port. If more VNICs exist than underlying links, then one port is associated with multiple datalinks. Thus, in the figure, vnic4 shares a port with vnic3.
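The association described above can be pictured as a simple mapping. The following Python sketch is an illustrative model only, not a Solaris interface: the dictionary hard-codes the layout from the figure, and the helper name `shared_ports` is hypothetical.

```python
# Illustrative model of the figure: which VNIC is bound to which aggregated port.
# The mapping is copied from the text; it is not queried from a real system.
vnic_to_port = {
    "vnic0": "net0",
    "vnic1": "net1",
    "vnic2": "net2",
    "vnic3": "net3",
    "vnic4": "net3",  # five VNICs over four links, so one port is shared
}


def shared_ports(mapping):
    """Return {port: [vnics]} for every port that carries more than one VNIC."""
    by_port = {}
    for vnic, port in mapping.items():
        by_port.setdefault(port, []).append(vnic)
    return {port: sorted(vnics) for port, vnics in by_port.items() if len(vnics) > 1}


print(shared_ports(vnic_to_port))
```

With five VNICs and four links, at least one port must carry more than one VNIC; in this layout that port is net3.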

Failure Detection in DLMP Aggregation

Failure detection in DLMP aggregation detects failure in aggregated ports.

When a port fails, the clients associated with that port are failed over to an active port to maintain network connectivity. Failed aggregated ports remain unusable until they are repaired. The remaining active ports continue to function while any existing ports are deployed as needed. After the failed port recovers from the failure, clients from other active ports can be associated with it.

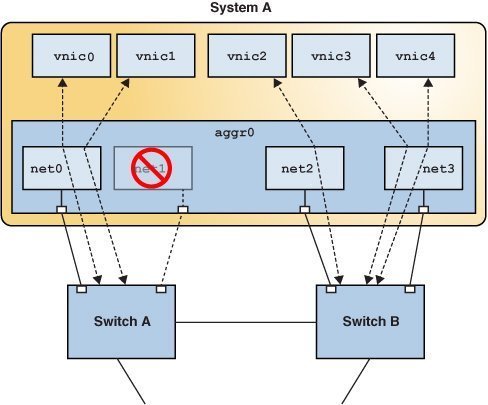

To understand the failover mechanism, compare the following figure with DLMP Aggregation.

Figure 5 DLMP Aggregation When a Port Fails

The link net1 has failed and its connection with the switch is down. Thus, net0 becomes a shared port between vnic0 and vnic1.
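The failover in the figure can be modeled in a few lines of Python. This is a sketch under stated assumptions, not the Solaris implementation: the text does not say how the replacement port is chosen, so the function below assumes that each displaced client moves to the active port with the fewest clients, with ties broken by port name. The function name `fail_over` and the data layout are hypothetical.

```python
def fail_over(port_clients, failed_port, active_ports):
    """Move the clients of failed_port to active ports and return the new layout.

    Assumption (not from the documentation): each displaced client goes to the
    active port that currently has the fewest clients, ties broken by name.
    """
    layout = {port: list(clients) for port, clients in port_clients.items()}
    displaced = layout.pop(failed_port, [])
    candidates = sorted(p for p in active_ports if p != failed_port)
    if not candidates:
        raise RuntimeError("no active port left in the aggregation")
    for port in candidates:
        layout.setdefault(port, [])
    for client in displaced:
        target = min(candidates, key=lambda p: (len(layout[p]), p))
        layout[target].append(client)
    return layout


# Layout before the failure, as in the earlier figure.
before = {
    "net0": ["vnic0"],
    "net1": ["vnic1"],
    "net2": ["vnic2"],
    "net3": ["vnic3", "vnic4"],
}
after = fail_over(before, "net1", ["net0", "net2", "net3"])
print(after["net0"])
```

Under the assumed policy, vnic1 lands on net0, which matches the figure. A real system may choose differently.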

DLMP aggregation supports both link-based and probe-based failure detection.

Link-Based Failure Detection

Link-based failure detection detects failure arising from loss of direct connection between the datalink and the first-hop switch. Link-based failure detection is enabled by default when a DLMP aggregation is created.

Probe-Based Failure Detection

Probe-based failure detection detects failures between an end system and the configured targets. This method is useful when a default router is down or when the network becomes unreachable.

Probe-based failure detection works by sending and receiving probe packets. It uses two types of probes together to determine the health of the aggregated physical datalinks: Internet Control Message Protocol (ICMP) probes, which operate at Layer 3 (L3), and transitive probes, which operate at Layer 2 (L2).

- ICMP packets are sent from a source address to a target address. You can specify the source and target IP addresses manually or let the system choose them. If a source address is not specified, it is chosen from the IP addresses that are configured on the aggregation or on a VNIC on the aggregation. If a target address is not specified, it is chosen from the next-hop routers on the same subnet as the selected source IP address.
- L2 packets are sent from ports with no configured source addresses to targets with configured source addresses. A port with no source address cannot receive ICMP probe replies, so it depends on L2 replies instead: if the source receives an L2 reply from the target, then the ICMP target is reachable.
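The default choice of ICMP targets, next-hop routers on the same subnet as the source address, can be illustrated with Python's standard `ipaddress` module. This is only a sketch of the subnet test: the function name `pick_targets` and the sample addresses (taken from documentation address ranges) are assumptions, and the system's actual selection logic may apply further criteria.

```python
import ipaddress


def pick_targets(source_cidr, next_hop_routers):
    """Return the next-hop routers that share a subnet with the source address."""
    subnet = ipaddress.ip_interface(source_cidr).network
    return [r for r in next_hop_routers if ipaddress.ip_address(r) in subnet]


# Hypothetical source address and candidate routers.
routers = ["192.0.2.1", "198.51.100.1"]
print(pick_targets("192.0.2.10/24", routers))
```

Only 192.0.2.1 is on the same /24 subnet as the source, so it is the only candidate target in this example.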

Transitive probing is performed when the health state of all network ports cannot be determined by using only ICMP probing.
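The way the two probe types combine can be sketched as a small decision function. This is a simplified model of the description above, not the actual protocol. It assumes that a port with a source address is judged by its own ICMP probe, and that a port without one is considered healthy only if it received an L2 reply from a port whose ICMP probe succeeded. The function name `port_health` and its inputs are hypothetical.

```python
def port_health(ports, icmp_ok, l2_reply):
    """Classify each port as healthy (True) or not (False).

    ports:    {port_name: has_source_address}
    icmp_ok:  set of addressed ports whose ICMP probe was answered
    l2_reply: set of (source_port, target_port) pairs for which the
              unaddressed source received an L2 reply from the target
    """
    health = {}
    for port, has_address in ports.items():
        if has_address:
            # Addressed ports are judged by their own ICMP probes.
            health[port] = port in icmp_ok
        else:
            # Assumption: an unaddressed port needs an L2 reply from an
            # addressed port that can itself reach the ICMP target.
            health[port] = any(
                src == port and dst in icmp_ok for src, dst in l2_reply
            )
    return health


# net0 has a source address; net1 and net2 do not.
ports = {"net0": True, "net1": False, "net2": False}
result = port_health(ports, icmp_ok={"net0"}, l2_reply={("net1", "net0")})
print(result)
```

In this example net1 is healthy because net0 answered its L2 probe, while net2 received no L2 reply and is treated as failed.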

Oracle Solaris includes proprietary protocol packets for transitive probes that are transmitted over the network. For more information, see Packet Format of Transitive Probes.

DLMP Aggregation of SR-IOV NICs

By grouping several SR-IOV-enabled NICs, you can create an SR-IOV-enabled DLMP aggregation on top of which you configure SR-IOV VNICs. The VNICs can be associated with the virtual functions (VFs) of the ports. You can dynamically add or remove ports without disrupting the network connections or removing the existing configurations. See How to Configure DLMP Aggregation of SR-IOV NICs.

For information about SR-IOV VNICs, see Using Single Root I/O Virtualization With VNICs in Managing Network Virtualization and Network Resources in Oracle Solaris 11.4.

DLMP Aggregation of InfiniBand Host Channel Adapter (HCA) Ports

By grouping multiple InfiniBand host channel adapter (HCA) ports, you can create a DLMP aggregation over which you configure IPoIB VNICs.

Note - Only the IPoIB upper-layer protocol (ULP) can be made highly available, through IPoIB VNICs configured over a DLMP aggregation of InfiniBand HCA ports. Other InfiniBand ULPs cannot.

You cannot directly configure an IP address on the DLMP aggregation of InfiniBand HCA ports. Instead, you configure IP addresses on the IPoIB VNICs configured over the DLMP aggregation.

When the link status of an IPoIB datalink changes to up or down, the individual HCA ports are automatically failed over, which provides network high availability for the IPoIB partition datalinks.

Note - For DLMP aggregations of InfiniBand HCA ports, only link-based failover is supported. Probe-based failover is not supported.

See How to Configure DLMP Aggregation of InfiniBand HCA Ports.