Features in NFS Version 4

This section describes the features that were introduced in NFS Version 4.

Note - Starting in the Oracle Solaris 10 release, NFS Version 4 does not support the LIPKEY/SPKM security flavor. Also, NFS Version 4 does not use the mountd, nfslogd, and statd daemons.

For information about setting up NFS services, see Setting Up the NFS Service.

Unsharing and Resharing a File System in NFS Version 4

With both NFS Version 3 and NFS Version 4, if a client attempts to access a file system that has been unshared, the server responds with an error code. However, with NFS Version 3, the server maintains any locks that the clients had obtained before the file system was unshared. Thus, when the file system is reshared, NFS Version 3 clients can access the file system as though that file system had never been unshared.

With NFS Version 4, when a file system is unshared, all the state information for any open files or file locks in that file system is destroyed. If the client attempts to access these files or locks, the client receives an error. This error is usually reported as an I/O error to the application. However, resharing a currently shared file system to change options does not destroy any of the state information on the server.

For information about client recovery in NFS Version 4, see Client Recovery in NFS Version 4. For information about available options for the unshare command, see the unshare_nfs(1M) man page.

File System Namespace in NFS Version 4

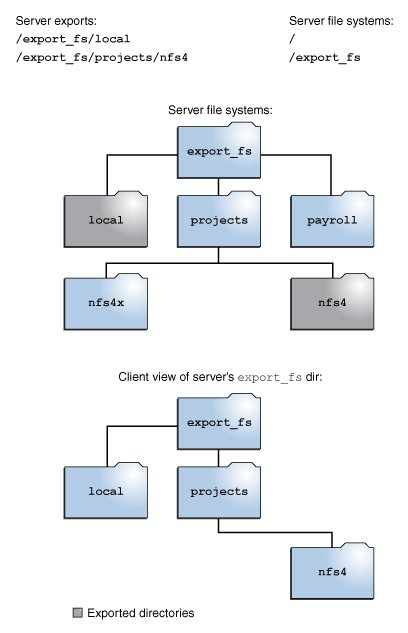

NFS Version 4 servers create and maintain a pseudo file system that provides clients with seamless access to all exported objects on the server. Prior to NFS Version 4, the pseudo file system did not exist. Clients were forced to mount each shared server file system for access.

A pseudo file system is a structure that contains only directories and is created by the server. The pseudo file system permits a client to browse the hierarchy of exported file systems. Thus, the client's view of the pseudo file system is limited to paths that lead to exported file systems.

-

Restricts the client's file system view to directories that lead to server exports.

-

Provides clients with seamless access to server exports without requiring the client to mount each underlying file system. However, different operating systems might require the client to mount each server file system.

Previous versions of NFS did not permit a client to traverse server file systems without mounting each file system. However, in NFS Version 4, the server namespace does the following:

Figure 2-2 Views of the Server File System and the Client File System in NFS Version 4

In the example shown in the figure, the client cannot see the payroll directory and the nfs4x directory because these directories are not exported and do not lead to exported directories. However, the local directory is visible to the client because local is an exported directory. The projects directory is visible to the client because projects leads to the exported directory, nfs4. Thus, portions of the server namespace that are not explicitly exported are bridged with a pseudo file system that views only the exported directories and those directories that lead to server exports.
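The visibility rule described above can be expressed as a short sketch. The following Python model is illustrative only: the function name and the /export_fs directory layout are assumptions loosely based on the directory names in the text, not the server's actual implementation. A directory is treated as visible if it is exported, lies below an export, or leads to an export.

```python
from pathlib import PurePosixPath


def client_visible_dirs(server_dirs, exports):
    """Return the server directories that a client could see.

    A directory is visible if it is exported, lies below an export,
    or is an ancestor of an export (a pseudo file system node).
    """
    exports = [PurePosixPath(e) for e in exports]
    visible = set()
    for d in map(PurePosixPath, server_dirs):
        exported = any(d == e or e in d.parents for e in exports)
        leads_to_export = any(d in e.parents for e in exports)
        if exported or leads_to_export:
            visible.add(str(d))
    return visible


# Hypothetical layout; the real paths in the figure might differ.
SERVER_DIRS = [
    "/export_fs",
    "/export_fs/local",
    "/export_fs/payroll",
    "/export_fs/projects",
    "/export_fs/projects/nfs4",
    "/export_fs/projects/nfs4x",
]
EXPORTS = ["/export_fs/local", "/export_fs/projects/nfs4"]

if __name__ == "__main__":
    for path in sorted(client_visible_dirs(SERVER_DIRS, EXPORTS)):
        print(path)
```

In this model, payroll and nfs4x are filtered out, while projects remains visible only because it leads to the exported nfs4 directory.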

Volatile File Handles in NFS Version 4

File handles are created on the server and contain information that uniquely identifies files and directories. In NFS Version 2 and NFS Version 3, the server returned persistent file handles. Thus, the client could guarantee that the server would generate a file handle that always referred to the same file. For example:

- If a file was deleted and replaced with a file of the same name, the server would generate a new file handle for the new file. If the client used the old file handle, the server would return an error that the file handle was stale.
- If a file was renamed, the file handle would remain the same.
- If the server was rebooted, the file handles would remain the same.

Thus, when the server received a request from a client that included a file handle, the resolution was straightforward and the file handle always referred to the correct file.

The method of using persistent file handles for identifying files and directories for NFS operations was fine for most UNIX-based servers. However, the method could not be implemented on servers that relied on other methods of identification, such as a file's path name. To resolve this problem, the NFS Version 4 protocol permits a server to declare that its file handles are volatile. If the file handle does change, the client must find the new file handle.

Like NFS Version 2 and NFS Version 3 servers, the Oracle Solaris NFS Version 4 server always provides persistent file handles. However, Oracle Solaris NFS Version 4 clients that access non-Oracle Solaris NFS Version 4 servers must support volatile file handles if the server uses them. Specifically, when the server communicates to the client that the file handle is volatile, the client must cache the mapping between the path name and file handle. The client uses the volatile file handle until it expires. On expiration, the client does the following:

- Flushes the cached information that refers to that file handle
- Searches for that file's new file handle
- Retries the operation

Note - The server always communicates to the client which file handles are persistent and which file handles are volatile.

Volatile file handles might expire in any of these situations:

- When you close a file
- When the file handle's file system migrates
- When a client renames a file
- When the server reboots

If the client is unable to find the new file handle, an error message is logged in the syslog file. Further attempts to access this file fail with an I/O error.
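The flush, search, and retry sequence can be sketched as follows. This Python model is a simplified illustration, not the Oracle Solaris client code: the class names, the expire_all method, and the use of integers as file handles are all assumptions made for the example.

```python
import errno


class FileHandleExpired(Exception):
    """Stands in for the server reporting that a volatile file handle expired."""


class VolatileHandleServer:
    """Hypothetical server that hands out volatile file handles by path name."""

    def __init__(self, paths):
        self.paths = set(paths)
        self.valid = {}          # file handle -> path name
        self.next_handle = 1

    def lookup(self, path):
        if path not in self.paths:
            raise FileNotFoundError(path)
        handle = self.next_handle
        self.next_handle += 1
        self.valid[handle] = path
        return handle

    def expire_all(self):
        # Models an event such as a server reboot.
        self.valid.clear()

    def read(self, handle):
        if handle not in self.valid:
            raise FileHandleExpired(handle)
        return "data from " + self.valid[handle]


class VolatileHandleClient:
    """Hypothetical client that caches the path name to file handle mapping."""

    def __init__(self, server):
        self.server = server
        self.cache = {}          # path name -> file handle

    def read(self, path):
        if path not in self.cache:
            self.cache[path] = self.server.lookup(path)
        try:
            return self.server.read(self.cache[path])
        except FileHandleExpired:
            del self.cache[path]                          # flush cached information
            try:
                self.cache[path] = self.server.lookup(path)   # search for the new handle
            except FileNotFoundError:
                # No new handle could be found: report an I/O error.
                raise OSError(errno.EIO, "file handle could not be recovered", path)
            return self.server.read(self.cache[path])     # retry the operation
```

The cache is keyed by path name because, with volatile file handles, the path name is the only stable way for the client to find the file again.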

Client Recovery in NFS Version 4

The NFS Version 4 protocol is a stateful protocol. Both the client and the server maintain current information about the open files and file locks.

When a server crashes and is rebooted, the server loses its state. The client detects that the server has rebooted and begins the process of helping the server re-establish the open and lock states that existed prior to the failure. This process is known as client recovery because the client directs the process.

When the client detects that the server has rebooted, the client immediately suspends its current activity and begins the process of client recovery. When the recovery process starts, a message such as the following is displayed in the system error log /var/adm/messages:

NOTICE: Starting recovery server server-name

During the recovery process, the client sends the server information about the client's previous state. However, during this period, the client does not send any new requests to the server. Any new requests to open files or set file locks must wait for the server to complete its recovery process before proceeding.

When the client recovery process is complete, the following message is displayed in the system error log /var/adm/messages:

NOTICE: Recovery done for server server-name

At this point, the client has successfully completed sending its state information to the server. However, even though the client has completed this process, other clients might not have done so. Therefore, for a period of time, known as the grace period, the server does not accept any open or lock requests to enable all clients to complete their recovery.

During the grace period, if the client attempts to open any new files or establish any new locks, the server denies the request with the GRACE error code. Upon receiving this error, the client must wait for the grace period to end and then resend the request to the server. During the grace period, the following message is displayed:

NFS server recovering

During the grace period, the commands that do not open files or set file locks can proceed. For example, because the commands ls and cd do not open a file or set a file lock, these commands are not suspended. However, a command such as cat, which opens a file, would be suspended until the grace period ends.

When the grace period has ended, the following message is displayed:

NFS server recovery ok.

The client can now send new open and lock requests to the server.

Client recovery can fail for a variety of reasons. For example, if a network partition exists after the server reboots, the client might not be able to re-establish its state with the server before the grace period ends. When the grace period has ended, the server does not permit the client to re-establish its state because new state operations could create conflicts. For example, a new file lock might conflict with an old file lock that the client is trying to recover. When such situations occur, the server returns the NO_GRACE error code to the client.
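The server's decisions during and after the grace period can be summarized in a small model. The following Python sketch is an illustration under stated assumptions: the class name, the 90-second grace period, and the reclaim flag that marks a recovery request are hypothetical choices for the example, and only the GRACE and NO_GRACE outcomes come from the description above.

```python
import enum


class Status(enum.Enum):
    OK = "OK"
    GRACE = "GRACE"          # new open or lock denied during the grace period
    NO_GRACE = "NO_GRACE"    # state recovery attempted after the grace period


class GraceServer:
    """Hypothetical server that tracks only its grace period."""

    def __init__(self, grace_seconds, boot_time=0.0):
        self.grace_ends = boot_time + grace_seconds

    def in_grace(self, now):
        return now < self.grace_ends

    def handle(self, op, now, reclaim=False):
        """Decide the status for one request.

        op is "open", "lock", or any other operation name.
        reclaim marks a request that re-establishes state held before the reboot.
        """
        if op not in ("open", "lock"):
            return Status.OK                      # for example, ls or cd activity
        if self.in_grace(now):
            return Status.OK if reclaim else Status.GRACE
        return Status.NO_GRACE if reclaim else Status.OK


if __name__ == "__main__":
    server = GraceServer(grace_seconds=90)        # assumed length, for illustration
    print(server.handle("open", now=10, reclaim=True))
    print(server.handle("open", now=10))
    print(server.handle("lock", now=100, reclaim=True))
```

A client that receives GRACE would wait and resend the request, whereas NO_GRACE indicates that the state can no longer be recovered.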

If the recovery of an open operation for a file fails, the client marks the file as unusable and the following message is displayed:

WARNING: The following NFS file could not be recovered and was marked dead (can't reopen: NFS status n): file : filename

If re-establishing a file lock during recovery fails, the following error message is displayed:

NOTICE: nfs4_send_siglost: pid process-ID lost lock on server server-name

In this situation, the SIGLOST signal is posted to the process. The default action for the SIGLOST signal is to terminate the process.

To recover from this state, you must restart any applications that had files open at the time of the failure. Some processes that did not reopen the file could receive I/O errors. Other processes that did reopen the file, or performed the open operation after the recovery failure, can access the file without any problems.

Thus, some processes can access a particular file while other processes cannot.

OPEN Share Support in NFS Version 4

The NFS Version 4 protocol provides several file-sharing modes that the client can use to control file access by other clients. A client can specify the following:

- DENY_NONE mode permits other clients read and write access to a file.
- DENY_READ mode denies other clients read access to a file.
- DENY_WRITE mode denies other clients write access to a file.
- DENY_BOTH mode denies other clients read and write access to a file.

The Oracle Solaris NFS Version 4 server fully implements these file-sharing modes. Therefore, if a client attempts to open a file in a way that conflicts with the current share mode, the server denies the attempt by failing the operation. When such attempts fail with the initiation of the open or create operations, the NFS Version 4 client receives a protocol error. This error is mapped to the application error EACCES.
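A conflict check for these sharing modes can be sketched with bit masks. The following Python example is a minimal model, not the server's implementation: the function names are hypothetical, and the numeric values follow the share access and share deny constants of the NFS Version 4 protocol specification. The failure is surfaced as EACCES, as described above.

```python
import errno

# Share access bits (what the opener wants to do).
ACCESS_READ = 1
ACCESS_WRITE = 2

# Share deny bits (what the opener denies to others).
DENY_NONE = 0
DENY_READ = 1
DENY_WRITE = 2
DENY_BOTH = DENY_READ | DENY_WRITE


def conflicts(existing, requested):
    """Return True if two (access, deny) pairs cannot coexist."""
    ex_access, ex_deny = existing
    rq_access, rq_deny = requested
    # The new open wants access that is denied, or denies access already held.
    return bool(rq_access & ex_deny) or bool(rq_deny & ex_access)


def open_file(current_opens, access, deny=DENY_NONE):
    """Record an open, or fail with EACCES if it conflicts with a share mode."""
    requested = (access, deny)
    for existing in current_opens:
        if conflicts(existing, requested):
            raise OSError(errno.EACCES, "open conflicts with current share mode")
    current_opens.append(requested)


if __name__ == "__main__":
    opens = []
    open_file(opens, ACCESS_READ, DENY_WRITE)     # first client denies writers
    try:
        open_file(opens, ACCESS_WRITE)            # second client wants to write
    except PermissionError as err:
        print("denied:", err.strerror)
```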

Even though the protocol provides several sharing modes, the open operation in Oracle Solaris does not offer multiple sharing modes. When opening a file, an Oracle Solaris NFS Version 4 client can only use the DENY_NONE mode.

Note - Even though the fcntl system call has an F_SHARE command to control file sharing, the fcntl commands cannot be implemented correctly with NFS Version 4. If you use these fcntl commands on an NFS Version 4 client, the client returns the EAGAIN error to the application.

Delegation in NFS Version 4

NFS Version 4 provides both client support and server support for delegation. Delegation is a technique by which the server delegates the management of a file to a client. For example, the server could grant either a read delegation or a write delegation to a client. Because read delegations do not conflict with each other, they can be granted to multiple clients at the same time. A write delegation can be granted to only one client because a write delegation conflicts with any file access by any other client. While holding a write delegation, the client does not send various operations to the server because the client is guaranteed exclusive access to a file. Similarly, the client does not send various operations to the server while holding a read delegation because the server guarantees that no client can open the file in write mode.

The effect of delegation is to greatly reduce the interactions between the server and the client for delegated files. Therefore, network traffic is reduced, and performance on the client and the server is improved. However, the degree of performance improvement depends on the kind of file interaction used by an application and the amount of network and server congestion.

A client does not request a delegation. The decision about whether to grant a delegation is made entirely by the server based on the access patterns for a file. If a file has been recently accessed in write mode by several different clients, the server might not grant a delegation because this access pattern indicates the potential for future conflicts.

A conflict occurs when a client accesses a file in a manner that is inconsistent with the delegations that are currently granted for that file. For example, if a client holds a write delegation on a file and a second client opens that file for read or write access, the server recalls the first client's write delegation. Similarly, if a client holds a read delegation and another client opens the same file for writing, the server recalls the read delegation. In both situations, the second client is not granted a delegation because a conflict now exists.

When a conflict occurs, the server uses a callback mechanism to contact the client that currently holds the delegation. Upon receiving this callback, the client sends the file's updated state to the server and returns the delegation. If the client fails to respond to the recall, the server revokes the delegation. In such instances, the server rejects all operations from the client for this file, and the client reports the requested operations as failures. Generally, these failures are reported to the application as I/O errors. To recover from these errors, the file must be closed and then reopened. Failures from revoked delegations can occur when a network partition exists between the client and the server while the client holds a delegation.
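The grant and recall rules for a single file can be modeled as follows. This Python sketch is deliberately simplified and makes several assumptions: the class and attribute names are hypothetical, a delegation is granted whenever no conflict exists (a real server also weighs access patterns), and a recall completes immediately instead of going through the callback path.

```python
class DelegationServer:
    """Hypothetical per-file delegation bookkeeping."""

    def __init__(self):
        self.opens = {}          # client -> set of open modes ("r", "w")
        self.delegations = {}    # client -> "read" or "write"
        self.recalls = []        # (client, kind) recalls issued, in order

    def open(self, client, mode):
        """Open the file and return the delegation granted, or None."""
        conflict = False
        for holder, kind in list(self.delegations.items()):
            if holder == client:
                continue
            # A write delegation conflicts with any other access;
            # a read delegation conflicts only with an open for writing.
            if kind == "write" or mode == "w":
                self.recalls.append((holder, kind))
                del self.delegations[holder]
                conflict = True
        self.opens.setdefault(client, set()).add(mode)
        if conflict:
            return None          # no delegation while a conflict exists
        others = [m for c, m in self.opens.items() if c != client]
        if mode == "w":
            if not others:
                self.delegations[client] = "write"
        elif not any("w" in m for m in others):
            self.delegations.setdefault(client, "read")
        return self.delegations.get(client)


if __name__ == "__main__":
    server = DelegationServer()
    print(server.open("A", "r"))     # read delegation
    print(server.open("B", "r"))     # read delegations can coexist
    print(server.open("C", "w"))     # conflict: no delegation granted
    print(server.recalls)
```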

Note that one server cannot resolve access conflicts for a file that is stored on another server. Thus, an NFS server only resolves conflicts for files that it stores. Furthermore, in response to conflicts that are caused by clients that are running various versions of NFS, an NFS server can initiate recalls only to the client that is running NFS Version 4. An NFS server cannot initiate recalls for clients that are running earlier versions of NFS.

The process for detecting conflicts varies. For example, unlike NFS Version 4, because NFS Version 2 and NFS Version 3 do not have an open procedure, the conflict is detected only after the client attempts to read, write, or lock a file. The server's response to these conflicts varies also. For example:

- For NFS Version 3, the server returns the JUKEBOX error, which causes the client to halt the access request and try again later. The client displays the message File unavailable.
- For NFS Version 2, because an equivalent of the JUKEBOX error does not exist, the server makes no response, which causes the client to wait and then try again. The client displays the message NFS server not responding.

The error messages clear when the delegation conflict is resolved.

By default, server delegation is enabled. You can disable delegation by setting the server_delegation parameter to off.

# sharectl set -p server_delegation=off nfs

No keywords are required for client delegation. The NFS Version 4 callback daemon, nfs4cbd, provides the callback service on the client. This daemon is started automatically whenever a mount for NFS Version 4 is enabled. By default, the client provides the necessary callback information to the server for all Internet transports that are listed in the /etc/netconfig system file. If the client is enabled for IPv6 and if the IPv6 address for the client's name can be determined, then the callback daemon accepts IPv6 connections.

The callback daemon uses a transient program number and a dynamically assigned port number. This information is provided to the server, and the server tests the callback path before granting any delegations. If the callback path does not test successfully, the server does not grant delegations, which is the only externally visible behavior.

Because callback information is embedded within an NFS Version 4 request, the server is unable to contact the client through a device that uses Network Address Translation (NAT). Also, the callback daemon uses a dynamic port number. Therefore, the server might not be able to traverse a firewall, even if that firewall enables normal NFS traffic on port 2049. In such situations, the server does not grant delegations.

ACLs and nfsmapid in NFS Version 4

An access control list (ACL) provides file security by enabling the owner of a file to define file permissions for the file owner, the group, and other specific users and groups. On ZFS file systems, you can set ACLs on the server and the client by using the chmod command. For UFS file systems, you can use the setfacl command. For more information, see the chmod(1) and setfacl(1) man pages. In NFS Version 4, the ID mapper, nfsmapid, is used to map user IDs or group IDs in ACL entries on a server to user IDs or group IDs in ACL entries on a client. The reverse is also true. Therefore, the user and group IDs in the ACL entries must exist on both the client and the server.

For more information about ACLs and nfsmapid, see the following:

ID Mapping Problems

The following situations can cause ID mapping to fail:

- If a user or group that exists in an ACL entry on the server cannot be mapped to a valid user or group on the client, the user can read the ACL but some of the users or groups will be shown as unknown.

  For example, in this situation when you issue the ls -lv or ls -lV command, some of the ACL entries will have the group or user displayed as unknown.
- If the user ID or group ID in any ACL entry that is set on the client cannot be mapped to a valid user ID or group ID on the server, the setfacl and chmod commands can fail and return the Permission denied error message.
- If the client and server have mismatched nfsmapid_domain values, ID mapping fails. For more information, see NFS Daemons.

To avoid ID mapping problems, do the following:

- Make sure that the value for nfsmapid_domain is set correctly. The currently selected NFSv4 domain is available in the /var/run/nfs4_domain file.
- Make sure that all user IDs and group IDs in the ACL entries exist on both the NFS Version 4 client and server.
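The mapping failures in this section can be illustrated with a small model. The following Python sketch is not the nfsmapid implementation: the function names and sample accounts are hypothetical, and it assumes that identities are exchanged as name@domain strings and that the domain comparison is an exact match.

```python
def map_acl_who(who, local_domain, local_users):
    """Map a "name@domain" ACL identity to a local user ID, or None."""
    name, sep, domain = who.rpartition("@")
    if not sep or domain != local_domain:
        return None                  # mismatched nfsmapid_domain values
    return local_users.get(name)     # None if the user does not exist locally


def render_acl(entries, local_domain, local_users):
    """Return the names to display for a list of ACL identities."""
    shown = []
    for who in entries:
        uid = map_acl_who(who, local_domain, local_users)
        shown.append(who.rpartition("@")[0] if uid is not None else "unknown")
    return shown


if __name__ == "__main__":
    users = {"alice": 1001}          # hypothetical local accounts
    acl = ["alice@example.com", "bob@example.com"]
    print(render_acl(acl, "example.com", users))
```

In this model, an identity is displayed as unknown either because the account is missing locally or because the two systems disagree about the domain, which matches the two preventive checks listed above.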

Checking for Unmapped User IDs or Group IDs

To determine whether any user or group cannot be mapped on the server or client, use the following script:

    #! /usr/sbin/dtrace -Fs

    sdt:::nfs4-acl-nobody
    {
        printf("validate_idmapping: (%s) in the ACL could not be mapped!",
            stringof(arg0));
    }

Note - The probe name that is used in this script is an interface that could change in the future. For more information, see Stability Levels in Oracle Solaris 11.2 Dynamic Tracing Guide.